
-- with @pritamdamania, @mrshenli, @H-Huang, @Tierex

This document describes the proposal to upstream TorchElastic to PyTorch. For brevity this doc only discusses the topics at a high level. Topics that require an in-depth technical RFC will be updated with the link.

Goal

Training paradigm agnostic fault-tolerant/elastic distributed launcher (global restart on failure or scaling event)

Since TorchElastic adds fault tolerance and elasticity support to distributed PyTorch jobs (with the assumptions mentioned below), the first step towards a training-paradigm-agnostic distributed launcher is to upstream TorchElastic to PyTorch proper and, over time, blend it into the distributed PyTorch core.

TorchElastic Overview

TorchElastic (https://pytorch.org/elastic) is a library to launch and manage distributed PyTorch worker processes. It handles complexities that naturally arise in distributed applications, such as fault tolerance, membership coordination, rank and role assignment, and distributed error reporting and propagation. Since its inception (December 2019), TorchElastic has become the default way to run distributed PyTorch jobs at Facebook.

At a high level, the TorchElastic agent (henceforth simply referred to as “agent”) is started on each host and is responsible for creating and managing the worker processes. TorchElastic is compatible with any existing PyTorch script, with the following assumptions:

- Rank and role assignment is done by the agent before workers are created

- All workers belonging to a process group are restarted on any worker failure

The assumptions above imply that:

- Work between checkpoints is lost on failures

- Membership is static up to failure/scaling event (e.g. number of workers for each rendezvous version is static)

- Users need not manually assign RANK, MASTER_ADDRESS, MASTER_PORT

- A no-argument dist.init_process_group() can and should be used
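The assumptions above can be illustrated with a toy agent written against the Python standard library only. This is a sketch, not TorchElastic code: `run_group`, the inline worker, and the `RESTART_COUNT` variable are hypothetical names introduced for illustration. It assigns ranks through environment variables before the workers are created and restarts the whole group when any worker fails. Note that PyTorch's `env://` initialization reads the master address from `MASTER_ADDR`, which is the name used here.

```python
import os
import subprocess
import sys

# Hypothetical worker: rank 1 fails on the first attempt only, so the
# agent has to restart the whole group exactly once.
WORKER_CODE = (
    "import os, sys\n"
    "failed = os.environ['RANK'] == '1' and os.environ['RESTART_COUNT'] == '0'\n"
    "sys.exit(1 if failed else 0)\n"
)


def run_group(nproc, max_restarts, worker_code=WORKER_CODE,
              master_addr="127.0.0.1", master_port=29500):
    """Start nproc workers; on any failure restart all of them.

    Returns the number of restarts that were needed.
    """
    for attempt in range(max_restarts + 1):
        procs = []
        for rank in range(nproc):
            # Rank and master assignment happens here, before workers exist.
            env = dict(
                os.environ,
                RANK=str(rank),
                WORLD_SIZE=str(nproc),
                MASTER_ADDR=master_addr,
                MASTER_PORT=str(master_port),
                RESTART_COUNT=str(attempt),  # hypothetical variable name
            )
            procs.append(
                subprocess.Popen([sys.executable, "-c", worker_code], env=env)
            )
        # Simplification: wait for every worker to exit. A real agent would
        # monitor the group and stop the survivors as soon as one fails.
        exit_codes = [p.wait() for p in procs]
        if all(code == 0 for code in exit_codes):
            return attempt
    raise RuntimeError(f"workers still failing after {max_restarts} restarts")
```

Calling `run_group(nproc=2, max_restarts=3)` runs the group twice: the first attempt fails because of rank 1, and the second attempt succeeds for every worker. Anything a worker did between its last checkpoint and the failure would be lost, which is the first implication listed above.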

Diagram below shows how TorchElastic works:

Topics

The sections below assume familiarity with TorchElastic; please refer to TorchElastic Overview for a high-level overview.

The high level discussion/design topics for upstreaming TorchElastic to PyTorch are:

Below we discuss each topic in detail. Each topic is organized into background and proposal sub-sections introducing the current state and context and the proposal for upstream.

1. Module Name

Background

TorchElastic’s GitHub repository (https://github.com/pytorch/elastic) contains both the core platform code as well as Kubernetes, AWS, Azure integration code. For the purpose of this doc we focus on upstreaming the core platform code which resides under the pytorch/elastic/torchelastic subdirectory as:

torchelastic

|- agent

|- distributed

|- events

|- metrics

|- multiprocessing

|- rendezvous

|- timer

|- utils

# module path is: torchelastic.<module> (e.g. torchelastic.agent)

Proposal

We propose the upstream to be done in two phases:

- Phase I (MVP): the torchelastic/** directory is moved to torch/distributed/elastic/** and torch.distributed.elastic is released as a Beta feature.

- Phase II (over time): blend enhanced modules in torchelastic into existing top-level modules in torch. Some of these modules are (not an exhaustive list):

  - torchelastic.multiprocessing → torch.multiprocessing

  - torchelastic.logging|events|metrics → (new) torch.logging|events|metrics

  - torchelastic.distributed → (fold into) torch.distributed
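Because Phase I changes the import path, code that must work both before and after the upstream could resolve the module at runtime. The helper below is a hypothetical sketch (`import_first` is not part of torch or torchelastic), and `torch.distributed.elastic.agent` is the path proposed in this document, not one that is guaranteed to exist.

```python
import importlib


def import_first(*module_names):
    """Return the first module in module_names that can be imported."""
    for name in module_names:
        try:
            return importlib.import_module(name)
        except ImportError:
            # Covers ModuleNotFoundError as well; try the next candidate.
            continue
    raise ImportError(f"none of {module_names} could be imported")


# Prefer the proposed post-upstream path, fall back to the standalone package:
# agent = import_first("torch.distributed.elastic.agent", "torchelastic.agent")
```

A shim like this only papers over the path change; it does not help if module contents diverge in Phase II.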

2. Rendezvous

Background

While the concept of rendezvous is similar in TorchElastic and PyTorch, there are differences:

| | TorchElastic | PyTorch |
|---|---|---|
| Defines RendezvousHandler Interface | Yes | No |
| Registration Mechanism | explicit (as registry) | implicit (via import statements) |
| Implementations | etcd, zeus, mast | zeus |
| Required to run job | Yes | No |
| Part of | Agent process | Worker process |
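To make the "explicit (as registry)" row concrete, here is a minimal sketch of a handler interface plus a registry keyed by backend name. The interface is a deliberately simplified, hypothetical version (the real RendezvousHandler has more methods), and `register_handler`, `get_handler`, and `SingleNodeHandler` are names invented for this example.

```python
from abc import ABC, abstractmethod


class RendezvousHandler(ABC):
    """Hypothetical, simplified interface used only for this sketch."""

    @abstractmethod
    def next_rendezvous(self):
        """Block until the group forms; return (rank, world_size)."""


_REGISTRY = {}


def register_handler(backend, factory):
    """Explicitly register a factory under a backend name."""
    if backend in _REGISTRY:
        raise ValueError(f"backend already registered: {backend!r}")
    _REGISTRY[backend] = factory


def get_handler(backend, **params):
    """Look up a backend by name and build its handler."""
    try:
        factory = _REGISTRY[backend]
    except KeyError:
        raise ValueError(f"unknown rendezvous backend: {backend!r}") from None
    return factory(**params)


class SingleNodeHandler(RendezvousHandler):
    """Trivial backend: one node, which is always rank 0."""

    def next_rendezvous(self):
        return 0, 1


register_handler("single", SingleNodeHandler)
```

With an explicit registry, an unknown backend name fails at lookup time with a clear error, instead of depending on which modules happened to be imported.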

Strictly speaking rendezvous is NOT needed to run distributed PyTorch as long as rank and role (master) assignment is done by some entity (e.g. for each worker the following env vars are set: RANK, MASTER_ADDRESS, MASTER_PORT). For PyTorch jobs invoked through TorchElastic users need not worry about rendezvous, rank or role assignment.

In OSS, TorchElastic ships with a non-trivial etcd-based rendezvous implementation, which means it has a runtime dependency on an etcd server and a compile-time dependency on an etcd client.

Proposal

We propose that:

- EtcdRendezvous is upstreamed to torch as-is.

- A dependency on etcd-client is added to requirements.txt in torch

- A StandaloneRendezvous is implemented (on top of c10d::TCPStore) that:

  - At a minimum supports a fixed number of nodes + fault tolerance (i.e. elasticity is not part of the MVP)

  - Takes MASTER_ADDRESS and MASTER_PORT as input from the user

  - Has a single point of failure on the elected MASTER_ADDRESS

  - Has no external library/service dependency (hence the name “Standalone”)

- A ManualRendezvous is implemented that:

  - Takes RANK, WORLD_SIZE, MASTER_ADDRESS and MASTER_PORT from the user (i.e. existing behavior in torch)

  - This makes it possible to keep the launcher CLI backwards compatible

The proposal above will ensure that:

- Users DO NOT have to use etcd if they do not want to

- Users who want highly available rendezvous CAN use etcd (but have to set up the etcd server on their own)
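As a rough illustration of the fixed-size case for StandaloneRendezvous, the sketch below assigns ranks by arrival order using an atomic counter in a shared key-value store. `InMemoryStore` is a hypothetical in-process stand-in for c10d::TCPStore (only the atomic `add` is modelled on it), and `standalone_rendezvous` and `join_all` are names invented here. Fault tolerance and the actual design are left to the RFC.

```python
import threading


class InMemoryStore:
    """In-process stand-in for a shared store with an atomic add (assumption)."""

    def __init__(self):
        self._data = {}
        self._cond = threading.Condition()

    def add(self, key, amount):
        """Atomically add amount to an integer counter; return the new value."""
        with self._cond:
            self._data[key] = self._data.get(key, 0) + amount
            self._cond.notify_all()
            return self._data[key]

    def wait_until(self, key, value, timeout):
        """Block until the counter at key reaches value, or time out."""
        with self._cond:
            reached = self._cond.wait_for(
                lambda: self._data.get(key, 0) >= value, timeout
            )
        if not reached:
            raise TimeoutError(f"{key} did not reach {value} in {timeout}s")


def standalone_rendezvous(store, run_id, num_nodes, timeout=5.0):
    """Join a fixed-size group; return (rank, world_size)."""
    key = f"{run_id}/joined"
    rank = store.add(key, 1) - 1  # arrival order decides the rank
    if rank >= num_nodes:
        raise RuntimeError("group is already full")
    store.wait_until(key, num_nodes, timeout)  # barrier: wait for all nodes
    return rank, num_nodes


def join_all(num_nodes):
    """Simulate num_nodes agents joining concurrently (threads, not hosts)."""
    store = InMemoryStore()
    results = []

    def node():
        results.append(standalone_rendezvous(store, "job0", num_nodes))

    threads = [threading.Thread(target=node) for _ in range(num_nodes)]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return sorted(results)
```

In a real deployment the store would live on the host at MASTER_ADDRESS:MASTER_PORT, which is exactly where the single point of failure mentioned above comes from.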

Followups

- RFC on StandaloneRendezvous

- Check with release team that adding etcd-client dependency is OK (esp. for windows builds)

3. Launcher CLI

Background

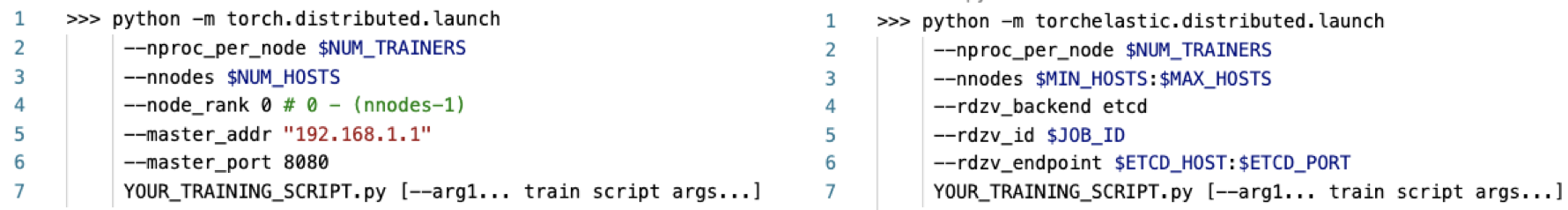

While one can use TorchElastic programmatically, TorchElastic includes an out-of-the-box launcher CLI that is a drop in replacement for torch.distributed.launch. The code-snippet below show launching YOUR_TRAINING_SCRIPT.py with the pt.launcher (left) versus the pet.launcher (right):

Proposal

Replace torch.distributed.launch with torchelastic.distributed.launch. The new launch code-snippet would look like:

    >>> python -m torch.distributed.launch
            --nproc_per_node $NUM_TRAINERS
            --nnodes $NUM_HOSTS
            --rdzv_backend standalone  # (could be etcd if torchelastic is installed)
            --rdzv_id $JOB_ID
            YOUR_TRAINING_SCRIPT.py [--arg1... train script args...]

    -- OR (arguments backwards compatible with existing launcher)

    >>> python -m torch.distributed.launch
            --nproc_per_node $NUM_TRAINERS
            --nnodes $NUM_HOSTS
            # uses ManualRendezvous
            --node_rank 0
            --master_addr "192.168.1.1"
            --master_port 8080
            YOUR_TRAINING_SCRIPT.py [--arg1... train script args...]

The behavior and arguments of the existing launcher are preserved and 100% backwards compatible.
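One way the two invocation styles could coexist in a single parser is sketched below with `argparse`. The flag names are taken from the snippets above; `build_parser`, `select_rendezvous`, and the selection rule itself (explicit `--rdzv_backend` wins, legacy flags or no flags fall back to manual) are assumptions made for illustration, not the proposed implementation.

```python
import argparse


def build_parser():
    """Parser that accepts both the new rdzv flags and the legacy flags."""
    p = argparse.ArgumentParser(prog="launch")
    p.add_argument("--nproc_per_node", type=int, default=1)
    p.add_argument("--nnodes", type=int, default=1)
    # New-style flags.
    p.add_argument("--rdzv_backend", default=None)
    p.add_argument("--rdzv_id", default=None)
    # Legacy flags kept for backwards compatibility.
    p.add_argument("--node_rank", type=int, default=None)
    p.add_argument("--master_addr", default=None)
    p.add_argument("--master_port", type=int, default=None)
    # Everything after the script name is passed through to the script.
    p.add_argument("training_script")
    p.add_argument("training_script_args", nargs=argparse.REMAINDER)
    return p


def select_rendezvous(args):
    """Pick a rendezvous backend name from the parsed arguments."""
    legacy = (args.node_rank, args.master_addr, args.master_port)
    if args.rdzv_backend is not None:
        if any(value is not None for value in legacy):
            raise ValueError("do not mix --rdzv_backend with legacy flags")
        return args.rdzv_backend
    # Legacy flags, or no rendezvous flags at all: behave like the old launcher.
    return "manual"
```

Rejecting mixed usage is one possible design choice; a launcher could also let one set of flags silently take precedence, at the cost of harder-to-debug misconfigurations.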

4. Tutorials

Background

Tutorial

Currently there is a set of “Parallel and Distributed Training” tutorials on pytorch.org (https://pytorch.org/tutorials/) which cover:

What is lacking is a “syllabus” (similar to an academic course) that the user can follow to learn how to work with distributed training in general with PyTorch. That is, while the existing tutorials guide the user through several paradigms, they could be enhanced to teach the user the basic primitives needed to build their own distributed applications with well-defined custom behaviors, topologies, and failure handling.

Documentation

TorchElastic uses gh pages + sphinx, which is the same mechanism that PyTorch uses to document APIs. As long as the top level rst files are added to the main PyTorch docs gh branch everything else should work seamlessly.

Proposal

- Tutorial:

- Edit the existing tutorials to match the upstreamed usage pattern (this may be enough)

- Revamp the existing tutorials to more clearly guide the user across different “levels”

- Documentation:

- Merge rst files from TorchElastic gh-pages branch (https://github.com/pytorch/elastic/tree/gh-pages) to the correct places on the PyTorch gh-pages branch

5. Release

Open Questions

- How should we time the release? PyTorch 1.8? 1.9?

- Do we upstream in phases? (e.g. target some MVP for 1.8 and then fast follow with a 1.8.1 or complete in 1.9)

Followups

- Concrete timeline (with dates that match the 1.8, 1.9 release dates) with phase delineations

6. Open Questions

- Should TSM also be open sourced with TorchElastic?

- Coordinating Kubeflow integration efforts (elastic#117, “[request] Do we have plan to merge Kubernetes part to kubeflow/pytorch-operator?”) with this upstream: once upstreamed, the module paths for torchelastic change and the PyPI packages will be deprecated → breaks pre-upstream integration

- Designate PoCs from:

- Documentation team - to revamp existing tutorials and ensure that docs are generated properly

- Release team - to coordinate release dates and versions

- Distributed PT - code reviews during upstreaming, review StandaloneRendezvous + other RFCs

cc @pietern @mrshenli @pritamdamania87 @zhaojuanmao @satgera @rohan-varma @gqchen @aazzolini @osalpekar @jiayisuse @agolynski @SciPioneer @H-Huang @mrzzd