-

Notifications

You must be signed in to change notification settings - Fork 25.7k

To add RMSProp algorithm documentation #63721

New issue

Have a question about this project? Sign up for a free GitHub account to open an issue and contact its maintainers and the community.

By clicking “Sign up for GitHub”, you agree to our terms of service and privacy statement. We’ll occasionally send you account related emails.

Already on GitHub? Sign in to your account

Conversation

🔗 Helpful links

💊 CI failures summary and remediationsAs of commit add63b0 (more details on the Dr. CI page): ✅ None of the CI failures appear to be your fault 💚

1 job timed out:

❄️ 1 failure tentatively classified as flakybut reruns have not yet been triggered to confirm:

|

db4de6f to

8fa9fdb

Compare

8fa9fdb to

0701462

Compare

Codecov Report

@@ Coverage Diff @@

## master #63721 +/- ##

==========================================

+ Coverage 66.73% 66.81% +0.08%

==========================================

Files 695 695

Lines 90833 90845 +12

==========================================

+ Hits 60618 60700 +82

+ Misses 30215 30145 -70 |

8af380f to

3af2ef4

Compare

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Thanks for the rendered version, that helps a lot!

torch/optim/rmsprop.py

Outdated

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

This was a typo in the previous doc right?

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Yes, actually \alpha generally stands for learning rate, however for the specific case of RMSProp alpha stands for coefficient of square average in the literature (including in PyTorch parameter alpha - smoothing constant).

So, the learning rate scheduler needed to have some other representation which i selected \gamma. We can change it to some other character if you like :)

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

I don't have a specific preference. If there is one character that is not used by any of the optimizers, we should use that one so that we can make all the optimizers consistent.

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Cool, so i have changed everything to \gamma for learning rate :) now, there should be consistency.

torch/optim/rmsprop.py

Outdated

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

What about the momentum, weight decay and centered arguments?

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

I think we can link to the paper if people want to see the original algorithm. But the one we provide should properly reflect what is actually implemented. And all the arguments we support.

torch/optim/rmsprop.py

Outdated

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

This should be f_{t-1} no?

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

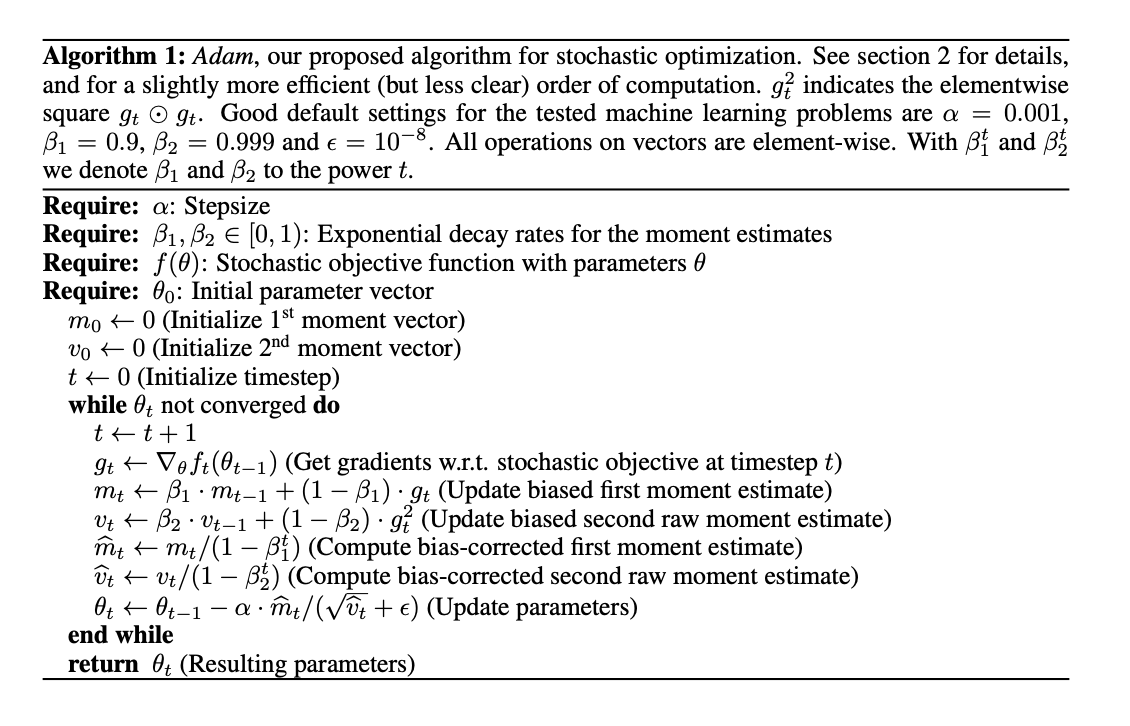

actually in many papers the notation is "f_t" in particular "f_t (\theta_{t-1})"

For example

- in the original paper of Adam in the page 2, in Algorithm 1 https://arxiv.org/pdf/1412.6980.pdf

- in the paper of RAdam page 5, Algorithm 2 : https://arxiv.org/pdf/1412.6980.pdf

To my understanding the idea is we use parameter \theta_{t-1} because the latest computed parameter is at timestep t-1. However, the stochastic gradient is computed at this step -t, that's why notation is f_t instead of f_{t-1}

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Ho that's interesting.. But as long as it is consistent for all optimizers, I'm happy :)

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

it is consistent in PyTorch documentation and papers :)

Thanks for reviewing the PR |

8496590 to

500d5c3

Compare

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

The overall structure looks good.

torch/optim/rmsprop.py

Outdated

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

g^{ave} is missing from here.

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

oh, thanks for pointing out :) and its fixed

torch/optim/rmsprop.py

Outdated

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

The momentum buffer is initialized with 0s in the code. Why is this one 1s?

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

yes , you're right, i modified it

500d5c3 to

e8e56bb

Compare

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

LGTM

|

@iramazanli has imported this pull request. If you are a Facebook employee, you can view this diff on Phabricator. |

1 similar comment

|

@iramazanli has imported this pull request. If you are a Facebook employee, you can view this diff on Phabricator. |

e8e56bb to

add63b0

Compare

|

@iramazanli has imported this pull request. If you are a Facebook employee, you can view this diff on Phabricator. |

|

@iramazanli merged this pull request in aefa2f3. |

It has been discussed before that adding description of Optimization algorithms to PyTorch Core documentation may result in a nice Optimization research tutorial. In the following tracking issue we mentioned about all the necessary algorithms and links to the originally published paper #63236.

In this PR we are adding description of RMSProp to the documentation. For more details, we refer to the paper https://www.cs.toronto.edu/~tijmen/csc321/slides/lecture_slides_lec6.pdf