Alibaba Cloud Model Studio lets you call large language models (LLMs) through OpenAI compatible interfaces or the DashScope SDK.

This topic uses Qwen as an example to guide you through your first API call. You will learn how to:

Obtain an API key

Configure your local development environment

Call the Qwen API

Account settings

Register an account: If you do not have an Alibaba Cloud account, you must first register one.

Select a region: Model Studio provides the International Edition (Singapore) and the Mainland China Edition (Beijing). The two editions differ in console, endpoint, available models, and model pricing. For details, see Models and pricing.

International Edition: Endpoints and model services are located in international regions, excluding Mainland China. The default endpoint is in Singapore.

Mainland China Edition: Endpoints and model services are located in Mainland China. Currently, only the Beijing endpoint is supported.

Complete account verification: This step is required to activate the Mainland China Edition. You can skip it if you activate only the International Edition.

Use your Alibaba Cloud account to complete account verification:

Choose Individual Verification or Upgrade to Enterprise, and click Verify now.

In the Additional Information section of the page that appears, select Yes for Purchase cloud resources or enable acceleration inside the Chinese mainland.

For other items, see Account verification overview.

Activate Model Studio: Use your Alibaba Cloud account to go to the Model Studio console (Singapore or Beijing). After you read and agree to the Terms of Service, Model Studio is automatically activated. If the Terms of Service do not appear, Model Studio is already activated.

Obtain an API key: Go to the Key Management page (Singapore or Beijing) and click Create API Key. You will use this API key to call models.

When you create a new API key, set Workspace to Default Workspace. To use an API key from a sub-workspace, the Alibaba Cloud account owner must authorize the corresponding sub-workspace to use the specific models. For more information, see Authorize a sub-workspace to call models.

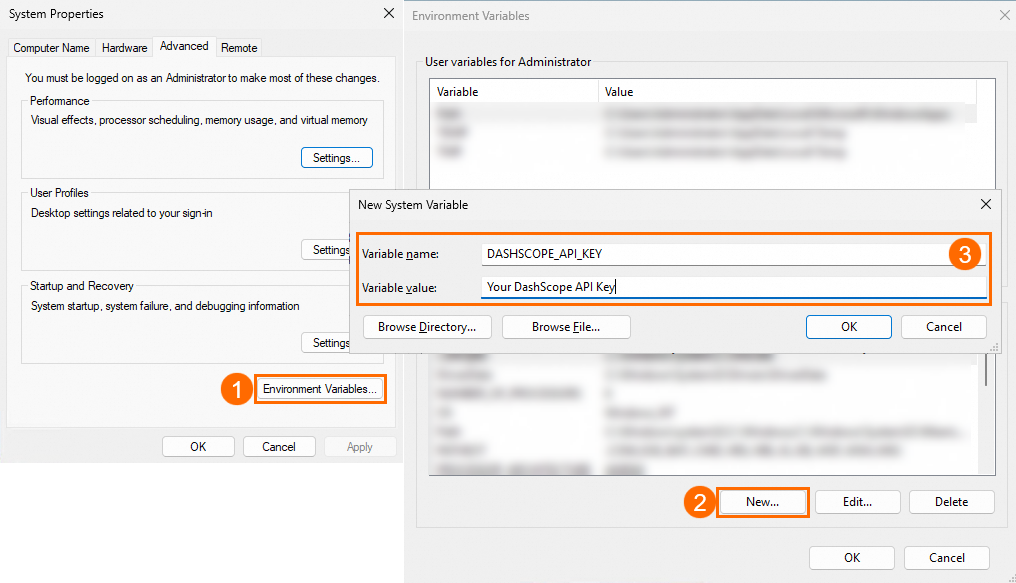

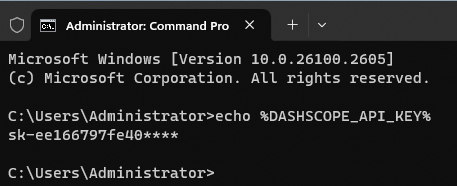

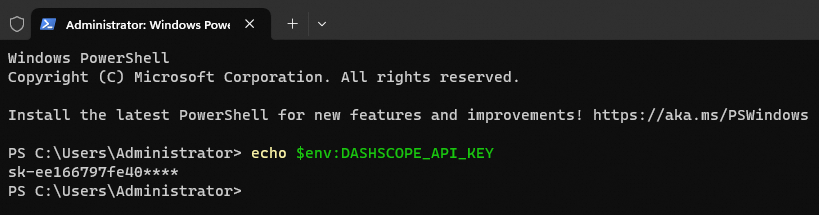
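Because the International Edition and the Mainland China Edition use different endpoints, and an API key works only with the endpoint of the region where it was created, it can help to keep the region choice in one place. The following Python sketch maps each region and interface to the base URLs quoted in this topic's code samples. `ENDPOINTS` and `base_url` are hypothetical names used only for illustration; they are not part of either SDK.

```python
# Base URLs quoted from the code samples in this topic.
# ENDPOINTS and base_url() are illustrative names, not part of any SDK.
ENDPOINTS = {
    # (region, interface) -> base URL
    ("singapore", "openai"): "https://dashscope-intl.aliyuncs.com/compatible-mode/v1",
    ("singapore", "dashscope"): "https://dashscope-intl.aliyuncs.com/api/v1",
    ("beijing", "openai"): "https://dashscope.aliyuncs.com/compatible-mode/v1",
    ("beijing", "dashscope"): "https://dashscope.aliyuncs.com/api/v1",
}


def base_url(region: str, interface: str = "openai") -> str:
    """Return the base URL for a region ("singapore" or "beijing") and an
    interface ("openai" for OpenAI compatible, "dashscope" for DashScope)."""
    key = (region.strip().lower(), interface.strip().lower())
    if key not in ENDPOINTS:
        raise ValueError(f"Unsupported region/interface: {region!r}, {interface!r}")
    return ENDPOINTS[key]


print(base_url("singapore"))
print(base_url("beijing", "dashscope"))
```

Remember to pair the URL with an API key created in the same region.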

Set your API key as an environment variable

Set the API key as an environment variable to avoid hardcoding it in your code. This reduces the risk of leaks.
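A common pattern is to read the key once at startup and stop with a clear message when it is missing. The following Python sketch does this for the `DASHSCOPE_API_KEY` variable used throughout this topic. `load_api_key` is a hypothetical helper, and rejecting the `sk-xxx` placeholder is a convenience check, not SDK behavior. On Linux or macOS, you can set the variable for the current shell with `export DASHSCOPE_API_KEY="<your API key>"` before you run a script.

```python
import os


def load_api_key(var_name: str = "DASHSCOPE_API_KEY") -> str:
    """Read the Model Studio API key from an environment variable.

    Raises RuntimeError with a readable message instead of letting a
    missing key surface later as an authentication error.
    """
    api_key = os.getenv(var_name, "").strip()
    if not api_key:
        raise RuntimeError(f"{var_name} is not set. Set it before running the samples.")
    if api_key == "sk-xxx":
        # "sk-xxx" is the placeholder used in this topic's samples, not a real key.
        raise RuntimeError(f"{var_name} still contains the placeholder value 'sk-xxx'.")
    return api_key


if __name__ == "__main__":
    try:
        key = load_api_key()
        # Print only the last characters so the key does not end up in logs.
        print(f"Found an API key ending in ...{key[-4:]}")
    except RuntimeError as err:
        print(err)
```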

Choose a programming language

Select a programming language or tool that you are familiar with.

Python

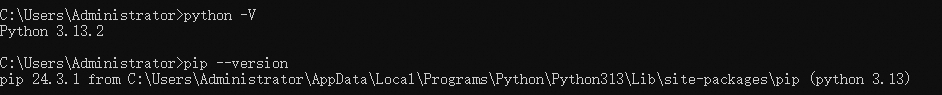

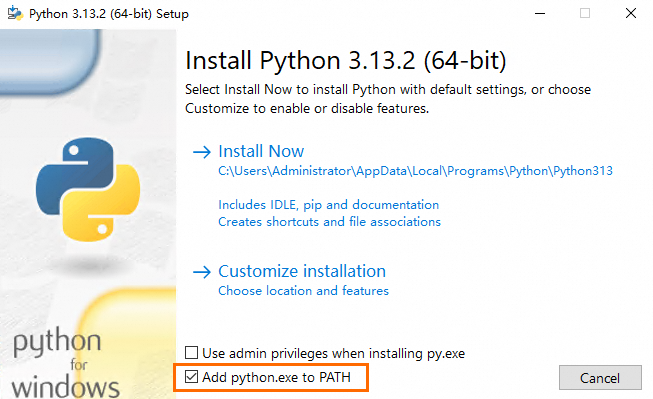

Step 1: Set up the Python environment

Check your Python version

Configure a virtual environment (Optional)

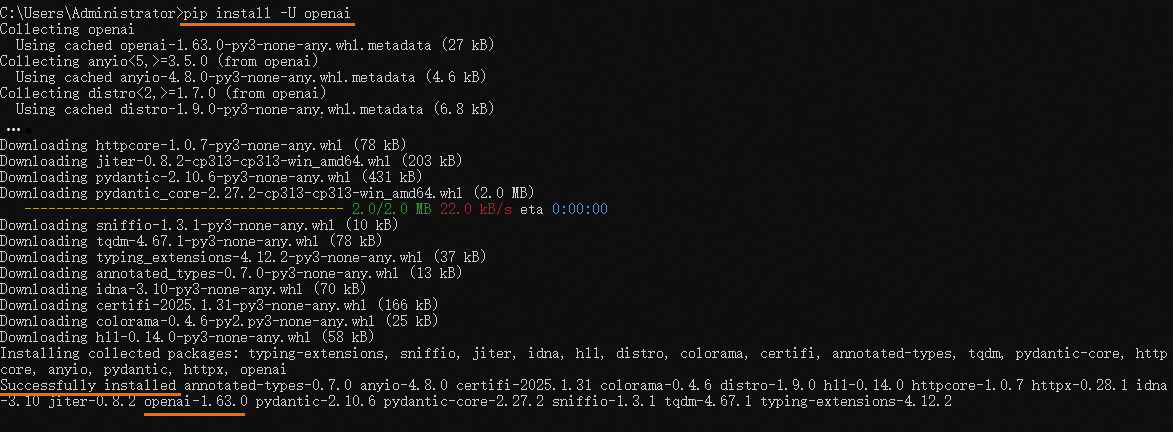

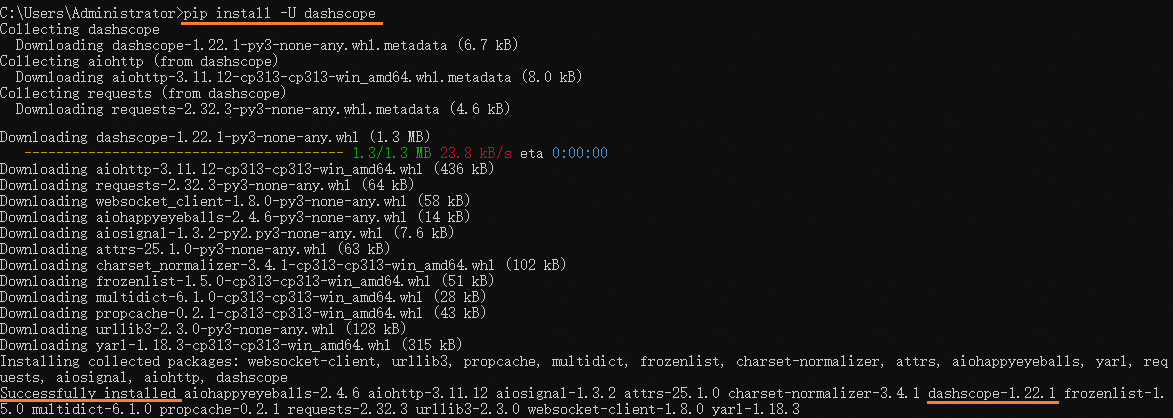

Install the OpenAI Python SDK or DashScope Python SDK
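Before you run the samples, you can confirm that the interpreter you are about to use can see the SDKs. The following Python sketch reports whether the Python version meets a minimum and whether the `openai` and `dashscope` packages are importable. Run it with the same interpreter or virtual environment that you will use for hello_qwen.py. The 3.8 threshold is an assumption for illustration; check the requirements published by each SDK. If a package is reported as missing, install it, for example with `pip install -U openai` or `pip install -U dashscope`.

```python
import importlib.util
import sys

# Assumption: example threshold only. Check each SDK's published requirements.
MIN_PYTHON = (3, 8)


def check_environment(packages=("openai", "dashscope")):
    """Report whether the Python version is new enough and which packages are importable."""
    report = {"python_ok": sys.version_info[:2] >= MIN_PYTHON}
    for name in packages:
        # find_spec returns None when the package is not installed for the
        # interpreter (or virtual environment) that runs this script.
        report[name] = importlib.util.find_spec(name) is not None
    return report


if __name__ == "__main__":
    print(f"Python {sys.version.split()[0]}")
    for item, ok in check_environment().items():
        print(f"{item}: {ok}")
```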

Step 2: Call the LLM API

OpenAI Python SDK

If you have installed Python and the OpenAI Python SDK, you can follow these steps to send your API request.

Create a file named hello_qwen.py.

Copy the following code into hello_qwen.py and save the file.

import os
from openai import OpenAI

try:
    client = OpenAI(
        # The API keys for the Singapore and China (Beijing) regions are different. To obtain an API key, see https://modelstudio.console.alibabacloud.com/?tab=model#/api-key
        # If you have not configured an environment variable, replace the following line with your Model Studio API key: api_key="sk-xxx",
        api_key=os.getenv("DASHSCOPE_API_KEY"),
        # The following URL is for the Singapore region. If you use a model in the China (Beijing) region, replace the URL with: https://dashscope.aliyuncs.com/compatible-mode/v1
        base_url="https://dashscope-intl.aliyuncs.com/compatible-mode/v1",
    )
    completion = client.chat.completions.create(
        model="qwen-plus",
        messages=[
            {'role': 'system', 'content': 'You are a helpful assistant.'},
            {'role': 'user', 'content': 'Who are you?'}
        ]
    )
    print(completion.choices[0].message.content)
except Exception as e:
    print(f"Error message: {e}")
    print("For more information, see https://www.alibabacloud.com/help/en/model-studio/developer-reference/error-code")

Run python hello_qwen.py or python3 hello_qwen.py on the command line. If the "No such file or directory" message appears, specify the full path of the file.

After the command runs, the following output is returned:

I am a large-scale language model developed by Alibaba Cloud. My name is Qwen.
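The completion object returned by the OpenAI Python SDK also reports token usage through `completion.usage` (`prompt_tokens`, `completion_tokens`, `total_tokens`), which is useful for monitoring consumption. The following Python sketch is a hypothetical helper, `describe_completion`, that prints the reply together with those counts. To keep it runnable offline, it is exercised with a stand-in object built from `types.SimpleNamespace`; the token values are copied from the sample HTTP response in this topic, not from a live call. In hello_qwen.py, you could pass the real `completion` object instead.

```python
from types import SimpleNamespace


def describe_completion(completion) -> str:
    """Summarize an object returned by client.chat.completions.create()."""
    reply = completion.choices[0].message.content
    usage = completion.usage  # The SDK types this field as optional, so guard against None.
    if usage is None:
        return reply
    return (f"{reply}\n[tokens] prompt={usage.prompt_tokens} "
            f"completion={usage.completion_tokens} total={usage.total_tokens}")


# Offline stand-in shaped like the SDK object (hypothetical values).
fake = SimpleNamespace(
    choices=[SimpleNamespace(message=SimpleNamespace(content="I am Qwen."))],
    usage=SimpleNamespace(prompt_tokens=22, completion_tokens=16, total_tokens=38),
)
print(describe_completion(fake))
```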

DashScope Python SDK

If you have installed Python and the DashScope Python SDK, you can follow these steps to send your API request.

Create a file named hello_qwen.py.

Copy the following code into hello_qwen.py and save the file.

import os
import dashscope
from dashscope import Generation

# The following URL is for the Singapore region. If you use a model in the China (Beijing) region, replace the URL with: https://dashscope.aliyuncs.com/api/v1
dashscope.base_http_api_url = 'https://dashscope-intl.aliyuncs.com/api/v1'

messages = [
    {'role': 'system', 'content': 'You are a helpful assistant.'},
    {'role': 'user', 'content': 'Who are you?'}
]
response = Generation.call(
    # The API keys for the Singapore and China (Beijing) regions are different. To obtain an API key, see https://modelstudio.console.alibabacloud.com/?tab=model#/api-key
    # If you have not configured an environment variable, replace the following line with your Model Studio API key: api_key = "sk-xxx",
    api_key=os.getenv("DASHSCOPE_API_KEY"),
    model="qwen-plus",
    messages=messages,
    result_format="message"
)

if response.status_code == 200:
    print(response.output.choices[0].message.content)
else:
    print(f"HTTP return code: {response.status_code}")
    print(f"Error code: {response.code}")
    print(f"Error message: {response.message}")
    print("For more information, see https://www.alibabacloud.com/help/en/model-studio/developer-reference/error-code")

Run python hello_qwen.py or python3 hello_qwen.py on the command line.

Note: The command in this example must be run from the directory where the Python file is located. To run it from another location, specify the full file path.

After the command runs, the following output is returned:

I am a large language model from Alibaba Cloud. My name is Qwen.

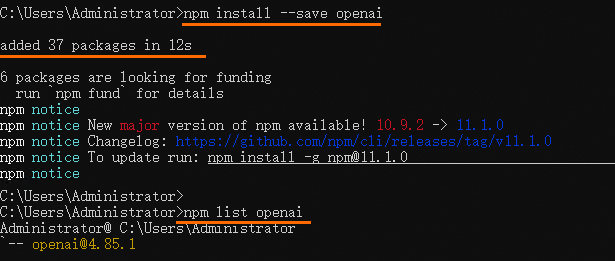

Node.js

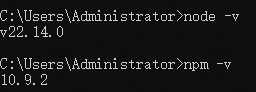

Step 1: Set up the Node.js environment

Check the Node.js installation status

Install the SDK

Step 2: Call the LLM API

Create a hello_qwen.mjs file and copy the following code into it.

import OpenAI from "openai";

try {
    const openai = new OpenAI(
        {
            // The API keys for the Singapore and China (Beijing) regions are different. To obtain an API key, see https://modelstudio.console.alibabacloud.com/?tab=model#/api-key
            // If you have not configured an environment variable, replace the following line with your Model Studio API key: apiKey: "sk-xxx",
            apiKey: process.env.DASHSCOPE_API_KEY,
            // The following URL is for the Singapore region. If you use a model in the China (Beijing) region, replace the URL with: https://dashscope.aliyuncs.com/compatible-mode/v1
            baseURL: "https://dashscope-intl.aliyuncs.com/compatible-mode/v1"
        }
    );
    const completion = await openai.chat.completions.create({
        model: "qwen-plus",
        messages: [
            { role: "system", content: "You are a helpful assistant." },
            { role: "user", content: "Who are you?" }
        ],
    });
    console.log(completion.choices[0].message.content);
} catch (error) {
    console.log(`Error message: ${error}`);
    console.log("For more information, see https://www.alibabacloud.com/help/en/model-studio/developer-reference/error-code");
}

Run the following command on the command line to send an API request:

node hello_qwen.mjs

Note: The command in this example must be run from the directory where the hello_qwen.mjs file is located. To run it from another location, specify the full file path. Make sure that the SDK is installed in the same directory as the hello_qwen.mjs file. Otherwise, a "Cannot find package 'openai' imported from xxx" error is reported.

After the command runs successfully, the following output is returned:

I am a language model from Alibaba Cloud. My name is Qwen.

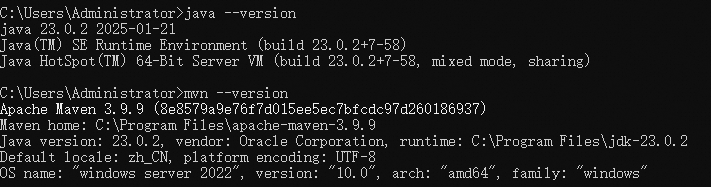

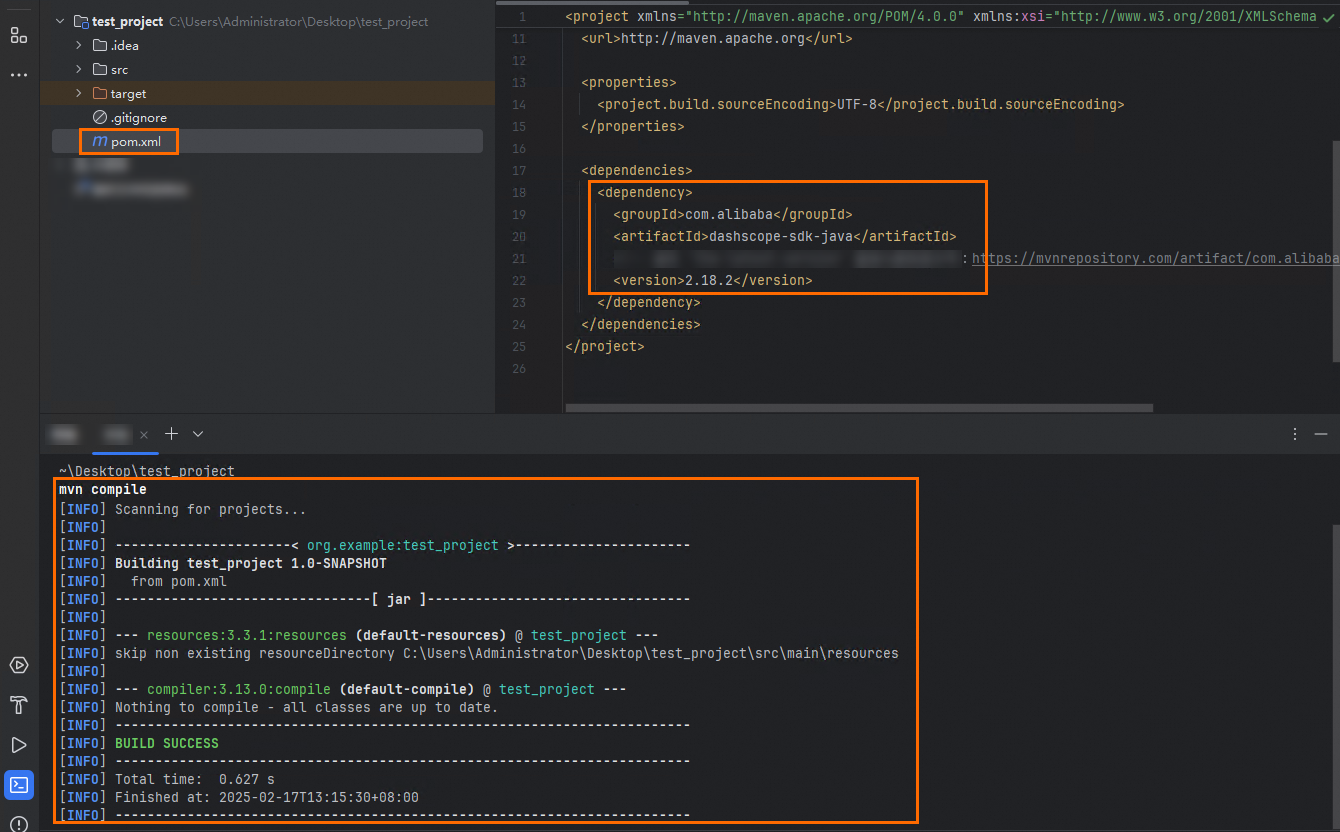

Java

Step 1: Set up the Java environment

Check your Java version

Install the SDK

Step 2: Call the LLM API

Run the following code to call the LLM API.

import java.util.Arrays;
import java.lang.System;
import com.alibaba.dashscope.aigc.generation.Generation;
import com.alibaba.dashscope.aigc.generation.GenerationParam;
import com.alibaba.dashscope.aigc.generation.GenerationResult;
import com.alibaba.dashscope.common.Message;
import com.alibaba.dashscope.common.Role;
import com.alibaba.dashscope.exception.ApiException;
import com.alibaba.dashscope.exception.InputRequiredException;
import com.alibaba.dashscope.exception.NoApiKeyException;
import com.alibaba.dashscope.protocol.Protocol;

public class Main {
    public static GenerationResult callWithMessage() throws ApiException, NoApiKeyException, InputRequiredException {
        // The following URL is for the Singapore region. If you use a model in the China (Beijing) region, replace the URL with: https://dashscope.aliyuncs.com/api/v1
        Generation gen = new Generation(Protocol.HTTP.getValue(), "https://dashscope-intl.aliyuncs.com/api/v1");
        Message systemMsg = Message.builder()
                .role(Role.SYSTEM.getValue())
                .content("You are a helpful assistant.")
                .build();
        Message userMsg = Message.builder()
                .role(Role.USER.getValue())
                .content("Who are you?")
                .build();
        GenerationParam param = GenerationParam.builder()
                // The API keys for the Singapore and China (Beijing) regions are different. To obtain an API key, see https://modelstudio.console.alibabacloud.com/?tab=model#/api-key
                // If you have not configured an environment variable, replace the following line with your Model Studio API key: .apiKey("sk-xxx")
                .apiKey(System.getenv("DASHSCOPE_API_KEY"))
                // For a list of models, see https://www.alibabacloud.com/help/en/model-studio/getting-started/models
                .model("qwen-plus")
                .messages(Arrays.asList(systemMsg, userMsg))
                .resultFormat(GenerationParam.ResultFormat.MESSAGE)
                .build();
        return gen.call(param);
    }

    public static void main(String[] args) {
        try {
            GenerationResult result = callWithMessage();
            System.out.println(result.getOutput().getChoices().get(0).getMessage().getContent());
        } catch (ApiException | NoApiKeyException | InputRequiredException e) {
            System.err.println("Error message: " + e.getMessage());
            System.out.println("For more information, see https://www.alibabacloud.com/help/en/model-studio/developer-reference/error-code");
        }
        System.exit(0);
    }
}

After the code runs, the following output is returned:

I am a large-scale language model developed by Alibaba Cloud. My name is Qwen.

curl

You can call models using OpenAI compatible HTTP or DashScope HTTP. For a list of models, see Models and pricing.

If you have not configured an environment variable, replace -H "Authorization: Bearer $DASHSCOPE_API_KEY" \ with -H "Authorization: Bearer sk-xxx" \.
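If you want to make the same HTTP calls from Python without an SDK, the request consists of a POST with a Bearer token and a JSON body. The following sketch builds, but does not send, that request with the standard library for either interface. The OpenAI compatible body matches the other samples in this topic. The DashScope body layout (messages under `input`, `result_format` under `parameters`) is an assumption to verify against the API reference. `build_request` is a hypothetical helper.

```python
import json
import urllib.request

# Request content copied from the samples in this topic.
MESSAGES = [
    {"role": "system", "content": "You are a helpful assistant."},
    {"role": "user", "content": "Who are you?"},
]

# Singapore URLs. For China (Beijing), use the dashscope.aliyuncs.com host instead.
OPENAI_COMPATIBLE_URL = "https://dashscope-intl.aliyuncs.com/compatible-mode/v1/chat/completions"
DASHSCOPE_URL = "https://dashscope-intl.aliyuncs.com/api/v1/services/aigc/text-generation/generation"


def build_request(api_key: str, interface: str = "openai") -> urllib.request.Request:
    """Build, but do not send, a POST request for one of the two HTTP interfaces."""
    if interface == "openai":
        url = OPENAI_COMPATIBLE_URL
        body = {"model": "qwen-plus", "messages": MESSAGES}
    elif interface == "dashscope":
        url = DASHSCOPE_URL
        # Assumption: DashScope nests messages under "input" and takes
        # result_format under "parameters". Verify in the API reference.
        body = {
            "model": "qwen-plus",
            "input": {"messages": MESSAGES},
            "parameters": {"result_format": "message"},
        }
    else:
        raise ValueError(f"Unknown interface: {interface!r}")
    return urllib.request.Request(
        url,
        data=json.dumps(body).encode("utf-8"),
        headers={"Authorization": f"Bearer {api_key}", "Content-Type": "application/json"},
        method="POST",
    )


# Sending requires a valid key and network access, for example:
#   with urllib.request.urlopen(build_request(os.environ["DASHSCOPE_API_KEY"])) as resp:
#       print(resp.read().decode("utf-8"))
```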

OpenAI compatible HTTP

The URL in the code example is for the Singapore region. If you use a model in the China (Beijing) region, you must replace the URL with https://dashscope.aliyuncs.com/compatible-mode/v1/chat/completions.

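Run the following command to send an API request:

curl -X POST https://dashscope-intl.aliyuncs.com/compatible-mode/v1/chat/completions \
-H "Authorization: Bearer $DASHSCOPE_API_KEY" \
-H "Content-Type: application/json" \
-d '{
    "model": "qwen-plus",
    "messages": [
        {
            "role": "system",
            "content": "You are a helpful assistant."
        },
        {
            "role": "user",
            "content": "Who are you?"
        }
    ]
}'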

After you send the API request, the following response is returned:

{
    "choices": [
        {
            "message": {
                "role": "assistant",
                "content": "I am a large language model from Alibaba Cloud. My name is Qwen."
            },
            "finish_reason": "stop",
            "index": 0,
            "logprobs": null
        }
    ],
    "object": "chat.completion",
    "usage": {
        "prompt_tokens": 22,
        "completion_tokens": 16,
        "total_tokens": 38
    },
    "created": 1728353155,
    "system_fingerprint": null,
    "model": "qwen-plus",
    "id": "chatcmpl-39799876-eda8-9527-9e14-2214d641cf9a"
}

DashScope HTTP

The URL in the code example is for the Singapore region. If you use a model in the China (Beijing) region, you must replace the URL with https://dashscope.aliyuncs.com/api/v1/services/aigc/text-generation/generation.

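Run the following command to send an API request:

curl -X POST https://dashscope-intl.aliyuncs.com/api/v1/services/aigc/text-generation/generation \
-H "Authorization: Bearer $DASHSCOPE_API_KEY" \
-H "Content-Type: application/json" \
-d '{
    "model": "qwen-plus",
    "input": {
        "messages": [
            {
                "role": "system",
                "content": "You are a helpful assistant."
            },
            {
                "role": "user",
                "content": "Who are you?"
            }
        ]
    },
    "parameters": {
        "result_format": "message"
    }
}'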

After you send the API request, the following response is returned:

{
    "output": {
        "choices": [
            {
                "finish_reason": "stop",
                "message": {
                    "role": "assistant",
                    "content": "I am a large language model from Alibaba Cloud. My name is Qwen."
                }
            }
        ]
    },
    "usage": {
        "total_tokens": 38,
        "output_tokens": 16,
        "input_tokens": 22
    },
    "request_id": "87f776d7-3c82-9d39-b238-d1ad38c9b6a9"
}

Other languages

Call the LLM API

Go

package main

import (
	"bytes"
	"encoding/json"
	"fmt"
	"io"
	"log"
	"net/http"
	"os"
)

type Message struct {
	Role    string `json:"role"`
	Content string `json:"content"`
}

type RequestBody struct {
	Model    string    `json:"model"`
	Messages []Message `json:"messages"`
}

func main() {
	// Create an HTTP client.
	client := &http.Client{}
	// Construct the request body.
	requestBody := RequestBody{
		// For a list of models, see https://www.alibabacloud.com/help/en/model-studio/getting-started/models
		Model: "qwen-plus",
		Messages: []Message{
			{
				Role:    "system",
				Content: "You are a helpful assistant.",
			},
			{
				Role:    "user",
				Content: "Who are you?",
			},
		},
	}
	jsonData, err := json.Marshal(requestBody)
	if err != nil {
		log.Fatal(err)
	}
	// Create a POST request. The following URL is for the Singapore region. If you use a model in the China (Beijing) region, replace the URL with: https://dashscope.aliyuncs.com/compatible-mode/v1/chat/completions
	req, err := http.NewRequest("POST", "https://dashscope-intl.aliyuncs.com/compatible-mode/v1/chat/completions", bytes.NewBuffer(jsonData))
	if err != nil {
		log.Fatal(err)
	}
	// Set request headers.
	// The API keys for the Singapore and China (Beijing) regions are different. To obtain an API key, see https://modelstudio.console.alibabacloud.com/?tab=model#/api-key
	// If you have not configured an environment variable, replace the following line with your Model Studio API key: apiKey := "sk-xxx"
	apiKey := os.Getenv("DASHSCOPE_API_KEY")
	req.Header.Set("Authorization", "Bearer "+apiKey)
	req.Header.Set("Content-Type", "application/json")
	// Send the request.
	resp, err := client.Do(req)
	if err != nil {
		log.Fatal(err)
	}
	defer resp.Body.Close()
	// Read the response body.
	bodyText, err := io.ReadAll(resp.Body)
	if err != nil {
		log.Fatal(err)
	}
	// Print the response content.
	fmt.Printf("%s\n", bodyText)
}

PHP

<?php
// The following URL is for the Singapore region. If you use a model in the China (Beijing) region, replace the URL with: https://dashscope.aliyuncs.com/compatible-mode/v1/chat/completions
$url = 'https://dashscope-intl.aliyuncs.com/compatible-mode/v1/chat/completions';
// The API keys for the Singapore and China (Beijing) regions are different. To obtain an API key, see https://modelstudio.console.alibabacloud.com/?tab=model#/api-key
// If you have not configured an environment variable, replace the following line with your Model Studio API key: $apiKey = "sk-xxx";
$apiKey = getenv('DASHSCOPE_API_KEY');
// Set request headers.
$headers = [
    'Authorization: Bearer '.$apiKey,
    'Content-Type: application/json'
];
// Set the request body.
$data = [
    "model" => "qwen-plus",
    "messages" => [
        [
            "role" => "system",
            "content" => "You are a helpful assistant."
        ],
        [
            "role" => "user",
            "content" => "Who are you?"
        ]
    ]
];
// Initialize a cURL session.
$ch = curl_init();
// Set cURL options.
curl_setopt($ch, CURLOPT_URL, $url);
curl_setopt($ch, CURLOPT_POST, true);
curl_setopt($ch, CURLOPT_POSTFIELDS, json_encode($data));
curl_setopt($ch, CURLOPT_RETURNTRANSFER, true);
curl_setopt($ch, CURLOPT_HTTPHEADER, $headers);
// Execute the cURL session.
$response = curl_exec($ch);
// Check for errors.
if (curl_errno($ch)) {
    echo 'Curl error: ' . curl_error($ch);
}
// Close the cURL resource.
curl_close($ch);
// Output the response.
echo $response;
?>

C#

// Explicit using directives so the sample also compiles in projects without implicit usings.
using System;
using System.Net.Http;
using System.Net.Http.Headers;
using System.Text;
using System.Threading.Tasks;

class Program
{
    private static readonly HttpClient httpClient = new HttpClient();

    static async Task Main(string[] args)
    {
        // The API keys for the Singapore and China (Beijing) regions are different. To obtain an API key, see https://modelstudio.console.alibabacloud.com/?tab=model#/api-key
        // If you have not configured an environment variable, replace the following line with your Model Studio API key: string? apiKey = "sk-xxx";
        string? apiKey = Environment.GetEnvironmentVariable("DASHSCOPE_API_KEY");
        if (string.IsNullOrEmpty(apiKey))
        {
            Console.WriteLine("The API key is not set. Make sure that the 'DASHSCOPE_API_KEY' environment variable is set.");
            return;
        }
        // The following URL is for the Singapore region. If you use a model in the China (Beijing) region, replace the URL with: https://dashscope.aliyuncs.com/compatible-mode/v1/chat/completions
        string url = "https://dashscope-intl.aliyuncs.com/compatible-mode/v1/chat/completions";
        // For a list of models, see https://www.alibabacloud.com/help/en/model-studio/getting-started/models
        string jsonContent = @"{
            ""model"": ""qwen-plus"",
            ""messages"": [
                {
                    ""role"": ""system"",
                    ""content"": ""You are a helpful assistant.""
                },
                {
                    ""role"": ""user"",
                    ""content"": ""Who are you?""
                }
            ]
        }";
        // Send the request and get the response.
        string result = await SendPostRequestAsync(url, jsonContent, apiKey);
        // Output the result.
        Console.WriteLine(result);
    }

    private static async Task<string> SendPostRequestAsync(string url, string jsonContent, string apiKey)
    {
        using (var content = new StringContent(jsonContent, Encoding.UTF8, "application/json"))
        {
            // Set request headers.
            httpClient.DefaultRequestHeaders.Authorization = new AuthenticationHeaderValue("Bearer", apiKey);
            httpClient.DefaultRequestHeaders.Accept.Add(new MediaTypeWithQualityHeaderValue("application/json"));
            // Send the request and get the response.
            HttpResponseMessage response = await httpClient.PostAsync(url, content);
            // Process the response.
            if (response.IsSuccessStatusCode)
            {
                return await response.Content.ReadAsStringAsync();
            }
            else
            {
                return $"Request failed: {response.StatusCode}";
            }
        }
    }
}

API reference

For information about the request and response parameters of the Qwen API, see Qwen.

For more information about other models, see Models and pricing.
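The OpenAI compatible and DashScope HTTP interfaces return the same information under different field names: choices at the top level versus under `output`, `prompt_tokens`/`completion_tokens` versus `input_tokens`/`output_tokens`, and `id` versus `request_id`. If your code may call either interface, a small normalizer can map both shapes to one set of keys. The following Python sketch uses the field names from the sample responses in this topic; `normalize_response` is a hypothetical helper, and the API reference remains the authoritative list of fields.

```python
import json

# Trimmed copies of the two sample responses shown in the curl section.
OPENAI_SAMPLE = json.dumps({
    "choices": [{"message": {"role": "assistant",
                             "content": "I am a large language model from Alibaba Cloud. My name is Qwen."},
                 "finish_reason": "stop", "index": 0}],
    "usage": {"prompt_tokens": 22, "completion_tokens": 16, "total_tokens": 38},
    "id": "chatcmpl-39799876-eda8-9527-9e14-2214d641cf9a",
})
DASHSCOPE_SAMPLE = json.dumps({
    "output": {"choices": [{"finish_reason": "stop",
                            "message": {"role": "assistant",
                                        "content": "I am a large language model from Alibaba Cloud. My name is Qwen."}}]},
    "usage": {"total_tokens": 38, "output_tokens": 16, "input_tokens": 22},
    "request_id": "87f776d7-3c82-9d39-b238-d1ad38c9b6a9",
})


def normalize_response(raw: str) -> dict:
    """Map either HTTP response body to one set of field names."""
    data = json.loads(raw)
    # DashScope nests choices under "output"; the OpenAI compatible format does not.
    choices = data["output"]["choices"] if "output" in data else data["choices"]
    usage = data.get("usage", {})
    return {
        "content": choices[0]["message"]["content"],
        "finish_reason": choices[0].get("finish_reason"),
        # DashScope: input_tokens/output_tokens. OpenAI compatible: prompt_tokens/completion_tokens.
        "input_tokens": usage.get("input_tokens", usage.get("prompt_tokens")),
        "output_tokens": usage.get("output_tokens", usage.get("completion_tokens")),
        "total_tokens": usage.get("total_tokens"),
        # DashScope returns request_id; the OpenAI compatible format returns id.
        "request_id": data.get("request_id", data.get("id")),
    }


for sample in (OPENAI_SAMPLE, DASHSCOPE_SAMPLE):
    print(normalize_response(sample))
```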

FAQ

What do I do if a Model.AccessDenied error is returned when I call the LLM API?

A: This error occurs when you use the API key of a sub-workspace that does not have permission to access the applications or models in the default workspace. To use a sub-workspace API key, the Alibaba Cloud account administrator must authorize the sub-workspace to call the required models. For example, to run the samples in this topic, the sub-workspace must be authorized to call the Qwen-Plus model. For more information, see Authorize a sub-workspace to call models.
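When a request fails, you can extract the error code from the response body so that your code recognizes Model.AccessDenied and shows a targeted hint. The DashScope Python SDK sample in this topic prints `response.code` and `response.message`. The following Python sketch assumes, without confirmation from this topic, that DashScope HTTP error bodies carry a top-level `code` field and that OpenAI compatible error bodies nest `code` under `error`. `error_code`, `explain`, and the hint text are hypothetical.

```python
import json
from typing import Optional

# Hypothetical hint table. Only the Model.AccessDenied explanation comes from this topic.
HINTS = {
    "Model.AccessDenied": (
        "The API key belongs to a sub-workspace that is not authorized to call this model. "
        "Use a Default Workspace API key, or ask the account owner to authorize the sub-workspace."
    ),
}


def error_code(raw: str) -> Optional[str]:
    """Return the error code in a failed response body, or None if none is found."""
    try:
        data = json.loads(raw)
    except json.JSONDecodeError:
        return None
    if not isinstance(data, dict):
        return None
    # Assumed OpenAI compatible shape: {"error": {"code": ..., "message": ...}}
    if isinstance(data.get("error"), dict):
        return data["error"].get("code")
    # Assumed DashScope shape: {"code": ..., "message": ..., "request_id": ...}
    return data.get("code")


def explain(raw: str) -> str:
    """Turn a failed response body into a short, human-readable hint."""
    code = error_code(raw)
    if code is None:
        return "No error code found in the response body."
    return HINTS.get(code, f"Error code {code}: see the Model Studio error code reference.")


print(explain('{"code": "Model.AccessDenied", "message": "Access denied."}'))
```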

What to do next

View more models: The sample code uses the qwen-plus model. Alibaba Cloud Model Studio also supports other Qwen models and third-party models such as DeepSeek and Kimi. For more information about supported models and their API references, see Models and pricing.

Learn about advanced usage: The sample code implements a simple Q&A interaction. To learn about advanced uses of the Qwen API, such as streaming output, structured output, and function calling, see the topics in the Overview of text generation models section.

Experience models online: To chat with a model in a dialog box, similar to the experience on Qwen Chat, visit the Playground (Singapore or Beijing).