ECE 595 Computer Network Systems

Peer-to-Peer Systems

�Applications

File download

Location and Retrieval (Napster, Gnutella) Efficient distribution (BitTorrent, EDonkey)

Audio Conference (Skype) Live Internet Broadcasting

�Scaling Problem

Millions of clients server and network meltdown

�P2P System

Leverage the resources of client machines (peers)

Computation, storage, bandwidth

�P2p file-sharing

Quickly grown in popularity

Dozens or hundreds of file sharing applications 35 million American adults use P2P networks -29% of all Internet users in US! Audio/Video transfer now dominates traffic on the Internet

�Whats out there?

Central Flood Supernode flood KaZaa Route

Search

Napster

Gnutella

DHTs

BitTorrent: Being able to transfer parts of a file at a time

�Searching

N1

Key=title Value=MP3 data Publisher

N2

N3

Internet

? N6

Client Lookup(title)

N4

N5

�Framework

Common Primitives:

Join: how to I begin participating? Publish: how do I advertise my file? Search: how to I find a file? Fetch: how to I retrieve a file?

�Next Topic...

Centralized Database

Napster

Query Flooding

Gnutella

Intelligent Query Flooding

KaZaA

Swarming

BitTorrent

Structured Overlay Routing

Distributed Hash Tables

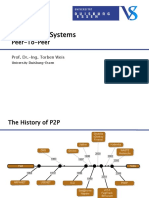

�Napster: History

1999: Sean Fanning launches Napster Peaked at 1.5 million simultaneous users Jul 2001: Napster shuts down

10

�Napster: Overiew

Centralized Database:

Join: on startup, client contacts central server Publish: reports list of files to central server Search: query the server => return someone that stores the requested file Fetch: get the file directly from peer

11

�Napster: Publish

insert(X, 123.2.21.23) ...

Publish

I have X, Y, and Z! 123.2.21.23

12

�Napster: Search

123.2.0.18

search(A) --> 123.2.0.18 Fetch

Query

Reply

Where is file A?

13

�Napster: Discussion

Pros:

Simple Search scope is O(1)

Cons:

Server may not scale: Server maintains O(N) State Server does all processing Single point of failure

14

�Next Topic...

Centralized Database

Napster

Query Flooding

Gnutella

Intelligent Query Flooding

KaZaA

Swarming

BitTorrent

Structured Overlay Routing

Distributed Hash Tables

15

�Gnutella: History

Released in 2000 Soon many other clients: Bearshare, Morpheus, LimeWire, etc. In 2001, many protocol enhancements including ultrapeers

16

�Gnutella: Overview

Query Flooding:

Join: on startup, client contacts a few other nodes; these become its neighbors Publish: no need Search: ask neighbors, who ask their neighbors, and so on... when/if found, reply to sender.

TTL limits propagation�

Fetch: get the file directly from peer

17

�Gnutella: Search

I have file A. I have file A.

Reply

Query

Where is file A?

18

�Gnutella

Searching by flooding: If you dont have the file you want, query neighbors If they dont have it, they contact their neighbors TTL ensures flooding is controlled Avoid looping: nodes store recent query ids.

No looping but packets may be received twice.

Query response to neighbor requesting query

Forwards it to node it received query from.

19

�Constructing Topology

Node must know one initial bootstrap node. Through query responses, learns about other nodes Can attach itself to some of these. Keeps track of whether neighbor is alive through ping messages.

20

�Gnutella: Discussion

Pros:

Fully de-centralized Search cost distributed Processing @ each node permits powerful search semantics

Cons:

Search scope is O(N) Nodes leave often, network unstable

TTL-limited search works well for popular files

May not work well for files not popular.

21

�KaZaA: History

Created in 2001, by Dutch company Single network called FastTrack used by other clients as well: Morpheus, giFT, etc. Eventually protocol changed so other clients could no longer talk to it Popular file sharing network today

22

�KaZaA: Overview

Smart Query Flooding:

Join: on startup, client contacts a supernode ... may at some point become one itself Publish: send list of files to supernode Search: send query to supernode, supernodes flood query amongst themselves. Fetch: get the file directly from peer(s); can fetch simultaneously from multiple peers

23

�KaZaA: Network Design

Super Nodes

24

�KaZaA: File Insert

insert(X, 123.2.21.23) ...

Publish

I have X! 123.2.21.23

25

�KaZaA: File Search

search(A) --> 123.2.22.50

123.2.22.50 Query Replies

search(A) --> 123.2.0.18

Where is file A?

123.2.0.18

26

�Why Superpeers?

Pros:Query consolidation

Many connected nodes may have only a few files Propagating a query to a sub-node would take more b/w than answering it yourself

Cons: More load on superpeers. Selecting superpeers

How long youve been on is a good predictor of how long youll be around.

27

�KaZaA: Discussion

Pros:

Tries to take into account node heterogeneity:

Bandwidth Host Computational Resources Host Availability (?)

Cons:

Still no real guarantees on search scope or search time

Similar behavior to gnutella, but better.

28

�BitTorrent: History

In 2002, B. Cohen released BitTorrent Key Motivation:

Popularity exhibits temporal locality (Flash Crowds) E.g., Slashdot effect, CNN on 9/11, new movie/game release

Focused on Efficient Fetching, not Searching:

Distribute the same file to all peers Single publisher, multiple downloaders

Has some real publishers

29

�BitTorrent: Distribute chunks at a time

1 2 3 4

3 3 4

Service capacity doubles!

30

�BitTorrent: Overview

A overlay per file Obtain torrent file

File, length, hashing information, url of tracker

Centralized Tracker Server

Join: contact centralized tracker server, get a list of peers. Helps peers keep track of each other

Peer: Seed and leecher

Seed: already obtained full file.

Files broken into segments: segment hash Get segments from peers

31

�Key Ideas

Split the file Form mesh-based overlay Transfer by file blocks

Overlap downloading with uploading (Help each other while downloading) Overlap downloading with downloading Receive the all blocks out-of-order

Key differences from Napster:

Use of chunks instead of entire files Anti-freeloading mechanisms

32

�BitTorrent: Publish/Join

Tracker

33

�BitTorrent: Fetch

34

�BitTorrent: Sharing Strategy

Employ Tit-for-tat sharing strategy

A is downloading from some other people

A will let the fastest N of those download from him

Be optimistic: occasionally let freeloaders download

Otherwise no one would ever start! Also allows you to discover better peers to download from when they reciprocate

35

�Operations

Bootstrap:

New peer P. Contacts tracker. Gets partial list of other peers in the system.

Connection Establishment

Each peer establishes connections with other peers Peers exchange bitmaps reporting which pieces they have Updated as and when new pieces downloaded.

36

�Maintaining block diversity

Diversity

1 2 3 4

No Diversity

1 2 3 4

1 3 3 4 4

1 ??

??

Distributed coordination: To select block to download: Rarest block first

37

�Guard against free-riding

Free-riding: (Selfish, harmful to system)

downloading without uploading

Solution: Tit-for-tat:

If you dont upload to me, Im not uploading to you! Free-rider is punished How much you give is how much you get

38

�BitTorrent: Summary

Pros:

Works reasonably well in practice Gives peers incentive to share resources; avoids freeloaders

Cons:

Central tracker server needed to bootstrap swarm Not clear how effective tit-for-tat is.

39

�Next Topic...

Centralized Database

Napster

Query Flooding

Gnutella

Intelligent Query Flooding

KaZaA

Swarming

BitTorrent

Unstructured Overlay Routing

Freenet

Structured Overlay Routing

Distributed Hash Tables (DHT)

40

�Distributed Hash Tables (DHTs)

Gnutella: flooding oriented search

Expensive for items not as popular.

DHTs

Developed in academic community Ensures efficient search/lookup without flooding Makes some things harder

Fuzzy queries / full-text search / etc.

Deployment

Adopted by recent versions of BitTorrent, and Emule (Kad)

41

�Distributed Hash Tables

Nodes in system form a distributed data structure

Clever structure Convenient to locate objects Different than Gnutella: structure can be arbitrary

Each node has a unique id.

Usually hash of nodes IP address

Each object has a unique id.

Usually hash of filename. Map object to node nearest it in id space

42

�DHT: Overview

Structured Overlay Routing:

Join: On startup, contact a bootstrap node and integrate yourself into the distributed data structure; get a node id Publish: Route publication for file id toward a close node id along the data structure Search: Route a query for file id toward a close node id. Data structure guarantees that query will meet the publication. Fetch: Two options:

Publication contains actual file => fetch from where query stops Publication says I have file X => query tells you 128.2.1.3 has X, use IP routing to get X from 128.2.1.3

43

�Structured Networks

Distributed Hash Tables (DHTs) Hash table interface: put(key,item), get(key) O(log n) hops

K I

(K1,I1)

K I

K I K I

I1

K I

K I

K I

put(K1,I1)

K I K I

get (K1)