Foundations of Deep Learning

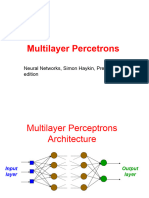

The document defines and explains key concepts in deep learning: representation learning, deep learning itself, traditional versus representation-learning pipelines, multilayer perceptrons, activation functions, forward propagation, optimization by gradient descent, empirical risk minimization, stochastic gradient descent, loss functions, and gradient computation with backpropagation. It explains how deep learning models learn representations directly from raw data, end to end, through multiple levels of nonlinear transformations.
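The training loop in the summary above can be sketched as follows. This is a minimal example in plain Python (standard library only) and is not taken from the document. The following choices are all assumptions made for illustration: the one-hidden-layer architecture, the tanh activation, the squared-error loss, the learning rate, and the toy data. Every function name is hypothetical. The sketch shows four steps:

* forward propagation through a small multilayer perceptron;
* the empirical risk, computed as the average per-example loss;
* gradients computed by backpropagation;
* stochastic gradient descent that updates on one example at a time.

```python
import math
import random

# Hypothetical tiny MLP: n_in inputs -> n_hidden tanh units -> 1 linear output.
# Architecture, init scale, and loss are illustrative assumptions.

def init_params(n_in, n_hidden, seed=0):
    rng = random.Random(seed)
    W1 = [[rng.gauss(0.0, 0.5) for _ in range(n_in)] for _ in range(n_hidden)]
    b1 = [0.0] * n_hidden
    w2 = [rng.gauss(0.0, 0.5) for _ in range(n_hidden)]
    return {"W1": W1, "b1": b1, "w2": w2, "b2": 0.0}

def forward(p, x):
    # Forward propagation: affine map, tanh nonlinearity, then linear output.
    z = [sum(w * xj for w, xj in zip(row, x)) + b for row, b in zip(p["W1"], p["b1"])]
    h = [math.tanh(zi) for zi in z]
    y_hat = sum(w * hi for w, hi in zip(p["w2"], h)) + p["b2"]
    return h, y_hat

def loss(p, x, y):
    # Assumed per-example loss: L = 0.5 * (y_hat - y)^2
    _, y_hat = forward(p, x)
    return 0.5 * (y_hat - y) ** 2

def backward(p, x, y):
    # Backpropagation: apply the chain rule from the output back to W1.
    h, y_hat = forward(p, x)
    d_out = y_hat - y                      # dL/dy_hat
    g_w2 = [d_out * hi for hi in h]        # dL/dw2_i = d_out * h_i
    # dL/dz_i = d_out * w2_i * tanh'(z_i), where tanh'(z) = 1 - tanh(z)^2
    d_z = [d_out * w * (1.0 - hi * hi) for w, hi in zip(p["w2"], h)]
    g_W1 = [[dz * xj for xj in x] for dz in d_z]
    return {"W1": g_W1, "b1": d_z, "w2": g_w2, "b2": d_out}

def sgd_step(p, g, lr):
    # Gradient descent update: theta <- theta - lr * grad
    p["W1"] = [[w - lr * gw for w, gw in zip(r, gr)] for r, gr in zip(p["W1"], g["W1"])]
    p["b1"] = [b - lr * gb for b, gb in zip(p["b1"], g["b1"])]
    p["w2"] = [w - lr * gw for w, gw in zip(p["w2"], g["w2"])]
    p["b2"] = p["b2"] - lr * g["b2"]

def empirical_risk(p, data):
    # Empirical risk: average loss over the training set.
    return sum(loss(p, x, y) for x, y in data) / len(data)

def train(data, n_hidden=8, lr=0.05, epochs=200, seed=0):
    rng = random.Random(seed)
    p = init_params(len(data[0][0]), n_hidden, seed)
    history = [empirical_risk(p, data)]
    for _ in range(epochs):
        order = list(range(len(data)))
        rng.shuffle(order)                 # stochastic: visit examples in random order
        for i in order:                    # one example per update (plain SGD)
            x, y = data[i]
            sgd_step(p, backward(p, x, y), lr)
        history.append(empirical_risk(p, data))
    return p, history
```

A quick way to try it is to build toy data with `data = [((i / 10.0,), math.sin(i / 10.0)) for i in range(-30, 31)]` and call `p, history = train(data)`. The list `history` records the empirical risk after each epoch, and that risk should generally fall. You can also confirm the backpropagation formulas cheaply by comparing `backward` against central finite differences of `loss`.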

Uploaded by Nelson Ubaldo Quispe M

Copyright © All Rights Reserved
