EU IT Salary Prediction Analysis

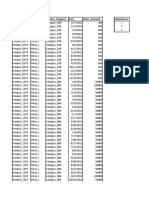

This document analyzes a dataset of salary information for IT employees in the EU, with the goal of predicting salary from factors such as experience, location, skills, and company details. It covers data cleaning, outlier removal, variable selection, and a split into training and test sets, then builds linear regression models that relate salary to experience and other variables. Model performance is evaluated with metrics such as RMSE.
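The workflow summarized above (outlier removal, train/test split, linear regression, RMSE) can be sketched roughly as follows. This is a minimal illustration and not the document's actual code: the data is synthetic, the column names `experience` and `salary` are assumptions, the IQR rule is one common choice of outlier filter, and only a single predictor is used.

```python
import math
import random
import statistics


def remove_outliers_iqr(rows, key, k=1.5):
    """Drop rows whose `key` value lies outside [Q1 - k*IQR, Q3 + k*IQR]."""
    values = sorted(r[key] for r in rows)
    q1, _, q3 = statistics.quantiles(values, n=4)
    iqr = q3 - q1
    low, high = q1 - k * iqr, q3 + k * iqr
    return [r for r in rows if low <= r[key] <= high]


def train_test_split(rows, test_fraction=0.2, seed=0):
    """Shuffle a copy of the rows and return (train, test)."""
    shuffled = list(rows)
    random.Random(seed).shuffle(shuffled)
    n_test = max(1, int(len(shuffled) * test_fraction))
    return shuffled[n_test:], shuffled[:n_test]


def fit_simple_linear(xs, ys):
    """Ordinary least squares for y = slope * x + intercept."""
    mean_x = statistics.fmean(xs)
    mean_y = statistics.fmean(ys)
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    return slope, mean_y - slope * mean_x


def rmse(actual, predicted):
    """Root mean squared error between two equal-length sequences."""
    squared = [(a - p) ** 2 for a, p in zip(actual, predicted)]
    return math.sqrt(statistics.fmean(squared))


def demo():
    # Synthetic data only: made-up salaries that grow with experience,
    # plus two deliberately extreme rows for the outlier filter to catch.
    rng = random.Random(42)
    rows = [
        {"experience": x, "salary": 40000 + 3000 * x + rng.gauss(0, 5000)}
        for x in (rng.randint(0, 20) for _ in range(200))
    ]
    rows += [{"experience": 5, "salary": 900000},
             {"experience": 3, "salary": 750000}]

    cleaned = remove_outliers_iqr(rows, "salary")
    train, test = train_test_split(cleaned)
    slope, intercept = fit_simple_linear(
        [r["experience"] for r in train], [r["salary"] for r in train]
    )
    predictions = [slope * r["experience"] + intercept for r in test]
    error = rmse([r["salary"] for r in test], predictions)
    print(f"slope={slope:.1f} intercept={intercept:.1f} test RMSE={error:.1f}")


if __name__ == "__main__":
    demo()
```

Evaluating RMSE on the held-out test rows, rather than on the training rows, gives an estimate of how the fitted line behaves on salaries it has not seen.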

Uploaded by Venkatesh m

Copyright © All Rights Reserved
