RED HAT CEPH STORAGE CHEAT SHEET

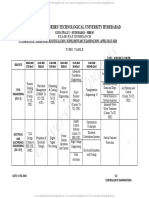

Summary of Certain Operations-oriented Ceph Commands

Note: Certain command outputs in the Example column were edited for better readability.

Monitoring and Health

| Command | Purpose | Example |
| --- | --- | --- |
| `ceph -s` | Show status summary | `# ceph -s`<br>`cluster 1c528497-24e0-4af7-bb18-d43a8d31cecc`<br>`health HEALTH_OK` |
| `ceph -w` | Watch ongoing status | |
| `rados df` | Show per pool and total usage | `# rados df`<br>`pool_name rbd`<br>`used 700M`<br>`objects 176`<br>`clones 0`<br>`copies 528`<br>`missing_on_primary 0`<br>`unfound 0`<br>`degraded 0`<br>`rd_ops 0`<br>`rd 0`<br>`wr_ops 351`<br>`wr 700M`<br>`total_objects 176`<br>`total_used 9220M`<br>`total_avail 4824G`<br>`total_space 4833G` |
| `ceph df` | Show disk usage overview, global and per pool | `# ceph df`<br>`GLOBAL`<br>`size 11172G`<br>`avail 11172G`<br>`raw used 501M`<br>`%raw 0`<br>`POOLS`<br>`name rbd`<br>`ID 0`<br>`used 0`<br>`%used 0`<br>`max avail 3724G`<br>`objects 0` |
| `ceph health detail` | Show details about health issues | `# ceph health detail`<br>`HEALTH_WARN mon.ceph4 low disk space; mon.ceph5 low disk space; mon.ceph6 low disk space`<br>`mon.ceph4 low disk space -- 18% avail`<br>`mon.ceph5 low disk space -- 22% avail`<br>`mon.ceph6 low disk space -- 16% avail` |
| `ceph osd df tree` | Show disk usage linked to the CRUSH tree | `# ceph osd df tree`<br>`ID -1 -2 0 3 4 8`<br>`weight 10.91034 3.63678 0.90919 0.90919 0.90919 0.90919`<br>`reweight - - 1.00000 1.00000 1.00000 1.00000`<br>`size 11172G 3724G 931G 931G 931G 931G`<br>`use 501M 168M 44760k 42752k 42804k 42616k`<br>`avail 11172G 3724G 931G 931G 931G 931G`<br>`%use 0.00 0.00 0.00 0.00 0.00 0.00`<br>`var 1.00 1.01 1.05 1.0 1.0 1.0`<br>`pgs 0 0 69 63 62 62`<br>`type name root default host ceph3 osd.0 osd.3 osd.4 osd.8` |
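The health commands above are often wrapped in a small monitoring check. The following is a minimal sketch, assuming the first word of the `ceph health` output is the overall status, as in the `HEALTH_OK` and `HEALTH_WARN` examples in the table. The function name `health_to_code` and the 0/1/2 mapping are illustrative assumptions, not part of Ceph.

```shell
#!/bin/sh
# health_to_code: hypothetical helper (not a Ceph command).
# Reads `ceph health` or `ceph health detail` output on stdin and prints
# 0 for HEALTH_OK, 1 for HEALTH_WARN, and 2 for anything else.
health_to_code() {
    # Only the first word of the first line carries the overall status.
    status=$(awk 'NR == 1 { print $1 }')
    case "$status" in
        HEALTH_OK)   echo 0 ;;
        HEALTH_WARN) echo 1 ;;
        *)           echo 2 ;;
    esac
}
```

On a cluster node it could be used as `ceph health | health_to_code`, with the printed number passed to whatever alerting tool is in place.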

Working With Pools and OSDs

Subcommands of the "ceph osd" command

| Command | Purpose | Example |
| --- | --- | --- |
| `ceph osd tree` | Lists hosts, their OSDs, up and down status, OSD weight, and local reweight | `# ceph osd tree`<br>`ID -1 -10 0 3 6`<br>`class hdd hdd hdd`<br>`weight 4.72031 2.71317 0.90439 0.90439 0.90439`<br>`type name root default host osd.0 osd.3 osd.6`<br>`status up up up`<br>`reweight 1.00000 1.00000 1.00000 1.00000 1.00000`<br>`PRI-AFF 1.00000 1.00000 1.00000 1.00000 1.00000` |
| `ceph osd stat` | Print a summary of the OSD map | `# ceph osd stat`<br>`9 osds: 9 up, 9 in` |
| `ceph osd deep-scrub <id>` | Instruct Ceph to perform a deep scrubbing process (consistency check) on an OSD. | `# ceph osd deep-scrub osd.0`<br>`osd.0 instructed to deep-scrub` |
| `ceph osd find <id>` | Display location of a given OSD (host name, port, and CRUSH details) | `# ceph osd find 0`<br>`{`<br>`"osd": 0,`<br>`"ip": "10.12.xxx.xxx:6804/61412",`<br>`"crush_location": {`<br>`"host": "ceph4",`<br>`"root": "default"`<br>`}` |
| `ceph osd map <pool> <object>` | Locate an object from a pool. Displays primary and replica placement groups for the object | `# ceph osd map rbd benchmark_data_ceph1_268097_object865`<br>`osdmap e115 pool 'rbd' (3) object 'benchmark_data_ceph1_268097_object865' -> pg 3.c9f193ff (3.7f) -> up ([4,6,8], p4) acting ([4,6,8], p4)` |
| `ceph osd metadata <id>` | Display OSD metadata (host and host info) | `# ceph osd metadata 0`<br>`{`<br>`"id": 0,`<br>`"arch": "x86_64",`<br>`"back_addr": "10.12.xxx.xxx:6805/61412",`<br>`"back_iface": "eno1",` |
| `ceph osd out <id>` | Take an OSD out of the cluster, rebalancing its data to other OSDs. | `# ceph osd out 0`<br>`marked out osd.0` |
| `ceph osd pool create <pool-name> <pg-number> <pgp-number>` | Create a new replicated pool with a number of placement groups. Use the Ceph Placement Groups (PGs) per Pool Calculator to determine the number of placement groups. Also, see Pools in the Administration Guide. | `# ceph osd pool create test 64 64`<br>`pool 'test' created` |
| `ceph osd pool delete <pool-name> <pool-name> --yes-i-really-really-mean-it` | Delete a pool. Specify the pool name twice, followed by the confirmation flag. Be careful when deleting pools because this action cannot be reverted. | `# ceph osd pool delete test test --yes-i-really-really-mean-it`<br>`pool 'test' removed` |
| `ceph osd pool get <pool> all` | Get all parameters for a pool. Specify the name of the pool to query. | `# ceph osd pool get rbd all`<br>`size: 3`<br>`min_size: 2...` |
| `ceph osd pool ls detail` | List pools and details of pools. | `# ceph osd pool ls detail`<br>`pool 1 'rbd' replicated size 3 min_size 2 crush_rule 0 object_hash rjenkins pg_num 128 pgp_num 128 last_change 65 flags hashpspool stripe_width 0` |
| `ceph osd pool set <pool> <parameter> <value>` | Set a pool parameter, for example, “size”, “min_size”, or “pg_num” | `# ceph osd pool set rbd min_size 1`<br>`set pool 1 min_size to 1` |
| `ceph osd reweight <id> <weight>` | Temporarily override weight for an OSD. | `# ceph osd reweight 0 0.5 # use 50% of default space on osd.0` |
| `ceph osd reweight-by-utilization <percent>` | Change the weight of OSDs based on their utilization. See Set an OSD’s Weight by Utilization in the Storage Strategies Guide. | `# ceph osd reweight-by-utilization 110`<br>`moved 7 / 576 (1.21528%)`<br>`avg 64`<br>`stddev 26.7623 -> 26.8328 (expected baseline 7.54247)`<br>`min osd.1 with 18 -> 18 pgs (0.28125 -> 0.28125 * mean)`<br>`max osd.4 with 102 -> 102 pgs (1.59375 -> 1.59375 * mean)` |
| `ceph osd scrub <id>` | Initiate a "light" scrub on an OSD. | `# ceph osd scrub osd.0`<br>`osd.0 instructed to scrub` |
| `ceph osd test-reweight-by-utilization <percent>` | Test how setting an OSD weight based on utilization would affect data movement. | `# ceph osd test-reweight-by-utilization 110`<br>`no change`<br>`moved 3 / 576 (0.520833%)`<br>`avg 64` |
| `ceph osd set <flag>` | Set various flags on the OSD subsystem. See Overrides in the Administration Guide. | `# ceph osd set noout` |
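The one-line `ceph osd stat` summary is easy to check from a script. The following is a minimal sketch, assuming the output has the shape shown in the table (`9 osds: 9 up, 9 in`); other Ceph releases may print extra fields, in which case the field positions would need adjusting. The function name `osd_stat_check` and the words it prints are illustrative assumptions.

```shell
#!/bin/sh
# osd_stat_check: hypothetical helper (not a Ceph command).
# Reads a line such as "9 osds: 9 up, 9 in" on stdin and prints "ok" when
# the total, up, and in counts all match, otherwise "attention".
osd_stat_check() {
    awk 'NR == 1 {
        # Fields: $1 = total OSDs, $3 = OSDs up, $5 = OSDs in.
        if ($1 == $3 && $1 == $5) print "ok"; else print "attention"
    }'
}
```

On a cluster node it could be used as `ceph osd stat | osd_stat_check`. An "attention" result is a cue to look at `ceph osd tree` for the OSDs that are down or out.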

Working With Placement Groups

Subcommands of the "ceph pg" command

| Command | Purpose | Example |
| --- | --- | --- |
| `ceph pg <pg-id> query` | Query statistics and other metadata about a placement group. Often valuable for troubleshooting, for example the state of replicas, past events, and more. | `# ceph pg 1.c query`<br>`{`<br>`"state": "active+clean",`<br>`"snap_trimq": "[]",`<br>`"epoch": 72,`<br>`"up": [`<br>`7,`<br>`3,`<br>`8`<br>`],` |
| `ceph pg <pg-id> list_missing` | List unfound objects. The “ceph pg <pg-id> query” command lists more information about which OSDs contain unfound objects. See Unfound Objects in the Troubleshooting Guide. | `# ceph pg 1.c list_missing`<br>`{`<br>`"num_missing": 0,`<br>`"num_unfound": 0,`<br>`"objects": [],` |
| `ceph pg dump [--format <format>]` | Show statistics and metadata for all placement groups including information about scrub processes, last replication, current OSDs, blocking OSDs, and so on. Format can be plain or json. | `# ceph pg dump`<br>`dumped all`<br>`version 1409550`<br>`stamp 2017-10-24 08:51:54.763931`<br>`last_osdmap_epoch 0`<br>`last_pg_scan 0`<br>`full_ratio 0`<br>`nearfull_ratio 0…` |
| `ceph pg dump_stuck inactive \| unclean \| stale \| undersized \| degraded` | Show stuck placement groups (PGs). See Identifying Troubled Placement Groups in the Administration Guide. | `# ceph pg dump_stuck unclean`<br>`ok`<br>`pg_stat 3.6 3.6`<br>`state active+undersized+degraded active+undersized+degraded`<br>`up [7,8] [8,4]`<br>`up_primary 7 8`<br>`acting [7,8] [8,4]`<br>`acting_primary 7 8` |
| `ceph pg scrub <pg-id>` | Initiate the scrub process on the placement group's contents. | `# ceph pg scrub 3.0`<br>`instructing pg 3.0 on osd.1 to scrub` |
| `ceph pg deep-scrub <pg-id>` | Initiate the deep scrub process on the placement group's contents. | `# ceph pg deep-scrub 3.0`<br>`instructing pg 3.0 on osd.1 to deep-scrub` |
| `ceph pg repair <pg-id>` | Fix inconsistent placement groups. See Repairing Inconsistent Placement Groups in the Troubleshooting Guide. | `# ceph pg repair 3.0`<br>`instructing pg 3.0 on osd.1 to repair` |
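When many placement groups are stuck, it can help to pull just the PG IDs out of the `ceph pg dump_stuck` listing before querying them one by one. The following is a minimal sketch, assuming the unedited output prints one PG per row with the PG ID in the first column and the state in the second (the example in this cheat sheet was edited and transposed for readability). The function name `degraded_pgs` is an illustrative assumption.

```shell
#!/bin/sh
# degraded_pgs: hypothetical helper (not a Ceph command).
# Reads row-oriented `ceph pg dump_stuck` output on stdin and prints the ID
# of every placement group whose state contains "degraded".
degraded_pgs() {
    # A PG ID looks like <pool-number>.<hex>, for example 3.6 or 1.c.
    awk '$1 ~ /^[0-9]+\.[0-9a-f]+$/ && $2 ~ /degraded/ { print $1 }'
}
```

The resulting IDs could then be passed to `ceph pg <pg-id> query` for a closer look at each group.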

Interaction With Individual Daemons

Subcommands of the "ceph daemon <daemon-name>" command. These commands interact with individual daemons on the current host. Typically, they are used for low-level investigation and troubleshooting. Specify the target daemon by its name, for example "osd.1", or by using a path to the daemon’s socket file, for example "/var/run/ceph/ceph-osd.0.asok".

| Command | Purpose | Example |
| --- | --- | --- |
| `ceph daemon <osd.id> dump_ops_in_flight` | Show a list of currently active operations for an OSD. Useful if one or more operations are inactive, stuck or blocked. | `# ceph daemon osd.0 dump_ops_in_flight`<br>`{`<br>`"ops": [`<br>`{`<br>`"description": "osd_op(client.24153.0:45 3.33 3:cd6d298e:::benchmark_data_ceph1_268097_object44:head [set-alloc-hint object_size 4194304 write_size 4194304,write 0~4194304] snapc 0=[] ondisk+write+known_if_redirected e115)",`<br>`"initiated_at": "2017-10-24` |
| `ceph daemon <daemon-name> help` | Print a list of commands a daemon supports | `# ceph daemon osd.0 help`<br>`{`<br>`"calc_objectstore_db_histogram": "Generate key value histogram of kvdb(rocksdb) which used by bluestore",`<br>`"compact": "Commpact object store's omap. WARNING: Compaction probably slows your requests"...` |
| `ceph daemon <daemon-name> mon_status` | Print high level status information for a Monitor | `# ceph daemon mon.ceph1 mon_status`<br>`{`<br>`"name": "ceph1",`<br>`"rank": 0,`<br>`"state": "leader",`<br>`"election_epoch": 6,`<br>`"quorum": [`<br>`0,`<br>`1,`<br>`2`<br>`],` |
| `ceph daemon <osd.id> status` | Print high level status information for an OSD | `# ceph daemon osd.0 status`<br>`{`<br>`"cluster_fsid": "82282e8f-b8ff-4ec2-b564-e06a3e514fb7",`<br>`"osd_fsid": "f05ea8f0-df33-440b-8921-511a93f2ec96",`<br>`"whoami": 0,` |
| `ceph daemon <daemon-name> perf dump` | Print performance statistics. See Performance Counters in the Administration Guide for details. | `# ceph daemon client.radosgw.primary perf dump`<br>`{ "cct": {"total_workers": 16, "unhealthy_workers": 0 },`<br>`"client.radosgw.primary": { "req": 1156723,...` |
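The introduction to this section notes that a daemon can be addressed either by name or by the path to its socket file. The following is a minimal sketch of that mapping, assuming the path layout of the example given there ("/var/run/ceph/ceph-osd.0.asok"), which implies a cluster named "ceph". The function name `asok_path` is an illustrative assumption.

```shell
#!/bin/sh
# asok_path: hypothetical helper (not a Ceph command).
# Prints the admin socket path for a daemon name such as osd.0 or mon.ceph1,
# assuming the /var/run/ceph/ceph-<daemon-name>.asok layout.
asok_path() {
    printf '/var/run/ceph/ceph-%s.asok\n' "$1"
}
```

For example, `ceph daemon "$(asok_path osd.0)" status` would be the socket-path form of `ceph daemon osd.0 status`. A quick `[ -S "$(asok_path osd.0)" ]` test shows whether that daemon has a socket on the current host.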

Authentication and Authorization

For details, see Managing Users in the Administration Guide.

| Command | Purpose | Example |
| --- | --- | --- |
| `ceph auth list` | List users | `# ceph auth list`<br>`installed auth entries:`<br>`osd.0`<br>`key: AQDUIcRZKW5JERAA+DFBSVZLsmd0gjFK6TxS7A==`<br>`caps: [mgr] allow profile osd`<br>`caps: [mon] allow profile osd`<br>`caps: [osd] allow *` |
| `ceph auth get-or-create` | Get user details, or create the user if it does not exist yet and return details. | `# ceph auth get-or-create client.rbd mon 'allow r' osd 'allow rw pool=rbd'`<br>`[client.rbd]`<br>`key = Axxxxxxxxxxx==` |
| `ceph auth del` | Delete a user | `# ceph auth del`<br>`updated` |
| `ceph auth caps` | Add or remove permissions for a user. Permissions are grouped per daemon type (mon, osd, mds). Capabilities can be 'r', 'w', 'x' or '*'. See Authorization (Capabilities) in the Administration Guide for details. | `# ceph auth caps client.bob mon 'allow *' osd 'allow *' mds 'allow *'`<br>`updated caps for client.user1` |
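The `get-or-create` example grants read access to the monitors and read-write access to a single pool. The following is a minimal sketch that prints the same command line for an arbitrary user and pool so it can be reviewed before it is run. The function name `pool_user_cmd` is an illustrative assumption, and the capability strings are copied from the example in the table rather than being a recommendation.

```shell
#!/bin/sh
# pool_user_cmd: hypothetical helper (not a Ceph command).
# Prints, without running it, a get-or-create command for client.<user>
# with monitor read access and read-write access to one pool.
pool_user_cmd() {
    user=$1
    pool=$2
    printf "ceph auth get-or-create client.%s mon 'allow r' osd 'allow rw pool=%s'\n" \
        "$user" "$pool"
}
```

Calling `pool_user_cmd rbd rbd` prints the command shown in the table. Printing instead of executing keeps the step reviewable, which matters because capabilities decide what a client key can touch.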


Object Store Utility

The RADOS Object Store utility commands

| Command | Purpose | Example |
| --- | --- | --- |
| `rados -p <pool> put <object> <file>` | Upload a file into a pool, name the resulting object. | `# rados -p rbd put myfile myfile.txt` |
| `rados -p <pool> ls` | List objects in a pool | `# rados -p rbd ls` |
| `rados -p <pool> get <object> <file>` | Download an object from a pool into a local file. Give '-' as a file name to write to standard output | `# rados -p rbd get myfile - new.txt` |
| `rados -p <pool> rm <object>` | Delete an object from a pool | `# rados -p test rm myfile` |
| `rados -p <pool> listwatchers <object>` | List watchers of an object in a pool. For instance, the head object of a mapped rbd volume has its clients as watchers | `# rados -p rbd listwatchers benchmark_data_ceph1_268097_object865`<br>`watcher=12.10.x.x:0/330978585 client.28223 cookie=1` |
| `rados bench <seconds> <mode> [-b <object-size>] [-t <threads>]` | Run the built-in benchmark for the given time in seconds. Mode can be write, seq, or rand (the latter two are read benchmarks). Before running one of the read benchmarks, run a write benchmark with the --no-cleanup option. The default object size is 4 MB, and the default number of simulated threads (parallel write operations) is 16. See Benchmarking Performance in the Administration Guide for details. | `# rados bench -p rbd 120 write --no-cleanup`<br>`hints = 1`<br>`Maintaining 16 concurrent writes of 4194304 bytes to objects of size 4194304 for up to 120 seconds or 0 objects` |
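The benchmark description implies an order: a write run with `--no-cleanup` first, then the read runs that reuse the written objects. The following is a minimal sketch that prints such a sequence for a given pool and duration. The function name `bench_plan` is an illustrative assumption, and the final `rados -p <pool> cleanup` step is an addition not shown in this cheat sheet, included on the assumption that the benchmark objects should be removed afterwards.

```shell
#!/bin/sh
# bench_plan: hypothetical helper (not a Ceph command).
# Prints, without running them, the benchmark commands in the order the
# text describes: write (keeping objects), sequential read, random read,
# then removal of the leftover benchmark objects.
bench_plan() {
    pool=$1
    secs=$2
    echo "rados bench -p $pool $secs write --no-cleanup"
    echo "rados bench -p $pool $secs seq"
    echo "rados bench -p $pool $secs rand"
    echo "rados -p $pool cleanup"
}
```

Running `bench_plan rbd 120` lists four commands, starting with the write benchmark from the table. Benchmarks add real load, so a test pool is a safer target than a production one.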
