100% found this document useful (3 votes)

254 views118 pagesData Science - Sem6

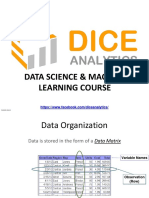

Data science involves extracting meaningful insights from large amounts of raw data through deep study and analysis. It uses powerful hardware, programming, and algorithms to solve data problems. Data science involves asking questions, modeling data using complex algorithms, visualizing data, and understanding data to make better decisions. Exploratory data analysis and visualization are important techniques used in data science to gain initial insights from data through simple statistical analysis and visualization tools like scatter plots, histograms, and box plots. Principal component analysis is a dimensionality reduction technique used to reduce large datasets while retaining most of the information.

Uploaded by

Dinesh K LoharCopyright

© © All Rights Reserved

We take content rights seriously. If you suspect this is your content, claim it here.

Available Formats

Download as PDF, TXT or read online on Scribd

100% found this document useful (3 votes)

254 views118 pagesData Science - Sem6

Data science involves extracting meaningful insights from large amounts of raw data through deep study and analysis. It uses powerful hardware, programming, and algorithms to solve data problems. Data science involves asking questions, modeling data using complex algorithms, visualizing data, and understanding data to make better decisions. Exploratory data analysis and visualization are important techniques used in data science to gain initial insights from data through simple statistical analysis and visualization tools like scatter plots, histograms, and box plots. Principal component analysis is a dimensionality reduction technique used to reduce large datasets while retaining most of the information.

Uploaded by

Dinesh K LoharCopyright

© © All Rights Reserved

We take content rights seriously. If you suspect this is your content, claim it here.

Available Formats

Download as PDF, TXT or read online on Scribd

/ 118