
Table of Specification

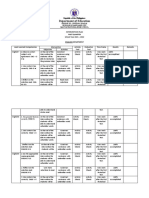

A table of specifications (TOS) is a two-way chart that aligns learning outcomes with course content. It allows teachers to construct tests that adequately sample student knowledge across topics. To make a TOS, teachers first determine the exam's coverage and classify its objectives using Bloom's taxonomy. They then allocate test items to each topic in proportion to the instructional time spent on it. Item analysis validates draft items by examining each item's difficulty and its ability to discriminate between more and less knowledgeable students. Together, the TOS and item analysis help teachers ensure that tests are aligned with instruction and measure learning accurately.
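The two calculations described above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not a prescribed procedure: the topic names, hours, and function names are hypothetical, the largest-remainder rounding is one reasonable way to make the item counts add up to the test length, and the upper and lower scoring groups default to 27% of examinees, a common convention that the summary itself does not specify. Difficulty is taken here as the proportion of examinees answering an item correctly, and discrimination as the difference in that proportion between the upper and lower groups.

```python
from typing import Dict, List


def allocate_items(hours_per_topic: Dict[str, float], total_items: int) -> Dict[str, int]:
    """Split total_items across topics in proportion to time spent on each.

    Rounding uses the largest-remainder method (an assumption of this
    sketch) so that the counts always sum to total_items.
    """
    total_hours = sum(hours_per_topic.values())
    if total_hours <= 0:
        raise ValueError("total instructional time must be positive")

    # Exact (fractional) share of items for each topic.
    exact = {t: total_items * h / total_hours for t, h in hours_per_topic.items()}
    # Start from the whole-number part of each share.
    counts = {t: int(share) for t, share in exact.items()}
    # Hand the leftover items to the topics with the largest fractional parts.
    leftover = total_items - sum(counts.values())
    by_remainder = sorted(exact, key=lambda t: exact[t] - counts[t], reverse=True)
    for topic in by_remainder[:leftover]:
        counts[topic] += 1
    return counts


def item_analysis(responses: List[List[int]], group_fraction: float = 0.27) -> List[dict]:
    """Compute a difficulty and a discrimination index for each item.

    responses[s][i] is 1 if student s answered item i correctly, else 0.
    group_fraction (default 27%, a common convention and an assumption
    here) sets the size of the upper and lower scoring groups.
    """
    n_students = len(responses)
    n_items = len(responses[0])

    # Rank students by total score, highest first.
    ranked = sorted(responses, key=sum, reverse=True)
    k = max(1, round(n_students * group_fraction))
    upper, lower = ranked[:k], ranked[-k:]

    results = []
    for i in range(n_items):
        # Difficulty: proportion of all students who answered correctly.
        difficulty = sum(r[i] for r in responses) / n_students
        # Discrimination: upper-group minus lower-group proportion correct.
        discrimination = (sum(r[i] for r in upper) - sum(r[i] for r in lower)) / k
        results.append(
            {"item": i + 1, "difficulty": difficulty, "discrimination": discrimination}
        )
    return results


if __name__ == "__main__":
    # Hypothetical example: three topics taught for 3, 2, and 1 hours; 20-item test.
    print(allocate_items({"Topic A": 3, "Topic B": 2, "Topic C": 1}, 20))

    # Hypothetical scored answers for four students on two items.
    scores = [[1, 1], [1, 1], [1, 0], [0, 0]]
    for row in item_analysis(scores, group_fraction=0.5):
        print(row)
```

A discrimination value near zero or below zero suggests that an item does not separate stronger from weaker examinees and may need revision, while a difficulty value close to 0 or 1 indicates an item that almost everyone misses or almost everyone answers correctly.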

Uploaded by

Contagious Obsessions Affiliate

Copyright

© All Rights Reserved

