
ML-Unit I - Decision Tree

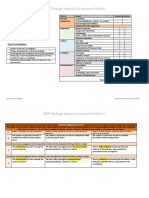

The document discusses decision trees and uses worked examples to show how they are built. It explains that a decision tree breaks a dataset down into progressively smaller subsets using decision nodes and leaf nodes. The examples use attributes such as age and lower berth to determine ticket fare concessions. It also covers information gain, entropy, and purity, which are used to select the best attribute to split on at each step.
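As a rough illustration of how entropy and information gain can pick a split attribute, here is a minimal Python sketch. It is not taken from the document: the function names, the attribute names (`age_group`, `lower_berth`, `concession`), and the four-row dataset are all hypothetical and chosen only to echo the ticket-concession example.

```python
from collections import Counter
from math import log2


def entropy(labels):
    """Shannon entropy, in bits, of a list of class labels."""
    total = len(labels)
    return -sum((n / total) * log2(n / total) for n in Counter(labels).values())


def information_gain(rows, attribute, target):
    """Entropy of the target minus the weighted entropy after splitting on `attribute`."""
    parent = entropy([row[target] for row in rows])
    # Group the target labels by the value of the candidate attribute.
    groups = {}
    for row in rows:
        groups.setdefault(row[attribute], []).append(row[target])
    remainder = sum(len(g) / len(rows) * entropy(g) for g in groups.values())
    return parent - remainder


# Hypothetical toy data, invented for this sketch (not from the document).
rows = [
    {"age_group": "senior", "lower_berth": "yes", "concession": "yes"},
    {"age_group": "senior", "lower_berth": "no", "concession": "yes"},
    {"age_group": "adult", "lower_berth": "yes", "concession": "no"},
    {"age_group": "adult", "lower_berth": "no", "concession": "no"},
]

# Compute the gain of each candidate attribute and keep the largest.
gains = {a: information_gain(rows, a, "concession") for a in ("age_group", "lower_berth")}
best = max(gains, key=gains.get)
```

In this toy data, splitting on `age_group` yields two pure subsets, so it has the higher gain and would be chosen as the first decision node. A subset is pure when all of its rows share one class label, which corresponds to an entropy of zero.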

Uploaded by Pranav Reddy

Copyright © All Rights Reserved

