0 ratings0% found this document useful (0 votes)

304 views19 pagesMLT Unit 3 Notes

Uploaded by

Taarani SreeramCopyright

© © All Rights Reserved

We take content rights seriously. If you suspect this is your content, claim it here.

Available Formats

Download as PDF or read online on Scribd

0 ratings0% found this document useful (0 votes)

304 views19 pagesMLT Unit 3 Notes

Uploaded by

Taarani SreeramCopyright

© © All Rights Reserved

We take content rights seriously. If you suspect this is your content, claim it here.

Available Formats

Download as PDF or read online on Scribd

You are on page 1/ 19

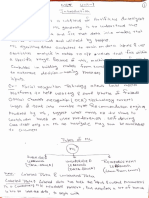

UNIT 01 DISTANCE-BASED MODELS. 742

NEAREST NEIGHBOR MODELS

Nearest neighbour methods are a good starting point since they readily encode basic smoothness

intuitions and are easy to program, forming a useful baseline method,

Ina classification problem each input veetor x has a corresponding class label, c* € {1,...,C}. Given

a dataset of N such training examples, D = {xn , en} ,» = 1,...,.N, and a novel x, we aim to return

the correct class e(x). A simple, but often effective, strategy for this supervised learning problem can

be stated as: for novel x, find the nearest input in the training set and use the class of this nearest input

For veetors x and x’ representing two different datapoints, we measure ‘nearness’ using a dissimilarity

finetion d(x, x’)

A common dissimilarity is the squared Euclidean distance d(x, x’) = (x —¥’)F(x —¥’)

which can be more conveniently written (x — x)’. Based on the squared Euclidean distance, the

decision boundary is determined by the lines which are the perpendicular bisectors of the closest

training points with different training labels given in the figure below. This is called a Voronoi

tessellation.

In nearest neighbour classification a new vector is assigned the label of the nearest vector in the

training set, Here there are three classes, with training points given by the circles, along with their

class. The dots indicate the class of the nearest training vector. The decision boundary is piecewise

linear with each segment corresponding to the perpendicular bisector between two datapoints

belonging to different classes, giving rise to a Voronoi tessellation of the input space

The nearest neighbour algorithm is simple and intuitive. There are some issues:

‘4 How should we measure the distance between points? Whilst the Euclidean square distance

is popular, this may not always be appropriate. A fundamental limitation of the Euclidean

distance is that it does not take into account how the data is distributed.

A The whole dataset needs to be stored to make a classification. This can be addressed by a

method called data editing in which datapoints which have little or no effect on the decision

boundary are removed from the training dataset,

The Nearest neighbour algorithm to classify a new vector x, given a set of training data

D = {(xn,en), n= 1,...,.N}

41: Calculate the dissimilarity of the test point x to each of the stored points, dn = d(x, xn).n=1,

oN.

2: Find the training point x" which is nearest to x n+ = argmin d (x, x*)

3: Assign the class label e(x) = 0.

4: In the case that there are two or more ‘equidistant’ neighbours with different class labels, the most

numerous class is chosen. If there is no one single most numerous class, we use the K-nearest-

neighbours

Machine Learning Techniques Page [42

i ee oe oe

‘The figures illusatrate the decision regions of a S-nearest neighbour classifier; the shading represents

the predicted probability distribution over the five classes. (middle) S-nearest neighbour. (right) 7=

nearest neighbour

K-MEANS

‘The K-means problem is NP-complete, which means that there is no efficient solution to find the

global minimum, So we need to initiate the heuristic algorithm

An algorithm that tries to minimize the distance of the points in a chister with their centroid is the k=

means clustering algorithm

K-means is a centroid-based algorithm, or a distance-based algorithm, where we calculate the

distances to assign a point to a cluster. In K-Means, each cluster is associated with a centroid.

‘The main objective of the K-Means algorithm is to minimize the sum of distances between the points

and their respective cluster centroid.

The outline of the algorithm is given below. The algorithm iterates between partitioning the data

using the nearest-centroid decision rule, and recalculating centroids from a partition

Algorithm 8.1: KMeans(D.K) ~ Kemeans clustering using Euclidean distance Dis2.

Input : data D C Rd ; number of dusters K EN.

Output: K cluster means jt, pK € Rd

1 randomly initialise K vectors qt1,... AK € Rd;

2 repeat

3 assign each x ED to argminj Dis2(s.j )

4 forj=1t0Kdo

5 Dj {x €D|x assigned to cluster} }:

6 Wj = 1/|Dj | Eaens X:

7 end

8 until no change in 1, .. WK:

S return Ll, RK:

The figure given below gives the demonstration of the algorithmon a small data set with three clusters

First iteration of 3-means on Gaussian mixture data. The dotted lines are the Voronoi boundaries

resulting from randomly initialised centroids: the violet solid lines are the result of the recalculated

means. (middle) Second iteration, taking the previous partition as starting point (dotted line). (right)

Third iteration with stable clustering,

Machine Learning Techniques Page [43

‘The next figure shows how an unfortunate initialisation of the centroids can lead to a sub-optimal

solution. In practice itis advisable to run the algorithm a number of times and select the solution with

the smallest within-cluster scatter:

In the figure given above (left) First iteration of 3-means on the same data as previous figure with

differently initialised centroids. (right) S-means has converged to a sub-optimal clustering.

CLUSTERING AROUND MEDOIDS

K-means algorithm is used for different distance metric but this will also change the objective function

being minimised. So K-medoids algorithm, which additionally requires the exemplars to be data

joints. The K medoids algorithm is given below

Algorithm 8.2: KMedoids(D.K.Dis) — K-medoids clustering using arbitrary distance metric Dis.

Input : data D CX; number of elusters Kt EN,

distancemetrie Dis ‘X xX =R.

Output : K medoids {1, .. . AK ED, representing a predictive clustering off.

1 randomly pick K data points 4, ... HK €D;

2 repeat

3 assign each x ED to argminj Diss. }:

+ forj=1tokdo

5 Dj {x €D|x assigned to chusterj };

6 {i= wml cm E som Diss

8 until no change in 411,

9 return ft, pI:

nk

For calculating the medoid of a cluster requires examining all pairs of points, whereas calculating the

mean requires just a single pass through the points, which can be prohibitive for large data sets.

an alternative algorithm called partitioning around medoids (PAM) that tries to improve a clustering

locally by swapping medoids with other data points. The quality of a clustering Q is calculated as the

total distance over all points to their nearest medoid

Machine Learning Techniques Page 144

Algorithm 8.5: PAM(D.K.Dis) — Partitioning around medoids clustering using arbitrary distance

metric Dis,

Input : data D CX; number of clusters K €N;

distancemetrie Dis :X xX —R.

‘Output: K medoids 11, ... JK €D, representing a predictive clustering ofX.

1 randomly pick K data points jt... IK ED;

2 repeat

3 assign each x ED to argminj Dis(x.pj)

+ for j=1tokdo

5 Dj “fx ED |x assigned to duster} };

6 end

7 Qj ExeDj Diss.)

8 for each medoidmand each non-medeid o do

9 caleulate the improvement in Q resulting from swappingmwith o;

10 end

n select the pair with maximum improvement and swap:

12 until no further improvement possible;

15 return jut, gs

The two data sets in Figure shows that they are identical, except for a rescaling of the y-axis. K-means

finds entirely different clusterings. The figure in (left) On this data 2-means detects the right clusters.

(right) After rescaling the y-axis, this configuration has a higher between-cluster scatter than the

intended one,

SILHOUTTES

An interesting technique is the use of silhouettes. For any data point xi , let d{xi .Dj ) denote the

average distance of xi to the data points in cluster Dj , and let j (i) denote the index of the cluster that

xi belongs to. Furthermore, let a(xi ) = d(xi .Dj (1) be the average distance of xi to the points in its

own cluster Dj (i), and let b(xi ) = min k #j (7) €(xi Dk) be the average distance to the points in its,

neighbouring cluster. We would expect a(xi ) to be considerably smaller than b(xi }, but this cannot

be guaranteed

Its, however, conceivable that a(xi ) > b(xi ), in which case the difference b(xi }-a(xi ) is negative

This describes the situation that, on average, the members of the neighbouring cluster are closer to

xi than the members of its own cluster. In order to get a normalised value we divide by a(xi ) in this

case. This leads to the following definition:

dix) atx)

$00 = Faxtat), BORD)

‘A silhouette then sorts and plots s(x) for each instance, grouped by cluster. Examples are shown in

Figure

Machine Learning Techniques Page [45

we have used squared Euclidean distance in the construction of the silhouette, but the method can be

applied to other distance metrics. We can clearly see that the first clustering is much better than the

second, In addition to the graphical representation, we can compute average silhouette values per

cluster and over the whole data set.

HIERARCHICAL CLUSTERING

‘A method that uses tree structures to represent a cluster is called as hierarchical clustering. those

trees use features to navigate the instance space, similar to decision trees. consider trees called

dendrograms, which are purely defined in terms of a distance measure. Because dendrograms ase

features only indirectly, as the basis on which the distance measure is ealeulated, they partition the

given data rather than the entire instance space, and hence represent a descriptive clustering rather

than a predictive one.

Given a data set D, a dendrogram is a binary tree with the elements of D at its leaves. An internal

node of the tree represents the subset of elements in the leaves of the subtree rooted at that node. The

level of anode is the distance between the two clusters represented by the children of the node. Leaves

have level 0.

A linkage function L: 2X x2X — R calculates the distance between arbitrary subsets of the instance

space, given a distance metric Dis :X xX —R.

The most common linkage functions are as follows:

Single linkage defines the distance between two clusters as the smallest pairwise distance

between elements from each cluster.

4 Complete linkage defines the distance between two clusters as the largest pointwise distance

4b Average linkage defines the cluster distance as the average pointwise distance

4 Centroid linkage defines the cluster distance as the point distance between the cluster means.

‘These linkage functions can be defined mathematically as follows:

Leage(A.B) = xeagtSDIs(%y)

eamplae(A,B)= max ,Distx,y)

ExcaycuDis(xy)

Faveaee( A B= TAB]

Lrcax Lyesy

Leontona( A,B) = Dis (24%, E12)

Lentrost(A. B) a )

all these linkage functions coincide for singleton clusters: L({x}. {y}) = Dis(x, y)-

However, for larger clusters they start to diverge. For example, suppose Dis(x. y) <

Dis(x, 2), then the linkage between {x} and {y, 2} is different in all four cases:

Lsingle( {x}. {y.2}) = Diss. y)

Leomplete( {x}. {y. z}) = Dis(x, 2)

Laverage( {x}, {y, 2}) =Dis(x, y)+Dis(x, 2)/2

Leentroid({x}, {y, 2}) = Dis(x, (y +2)/2)

Machine Learning Techniques Page 146

‘The general algorithm to build a dendrogram is given in Algorithm,

‘The tree is built from the data points upwards and is hence a bottom-up or agglomerative algorithm.

At each iteration the algorithm constructs a new partition of the data by merging the two nearest

clusters together. In general, the HAC algorithm gives different results when different linkage

fimetions are used. Single linkage is the easiest case to understand, as it effectively builds a graph by

adding increasingly longer links between points, one at a time, such that ultimately there is a path

between any pair of points (hence the term linkage’)

Algorithm 8. HAC(D,L) — Hierarchical agglomerative clusterin,

Input : data D CX; linkage fimetion L : 2X x2X FR defined in terms of

distancemetrie.

Output: a dendrogram representing a descriptive clustering of D.

1 initialise clusters to singleton data points;

2 create a leaf at level 0 for every singleton cluster:

3 repeat

4 find the pair of clusters X.Y with lowest linkage 1 and merge:

5 create aparent of X,Y at level 1;

6 until all data points are in one cluster;

7 return the constructed binary tree with linkage levels:

Hierarchical clustering methods have the distinct advantage that the number of clusters does not need.

to be fixed in advance. However, this advantage comes at considerable computational cost.

Furthermore, we now need to choose not just the distance measure used, but also the linkage fimetion.

‘We consider a regular grid of § points in two rows of four in the figure. We assume that ties are

broken by small irregularities. Each linkage function merges the same clusters in the same order, but

the linkages are quite different in each case. Complete linkage gives the impression that D is far

removed from the rest, whereas by moving D very slightly to the right it would have been added to

E before CWith centroid linkage we see that E has in fact the same linkage as A and B, which means

that A and B are not really discernible as separate clusters, even though they are found first. Single

linkage scems preferable in this case, as it most clearly demonstrates that there is no meaningful

cluster structure in this set of points

Single and complete linkage both define the distance between clusters in terms of a particular pair of

points. Consequently, they cannot take the shape of the cluster into account, which is why average

and centroid linkage can offer an advantage

K-D TREES

K Dimensional tree (or kd tree) is a tree data structure that is used to represent points in a ke

dimensional space. It is used for various applications like nearest point (in k-dimensional space),

efficient storage of spatial data, range search etc.

Consider the insertion of points: (5. 25), (15, 58). (90, 40). (35, 20), (50, 50) in order. It can be illustrated.

Machine Learning Techniques Page 147

2S 2 (6.25) eee

me x

05,55), eee

a x abB,

8, 65)

oo 98.20) ¥.

_ 25)

one x oes,

a [es =]

ip est (25, 20) ¥

(@0. 40)

25)

(15,25) x secre,

(0, 40)

x

¥

25)

aay x] eM,

(0, 409

x

Ss,

@0, 50) «os. 20)

v ¥

Machine Learning Techniques

Page [48

It is important to note that building the tree from a given set of points gives a balanced tree while

there is no such gurantee on consecutive inserions. Using median stratergy, the tree would look like

Median in ett

sub-space of parent

Median vert

\ (20, 50) ae

oe Xx

aia wit Median in ight

(0.40) _ sub-space of parent

is (8,25) (30, 40)

62) . Y Y

95

(35, 20)

x

Although it might look like a quadtree and octree's generalized version, its implementation quite

different. Any internal node in this structure divides the space into 2 halfs. The left child of the node

represents the left half while right child represents right half. The space is divided into 2 halves

irrespective of the number of dimensions. To be more accurate, every internal node represents a

hyperplane that ents the space in 2 parts. For 2-dimensional space, that isa line and for 3 dimensional

space, that is a plane.

node in the tree represents a point in the space, General procedure to construct a k-< tree is to

ively divide the space in 2 parts along the axis that has widest spread. Every node in the tree

indicates along which dimension the space was divided by the node.

Algorithm

‘The algorithms below consider the space to be 2 dimensional but ean be applied to any space

Search(x, y}: This function checks if the point exists in space. Start with root node as current node.

If the current node represents the point (x, y), return truc.

If current node is not a leaf node, goto step 3, otherwise return false.

Let current node be the point (X, Y). If the node divides space along x-axis, compare x with X. If x <

X, set current node as left child, otherwise set current node as right child. Ifthe node divided the space

along y-axis, compare y and Y.

Goto step 1

Insert(x, y): Every insert operation divides the space. The algorithm here considers space to be 2-

dimensional but is applicable in all dimensions:

Search the tree for (x, y) until a leaf node is reached

If the tree is empty, add a new node as root representing the point (x, y). Here, the space can be divided

along any axis. Indicate the axis along which the space is divided and end insertion.

Insert a new node where the point (x, y) should have existed and have it store (x, y). If the parent

divided the space along x-axis, have the point divide the space along y-axis, otherwise have it divide

space along x-axis.

Ineate the tree is to be built from a given set of points, the strategy to fllow isto find the median

point with respect to space to be divided, Insert that point using above method and repeat to find

children nodes,

LOCALITY SENSITIVE HASHING

‘The task of finding nearest neighbours is very common. You can think of applications like finding

duplicate or similar documents, audio/video ‘search. Although using brute force to cheek for all

possible combinations will give you the exact nearest neighbour but it’s not scalable at all

Approximate algorithms to accomplish this task has been an area of active research. Although these

Machine Learning Techniques Page [49

algorithms don’t guarantee to give you the exact answer, more often than not they'll be provide a

good approximation. These algorithms are faster and scalable.

A Locality sensitive hashing (LSH) is one such algorithm. LSH has many applications, including:

ob Near-duplicate detection: LSH is commonly used to deduplicate large quantities of documents,

webpages, and other files

4 Genome-wide association study: Biologists often use LSH to identify similar gene expressions

in genome databases.

Large-scale image search: Google used LSH along with PageRank to build their image search

technology VisualRank.

4 Audio/video fingerprinting: In multimedia technologies, LSH is widely used as a

fingerprinting technique A/V data.

general hashing locality-sensitive hashing

ot ae

ere Tt) Le [ete

LSH refers to a family of functions (Imown as LSH families) to hash data points into buckets so that

data points near each other are located in the same buckets with high probability, while data points

far from each other are likely to be in different buckets. This makes it easier to identify observations

with various degrees of similarity.

Finding similar documents

Let's try to understand how we can leverage LSH in solving an actual problem. The problem that

we're trying to solve:

Goal: You have been given a large collections of documents. You want to find “near duplicate” pairs.

In the context of this problem, we can break down the LSH algorithm into 3 broad steps:

+ Shingling

Min hashing

& Locality-sensitive hashing

Machine Learning Techniques Page 150

Candidate

pairs:

Docu- those pairs

ment of signatures

that we need

totest for

Theset ‘Signatures: rita.

of strings short integer

ofllength k vectors that

‘that appear represent the

inthe doc- sets, and

ument reflect their

similarity

Shingling

In this step, we convert each document into a set of characters of length k (also known as k-shingles

or k-grams). The Key idea is to represent each document in our collection as a set of keshingles.

For ex: One of your document (D): “Nadal”. Now if we're interested in @-shingles, then our set: (Na,

ad, da, al}. Similarly set of 3-shingles: {Nad, ada, dal}

Similar documents are more likely to share more shingles

Reordering paragraphs in a document of changing words doesn't have much affect on shingles

kk value of 10 is generally used in practice. A small value will result in many shingles which are

present in most of the documents (bad for differentiating documents)

Documents

1 a 1 oO

: 7 oO 1

oO a © |4

3

Plo Jo jo ja

on

1 Oo |O 1

- = 1 oO

a jo |a jo

Jaccard Index

Its needed to have a metric to measure similarity between documents, Jaccard Index is a good choice

for this. Jaccard Index between document A & B can be defined as:

lAnBl

|AUBI

It’s also known as intersection over union (IOU),

J(A, B) =

Suppose A: “Nadal” and B: “Nadia”, then 2-shingles representation will be

A: {Na ad, da, al} and B: {Na, ad, di, ia}

Machine Learning Techniques Page [51

r CN

“eater Nat tad” “ol, a?

\ J

Jaccard Index = 2/6

More number of common shingles will result in bigger Jaccard Index and hence more likely that the

documents are similar

The two issues in the procedure are,

Time complexity

Now you may be thinking that we can stop here. But if you think about the scalability, doing just this

won't work, For a collection of n documents, you need to do n4(n-1)/2 comparison, basically O(n").

Imagine you have 1 million documents, then the number of comparison will be 510"

Space complexity

The document matrix is a sparse matrix and stor

way to solve this is hashing.

Hashing

‘The idea of hashing is to convert each document to a small signature using a hashing function H.

Suppose a document in our corpus is denoted by d. Then:

ig it as itis will be a big memory overhead. One

H(d) is the signature and it's small enough to fit in memory

If similarity(d1,d2) is high then Probability(H(d1}==H(da)) is high

If similarity(d1,d2) is low then Probability(H(d 2) is low

Choice of hashing fimetion is tightly linked to the similarity metric we're using. For Jaccard similarity.

the appropriate hashing funetion is min-hashing,

Min hashing

Permutation Input matrix (shingles x Documents)

1 jo ja oo

1 lo jo a

o fa jo a

o fa jo a

o fa jo a

a jo ja oo

1 jo ja o

Step 1: Random permutation (n) of row index of document shingle matrix,

Step 2 Hash function is the index of the first (in the permuted order) row in which column C has value

1, Do this several time (use different permutations) to create signature of a column,

Machine Learning Techniques Page [52

h,(C) = minx(C)

wT

a [2 [03 [CH

Permutation Input matin Singles x Documents)

2 Jo’ [2)\o

Signature marin

° oa

ohhh |= 7

° °

2 1 lo

2 a jo

ca Je2 G3 Jeu ca ]C2]c3 |eq

Saintes ptm EDD

° [2 jo

o fo a

a [oa

a lo a

a fo a

a fo ja 0

ja fo ja lo

Min-hash property

‘The similarity of the signatures is the fraction of the minchash functions (rows) in which they agree

So the similarity of signature for C1 and C3 is 2/5 as 1st and 5rd row are same.

Ca] C2103 Ca

‘Signatre mate

opepeyeye

‘Signature matric M

In the below example you can see this to some extent. There is difference as we have signatures of

length 3 only. But if increase the length the 2 similarities will be closer

Machine Learning Techniques Page [53

Input Matrix: ‘Signature Matrix

a2

TEI bE BE]

TE Oe

eae acnoaene

Locality-sensitive hashing

Goal: Find documents with Jaceard similarity of at least t

‘The general idea of LSH is to find a algorithm such that if we input signatures of @ documents, it tells

us that those 2 documents form a candidate pair or not ie. their similarity is greater than a threshold

t. Remember that we are taking similarity of signatures as a proxy for Inccard similarity between the

original documents.

Specifically for min-hash signature matrix:

Hash columns of signature matrix M using several hash functions

If documents hash into same bucket for at least one of the hash fnetion we ean take the 2 documents

as a candidate pai

Now the question is how to create different hash functions. For this we do band partition.

Band partition

| =

bands |

| ~ one

| mgs

Here is the algorithm:

Divide the signature matrix into b bands, each band having r rows

For each band, hash its portion of each column to a hash table with k buckets

Candidate column pairs are those that hash to the same bucket for at least 1 band

‘Tune b and r to catch most similar pairs but few non similar pairs

There are few considerations here. Ideally for each band we want to take k to be equal to all possible

combinations of values that a column can take within a band. This will be equivalent to identity

matching. But in this way Ic will be a huge number which is computationally infeasible, For ex: If for

a band we have 5 rows in it. Now if the elements in the signature are 52 bit integers then k in this case

will be (2°)? ~ 1.4615016e+28. You can see what's the problem here. Normally k is taken around 1

million.

The idea is that if @ documents are similar then they will will appear as candidate pair in at least one

of the bands.

Machine Learning Techniques Page [54

Choice of b & r

_ Columns 2nd 6

are probably identical

(candidate pair)

Columns 6 and 7 are

surely diferent

rrows bands

If we take b large ie more number of hash functions, then we reduce ras b¥r is a constant (number of

rows in signature matrix). Intuitively it means that we're increasing the probability of finding a

candidate pair. This case is equivalent to taking’a small t (similarity threshold)

Let's say your signature matrix has 100 rows. Consider 2 cases:

bi=10+r=10

be=20>r

In gnd case, there is higher chance for 2 documents to appear in same bucket at least once as they have

more opportunities (20 vs 10) and fewer elements of the signature are getting compared (5 vs 10)

Higher b implies lower similarity threshold (higher false positives) and lower b implies higher

similarity threshold (higher false negatives)

EX:

Setup:

100k documents stored as signature of length 100

Signature matrix: 100100000

Brute force comparison of signatures will result in 100C2 comparisons = 5 billion (quite a lot!)

Let's take b = 20 +r

similarity threshold (t) : 80%

‘We want 2 documents (D1 & Da) with 50% similarity to be hashed in the same bucket for at least one

of the 20 bands.

P(D1 & De identical in a particular band) = (0.8)° = 0.528

P(D1 & D2 are not similar in all 20 bands) = (1-0.528)"20 = 0.00035

‘This means in this scenario we have ~.035% chance of a false negative @ 80% similar documents.

Also we want 2 documents (D3 & D4) with 30% similarity to be not hashed in the same bucket for

any of the 20 bands (threshold = 80%).

P(D3 & D+ identical in a particular band) = (0.3)° = 0.00243.

P(Ds & Dé are similar in at least one of the 20 bands)

— (1-0.00248)'20 = 0037+

Machine Learning Techniques Page [55

This means in this scenario we have ~#.74%% chance of a false positive @ 30% similar documents.

So we can see that we have some false positives and few false negatives. These proportion will vary

with choice of b and r.

What we want here is something like below. If we have 2 documents which have similarity greater

than the threshold then probability of them sharing the same bucket in at least one of the bands should

be 1 else.

;

{

/

Prati

rrobsatty | Noctane

of sharing ifset

abucket \

\

‘

Similarity s of two sets ~

nai amet,

‘boc

NON-PARAMETRIC REGRESSION

Nonparametric regression, like linear regression, estimates mean outcomes for a given set of

covariates. Unlike linear regression, nonparametric regression is agnostic about the functional form

between the outcome and the covariates and is therefore not subject to misspecification error.

In nonparametie regression, you do not specify the fimetional form. You specify

variable—the ontcome—and the covariates. You specify yx1x2, and x3 to fit

the dependent

(x1xe.xs)+e

‘The method does not assume that g() is linear; it could just as well be

11+ B2n22+ Bsx51x2+Btes +E

“The method does not even assume the function is linear in the parameters. It could just as well be

1 xB21+cos(x253)+e

‘Or it could be anything else,

The result is not returned to you in algebraic form, but predicted values and derivatives can be

calculated. To fit whatever the model is, you type

- Mpregress kernel y x1 x2 x3

Machine Learning Techniques Page 156

npregress needs more observations than linear regression to produce consistent estimates, of course,

but perhaps not as many extra observations as you would expect. A model like this one could easily

be fit on 500 observations.

‘The goal ofa regression analysis is to produce a reasonable analysis to the unknown response function

£, where for N data points (Xi,¥i), the relationship can be modeled as,

Yiem(xi)+ei,i=1,.

=-Note: my.) = Efy|x] iF Efe |x}=0 ie, ele

We have different ways to model the conditional expectation function (CEF), m())

Parametric approach= m(.) is known and smooth. It is fully described by a finite set of parameters, to

be estimated. Easy interpretation. For example, a linear model

y= x,'B+e,, i=heN

+ Nonparametric approach- mi.) is smooth, flexible, but unknown. Let the data determine the shape of

m(). Difficult interpretation

y, = m(x,)+E,, N

= Semiparametric approach m(,) have some parameters -to be estimated-, but some parts are

determined by the data.

B+ m.(2))+6,,

“N

ENSEMBLE LEARNING

Ensemble Learning Techniques in Machine Learning, Machine learning models suffer bias and/or

variance. Bias is the difference between the predicted value and actual value by the model. Bias is

introduced when the model doesn’t consider the variation of data and creates a simple model. The

simple model doesn't follow the patterns of data, and hence the model gives errors in predicting

training as well as testing data ie. the model with high bias and high variance, You Can also read

Covariance and Correlation In Machine Learning,

‘When the model follows even random quirks of data, as pattern of data, then the model might do very

well on training dataset ie. it gives low bias, but it fails om test data and gives high variance

Therefore, to improve the accuracy (estimate) of the model, ensemble learning methods are developed.

Ensemble is a machine learning concept, in which several models are trained using machine learning

algorithms. It combines low performing classifiers (also called as weak learners or base learner) and.

combine individual model prediction for the final prediction,

On the basis of type of base learners, ensemble methods can be categorized as homogeneous and

heterogeneous ensemble methods. If base learners are same, then it is a homogeneous ensemble

method. [fbase learners are different then it is a heterogencous ensemble method.

arta}

Method

Pte cult Pete caer

Different Base Learners

Ensemble techniques are classified into three types:

4b Bagging

4 Boosting

+ Stacking

Machine Learning Techniques Page [57

BAGGING AND RANDOM FORESTS

Bagging, short for ‘bootstrap aggregating’, is a simple but highly effective ensemble method that

creates diverse models on different random samples of the original data set. These samples are taken

uniformly with replacement and are known as bootstrap samples,

Because samples are taken with replacement the bootstrap sample will in general contain duplicates,

and hence some of the original data points will be missing even if the bootstrap sample is of the same

size as the original data set. This is exactly what we want, as differences between the bootstrap

samples will create diversity among the models in the ensemble.

In the figure given above An ensemble of five basic linear classifiers built from bootstrap samples with

Bagging is given. The decision rule is majority vote, leading to a piecewise linear decision boundary.

(right) If we turn the votes into probabilities, we see the ensemble is effectively a grouping mode:

each instance space segment obtains a slightly different probability.

A bagging algorithm, which returns the ensemble as a set of models is given. We can choose to

combine the predictions from the different models by voting — the class predicted by the majority of

models wins — or by averaging, which is more appropriate if the base classifiers output scores or

probabilities.

samples

Input _: data set D; ensemble size T; learning algorithm of

Output: ensemble of models whose predictions are to be combined by voting

oraveraging

1 for t= 1t0T do

build a bootstrap sample D; from D by sampling [D| data points with

replacement

1 | runef on D; to produce a model Mi:

tend

+ return (Mill. s¢'<7)

Bagging is particularly usefil in combination with tree models, which are quite sensitive to variations

in the training data, When applied to tree models, bagging is often combined with another idea: to

build each tree from a different random subset of the features, a process also refered to as subspace

sampling:

‘This encourages the diversity in the ensemble even more, and has the atonal advantage thatthe

training time of each tree is reduced. The resulting ensemble method is called random forests, and the

algorithm is given below

Machine Learning Techniques Page [58

‘Algorithm RandomForestD. Ta)

bootstrap samples and random subspace:

Input data set D; ensemble size T; subspace dimension d.

Output : ensemble of tree models whose predictions are to be combined by

voting or averaging.

1 for r= 1t0 Tdo

2 | build bootstrap sample D, from D by sampling |D} data points with

‘replacement,

2 | select d features at random and reduce dimensionali

of D, accordingly;

«| train tee model M, on D, without pruning;

send

46 return (M,|1. <7)

Since a decision tree is a grouping model whose leaves partition the instance space, so is a random

forest: its corresponding instance space partition is essentially the intersection of the partitions of the

individual trees in the ensemble, While the random forest partition is therefore finer than most tree

partitions, it can in principle be mapped back to a single tree model

BOOSTING

Boosting is an ensemble technique that is superficially similar to bagging, but uses a more

sophisticated technique than bootstrap sampling to create diverse training sets

Suppose we train a linear classifier on a data set and find that its training error rate is €

‘We want to add another classifier to the ensemble that does better on the misclassifications of the first

classifier. One way to do that is to duplicate the misclassified instances: if our base model is the basic

linear classifier, this will shift the class means towards the duplicated instances. A better way to

achieve the same thing is to give the misclassified instances a higher weight, and to modify the

classifier to take these weights into account

‘The idea is that half of the total weight is assigned to the misclassified examples, and the other half to

the rest. Since we started with uniform weights that sum to 1, the current weight assigned to the

misclassified examples is exactly the error rate €, so we multiply their weights by 1/2€. Assuming €

< 0.5 this is an increase in weight as desired. The weights of the correctly classified examples get

multiplied by 1/2(1-€), so the adjusted weights again sum to

Example 11.1 (Weight updates in boosting). Suppose a linear classifier

achieves performance as in the contingency table on the left. The error

rate is ¢ = (9+ 16)/100 = 0.25. The weight update for the misclassified examples

is afactor 1/2e = 2 and for the correctly classified examples 1/2(1 ~e) = 2/3.

Predicted ® Predicted © e 8

Actuale 24 16 40 2 6 32 48

Actual = 9 51 0 1 mt 52

33 67 100 34 66 100

‘Taking these updated weights into account leads to the contingency table

‘on the right, which has a (weighted) error rate of 05.

‘The basic boosting algorithm is given in Algorithm, illustrates how a boosted ensemble of five basic

linear classifiers can achieve zero training error. It is clear that the resulting decision boundary is

much more complex than could be achieved by a single basic linear classifier. In contrast, a bagged

ensemble of basic linear classifiers has learned five very similar decision boundaries, the reason being

that on this data set the bootstrap samples are all very similar

Machine Learning Techniques Page [59

‘Algorithm Soosing(D, Tya/) ~ wain an en

reweighted training sets

mble of binary Classifiers from

Input data set D; ensemble size T; learning algorithm of

Output : weighted ensemble of models.

1 wy 1D I forall x;€ D; 1/ start with uniform weights

2 for t= 1toTdo

2 | rund on D with weights wy to produce amodel M;

4 | calculate weighted error;

5 | ite; 2 1/2then

a | | set t—1end break

+ | ena

| atin; 11 confidence for this model

9 | wens SH for misclassified instances x; € D 11 increase weight

10 | wn) —pty for correctly classified instances x, €D; 1/ decrease weight

n end

12 return M(x) = EE a, M(x)

Figure gives An ensemble of five boosted basic linear classifiers with majority vote. The linear

classifiers were learned from blue to red; none of them achieves zero training error, but the ensemble

does. (right) Applying bagging results in a much more homogeneous ensemble, indicating that there

is little diversity in the bootstrap samples

META LEARNING

Meta-learning first involves training a variety of models on a large collection of data sets. The aim is

then to construct a model that can help us answer questions such as the following:

‘4 Inwhich situations is a decision tree likely to outperform a support vector machine?

4 When can a linear classifier be expected to perform poorly?

4 Can the data be used to give suggestions for setting particular parameters?

‘The key question in meta-learning is how to design the features on which the meta model is built.

‘These features should combine data set characteristics and relevant aspects of the trained model. Data

set characteristics should go much further than simply listing the number and kind of features and the

number of instances, as it is unlikely that anything can be predicted about a model's performance from

just that

information

Ex: we can try to assess the noise level of a data set by measuring the size of a trained decision tree

before and after pruning. Training simple models such as decision stumps on a data set and measuring

their performance also gives useftl information.

In Background we referred to the no free lunch theorem, which states that no learning algorithm can

outperform any other learning algorithm over the set of all possible learning problems. As a coroll

we have that meta-learning over all possible learning problems is futile: ifit wasn’t, we could build a

single hybrid model that uses a meta-model to tell us which base model would achieve better than

random performance on a particular data set.

It follows that we can only hope to achieve useful! meta-learning over non-

earning problems,

wniform distributions of

Machine Learning Techniques Page 160

You might also like

- JNTUA Discrete Mathematics & Graph Theory Notes R20No ratings yetJNTUA Discrete Mathematics & Graph Theory Notes R20235 pages

- Iv Semester: Data Mining Question Bank: Unit 2 2 Mark Questions)No ratings yetIv Semester: Data Mining Question Bank: Unit 2 2 Mark Questions)5 pages

- Data Warehousing and Data Mining SyllabusNo ratings yetData Warehousing and Data Mining Syllabus2 pages

- AD8402 - Artificial Intelligence (Unit III)No ratings yetAD8402 - Artificial Intelligence (Unit III)24 pages

- Unit II: Software Requirement Analysis and SpecificationsNo ratings yetUnit II: Software Requirement Analysis and Specifications64 pages

- Unit 5 - Data Mining - WWW - Rgpvnotes.inNo ratings yetUnit 5 - Data Mining - WWW - Rgpvnotes.in15 pages

- Data Mining Primitives, Languages and System ArchitectureNo ratings yetData Mining Primitives, Languages and System Architecture26 pages

- Topic: Classification of Data StructureNo ratings yetTopic: Classification of Data Structure24 pages

- DATABASE MANAGEMENT SYSTEMS Unit Wise Important Questions: PART - A (Short Answer Questions)No ratings yetDATABASE MANAGEMENT SYSTEMS Unit Wise Important Questions: PART - A (Short Answer Questions)6 pages

- Cs8582-Object Oriented Analysisand Design Laboratory-46023968-Cs8582 - Ooad LabNo ratings yetCs8582-Object Oriented Analysisand Design Laboratory-46023968-Cs8582 - Ooad Lab132 pages

- AKTU Circular Regarding Free Internship Program by Softpro India Computer Technologies Pvt. Limited UnderMoU SignedNo ratings yetAKTU Circular Regarding Free Internship Program by Softpro India Computer Technologies Pvt. Limited UnderMoU Signed1 page

- Constraints in DBMS - Types of Constraints in DBMS - Gate VidyalayNo ratings yetConstraints in DBMS - Types of Constraints in DBMS - Gate Vidyalay5 pages

- Unit - I: Write About Principles of Modeling?No ratings yetUnit - I: Write About Principles of Modeling?9 pages