0 ratings0% found this document useful (0 votes)

79 views127 pagesData Analytics

Uploaded by

aditikaushal06Copyright

© © All Rights Reserved

We take content rights seriously. If you suspect this is your content, claim it here.

Available Formats

Download as PDF or read online on Scribd

0 ratings0% found this document useful (0 votes)

79 views127 pagesData Analytics

Uploaded by

aditikaushal06Copyright

© © All Rights Reserved

We take content rights seriously. If you suspect this is your content, claim it here.

Available Formats

Download as PDF or read online on Scribd

You are on page 1/ 127

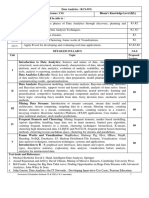

42d (CS-5/AT-6) Introduction to Data Analytics

aw Orr re eee vw’

wet] What is data analytics ?

4. Data analytics is the science of analyzing raw data in order to make

2 Any ype of information can be subjected to data analytics techniques to

get insight that can be used to improve things.

4. Data analytics techniques can help in finding the trends and metries

that would be used to optimize processes to increase the overall efficiency

ofa business or system.”

4, Many of the techniques and processes of data analytics have been

automated into mechanical processes and algorithms that work over

raw data for human consumption.

5. For example, manufacturing companies often record the runtime,

downtime, and work queue for various machines and thenvanalyze the

data to better plan the workloads so the machines operate closer to peak

capacity.

Explain the source of data (or Big Data).

Three primary sources of Big Data are:

\L-Bocial data :

@.” Social data comes from the likes, tweets & retweets, comments,

‘ideo uploads, and general media that are Uploaded and shared via

social media platforms.

b. ‘This kind of data provides invaluable’ insights into consumer

behaviour and sentiment and can be enormously influential in

marketing analytics.

Scanned with CamScanner

1385

ta Analytics - Sin

Dal lie web is another good source of social data, and toc

oe The ee trends can be used to good effect to increase the volte

Cote eof

bigdata.

ine data? : /

2, Machinedata: das information which is generate,

. pment, sepsorsthatare installed in machinery ,”

industrial 20° Poh track user behaviour. inet, ang

. ta is expected to grow exponentially as the int,

|b This tone oft ever more pervasive and expands around the yn

of

ch as medical devices, smart meters, road camer

= sees, sales and the rapidly growing Internet of Things at

satelite velocity, value, volume and variety of data in they

near future.

3 Transactional data = “

‘Transactional datais generated from all the daily transactions tet

take place both online and offline.

(ic Invoices, payment orders, storage records, delivery receipts are

characterized as transactional data.

( eis Write short notes on classification of data.

a

Unstructured data :

\s— Unstructured data is the rawest form of data.

\b-—Data that has no inherent structure, which may include text

documents, PDFs, images, and video.

¢. This datais often stored in a repository of files.

2 Structured data :

\s-~ Structured data is tabular data (rows and columns) which areve

well defined.

\b= Data containing adefined data type, format, and structure, witb

may include transaction d iti files,

simple spreadshosta on ot Seaditional RDBMS, CSV files

_ 3 Semi-structured data : ‘

2 Textual data file:

as Extensible

Aconsistent

strict,

with a distinct pattern that enables parsize*™*

Markup Language [XML] data files or JSON.

b

format is defined however the structure isnot 7

Semi-;

structured data are often stored as files.

Scanned with CamScanner

jAd (CS5AT-©) Introduction to Data Analytics

Itis based on It is based on

Relational database |RDF. [character and

table. binary data.

Iyvansaction | Matured transaction |Transactionis [No transactio’

|nanagement| and various adapted from management and

concurrency ‘DBMS. Ino concurrency.

techniques.

[Flexibility | Itis schema It is more flexible| Tt very flexible and

dependent and less|than_ structured|there is absence of

flexible. data but less than|schema.

flexible than

‘unstructured data.

(Scalability | It is very difficult to|It is more scalable | It is very scalable.

scale: database|than structured

schema. data.

IQuery Structured query | Queries over (Only textual query|

lperformance| allow complex anonymous nodes|are possible.

joining. are possible.

Explain the characteristics of Big Data.

Big Data is characterized into four dimensions:

‘Volume:

a. Volume is concerned about scale of data i.

at which it is growing.

b. The volume of data is growing rapidly, due to several applications of

business, social, web and scientific explorations.

2 Velocity:

\a—The speed at which data is increasing thus demanding analysis of

streaming data.

b. The velocity is due to growing speed of business intelligence

applications such as trading, transaction of telecom and banking

domain, growing number of internet connections with the increased

‘usage of internet etc.

the volume of the data

Scanned with CamScanner

. , ss

Databoel ifferent forms of data to use f “ 2)

jety : It depicts different for use for analysis

\a—Variety ? Such,

fused, semi structured and unstructured, i"

struct CEA aad

4, Veracity:

La” Veracity is concerned with uncertainty or inaccuracy of,

the det

Inmany cases the data will be inaccurate hence filtering and g,

the data which is actually needed is a complicated taste ing

Alot of statistical and analytical process has to go for d

5 lata cleans

© for choosing intrinsic data for decision making, “aRsing

‘QaeiG | Write short note on big data platform.

1. Big data platform isa type of IT solution that combines the features and

capabilities of several big data application and utilities within a single

solution.

~ 2 Ttis an enterprise class IT platform that enables organization in

developing, deploying, operating and managing a big data infrastructure!

environment, :

Big data platform generally consists ofbig data storage, servers, database,

big data management, busiriess intelligence and other big data

management utilities,

4." It also supports custom development: quexyi integration with

other systems, en eee Pee

8 ne Primary benefitbehind a big data platform id to reduce the complexity

of multiple vendors/ sol is into a one cohesive solution.

6. Big data platform are also del det

; i Tm are ered through cloud where the provi

Provides an all ‘inclusive big data, Solutions and services.

“Wat are the features of big data platform ?

Scanned with CamScanner

1-64 (CS-5AT-6) Introduction to Data Analytics

a. er Oucton toDate Analytica

ssleal

Features of Big Data analytics platform :

1, Big Data platform should be able to accommodate new platforms and

tool based on the business requirement,

2, Itshould supportlinear scale-out,

3. Itshould have capability forrapid deployment.

\c Itshould support variety of data format,

5. . Platform should provide data analysis and reporting tools.

6, Itshould provide real-time data analysis software.

‘4 It should have tools for searching the data through large data sets.

VEG Why there is need of data analytics ?

Need of data analytics :

\4~ Itoptimizes the business performance.

\4x~ Ithelps to make better decisions.

\- Ithelps to analyze customers trends and solutions.

Steps involved in data analysis are:

1. Determine the data: ‘ x

4. The frst step is to determine the data requirements or how the

data is grouped.

b. "Data may be separated by age, demographic, income, or gender.

Data values may be numerical or be divided by category.

Scanned with CamScanner

Data Analytics

M13 Cap

\2- collection of data

a, Thesecond stop in data analytiesis the process of

This can be done through a variety of sources g

online sources, cameras, environmental so

personnel.

3 Organization of data :

a. Thirdstep is to organize the data.

Once thedatais collected, it must be organized soit canbe

_— Iza

Organization may take place on a spreadsheet or ot]

~ software that can take statistical data, her form

\4-“Cleaning of data :

a. Infourth step, the data is then cleaned up before analysis,

b. ‘This means it is scrubbed and checked to ensure there j

duplication or error, and that it is not incomplete. ——-=-18

plication or error, and that itis not incomplete

2° Thisstep helps correct any errors before it goes onto adata analy

tobe analyzed. be

collectin,

Ch as ¢

UTCeS, oF the

Bit,

I] Write short note on evolution of analytics scalability,

ae

1. Inanalytic scalability, we have to pull the data together in a separate

analytics environment and then start performing analysis.

Database 2 :

f-po oe

[Database 3

The heavy processing occurs

in the analytic environment

Analytic server

or PC a

2. Analysts do the merge operation on the data sets which contain 10"

andcolumns, =".

3. The columns represent information about the customers such as

spending level, or status, ey

4, In merge or join, two or more data sets are combined together ot

are typically merged / joined so that specific rows of one data

table are combined with specific rows of another.

Scanned with CamScanner

re

1a (CS-6/IT-6) Introduction to Data Analytics

Ve

5. Analysts also do data proparation. Data preparation ia made up of

joins, aggregations, derivations, and transformations. In this process.

they pull data from various sources and merge it all together to create

the variables required for an anal;

«Massively Parallel Processing (MPP) system )s the most mature, proven,

‘and widely deployed mechanism for storing and analyzing large

amounts of data,

7, AnMPP database breaks the data into independent pieces managed by :

independent storage and central processing unit (CPU) resources.

100 GB | | 100 GB | 100 GB || 100 Ge |} 100 GB

Chunks || Chunks || Chunks || Chunks || Chunks

lterabyte |_, :

tabl

© 100 GB || 100 GB || 100 GB || 100 GB |} 100 GB

Chunks || Chunks || Chunks || Chunks || Chunks j

A traditional database 10 Simultaneous 100-GB queries

will query a one

terabyte one row at time.

| Fig. LL0CL, Massively Parallel Processing et

MPPsystems build in redundancy to make recovery easy.

9, MPP systems have resource management tools =

‘a. Manage the CPU and disk space

b. Query optimizer

Answer |

L With increased level of scalability, it needs to update analytic processes

to take advantage of it.

2: This can be achieved with the use of analytical sandboxes to provide

analytic profossionals with a scalable environment to build advanced

analyties processes.

3. One ofthe uses of MPP database system is to facilitate the building and

deployment of advanced analytic processes.

4. An analytic sandbox is the mechanism to utilize an enterprise data

warehouse.

5. If used appropriately, an analytic sandbox can be one of the primary

drivers of value in the world of big data.

Analytical sandbox :

1. An analytic sandbox provides a set of resources with which in-depth

analysis can be done to answer critical business questions.

Scanned with CamScanner

LOT (C

Data Analyti

eal for data exploration, development of,

Ananalytie sandbox t a

processes, proof of concepts, and prototyping. tig

: ‘ «d processes or

3. Once things progress into ongoing, user-manage red

processes, then the aandbox should not be involved, ti

4. A sandbox is going to be leveraged by a fairly small set of users,

5. There will be data created within the sandbox that is segregated fy,

the production database. ‘ oat aa

6. Sandbox users will also be allowed to load data of their own for bres

time periods as part ofa project, even if that datais not part ofthe ote,

enterprise data model.

\4rApache Hadoop:

a Hadoop, abig data analytics tool which is a Java based free

| software framework. ~

\D-— It helps in effective storage of huge amount of data in a storage

plane ‘known asa cluster. 7 ~

c. It runs in parallel on a cluster and also has bility to process huge

data across all nodes in it.

is. storage system in Hadoop popularly known as the Hadoop

@ ae teen ie ado which helps to splits the large

volume of data and distribute across many nodes present in a

ae cluster. ©

2. KNIME:

i a. KNIME analytics platform is one of the Jeading open solutions for

{ data-driven innovation.

i b. This tool helps in discovering the potential and hidden in a huge

volume of data, it also performs mine for fresh insights, or ‘predicts

the new futures,

{

\i 3. OpenRefine:

\a—OneRefine tool is one of the efficient tools to work on the messy

} and large volume of data.

| b, Iti leansing data, transforming that data from one format

another.

\e-~ It helps to explore large data sets easily.

4. Orange:

\2 Orange is famous open-source data visualization and helps in data

iv analysis for beginner and as well to the expert.

Scanned with CamScanner

4-10d (CS-5/1T-6) Introduction to Data Analytics |

= _mirection to Data Analytics

b, This tool provides interactive workflows with

: large toolbox option

to create the same which helps in analysis and visualizing of data.

5. RapidMiner:

a. RapidMiner tool operates using visual programming and also itis

much capable of ‘manipulating, analyzing and modeling the data.

». RapidMiner tools make data science teams easier and productive

by using an open-source platform for all their jobs like machine

learning, data preparation, and model deployment.

© R-programming :

Le Ris a free open source software programming language and a

software environment for statistical computing and graphics,

Itis used by data miners for developing statistical software and data

analysis,

\—Ithas become a highly popular tool for big data in recent years.

Datawrapper:

\-~ Itis an online data visualization tool for making interactive charts.

\b--“Tt uses data file in a csv, pdf or excel format.

\_-~ Datawrapper generate visualization in the form of bar, line, map

ete. It can be embedded into any other website as well.

@ sebtean:

\a-—Tableau is another popular big data tool. Itis simple and very intuitive

to use.

b.

b. It communicates the insights of the data through data visualization.

¢. Through Tableau, an analyst can check a hypothesis and explore

the data before starting to work on it extensively,

Que 1.13, | What are the benefits of analytic sandbox from the view

of an analytic professional ?

Benefits of analytic sandbox from the view of an analytic

Professional :

\4r“ Independence : Analytic professionals will be able'to work

independently on the database system without needing to continually

80 back and ask for permissions for specific projects.

2 Flexibility : Analytic professionals will have the flexibility to use

whatever business intelligence, statistical analysis, or visualization tools

that they need to use.

8 Efficiency : Analytic professionals will be able to leverage the existing

enterprise data warehouse or data mart, without having to move or

migrate data. e

Scanned with CamScanner

1g

Data Analytics (CS-51T.6)

Freed i i focus on the administratj

+: Analytic professionals can reduce focy inistration

* of yeti Ae ection processes by shifting those maintenanes

eae: ill be realized with th

5. Speed : Massive speed improvement will be realized with the move to

parallel processing. This also enables rapid iteration and the ability to

“fail fast” and take more risks to innovate.

q fits of analytic sandbox from ¢]

Que 1.14. | What are the bene! iyti the

view of IT?

aE

Answer |

Benefits of analytic sandbox from the view of IT :

1, ‘Centralization : IT will be able to centrally manage a sandbox

environment just as every other database environment on the system is

managed.

2 Streamlining: A sandbox will greatly simplify the promotion of analytic

processes into production since there will be a consistent platform for

both development and deployment. .

8 Simplicity : There will be no more processes built during development

that have to be totally rewritten to run in the production environment,

4. Control : IT will be able to control the sandbox environment, balancing

sandbox needs and the needs of other users. The production environment

is safe from an experiment gone wrong in the sandbox.

5. _ Costs : Big cost savings can be realized by consolidating many analytic

data marts into one central system.

we 115. | Explain the application of data analytics.

Application of data analyties :

1. Security : Data analytics applications or, more specifically, predictive

analysis has also helped in dropping crime rates in certain areas.

2. Transportation :

a. Data analytics can be used to revolutionize transportation:

b. Tt can be ‘used especially in areas where we need to transport @

large number of people to a specific area and require seamless

transportation. . >

3, Risk detection:

a. Many organizations were struggling under debt, and they wanted @

© olution to problem of feud. eee ene

b. . They already had enough custom in thei and 80,

thoy applied dots nemeuth customer data in their hands, an

—d

Scanned with CamScanner

S-5/TT-6

1-125 (C! ) Introduction to Data Analytics

They id ‘divide and conquer’ Policy with the data, analyzing recent

Understand rues: and any other important inforaetien te

a. Several top logistic companies are using data analysis to examine

collected data and improve their overall efficiency.

b. Using data analytics applications, the companies were able to find

the best shipping routes, delivery time, ax well a. the most cost-

efficient transport means,

5, Fast internet allocation :

a. While it might. seem that allocating fast internet in every area

makes a city ‘Smart, in reality, it is more important to engage in

smart allocation. This smart allocation would mean understanding

how bandwidth is being used in specific areas and for the right

cause.

tis also important to shift the data allocation based on timing and

priority. It is assumed that financial and commercial areas require

the most bandwidth during weekdays, while residential ‘areas

require it during the weekends. But the situation is much more

complex. Data analytics can solve it.

¢. For example, using applications of data analysis, a community can

draw the attention of high-tech industries and in such cases: higher

bandwidth will be required in such areas.

6 Internet searching :

a. When we use Google, we are using one of their many data analytics

applications employed by the company. :

Most search engines like Google; Bing, Yahoo, AOL ete., use data

analytics, These search engines use different algorithms to deliver

the best result for a search query.

7. Digital advertisement :

a. Data analytics has revolutionized digital advertising.

- b. Digital billboards in cities as well as banners on websites, that is,

most of the advertisement sources nowadays use data analytics

using data algorithms. ,

b

Rene] What are the-different types of Big Data analytics ?

Different types of Big Data analyti

1. Descriptive-analytic

‘a, Ttuses data aggregation and data mining to provide insight into the

past,

Scanned with CamScanner

interpretable by humans.

2 Predictive analytics:

8. It uses statistical models and forecasts techniques to understang

the future.

5. Predictive analytis provides-companies with actionable insights

based on data, It provides estimates about the likelihood ofa furge®

outcome.

3 Prescriptive analytics :

"a. Ituses optimization and simulation algorithms to advice,

outcomes.

b. _Itallows users to “prescribe” a numberof different possible actions

and guide them towards a solution.

4. - Diagnostic analytics : .

8. _Itis used to determine why something happened in the past,

b. “It is characterized by techniques such as drill-down, data discovery,

data mining and correlations.

© Diagnostic analytics takes a deeper look at data to understand the

Toot causes of the events. x

on possible

Gen] Explain the key roles for a successful analyties projects.

saa

Key roles for a'successfull analytics project:

1. Business user : aa

a, Business user is someone who understands the domain area and

usually benefits from the results. a3

b. This person can consult and advise the project team on'the context

of the project, the value of the results, and how the outputs will be

1 ereor ete tne Talus of the ra n

Es py enaecamnete

= ae

2 eb

—

Scanned with CamScanner

rr

1-14 (CS-5/1T-6) Introduction to Data Analytics

pee Se aol Nase

Usually a business analyst, line manager, or deep subject matter

expert in the project domain fulfills this role.

3, Project sponsor :

a, Project sponsor is responsible for the start of the project and provides

all the requirements for the project and defines the core business

problem,

b. Generally provides the funding and gauges the degree of value

from the final outputs of the working team.

¢. Thisperson sets the priorities for the project and clarifies the desired

outputs.

3. Project manager : Project manager ensures that key milestones and

objectives are met on time and at the expected quality.

4 Businéss Intelligence Analyst :

a, Analyst provides business domain expertise based on a deep

understanding’ of the data, Key Performance Indicators (KPIs),

key metrics, and business intelligence from a reporting perspective.

b, Business Intelligence Analysts generally create dashboards and

reports and have knowledge of the data feeds and sources.

5. Database Administrator (DBA) :

a. DBAprovisions and configures the database environment to support

the analytics needs of the working team. «

b. ‘These responsibilities may include providing access to key databases,

or tables and ensuring the appropriate security levels are in place

related to the data repositories.

6. Data engineer : Data engineer have deep technical skills to assist with

tuning SQL queries for data management and data extraction, and

Provides support for data ingestion into the analytic sandbox.

1. Data scientist :

a. Data scientist provides subject matter expertise for analytical

techniques, data modeling, and applying valid analytical techniques

to given business problems.

b, They ensure overall analytics objectives are met,

‘They designs and executes analytical methods and approaches with

the data available to the project.

Que 1:18)] Explain various phases of dats analytics life cycle.

Various phases of data analytic lifecycle are :

Phase 1 : Discovery :

Scanned with CamScanner

Data Analytics es 4

the business domain, including rete,

peer rons euro oinfinization or binteg anit haa attempiey

i wl :

inal rajcetin the pont trom which they ext lean,

2 The team assesses the resources available to support the

terms of people, technology, time, and data. ing the bet

vite in this phase include framing the business Prohen

a tn analptio challenge and formulating initial hypothosce Hs) to teat

and begin learning the data.

Phase 2: Data preparation : ; | ;

1. Phase 2 requires the presence ofan analytic sandbox, in which the team

" canwork with data and perform analytics for the duration ofthe project

+ The teamneedstoorcute extra lod, and transform (BLT) or extras,

* alee and load (ETL) to got data into the sandbox. Data should

transformed in the ETL process so the team can work with: itand analyze

it 2 :

3. In this phase, the team also needs to familiar:

thoroughly and take steps to condition the data,

Phase 3 : Model planning :

1 Phase 3 is model planning,

techniques, and workflow it

Project in,

ize itself with the data

where the team determines the methods,

intends to follow.for the subsequent model

building phase,

2. The team explores the data to learn about the relationships between

variables and subsequently selects ‘Key variables and the most suitable

models.

- Phase 4: Model building:

1 In phase 4, the team develops data sets for testing, training, and

Production purposes,

2. Inaddition, in this phase the team buik

lds and executes models based on

the work done in the model planning phase,

ite results ; a

1. In phase 5, the team, in collabo; iti ith maj

incre 8th team ration withi major’ stakeholders,

of the project are ilure based

Me ctiteria developed in phase 1 Te *SU°EESS OF a fare

Scanned with CamScanner

1-16 J (CS-5/IT-6) Introduction to Data Analytics

In addition, the team may run a pilot project to implemont the models in

production environment,

What are the activities should be performed while

identifying potential data sources during discovery phase ?

Main activities that are performed while identifying potential data sources

during discovery phase are :

1. Identify data sources :

a. . Make alist of candidate data sources the team may need to test the

initial hypotheses outlined in discovery phase.

b. Make an inventory of the datasets currently available and those

that can be purchased or otherwise acquired for the tests the team

wants to perform,

2 Capture aggregate data sources :

a. This is for previewing the data and providing high-level

understanding.

b, Itenables the team to gain a quick overview of the data and perform

further exploration on specific areas.

c. Italso points the team to possible areas of iriterest within the data.

3 Review the raw data:

a. Obtain preliminary data from initial data feeds.

b. Begin understanding the interdependencies among the data

attributes, and become familiar with the content of the data, its

quality, and its limitations. :

4.. Evaluate the data structures and tools needed :

a. The data type and structure dictate which tools the team can use to

analyze the data. y

b. This evaluation gets the team thinking about which technologies

may be good candidates for the project and how to start getting

access to these tools.

5. “Scope the sort of data infrastructure needed for this type of

problem : In addition to the tools needed, the data influences the kind

of infrastructure required, such as disk storage and network capacity.

Geizn| Explain the sub-phases of data preparation.

Sub-phases of data preparation are

1. Preparing an analytics sandbox :

Scanned with CamScanner

he te

of data preparation requires t] am to oh, >

oe haualfiesenlionsniicnte tabete explore the arta

interfering with live production databases. edt

i i itis abest practice

le ‘the analytic sandbox, it i

* a ea edit

} and varieties of data for a Big Data analytics project,

is is can i i immary-level aggregated

| include everything from suimmary-level aggre, ie

ly o era data, raw data feeds, and unstructured text data fn

i call logs or web logs.

2 Performing ETLT:

2 InETL, users perform extract, transform, load Processes to

data from adata store, perform data transformations, and load the

data back into the data store.

{

|

{

| Data Analytics

bal

|

I

|

\

!

& Acritical aspect ofa data science project is to become familiar with

Fi the data itself,

b. Spending time to learn the nuances of the datasets provides ‘context

~ to understand what constitutes « reasonable value and expected

& Data conditioninigrefers to

datasets, and

b. Data conditi

‘the process, ‘of cleaning data, normalizing

erforming transformations on te: data.

‘oning can involve many complex steps toj

join or merge

‘asets into a state that enal

bles analysis,

i datasets or otherwise get datas

ul 7

Scanned with CamScanner

1-184 (CS-8IT-6)

ae Introduction to Data Analytics

wera. What are activities that are performed in model planning |

phase?

Activities that are performed in model planning phase are :

1, Assess the structure of the datasets:

8, The structure of the data sets is one factor that dictates the tools

and analytical techniques for the next phase.

b, Depending on whether the team plans to analyze textual data or

transactional data different tools and approaches are required.

2. Ensure that the analytical techniques enable the team to meet the

business objectives and accept or reject the working hypotheses. |

3. Determine if the situation allows a single model or a series of techniques

as part of a larger analytic workflow.

Qaer22-] What are the common tools for the model planning

Se

Common tools for the model planning phase :

LR: .

a Ithasacomplete set of modeling capabilities and provides a good

environment for building interpretive models with high-quality

code.

b. It has the ability to interface with databases via an ODBC

connection and execute statistical tests and analyses against Big

Data via an open source connection.

2 SQL analysis services : SQL Analysis services can perform in-

database analytics of common data mining functions, involved

aggregations, and basic predictive models.

3 SAS/ACCESS:

a. SAS/ACCESS provides integration between SAS and the analytics

sandbox via multiple data connectors such as OBDC, JDBC, and

OLE DB.

b, SASitself'is generally used on file extracts, but with SAS/ACCESS,

users ean connect to relational databases (such as Oracle) and

data warehouse appliances, files, and enterprise applications.

TEER] explain the common commercial tools for model

building phase.

Scanned with CamScanner

; pet

' Data Analytics ee CSénr9

Commercial common tools for the model building phase ;

1. SASenterprise Miner:

a. - SAS Enterprise Miner allows users to run predictive and deseng

models based on arg volumes of data from across the enten®

i 3. Teintroperate with other large data stores, hasmany partner

| and is built for enterprise-level computing and analytics,

! 2 SPSS Modeler provided by IBM : It offers methods to explore ca

analyze data through a GUI.

8 Matlab Matlab provides a high-level language for performing a va ty

of data analytics, algorithms, and data exploration.

4 Apline Miner : Alpine Miner provides a GUI frontend for userg to

develop analytic workflows and interact with Big Data tools ‘and platforms

on the backend.

5. STATISTICA and Mathematica are also popular and well-régarded data

mining and analyties tools.

Que 1.247) Explain common open-source tools for the model

building phase.

Free or open source tools are :

1. Rand PLR:

a. _R provides a good environment for building interpretive models

and PL/Ris a procedural language for PostgreSQL with R,

‘Bb. Using this approach means that R commands can be executed in

database.

© _ This technique provides higher performance and is more scalable

than running R in memory.

2 Octave:

a. It is.a free software programming language for computational

modeling, has some of the functionality of Matlab.

b. Octave is used in major universities when teaching machine

learning.

3. WEKA:WEKA\is a free datamining sofware package with an analyti¢

‘workbench. The functions created in WEKA can ke executed withia

Java code. :

4°. Python: Python: is programming language that provides toolkits for

machine learning and analysis, such, ipy, pandas, and relat

data Visualization using matplotis ee ee ae,

|

Scanned with CamScanner

J (CS-5/IT-6) Introduction to Data Analyti

QL in-database implementations, such as MADIib, provide

analternative to in-memory desktop analytical tools. MADIib provides

an open-source machine learning library of algorithms that can be

executed in-database, for PostgreSQL.

©©9

Scanned with CamScanner

hn. a

é 1: Data Analysis :

Perel Regression Modeling,

Multivariate Analysis

Bayesian Modeling,

Inference and Bayesian

‘Networks, Support Vector

and Kernel Methods

Part-2

Part-3 : Analysis of Time Series :

Linear System Analysis

of Non-Linear Dynamics,

Rule Induction

+ Neural Networks

Learning and Generalisation,

Competitive Learning,

Principal Component Analysis

and Neural Networks

+ Furay Logic : Extractin

: 6 Fuzzy

Models From Data, Fuzzy

Dasion Trees Stoehany

‘Search Method ott

215 (Cs.57-6) . dl

Scanned with CamScanner

2-20(CS-8IT-6) Data Analysis

1.-. Regression models are widely used in analytics, in general being among

the most easy to understand and interpret type of analytics techniques.

2, Regression techniques allow the identification and estimation of possible

relationships between a pattern or variable of interest, and factors that

influence that pattern. :

3, For example, a company may be interested in understanding the

effectiveness of its marketing strategies.

4, ~ Aregression model can be used to understand and quantify which of its

marketing activities actually drive sales, and to what extent.

5, Regression models are built to understand historical data and relationships

‘to assess effectiveness, as in the marketing effectiveness models.

6, Regréssion techniques are used across a range of industries, including

financial services, retail, telecom, pharmaceuticals, and medicine.

7| what are the various types of regression analysis

Various types of regression analysis techniques : .

1. Linear regression : Linear regressions assumes that there is a linear

relationship between the predictors (or the factors) and the, target -

variable.

2. Non-linear regression : Non-linear regression allows modeling of

non-linear relationships.

& Logistic regressio

variable is binomial (accept or reject).

4. Time series regression : Time series regressions is used to forecast

” futiure behavior of variables based on historical time ordered data.

Logistic regression is useful when our target

Scanned with CamScanner

Jauueoguieg YIM peuuess,

“4

235 CSanT9)

FSET] we hr Hee rereson model

del:

tibet tha denon indepen

ie ependent variable in the repeat

ayo rc tnder greed Sa

Linear regression m

1. Weconsider the model

‘variable, When there io

‘model, the model is gener

2 Consider asimple linear regression model

ye Pt BXte

Where,

pis termed asthe dependent or study variable and X is termed asthe

independent or explanatory variable.

‘The terms ,and , arethe parameters ofthe model. The parameter,

ii rermed aa'an intercept term, and the parameter fis termed as the

slope parameter.

‘These parameters are usually called as regression coefficients. The

‘unobservable error component. accounts for the failure of data to lie on

the straight line and represents the difference between the true and

“observed realization of.

‘There can be several reatons for such difference, such as the effect of

alldcleted variables in the model, variables may be qualitative, inherent

randomness in the observations ete.

‘We assume that e is observed as independent and identically distributed

‘random variable with mean zero and constant variance o* and assume

that eisnormally distributed.

6. Theindependent variables are viewed as controlled by the experimenter,

0 it is considered as nox-stochastie whereas y is viewed as a random

variable with :

BG) =}, +p, Xand Var () = 0%

1. Sometimes X can also bea random variable, In such a case, instead of

the sample mean snd sample variance ofy, we consider the conditional

mean ofy given X =x as

BGs) = by + Bye

And the conditional varience ofy given X= as

Varty |x)

8 When the values of ff, and o* are known, the model is completely

described. The parameters, 8, and o are generally unknown in practice

and ¢ is unobserved. Tae determination of the statistical mode!

¥=0,+0,X + edepends onthe determination .. estimation) of Bo Py

and o% In order to know the values of these parameters, be

observations (xy y,Mi=1,...n) on (X,9) are observed/eoliected and f°

used to determine these unknown parameters.

2-4J (CS-S/IT-6) Data Analysis

Write short note on multivariate analy:

1, . Multivariate analysis (MVA) is based on the principles of multivariate

statistics, which involves observation and analysis of more than one

statistical outcome variable ata time.

2 These variables are nothing but prototypes of real time situations,

products and services or decision making involving more than one

variable.

3. MVAis used to address the situations where multiple measurements

are made on each experimental unit and the’relations among these

measurements and their structures are important.

4. Multiple regression analysis refers to a set of techniques for studying

the straight-line relationships among two or more variables.

5. Multiple regression estimates the B's in the equation

y= Bo + Baty + Baty + + Bey +S

Where, thes are the independent variables. y is the dependent variable.

‘The subscript j represents the observation (row) mamber. The f’s are

the unknown regression coefficients. Their estimates are represented

by b's. Each B represents the original unknown (population) parameter,

while b is an estimate of this B. The g is the error (residual) of observation

i

6. Regression problem is solved by least squares. In least squares method

regression analysis, the b's are selected so as to minimize the sum of the

squared residuals. This set of is is not necessarily the set we want, since

they may be distorted by outliers points that are not representative of

the data, Robust regression, an alternative to least squares, seeks to

reduce the influence of outliers. kK

7. Multiple regression analysis studies the relationship between a

dependent (response) variable and p independent variables (predictors,

regressors).

8, The sample multiple regression equation is

Hy = byt byt te HOA,

10, Ifp=1, the model is called simple linear regression, The intercept, by, is

the point at which the regression plane intersects the ¥ axis. The bare

the slopes of the regression plane in the direction of x. These coefficients

are called the partial-regression coefficients, Each partial regression

coefficient represents the net effect the * variable has on the dependent

variable, holding the remaining x’s in the equation constant

RR ee

Scanned with CamScanner

probabilisti¢ graphical model that uses

Bayesian inference for probability computations.

2, A Bayesian network is a directed acyclic graph in

A eponds to. conditional dependency, and each not

which each edge |

de corresponds to

‘aunique random variable. |

4, Bayesian networks aim to model conditional dependence by representing |

edges in a directed graph. |

. (PC=DPC=F)

a 05 05

3, Through these ; os

relationshi; A ngier nani

the randim ele rae one can efficiently conduct inference °°

e graph through the use of factors.

Scanned with CamScanner

2-69 (CS-51T-6) Data Analysis

4. Using the relationships specified by our Bayesian network, wecan obtain

acomppact, factorized representation of the joint probability distribution

by taking advantage of conditional independence.

-5. Formally, if an edge (A, B) oxists in the graph connecting random

variables A and B, it means that P(B A) isa factor in the joint probability

distribution, so we must know P(B|A) for all values of B and A in order

to conduct inference,

6 Inthe Fig. 2.5.1, since Rain has an edge going into WetGrass, it means

that P(WetGrass| Rain) will be a factor, whose probability values are

specified next to the WetGrass node in a conditional probability table.

7. Bayesian networks satisfy the Markov property, which states that a

node is conditionally independent of its non-descendants given its

parents. In the given example, this means that

P(Sprinkler | Cloudy, Rain) = P(Sprinkler | Cloudy)

Since Sprinkler is conditionally independent of its non-descendant, Rain,

given Cloudy.

‘Write short notes on inference over Bayesian network.

Hoon

Inference over a Bayesian network can come in two forms.

L_ First form:

a. The first is simply evaluating the joint probability of a particular

assignment of values for each variable (or a subset) in the network.

b. For this, we already have a factorized form of the joint distribution,

so we simply evaluate that produet using the provided conditional

probabilities. :

© Hfweonly care about a subset of variables, we will need to marginalize

out the ones we are not interested in,

Inmany cases, this may result in underflow, so itis common to take

the logarithm of that product, which is equivalent to adding up the

individual logarithms of each term in the product,

‘Second form :

a, Inthis form, inference task is to find P

some assignment of a subset of the v.

other variables (our eyidence, e), 4

In the example shown in Fig. 2.6.1, we have to find

Sprinkler, WetGrass | Cloudy),

where (Sprinkler, WetGrass} is our.x, and (Cloudy] is oure.

© In order to calculate this, we use the fact that PCr le) = Pr, e) / Ple)

§,22G,0) where a is a normalization constant that we will calculate at

the end such that Plx|e) + P(x | ¢)=1,

wy

(|e) or tofind the probability of

rariables (x) given assignments of

il

Scanned with CamScanner

Palen a5 Pe

© Bor the given oxamplo in ig. 26.1 we c

WetGrass | Cloudy) a lions,

Sprinkler, WetGraas | Cloudy) =

an calculate Pig,

Scanned with CamScanner

2-8J (CS-5/IT-6) Data Analysis

products. Often the spares inventory consists of thousands of distinct

part numbers. *

b. To forecast future demand, complex models for each part number

can be built using input variables suchas expected part failure

rates, service diagnostic effectiveness and forecasted new product

shipments.

¢, However, time series analysis can provide accurate short-term

, | forecasts based simply on prior spare part demand history.

3% Stock trading:

a. Some high-frequency stock traders utilize a technique called pairs

trading.

b. _Inpairs trading, an identified strong positive correlation between

the prices of two stocks is used to detect a market opportunity.

¢. Suppose the stock prices of Company A and Company B consistently

move together.

@ Time ceries analysis can be applied to the difference of these

companies' stock prices over time. :

e. Astatistically larger than expected price difference indicates that it

is a good time to buy the stock of Company A and sell the stock of

Company B, or vice versa.

Qiie 2.8 What are the components of time series ?

=

Answer

A time series can consist of the following components :

1, Trends: ' ‘

a. ‘The trend refers to the long-term movement in a time series.

b, It indicates whether the observation values are increasing or

decreasing over time.

c. _Examples of trends are a steady inerease in sales month over month

or an annual decline of fatalities due to car accidents.

2 Seasonality :

1, The seasonality component deseribes the fixed, periodic fluctuation

in the observations over time.

b. Itisoftenrelated tothe calendar.

For example, monthly retail sales can fluctuate over the year due

to the weather and holidays.

3 Cyclic: .

a. Acyclic component also refers to a periodic fluctuation, which is not

as fixed. :

Scanned with CamScanner

mH d

Data Analytics 95 (C85, ‘|

1b Forexample, retails salos are influenced by the general state a

economy. ‘ j

EHH Bxpiain rule induction.

1. Rufeinduetion is data mining process of deducing i then rules fom

dataset.

2. These symbolic decision rules explain an inherent relationshi;

the attributes and class labels in the dataset.

3, Many real-life experiences are based on intuitive rule induction,

4.” Rule induction provides a powerful classification approach

easily understood by the general users.

5. Itisused in predictive analytics by classification of unknown data,

6. Rule induction is also used to describe the patterns in the data.

7. The easiest way to extract rules from a data set.

that is developed on the same data set.

j

iP between |

that can be

is from a decision tree

Que 2:10:] Explain an iterative procedure of extracting rules from

data sets.

1 Sequential cove;

ting is an iterative procedure of i |

Sequential Pi of extracting rules from

‘The sequential covering approach attempt: in |

dalaserlass ty oeeriné 4PPFoach attempts to find all the rules in the i

2 The algorithm starts wit]

b

Scanned with CamScanner

2-10 (CS-5/IT-6) .- Data Analysis

SS naan

Step 2: Rule development:

a. The objective in this step is to cover all “+” data points using

classification rules with none or as few “-” as possible.

b. For example, in Fig. 2.10.2 , ruler, identifies the area of four “+” in

the top left corner. .

c. Since this rule is based on simple logic operators in conjunets, the

boundary is rectilinear.

4 Once rule r, is formed, the entire data points covered by r, are

eliminated and the next best rule is found from data sets.

Step 3: Learn-One-Rule:

a. Each rule r, is grown by the learn-one-rule approach.

b, Bach rule starts with an empty rule set and conjuncts are added

one by one to increase the rule accuracy.

c. Rule accuracy is the ratio of amount of “+” covered by the rule to all

records covered by the rule :

Correct records by rule

racy A (7) = Correct records by rule__

Rule accuracy A (7) = “5iT-ecords covered by the rule

dQ. Learn-one-rule starts with an empty rule set: if ) then class = “+”.

e. The accuracy of this rule is the same as the proportion of + data

points in the data set. Then the algorithm greedily adds conjuncts

‘until the accuracy reaches 100 %.

{If the addition of a conjunct decreases the accuracy, then the

algorithm looks for other conjuncts or stops and starts the iteration

of the next rule.

Scanned with CamScanner

k

5 Aer are is develope, thon all the data prints covera

\ fale are eliminated from the data set, od

it bh. The above steps are repeated for the next rule to cover the ne

k the "+" data points, F |

; & nF. 2105, rer developed ae the data int coverd

ke are eliminated, r

5

|

Step 5 : Development of rule set

After the rule set is developed to identify all “+” data points, the rule

| model is evaluated with a data set used for pruning to reduce

generalization errors.

b. The metric used to evaluate the need for pruning is (p - np +n,

where p is the number of positive records covered by the rule and

‘nis the number of negative records covered by the rule.

© Allrules to identify “+” data points are aggregated to form a rule

group. . -

‘Scanned with CamScanner

2-123 (CS.5/IT-6) Data Analysis

Describe supervised learning and unsupervised

1,__ Supervised learning is also known as associative learning, in which the

network is trained by providing it with input and matching output

patterns,

2. Supervised training requires the pairing of each input vector with a

target vector representing the desired output. z

‘8, Thoinput weetor together with the corresponding target vectors called

_ training pair.

_ Tosolve a problem of supervised learning following steps are considéred :

a. Determine the type of training examples.

b. Gathering of a training set.

cx: Determine the input feature representation of the learned function.

4. Determine the structure of the learned function and corresponding

learning algorithm. ‘

e. Complete the design. i

4,

5. Supervised learning can be classified into two categories :

i’ Classification ii, Regression _

‘Unsupervised learning :

1. Unsupervised learning, an output unitis trained to respond to clusters

of pattern within the input.

3. _Inthis method of training, the input vectors of similar type are grouped

without the use of training data to specify how atypical member of each

group looks or to which group a member. belongs.

3. Unsupervised training does not require ‘a teacher; it requires certain

guidelines to form groups.

4, Unsupervised learning can be classified into two categories :

i, Clustering ii Association

ai] Differentiate between supervised learning and

Difference between supervised and unsupervised learning: “

Scanned with CamScanner

data as input.

2 | Computational complexity is Computational complexity is less,

very complex. sss

2 |itusesofflineanalysis, _|Ituses real time analysis of data,

[Number of classes is | Number of classes is not known,

known.

5. |Accurate and: reliable | Moderate accurate and reliable|

results. results.

[EEEZAT] What is the'multilayer perceptron model ? Explain it

Multilayer perceptron is a class of feed forward artificial neural networl

Multilayer perceptron model has three layérs; an input layer, and output

layer, and a layer in between not connected directly to the input or the

output and hence, called the hidden layer.

For the perceptrons in the input layer, we use linear transfer function, | +

and for the perceptrons in the hidden layer and the output layer, we use

sigmoidal or squashed-S function.

‘The input layer serves to distribute the values they receive to the next |

layer and so, does not perform'a weighted sum or threshold.

The input-output mapping of mi

Fig. 2.13.1 and is represented by :

wultilayer perceptron is shown ia

Scanned with CamScanner

2-14d (CS-5/IT-6) Data Analysis

6 Multilayer perceptron does not increase computational power over a

single layer neural network unless there is a non-linear activation

function between layers.

i Draw and explain the multiple perceptron with its

learning algorithm.

1. The perceptrons which are arranged in layers are called multilayer

(multiple) perceptron.

2. This model has three layers : an input layer, output layer and one or

more hidden layer.

3, Fortheperceptrons in the input layer, the linear transfer function used

and for the perceptron in the hidden layer and output layer, the sigmoidal

or squashed-S function is used. The input signal propagates through the

network in a forward direction. -

4. Inthe multilayer perceptron bias b(n) is treated as a synaptic weight

driven by fixed input equal to + 1.

x(n) = (41, x01), 24(0), serene (OI?

where n denotes the iteration step in applying the algorithm,

5. Correspondingly we define the weight vector as :

w(n):= (b(n), w,(n), wn (nF -

6. Accordingly the linear combiner output is written in the compact form

Vin) = Swilndsn) = wn) x(n)

Architecture of multilayer perceptron :

Inputlayer First hidden Second hidden 2

. layer layer

Scanned with CamScanner

a. SoG UF

Data Analytics

‘ig. 2.14.1 shows the architectural model of multilayer

* oe hidden layer and an output layer.

network progresses in a forward ding,

Bel toy oe a ae layei-hy-layer basis, ction |

m

arning algorithm : ae

Le “ th number of input set x(n), is correctly classified into Fam

1 itt able classes, by the weight vector w(n) then no adjustmese ty

se] ,

weights are done

w(n + 1) = w(n)

Tf w"x(n) > 0 and x(n) belongs to class G,.

w(n ¥ 1) = wln)

IfwTe(n) <0 and x(n) belongs to class G,,

2... Otherwise, the weight vetor ofthe perceptron is updated in accordane

with the rule.

Perceptron wig

network size are :

1. Growing algorithms :

& This group of algorithms begins with training a relatively smal)

aiden rehitecture and allows new units ae4 connections to be

aided during the training process, whos necessary,

b Three growing algorithms are commonly applied: the upstart

algorithm, the tiling algorithm

., and the cascade correlation,

1

Algorithms to optimize the |

Poly to binary input/output variables and networks

with step activation function,

a The third one, which is applicable to problems with continuous

input/output, Varia} it it

2

& General

of ‘training a relatively large

ly removing eithi

er weights or complete units

Necessary,

llows the ‘network to learn, quickly and witha |

‘al conditions and local minima,

Scanned with CamScanner

216 J (CS-5/IT-6)

Approach for knowledge extraction from multilayer perceptrons :

a. Global approach : :

1. This approach extracts a set of rules characterizing the behaviour

~. of the whole network in terms of input/output mapping.

2. A tree of candidate rules is defined. The node at the top of the tree

represents the most general rule and the nodes at the bottom of

.» the tree represent the most specific rules. se

3. Each candidate symbolic rule is tested against the network's

behaviour, to see whether such a rule can apply.

4. The process of rule verification continues until most of the training

set is covered.

5. One of the problems connected with this approach is that, the

number of candidate rules can become huge when the rule space

becomes more detailed.

b. Local approach :

1. This approach decomposes the original multilayer network into a

collection of smaller, usually single-layered, sub-networks, whose

input/output mapping might be easier to model in terms of symbolic

rules.

2, Based on the assumption that hidden and output units, though

sigmoidal, can be approximated by threshold functions, individual

units inside each sub-network are modeled by interpreting the

incoming weights as the antecedent of a symbolic rule:

3. The resulting symbolic rules are gradually combined together to

define a more general set of rules that describes the network as a

whole.

Data Analysis

4, The monotonicity of the activation function is required, to limit the

number of candidate symbolic rules for each unit.

5, Local rule-extraction methods usually employ a special error

function and/or a modified learning algorithm, to encourage hidden

and output units to stay in a range consistent with possible rules

and to achieve networks with the smallest number of units and

weights. .

Discuss the selection of various parameters in BPN.

Selection of various parameters in BPN (Back Propagation Network):

1. Number of hidden nodes : i:

Scanned with CamScanner

Jauueoguieg YIM peuuess,

Dat Analytice 2175 (C8-51T.4)

‘The uiding criterion isto soleet tho minimum nodes which would

not impair the network performance so that the memory demand

for atoring the weights ean be kopt minimum.

When the number of hidden nodes is equal to the number of

‘raining patterns thelearning could be fastest,

In such eases, Back Propagation Network (BPN) remembers

‘raining patterns losing all generalization capabilities.

iv. Hence, as far as generalization is concerned, thé number of hidden

nodes should be small compared to the numberof training pattems

‘ay 10:1),

2 Momentum coefficient (a):

i The another method of reducing the training time is the use of

momentum factor because it enhances the training process,

fe

(Weight change

srithout momentum)

alawi"

(Momentum term)

{The momentum also overcomes the effect of local minima,

‘ii, Itwill carry a weight change process through one or local minima

and get it into global minima,

3. Sigmoidal gain @)

i. When the weights become large and force the neuron to operate

in aregion where sigmoidal function is very flat, a better method

of coping with network paralysisis to adjust the sigmoidal gain,

By decreasing this scaling factor, we effectively spread out

sigmoidal function on wide range so that training proceeds faster.

4. Local minima :

i. One of the most practical solutions involves the introduction of @

shock which changes all weights by specific or random amounts.

ii If this fails, thon the solution is to re-randomize the weights and

start the training all over. Z

‘iL Simulated annealing used to continue training until local minis

in reached.

iv, After this, simulated annealing is stopped and BPN continues

until global minimum is reached, PP

¥. Inmost ofthe cases, only afew simu

two-stage process aru needed.

lated annealing cycles ofthis

164 (CS-5IT-8) Data Analysis

+

ves

or for knowledge extraction from multilayer perceptrons :

a. Global approach :

1, Thisapproach extracts a set of rules characterizing the behaviour

ofthe whole network in terms of input/output mapping,

2, Atree of candidate rules is defined. The node at the top of the tree

represents the most general rule and the nodes at the bottom of

the tree represent the most specific rules, : -

3. Each candidate symbolic rule is tested against the network's

behaviour, to see whether such a rule can apply.

4, The process of rule verification continues until most of the training

set is covered.

5. One of the problems connected with this approach is that, the

number of candidate rules can become huge when the rule space

becomes more detailed.

b. Local approach :

1. This approach decomposes the original multilayer network into a

collection of smaller, usually single-layered, sub-networks, whose

input/output mapping might be easier to model in terms of symbolic

rules.

2 Based on the assumption that hidden and output units, though

sigmoidal, can be approximated by threshold functions, individual

units inside each sub-network are modeled by interpreting the

incoming weight’ as the antecedent of a symbolic rule.

3. The resulting symbolic rules are gradually combined together to

define a more general set of rules that describes the network as a

whole,

4. The monotonicity of the activation function is required, to limit the

number of candidate symbolic rules for each unit.

5. Local rule-extraction methods usually employ a special error

function and/or a modified learning algorithm, to encourage hidden

and output units to stay in a range consistent with possible rules

and to achieve networks with the smallest number of units and

weights,

Qa] Discuss the selection of various parameters in BPN.

Selection of various parameters in BPN (Back Propagation Network) :

le Number of :

Scanned with CamScanner

‘The guiding criteri

not impair the network performance so that the meme; ch woul

“> 2° foe atoring the weights can be kept minimum, "9 demand

en the number of hidden nodes is equal to th,

hc patterns, the learning could be fastest,” bey 5

In such’eases, Back Propagation Network (BPN) y¢,

training patton losing all generalization capabilitigg“™°™bey

iv. Hence, a far a8 generalization is concerned, thé number op

nodes should be small compared to the number of training hidden,

\ Patterns

(say 10:1).

2 Momentum coefficient (a) :

i! The another method of reducing the training time ithe ug A

momentum factor because it enhances the training Process,

(Weight change

‘without momentum),

law)”

(Momentum term)

i, The momentum also overcomes the effect of local minima.

iii. Itwill carry a weight change process through one or local minima

and get it into global minima,

3. Sigmoidal gain (2):

i When the weights become large and force the neuron to operate

in aregion where sigmoidal function is very flat, a better meth

of coping with network paralysis is to adjust the sigmoidal be

ii: By decreasing this scaling ‘factor, we effectively iret

sigmoidal function on wide range so that training proceeds

4. Local minima : ‘ a

; jon o

i. One of the tiost practical solutions involves the introductions.

shock which changes all weights by specific or random =

ii If this fails, then the solution is to re-randomize the weis! :

start the training all over. iain

Hi” Simulated annealing used to continue training until oeal ™®

is reached. continues

iv. After this, simulated annealing is stopped and BPN ©

until global minimum is reached. sivas

In most of the cases, only a few simulated annealing °¥'

two-stage process are needed.

es of tit

a

Scanned with CamScanner

9-18 (CS-5AT-6) vatunieth

Learning coefficient (n) :

i, The learning coefficient cannot be negative bee i

i this would

cause the change of weight vector to ase 0

vector position. move away from ideal weight

i, If the learning coefficient is zero, no le

hence, the learning coefficient must be p.

ii, Ifthe learning coefficient is greater than 1, the wei i

overshoot from its ideal position and oscillate, nt et" Mil

iv. . Hence, the learning coefficient must be between zero and one.

eae] What is learning rate ? What is its function 2

ieee |

1, Learning rate is’ constant used in learning algorithih that define the

speed and extend in weight matrix corrections, g

2, Setting a high learning rate tends to bring instability and the system is

difficult to converge even to a near optimum solution.

3, Alow value will improve stability, but will slow down convergence.

Learning function :

1. In most applications the learning rate is a simple function of time for

example LR. = (1 +2).

2 These functions have the advantage of having high values during the

first epochs, making large corrections to the weight matrix and smaller

values later, when the corrections need to be more precise.

3, Using a fuzzy controller to adaptively tune the learning rate has the

added advantage of bringing all expert knowledge in use.

4," Ifitwas possible to manually adapt the learning rate in every epoch, we

would surely follow rules of the kind listed below :

‘a. Ifthe change in error is small, then increase the learning rate.

b, _ Ifthere are a lot of sign changes in error, then largely decrease the

learning rate.

, If the change in error is small and the speed of error change is

small, then make a large increase in the learning rate,

oeaiay] Explain competitive learning.

Competitive learning is a form of unsupervised learning in artificial

neural networks, in which nodes compete for the right to respond to a

Subset of the input'data, increas

Avariant of Hebbian learning, competitive learning works by increasing

the specialization of each node in the network. It is well suited to finding

clusters within data. .

arning takes place and

ositive.

a

Scanned with CamScanner

ata Analytics”

dels and al

a ede vector ava its arotiert ‘

tive learning model there are hierarchical ajyo¢

4 Inacompt ‘th inhibitory and excitatory connections, Mite

hi

: nections are between individual la

Te Soy connec ire between units in layered clustery’ *

; .

Unitsin a cluster are either active or inactive.

t ‘There are three basic elements to a competitive learning rule.

] the same except fo ‘a

of neurons.that are all cep be ia :

a a eriboted synaptic weights, and which therefore dnl

Giferently toa given set of input patterns, a

‘Alimit imposed on the “strength” of each neuron,

‘Amechanism that permits the neurons to compete forthe right

respond to a given subset of inputs, such that only one ouip

respon (or only one neuron per group), is active (i.e, on’) stg

time. The neuron that wins the competition is called a “winner.

take-all” neuron.

ERAT] wxplain Principle Component Analysis (PCA) in dan

analysis.

1

1. PCA is a method used to reduce number of variables in dataset by

extracting important one from a large dataset.

2 Itreduces the dimension of our data with the aim of retaining as much

informations possible.

3. In other words, this method combines highly correlated variables

together to forma smaller number of an artificial set of variables which

its called principal components (PC) that account for most-variance in?

ta.

4. “A principal component can be defined as a linear combination of

optimally-weighted observed variables.

5. The first principal component retains: maximum variation

a. Prstttinthe original eomponents. ‘

* The principal components are the eigenvectors ofa covariane® ie

os hence they are orthogonal. a

ar hate PCA are these principal components, the number of WHE?

The POs aaa tual the number of original variables. |

in the Pos Some useful properties which are listed beIO™ a}

Festa 8 essentially the linear combinations of °°

les and the weights vector

4. The Pos an ector,

*¢ orthogonal, : u

ms based on the principle of compet;

tization and sel: :

i

that was

Scanned with CamScanner

(cst) Data Analysis

» | sed

7 ‘The variation present in the PC decrease as we move from the Ist

g © pcto the last one.

, Define fuzzy logic and its importance in our daily life.

What is role of crisp sets in fuzzy logic?

1, Fuzzy logic is an approach to computing based on “degrees of truth”

rather than “true or false” (1 or 0).

2, Fuzzy logic includes 0 and 1 as extreme cases of truth but also includes

the various states of truth in between.

3. Fuzzy logic allows inclusion of human assessments in computing

problems.

4, - Itprovides an effective means for conflict resolution of multiple criteria

and better assessment of options.

Importance of fuzzy logic in daily life :

L Ruy logic is essential for the development of human-like capal

‘AL

2. It isused in the development of intelligent systems for decision making,

‘identification, optimization, and control.

3. Fuzzy logic is extremely useful for many people involved in research

and development including engineers, mathematicians, computer

« software developers and researchers.

4. Fuzzy logichas been used in numerous applications such as facial pattern

Tecognition, air conditioners, vacuum cleaners, weather forecasting

systems, medical diagnosis and stock trading.

{tcontains the precise location of the set boundaries.

It provides the membership value of the set.

a Define classical set and fussy seta: State the importance

fuzzy sets,

Scanned with CamScanner

Jauueoguieg YIM peuuess,

oy Fun

2219 C545

aa

sate

ms amt it

Octet gy

5 sip rea, |

‘3. Tho-assial sti de

Fseplitted into two Br

Fuzzy set

gaz members and non-members,

= satin stein dy

cee io Unb eg

Pasa cy

etanyeleedD

rhere jit the degre of membership of in A

Importance of fuzzy set:

sere und forthe modeling and ncusion of contradiction ins kava

ise.

2. tals ineregss the system autonomy.

-based appliance.

2 lena pero eee

“TE cw nd conte cs iat fa

Tilo Tope an lomont | ray loge supports

Uiksrniongs tor don aot ofmembershiofelemen

Belong toa act

‘2 | Crisp logic is built on a) Foszy logis built on a mutt

froth values| truth vals.

Grae).

[The statemont which Ia] Afuay proposition sa

ra eer Na ataoqure a

Tethisaled a propostonn

voy ee. 4

7] twofold nana af oi mile 2

law of non-contradiction | contradiction are ted

eld good inerip ge |

ae

elo

cout) Data Analysis

Define the membership function and state its importance

wel 0, Also discuss the features of membership functions.

snip function t

Members!

ip function for a fuzzy sot A on the universe of discourse X

Amenterst? :X- [0,1], where each element of X is mapped toa value

fetween Oand 1.

Thisvalu, called membership value or degree of membership, quantifies

Thugrade of membership ofthe element in X to the fuzny set A.

4 Membership funetions characterize fuzziness (.. all the information in

faszy set), whether the elements in fuzzy sets are discrete or continuous.

‘4 Membership functions can be defined as a technique to solve practical

problems by experience rather than knowledge.

5, Membership functions are represented by graphical forms.

Importance of membership function in fuzzy logic :

1 Itallows usto graphically represent a fuzzy set.

2 Ithelpsin finding different fuzzy set operation.

Features of membership functiot

L Core:

L

2

a. Thecoreofa membership function for some fuzzy set A is defined

as that region of the universe that is characterized by complete and

full membership in the set.

b. The core comprises those elements x of the universe such that

HaG@)=L,

2 Support:

a The support of a membership function for some fuzzy set A is

defined as that region of the universe that is characterized by

nonzero membership in the set A.

‘The support comprises those elements x of the universe such that

Hy) >0,

Scanned with CamScanner

Tipsy)

Data Analytics 2-235 (C8574)

nix) Core

, je

a —rx

Support

a. The boundaries of a membership function for some fuzzy set A

are defined as that region of the universe containing elements that

have a non-zero membership but not complete membership.

b. The boundaries comprise. those elements x of the universe such

that 0< Hy (x) <1.

1. _Inferences is a technique where facts, prémises F,, F,, .

goal G is to be derived from a given set.

” 2. Fuzzy inferenceis the process of formulating the mapping from a given

input to an output using fuzzy logic.

3... The mapping then provides a basis from which decisions can be made.

4.° Fuzzy inference (approximate réasoning) reférs to computational

procedures used for evaluating linguistic (IF-THEN) descriptions.

5. The two important inferring procedures are :

i, Generalized Modus Ponens (GMP) :

1. GMPis formally stated as

Itxis A THENy is B

xis A’

yis B

Here, A, B, A’ and B’ are fuzzy terms.

2, Every fuzzy linguistic statement above the line is analytically known

and what is below is analytically unknown.

Scanned with CamScanner

8-B/T-6)

Here B

oRG,y)

where ‘0’ denotes max-min compositi :

3 ‘The membership function ig." “THEN relation)

My @) = max (min (u,. 6, n_(e,9))

where 11, (9) is membership functionof 5’, H,.() ismembership

function of A’ and pq(x,y) is thy

implication relation,

ii, Generalized Modus Tollens (GMT)

1. GMTis defined as |

Ifzis A Thenyis B

yis BY

© membership function of

xis A’

2. The membership of A’ is computed as

A’ = BioR. Gy)

3. Interms of membership function

: G2) = max (min (u(y), b4g,9)))

(QG€236]] explain Fuzzy Decision Tree (FD).

1, Decision trees are one of the most popular methods for learning and

reasoning from instances. ‘

2. . Given aset of n input-output training patterns D = ((X',y)i=1, ....n),

where each training pattern X" has been described by a set ofp conditional

(or input) attributes (,)...-%,) and one corresponding discrete class label

where y! ¢ (1,....g} and q is the number of classes,

.. The decision attribute y represerits a posterior knowledge regarding

the class of each pattern.

4,, An arbitrary class has been indexed by / (1

You might also like

- Unit1 Introduction To Data Analytics and Data Analytics Lifecycle NotesNo ratings yetUnit1 Introduction To Data Analytics and Data Analytics Lifecycle Notes13 pages

- Chapter-1 Introduction To Data AnalyticsNo ratings yetChapter-1 Introduction To Data Analytics34 pages

- Lecture1 Introductiontobigdata 190301171350No ratings yetLecture1 Introductiontobigdata 19030117135063 pages

- Data Analytics Quantum 240518 190845 RemovedNo ratings yetData Analytics Quantum 240518 190845 Removed141 pages

- Big Data Analytics Project Proposal by SlidesgoNo ratings yetBig Data Analytics Project Proposal by Slidesgo12 pages

- Introduction To Big Data & Basic Data AnalysisNo ratings yetIntroduction To Big Data & Basic Data Analysis51 pages

- Getting An Overview of Big Data (Module1)No ratings yetGetting An Overview of Big Data (Module1)58 pages