ramyaprojectB.

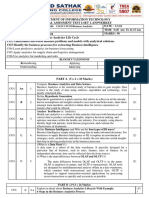

E- CSE-CS3352–FDS – R-2021 II/III

Krishnasamy College of Engineering & Technology

Department of Computer Science & Engineering

CS3352 – FOUNDATIONS OF DATA SCIENCE

Notes of Lesson

B.E - CSE

Year & Sem: II / III

Regulations -2021

Prepared by, P. RAMYA, AP/CSE Page 1


UNIT – I: INTRODUCTION (9)

Syllabus:

Data Science: Benefits and uses – facets of data – Data Science Process: Overview – Defining research goals – Retrieving data – Data preparation – Exploratory Data analysis – build the model – presenting findings and building applications – Data Mining – Data Warehousing – Basic Statistical descriptions of Data.

Material Reference:

David Cielen, Arno D. B. Meysman, and Mohamed Ali, “Introducing Data Science”, Manning Publications, 2016 (first two chapters for Unit I, pages 1 to 56), and Internet sources.

CHAPTER – I

INTRODUCTION ABOUT DATA SCIENCE

What is Data Science?

Data science is the domain of study that deals with vast volumes of data, using modern tools and techniques to find unseen patterns, derive meaningful information, and make business decisions. Data science uses complex machine learning algorithms to build predictive models. The data used for analysis can come from many different sources and be presented in various formats.

Data Science:

Data science is an interdisciplinary (multidisciplinary) field focused on extracting knowledge from data sets, which are typically large (Big Data), and applying that knowledge and the actionable insights from the data to solve problems in a wide range of application domains.

Need for Data Science

Big data is a huge collection of data sets of wide variety and in different formats. It is hard for conventional data management techniques to extract data in these different formats and process it.

Data science involves using methods to analyse massive amounts of data and extract the knowledge it contains.


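The relationship between big data and data science is like the relationship between crude oil and an oil refinery: big data is the raw material, and data science refines it into useful knowledge.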

Characteristics of Big data

Volume - How much data is there?

Variety - How diverse are different types of data?

Velocity - At what speed is new data generated?

Veracity - How accurate is the data?

Benefits & uses of Data Science & Big Data:

Data science and big data are used almost everywhere in both commercial and non-commercial settings.

Examples

Google AdSense collects data from internet users so that relevant commercial messages can be matched to the person browsing the internet.

Human resource professionals use people analytics and text mining to screen candidates, monitor the mood of employees, and study informal networks among co-workers.

Financial institutions use data science to predict stock markets, determine the risk of lending money, and learn how to attract new clients for their services.

Many governmental organisations not only rely on internal data scientists to discover valuable information, but also share their data with the public.

Nongovernmental organisations (NGOs) are also no strangers to using data. They use it to raise money and defend their causes.

Applications of Data Science: (or) Top Data Science Applications:

Fraud and Risk Detection.

Healthcare.

Internet Search.

Targeted Advertising.

Website Recommendations.

Advanced Image Recognition.

Speech Recognition.

Airline Route Planning.

1. Explain the facets of data. NOV / DEC 2023, NOV / DEC 2022

Facets of Data:

The facets of data are the various forms in which data can be represented inside Big Data. The following are the main forms:


Structured

Unstructured

Natural Language

Machine Generated

Graph Based

Audio, Video & Image

Streaming Data

Structured

Structured data is data that depends on a data model and resides in a fixed field within a record. As such, it’s often easy to store structured data in tables within databases or Excel files. SQL, or Structured Query Language, is the preferred way to manage and query data that resides in databases.

Unstructured

Unstructured data is data that isn’t easy to fit into a data model because the content is context-specific or varying.


Natural Language

Natural language is a special type of unstructured data; it’s challenging to process because it requires knowledge of specific data science techniques and linguistics.

The natural language processing community has had success in entity recognition, topic recognition, summarization, text completion, and sentiment analysis, but models trained in one domain don’t generalise well to other domains.

Examples: emails, comprehension passages, essays, articles, etc.

Machine Generated

Machine-generated data is information that’s automatically created by a computer, process, application, or other machine without human intervention. Machine-generated data is becoming a major data resource and will continue to do so.

Graph Based

“Graph data” can be a confusing term because any data can be shown in a graph. “Graph” in this case points to mathematical graph theory. In graph theory, a graph is a mathematical structure to model pair-wise relationships between objects. Graph or network data is, in short, data that focuses on the relationship or adjacency of objects. Graph structures use nodes, edges, and properties to represent and store graphical data. Graph-based data is a natural way to represent social networks, and its structure allows you to calculate specific metrics such as the influence of a person and the shortest path between two people.
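As a minimal sketch of the two graph metrics mentioned above, the Python code below stores a small, hypothetical social network as an adjacency list and finds the shortest path between two people with breadth-first search. Using the number of direct connections (the degree) as a person’s influence is a simplifying assumption made here; real influence measures are usually more elaborate.

```python
from collections import deque

# Hypothetical social network: each person maps to the people they know (undirected edges).
FRIENDS = {
    "Ann": ["Bob", "Cid"],
    "Bob": ["Ann", "Dee"],
    "Cid": ["Ann", "Dee"],
    "Dee": ["Bob", "Cid", "Eve"],
    "Eve": ["Dee"],
}

def shortest_path(graph, start, goal):
    """Breadth-first search: return the nodes on a shortest path, or None if unreachable."""
    previous = {start: None}            # node -> node it was first reached from
    queue = deque([start])
    while queue:
        node = queue.popleft()
        if node == goal:
            path = []
            while node is not None:     # walk back from the goal to the start
                path.append(node)
                node = previous[node]
            return path[::-1]
        for neighbour in graph.get(node, []):
            if neighbour not in previous:
                previous[neighbour] = node
                queue.append(neighbour)
    return None

def degree(graph, person):
    """Number of direct connections, used here as a simple stand-in for influence."""
    return len(graph.get(person, []))

print(shortest_path(FRIENDS, "Ann", "Eve"))  # ['Ann', 'Bob', 'Dee', 'Eve']
print(degree(FRIENDS, "Dee"))                # 3
```

Breadth-first search explores people in order of their distance from the start, so the first time it reaches the goal it has found a path with the fewest edges.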


Audio, Video & Image:

Audio, image, and video are data types that pose specific challenges to a data scientist. Tasks that are trivial for humans, such as recognizing objects in pictures, turn out to be challenging for computers.

Examples: YouTube videos, podcasts, music, and many more.

Streaming Data:

While streaming data can take almost any of the previous forms, it has an extra property: the data flows into the system when an event happens instead of being loaded into a data store in a batch. Although this isn’t really a different type of data, we treat it here as such because you need to adapt your process to deal with this type of information.

Examples: video conferences and live telecasts work on this principle.
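Because streaming data arrives one event at a time, it is processed incrementally instead of as a stored batch. A minimal Python sketch, assuming a hypothetical stream of numeric sensor readings, keeps a running mean that is updated as each event arrives without storing the past events:

```python
def running_mean(events):
    """Yield the mean of all values seen so far, updating it one event at a time."""
    count = 0
    mean = 0.0
    for value in events:
        count += 1
        mean += (value - mean) / count   # incremental update: past values are not kept
        yield mean

# Hypothetical stream of sensor readings arriving one by one.
readings = iter([10.0, 20.0, 30.0, 40.0])
for current_mean in running_mean(readings):
    print(current_mean)   # 10.0, then 15.0, 20.0, 25.0
```

The generator never holds more than a count and the current mean, which is the property that lets stream processing keep up with data that never stops arriving.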


2. Describe the overview of the data science process. APR / MAY 2023, NOV / DEC 2022

Data Science Process – Overview:

The data science process typically consists of six steps:


1. Setting the Research Goal

The first step of this process is setting a research goal. The main purpose here is to make sure all the stakeholders understand the what, how, and why of the project. In every serious project this will result in a project charter.

Defining the research goal

An essential outcome is a research goal that states the purpose of your assignment in a clear and focused manner. Understanding the business goals and context is critical for project success.

Create project charter

A project charter requires teamwork, and your input covers at least the following:

A clear research goal

The project mission and context

How you’re going to perform your analysis

What resources you expect to use

Proof that it’s an achievable project, or proof of concepts

Deliverables and a measure of success

A timeline

2. Retrieving Data

The second phase is data retrieval. You want to have data available for analysis, so this step includes finding suitable data and getting access to the data from the data owner. The result is data in its raw form, which probably needs polishing and transformation before it becomes usable.

Data can be stored in many forms, ranging from simple text files to tables in a database. The objective now is acquiring all the data you need. This may be difficult, and even if you succeed, data is often like a diamond in the rough: it needs polishing to be of any use to you.

Start with data stored within the company.

Data stored within the company may already be cleaned and maintained in repositories such as databases, data marts, data warehouses and data lakes.

Don't be afraid to shop around

If the data is not available inside the organization, look outside your organization walls.

Do data quality checks now to prevent problems later

Always double-check internal data when storing it. If the data is external, prepare it in such a way that it can be easily extracted.

3. Briefly describe the steps involved in Data Preparation. NOV / DEC 2023


4. Data Preparation

Data preparation involves cleansing, integrating and transforming data.

Cleansing Data

Data cleansing is a subprocess of the data science process that focuses on removing errors in your data so your data becomes a true and consistent representation of the processes it originates from.

Interpretation errors - taking a value in the data for granted, for example accepting that a person’s age is greater than 120 years.

Inconsistencies - for example, recording gender as “Female” in one column and “F” in another, although both mean the same thing.

Data Entry Errors - Data collection and data entry are error-prone processes. They often require human intervention, and because humans are only human, they make typos or lose their concentration for a second and introduce an error into the chain.

Redundant White space - White space is hard to detect but causes errors just as other redundant characters do. White space at the beginning or at the end of a word is especially hard to identify and rectify.

Impossible values and sanity checks - Here the data are checked for physically and theoretically impossible values.

Outliers - An outlier is an observation that seems to be distant from other observations or, more specifically, one observation that follows a different logic or generative process than the other observations.

Dealing with the Missing values - Missing values aren’t necessarily wrong, but you still need to handle them separately; certain modelling techniques can’t handle missing values.
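Several of the cleansing steps above can be sketched in plain Python. The records, the gender code table `GENDER_CODES`, and the 0 to 120 age range are hypothetical choices for illustration; the sketch trims redundant white space, maps inconsistent codes to a single value, and applies a sanity check that turns impossible ages into missing values.

```python
# Hypothetical raw records showing some of the error types listed above.
raw = [
    {"name": " Asha ", "gender": "Female", "age": 34},
    {"name": "Ravi", "gender": "M", "age": 29},
    {"name": "Meena", "gender": "F", "age": 150},    # impossible age
    {"name": "Kumar ", "gender": "male", "age": 41},
]

# Hypothetical code table: every spelling of a gender maps to one code.
GENDER_CODES = {"female": "F", "f": "F", "male": "M", "m": "M"}

def clean(record):
    """Return a cleansed copy of one record."""
    cleaned = dict(record)
    cleaned["name"] = record["name"].strip()                             # redundant white space
    cleaned["gender"] = GENDER_CODES[record["gender"].strip().lower()]   # inconsistencies
    age = record["age"]
    if age is not None and not 0 <= age <= 120:                          # sanity check
        cleaned["age"] = None   # impossible value: treat it as missing
    return cleaned

cleaned = [clean(r) for r in raw]
for row in cleaned:
    print(row)
```

An unknown gender spelling raises a `KeyError` here, which is deliberate in this sketch: it surfaces a new inconsistency instead of silently passing it on.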

Techniques used to handle missing data are given below
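Two widely used techniques are omitting the observations that contain missing values and imputing a static value such as the mean. A minimal Python sketch on a hypothetical list of ages, with `None` marking a missing value:

```python
from statistics import mean

# Hypothetical ages with missing entries (None).
ages = [34, 29, None, 41, None, 26]

# Technique 1: omit the observations that have missing values.
omitted = [a for a in ages if a is not None]

# Technique 2: impute a static value, here the mean of the known values.
fill_value = mean(omitted)
imputed = [fill_value if a is None else a for a in ages]

print(omitted)   # [34, 29, 41, 26]
print(imputed)   # [34, 29, 32.5, 41, 32.5, 26]
```

Omitting is simple but loses information; imputing keeps every observation but can shrink the natural spread of the variable.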


Correct errors as early as possible

Decision-makers may make costly mistakes based on incorrect data from applications that fail to correct the faulty data.

If errors are not corrected early on in the process, the cleansing will have to be done for every project that uses that data.

Data errors may point to defective equipment, such as broken transmission lines and defective sensors.

Data errors can point to bugs in software or in the integration of software that may be critical to the company.

Combining data from different sources

Data from different sources can be combined and stored together for easy cross-reference.

There are different ways of combining the data.

Joining Tables

Appending Tables

Using views to simulate data joins and appends
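The three ways of combining data can be tried out with Python’s built-in `sqlite3` module. In this sketch the tables `sales_jan`, `sales_feb` and `clients` are hypothetical: a view appends the two monthly tables with `UNION ALL`, and a join on the common key `client_id` adds each client’s region.

```python
import sqlite3

con = sqlite3.connect(":memory:")
cur = con.cursor()

# Hypothetical tables: two monthly sales tables with the same columns, and a client table.
cur.execute("CREATE TABLE sales_jan (client_id INTEGER, amount REAL)")
cur.execute("CREATE TABLE sales_feb (client_id INTEGER, amount REAL)")
cur.execute("CREATE TABLE clients (client_id INTEGER PRIMARY KEY, region TEXT)")
cur.executemany("INSERT INTO sales_jan VALUES (?, ?)", [(1, 100.0), (2, 50.0)])
cur.executemany("INSERT INTO sales_feb VALUES (?, ?)", [(1, 70.0), (3, 20.0)])
cur.executemany("INSERT INTO clients VALUES (?, ?)", [(1, "North"), (2, "South"), (3, "North")])

# Appending via a view: stack the monthly tables without copying their rows.
cur.execute("""
    CREATE VIEW sales_all AS
    SELECT 'jan' AS month, client_id, amount FROM sales_jan
    UNION ALL
    SELECT 'feb' AS month, client_id, amount FROM sales_feb
""")

# Joining: enrich every sale with the client's region through the common key.
rows = cur.execute("""
    SELECT c.region, SUM(s.amount)
    FROM sales_all AS s
    JOIN clients AS c ON c.client_id = s.client_id
    GROUP BY c.region
    ORDER BY c.region
""").fetchall()
print(rows)   # [('North', 190.0), ('South', 50.0)]
```

Because `sales_all` is a view, the appended data is not stored twice; the database evaluates the `UNION ALL` each time the view is queried.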

Transforming data - Certain models require their data to be in a certain shape.

Data Transformation - Converting data into the form that a model requires, for example turning a non-linear relationship between an input variable and an output variable into a linear one.
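As an illustration of such a transformation, a relationship of the form y = a·e^(bx) becomes linear after taking logarithms: ln y = ln a + b·x. The Python sketch below uses hypothetical noise-free data generated with a = 2 and b = 0.5, fits a straight line to (x, ln y) with ordinary least squares, and recovers both parameters.

```python
import math

# Hypothetical noise-free data generated from y = a * e^(b*x) with a = 2 and b = 0.5.
xs = [0, 1, 2, 3, 4]
ys = [2 * math.exp(0.5 * x) for x in xs]

# Log transform: ln(y) = ln(a) + b*x is a straight line in x.
log_ys = [math.log(y) for y in ys]

# Ordinary least-squares fit of ln(y) = intercept + b*x.
n = len(xs)
mean_x = sum(xs) / n
mean_log_y = sum(log_ys) / n
numerator = sum((x - mean_x) * (ly - mean_log_y) for x, ly in zip(xs, log_ys))
denominator = sum((x - mean_x) ** 2 for x in xs)
b = numerator / denominator
a = math.exp(mean_log_y - b * mean_x)   # undo the log on the intercept

print(round(a, 6), round(b, 6))   # 2.0 0.5
```

After the transformation a simple linear technique is enough to estimate a model that was exponential in its original form.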

Reducing the number of variables - Having too many variables in your model makes the model difficult to handle, and certain techniques don’t perform well when you overload them with too many input variables.


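Turning variables into Dummies - Dummy variables can take only two values: true (1) or false (0). A categorical variable is turned into one dummy variable per category, indicating whether that category applies to the observation.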
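A minimal Python sketch of turning a categorical variable into dummies; the `weekdays` column and the `is_` prefix for the new variable names are hypothetical choices:

```python
def to_dummies(values):
    """Turn one categorical variable into one 0/1 variable per category."""
    categories = sorted(set(values))
    return [{f"is_{c}": int(v == c) for c in categories} for v in values]

weekdays = ["Mon", "Tue", "Mon", "Wed"]   # hypothetical categorical column
for row in to_dummies(weekdays):
    print(row)
# first row: {'is_Mon': 1, 'is_Tue': 0, 'is_Wed': 0}
```

Exactly one dummy per row is 1, so a model receives the category as numbers without implying an order between the categories.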

5. Data Exploration

Information becomes much easier to grasp when shown in a picture; therefore you mainly use graphical techniques to gain an understanding of your data and the interactions between variables.

Examples

Pareto diagram: a combination of the values, sorted in decreasing order, and their cumulative distribution.

Histogram: a variable is cut into discrete categories, and the number of occurrences in each category is summed up and shown in the graph.


Box plot: It doesn’t show how many observations are present but does offer an impression of the distribution within categories. It can show the maximum, minimum, median, and other characterising measures at the same time.
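The numbers behind these three graphs can be computed with the Python standard library. In this sketch the scores and complaint counts are hypothetical, the histogram bin width of 10 is an arbitrary choice, and `statistics.quantiles` (Python 3.8+, default "exclusive" method) supplies the quartiles that a box plot draws.

```python
from collections import Counter
from statistics import median, quantiles

# Hypothetical exam scores.
scores = [45, 52, 58, 61, 64, 67, 70, 72, 75, 78, 81, 88, 95]

# Histogram: cut the variable into bins of width 10 and count the occurrences per bin.
hist = Counter((s // 10) * 10 for s in scores)
for start in sorted(hist):
    print(f"{start}-{start + 9}: {hist[start]}")

# Pareto diagram: categories sorted by decreasing value, plus the cumulative percentage.
complaints = {"late delivery": 50, "damaged": 30, "wrong item": 15, "other": 5}
total = sum(complaints.values())
running = 0
for reason, count in sorted(complaints.items(), key=lambda kv: kv[1], reverse=True):
    running += count
    print(f"{reason}: {count} (cumulative {100 * running / total:.0f}%)")

# Box plot: the summary it draws (minimum, first quartile, median, third quartile, maximum).
q1, q2, q3 = quantiles(scores, n=4)
print(min(scores), q1, median(scores), q3, max(scores))
```

A plotting library would draw these values as bars, a line, and a box; the summaries themselves are what the graphs communicate.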

6. Data Modelling or Model Building

With clean data in place and a good understanding of the content, you’re ready to build models with the goal of making better predictions, classifying objects, or gaining an understanding of the system that you’re modelling.

Building a model is an iterative process. Most models consist of the following main steps:

Selection of a modelling technique and variables to enter in the model

Execution of the model

Diagnosis and model comparison

Model and variable selection

When choosing a model and its variables, consider the following aspects:

Must the model be moved to a production environment and, if so, would it be easy to implement?

How difficult is the maintenance on the model: how long will it remain relevant if left untouched?

Does the model need to be easy to explain?

Model Execution - Once you’ve chosen a model you’ll need to implement it in code.
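A minimal sketch of model execution followed by diagnosis and model comparison, in plain Python: a least-squares line is fitted on the first seven (hypothetical) observations, and its mean squared error on the three held-out observations is compared with that of a baseline model that always predicts the training mean. The data and the 7/3 split are assumptions for illustration.

```python
# Hypothetical data: hours studied (x) and exam score (y).
x = [1, 2, 3, 4, 5, 6, 7, 8, 9, 10]
y = [52, 55, 61, 64, 68, 71, 77, 80, 83, 88]

# Hold out the last 3 observations to diagnose the models on unseen data.
x_train, y_train = x[:7], y[:7]
x_test, y_test = x[7:], y[7:]

def fit_line(xs, ys):
    """Least-squares fit of y = intercept + slope * x."""
    mx, my = sum(xs) / len(xs), sum(ys) / len(ys)
    slope = sum((a - mx) * (b - my) for a, b in zip(xs, ys)) / sum((a - mx) ** 2 for a in xs)
    return my - slope * mx, slope

def mse(actual, predicted):
    """Mean squared error between actual and predicted values."""
    return sum((a - p) ** 2 for a, p in zip(actual, predicted)) / len(actual)

# Model 1: linear regression.  Model 2: baseline that always predicts the training mean.
intercept, slope = fit_line(x_train, y_train)
linear_pred = [intercept + slope * v for v in x_test]
baseline_pred = [sum(y_train) / len(y_train)] * len(x_test)

print("linear MSE:", mse(y_test, linear_pred))
print("baseline MSE:", mse(y_test, baseline_pred))
```

On this made-up data the fitted line has a far lower error on the held-out points than the baseline, which is the kind of comparison the diagnosis step is meant to make.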


7. Presentation and Automation

After you’ve successfully analysed the data and built a well-performing model, you’re ready to present your findings to the world. This is an exciting part: all your hours of hard work have paid off, and you can explain what you found to the stakeholders.

Summary of Data Science Process:

1. The first step of this process is setting a research goal. The main purpose here is to make sure all the stakeholders understand the what, how, and why of the project. In every serious project this will result in a project charter.

2. The second phase is data retrieval. You want to have data available for analysis, so this step includes finding suitable data and getting access to the data from the data owner. The result is data in its raw form, which probably needs some polishing and transformation before it becomes usable.

3. Now that you have the raw data, it is time to cleanse it. This includes transforming the data from a raw form into data that is directly usable in your models. To achieve this, you will detect and correct different kinds of errors in the data, combine data from different data sources, and transform it. If you have successfully completed this step, you can progress to data visualization and modelling.

4. The fourth step is data exploration. The goal of this step is to gain a deep understanding of the data. You will look for patterns, correlations, and deviations based on visual and descriptive techniques. The insights you gain from this phase will enable you to start modelling.

5. The fifth step is data modelling. It is now that you attempt to gain the insights or make the predictions stated in your project charter. Now is the time to bring out the heavy guns, but remember that research has taught us that often (but not always) a combination of simple models tends to outperform one complicated model. If you have done this phase right, you are almost done.

6. The last step of the data science process is presenting your results and automating the analysis if needed. One goal of a project is to change a process and/or make better decisions. You might still need to convince the business that your findings will indeed change the business process as expected.

This is where you can shine in your influencer role. The importance of this step is more apparent in projects on a strategic and tactical level. Some projects require you to perform the business process over and over again, so automating the project will save you lots of time. In reality you will not progress in a linear way from step 1 to step 6; often you will regress and iterate between the different phases. Following these six steps pays off in terms of a higher project success ratio and increased impact of research results.

This process ensures you have a well-defined research plan, a good understanding of the business question, and clear deliverables before you even start looking at data. The first steps of your process focus on getting high-quality data as input for your models. This way your models will perform better later on.

In data science there’s a well-known saying: garbage in equals garbage out.

Another benefit of following a structured approach is that you work more in prototype mode while you search for the best model. When building a prototype you will probably try multiple models and won’t focus heavily on things like program speed or writing code against standards. This allows you to focus on bringing business value instead.


CHAPTER - 2

DATA MINING

Introduction to Data Mining

We are in an age often referred to as the information age. In this information age, because we believe that information leads to power and success, and thanks to sophisticated technologies such as computers, satellites, etc., we have been collecting tremendous amounts of information. Initially, with the advent of computers and means for mass digital storage, we started collecting and storing all sorts of data, counting on the power of computers to help sort through this amalgam of information.

Unfortunately, these massive collections of data stored on disparate structures very rapidly became overwhelming. This initial chaos led to the creation of structured databases and database management systems (DBMS). Efficient database management systems have been very important assets for the management of a large corpus of data and especially for effective and efficient retrieval of particular information from a large collection whenever needed. The proliferation of database management systems has also contributed to the recent massive gathering of all sorts of information.

Today, we have far more information than we can handle: from business transactions and scientific data, to satellite pictures, text reports and military intelligence. Information retrieval is simply not enough anymore for decision-making. Confronted with huge collections of data, we have now created new needs to help us make better managerial choices. These needs are automatic summarization of data, extraction of the “essence” of information stored, and the discovery of patterns in raw data.

What is Data Mining?

Data mining refers to extracting or mining knowledge from large amounts of data. The term is actually a misnomer: data mining would more appropriately have been named knowledge mining, which emphasizes mining knowledge from large amounts of data. It is the computational process of discovering patterns in large data sets involving methods at the intersection of artificial intelligence, machine learning, statistics, and database systems. The overall goal of the data mining process is to extract information from a data set and transform it into an understandable structure for further use.

The key properties of data mining are:

Automatic discovery of patterns

Prediction of likely outcomes

Creation of actionable information

Focus on large datasets and databases.

What kind of information are we collecting?


We have been collecting a myriad of data, from simple numerical measurements and text documents, to more complex information such as spatial data, multimedia channels, and hypertext documents. Here is a non-exclusive list of a variety of information collected in digital form in databases and in flat files.

• Business transactions:

Every transaction in the business industry is (often) “memorized” for perpetuity. Such transactions are usually time related and can be inter-business deals such as purchases, exchanges, banking, stock, etc., or intra-business operations such as management of in-house wares and assets. Large department stores, for example, thanks to the widespread use of bar codes, store millions of transactions daily, often representing terabytes of data. Storage space is not the major problem, as the price of hard disks is continuously dropping, but the effective use of the data in a reasonable time frame for competitive decision-making is definitely the most important problem to solve for businesses that struggle to survive in a highly competitive world.

• Scientific data:

Whether in a Swiss nuclear accelerator laboratory counting particles, in the Canadian forest studying readings from a grizzly bear radio collar, on a South Pole iceberg gathering data about oceanic activity, or in an American university investigating human psychology, our society is amassing colossal amounts of scientific data that need to be analyzed. Unfortunately, we can capture and store more new data faster than we can analyze the old data already accumulated.

• Medical and personal data:

From government census to personnel and customer files, very large collections of information are continuously gathered about individuals and groups. Governments, companies and organizations such as hospitals are stockpiling very large quantities of personal data to help them manage human resources, better understand a market, or simply assist clientele. Regardless of the privacy issues this type of data often reveals, this information is collected, used and even shared. When correlated with other data, this information can shed light on customer behaviour and the like.

• Surveillance video and pictures:

With the amazing collapse of video camera prices, video cameras are becoming ubiquitous. Video tapes from surveillance cameras are usually recycled and thus the content is lost. However, there is a tendency today to store the tapes and even digitize them for future use and analysis.

• Satellite sensing:

There are countless satellites around the globe: some are geo-stationary above a region, and some are orbiting around the Earth, but all are sending a non-stop stream of data to the surface. NASA, which controls a large number of satellites, receives more data every second than all NASA researchers and engineers can cope with. Many satellite pictures and data are made public as soon as they are received in the hope that other researchers can analyze them.

• Games:

Our society is collecting a tremendous amount of data and statistics about games, players and athletes. From hockey scores, basketball passes and car-racing laps, to swimming times, boxers’ punches and chess positions, all the data are stored. Commentators and journalists are using this information for reporting, but trainers and athletes would want to exploit this data to improve performance and better understand opponents.

• Digital media:

The proliferation of cheap scanners, desktop video cameras and digital cameras is one of the causes of the explosion in digital media repositories. In addition, many radio stations, television channels and film studios are digitizing their audio and video collections to improve the management of their multimedia assets. Associations such as the NHL and the NBA have already started converting their huge game collection into digital forms.

• CAD and Software engineering data:

There is a multitude of Computer-Aided Design (CAD) systems for architects to design buildings or engineers to conceive system components or circuits. These systems are generating a tremendous amount of data. Moreover, software engineering is a source of considerable similar data with code, function libraries, objects, etc., which need powerful tools for management and maintenance.

• Virtual Worlds:

There are many applications making use of three-dimensional virtual spaces. These spaces and the objects they contain are described with special languages such as VRML. Ideally, these virtual spaces are described in such a way that they can share objects and places. There is a remarkable amount of virtual reality object and space repositories available. Management of these repositories as well as content-based search and retrieval from these repositories are still research issues, while the size of the collections continues to grow.

• Text reports and memos (e-mail messages):

Most of the communications within and between companies or research organizations or even private people are based on reports and memos in textual forms often exchanged by e-mail. These messages are regularly stored in digital form for future use and reference, creating formidable digital libraries.


• The World Wide Web repositories:

Since the inception of the World Wide Web in 1993, documents of all sorts of formats, content and description have been collected and inter-connected with hyperlinks, making it the largest repository of data ever built. Despite its dynamic and unstructured nature, its heterogeneous characteristic, and its frequent redundancy and inconsistency, the World Wide Web is the most important data collection regularly used for reference because of the broad variety of topics covered and the countless contributions of resources and publishers. Many believe that the World Wide Web will become the compilation of human knowledge.

What are Data Mining and Knowledge Discovery?

With the enormous amount of data stored in files, databases, and other repositories, it is increasingly important, if not necessary, to develop powerful means for analysis and perhaps interpretation of such data and for the extraction of interesting knowledge that could help in decision-making. Data Mining, also popularly known as Knowledge Discovery in Databases (KDD), refers to the nontrivial extraction of implicit, previously unknown and potentially useful information from data in databases.

While data mining and knowledge discovery in databases (or KDD) are frequently treated as synonyms, data mining is actually part of the knowledge discovery process. The following figure (Figure 1.1) shows data mining as a step in an iterative knowledge discovery process.

Figure 1.1: Data Mining is the core of Knowledge Discovery process (labels in the figure: Databases, Data Integration, Data Warehouse, Selection and Transformation, Task-relevant Data, Pattern)

The Knowledge Discovery in Databases process comprises a few steps leading from raw data collections to some form of new knowledge. The iterative process consists of the following steps:


• Data cleaning: also known as data cleansing, it is a phase in which noisy data and irrelevant data are removed from the collection.

• Data integration: at this stage, multiple data sources, often heterogeneous, may be combined in a common source.

• Data selection: at this step, the data relevant to the analysis is decided on and retrieved from the data collection.

• Data transformation: also known as data consolidation, it is a phase in which the selected data is transformed into forms appropriate for the mining procedure.

• Data mining: it is the crucial step in which clever techniques are applied to extract potentially useful patterns.

• Pattern evaluation: in this step, strictly interesting patterns representing knowledge are identified based on given measures.

• Knowledge representation: the final phase, in which the discovered knowledge is visually represented to the user. This essential step uses visualization techniques to help users understand and interpret the data mining results.

It is common to combine some of these steps together.

For instance, data cleaning and data integration can be performed together as a pre-processing phase to generate a data warehouse. Data selection and data transformation can also be combined where the consolidation of the data is the result of the selection, or, as for the case of data warehouses, the selection is done on transformed data.
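The KDD steps can be chained as a small pipeline. The Python sketch below is illustrative only: the two store logs are hypothetical, counting co-occurring item pairs stands in for the mining step (one simple technique among many, not the only one KDD allows), and the 50% minimum support used for pattern evaluation is an arbitrary threshold.

```python
from collections import Counter
from itertools import combinations

# Hypothetical sources: two stores' transaction logs as (store, basket) records.
store_a = [("A", ["milk", "bread"]), ("A", ["milk", "bread", "eggs"]), ("A", None)]
store_b = [("B", ["bread", "milk"]), ("B", ["eggs", "tea"]), ("B", ["milk", "eggs"])]

def clean(rows):           # data cleaning: drop noisy or incomplete records
    return [r for r in rows if r[1]]

def integrate(*sources):   # data integration: combine the sources into one collection
    return [r for src in sources for r in src]

def select(rows):          # data selection: keep only the attribute relevant to the task
    return [basket for _store, basket in rows]

def transform(baskets):    # data transformation: normalise baskets into sorted tuples
    return [tuple(sorted(set(b))) for b in baskets]

def mine(baskets):         # data mining: count how often each pair of items occurs together
    return Counter(pair for b in baskets for pair in combinations(b, 2))

def evaluate(pair_counts, n_baskets, min_support=0.5):   # pattern evaluation
    return {p: c / n_baskets for p, c in pair_counts.items() if c / n_baskets >= min_support}

baskets = transform(select(integrate(clean(store_a), clean(store_b))))
patterns = evaluate(mine(baskets), len(baskets))
for pair, support in patterns.items():   # knowledge representation (here: plain text)
    print(f"{pair[0]} + {pair[1]} bought together in {support:.0%} of baskets")
```

Running it prints that bread and milk appear together in 60% of the baskets; changing a step (for example a new source in `integrate`) and re-running reflects the iterative nature of KDD.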

KDD is an iterative process. Once the discovered knowledge is presented to the user, the evaluation measures can be enhanced, the mining can be further refined, new data can be selected or further transformed, or new data sources can be integrated, in order to get different, more appropriate results. Data mining derives its name from the similarities between searching for valuable information in a large database and mining rocks for a vein of valuable ore. Both imply either sifting through a large amount of material or ingeniously probing the material to exactly pinpoint where the values reside.

It is, however, a misnomer, since mining for gold in rocks is usually called “gold mining” and not “rock mining”; thus, by analogy, data mining should have been called “knowledge mining” instead. Nevertheless, data mining became the accepted customary term, and very rapidly a trend that even overshadowed more general terms such as knowledge discovery in databases (KDD) that describe a more complete process. Other similar terms referring to data mining are: data dredging, knowledge extraction and pattern discovery.

What kind of Data can be mined?

In principle, data mining is not specific to one type of media or data. Data mining should be applicable to any kind of information repository. However, algorithms and approaches may differ when applied to different types of data. Indeed, the challenges presented by different types of data vary significantly.

Data mining is being put into use and studied for databases, including relational databases, object-relational databases and object-oriented databases, data warehouses, transactional databases, unstructured and semi-structured repositories such as the World Wide Web, advanced databases such as spatial databases, multimedia databases, time-series databases and textual databases, and even flat files.

Here are some examples in more detail:

• Flat files: Flat files are actually the most common data source for data mining algorithms, especially at the research level. Flat files are simple data files in text or binary format with a structure known by the data mining algorithm to be applied. The data in these files can be transactions, time-series data, scientific measurements, etc.
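Reading a flat file only requires knowing its structure in advance. A minimal sketch with Python’s `csv` module, assuming a hypothetical comma-separated file of transactions with a header row; `io.StringIO` stands in for a file opened with `open()`.

```python
import csv
import io

# Hypothetical flat file of transactions (structure: a header row, then one record per line).
FLAT_FILE = """customer_id,date,item_id,quantity
C1234,99/09/06,98765,1
C1235,99/09/06,98766,2
C1234,99/09/07,98767,1
"""

def load_transactions(handle):
    """Read a comma-separated flat file whose columns are named in its header row."""
    rows = []
    for record in csv.DictReader(handle):
        record["quantity"] = int(record["quantity"])   # text field -> number
        rows.append(record)
    return rows

transactions = load_transactions(io.StringIO(FLAT_FILE))
items_per_customer = {}
for t in transactions:
    cid = t["customer_id"]
    items_per_customer[cid] = items_per_customer.get(cid, 0) + t["quantity"]
print(items_per_customer)   # {'C1234': 2, 'C1235': 2}
```

Everything in a flat file arrives as text, so the program, not the file, has to know which fields are numbers.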

• Relational Databases: Briefly, a relational database consists of a set of tables containing either values of entity attributes, or values of attributes from entity relationships. Tables have columns and rows, where columns represent attributes and rows represent tuples.

A tuple in a relational table corresponds to either an object or a relationship

between objects and is identified by a set of attribute values representing a unique key. In

Figure 1.2 we present some relations Customer, Items, and Borrow representing business

activity in a fictitious video store Our Video Store. These relations are just a subset of what

could be a database for the video store and are given as an example.

Borrow
customerID | date | itemID | # | …
C1234 | 99/09/06 | 98765 | 1 | …
. . .

Customer
customerID | name | address | password | birthdate | family_income | group | …
C1234 | John Smith | 120 main street | Marty | 1965/10/10 | $45000 | A | …
. . .

Items
itemID | type | title | media | category | Value | # | …
98765 | Video | Titanic | DVD | Drama | $15.00 | 2 | …
. . .

Figure 1.2: Fragments of some relations from a relational database for OurVideoStore.


The most commonly used query language for relational databases is SQL, which

allows retrieval and manipulation of the data stored in the tables, as well as the calculation

of aggregate functions such as average, sum, min, max and count.

For instance, an SQL query to count the videos in each category would be:

SELECT category, COUNT(*) FROM Items WHERE type = 'Video' GROUP BY category;

Data mining algorithms using relational databases can be more versatile than data

mining algorithms specifically written for flat files, since they can take advantage of the

structure inherent to relational databases. While data mining can benefit from SQL for data

selection, transformation and consolidation, it goes beyond what SQL could provide, such

as predicting, comparing, detecting deviations, etc.
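The query above can be tried with Python's built-in sqlite3 module. This is a minimal sketch, assuming an in-memory Items table modelled on Figure 1.2: only the Titanic row comes from the figure, while the other rows and the column name value are invented for illustration. It also runs the aggregate functions mentioned above.

```python
import sqlite3

# In-memory database holding a hypothetical Items table modelled on Figure 1.2.
conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE Items (itemID INTEGER PRIMARY KEY, type TEXT, title TEXT, "
    "media TEXT, category TEXT, value REAL)"
)
conn.executemany(
    "INSERT INTO Items VALUES (?, ?, ?, ?, ?, ?)",
    [
        (98765, "Video", "Titanic", "DVD", "Drama", 15.00),   # row from Figure 1.2
        (98766, "Video", "Title B", "DVD", "Comedy", 12.00),  # invented rows below
        (98767, "Video", "Title C", "VHS", "Horror", 9.00),
        (98768, "Video", "Title D", "DVD", "Drama", 14.00),
        (98769, "Game", "Title E", "CD", "Puzzle", 20.00),    # not a video
    ],
)

# Count the videos in each category; 'Video' must be a quoted string literal.
result = conn.execute(
    "SELECT category, COUNT(*) FROM Items WHERE type = 'Video' "
    "GROUP BY category ORDER BY category"
).fetchall()
print(result)  # [('Comedy', 1), ('Drama', 2), ('Horror', 1)]

# Other aggregates named in the text: average, sum, min and max.
stats = conn.execute(
    "SELECT AVG(value), SUM(value), MIN(value), MAX(value) "
    "FROM Items WHERE type = 'Video'"
).fetchone()
print(stats)  # (12.5, 50.0, 9.0, 15.0)
```

The ORDER BY clause is there only to make the output order predictable, since SQL does not otherwise guarantee the order of grouped results.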

• Data Warehouses: A data warehouse, as a storehouse, is a repository of data

collected from multiple (often heterogeneous) data sources and is intended to be used as a

whole under the same unified schema. A data warehouse gives the option to analyze data

from different sources under the same roof. Let us suppose that Our Video Store becomes a

franchise in North America. Many video stores belonging to Our Video Store company

may have different databases and different structures. If the executive of the company

wants to access the data from all stores for strategic decision-making, future direction,

marketing, etc., it would be more appropriate to store all the data in one site with a

homogeneous structure that allows interactive analysis.

In other words, data from the different stores would be loaded, cleaned,

transformed and integrated together. To facilitate decision making and multi-dimensional

views, data warehouses are usually modelled by a multi-dimensional data structure.

Figure 1.3: A multi-dimensional data cube structure commonly used for data warehousing. [Figure panels, titled “The Data Cube and The Sub-Space Aggregates”: Group By (by time, by city, by category); Cross Tab (by time & city, by category & city); and the full cube over category (Drama, Comedy, Horror), time (Q1 to Q4) and city (Edmonton, Calgary, Lethbridge), with sums along each dimension.]

Figure 1.3 shows an example of a three-dimensional subset of a data cube structure

used for the Our Video Store data warehouse. The figure shows summarized rentals grouped by


film categories, then a cross table of summarized rentals by film categories and time (in

quarters). The data cube gives the summarized rentals along three dimensions: category,

time, and city. A cube contains cells that store values of some aggregate measures (in this

case rental counts), and special cells that store summations along dimensions.

Each dimension of the data cube contains a hierarchy of values for one attribute.

Because of their structure, the pre-computed summarized data they contain and the

hierarchical attribute values of their dimensions, data cubes are well suited for fast

interactive querying and analysis of data at different conceptual levels, known as On-Line

Analytical Processing (OLAP). OLAP operations allow the navigation of data at different

levels of abstraction, such as drill-down, roll-up, slice, dice, etc.

Figure 1.4: Summarized data from OurVideoStore before and after drill-down and roll-up operations. [Figure panels: rentals by Time (Quarters), Location (city, AB: Edmonton, Calgary, Lethbridge, Red Deer) and Category (Drama, Horror, Sci. Fi., Comedy); after drill-down on Q3, Time (Months, Q3: Jul, Aug, Sep); after roll-up on Location, Location (province, Canada: Maritimes, Quebec, Ontario, Prairies, Western Pr.).]

Figure 1.4 illustrates the drill-down (on the time dimension) and roll-up (on the

location dimension) operations.
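The cube, roll-up and drill-down ideas can be sketched in a few lines of Python. This is a minimal illustration, not an OLAP engine: the rental numbers are invented, the concept hierarchies (month to quarter, city to region) are hand-written assumptions that follow the labels of Figures 1.3 and 1.4, and only two dimensions (time and location) are kept, for a single category.

```python
from collections import defaultdict
from itertools import combinations

# Hypothetical Drama rentals at the finest level: (month, city, rentals).
facts = [
    ("Jul", "Edmonton", 4),
    ("Aug", "Calgary", 6),
    ("Sep", "Lethbridge", 2),
    ("Jan", "Red Deer", 3),
    ("Aug", "Toronto", 5),  # invented city outside Alberta, to show roll-up
]

# Assumed concept hierarchies: month -> quarter and city -> region.
MONTHS = ["Jan", "Feb", "Mar", "Apr", "May", "Jun",
          "Jul", "Aug", "Sep", "Oct", "Nov", "Dec"]
QUARTER = {m: f"Q{i // 3 + 1}" for i, m in enumerate(MONTHS)}
REGION = {"Edmonton": "Prairies", "Calgary": "Prairies", "Lethbridge": "Prairies",
          "Red Deer": "Prairies", "Toronto": "Ontario"}


def summarize(time_level, loc_level):
    """Sum rentals by (time, location) at the chosen hierarchy levels."""
    totals = defaultdict(int)
    for month, city, n in facts:
        t = month if time_level == "month" else QUARTER[month]
        loc = city if loc_level == "city" else REGION[city]
        totals[(t, loc)] += n
    return dict(totals)


def cube(view):
    """Aggregate a {(time, location): n} view over every subset of its two dimensions.

    Result keys are dimension-index tuples: (0,) = by time, (1,) = by location,
    (0, 1) = the cross tab, and () = the grand total (the cube's 'sum' cell)."""
    result = {}
    for k in range(3):
        for dims in combinations((0, 1), k):
            agg = defaultdict(int)
            for key, n in view.items():
                agg[tuple(key[d] for d in dims)] += n
            result[dims] = dict(agg)
    return result


base = summarize("quarter", "city")      # starting view: quarters x cities
rolled = summarize("quarter", "region")  # roll-up on location: city -> region
drilled = {k: v for k, v in summarize("month", "city").items()
           if QUARTER[k[0]] == "Q3"}     # drill-down on Q3: quarter -> months

print(rolled)          # {('Q3', 'Prairies'): 12, ('Q1', 'Prairies'): 3, ('Q3', 'Ontario'): 5}
print(cube(base)[()])  # {(): 20}
```

Roll-up and drill-down never change the grand total; they only change the level of detail at which the same rentals are summed.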

• Transaction Databases: A transaction database is a set of records representing

transactions, each with a time stamp, an identifier and a set of items. Associated with the

transaction files could also be descriptive data for the items.

Rentals
transactionID | date | time | customerID | itemList
T12345 | 99/09/06 | 19:38 | C1234 | {I2, I6, I10, I45 …}
. . .

Figure 1.5: Fragment of a transaction database for the rentals at OurVideoStore.


For example, in the case of the video store, the rentals table shown in

Figure 1.5 represents the transaction database. Each record is a rental contract with a

customer identifier, a date, and the list of items rented (i.e. video tapes, games, VCR, etc.).

Since relational databases do not allow nested tables (i.e. a set as attribute value),

transactions are usually stored in flat files or stored in two normalized transaction tables,

one for the transactions and one for the transaction items.

One typical data mining analysis on such data is the so-called market basket analysis or association rules, in which associations between items occurring together or in sequence are studied.
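The two-table layout mentioned above can be sketched with Python's built-in sqlite3 module. The table names Rentals and RentalItems and the column names rental_date and rental_time are assumptions; the single transaction is the one shown in Figure 1.5, whose item list is elided after I45, so only the four listed items are stored.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
CREATE TABLE Rentals (                 -- one row per transaction
    transactionID TEXT PRIMARY KEY,
    rental_date TEXT, rental_time TEXT, customerID TEXT
);
CREATE TABLE RentalItems (             -- one row per (transaction, item) pair
    transactionID TEXT REFERENCES Rentals(transactionID),
    itemID TEXT,
    PRIMARY KEY (transactionID, itemID)
);
""")

# The transaction of Figure 1.5 (its item list is elided after I45 there).
conn.execute("INSERT INTO Rentals VALUES ('T12345', '99/09/06', '19:38', 'C1234')")
conn.executemany("INSERT INTO RentalItems VALUES ('T12345', ?)",
                 [("I2",), ("I6",), ("I10",), ("I45",)])

# Rebuild the nested item set of one transaction with a join.
items = [row[0] for row in conn.execute(
    "SELECT i.itemID FROM Rentals r JOIN RentalItems i "
    "ON r.transactionID = i.transactionID WHERE r.transactionID = 'T12345'")]

# Basket size per transaction, a typical first step before basket analysis.
sizes = conn.execute(
    "SELECT transactionID, COUNT(*) FROM RentalItems GROUP BY transactionID"
).fetchall()
print(sorted(items), sizes)
```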

• Multimedia Databases: Multimedia databases include video, images, audio and text

media. They can be stored on extended object-relational or object-oriented databases, or

simply on a file system. Multimedia is characterized by its high dimensionality, which

makes data mining even more challenging. Data mining from multimedia repositories may

require computer vision, computer graphics, image interpretation, and natural language

processing methodologies.

• Spatial Databases: Spatial databases are databases that, in addition to usual data, store

geographical information like maps, and global or regional positioning. Such spatial

databases present new challenges to data mining algorithms.

• Time-Series Databases: Time-series databases contain time-related data such as stock

market data or logged activities. These databases usually have a continuous flow of new

data coming in, which sometimes causes the need for a challenging real time analysis. Data

mining in such databases commonly includes the study of trends and correlations between

evolutions of different variables, as well as the prediction of trends and movements of the

variables in time.


Figure 1.7: Examples of Time-Series Data (Source: Thompson Investors Group)


Figure 1.7 shows some examples of time-series data.

Figure 1.6: Visualization of spatial OLAP (from GeoMiner system)
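Trend and correlation, two of the analyses mentioned above for time-series data, can be sketched in plain Python: a simple moving average smooths a series so that its trend is easier to see, and the Pearson correlation coefficient measures how closely two series move together. The two price series are invented for illustration.

```python
from math import sqrt


def moving_average(series, window):
    """Simple moving average; returns len(series) - window + 1 values."""
    return [sum(series[i:i + window]) / window
            for i in range(len(series) - window + 1)]


def pearson(x, y):
    """Pearson correlation coefficient of two equal-length series."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sx = sqrt(sum((a - mx) ** 2 for a in x))
    sy = sqrt(sum((b - my) ** 2 for b in y))
    return cov / (sx * sy)


# Hypothetical daily closing prices of two stocks
stock_a = [10, 11, 13, 12, 14, 16, 15, 17]
stock_b = [20, 21, 24, 23, 26, 29, 28, 31]

trend = moving_average(stock_a, 3)
r = pearson(stock_a, stock_b)
print([round(v, 2) for v in trend])  # [11.33, 12.0, 13.0, 14.0, 15.0, 16.0]
print(round(r, 3))                   # close to 1: the two series rise together
```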

• World Wide Web: The World Wide Web is the most heterogeneous and dynamic

repository available. A very large number of authors and publishers are continuously

contributing to its growth and metamorphosis, and a massive number of users are accessing

its resources daily. Data in the World Wide Web is organized in inter-connected

documents. These documents can be text, audio, video, raw data, and even applications.

Conceptually, the World Wide Web consists of three major components:

The content of the Web, which encompasses documents available;

The structure of the Web, which covers the hyperlinks and the relationships between

documents; and

The usage of the web, describing how and when the resources are accessed.

A fourth dimension can be added relating to the dynamic nature or evolution of the

documents. Data mining in the World Wide Web, or web mining, tries to address all these

issues and is often divided into web content mining, web structure mining and web usage

mining.
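At their very simplest, web structure mining and web usage mining start from counts over hyperlinks and over access logs. A minimal sketch with invented page names: the number of in-links per page (a crude measure of how referenced a page is) and the number of requests per page in a log. Real web mining goes far beyond these counts.

```python
from collections import Counter

# Hypothetical site structure: page -> pages it links to
links = {
    "home.html": ["movies.html", "games.html", "contact.html"],
    "movies.html": ["home.html", "drama.html"],
    "games.html": ["home.html"],
    "drama.html": ["movies.html"],
}

# Structure mining, simplest form: how many pages link to each page.
in_links = Counter(target for targets in links.values() for target in targets)

# Usage mining, simplest form: how often each page was requested in a log.
access_log = ["home.html", "movies.html", "drama.html", "home.html", "games.html"]
hits = Counter(access_log)

print(in_links["home.html"], in_links["movies.html"], hits["home.html"])  # 2 2 2
```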

What can be discovered?

The kinds of patterns that can be discovered depend upon the data mining tasks

employed. By and large, there are two types of data mining tasks:

Descriptive data mining tasks that describe the general properties of the existing data, and

Predictive data mining tasks that attempt to make predictions by inference from available

data.


The data mining functionalities and the variety of knowledge they discover are

briefly presented in the following list:

• Characterization: Data characterization is a summarization of general features of

objects in a target class, and produces what is called characteristic rules. The data relevant

to a user-specified class are normally retrieved by a database query and run through a

summarization module to extract the essence of the data at different levels of abstraction.

For example, one may want to characterize the Our Video Store customers who regularly

rent more than 30 movies a year. With concept hierarchies on the attributes describing the

target class, the attribute-oriented induction method can be used, for example, to carry out

data summarization. Note that with a data cube containing summarization of data, simple

OLAP operations fit the purpose of data characterization.
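A minimal sketch of characterization, assuming invented customer records: a query-like filter retrieves the target class (more than 30 rentals a year), and a hand-written one-level concept hierarchy (age to age group, with assumed boundaries) generalizes the data before counting. It follows the spirit of attribute-oriented induction in a much simplified form.

```python
from collections import Counter

# Hypothetical customers: (customerID, age, movies rented last year)
customers = [
    ("C1", 17, 45), ("C2", 34, 2), ("C3", 22, 38), ("C4", 19, 31),
    ("C5", 51, 1), ("C6", 28, 3), ("C7", 45, 4), ("C8", 16, 33),
]


def age_group(age):
    """Assumed one-level concept hierarchy: raw age -> age group."""
    if age < 20:
        return "under 20"
    if age < 40:
        return "20-39"
    return "40+"


# 1. Query step: retrieve the target class (more than 30 rentals a year).
target = [c for c in customers if c[2] > 30]

# 2. Summarization step: generalize age and count each group.
summary = Counter(age_group(age) for _, age, _ in target)
for group, count in summary.most_common():
    print(f"{count / len(target):.0%} of frequent renters are in age group {group}")
```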

• Discrimination: Data discrimination produces what are called discriminant rules and is

basically the comparison of the general features of objects between two classes referred to

as the target class and the contrasting class. For example, one may want to compare the

general characteristics of the customers who rented more than 30 movies in the last year

with those whose rental count is lower than 5. The techniques used for data

discrimination are very similar to the techniques used for data characterization with the

exception that data discrimination results include comparative measures.
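Discrimination adds a comparison against a contrasting class. Using the same kind of invented records (repeated so that the block stands alone), this sketch compares the share of each age group in the target class (more than 30 rentals) and in the contrasting class (fewer than 5 rentals); the share is one simple comparative measure, chosen here for illustration.

```python
# Hypothetical customers: (customerID, age, movies rented last year)
customers = [
    ("C1", 17, 45), ("C2", 34, 2), ("C3", 22, 38), ("C4", 19, 31),
    ("C5", 51, 1), ("C6", 28, 3), ("C7", 45, 4), ("C8", 16, 33),
]


def age_group(age):
    """Assumed one-level concept hierarchy: raw age -> age group."""
    if age < 20:
        return "under 20"
    if age < 40:
        return "20-39"
    return "40+"


target = [c for c in customers if c[2] > 30]    # rented more than 30 movies
contrast = [c for c in customers if c[2] < 5]   # rented fewer than 5 movies


def share(group, label):
    """Fraction of a class that falls into one age group."""
    return sum(age_group(age) == label for _, age, _ in group) / len(group)


for label in ("under 20", "20-39", "40+"):
    print(f"{label:>8}: target {share(target, label):.0%}, "
          f"contrast {share(contrast, label):.0%}")
```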

• Association analysis: Association analysis is the discovery of what are commonly called

association rules. It studies the frequency of items occurring together in transactional

databases, and based on a threshold called support, identifies the frequent item sets.

Another threshold, confidence, which is the conditional probability that an item appears in

a transaction when another item appears, is used to pinpoint association rules. Association

analysis is commonly used for market basket analysis.

For example, it could be useful for the Our Video Store manager to know what

movies are often rented together or if there is a relationship between renting a certain type

of movies and buying popcorn or pop. The discovered association rules are of the form:

P→Q [s,c], where P and Q are conjunctions of attribute value-pairs, and s (for support) is

the probability that P and Q appear together in a transaction and c (for confidence) is the

conditional probability that Q appears in a transaction when P is present. For example, the

hypothetical association rule: RentType(X, “game”) ∧ Age(X, “13-19”) → Buys(X, “pop”)

[s=2%, c=55%] would indicate that 2% of the transactions considered are of customers

aged between 13 and 19 who are renting a game and buying a pop, and that there is a

certainty of 55% that teenage customers who rent a game also buy pop.
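Support and confidence follow directly from their definitions above. A minimal sketch over a handful of invented transactions, with items written as attribute=value strings so that the rule from the text can be checked; the resulting percentages differ from the 2% and 55% of the hypothetical example because the data is made up.

```python
def support(transactions, itemset):
    """Fraction of transactions that contain every item of itemset."""
    return sum(set(itemset) <= t for t in transactions) / len(transactions)


def confidence(transactions, lhs, rhs):
    """Estimate of P(rhs | lhs): support(lhs together with rhs) / support(lhs)."""
    return support(transactions, set(lhs) | set(rhs)) / support(transactions, lhs)


# Hypothetical rental transactions, items written as attribute=value pairs
transactions = [
    {"RentType=game", "Age=13-19", "Buys=pop"},
    {"RentType=game", "Age=13-19"},
    {"RentType=drama", "Age=20-39", "Buys=popcorn"},
    {"RentType=game", "Age=13-19", "Buys=pop", "Buys=popcorn"},
    {"RentType=comedy", "Age=20-39", "Buys=pop"},
]

lhs, rhs = {"RentType=game", "Age=13-19"}, {"Buys=pop"}
s = support(transactions, lhs | rhs)
c = confidence(transactions, lhs, rhs)
print(f"RentType=game, Age=13-19 -> Buys=pop [s={s:.0%}, c={c:.0%}]")  # s=40%, c=67%
```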

• Classification: Classification analysis is the organization of data in given classes. Also

known as supervised classification, the classification uses given class labels to order the

objects in the data collection. Classification approaches normally use a training set where

all objects are already associated with known class labels. The classification algorithm

learns from the training set and builds a model. The model is used to classify new objects.


For example, after starting a credit policy, the Our Video Store managers could analyze the

customers’ behaviours vis-à-vis their credit, and label accordingly the customers who

received credits with three possible labels “safe”, “risky” and “very risky”. The

classification analysis would generate a model that could be used to either accept or reject

credit requests in the future.
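A minimal sketch of the train-then-classify cycle, using a 1-nearest-neighbour rule as one simple stand-in for the classification algorithm (the text does not name a method). The two attributes, late payments and months as a customer, and all of the values are hypothetical; math.dist needs Python 3.8 or later.

```python
from math import dist

# Hypothetical training set: (late_payments, months_as_customer) -> known label
training = [
    ((0, 36), "safe"),
    ((1, 24), "safe"),
    ((3, 12), "risky"),
    ((4, 6), "risky"),
    ((8, 3), "very risky"),
    ((9, 2), "very risky"),
]


def classify(x):
    """Model = the stored training set; predict the label of the nearest example."""
    _, label = min(training, key=lambda example: dist(example[0], x))
    return label


request = (0, 30)  # a new customer asking for credit
decision = "accept" if classify(request) == "safe" else "reject"
print(classify(request), decision)  # safe accept
print(classify((7, 4)))             # very risky
```

Because the attributes are on different scales, a real model would normally normalize them first; here the months attribute dominates the distance.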

• Prediction: Prediction has attracted considerable attention given the potential

implications of successful forecasting in a business context. There are two major types of

predictions: one can either try to predict some unavailable data values or pending trends, or

predict a class label for some data. The latter is tied to classification. Once a classification

model is built based on a training set, the class label of an object can be foreseen based on

the attribute values of the object and the attribute values of the classes. Prediction,

however, more often refers to the forecast of missing numerical values, or of increasing or decreasing

trends in time-related data. The main idea is to use a large number of past values

to estimate probable future values.
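Forecasting a numeric trend can be sketched with an ordinary least-squares line fitted to past values and extended one step ahead. This is only one of many prediction techniques, and the monthly rental counts are invented.

```python
def fit_line(xs, ys):
    """Ordinary least-squares fit of y = a + b*x; returns (a, b)."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    b = (sum((x - mx) * (y - my) for x, y in zip(xs, ys))
         / sum((x - mx) ** 2 for x in xs))
    a = my - b * mx
    return a, b


# Hypothetical monthly rentals for months 1..6
months = [1, 2, 3, 4, 5, 6]
rentals = [100, 110, 125, 130, 145, 150]

a, b = fit_line(months, rentals)
forecast = a + b * 7  # extrapolate the trend to month 7
print(round(b, 2), round(forecast, 1))  # 10.29 162.7
```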

• Clustering: Similar to classification, clustering is the organization of data in classes.

However, unlike classification, in clustering, class labels are unknown and it is up to the

clustering algorithm to discover acceptable classes. Clustering is also called unsupervised

classification, because the classification is not dictated by given class labels. There are

many clustering approaches all based on the principle of maximizing the similarity

between objects in the same class (intra-class similarity) and minimizing the similarity

between objects of different classes (inter-class similarity).
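Clustering can be sketched with k-means, named here as one common approach among the many the text alludes to. This minimal one-dimensional version, on invented yearly rental counts, receives no class labels: it alternately assigns each value to its nearest center and moves each center to the mean of its cluster, which increases intra-class similarity. The starting centers are an assumption that k-means requires.

```python
def kmeans_1d(values, centers, iterations=10):
    """Plain k-means on 1-D data starting from the given initial centers."""
    for _ in range(iterations):
        clusters = [[] for _ in centers]
        for v in values:
            # Assignment step: put each value in the cluster of its nearest center.
            nearest = min(range(len(centers)), key=lambda i: abs(v - centers[i]))
            clusters[nearest].append(v)
        # Update step: move each center to its cluster mean (keep it if empty).
        centers = [sum(c) / len(c) if c else centers[i]
                   for i, c in enumerate(clusters)]
    return centers, clusters


rentals_per_year = [2, 3, 4, 5, 30, 32, 35, 60, 62]  # hypothetical
centers, clusters = kmeans_1d(rentals_per_year, centers=[0, 20, 50])
print(clusters)  # [[2, 3, 4, 5], [30, 32, 35], [60, 62]]
```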

• Outlier analysis: Outliers are data elements that cannot be grouped in a given class or

cluster. Also known as exceptions or surprises, they are often very important to identify.

While outliers can be considered noise and discarded in some applications, they can reveal

important knowledge in other domains, and thus can be very significant and their analysis

valuable.
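One common way to flag outliers in numeric data is the z-score, the distance from the mean measured in standard deviations. A minimal sketch, assuming invented rental amounts and a threshold of 2 (a conventional but arbitrary choice); on very small samples a single extreme value inflates the standard deviation, so this is illustrative only.

```python
from statistics import mean, pstdev


def zscore_outliers(values, threshold=2.0):
    """Return the values whose z-score magnitude exceeds the threshold."""
    mu, sigma = mean(values), pstdev(values)
    return [v for v in values if abs(v - mu) / sigma > threshold]


# Hypothetical rental amounts (in dollars) for one week
amounts = [20, 22, 19, 21, 23, 20, 18, 150]
outliers = zscore_outliers(amounts)
print(outliers)  # [150]
```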

• Evolution and deviation analysis: Evolution and deviation analysis pertain to the study

of time-related data that changes in time. Evolution analysis models evolutionary trends in

data, which allows characterizing, comparing, classifying or clustering of time-related

data. Deviation analysis, on the other hand, considers differences between measured values

and expected values, and attempts to find the cause of the deviations from the anticipated

values.
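Deviation analysis compares measured values with expected ones. A minimal sketch, assuming invented expected (for example budgeted) and measured monthly rentals and a 10% tolerance; finding the cause of a flagged deviation remains a separate step.

```python
# Hypothetical expected vs measured monthly rentals
expected = {"Jul": 120, "Aug": 130, "Sep": 125}
measured = {"Jul": 118, "Aug": 95, "Sep": 131}

TOLERANCE = 0.10  # flag relative deviations above 10% (assumed threshold)

# Relative deviation of each measured value from its expected value.
deviations = {m: (measured[m] - expected[m]) / expected[m] for m in expected}
flagged = {m: round(d, 3) for m, d in deviations.items() if abs(d) > TOLERANCE}
print(flagged)  # {'Aug': -0.269}
```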

It is common that users do not have a clear idea of the kind of patterns they can

discover or need to discover from the data at hand. It is therefore important to have a

versatile and inclusive data mining system that allows the discovery of different kinds of

knowledge and at different levels of abstraction. This also makes interactivity an important

attribute of a data mining system.


Is all that is discovered interesting and useful?

Data mining allows the discovery of potentially useful and previously unknown knowledge.

Whether the knowledge discovered is new, useful or interesting, is very subjective and

depends upon the application and the user. It is certain that data mining can generate, or

discover, a very large number of patterns or rules. In some cases the number of rules can

reach the millions. One can even think of a meta-mining phase to mine the oversized data

mining results. To reduce the number of patterns or rules discovered that have a high

probability to be non-interesting, one has to put a measurement on the patterns. However,

this raises the problem of completeness. The user would want to discover all rules or

patterns, but only those that are interesting.

The measurement of how interesting a discovery is, often called interestingness,

can be based on quantifiable objective elements such as validity of the patterns when tested

on new data with some degree of certainty, or on some subjective depictions such as

understandability of the patterns, novelty of the patterns, or usefulness. Discovered

patterns can also be found interesting if they confirm or validate a hypothesis sought to be

confirmed or unexpectedly contradict a common belief. This brings the issue of describing

what is interesting to discover, such as meta-rule guided discovery that describes forms of

rules before the discovery process, and interestingness refinement languages that

interactively query the results for interesting patterns after the discovery phase.
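Objective interestingness is often enforced through user-set thresholds on measures such as support and confidence. A minimal sketch with invented transactions: every one-item rule A -> B is enumerated and only rules that reach both minimums are kept. Raising a threshold shrinks the output, which is the completeness trade-off described above.

```python
from itertools import permutations

# Hypothetical rental baskets
transactions = [
    {"drama", "popcorn"},
    {"drama", "popcorn", "pop"},
    {"comedy", "pop"},
    {"drama", "pop"},
    {"comedy", "popcorn", "pop"},
]


def interesting_rules(transactions, min_support, min_confidence):
    """Yield (lhs, rhs, support, confidence) for one-item rules lhs -> rhs
    that reach both user-set thresholds."""
    n = len(transactions)
    items = sorted(set().union(*transactions))
    for a, b in permutations(items, 2):
        n_a = sum(a in t for t in transactions)
        n_ab = sum({a, b} <= t for t in transactions)
        support, confidence = n_ab / n, n_ab / n_a
        if support >= min_support and confidence >= min_confidence:
            yield a, b, support, confidence


for lhs, rhs, s, c in interesting_rules(transactions, 0.4, 0.6):
    print(f"{lhs} -> {rhs} [s={s:.0%}, c={c:.0%}]")
```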

Typically, measurements for interestingness are based on thresholds set by the user.

These thresholds define the completeness of the patterns discovered. Identifying and

measuring the interestingness of patterns and rules discovered, or to be discovered is

essential for the evaluation of the mined knowledge and the KDD process as a whole.

While some concrete measurements exist, assessing the interestingness of discovered

knowledge is still an important research issue.

How do we categorize data mining systems?

There are many data mining systems available or being developed. Some are specialized

systems dedicated to a given data source or are confined to limited data mining

functionalities, others are more versatile and comprehensive.

Data mining systems can be categorized according to various criteria; among others,

there are the following classifications:

• Classification according to the type of data source mined: this classification

categorizes data mining systems according to the type of data handled such as spatial data,

multimedia data, time-series data, text data, World Wide Web, etc.

• Classification according to the data model drawn on: this classification

categorizes data mining systems based on the data model involved such as relational

database, object-oriented database, data warehouse, transactional, etc.

• Classification according to the kind of knowledge discovered: this

classification categorizes data mining systems based on the kind of knowledge discovered

or data mining functionalities, such as characterization, discrimination, association,


classification, clustering, etc. Some systems tend to be comprehensive systems offering

several data mining functionalities together.

• Classification according to mining techniques used: Data mining systems

employ and provide different techniques. This classification categorizes data mining

systems according to the data analysis approach used such as machine learning, neural

networks, genetic algorithms, statistics, visualization, database oriented or data warehouse-

oriented, etc. The classification can also take into account the degree of user interaction

involved in the data mining process such as query-driven systems, interactive exploratory

systems, or autonomous systems. A comprehensive system would provide a wide variety

of data mining techniques to fit different situations and options, and offer different degrees

of user interaction.

What are the issues in Data Mining?

Data mining algorithms embody techniques that have sometimes existed for many

years, but have only lately been applied as reliable and scalable tools that time and again

outperform older classical statistical methods.

While data mining is still in its infancy, it is becoming a trend and ubiquitous.

Before data mining develops into a conventional, mature and trusted discipline, many still

pending issues have to be addressed. Some of these issues are addressed below. Note that

these issues are not exclusive and are not ordered in any way.

Security and social issues:

Security is an important issue with any data collection that is shared and/or is

intended to be used for strategic decision-making. In addition, when data is collected for

customer profiling, user behaviour understanding, correlating personal data with other

information, etc., large amounts of sensitive and private information about individuals or

companies are gathered and stored. This becomes controversial given the confidential nature

of some of this data and the potential illegal access to the information.

Moreover, data mining could disclose new implicit knowledge about individuals or

groups that could be against privacy policies, especially if there is potential dissemination

of discovered information. Another issue that arises from this concern is the appropriate

use of data mining. Due to the value of data, databases of all sorts of content are regularly

sold, and because of the competitive advantage that can be attained from implicit

knowledge discovered, some important information could be withheld, while other

information could be widely distributed and used without control.

User interface issues:

The knowledge discovered by data mining tools is useful as long as it is

interesting, and above all understandable by the user. Good data visualization eases the

interpretation of data mining results and helps users better understand their needs.

Many data exploratory analysis tasks are significantly facilitated by the ability to see data


in an appropriate visual presentation. There are many visualization ideas and proposals for

effective data graphical presentation. However, there is still much research to accomplish

in order to obtain good visualization tools for large datasets that could be used to display

and manipulate mined knowledge.

The major issues related to user interfaces and visualization are “screen real-estate”,

information rendering, and interaction. Interactivity with the data and data mining results is

crucial since it provides means for the user to focus and refine the mining tasks, as well as

to picture the discovered knowledge from different angles and at different conceptual

levels.

Mining methodology issues:

These issues pertain to the data mining approaches applied and their limitations.

Topics such as versatility of the mining approaches, the diversity of data available, the

dimensionality of the domain, the broad analysis needs (when known), the assessment of

the knowledge discovered, the exploitation of background knowledge and metadata, the

control and handling of noise in data, etc. are all examples that can dictate mining

methodology choices. For instance, it is often desirable to have different data mining

methods available since different approaches may perform differently depending upon the

data at hand. Moreover, different approaches may suit and solve user’s needs differently.

Most algorithms assume the data to be noise-free. This is of course a strong assumption.

Most datasets contain exceptions, invalid or incomplete information, etc., which may

complicate, if not obscure, the analysis process and in many cases compromise the

accuracy of the results.

As a consequence, data pre-processing (data cleaning and transformation) becomes

vital. It is often seen as lost time, but data cleaning, as time consuming and frustrating as it

may be, is one of the most important phases in the knowledge discovery process. Data

mining techniques should be able to handle noise in data or incomplete information. More

than the size of data, the size of the search space is even more decisive for data mining

techniques. The size of the search space often depends upon the number of dimensions

in the domain space. The search space usually grows exponentially when the number of

dimensions increases. This is known as the curse of dimensionality. This “curse” so badly

affects the performance of some data mining approaches that it is becoming one of the most

urgent issues to solve.
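The exponential growth can be made concrete with two counts chosen here for illustration: the number of non-empty item sets that can be formed from d items, 2^d - 1, and the number of cells in a space whose d dimensions each take 10 values, 10^d.

```python
def candidate_itemsets(n_items):
    """Non-empty subsets that can be formed from n_items items: 2**n - 1."""
    return 2 ** n_items - 1


def grid_cells(n_dims, values_per_dim=10):
    """Cells in a space where each of n_dims dimensions takes values_per_dim values."""
    return values_per_dim ** n_dims


for d in (2, 5, 10, 20):
    print(f"d={d:>2}: {candidate_itemsets(d):>9,} itemsets, {grid_cells(d):,} cells")
```

Adding a single dimension doubles the number of candidate item sets and multiplies the number of cells by ten, which is why exhaustive search quickly becomes impractical.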

Performance issues:

Many artificial intelligence and statistical methods exist for data analysis and

interpretation. However, these methods were often not designed for the very large data sets

data mining is dealing with today. Terabyte sizes are common. This raises the issues of

scalability and efficiency of the data mining methods when processing considerably large

data. Algorithms with exponential and even medium-order polynomial complexity cannot

be of practical use for data mining. Linear algorithms are usually the norm. In the same vein,

sampling can be used for mining instead of the whole dataset.


However, concerns such as completeness and choice of samples may arise. Other

topics in the issue of performance are incremental updating, and parallel programming.

There is no doubt that parallelism can help solve the size problem if the dataset can be

subdivided and the results can be merged later. Incremental updating is important for

merging results from parallel mining, or updating data mining results when new data

becomes available without having to re-analyze the complete dataset.
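The three performance ideas in this section (sampling, parallel mining with a later merge, and incremental updating) can be sketched on simple item counts. This is an illustration only: the data is invented, the two partitions are processed one after the other rather than truly in parallel, and the fixed random seed just makes the sample reproducible.

```python
import random
from collections import Counter

# Sampling: estimate a proportion from a sample instead of the whole data.
population = ["Drama"] * 30_000 + ["Other"] * 70_000  # hypothetical rentals
random.seed(42)                                        # reproducible sketch
sample = random.sample(population, 1_000)
estimate = sample.count("Drama") / len(sample)         # close to the true 0.30


def count_items(partition):
    """Count item occurrences in one partition of the transactions."""
    return Counter(item for t in partition for item in t)


# Parallel mining: count each partition separately, merge the results afterwards.
part1 = [{"drama", "pop"}, {"comedy"}]     # e.g. processed on machine 1
part2 = [{"drama"}, {"drama", "popcorn"}]  # e.g. processed on machine 2
partials = [count_items(p) for p in (part1, part2)]  # run sequentially here
total = sum(partials, Counter())

# Incremental update: add counts for new data without rescanning the old data.
total += count_items([{"comedy", "pop"}])
print(round(estimate, 2), total["drama"], total["pop"], total["comedy"])
```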

Data source issues:

There are many issues related to the data sources, some are practical such as the

diversity of data types, while others are philosophical like the data glut problem. We

certainly have an excess of data since we already have more data than we can handle and

we are still collecting data at an even higher rate. If the spread of database management

systems has helped increase the gathering of information, the advent of data mining is

certainly encouraging more data harvesting. The current practice is to collect as much data

as possible now and process it, or try to process it, later. The concern is whether we are

collecting the right data at the appropriate amount, whether we know what we want to do

with it, and whether we distinguish between what data is important and what data is

insignificant. Regarding the practical issues related to data sources, there is the subject of

heterogeneous databases and the focus on diverse complex data types.

We are storing different types of data in a variety of repositories. It is difficult to expect a

data mining system to effectively and efficiently achieve good mining results on all kinds

of data and sources. Different kinds of data and sources may require distinct algorithms

and methodologies. Currently, there is a focus on relational databases and data warehouses,

but other approaches need to be pioneered for other specific complex data types. A

versatile data mining tool, for all sorts of data, may not be realistic. Moreover, the

proliferation of heterogeneous data sources, at structural and semantic levels, poses

important challenges not only to the database community but also to the data mining

community.

Data Mining Functionalities:

We have observed various types of databases and information repositories on which

data mining can be performed. Let us now examine the kinds of data patterns that can be

mined. Data mining functionalities are used to specify the kind of patterns to be found in

data mining tasks. In general, data mining tasks can be classified into two categories:

descriptive and predictive. Descriptive mining tasks characterize the general properties of

the data in the database. Predictive mining tasks perform inference on the current data in

order to make predictions.

In some cases, users may have no idea regarding what kinds of patterns in their data

may be interesting, and hence may like to search for several different kinds of patterns in


parallel. Thus it is important to have a data mining system that can mine multiple kinds of

patterns to accommodate different user expectations or applications. Furthermore, data

mining systems should be able to discover patterns at various granularities (i.e., different

levels of abstraction). Data mining systems should also allow users to specify hints to

guide or focus the search for interesting patterns. Because some patterns may not hold for

all of the data in the database, a measure of certainty or “trustworthiness” is usually

associated with each discovered pattern.

Data mining functionalities, and the kinds of patterns they can discover, are

described below.

Mining Frequent Patterns, Associations, and Correlations

Frequent patterns, as the name suggests, are patterns that occur frequently in data. There are many kinds of

frequent patterns, including item sets, subsequences, and substructures.

A frequent item set typically refers to a set of items that frequently appear together

in a transactional data set, such as milk and bread. A frequently occurring subsequence,

such as the pattern that customers tend to purchase first a PC, followed by a digital camera,

and then a memory card, is a (frequent) sequential pattern. A substructure can refer to

different structural forms, such as graphs, trees, or lattices, which may be combined with

item sets or subsequences. If a substructure occurs frequently, it is called a (frequent)

structured pattern. Mining frequent patterns leads to the discovery of interesting

associations and correlations within data.
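Frequent item sets such as {milk, bread} can be found level by level with the Apriori idea, named here as one standard algorithm rather than the text's own method: a k-item set can be frequent only if all of its (k-1)-item subsets are frequent. A compact sketch over invented baskets, with an assumed minimum support count of 2.

```python
from itertools import combinations


def apriori(transactions, min_count):
    """Return {frozenset(itemset): count} for itemsets in >= min_count transactions."""
    transactions = [frozenset(t) for t in transactions]
    level = {frozenset([item]) for t in transactions for item in t}  # 1-itemsets
    frequent, k = {}, 1
    while level:
        # Count all candidate k-itemsets in one pass over the data.
        counts = {c: sum(c <= t for t in transactions) for c in level}
        current = {c: n for c, n in counts.items() if n >= min_count}
        frequent.update(current)
        k += 1
        # Join step: combine frequent (k-1)-itemsets into k-itemsets ...
        candidates = {a | b for a in current for b in current if len(a | b) == k}
        # ... prune step: drop candidates having an infrequent (k-1)-subset.
        level = {c for c in candidates
                 if all(frozenset(s) in current for s in combinations(c, k - 1))}
    return frequent


baskets = [
    {"milk", "bread"},
    {"milk", "bread", "butter"},
    {"bread", "butter"},
    {"milk", "bread", "butter"},
    {"milk", "eggs"},
]
frequent = apriori(baskets, min_count=2)
for itemset, count in sorted(frequent.items(), key=lambda kv: (len(kv[0]), sorted(kv[0]))):
    print(sorted(itemset), count)
```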

3. Briefly explain the architecture of data mining.

Architecture of Data Mining

A typical data mining system may have the following major components.


1. Knowledge Base: This is the domain knowledge that is used to guide the search or

evaluate the interestingness of resulting patterns. Such knowledge can include concept hierarchies,

used to organize attributes or attribute values into different levels of abstraction. Knowledge such

as user beliefs, which can be used to assess a pattern’s interestingness based on its unexpectedness,

may also be included. Other examples of domain knowledge are additional interestingness

constraints or thresholds, and metadata (e.g., describing data from multiple heterogeneous sources).

2. Data Mining Engine: This is essential to the data mining system and ideally consists of

a set of functional modules for tasks such as characterization, association and correlation analysis,

classification, prediction, cluster analysis, outlier analysis, and evolution analysis.

3. Pattern Evaluation Module: This component typically employs interestingness

measures and interacts with the data mining modules so as to focus the search toward interesting

patterns. It may use interestingness thresholds to filter out discovered patterns. Alternatively, the

pattern evaluation module may be integrated with the mining module, depending on the

implementation of the data mining method used. For efficient data mining, it is highly

recommended to push the evaluation of pattern interestingness as deep as possible into the mining

process as to confine the search to only the interesting patterns.
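Threshold-based filtering can be sketched with two common interestingness measures for association rules: support and confidence. The transactions, the candidate rules and the thresholds (minimum support 0.5, minimum confidence 0.7) are assumptions chosen for illustration.

```python
# Hypothetical transactions and candidate rules (antecedent -> consequent)
transactions = [
    {"computer", "software"},
    {"computer", "software", "printer"},
    {"computer", "printer"},
    {"software"},
]
candidates = [
    ({"computer"}, {"software"}),
    ({"printer"}, {"computer"}),
    ({"software"}, {"printer"}),
]

def support(itemset):
    """Fraction of transactions that contain every item in itemset."""
    return sum(itemset <= t for t in transactions) / len(transactions)

def evaluate(rules, min_support, min_confidence):
    """Keep only rules whose support and confidence meet both thresholds."""
    kept = []
    for lhs, rhs in rules:
        rule_support = support(lhs | rhs)
        lhs_support = support(lhs)
        confidence = rule_support / lhs_support if lhs_support else 0.0
        if rule_support >= min_support and confidence >= min_confidence:
            kept.append((lhs, rhs, rule_support, confidence))
    return kept

interesting = evaluate(candidates, min_support=0.5, min_confidence=0.7)
```

Only printer → computer survives. computer → software fails the confidence threshold (about 0.67), and software → printer fails the support threshold (0.25). This sketch filters patterns after they are generated. As noted above, pushing the thresholds into the mining step itself is the more efficient design.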

4. User interface: This module communicates between users and the data mining system,

allowing the user to interact with the system by specifying a data mining query or task, providing

information to help focus the search, and performing exploratory data mining based on the

intermediate data mining results. In addition, this component allows the user to browse database

and data warehouse schemas or data structures, evaluate mined patterns, and visualize the patterns

in different forms.

CHAPTER – 3

DATA WAREHOUSING

Introduction to Data Warehouse:

A data warehouse is a subject-oriented, integrated, time-variant and non-volatile collection of data in support of management's decision-making process.

Subject-Oriented: A data warehouse can be used to analyze a particular subject area. For example, "sales" can be a particular subject.

Integrated: A data warehouse integrates data from multiple data sources. For

example, source A and source B may have different ways of identifying a product,

but in a data warehouse, there will be only a single way of identifying a product.

Time-Variant: Historical data is kept in a data warehouse. For example, one can retrieve data from 3 months, 6 months, 12 months, or even older data from a data warehouse. This contrasts with a transaction system, where often only the most recent data is kept. For example, a transaction system may hold only the most recent address of a customer, whereas a data warehouse can hold all addresses associated with a customer.

Non-volatile: Once data is in the data warehouse, it will not change. So, historical

data in a data warehouse should never be altered.
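The address example can be sketched in a few lines. An operational table overwrites a customer's address, while the warehouse appends a new dated row and never modifies old rows (time-variant and non-volatile). The customer ID, dates, addresses and the in-memory list standing in for a warehouse table are all assumptions.

```python
from datetime import date

# Operational (transaction) system: one current address per customer, overwritten
operational = {}

# Warehouse stand-in: append-only rows of (customer_id, address, valid_from)
warehouse = []

def record_address(customer_id, address, as_of):
    operational[customer_id] = address               # previous value is lost
    warehouse.append((customer_id, address, as_of))  # existing rows never change

def address_history(customer_id):
    """All addresses ever recorded for a customer, oldest first."""
    rows = sorted((r for r in warehouse if r[0] == customer_id), key=lambda r: r[2])
    return [address for _, address, _ in rows]

# Hypothetical customer moving house
record_address("C1", "12 Old Street", date(2022, 1, 5))
record_address("C1", "7 New Road", date(2023, 3, 9))
```

After the two calls, the operational system knows only "7 New Road". The warehouse can still answer where the customer lived in 2022.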

Data Warehouse Design Process:

A data warehouse can be built using a top-down approach, a bottom-up approach,

or a combination of both.

The top-down approach starts with the overall design and planning. It is useful

in cases where the technology is mature and well known, and where the business problems

that must be solved are clear and well understood.

The bottom-up approach starts with experiments and prototypes. This is useful

in the early stage of business modeling and technology development. It allows an

organization to move forward at considerably less expense and to evaluate the benefits of

the technology before making significant commitments.

In the combined approach, an organization can exploit the planned and strategic

nature of the top-down approach while retaining the rapid implementation and

opportunistic application of the bottom-up approach.

The warehouse design process consists of the following steps:

Choose a business process to model, for example, orders, invoices, shipments, inventory,

account administration, sales, or the general ledger. If the business process is

organizational and involves multiple complex object collections, a data warehouse model

should be followed. However, if the process is departmental and focuses on the analysis of

one kind of business process, a data mart model should be chosen.

Choose the grain of the business process. The grain is the fundamental, atomic level

of data to be represented in the fact table for this process, for example, individual

transactions, individual daily snapshots, and so on.

Choose the dimensions that will apply to each fact table record. Typical dimensions

are time, item, customer, supplier, warehouse, transaction type, and status.

Choose the measures that will populate each fact table record. Typical measures are numeric additive quantities like dollars sold and units sold.
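The four design steps can be illustrated with a tiny star schema built using Python's built-in sqlite3 module. The choices follow the examples above:

* business process: sales
* grain: one row per item sold to a customer on a day
* dimensions: time, item, customer
* measures: dollars sold, units sold

All table names, column names and rows are assumptions made for illustration.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
-- Dimension tables (hypothetical names): one row per member of each dimension
CREATE TABLE dim_time     (time_key INTEGER PRIMARY KEY, full_date TEXT, month TEXT, year INTEGER);
CREATE TABLE dim_item     (item_key INTEGER PRIMARY KEY, item_name TEXT, brand TEXT);
CREATE TABLE dim_customer (customer_key INTEGER PRIMARY KEY, name TEXT, city TEXT);

-- Fact table at the chosen grain: one row per item per customer per day
CREATE TABLE fact_sales (
    time_key     INTEGER REFERENCES dim_time(time_key),
    item_key     INTEGER REFERENCES dim_item(item_key),
    customer_key INTEGER REFERENCES dim_customer(customer_key),
    dollars_sold REAL,     -- additive measure
    units_sold   INTEGER   -- additive measure
);
""")

conn.executemany("INSERT INTO dim_time VALUES (?, ?, ?, ?)",
                 [(1, "2023-01-10", "2023-01", 2023), (2, "2023-02-14", "2023-02", 2023)])
conn.executemany("INSERT INTO dim_item VALUES (?, ?, ?)",
                 [(1, "Laptop", "BrandA"), (2, "Mouse", "BrandB")])
conn.executemany("INSERT INTO dim_customer VALUES (?, ?, ?)",
                 [(1, "Asha", "Chennai"), (2, "Ravi", "Madurai")])
conn.executemany("INSERT INTO fact_sales VALUES (?, ?, ?, ?, ?)",
                 [(1, 1, 1, 900.0, 1), (1, 2, 2, 40.0, 2), (2, 2, 1, 20.0, 1)])

# Additive measures can be summed along any dimension, here by item
units_per_item = conn.execute("""
    SELECT i.item_name, SUM(f.units_sold)
    FROM fact_sales f JOIN dim_item i ON f.item_key = i.item_key
    GROUP BY i.item_name ORDER BY i.item_name
""").fetchall()
```

Keeping the measures additive is what lets a query like the last one sum them safely across any combination of dimensions.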

5. Explain the architecture of a data warehouse. (AP / MAY 2023)

Three Tier Data Warehouse Architecture:

Tier-1:

The bottom tier is a warehouse database server that is almost always a relational

database system. Back-end tools and utilities are used to feed data into the bottom tier

from operational databases or other external sources (such as customer profile information

provided by external consultants). These tools and utilities perform data extraction,

cleaning, and transformation (e.g., to merge similar data from different sources into a

unified format), as well as load and refresh functions to update the data warehouse.

The data are extracted using application program interfaces known as gateways. A gateway is supported by the underlying DBMS and allows client programs to generate SQL code to be executed at a server. Examples of gateways include ODBC (Open Database Connectivity) and OLE DB (Object Linking and Embedding, Database) by Microsoft, and JDBC (Java Database Connectivity). This tier also contains a metadata repository, which stores information about the data warehouse and its contents.
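The back-end extraction, cleaning, transformation and load functions can be sketched with Python's DB-API. Two in-memory sqlite3 databases stand in for an operational source and the bottom-tier warehouse. sqlite3 is not an ODBC/JDBC gateway, but the pattern is the same: the client program sends SQL to be executed at the source. The table names and cleaning rules are assumptions.

```python
import sqlite3

# Operational source (stand-in for a database reached through a gateway)
source = sqlite3.connect(":memory:")
source.execute("CREATE TABLE orders (order_id INTEGER, product TEXT, amount TEXT)")
source.executemany("INSERT INTO orders VALUES (?, ?, ?)",
                   [(1, " laptop ", "900"), (2, "MOUSE", "40"), (3, "Mouse", None)])

# Bottom-tier warehouse database (hypothetical table)
warehouse = sqlite3.connect(":memory:")
warehouse.execute("CREATE TABLE sales (order_id INTEGER, product TEXT, amount REAL)")

def extract(conn):
    # SQL generated by the client and executed at the source server
    return conn.execute("SELECT order_id, product, amount FROM orders").fetchall()

def clean_and_transform(rows):
    out = []
    for order_id, product, amount in rows:
        if amount is None:  # cleaning rule (assumed): drop rows missing the measure
            continue
        # Transformation: unify product spelling and convert text amounts to numbers
        out.append((order_id, product.strip().title(), float(amount)))
    return out

def load(conn, rows):
    conn.executemany("INSERT INTO sales VALUES (?, ?, ?)", rows)
    conn.commit()

load(warehouse, clean_and_transform(extract(source)))
loaded = warehouse.execute("SELECT * FROM sales ORDER BY order_id").fetchall()
```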

Tier-2:

The middle tier is an OLAP server that is typically implemented using either a relational OLAP (ROLAP) model or a multidimensional OLAP (MOLAP) model.

A relational OLAP (ROLAP) model is an extended relational DBMS that maps operations on multidimensional data to standard relational operations.

A multidimensional OLAP (MOLAP) model is a special-purpose server that directly implements multidimensional data and operations.
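The way a ROLAP server maps multidimensional operations onto standard relational operations can be sketched with sqlite3 as a stand-in back end:

* A roll-up from city to country becomes a GROUP BY on a coarser column.
* A slice on one quarter becomes a WHERE clause.

The table, columns and rows are assumptions for illustration.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE sales (country TEXT, city TEXT, quarter TEXT, units INTEGER)")
conn.executemany("INSERT INTO sales VALUES (?, ?, ?, ?)", [
    ("India", "Chennai", "Q1", 10),
    ("India", "Chennai", "Q2", 15),
    ("India", "Madurai", "Q1", 5),
    ("Canada", "Toronto", "Q1", 8),
])

def roll_up(level):
    """Roll-up to a location level, expressed as a relational GROUP BY."""
    if level not in ("city", "country"):  # whitelist: column names cannot be bound as parameters
        raise ValueError(f"unknown level: {level}")
    sql = f"SELECT {level}, SUM(units) FROM sales GROUP BY {level} ORDER BY {level}"
    return conn.execute(sql).fetchall()

def slice_quarter(quarter):
    """Slice: fix one dimension value, expressed as a WHERE clause."""
    return conn.execute(
        "SELECT city, SUM(units) FROM sales WHERE quarter = ? GROUP BY city ORDER BY city",
        (quarter,),
    ).fetchall()

by_country = roll_up("country")
q1 = slice_quarter("Q1")
```

A MOLAP server would instead answer the same requests from a precomputed multidimensional array rather than by rewriting them as SQL.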

Tier-3:

The top tier is a front-end client layer, which contains query and reporting tools, analysis tools, and/or data mining tools (e.g., trend analysis, prediction, and so on).

Data Warehouse Models:

There are three data warehouse models.

1. Enterprise warehouse:

An enterprise warehouse collects all of the information about subjects

spanning the entire organization.

It provides corporate-wide data integration, usually from one or more

operational systems or external information providers, and is cross-

functional in scope.

It typically contains detailed data as well as summarized data, and can

range in size from a few gigabytes to hundreds of gigabytes, terabytes, or

beyond. An enterprise data warehouse may be implemented on

traditional

mainframes, computer super servers, or parallel architecture platforms. It

requires extensive business modeling and may take years to design and

build.

2. Data mart:

A data mart contains a subset of corporate-wide data that is of value to a

specific group of users. The scope is confined to specific selected subjects. For

example, a marketing data mart may confine its subjects to customer, item, and sales.

The data contained in data marts tend to be summarized.

Data marts are usually implemented on low-cost departmental servers that are

UNIX/LINUX- or Windows-based. The implementation cycle of a data mart is more

likely to be measured in weeks rather than months or years. However, it may involve

complex integration in the long run if its design and planning were not enterprise-

wide.

Depending on the source of data, data marts can be categorized as independent or dependent. Independent data marts are sourced from data captured from one or more operational systems or external information providers, or from data generated locally within a particular department or geographic area. Dependent data marts are sourced directly from enterprise data warehouses.
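A dependent data mart can be sketched as a summarized subset taken directly from an enterprise warehouse table. It keeps only the marketing subjects from the example above (customer, item, sales). The table layout, the extra enterprise-wide columns and the rows are assumptions, and sqlite3 stands in for the warehouse DBMS.

```python
import sqlite3

dw = sqlite3.connect(":memory:")  # stand-in for the enterprise data warehouse
dw.execute("""CREATE TABLE enterprise_sales
              (customer TEXT, item TEXT, region TEXT, employee TEXT, dollars REAL)""")
dw.executemany("INSERT INTO enterprise_sales VALUES (?, ?, ?, ?, ?)", [
    ("Asha", "Laptop", "South", "E1", 900.0),
    ("Asha", "Mouse",  "South", "E2", 40.0),
    ("Ravi", "Mouse",  "North", "E1", 20.0),
    ("Ravi", "Mouse",  "North", "E3", 25.0),
])

# Dependent data mart (hypothetical): sourced from the warehouse, confined to the
# marketing subjects, and stored in summarized form
dw.execute("""CREATE TABLE marketing_mart AS
              SELECT customer, item, SUM(dollars) AS total_sales
              FROM enterprise_sales
              GROUP BY customer, item""")
mart = dw.execute("SELECT * FROM marketing_mart ORDER BY customer, item").fetchall()
```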

3. Virtual warehouse:

A virtual warehouse is a set of views over operational databases. For efficient

query processing, only some of the possible summary views may be materialized.

A virtual warehouse is easy to build but requires excess capacity on

operational database servers.
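A virtual warehouse can be sketched as SQL views defined directly over an operational table, with only one summary materialized as a stored table. The table, view names and data are assumptions, and sqlite3 stands in for the operational DBMS.

```python
import sqlite3

ops = sqlite3.connect(":memory:")  # stand-in for an operational database
ops.execute("CREATE TABLE orders (order_id INTEGER, region TEXT, amount REAL)")
ops.executemany("INSERT INTO orders VALUES (?, ?, ?)",
                [(1, "South", 100.0), (2, "North", 50.0), (3, "South", 25.0)])

# Virtual: recomputed from operational data on every query (load on the operational server)
ops.execute("""CREATE VIEW v_sales_by_region AS
               SELECT region, SUM(amount) AS total FROM orders GROUP BY region""")

# Materialized: a stored copy of one summary view, faster to query but must be refreshed
ops.execute("CREATE TABLE m_sales_by_region AS SELECT * FROM v_sales_by_region")

ops.execute("INSERT INTO orders VALUES (4, 'North', 10.0)")  # new operational activity
view_rows = ops.execute("SELECT * FROM v_sales_by_region ORDER BY region").fetchall()
stored_rows = ops.execute("SELECT * FROM m_sales_by_region ORDER BY region").fetchall()
```

The view reflects the new order immediately, while the materialized copy still shows the old North total until it is refreshed. This is the trade-off behind materializing only some of the possible summary views.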

Meta Data Repository:

Metadata are data about data. When used in a data warehouse, metadata are the data

that define warehouse objects. Metadata are created for the data names and definitions of

the given warehouse. Additional metadata are created and captured for time stamping any

extracted data, the source of the extracted data, and missing fields that have been added by

data cleaning or integration processes.
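The metadata captured during extraction and cleaning can be sketched as a simple record per extracted batch: a time stamp, the source of the data, and the fields filled in by cleaning. The record layout, the default value used for missing cities and the list standing in for the metadata repository are all hypothetical.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone

@dataclass
class ExtractMetadata:
    """Hypothetical metadata record for one batch of extracted data."""
    table: str
    source: str                                         # where the data came from
    extracted_at: datetime                              # time stamp of the extraction
    filled_fields: list = field(default_factory=list)   # fields added by cleaning

repository = []  # stand-in for the metadata repository

def extract_with_metadata(rows, table, source, default_city="Unknown"):
    """Fill missing 'city' values (assumed cleaning rule) and record what was done."""
    cleaned, filled = [], set()
    for row in rows:
        row = dict(row)
        if row.get("city") is None:
            row["city"] = default_city
            filled.add("city")
        cleaned.append(row)
    repository.append(
        ExtractMetadata(table, source, datetime.now(timezone.utc), sorted(filled))
    )
    return cleaned

rows = [{"id": 1, "city": "Chennai"}, {"id": 2, "city": None}]
cleaned = extract_with_metadata(rows, table="customer", source="crm_db")
```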

A metadata repository should contain the following:

A description of the structure of the data warehouse, which includes the

warehouse schema, view, dimensions, hierarchies, and derived data definitions, as

well as data mart locations and contents.

Operational metadata, which include data lineage (history of migrated data

and the sequence of transformations applied to it), currency of data (active, archived,

or purged), and monitoring information (warehouse usage statistics, error reports,

and audit trails).

The algorithms used for summarization, which include measure and

dimension definition algorithms, data on granularity, partitions, subject areas,

aggregation, summarization, and predefined queries and reports.

The mapping from the operational environment to the data warehouse, which

includes source databases and their contents, gateway descriptions, data partitions,

data extraction, cleaning, transformation rules and defaults, data refresh and purging

rules, and security (user authorization and access control).

Data related to system performance, which include indices and profiles that

improve data access and retrieval performance, in addition to rules for the timing and

scheduling of refresh, update, and replication cycles.

Business metadata, which include business terms and definitions, data

ownership information, and charging policies.

Schema Design:

Stars, Snowflakes, and Fact Constellations: Schemas for Multidimensional Databases

The entity-relationship data model is commonly used in the design of relational databases, where a database schema consists of a set of entities and the relationships between them. Such a data model is appropriate for on-line transaction processing.

A data warehouse, however, requires a concise, subject-oriented schema that

facilitates on-line data analysis. The most popular data model for a data warehouse is a

multidimensional model. Such a model can exist in the form of a star schema, a snowflake