EE378A Statistical signal processing, part A

Lecture 2 - 01/05/2011

Markov Triplets and Undirected Graphical Models

Lecturer: Tsachy Weissman Scribe: Katarina Van Heusen

Markov Triplets

Denition 1. The random variables X, Y , Z are said to form a Markov triplet, denoted as X Y Z, if X and Z are conditionally independent given Y . Thus it follows that X Y Z p(x, z|y) = p(x|y)p(z|y) x, y, z p(x|y, z) = p(x|y) p(z|x, y) = p(z|y) (1) (2) (3)

Lemma 2. The random variables X, Y , Z are said to form a Markov triplet, X Y Z, if and only if the joint PMF p(x, y, z) can be expressed as the product of two joint PMFs 1 (x, y) and 2 (y, z). That is to say X Y Z q , 2 such that p(x, y, z) = 1 (x, y)2 (y, z) Proof To prove that X Y Z q , 2 such that p(x, y, z) = 1 (x, y)2 (y, z) we note that X Y Z p(x, y, z) = p(y)p(x, z|y) = p(y)p(x|y) p(z|y)

1 (x,y) 2 (y,z)

(4)

(5) (6) (7)

= 1 (x, y)2 (y, z) To prove that if 1 2 such that p(x, y, z) = 1 (x, y)2 (y, z) X Y Z. p(x|y, z) = = p(x, y, z) p(y, z) p(x, y, z) p(x , y, z)

x

(8) (9)

1 (x, y)2 (y, z) 1 (x , y)2 (y, z)

x

(10)

1 (x, y) 1 (x , y)

x

(11) (12)

p(x|y, z) = p(x|y) X Y Z

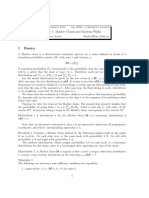

Figure 1: Graph G in Example 4.

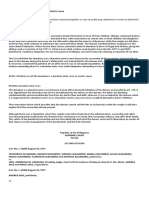

Figure 2: Graph G in Example 6.

Undirected Graphs

Denition 3. An undirected graph is a pair G = (V, E) where V is a set of vertices, or nodes, and E is a set of edges, which are two element subsets of V . An adjacent vertex of a vertex v V is a vertex connected to v by an edge. The neighborhood of v denoted by N (v) is the set of all vertices that are adjacent to v. Example 4. Consider a graph G = ({1, 2, 3, 4, 5, 6}, {{1, 2}, {1, 5}, {2, 5}, {2, 3}, {3, 4}, {4, 5}, {4, 6}}) which is shown

V E

in Figure 1. The neighborhood of vertex 2 is N (2) = {1, 3, 5}.

Graph induced by a collection of subsets

Denition 5. Given a set of vertices V and a collection {Vj } of subsets of V, the induced graph is G = (V, E) where E = {{v, v } V : v, v Vj for some j}. Example 6. Consider a set of vertices V = {1, 2, 3, 4, 5, 6} and a collection {Vj }3 where V1 = {2, 3, 4, 5}, V2 = j=1 {4, 5, 6}, V3 = {1, 2}. The induced graph is shown in Figure 2. The induced graph is G = ({1, 2, 3, 4, 5, 6}, {{1, 2}, {2, 3}, {2, 4}, {2, 5}, {3, 4}, {3, 5}, {4, 5}, {4, 6}, {5, 6}})

Figure 3: Graph G in Example 8.

Graph Induced by a Factorization of a Joint PMF

Let us dene a set of random variables X(I) = {X(i)}iI where I is called the index set. For example I = {1, 2, ..., n} would be a natural index set for a sequence of random variables, and I = {1, 2, ..., m}{1, 2, ..., n} would be a natural index set for a two dimensional set such as an image. Likewise, for a subset B I we dene X(B) = {X(i)}iB . Denition 7. Suppose that the PMF of X(I) can be expressed as p(x(I)) =

jJ

j (x(Bj )) where {Bj } is

a collection of subsets Bj I, then the graph induced by this factorization is the same as the graph induced by the subsets {Bj }jJ , referring to Denition 5. This is equivalent to saying that the graph induced by the factorization p(x(I)) = j (x(Bj )) is dened

jJ

as G = (I, E) where E = {{v, v } I : j such that v, v Bj }. That is to say that an edge {v, v } exists in the induced graph G if and only if both random variables {v, v } exist in at least one factor of the joint PMF. Example 8. Let {X(i)}1i6 , I = {1, 2, 3, 4, 5, 6}, and let the joint PMF be factored as p(x(1), x(2), x(3), x(4), x(5), x(6)) = 1 (x(1), x(4))2 (x(2), x(3))3 (x(1), x(2), x(5))4 (x(3), x(6))

4

(13)

=

j=1

j (x(Bj )) where B1 = {1, 4}, B2 = {2, 3}, B3 = {1, 2, 5}, B4 = {3, 6} (14)

The induced graph is shown in Figure 3 Theorem 9. Suppose the PMF of X(I) has the factorization p(x(I)) =

jJ

j (x(Bj )) and let N (i) denote

the neighborhood of i in the induced graph, G. Then X(i) X(N (i)) X(I \ ({i} N (i))) i. Remark: If X(I) saties X(i) X(N (i)) X(I \ ({i} N (i))) i I then it is said to be a Markov Random Field with respect to graph G.

�Proof p(x(I)) =

jJ

1 (x(Bj )) j (x(Bj ))

j:iBj 1 (x(i),x(N (i))) j:iBj / 2 (x(I\{i}))

(15) j (x(Bj )) (16)

= 1 (x(i), x(N (i)))2 (x(I \ {i})) = 1 (x(i), x(N (i)))2 (x(N (i)), x(I \ ({i} N (i)))) By Lemma 2 we have X(i) X(N (i)) X(I \ {i} N (i)).

(17) (18)

References

[1] M. I. Jordan, Graphical Models, Statist. Sci., vol. 19, no. 1, pp. 140-155, 2004. [2] D. Koller and N. Friedman, Probabilistic Graphical Models: Principles and Techniques, MIT Press, 2009.