100%(1)100% found this document useful (1 vote)

8K views166 pagesCS3551 Distributed Computing

notes

Uploaded by

lakshmivenkatesh8874Copyright

© © All Rights Reserved

We take content rights seriously. If you suspect this is your content, claim it here.

Available Formats

Download as PDF or read online on Scribd

100%(1)100% found this document useful (1 vote)

8K views166 pagesCS3551 Distributed Computing

notes

Uploaded by

lakshmivenkatesh8874Copyright

© © All Rights Reserved

We take content rights seriously. If you suspect this is your content, claim it here.

Available Formats

Download as PDF or read online on Scribd

You are on page 1/ 166

2106)

‘SUBJECT CODE : CS3551

Striclly os per Revised Syllobus of

ANNA UNIVERSITY

Choice Based Credit System (CBCS)

Semester - V (CSE/ IT / AlaDS)

DISTRIBUTED COMPUTING

Iresh A. Dhotre

ME. (Information Technology)

| Ex-Faculty, Sinhgad College of Engineering

| Pune

=} TECHNICAL

© PUBLICATIONS

KKK

889

Distributed Computing - (CS3551)

UNIT. INTRODUCTION

Introduction : Definition-Relation to Computer System Components ~ Motivation ~ Message -Passing

Systems versus Shared Memory Systems — Primitives for Distributed Communication ~ Synchronous

versus Asynchronous Executions - Design Issues and Challenges; A Model of Distributed

Computations : A Distributed Program - A Model of Distributed Executions ~ Models of

Communication Networks ~ Global State of a Distributed System. (Chapter - 1)

UNITIT LOGICAL TIME AND GLOBAL STATE

Logical Time : Physical Clock Synchronization : NTP — A Framework for a System of Logical Clocks

= Scalar Time ~ Vector Time; Message Ordering and Group Communication : Message Ordering

Paradigms — Asynchronous Execution with Synchronous Communication ~ Synchronous Program

Order on Asynchronous System — Group Communication ~ Causal Order ~ Total Order; Global State

and Snapshot Recording Algorithms : Introduction — System Model and Definitions - Snapshot

Algorithms for FIFO Channels. (Chapter - 2)

UNITIM DISTRIBUTED MUTEX AND DEADLOCK

Distributed Mutual exclusion Algorithms : Introduction ~ Preliminaries - Lamport’s algorithm —

Ricart-Agrawala’s Algorithm — Token-Based Algorithms ~ Suzuki-Kasami’s Broadcast Algorithm;

Deadlock Detection in Distributed Systems : Introduction - System Model ~ Preliminaries - Models

of Deadlocks ~ Chandy-Misra-Haas Algorithm for the AND model and OR Model. (Chapter - 3)

UNITIV. CONSENSUS AND RECOVERY F

Consensus and Agreement Algorithms : Problem Definition ~ Overview of Results ~ Agréement in a

Failure-Free System(Synchronous and Asynchronous) ~ Agreement in Synchronous Systems with

Failures; Checkpointing and Rollback Recovery : Introduction — Background and Definitions — Issues

in Failure Recovery ~ Checkpoint-based Recovery — Coordinated Checkpointing Algorithm - -

Algorithim for Asynchronous Checkpointing and Recovery. (Chapter - 4)

UNITV CLOUD COMPUTING

Definition of Cloud Computing - Characteristics of Cloud - Cloud Deployment Models ~ Cloud

Service Models ~ Driving Factors and Challenges of Cloud — Virtualization - Load Balancing —

Scalability and3Elasticity — Replication’ ~ Monitoring - Cloud Services and Platforms : Compute

Services ~ Storage Services ~ Application Services. (Chapter - 5)

ae (iv)

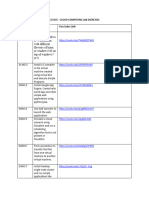

TABLE OF CONTENTS

ean ea]

Chapter-1 Introduction (1-1) to (1 - 30)

11

1.2

13

14

1.5// Primitives for Distributed Communication

1.6

a

18

Definition...

1.1.1 Disadvantages of DS

1.1.2 Difference between Parallel Computing and Distributed Computing.

Relation to Computer System Components...

Motivation

1.3.1 Need of Distributed System...

1.3.2 Focus on Resource Sharing,

Message-Passing Systems Versus Shared Memory Systems

1.4.1 Emulating Message - Passing Systems on a Shared Memory Systems.

1.5.1 Blocking / Non-blocking, Synchronous / Asynchronous Primitives.

Synchronous versus Asynchronous Executions...

Design Issues and Challenges

1.7.1 Challenges from System Perspective.

1.7.2 Challenges

1.7.2.1 Heterogeneity.

1.7.2.2 Openness

1.7.2.3 Security

1.7.2.4 Scalability

1.7.2.5 Failure Handling.

1.7.2.6 Concurrency.

1.7.2.7 Transparency .

1.7.3 Application of Distributed Computing and Challenge:

A Model of Distributed Computations : A Distributed Program

1-24

wv)

1.9 AModel of Distributed Executions. 1-24

1-25

1-26

1.12 Two Marks Questions with Answers 1-27

Chapter-2 — Logical Time and Global State (2 - 1) to (2 - 38)

1.10 Models of Communication Networks

1.11 Global State of Distributed System

2.1 Clock Events and Process State...

2.1.1 Physical Clock

2.1.2 Clock Skew and Drift Compensating ..

2.

Logical Time....

2.2.1 Event Ordering.

2.22 Lamport Timestamp..

2.2.3 Vector Timestamp.

2.3 Physical Clock Synchronization : NTP...

2.3.1 Synchronization in a Synchronous System.

2.3.2 Cristian's Method for Synchronizing Clocks...

2.3.2.1 Christian's Algorithm.

2.3.3. Berkeley Algorithm.

2.3.4 Network Time Protocol

(2.3.4.1 Localized Averaging Distributed Algorithms.

2.4 A Framework for a System of Logical Clocks.

2.5 Scalar Time...

2.6 Vector Tims

2d Message Ordering Paradigms...

2.8 Asynchronous Execution with Synchronous Communication..

2.8.1 Execution Realizable with Synchronous Communication

2.8.2. Hierarchy of Message Ordering Paradigms.

2.9 Synchronous Program Order on Asynchronous System ..

2.96 Group Communication ..

wi)

2.10.1 One to Many Communication.

2.10.2 Many-to-One Communication ..

2.10.3 Many-to-Many Communication.

2.10.3.1 Message Ordering.

2.11. Causal Order

2.11.1 Raynal-Schiper-Toueg Algorithm

2.1% Total Order ..

2.12.1. Three Phase Distributed Algorithm.

2.13 Global State and Snapshot Recording Algorithms

2.13.1. System Modi

2.13.2. Consistent Global State

2.46 Snapshot Algorithms for FIFO Channels.

2.14.1 Chandy-Lamport Algorithm,

2.14.2. Property of the Recorded Global State ...

2.15 Two Marks Questions with Answers

sug ing

Chapter-3 _ Distributed Mutex and Deadlock (3 - 1) to (3 - 24)

3.1 Distributed Mutual Exclusion Algorithms : Introduction

3.2 Preliminaries

3.2.1. System Model.

3.2.2. Requirement of Mutual Exclusion,

3.2.3 Performance Metrics

3.3 Lamport's Algorithm ..

3.4 _ Ricart-Agrawala’s Algorithm

3.5 Token-Based Algorithm:

3.5.1 Suzuki-Kasami's Broadcast Algorithm

3.6 Deadlock Detection in Distributed Systems : Introduction

3.6.1 Necessary Condition

3.7 System Model..

(iy

3.7.1 Wait for Graph...

3.8 Preliminaries : Deadlock Handling Strategies ..

3.8.1 Deadlock Prevention...

3.8.2 Dead Avoidance.....

3.83 Deadlock Detection

3.9 Models of Deadlocks

3.9.1 The Single Resource Model

3.9.2. The AND Model..

3.9.3 The OR Model

3.9.4 The AND-OR Model

3.10 Chandy-Misra-Haas Algorithm for the AND Model ....

3.11 Chandy-Misra-Haas Algorithm for the OR Model

3,12 Two Marks Questions with Answers

Chapter-4 Consensus and Recovery (4 - 1) to (4 - 28)

4-2

4-2

4-3

4-3

4.1 Consensus and Agreement Algorithms : Problem Definition ...

4.2 Byzantine Agreement Problem ..

4.2.1 Consensus Problem...

4.2.2 _ Interactive Consistency Problem ..

4.3 Overview of Results

4.4 Solution to Byzantine Agreement Problem..

4.4.1 Impossible Scenario

44,2 Lamport-Shostak-Pease Algorithm

4.5 Agreement in a Failure-Free System (Synchronous and Asynchronous)

4,6 Agreement in Synchronous Systems with Failures

4.7 _ Introduction of Check-pointing and Rollback Recovery ....

4.8 Background and Definitions ..

48.1 System Model...

4.8.2 Local Checkpoint...

(wit)

4.9 Consistent Set of Checkpoints ..

4.10

41

4.12

4.13

4.14

4.9.1. Synchronous Checkpointing, and Recovery...

AIA Checkpointing Agadthta co...

4.9.2 The Rollback Recovery Algorithen ....

4.9.3 Message Type

Issues in Failure Recovery...

4.10.1 Basic Concept...

Checkpoint-based Recovery...

4.11.1 Difference between Uncoordinated, Coordinated

and Cornmunication Induced Check Pointing, ..rcnnerce

Coordinated Checkpointing Algorithm.

Algorithm for Asynchronous Checkpointing and Recovery ...

Two Marks Questions with Answers...

Chapter-5 Cloud Computing

5.1

5.2

5.3

5.4

5.5

5.6

Definition of Cloud Computing...

5.1.1 Cloud Components...

5.1.2 Pros and Cons of Cloud Computing...

5.1.3 Application of Cloud Computing...

Characteristics of Cloud.

Cloud Deployment Models.

5.3.1° Difference between Public and Private Cloud...

Cloud Service Models.

5.4.1 Software as a Service (SaaS)...

5.4.2 Platform asa Service (PaaS).

5.4.3 _ Infrastructure as a Service (Iaa%)....

5.4.4 Difference between laaS, PaaS and Saa6......

Driving Factors and Challenges of Cloud..

Virtualization ....

(ix)

5.6.1 Hypervisor...

5.6.2 Para-Virtualization ..

5.6.3 Full-Virtualization.....

5.6.4 _ Difference between Full-Virtualization and Para-Virtualization ....

5.6.5 _ Difference between Cloud and Virtualization.

5.6.6 Pros and Cons of Virtualization ..

5.7 Load Balancing.

5.8 Scalability and Elasticity ...

5.9

5.10

5.11 Cloud Services and Platforms : Compute Services...

5.11.1 Amazon Elastic Compute Cloud

5.11.2 Windows Azure ..

5.12 Storage Service

5.12.1 Google Cloud Storage.

5.1:

&

Application Services ..

5.13.1 Application Framework and Runtime : Google App Engine.

5.13.2 Queuing Service : Amazon Simple Queue Servic

5.14 Two Marks Questions with Answers ..

Solved Model Question Paper (M - 1) to (M - 2)

Cc)

sas

OQ

UNIT I

Introduction

Syllabus

Introduction : Definition-Relation to Computer System Components - Motivation - Message -

Passing Systems versus Shared Memory Systems - Primitives for Distributed Communication -

Synchronous versus Asynchronous Executions - Design Issues and Challenges: A Model of

Distributed Computations : A Distributed Program - A Model of Distributed Executions - Models of

Communication Networks - Global State of a Distributed System.

Contents

1.1 Definition cece erect eee MADR, core tees seesses Marks 13

1.2 Relation to Computer System Components

1.3. Motivation

1.4 Message-Passing Systems Versus Shared

Memory Systems coven DOGe22, sees esses eee ees Marks 13

1.5 Primitives for Distributed Communication

"1.6 Synchronous versus Asynchronous Executions

1.7 Design Issues and Challenges . May-22, o-+ 00-0000 +++ Marks 13

1.8 A Model of Distributed Computations : A Distributed Program

1.9 ~ A Model of Distributed Executions

1.10 Models of Communication Networks

1.11 Global State of Distributed System

1.12 Two Marks Questions with Answers

a9

Distributed Computing 1-2 Introduction

EEE Definition

Definition of distributed systems :

¢( A distributed system is one in which components located at networked computers

communicate and co-ordinate their actions only by-pa

ing messages.

+ (A distributed system is collection of independent entities that co-operate to solve a

problem that cannot be individually solved)

* Tanenbaum’s definition : A distributed system is a collection of independent

computers that appears to its users a single coherent system.

DS can be characterized as a collection of mostly autonomous processors

communicating over a communication network. It having following features and

consequences nr a

. Concurrency :(The capacity of the system to handle shared resources can be

increased by adding more resources to the network. The p and q are concurrent if

either p can happen before q or q can happen before p, thus having interleaving

semantics.

2. No global clock : The only communication is by sending messages through a

network. Not possible to ‘synchronize many computers on a network and

guarantee synchronization over time, thus events are logically ordered. Not _

possible to have a process that can be aware of a single global state.

3. Independent failures : The programs may not_be able to. detect, whether the

network has failed or has become unusually slow. Running processes may be

unaware of other failures within context. Failed _pro

Both are due to processes running in isolation.

ssses_may_go_ undetected.

‘Autonomy and heterogeneity : The processors are loosely coupled in that they

have different speeds and each can be running a different OS.

Disadvantages of DS

1. Software : Difficult to develop software for distributed systems.

2. Network : Saturation, lossy transmissions.

3. Security : Easy access also applies to secret data.

4. Absence of global clock.

TECHNICAL PUBLICATIONS® - an up-hrust for knowledge

stributed Computing 1-3 Introduction

Difference between Parallel Computing

and Distributed Computing

Sr. No. Parallel computing Distributed computing

1 The goal of parallel computing has The goal of distributed computing is to

traditionally been to. pprovide provide convenience, where convenience.

performance, either in terms of includes high availability, reliability and

processor power or physical distributi

2 In parallel computation the interaction In distributed computation the

between processors is frequent. interaction is infrequent.

ally fine grained with low It is heavier weight.

4. Assumed to be reliable. Assumed to be unreliable.

5. Parallel computation values short Distributed computation values long up

execution time. time.

\

1. Explain how a parallel system differs front a distributed system.

4.2] Relation to Computer System Components

* Fig, 1.2.1 shows typical distributed system.

Memory

Fig. 1.2.1 Distributed system

* In distributed computing system, each node consists of a processor (CPU), local

memory and interface. Communication between any two or more nodes is only by

‘message passing because there is no common memory available.

© TECHNICAL PUBLICATIONS® - an up-thrust for knowledge

Introductio,

Distnbuted Computing 1-4 J

+ Distributed software is also termed as middleware, The distributed system uses q

Complexity of system design.

* Each computer has memory processing unit and the computers are connected by a

communication network. All the computers can communicate with each other

through LAN and WAN. The distributed system uses a layered architecture to

break down the complexity of system design.

« A distributed system is an information-processing system that contains a number

of independent computers that cooperate with one another over a communications

network in order to achieve a specific objective.

* Usually, distributed systems are asynchronous, i., they do not use a common

clock and do not impose any bounds on relative processor speeds or message

transfer times. Differences between the various computers and the ways in which

they communicate are mostly hidden from users.

‘© Users and applications can interact with a distributed system in a consistent ang

uniform way, regardless of where and when interaction takes place. Each host

executes components and operates a distribution middleware.

* Middleware enables the components to co-ordinate their activities. Users perceive

the system as a single, integrated computing facility.

« A distributed computer system consists of multiple software components that are

on multiple computers, but run as a single system. The computers that are in a

distributed system can be physically close together and connected by a local

network or they can be geographically distant and connected by a wide area

network.

* A distributed system can consist of any number of possible configurations, such as

mainframes, personal computers, workstations, minicomputers and so on.

Motivation

1. Economics : A collection of microprocessors offer a better price/performance than

mainframes. Low price /performance ratio is the cost effective way to increase

computing power.

2. Speed : A distributed system may have more total computing power than a

mainframe. .

3. Distributed systems can be extended through the addition of components, thereby

providing better scalability compared to centralized systems.

4, Inherent distribution : Some applications are inherently distributed e.g. a

supermarket chain.

:

e TECHNICAL PUBLICATIONS® - an up-thrust for knowledge

aE:

Distributed Computing 1-5 Introduction

5.

eppe

Reliability : If one machine crashes, the system as a whole can still survive. It

gives higher availability and improved reliability.

. Incremental growth : Computing power can be added in small increments.

Need of Distributed System

Resource sharing is main motivation of the distributed system. The term “resource”

is a rather abstract one, but it best characterizes the range of things that can

usefully be shared in a networked computer system.

Resources may be the software resources or hardware resources. Printers, disks,

CDROM and data are the example of software and hardware resources. Sharing of

resource extends from hardware components such as disks and printers to

software - defined entities siich as files, databases and data objects of all kinds.

It also includes the stream of video frames and audio connection that a mobile

phone call represents. A resource manager is a Software module that manages a

set of resources of a particular type.

Primary requirement of distributed system are as follows :

1. Fault tolerance 2. Consistency of replicated data

3. Security 4, Reliability 5. Concurrent transactions

Focus on Resource Sharing

The term resource is a rather abstract one, but it best characterizes the range of

things that can usefully be shared in a networked computer system.

Resources in a distributed system are encapsulated within one computer and can

only be accessed from other computers by communication. For effective sharing

each resource must be managed by a program called resource manager offering a

‘communication interface enabling the resource being accessed, manipulated and

updated consistently and reliably.

Equipments are shared to reduce cost. Data shared in database or web pages are

high-level resources which are more significant to users without regard for the

server or servers that provide these.

Types of resources :

. Hardware resource : Hard disk, printer, camera, scanner

. Data : File, database, web page.

Service ; Search engine

ICATIONS® - an up-thrust for knowledge

Distributod Computing 16 Introduction

© Patterns of resource sharing vary widely in their scope and in how closely users

work together

1. Search Engine : Users need no contact between users,

2. Computer Supported Co-operative Working (CSCW) : Users cooperate directly

action are determined by the

share resources. Mechanisms to coordinate us

pattern of sharing and the geographic distribution,

© For effective sharing, each resource must be managed by a program that offers a

communication interface enabling the resource to be accessed and updated reliably

and consistently.

# Service: Manages a collection of related resources and presents their functionalities

to users and applications,

© Server is basically storage of resources and it provides services to the

authenticated clients. It is running program on a networked computer. Server

accepts requests from client and performs a service and responds to request.

Example is Apache server and IIS server.

+ The complete interaction between server machine and client machine, from the

point when the client sends its request to when it receives the server's response, is

called a remote invocation.

* Resources may be encapsulated as objects and accessed by client objects. In this

case a client object invokes a method upon a server object.

Hardware and software resource sharing

+ Examples of hardware resources that can be usefully be shared and examples of

their sharing.

Hardware resources :

1. CPU:

a. Computing server : It executes processor-intensive applications for clients:

b. Remote object server : It executes methods on behalf of clients.

c. Worm program : It shares CPU capacity of desktop machine with the local

user. 4g

2, Memory : Cache server holds recently-accessed web pages in its RAM, for faster

access by other local computers.

3. Disk : File server, virtual disk server, video on demand server.

4-Screen : Network window systems, such as Xt; allow Processes in remote

“computers to update the content of windows.

TECHNICAL PUBLICATIONS® - an up-thnust for knowledge

Bene

eSeSSSSSaa——eeeaeEEtt—t

Distributed Computing 17 Introduction

5.

Printer + Networked printers accept print jobs from many computers and

managing, them with a queuin

Software rosourcos :

1.

2

3.

6.

Web page : Web servers enable multiple clients to share

ead-only page content

File : File servers enable multiple clients to share read-write files.

Object + Possibilities for software objects are limitless. Shared whiteboard, shared

diary and room booking system are examples of this type.

Database : Databases are intended to record the definitive state of some related

sets of data, They have been shared ever since multi-user computers appeared.

‘They include techniques to manage concurrent updates.

Newsgroup content : The netnews system makes read-only copies of the

recently-posted news items available to clients throughout the Internet.

Video/audio stream : Servers can store entire videos on disk and deliver them at

playback speed to multiple clients simultaneously.

EI Message-Passing Systems Versus Shared Memory Systems

OE

Message passing

Two processes communicate with each other by passing messages. Message

passing is direct and indirect communication. Indirect communication uses

mailbox for sending receiving message from other process.

Message passing system requires the synchronization and communication between

the two processes. Message passing used as a method of communication in

microkernels.

Message passing systems come in many forms. Messages sent by a process can be

either fixed or variable size. The actual function of message passing is normally

provided in the form of a pair of primitives.

a) Send (destination_name, message)

b) Receive (source_name, message).

Send primitive is used for sending a message to destination. Process sends

information in the form of a message to another process designated by a

destination. A process receives information by executing the receive primitive,

which indicates the source of the sending process and the message.

TECHNICAL PUBLICATIONS® - an up-thrust for knowledge

Distributed Computing

1-8

Shared memory

«Shared memory systems are those in which there is common shared addres

em. Communication among, processors takes place via” shi

bles for synchronization among the processor.

throughout the sys

data variables and contol va

shlroduction

A region of memory that is shared by co-operating processes is established,

Processes can then exchange information by reading and writing data to the

shared region.

Shared memory allows maximum speed and convenience of communication, as it

can be done at memory speeds when within a computer.

+ Shared memory is faster than message passing, as message-passing systems are

typically implemented using system calls and thus require

time-consuming task of Kernel intervention.

the more

Sr. No. ‘Message - passing systems Shared memory systems

1, | Platfornis that exchange messaging for | Platforms that provide a shared

sharing data are called message passing | memory for data sharing are called

| platforms. multiprocessors.

2. | Message passing is useful for sharing | In shared memory make sure that the

small amounts of data so that conflicts | processes are not writing to the same

need not occur. location simultaneously.

3 [4s message passing the communication | It follows a faster communication

| is slower when compared to shared strategy when compared to message

memory technique. passing technique.

4 In this message passing process model, | If the process wishes to initiate

the processes communicate with others

by exchanging messages.

communication and has data to share,

create a shared memory region in its

\ddress space. f

This technique can be used in

This cannot be used to heterogeneous

heterogeneous computers. computers.

T I I |

a Po Pa Interconnection network

[1 I

Interconnection network

TECHNICAL PUBLICATIONS® - an up-thrust for knowledge

Distributed Computing 1-9 Introduction

Emulating Message - Passing Systems on a Shared Memory

Systems

* Shared address space is divided into two disjoint parts and assigned to each

processor. Send and receive operations are implemented by using writing and

reading the information from receiver and sender processor.

* Synchronization primitives like write and read operation are controlled by

sender/receiver processor.

+ APP; message passing can be emulated by a write by P, to the mailbox and

then a read by P; from the mailbox. |

University Question

1, Illustrate the difference between message passing and shared memory process communication

model Ea

communication primitives denoted by Receive( ).

* Message passing primitive commands

SEND (msg, dest)

RECEIVE (src, buffer)

+ Send primitives uses two options for sending data : Buffered and unbuffered.

* In buffered options, user data is copied in the kernel buffer. In unbuffered options, |

the data gets copied directly from the user buffer onto the network.

¢ The communication of a message between two processes implies some level of

synchronization between the two processes. Sender and receiver can be blocking

or nonblocking. Three combinations are possible using blocking and nonblocking.

a.‘ Blocking send, blocking receive.

b. Nonblocking send, blocking receive.

c. Nonblocking send, nonblocking receive.

1. Blocking send, blocking receive : Both the sender and receiver are blocked

_ until the message is delivered. This is called Rendezvous. This combination

reen processes.

a

Distributed Computing 1-10 Introduction

2, Nonblocking send, blocking receive : Sender may continue on, the receiver is

blocked until the requested message arrives. A process that must receive a

message before it can do useful work needs to be blocked until such message

arrives. An example is a server process that exists to provide a service or

resource to other processes.

3. Nonblocking send, nonblocking receive : Sending process sends the message

and resumes the operation. Receiver retrieves either a valid message or a null

ie. neither party is required to wait.

« Processor synchrony : Processor synchrony indicates that all the processors

execute in lock step with their clocks synchronized.

Synchronous versus Asynchronous Executions

Synchronous execution :

Main features : A system in which the following bounds are defined =

1. Lower and upper bounds on execution time of processes can be set.

2, Transmitted messages are received within a known bounded time.

3. Drift rates between local clocks have a known bound.

Important consequences :

1. Ina synchronous distributed system there is a notion of global physical time (with

a known relative precision depending on the drift rate).

2. Only synchronous distributed systems have a predictable behaviour in terms of

timing. Only such systems can be used for hard real-time applications.

3. In a synchronous distributed system it is possible and safe to use timeouts in

order to detect failures of a process or communication link.

+ It is difficult and costly to implement synchronous distributed systems.

Asynchronous executions :

+ Many distributed systems (including those on the Internet) are asynchrorious.

* No bound-on process execution time ie. nothing can be assumed about speed,

load, and reliability of computers.

* No bound-on message transmission delays i.e. nothing can be assumed about

speed, load, reliability of interconnections.

* No bounds on drift rates between local clocks.

Important consequences :

1. In an asynchronous distributed system there is no global physical time. Reasoning

can be only in terms of logical time.

TECHNICAL PUBLICATIONS” - an up-thust for knowledge

VS we

Distnbuted Computing 1-14 Introduction

2. Asynchronous distributed systems are unpredictable in terms of timing,

3. No timeouts can be used.

* Asynchronous systems are widely and successfully used in practice. In practice

timeouts are used with asynchronous systems for failure detection. However,

additional measures have to be applied in order to avoid duplicated messages,

duplicated execution of operations, etc.

Sr. No. Synchronous execution Asynchronous execution

L Synchronous execution means the first. Asynchronous execution means a

task in a program must finish second task can begin executing in

processing before moving on to parallel, without waiting for an earlier

executing the next task. task to finish.

2. Lower and upper bounds on execution No bound-on process execution time.

time of processes can be set.

3! Transmitted messages are received No bound-on message transmission

within a known bounded time. delay

Drift rates between local clocks have a No bounds on drift rates between local

known bound. clocks.

Design Issues and Challenges

Challenges from System Perspective

* Communication mechanisms : /This task involves designing appropriate

mechanism for communication among the processes in the network For example :

Remote Procedure Call (RPC), Remote Object Invocation (ROM), message-oriented

vs. stream-oriented communication. - ba 4 oek”’

+ Processes : Issue involved are code migration, process/thread managem:

clients and servers, design of software and mobile agents.

* Naming : Easy to use identifiers needed to locate resources and processes

transparently and scalable.

7

* Synchronization lechanisms for synchronization, or soordination among the

5.00%, ncronization, OF LS mong

Processes are essential) Mutu exclusion is the classical ~ example of

synchronization, but many other forms of synchronization, such as leader election /

are also needed.

* Data storage and access : Various schemes for data storage, searching and lookup

should be fast and scalable across network. Revisit file system design.

|

ee Eee OEE

Distributed Computing 1-15 Introduction

« Mobile code systems address a wide range of needs and requirements, such as

service customization, dynamic extension of application functionality, autonomy,

fault tolerance and support for disconnected operations.

BEAD Scalabitity

A system is said to be scalable if it can handle the addition of users and resources

without suffering a noticeable loss of performance or increase in administrative

complexity.

+ The ability to accommodate any growth in the future be it expected or not. |

Distributed system architectures achieve scalability through employing more than

one host. Distributed systems can be scalable because additional computers can be

added in order to host additional components.

1. In size :-Dealing with large numbers of machines, users, tasks.

2, In location : Dealing with geometric distribution and mobility.

3, In administration : Addressing data passing through different regions of

ownership.

+ The design of scalable distributed systems presents the following challenges :

1. Controlling the cost of resources.

2. Controlling the performance loss.

3. Preventing software resources from running out.

4, Avoiding performance bottlenecks.

* Controlling the cost of physical resources ie. servers and users.

* Controlling the performance loss : DNS hierarchic structures scale better than

linear structures and save time for access structured data.

* Preventing software resources running out : Internet 32 bits addresses run out

soon. 128 bits one gives extra space in messages.

* Avoiding performance bottlenecks : DNS name table was kept in a single master

file partitioning between servers.

* Example : File system scalability is defined as the ability to support very large file

systems, large files, large directories and large numbers of files while still

providing I/O performance. Google file system aims at efficiently and reliably

managing many extremely large files for many clients, using commodity hardware.

* Various techniques such as replication, caching and cache memory management

' and asynchronous processing help to achieve scalability.

Distributed Computing 1012 Introduction

— sot

Acpaocluctoy Cormething That ew Ar

* Consistency and replication :'To avoid bottleneck, to provide fast access

and provide replication for fast a

es to data

cess, scalability, Require consistency

manag

nent among, replicas,

* Distributed systems security : Secure channels, access control, key management

(key generation and key distribution), authorization, secure group management are

the various method used to provide security.

Challenges

* Designing the distributed systems does not come for free. Some challenges need to

be overcome in order to get the ideal systems, Design issues and challenges of

distributed systems are as follows :

1. Heterogeneity 2, Openness 3. Security

4. Scalability 5. Failure handling 6, Concurrency

7. Transparency

Heterogeneity

* Modem distributed systems are (highly heterogeneous in many dimensions,

including available bandwidth, processor speed, disk capacity, security, failure

rate, and pattem of failures. It applies to all the following :

1. Computer networks : LAN, wireless network, satellite link

Computer hardware devices : Laptop, computer, mobiles phones, tablets

3. Operating systems : Linux, UNIX, Windows

4, . Programming languages : C/C+, Java, PHP

5. Different roles of software developers, designers, system managers

* There may be many different representations of data in the system. This might

include/different representations for integers, byte streams, floating point numbers,

and character sets, auvanged

Most of the ats can be grumfaled fom one eystem to anather without loxint

significance.) Attempts to provide a universal canonical form of information is

lagging.

* The integration of heterogeneous components implies the construction of

distributed systems. fn a distributed system, heterogeneity is almost unavoidable,

as different components may require different implementation technologi

Middleware

« (Middleware is a software layer that provides a programming ebstrection as well

as masking the heterogeneity of the underlying platform. E.g., CORBA, DCOM,

Java RMI, etc.)

TECHNICAL PusuicaTions® = an up-thrust for knowledge

Distribulod Computing 1-13 Introduction

© Middleware serves to hide both these aspects by providing uniform, standard,

high-level interfaces to the application developers and. integrators, so. that

applications can be casily composed, reused, ported, and made to interoperate.

Middleware services provide common services to perform various general purpose

functions. Fig. 1.7.1 shows position

of middlewar [Middleware Application software

software resicles above the network

and below — the application’ | | | | | | | | | 1

software.) ~ ~ APL for standardized, high-lovel sorvicos

«/ Mobile code is used to refer to Middleware

program code that canbe

transferred from one computer to [Td ft | |

another and run at the destination: Distributed, heterogeneous hardware nodes

SS aes eet

computer) Example of mobile code

is Java applets. Fig. 1.7.1 Position of middlowaro

© Code suitable for running on one computer is not necessarily suitable for running

on another because executable programs are normally specific both to the

instruction sct and to the host operating system. Middleware should make the

network transparent to the applications and_end_users.

* Users and applications should be able to perform the_same operations across the

network that they can perform locally. Middleware should hide the details of

computing hardware, OS, software components across networks.

FA Openness

* Openness means that the system can be easily extended and modified. Openness

refers to the ability to plug and play. You can, in theory, have two equivalent

services that follow the same interface contract, and interchange one with the

other.

he integration of new components means that they have to be able to

communicate with some of the components that already exist in the system,

Openness and distribution are related. (Distributed system components achieve

openness by communicating using well-defined intecfaced) fet of evel! -efies nd

© (If the well-defined interfaces for a system are published, it is easier for developers

to add new features or replace sub-systems in the future. )

* Open systems can easily be extended and modified. New components can be

integrated ie existing, components.

Feu

seeenee On)

Distributed Computing 1-14

« (Differences in data representation or interface types on different processors have to

be resolved. Openness and distribution are related to each other.“ System

components need to have well-defined and well-documented interfaces.)

It can be constructed from heterogeneous hardware and software. Openness is

concerned with extensions and improvements of distributed systems. Detailed

interfaces of components need to be published. New components have to be

integrated with existing components.

ure so that new components can be

« Che system needs to have a stable archi

easily integrated while preserving previous investments. )

* An open distributed system offers services according to standard rules that

describe the syntax and semantics of those services.

Security

* Security becomes even more important in a distributed system. Authentication,

authorization, digital signatures, non-repudiation, encryption, and privacy become

major issues in the distributed system.

* The four basic goals of a security system are to protect information, to detect an

intrusion, to confine the security breach, and to repair the damage and return the

system to a known stable and secure state.

«Security for information resources has ‘three components :

1. Confidentiality : Protection against disclosure to unauthorized individuals, e.g.

ACL in unix file system.

2. Integrity : Protection against alteration or corruption, e.g. checksum.

3. Availability : Protection against interference with the means to access the

resources, e.g. Denial of service.

* Encryption provides protection of shared resources, keeps sensitive information

secret when transmitted, Security challenges that are not yet fully met :

1. Denial of service attacks

2. Security of mobile-code.

* A denial-of-service attack is an attempt to make a computer or network resource

unavailable to its intended users.

* Security of mobile code : Mobile code systems are conceived to operate in large

scale settings where networks are composed of heterogeneous hosts, managed by

different authorities with different levels of trust and connected by links with

different bandwidths.

TECHNICAL PUBLICATIONS” - an up-thrust for knowledge

Distributed Computing 1-15 Introduction

Mobi

service customi

code systems address a wide range of needs and requirements, such as

zation, dynamic extension of application functionality, autonomy,

fault tolerance and support for disconnected operations.

Scalability

A system is said to be scalable if it can handle the addition of users and resources

without suffering a noticeable loss of performance or increase in administrative

complexity.

The ability to accommodate any growth in the future be it expected or not.

Distributed system architectures achieve scalability through employing more than

one host. Distributed systems can be scalable because additional computers can be

added in order to host additional components.

1._In size : Dealing with large numbers of machines, users, tasks.

2, In location : Dealing with geometric distribution and mobility.

3, In administration : Addressing data passing through different regions of

ownership.

The design of scalable distributed systems presents the following challenges :

1. Controlling the cost of resources

2. Controlling the performance loss.

3. Preventing software resources from running out.

4, Avoiding performance bottlenecks.

Controlling the cost of physical resources i.e. servers and users.

Controlling the performance loss : DNS hierarchic structures scale better than

linear structures and save time for access structured data.

Preventing software resources running out : Internet 32 bits addresses run out

soon. 128 bits one gives extra space in messages.

Avoiding performance bottlenecks : DNS name table was kept in a single master

file partitioning between servers.

Example : File system scalability is defined as the ability to support very large file

systems, large: files, large directories and large numbers of files while still

providing I/O performance. Google file system aims at efficiently and reliably

managing many extremely large files for many clients, using commodity hardware.

Various techniques such as replication, caching and cache memory management

and asynchronous processing help to achieve scalability.

TECHNICAL PUBLICA HONS? an ups for knowledge

Distributod Computing

Introduction

Scaling techniques

1.

x

3.

Hiding communication — late:

Examples would be asynchronous

communication as well as pushing code down to clients (eg. Java applets and

Javascript).

Distribution : Taking a component, splitting into smaller parts, and subsequently

spreading them across the system.

. Replication : Replicating components increases availability, helps balance the load

leading to better performance, helps hide latencies for geographically distributed

systems. Caching is a special form of replication.

Ey Failure Handling

When faults occur in hardware or software, programs may produce incorrect

results or they may stop before they have completed the intended computation.

Failure handling is difficult in distributed systems because failure is partial i.e.

some components fail while other continue to function.

Hardware, software and networks are not free of failures. Operations that continue

even in the presence of faults are referred to as fault-tolerant.

Distributed systems can maintain availability even at low levels of hardware

/software /network reliability.

Fault tolerance, that should not involve users or system administrators, is achieved

by recovery and replication of components.

Techniques for dealing with failures :

1. Detecting failures

2. Masking failures

3. Tolerating failures

4. Recovering from failures

5. Redundancy.

Detecting failures : Not all failures are detected but some of the failures can be

detected. For example : Corrupted data from file is detected by using checksum.

Detection of failure in remote crashed server in the internet is hard to detect.

Masking failures : Failures are hidden or made less sever. Examples of hiding

failures are :

1. Messages could be retransmitted.

2. Files can be duplicated on different place.

TECHNICAL PUBLICATIONS® - an upthnst for knowledge

Distributed Computing 1-17 Introduction

Tolerating failures : In the internet, client can be designed to tolerate failures

which generally involve the users tolerating them as well.

Recovery from failures : Recovery involves the design of software so that the state

of permanent data can be recovered or roll back after a server has crashed.

Redundancy : Services can be made to tolerate failures. Let us consider following:

examples :

. There should be at least 2 routes between any 2 routers in the internet.

2. In DNS, every name table is replicated in at least 2 servers.

. A database can be replicated in several servers. Clients are redirected from the

failure one to working one.

Concurrency

Components in distributed systems are executed

in concurrent processes. Fig. 1.7.2 shows concept Time

of concurrency.

There is a possibility that several clients will

attempt to access a shared resource at the same

time. Multiple users make requests on same

resource for read, write and update operation.

Each resource must be safe in a concurrent

environment.

Z4SCL

Any object that represents a shared resource in

a distributed system must be responsible for

ensuring that operates correctly in a concurrent Fig. 1.7.2 Concurrency

environment.

Transparency

Distributed systems should be perceived by users and application programmers as

a whole rather than as a collection of cooperating components. Transparency is a

key issue within distributed system.

Concept : Hide different aspects of distribution from the client. It is the ultimate

goal of many distributed system

er-level services. The’client uses these services

instead of hard coding the The service layer provides a service with a

certain Quality of Service.

TECHNICAL PUBI INS™ = an up-thrust for knowledge

Distbuted Computing 118 Introduction

# Transparency is an important goal but has to be considered together with all

other non-functional requirements and with respect to particular demands,

1. Location transparency : User using, resources need not know the loca

of

the resouree, Any request to retrieve or update data from any site” is

automatically forwarded by the system to the site or sites related) to” the

processing request, For example : URL

2. Acces 88 local and remote

ansparency

. Hyperlink in web page.

Using identical operations to acve

TWSOUTLLS, ©.

3. Concurrency transparency +

shared resourees without intert

everal processed operate. concurrently using,

i) i

renee with between them,

4. Replication transparency: Multiple instances. of resources to be used to

increase reliability: and performance without knowledge of the replicas by users.

or application prog:

ammers, eye web) cache.

5. Failure trar

the failure of hardware and software components, egy, email,

pareney : Users and applications to complete their tasks despite

6. Mobility transparency ¢ Movement of resources and clients within a system

without ativeting the operation of users and programs, e.g., mobile phone,

7. Performance transparency + Allows the system ( be reconfigured to improve

performance as loads vary,

8 Scaling transparency: Allows the system and applications to expand in seale

tem structure or the application algorithms

without change to the »

* Adva

tages of transparency :

* Easier for the user:

a. Doesn't have to bother with system topography,

b, Doesn't have to know about changes.

Rasier to understand,

* Easier for the programmer :

a, Doesn't have to bother with system topography,

b, Doesn't have to know about changes.

ce Easier to understand.

Disadvantages of transparency

a. Optimization cannot be done by programmer or user.

b, Strange bel

avior when the underlying system fails.

c. Underlying system can be very complete.

TECHNICAL PUBLICATIONS® . on uppthrust for knowledge

a

Distributod Computing -19 Introduction

Application of Distributed Computing and Challenges

41, Mobilo system

+ ‘The portability of the devices, such as laptop computers, PDA, mobile phone,

refrigerators, [together with their ability to. connect conveniently to networks in

different places, makes mobile computing possible,

Ubiquitous computing is the harnessing of many small cheap computational

devices that are present in user's physical environments, including the home, office

and elsewhere,

«Mobile devices are

1. Laptop compute

2. Handheld devices, including PDAs, cell phones, pagers, video cameras and

digital cameras.

3. Wearable devices, such as smart watches.

4, Devices embedded in appliances such as washing machines, hi-fi systems, cars.

© Mobile computing (nomadic computing)

1. People can still access resources while he is on the move or visiting places

other than their usual environment.

2

* Ubiquitous computing (pervasive computing) (1

1, The harnessing of many small, cheap computational devices those are present

in user's physical environments, including the home, office and elsewhere.

jon-aware computing : Utilize resources that are conveniently nearby.

2. It benefits users while they remain in a single environment such as home.

* Fig. 1.7.3 shows the portable and handheld devices in a distributed

(See Fig, 1.7.3 on next page)

© Mobile and ubiquitous computing raise significant system issues presents an

architecture for mobile computing.

* User has access to three forms of wireless connection ;

1, A laptop is connected to host's wireless LAN.

2. A mobile phone is connected to internet usi

ig WAP via a gateway,

3. A digital camera is connected to a printer over an intra-red link.

TECHNICAL PUBLICATIONS® - an up-thrust for knowledge

es. é

eS

Distributed Computing 1-20 Introduct,

Home

Internet

wrelers

Applicat

Fig. 1.7.3 Portable devices in DS

2. Pervasive computing

* Networking has become a pervasive resource and devices can be connected at any

time_and_any_place. The modem Internet is collection of various computer

networks.

** Computer network are of different types. Example of network includes a wide

range of wireless communication technologies such as WiFi, WiMAX, Bluetooth

and third-generation mobile phone networks(261)

+ Fig. 1.74 shows the typical portion of the internet.

* Programs running on the computers connected to it interact by passing messages,

employing a common means of communication.

TECHNICAL PUBLICATIONS® - an up-thrust for knowledge

E—e_e—«*""——

Distributed Computing 1-21 Introduction

diland Internet

SWI

C=) Prsvider

Satellite

Backbone

Fig. 1.7.4 Typical portion of Internet

+ fhe internet is a collection of large number of computer networks of many

different types>nternet communication mechanism is big technical achievement

arid it is possible’by using passing of messages. _

* The Internet is a yery large distributed system. The web is not equal to the

Internet. The implementation of the internet and services that it supports has

entailed the development of practical solutions to many distributed system issues.

. ( Internet service providers are companies that provide modem links and other

types of connection to individual_users and small organizations, enabling-them to

access services anywhere. It also provides local services such as email and web

hosting?

TECHNICAL PUBLICATIONS® - an up-thrust for knowledge

Distributed Computing 1-22 Introduction

* A backbone in a network link with a high transmission capacity, employing

satellite.connections and fibre optic cable, The Internet also provides multimedia

services. User can download audio and video files. Using Internet user can watch

“TV, play online games and do the video conference.

Intranet

* An intranet is a private network that is contained within an enterprise. It may

consist of many interlinked local_area networks and also use leased lines in the

WAN.

# Intranet is composed of several local’ area networks linked by backbone

connections. Routers are used to connect | intranet t¢ to internet.

An intranet is a portion of the internet that is separately administered and has a

boundary that can be configured to enforce local security policies. Fig. 1.7.5 shows

intranet. ~

Receives

Surels the

dala or

Computer nclucenke

Fig. 1.7.5 Intranet

* The main issues arising in the design of components for use in intranets are : File

services, firewalls anc

TECHNICAL PUBLICATIONS® - an up-hrst for knowledge

.

—eeeEeEeEEEEEEEEEEE——E—eeEeeEEeEeEeEeEEeEEe

Distributed Computing 1-23 Introduction

+ Firewalls protect an intranet by preventing unauthorized messages leaving or

entering; implementing by filtered messages. The cost of software installation and

support is reduce by the use of system architectures such as network computers

and thin clients.

3, Multimedia system

+ Digital multimedia : Computer-controlled integration of text, graphics, still images,

moving pictures, animation, sound, and any other medium. Ail these data types

are represented, stored, transmitted, and processed digitally.

* A continuous media type has an implicit time dimension, while a discrete type

does not. Referring to video and audio data as continuous and time based.

Continuous refers to the user's view of data. Internally, continuous media are

represented as sequences of discrete values that replace each other over time.

* Multimedia streams are said to be time-based because timed data elements in

audio and video streams define the content of the stream. The systems that

support multimedia applications need to preserve the timing when they handle

continuous data.

+ A distributed multimedia system should be able to perform the same functions for

continuous media types such as audio and video. It should be able to store and

locate audio or. video files, to transmit them across the network, to support the

presentation of the media types to the user and optionally also to share the media

types across a group of users.

* Distributed multimedia applications typically handle two different types of

communication : Request/Reply interaction for control information as well as

real-time streaming data.

amen aa

Web casting

* Web casting is an application of distributed multimedia technology. It broadcast

continuous media over the internet. A webcast uses streaming media technology to

take a single content source and distribute it to many simultaneous

Iisteners/ viewers. :

* Web casting support following distributed multimedia application :

1. It supports for encoding and encryption formats. For example : MP3 standard,

MPEG-1, HDTV.

2. It supports quality of service.

3. It uses resources management strategies, including appropriate scheduling

policies to support the desired quality of service.

+ Webcasting is also called push technology. It is a method of obtaining information

in which a server automatically downloads content to your computer at regular

intervals or whenever updates are made to the site.

TECHNICAL PUBLICATIONS® - an up-thrust for knowledge

Distributed Computing 1-24

Introduction

University Question

1, Discuss the design’issues and challenges in distributed system from a system perspecti

‘AU : May-22, Marks 13,

A Model of Distributed Computations : A Distributed Program

Distributed program is composed of a set of "n” asynchronous processes like py,

P2, P3r +» Pir Pn that communicate by message passing over the communication

network.

Here we assume that, each process is running on a different processor. The

Processes communicate only by using passing messages method.

Let Cy denote the channel from process p; to process p, and let my denote a

message sent by pj to pj. The communication delay is finite and unpredictable.

‘Also, processes do lobal

transfer are asynchronous. - a process may execute an action spontaneously and a

process sending a message does not wait for the delivery of the message to be

complete.

EEI A Model of Distributed Executions

Process execution means sequential execution of its action. Actions are atomic.

Actions of process are of three types : Interval events, message send events and

message receive events.

Internal event : Affects only the process which is executing the event,

Send event : A process passes messages to other processes.

Receive event : A processes gets messages from other processes.

For a message m, send(m) denotes its send events and rec(m) denote its receive

events. A send event changes the state of the process that sends the message and

the state of the channel on which the message is sent.

A receive event changes the state of the process that receives the message and the

state of the channel on which the message is received.

Fig, 1.9.1 shows space-timing diagram for distributed execution.

‘

Cn ae

my

Pa

Py

& of ‘time —>

Fig. 1.9.1

TECHNICAL PUBLICATIONS® - an up:hnst for knowledge

Pie i es

Distributed Computing 1-25 Introduction

* The send and the receive events signify the flow of information between processes

and establish causal dependency from the sender process to the receiver process.

+ In the figure, for process p,, the second event is a message send event, the third

event is an internal event and the fourth event is a message receive event.

Causal precedence relation

* Ordering of events for a single process is simple: they are ordered by their

occurrence.

* Send and receive events signify the flow of information between processes and

establish causal precedence between events at the sender and receiver.

* For any two events e; and e;, e; + e; denotes the fact that event e; does not

directly or transitively dependent on event e;. That is, event e; does not causally

affect event e;.

Logical vs. Physical concurrency

* In a distributed computation, two events are logically concurrent if and only if

they do not causally affect each other.

© Physical concurrency, on the other hand, has a connotation that the events occur at

the same instant in physical time.

* Two or more events may be logically concurrent even though they do not occur at

the same instant in physical time.

* However, if processor speed and message delays would have been different, the

execution of these events could have very well coincided in physical time.

* Whether a set of logically concurrent events coincide in the physical time or not,

does not change the outcome of the computation.

* Therefore, even though a set of logically concurrent events may not have occurred

at the same instant in physical time, we can assume that these events occurred at

the same instant in physical time.

Models of Communication Networks

+ There are several models of the service provided by communication networks,

namely, FIFO, Non-FIFO and causal ordering.

* In the FIFO model, each channel acts as a first-in first-out message queue and

thus, message ordering is preserved by a channel.

‘* In the non-FIFO model, a channel acts like a set in which the sender process adds

messages and the receiver process removes messages from it in a random order.

* The “causal ordering" model is based on Lamport's “happens before" relation. A

system that supports the causal ordering model satis?es the following property :

TECHNICAL PUBLICATIONS® - an up-thrust for knowledge

Introductic

Distributed Computing 1-26 fon

For any two messages mig and myy, if send (mj) — send (1),

then rec (mj; ) — rec (m3 )-

* This property ensures that causally related messages destined to the same

destination are delivered in an order that is consistent with their causality relation,

Causally ordered delivery of messages implies FIFO message delivery.

* Causal ordering model considerably simplifies the design of distributed algorithms

because it provides a built-in synchronization.

Global State of Distributed System

Definition : “The global state of a distributed computation is the set of local states of all

individual processes incolced in the computation plus the state of the communication channels.”

Requirements of global states

« Fig..L.11.1 shows detecting global properties.

PL P2

a. Garbage collection bject message

reference garbage object

b. Deadlock

¢. Termination

Fig. 1.11.4

1. Distributed garbage collection : An object is considered to be garbage if there are

no longer any references to it anywhere in the distributed system. It is based on

reference counting and should include the state of communication channels.

2 Distributed deadlock detection : It occurs when each of collection of processes

waits for another process to send it a message and look for “waits-for

relationship.

3. Distributed termination detection : Look for state in which all processes are

passive.

4. Distributed debugging : Need collect values of distributed variables at the same

time.

TECHNICAL PUBLICATIONS® - an up-thrist for knowledge

i

distbuted Computing 1-27 Introduction

EE Two Marks Questions with Answers

Q.1 What do you mean by message passing ?

Ans. : In this model, data is shared by sending and receiving messages between

co-operating processes, using system calls. Message passing refers to services

performing a simple, one-way transfer operation between two programs.

Ans. : Distributed program is composed of a set of “n” asynchronous processes like

Pi/P2/P3/---rPirPn that communicate by message passing over the communication

network.

Q2 Define distributed program.

Q.3_ What do you mean by synchronous and asynchronous execution 7

Ans. : Synchronous execution means the first task in a program must finish processing

before moving on to executing the next task. Asynchronous execution means 2 second

task can begin executing in parallel, without waiting for an earlier task to finish.

Q.4 List out the features of distributed systems. CORE}

Ans. : Features of distributed systems are heterogeneity, openness, scalability, fault

tolerance, transparency and resource sharing.

Q5 Differentiate between synchronous and asynchronous execution.

RE)

Ans. :

|. Sr. No. Synchronous execution Asynchronous execution

1 Synchronous execution means the

first task in a program must finish

processing before moving on to

Asynchronous execution means a

second task can begin executing in

parallel, without waiting for an

executing the next task

Lower and upper bounds on

execution time of processes can be

set.

‘Transmitted messages are received

within a known bounded time.

Drift rates between local clocks have

a known bound.

Q6 What is distributed system 7

earlier task to finish.

No bound-on process execution time.

No bound-on message transmission

delay. =

No bounds on drift rates between

local clocks.

Ans, A distributed system is one in which components located at networked

computers communicate and co-ordinate their actions only by passing messages. A

distributed system is a collection of independent computers that appears to its users a

single coherent system.

TECHNICAL PUBLICATIONS® - an up-hrust for knowdedge

y to use any hardware, software or data anywhere in the system. Resource

Q9 What are the significant consequences of distributed systems 2 XUBINENEY

Ans. : a. No global clock : The only communication is by sending messages through a

network.

b. Independent failures : The programs may not be able to detect whether the

network has failed or has become unusually slow.

. Concurrency : The capacity of the system to handle shared resources can be

increased by adding more resources to the network.

Q.10 Define transparency. What are its types 7 Ezy

istributed system needs to hide the fact that its processes and resources are

cally distributed across multiple computers.

Q.11 What is the need of openness in distributed system 7 Ey

Ans. : Distributed system must be able to interact with services from other open

systems, irrespective of the underlying environment. Systems should conform to

well-defined interfaces and should support portability of applications.

Q.12 List any two resources of hardware and software, which can be shared In

distributed systems with example.

Ans. : Hardware resource : Memory cache server and CPU servers do some

computation for their clients hence their CPU is a shared resource.

Software resource : File : File servers enable multiple clients to have read/write access

to the same files. Database : The content of a database can be usefully shared. There

are many techniques that control the concurrent access to a database.

Q,13 List an example of distributed system.

Ans, : Distributed system examples : Internel, an intranet which is a portion of the

internet managed by an organization, mobile and ubiquitous computing.

Q.14 Enlist the design issues and challengos of distributed systems,

Ans. : Design issues and challenges of distributed systems are heterogeneity,

openness, security, scalability, failure handling, concurrency and transparency.

a

Distributed Computing 1-28 Introduct,

Q.7 Write down the principles of distributed systems. EXE)

Ans. : Distributed system consists of a collection of autonomous computers, connected |

through a network and distribution middleware, which enables computers ty

coordinate their activities and to share the resources of the system, so that users

perceive the system as a single, integrated computing facility.

Q8 — State the objectives of resource sharing model. EQ

Ans. : Abi

manager controls access, provides naming scheme and controls concurrency.

TECHNICAL PUBLIGATIONS® - en up-trust for knowledge

Distributed Computing 4-29 Introduction

Q.15 Define access transparency.

Ans. : Enables local and remote information objects to be accessed using identical

operations.

Q.16 What is replication transparency ?

Ans. : It enables multiple instances of information objects to be used to increase

reliability and performance without knowledge of the replicas by users or application

programs. Example : Distributed DBMS.

Q.17 What Is the goal of concurrency and failure transparency ?

Ans. : Enables several processes to operate concurrently using shared information

objects without interference between them.

Failure transparency : Allows users and applications to complete their tasks despite the

failure of other components.

Q.18 Differentiate between buffering and caching. J AU : May-15 |

‘Ans. : Cache is made from static ram which is faster than the slower dynamic ram

used for a buffer. A cache transparently stores data so that future requests for that data

can be served faster. A buffer temporarily stores data while the data is the process of

moving from one place to another, i. the input device to the output device. The buffer

is mostly used for input/output processes while the cache is used during reading and

writing processes from the disk.

Q.19 What is open distributed system 7

Ans. : Open distributed system is a system that offers services according to standard

rules that describe the syntax and semantics of those services.

Q.20 Give the example of relocation transparency ?

Ans. : When mobile users can continue to use their wireless laptops while moving

from place to place without ever being disconnected

Q.21 Describe what is meant by a scalable system ?

Ans. : A system is scalable with respect to either its number of components, size or

number and size of administrative domains, if it can grow in one or more of these

dimensions without an unacceptable loss of performance.

Q.22 What is the role of middleware in a distributed system 7 CS

Ans. : To enhance the ition transparency that is missing in network operating

systems. In other words, middleware aims at improving the single system view that a

distributed system should have.

TECHNICAL PUBLICATIONS® - an up-thrust for knowledge

1-30 Introductic.

| 23 What is scalability 7

Ans: A system is said to be scalable if it can handle the addition of users and

[nsourees without suffering a noticeable loss of performance or increase in

| adounistrative complexity.

Q24 Name some services and examples of middleware.

Ans. : Middleware services are sets of distributed software that exist between the

pplication and the OS and network services on a system node in the network.

Software Foundation’s Distributed Computing Environment (DCE).

ooo

TECHNICAL PUBLICATIONS® - an up-thust for Knowledge

=

rel nt ——

UNIT II

Logical Time and Global State

Syllabus

gical Time > Physical Clock Synchronization: NTP ~ A Framework for a System of Logical

Clocks Scalar Time ~ Vector Time; Message Ordering and Group Conimunication » Message

Ordering Paradigms ~ Asynchronous Execution with Synchronous Communication ~ Synchronous

Program Order on Asynchronous System ~ Group Communication ~ Causal Order ~ Total Order:

Global State and Snapshot Recording Algorithms : Introduction ~ System Model and Definitions ~

Snapshot Algorithms for FIFO Channels.

Contents

21° Clock Events and Process Stato

22 Logical Time

23. Physical Clock Synchronization : NTP

24. A Framework for a System of Logical Clocks

25° Scalar Time

26 Vector Time

27 Message Ordering Paradigms ............. May-22, Dec-22, «+++++- Marks 13

28 Asynchronous Execution with Synchronous Communication

2.9 Synchronous Program Order on Asynchronous System

2.10 Group Communication . adhosn z sees Marks 13

2.11 Causal Order

2.12 Total Order . Doc.-22, veeesss Marks 13

2.13 Global State and Snapshot Recording Algorithms

2.14 Snapshot Algorithms for FIFO Channels ..... May-22, :- Marks 13

2.15 Two Marks Questions with Answers

2-1)

Distributed Computing 2-2 Logical Time and Global Stal,

EAI clock Events and Process State

* How to order the events that occur at a single processor ?

* A distributed system is defined as a collection P of N processes pi, oN,

Each process executes on a single processor and the processors do_not share

5) consisting ofits variables. ~~

memory: Each process p; has a state

Processes communicate only by messages (via a network). We can view each

process pi as to execute a sequence of actions that fall in one of the following

categories :

1. Sending a message;

2 Receiving a message;

3. Performi ing a computation that alters its state s;-

+! We define an event to be the execution of @'single action by p;.(Event is the

occurrence of a single action that a process carries out as it executes. For example :

Send, Receive, change state. The sequence of events within a single process pi can

be totally ordered, which is generally denoted by e—>; e’ if and only if the event e

occurs before e' at p;.

We can then define the history of process pi to be the sequence of events that take

place within : history (p;) = hy = ZZ

skew = 4 seconds

Fig. 2.1.1 Clock drift and clock skew

* Consider two clocks A and B, where clock B runs slightly faster than clock A by

approximately two seconds per hour: This is the clock drift of B relative to A. At

one point in time, ‘the di difference in time between the two clocks is approximately

4 seconds. This is the clock skew at that particular time.

* Successive events will correspond to different timestamps only if the clock

Tesolution is smaller than the rate at which events can occur. The rate at which

events occur depends on such factors as the length of the processor instruction

cycle.

* Applications running at a given computer require only the value of the counter to

timestamp events. The date and time-of-day can be calculated from the counter

value. Clock drift may happen when computer clocks count time at different rates.

TECHNICAL PUBLICATIONS® - an up-thrust for knowledge

Distributed Computing 2-4 Logical Time and Global State

* Co-ordinated Universal Time (UTC) is an international standard that is based on

e. UTC signals are synchronized and broadcast regularly from

ator

Jand-based radio stations and satellites.

+ If the computer clock is behind the ‘time service's, it is OK to set the computer

clock to be the time runs

then it should be slowed down for a period instead of set back to the

service's time directly.

* The way to cause LL Quart esta“

computer's clock run to

slow for a period can be

achieved in software Holding register

without changing the rate Counter ,

of the hardware clock. Also

called timer, usually a

quartz crystal, oscillating at

Central processing unit

a well-defined frequency.

« A timer is associated with \ |

two registers: a cqunter and

Main memory

a holding register, and

counter decreasing one at

each oscillation. When the

counter gets t zero, an interruption is generated and is called one clock tick.

* Crystals run at slightly different rates, the difference in time value is called a_clock

skew. Clock skew causes time-related failures. Fig. 2.1.2 shows working of

computer clock

Fig. 2.1.2 Working of computer clock

Working :

1. Oscillation at a well-defined frequency

2, Each crystal oscillation decrements the counter by 1

3. When counter gets 0, its value reloaded from the holding register

4. When counter is 0, an interrupt is generated, which is call a clock tick

5. At each clock tick, an interrupt service procedure add_] to time stored in memory

ve Synchronization of physical clocks with real-world clock :

}

1. TAI (International. Atomic Time) : Cs133 atomic clock

/2. UTC (Universal Co-ordinated Time) : Modem civil time, can be received from