
Lecture 5

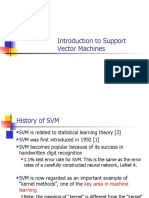

Lecture 5 of SYSC4415 covers two classifiers: Support Vector Machines (SVM) and K-Nearest Neighbors (KNN). Key concepts include SVM kernels, which allow nonlinear decision boundaries, the hinge loss function, and the mechanics of KNN classification. The lecture also includes hands-on activities for implementing both classifiers and understanding their underlying principles.
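As a rough illustration of two of the concepts named above, the sketch below computes the hinge loss for a single example and classifies a point by majority vote among its nearest neighbors. It is not taken from the lecture. The function names `hinge_loss` and `knn_predict`, the label convention (+1 / -1), and the choice of Euclidean distance are all assumptions made for this example.

```python
import math
from collections import Counter


def hinge_loss(y, score):
    """Hinge loss for one example.

    Assumes y is +1 or -1 and score is the raw (unthresholded) classifier output.
    The loss is zero once the example is on the correct side with margin >= 1.
    """
    return max(0.0, 1.0 - y * score)


def knn_predict(train_points, train_labels, query, k=3):
    """Classify `query` by majority vote among its k nearest training points.

    Assumes Euclidean distance; other metrics are equally valid choices.
    """
    # Sort training indices by distance to the query point.
    order = sorted(
        range(len(train_points)),
        key=lambda i: math.dist(train_points[i], query),
    )
    # Count the labels of the k closest points and return the most common one.
    votes = Counter(train_labels[i] for i in order[:k])
    return votes.most_common(1)[0][0]


if __name__ == "__main__":
    print(hinge_loss(+1, 0.5))  # inside the margin, so the loss is positive
    points = [(0, 0), (0, 1), (5, 5), (6, 5)]
    labels = ["a", "a", "b", "b"]
    print(knn_predict(points, labels, (0.2, 0.3), k=3))
```

With an even `k`, or with more than two classes, votes can tie. This sketch then returns whichever tied label was encountered first, so an odd `k` is the usual choice for two-class problems.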

Uploaded by

Esraa Al dn

Copyright © All Rights Reserved

