
Assignment 1

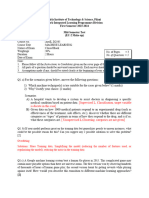

The document outlines an experiment in which the Perceptron algorithm is implemented to find the weights of a linear discriminant function, using data from a 'train.txt' file. The tasks are to plot the sample points, generate high-dimensional sample points from them, apply the Perceptron algorithm with different initial weights and learning rates, and summarize the results in a table and a bar chart. It also asks why the high-dimensional sample points are needed and how many weight updates the algorithm requires to converge in each scenario.
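The Perceptron part of the experiment can be sketched as follows. This is a minimal sketch under stated assumptions, not the assignment's reference solution. The format and contents of 'train.txt' are not given here, so the six two-class points below are hypothetical. The quadratic feature map `phi` is one possible, assumed choice of "high-dimensional" sample point. The update rule is the standard single-sample Perceptron with class-2 samples negated, so that a correct weight vector satisfies w · y > 0 for every sample. In this toy data, the class-1 points lie inside the triangle formed by the class-2 points, so no straight line separates them in 2-D, but a linear boundary in `phi`-space (a circle in the original plane) does.

```python
from typing import List, Sequence, Tuple

Vector = List[float]

# Hypothetical toy data (NOT the contents of train.txt).
SAMPLES = [(0.0, 0.0), (0.5, 0.0), (0.0, -0.5), (2.0, 0.0), (0.0, 2.0), (-2.0, -2.0)]
LABELS = [1, 1, 1, 2, 2, 2]


def phi(x: Sequence[float]) -> Vector:
    """Assumed quadratic feature map: (x1, x2) -> (x1^2, x2^2, x1*x2, x1, x2, 1)."""
    x1, x2 = x
    return [x1 * x1, x2 * x2, x1 * x2, x1, x2, 1.0]


def normalize(samples: Sequence[Sequence[float]], labels: Sequence[int]) -> List[Vector]:
    """Map samples to phi-space and negate class-2 samples, so that a
    separating weight vector satisfies w . y > 0 for every y."""
    ys = []
    for x, c in zip(samples, labels):
        y = phi(x)
        ys.append(y if c == 1 else [-v for v in y])
    return ys


def dot(a: Sequence[float], b: Sequence[float]) -> float:
    return sum(p * q for p, q in zip(a, b))


def perceptron(ys: List[Vector], w0: Sequence[float], eta: float,
               max_epochs: int = 1000) -> Tuple[Vector, int, bool]:
    """Single-sample Perceptron: whenever w . y <= 0, set w <- w + eta * y.
    Returns (final weights, number of weight updates, converged flag)."""
    w = list(w0)
    updates = 0
    for _ in range(max_epochs):
        misclassified = 0
        for y in ys:
            if dot(w, y) <= 0:
                w = [wi + eta * yi for wi, yi in zip(w, y)]
                updates += 1
                misclassified += 1
        if misclassified == 0:  # a full pass with no updates: all samples correct
            return w, updates, True
    return w, updates, False


if __name__ == "__main__":
    ys = normalize(SAMPLES, LABELS)
    # One row per (initial weights, learning rate) pair; these counts are the
    # kind of values a results table or bar chart would summarize.
    print(f"{'w0':>6} {'eta':>5} {'updates':>8} {'converged':>10}")
    for name, w0 in (("zeros", [0.0] * 6), ("ones", [1.0] * 6)):
        for eta in (0.1, 0.5, 1.0):
            _, n, ok = perceptron(ys, w0, eta)
            print(f"{name:>6} {eta:>5} {n:>8} {str(ok):>10}")
```

Negating the class-2 samples lets a single condition (w · y > 0) cover both classes, so the update rule needs no class-specific branch. Because the toy data is separable in `phi`-space, the Perceptron convergence theorem guarantees a finite number of updates for any positive learning rate, though the exact count depends on the initial weights and on `eta`.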

Uploaded by

sadiamohiuddin3009

Copyright © All Rights Reserved

