06/10/2024

Spark Mllib

Instructor: Van-Dang Tran, Ph.D.

MACHINE LEARNING

“Programming Computers to optimize

performance using Example Data or Past

Experience”

1

� 06/10/2024

MACHINE LEARNING?

Field of study that gives "computers

the ability to learn without being

explicitly programmed."

-- Arthur Samuel, 1959

HAVE YOU PLAYED MARIO?

How much time did it take you to learn & win the princess?

2

� 06/10/2024

HOW ABOUT AUTOMATING IT?

How about

automating it?

Program Learns to Play Mario

Observes the game & presses keys

Maximises Score

3

� 06/10/2024

So?

• Program Learnt to play Mario and other games

• Without any need of programming

4

� 06/10/2024

Question: To make this program learn any other games such

as PacMan we will have to …

1. Write new rules as per the game

2. Just hook it to new game and let it play for a while

Question: To make this program learn any other games such as

PacMan we will have to …

1. Write new rules as per the game

2. Just hook it to new game and let it play for a while

10

5

� 06/10/2024

MACHINE LEARNING

• Branch of Artificial Intelligence

• Design and Development of Algorithms

• Computers Evolve Behaviour based on Empirical Data

Spark-MLb

il

11

MACHINE LEARNING - APPLICATIONS

Recommend Friends, Dates, Products to end-user.

12

6

� 06/10/2024

MACHINE LEARNING - APPLICATIONS

Classify content into predefined groups.

13

MACHINE LEARNING - APPLICATIONS

Identify key topics in large Collections of Text.

14

7

� 06/10/2024

MACHINE LEARNING - APPLICATIONS

Computer Vision - Identifying Objects

15

MACHINE LEARNING - APPLICATIONS

Natural Language Processing

16

8

� 06/10/2024

MACHINE LEARNING - APPLICATIONS

• Find Similar content based on Object Properties.

• Detect Anomalies within given data.

• Ranking Search Results with User Feedback Learning.

• Classifying DNA sequences.

• Sentiment Analysis/ Opinion Mining

• BioInformatics.

• Speech and HandWriting Recognition.

17

MACHINE LEARNING - TYPES?

Given example inputs & outputs, learn to

Supervised

map inputs to outputs

Machine Learning

18

9

� 06/10/2024

MACHINE LEARNING - TYPES?

Supervised Given example inputs & outputs, learn

to map inputs to outputs

Machine Learning Unsupervised No labels given, find structure

19

MACHINE LEARNING - TYPES?

Supervised

Given example inputs & outputs, learn

to map inputs to outputs

Machine Learning Unsupervised No labels given, find structure

Reinforcement

Dynamic environment, perform a certain

goal

20

10

� 06/10/2024

MACHINE LEARNING - TYPES?

Classification

Supervised

Regression

Machine Learning Unsupervised Clustering

Reinforcement

21

MACHINE LEARNING - CLASSIFICATION?

Check

Email

Spam? No

Yes We Use Logistic Regression

22

11

� 06/10/2024

MACHINE LEARNING - REGRESSION?

Predicting a continuous-valued

attribute associated with an object.

In linear regression, we draw all possible lines

going through the points such that it is closest

to all.

23

MACHINE LEARNING - CLUSTERING?

• To form a cluster based on

some definition of nearness

24

12

� 06/10/2024

MACHINE LEARNING - TOOLS

DATA SIZE CLASSFICATION TOOLS

Lines Sample Data Analysis and Whiteboard,…

Visualization

KBs - low MBs Prototype Analysis and Matlab, Octave, R,

Data Visualization Processing,

MBs - low GBs NumPy, SciPy,

Analysis

Online Data Weka,

Flare, AmCharts,

Visualization

Raphael, Protovis

GBs - TBs - PBs Analysis MLlib, SparkR, GraphX,

Big Data Mahout, Giraph

25

MACHINE LEARNING USING SPARK

• Spark RDDs à efficient data sharing

• In-memory caching accelerates performance

• Up to 20x faster than Hadoop

• Easy to use high-level programming interface

• Express complex algorithms ~100 lines.

26

13

� 06/10/2024

MACHINE LEARNING LIBRARY (MLlib)

Goal is to make practical machine learning scalable and easy

Consists of common learning algorithms and utilities, including:

• Classification

• Regression

• Clustering

• Collaborative filtering

• Dimensionality reduction

• Lower-level optimization primitives

• Higher-level pipeline APIs

27

MlLib STRUCTURE

ML Algorithms Featurization

Common learning algorithms

e.g. classification, regression, clustering, Feature extraction, Transformation, Dimensionality

and collaborative filtering reduction, and Selection

Pipelines Persistence

Tools for constructing, evaluating, Saving and load algorithms, models,

and tuning ML Pipelines and Pipelines

Utilities

Linear algebra, statistics, data handling, etc.

28

14

� 06/10/2024

MLLIB - COLLABORATIVE FILTERING

• Commonly used for recommender systems

• Techniques aim to fill in the missing entries of a user-item association

matrix

• Supports model-based collaborative filtering,

• Users and products are described by a small set of latent factors that can

be used to predict missing entries.

• MLlib uses the alternating least squares (ALS) algorithm to learn these

latent factors.

29

PIPELINES

DataFrame:This ML API uses DataFrame from Spark SQL as an ML dataset, which can hold a

variety of data types. E.g., a DataFrame could have different columns storing text, feature vectors,

true labels, and predictions.

Transformer: A Transformer is an algorithm which can transform one DataFrame into another

DataFrame. E.g., an ML model is a Transformer which transforms a DataFrame with features into a

DataFrame with predictions.

Estimator: An Estimator is an algorithm which can be fit on a DataFrame to produce a Transformer.

E.g., a learning algorithm is an Estimator which trains on a DataFrame and produces a model.

Pipeline: A Pipeline chains multiple Transformers and Estimators together to specify an ML

workflow.

Parameter: All Transformers and Estimators now share a common API for specifying parameters.

30

15

� 06/10/2024

PIPELINES

31

spark.mllib - BASIC STATISTICS

Summary statistics

Correlations

Stratified sampling

Hypothesis testing

Random data generation

Kernel density estimation

See https://spark.apache.org/docs/latest/mllib-statistics.html

32

16

� 06/10/2024

MLlib - CLASSIFICATION AND REGRESSION

MLlib supports various methods:

Binary Classification

linear SVMs, logistic regression, decision trees, random forests,

gradient-boosted trees, naive Bayes

Multiclass Classification

logistic regression, decision trees, random forests, naive Bayes

Regression

linear least squares, Lasso, ridge regression, decision trees,

random forests, gradient-boosted trees, isotonic regression

More Details>>

33

MlLib - Other Classes of Algorithms

Dimensionality reduction:

https://spark.apache.org/docs/latest/mllib-dimensionality-reduction.html

Feature extraction and transformation:

https://spark.apache.org/docs/latest/mllib-feature-extraction.html

Frequent pattern mining:

https://spark.apache.org/docs/latest/mllib-frequent-pattern-mining.html

Evaluation metrics:

https://spark.apache.org/docs/latest/mllib-evaluation-metrics.html

PMML model export:

https://spark.apache.org/docs/latest/mllib-pmml-model-export.html

Optimization (developer):

https://spark.apache.org/docs/latest/mllib-optimization.html

34

17

� 06/10/2024

MACHINE LEARNING TECHNIQUES

Classification

Clustering

Regression

Active learning

Collaborative filtering

35

K-Means Clustering using Spark

Focus: Implementation and Performance

36

18

� 06/10/2024

CLUSTERING

E.g. archaeological dig

Distance North

Grouping data according

to similarity

Distance East

37

CLUSTERING

E.g. archaeological dig

Distance North

Grouping data

according to

similarity

Distance East

38

19

� 06/10/2024

K-MEANS ALGORITHM

Benefits E.g. archaeological dig

Distance North

• Popular

• Fast

• Conceptually

straightforward

Distance East

39

K-MEANS: PRELIMINARIES

Data: Collection of values

data = lines.map(line=>

Feature 2

parseVector(line))

Feature 1

40

20

� 06/10/2024

K-MEANS: PRELIMINARIES

Dissimilarity:

Squared Euclidean distance

Feature 2

dist = p.squaredDist(q)

Feature 1

41

K-MEANS: PRELIMINARIES

K = Number of clusters

Feature 2

Data assignments to clusters

S1, S2,. . ., SK

Feature 1

42

21

� 06/10/2024

K-MEANS: PRELIMINARIES

K = Number of clusters

Feature 2

Data assignments to clusters

S1, S2,. . ., SK

Feature 1

43

K-MEANS ALGORITHM

• Initialize K cluster centers

• Repeat until convergence:

Assign each data point to

the cluster with the closest

Feature 2

center.

Assign each cluster center to

be the mean of its cluster’s

data points.

Feature 1

44

22

� 06/10/2024

K-MEANS ALGORITHM

• Initialize K cluster centers

• Repeat until convergence:

Assign each data point to

the cluster with the closest

Feature 2

center.

Assign each cluster center to

be the mean of its cluster’s

data points.

Feature 1

45

K-MEANS ALGORITHM

• Initialize K cluster centers

centers = data.takeSample(

false, K, seed)

Feature 2

• Repeat until convergence:

Assign each data point to

the cluster with the closest

center.

Assign each cluster center to

be the mean of its cluster’s

data points.

Feature 1

46

23

� 06/10/2024

K-MEANS ALGORITHM

• Initialize K cluster centers

centers = data.takeSample(

false, K, seed)

Feature 2

• Repeat until convergence:

Assign each data point to

the cluster with the closest

center.

Assign each cluster center to

be the mean of its cluster’s

data points.

Feature 1

47

K-MEANS ALGORITHM

• Initialize K cluster centers

centers = data.takeSample(

false, K, seed)

Feature 2

• Repeat until convergence:

Assign each data point to

the cluster with the closest

center.

Assign each cluster center to

be the mean of its cluster’s

data points.

Feature 1

48

24

� 06/10/2024

K-MEANS ALGORITHM

• Initialize K cluster centers

centers = data.takeSample(

false, K, seed)

Feature 2

• Repeat until convergence:

closest = data.map(p =>

(closestPoint(p,centers),p))

Assign each cluster center to

be the mean of its cluster’s

data points.

Feature 1

49

K-MEANS ALGORITHM

• Initialize K cluster centers

centers = data.takeSample(

false, K, seed)

Feature 2

• Repeat until convergence:

closest = data.map(p =>

(closestPoint(p,centers),p))

Assign each cluster center to

be the mean of its cluster’s

data points.

Feature 1

50

25

� 06/10/2024

K-MEANS ALGORITHM

• Initialize K cluster centers

centers = data.takeSample(

false, K, seed)

Feature 2

• Repeat until convergence:

closest = data.map(p =>

(closestPoint(p,centers),p))

Assign each cluster center to

be the mean of its cluster’s

data points.

Feature 1

51

K-MEANS ALGORITHM

• Initialize K cluster centers

centers = data.takeSample(

false, K, seed)

Feature 2

• Repeat until convergence:

closest = data.map(p =>

(closestPoint(p,centers),p))

pointsGroup =

closest.groupByKey()

Feature 1

52

26

� 06/10/2024

K-MEANS ALGORITHM

• Initialize K cluster centers

centers = data.takeSample(

false, K, seed)

Feature 2

• Repeat until convergence:

closest = data.map(p =>

(closestPoint(p,centers),p))

pointsGroup =

closest.groupByKey()

newCenters = pointsGroup.mapValues(

ps => average(ps))

Feature 1

53

K-MEANS ALGORITHM

• Initialize K cluster centers

centers = data.takeSample(

false, K, seed)

Feature 2

• Repeat until convergence:

closest = data.map(p =>

(closestPoint(p,centers),p))

pointsGroup =

closest.groupByKey()

newCenters = pointsGroup.mapValues(

ps => average(ps))

Feature 1

54

27

� 06/10/2024

K-MEANS ALGORITHM

• Initialize K cluster centers

centers = data.takeSample(

false, K, seed)

Feature 2

• Repeat until convergence:

closest = data.map(p =>

(closestPoint(p,centers),p))

pointsGroup =

closest.groupByKey()

newCenters = pointsGroup.mapValues(

ps => average(ps))

Feature 1

55

K-MEANS ALGORITHM

• Initialize K cluster centers

centers = data.takeSample(

false, K, seed)

Feature 2

• Repeat until convergence:

while (dist(centers, newCenters) > ɛ)

closest = data.map(p =>

(closestPoint(p,centers),p))

pointsGroup =

closest.groupByKey()

newCenters =pointsGroup.mapValues(

ps => average(ps))

Feature 1

56

28

� 06/10/2024

K-MEANS ALGORITHM

• Initialize K cluster centers

centers = data.takeSample(

false, K, seed)

Feature 2

• Repeat until convergence:

while (dist(centers, newCenters) > ɛ)

closest = data.map(p =>

(closestPoint(p,centers),p))

pointsGroup =

closest.groupByKey()

newCenters =pointsGroup.mapValues(

ps => average(ps))

Feature 1

57

K-MEANS ALGORITHM

centers = data.takeSample(

false, K, seed)

while (d > ɛ)

{

closest = data.map(p =>

Feature 2

(closestPoint(p,centers),p))

pointsGroup =

closest.groupByKey()

newCenters =pointsGroup.mapValues(

ps => average(ps))

d = distance(centers, newCenters)

centers = newCenters.map(_)

}

Feature 1

58

29

� 06/10/2024

EASE OF USE

§ Interactive shell:

Useful for featurization, pre-processing data

§ Lines of code for K-Means

- Spark ~ 90 lines – (Part of hands-on tutorial !)

- Hadoop/Mahout ~ 4 files, > 300 lines

59

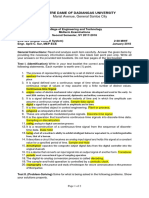

PERFORMANCE

K-Means Logistic Regression

274

300 Hadoop 250 H ad oo p

HadoopBinMem H ad oo pB inMem

184

250

Iteration time (s)

200

Iteration time (s)

Spark

197

Spark

200

157

150

116

143

111

121

150

106

100

80

76

87

100

62

61

50

33

50

15

0 0

25 50 100

25 50 100

Number of machines Number of machines

[Zaharia et. al, NSDI’12]

60

30

� 06/10/2024

CONCLUSION

§ Spark: Framework for cluster computing

§ Fast and easy machine learning programs

§ K means clustering using Spark

Examples and more: www.spark-project.org

61

31