NLP CSM

The document outlines the syllabus for a Natural Language Processing (NLP) course at JNTU Hyderabad, focusing on various aspects such as word structure, syntax, and semantic parsing. It emphasizes the importance of understanding linguistic phenomena and implementing NLP algorithms, with a strong foundation in data structures and compiler design as prerequisites. Key topics include morphological models, language modeling techniques, and the challenges of ambiguity and irregularity in human language.

Uploaded by dudipalashivateja


0 ratings0% found this document useful (0 votes)

30 views136 pagesNLP CSM

The document outlines the syllabus for a Natural Language Processing (NLP) course at UNTU Hyderabad, focusing on various aspects such as word structure, syntax, and semantic parsing. It emphasizes the importance of understanding linguistic phenomena and implementing NLP algorithms, with a strong foundation in data structures and compiler design as prerequisites. Key topics include morphological models, language modeling techniques, and the challenges of ambiguity and irregularity in human language.

Uploaded by

dudipalashivatejaCopyright

© © All Rights Reserved

We take content rights seriously. If you suspect this is your content, claim it here.

Available Formats

Download as PDF or read online on Scribd

You are on page 1/ 136

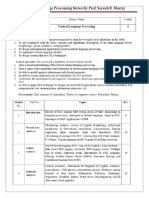

R22 B.Tech. CSE (AI and ML) Syllabus JNTU Hyderabad

AM603PC: NATURAL LANGUAGE PROCESSING

B.Tech. III Year II Sem.

Prerequisites:

1. Data structures and compiler design

Course Objectives:

• Introduction to some of the problems and solutions of NLP and their relation to linguistics and statistics.

Course Outcome:

• Show sensitivity to linguistic phenomena and an ability to model them with formal grammars.

• Understand and carry out proper experimental methodology for training and evaluating empirical NLP systems.

• Manipulate probabilities, construct statistical models over strings and trees, and estimate parameters using supervised and unsupervised training methods.

• Design, implement, and analyze NLP algorithms; and design different language modeling techniques.

UNIT-I

Finding the Structure of Words: Words and Their Components, Issues and Challenges, Morphological Models

Finding the Structure of Documents: Introduction, Methods, Complexity of the Approaches, Performances of the Approaches, Features

UNIT-II

Syntax I: Parsing Natural Language, Treebanks: A Data-Driven Approach to Syntax, Representation of Syntactic Structure, Parsing Algorithms

UNIT-III

Syntax II: Models for Ambiguity Resolution in Parsing, Multilingual Issues

Semantic Parsing I: Introduction, Semantic Interpretation, System Paradigms, Word Sense

UNIT-IV

Semantic Parsing II: Predicate-Argument Structure, Meaning Representation Systems

UNIT-V

Language Modeling: Introduction, N-Gram Models, Language Model Evaluation, Bayesian Parameter Estimation, Language Model Adaptation, Language Models (class-based, variable-length, Bayesian topic-based), Multilingual and Cross-Lingual Language Modeling

TEXT BOOKS:

1. Multilingual Natural Language Processing Applications: From Theory to Practice - Daniel M. Bikel and Imed Zitouni, Pearson Publication.

REFERENCE BOOK:

1. Speech and Natural Language Processing - Daniel Jurafsky & James H. Martin, Pearson Publications.

2. Natural Language Processing and Information Retrieval - Tanveer Siddiqui, U.S. Tiwary.


Unit

1. Finding the Structure of Words

This section deals with words, their structure, and their models.

1.1 Words and Their Components

1.1.1 Tokens

1.1.2 Lexemes

1.1.3 Morphemes

1.1.4 Typology

1.2 Issues and Challenges

1.2.1 Irregularity

1.2.2 Ambiguity

1.2.3 Productivity

1.3 Morphological Models

1.3.1 Dictionary Lookup

1.3.2 Finite-State Morphology

1.3.3 Unification-Based Morphology

1.3.4 Functional Morphology

1.3.5 Morphology Induction

2. Finding the Structure of Documents

This chapter mainly deals with sentence and topic detection, or segmentation.

2.1 Introduction

2.1.1 Sentence Boundary Detection

2.1.2 Topic Boundary Detection

2.2 Methods

This section deals with classical statistical approaches (generative and discriminative).

2.2.1 Generative Sequence Classification Methods

2.2.2 Discriminative Local Classification Methods

2.2.3 Discriminative Sequence Classification Methods

2.2.4 Hybrid Approaches

2.2.5 Extensions for Global Modelling for Sentence Segmentation

2.3 Complexity of the Approaches

2.4 Performance of the Approaches

NATURAL LANGUAGE PROCESSING (NLP)

UNIT - I

1. Finding the Structure of Words:

• Words and Their Components

• Issues and Challenges

• Morphological Models

2. Finding the Structure of Documents:

• Introduction

• Methods

• Complexity of the Approaches

• Performances of the Approaches

Natural Language Processing

Humans communicate through some form of language either by text or speech.

To make interactions between computers and humans, computers need to understand natural languages used by

humans.

Natural language processing is all about making computers learn, understand, analyse, manipulate, and interpret natural (human) languages.

NLP stands for Natural Language Processing, which is a part of Computer Science, Human

language, and Artificial Intelligence.

Processing of natural language is required when you want an intelligent system, such as a robot, to perform as per your instructions, or when you want to hear a decision from a dialogue-based clinical expert system, etc.

The ability of machines to interpret human language is now at the core of many applications that we use every day: chatbots, email classification and spam filters, search engines, grammar checkers, voice assistants, and language translators.

The input and output of an NLP system can be speech or written text.

Components of NLP

There are two components of NLP: Natural Language Understanding (NLU) and Natural Language Generation (NLG).

• Natural Language Understanding (NLU) involves transforming human language into a machine-readable format.

• It helps the machine to understand and analyse human language by extracting information such as keywords, emotions, relations, and semantics from large amounts of text.

• Natural Language Generation (NLG) acts as a translator that converts computerized data into a natural language representation.

• It mainly involves text planning, sentence planning, and text realization.

NLU is harder than NLG.

NLP Terminology

Phonology: The study of organizing sound systematically.

Morphology: The study of the formation and internal structure of words.

Morpheme: The primitive unit of meaning in a language.

Syntax: The study of the formation and internal structure of sentences.

Semantics: The study of the meaning of sentences.

Pragmatics: The study of using and understanding sentences in different situations, and of how the situation affects the interpretation of a sentence.

Discourse: The study of how the immediately preceding sentence can affect the interpretation of the next sentence.

World Knowledge: The general knowledge about the world.

Steps in NLP

There are generally five steps:

1. Lexical Analysis

2. Syntactic Analysis (Parsing)

3. Semantic Analysis

4. Discourse Integration

5. Pragmatic Analysis

Lexical Analysis:

• The first phase of NLP is lexical analysis.

• This phase scans the input text as a stream of characters and converts it into meaningful lexemes.

• It divides the whole text into paragraphs, sentences, and words.
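The division of text into paragraphs, sentences, and words can be illustrated with a minimal Python sketch. It assumes that paragraphs are separated by blank lines, that a sentence ends with ".", "?" or "!" followed by whitespace, and that a word is a run of letters, digits, or apostrophes; the function name `lexical_analysis` is hypothetical. Real tokenizers also handle abbreviations such as "Dr." and other cases this sketch ignores.

```python
import re
from typing import List


def lexical_analysis(text: str) -> List[List[List[str]]]:
    """Divide text into paragraphs, each paragraph into sentences, and each sentence into tokens."""
    paragraphs = re.split(r"\n\s*\n", text.strip())  # a blank line marks a paragraph break
    result = []
    for para in paragraphs:
        # Break after ".", "?" or "!" when whitespace follows (naive: ignores abbreviations).
        sentences = re.split(r"(?<=[.?!])\s+", para.strip())
        # A token is a run of word characters/apostrophes, or a single punctuation mark.
        result.append([re.findall(r"[\w']+|[^\w\s]", s) for s in sentences if s])
    return result
```

For example, `lexical_analysis("Will you read it? I won't read it.\n\nOpen the door.")` yields one paragraph with two tokenized sentences, followed by a second paragraph with one.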

Syntactic Analysis (Parsing):

Syntactic analysis is used to check grammar and word arrangement, and it shows the relationships among the words.

A sentence such as “The school goes to boy” is rejected by an English syntactic analyzer.
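The rejection can be reproduced with a tiny recursive-descent recognizer. The grammar (S → NP VP, NP → Det N, VP → V PP, PP → P NP) and the six-word lexicon are assumptions made only for this illustration. Under this toy grammar, “The school goes to boy” fails because the noun phrase after "to" lacks a determiner. Deciding that a school cannot "go" is a semantic matter, not a syntactic one.

```python
# Toy lexicon assigning one part-of-speech tag to each word (an assumption for illustration).
LEXICON = {"the": "Det", "a": "Det", "boy": "N", "school": "N", "goes": "V", "to": "P"}


def accepts(sentence: str) -> bool:
    """Recognize sentences of the toy grammar S -> NP VP, NP -> Det N, VP -> V PP, PP -> P NP."""
    tags = [LEXICON.get(word.lower()) for word in sentence.split()]
    pos = 0  # index of the next tag to consume

    def expect(tag: str) -> bool:
        nonlocal pos
        if pos < len(tags) and tags[pos] == tag:
            pos += 1
            return True
        return False

    def noun_phrase() -> bool:
        return expect("Det") and expect("N")

    def prep_phrase() -> bool:
        return expect("P") and noun_phrase()

    def verb_phrase() -> bool:
        return expect("V") and prep_phrase()

    # S -> NP VP, and the whole input must be consumed.
    return noun_phrase() and verb_phrase() and pos == len(tags)
```

Here `accepts("The boy goes to the school")` is true, while `accepts("The school goes to boy")` is false.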

Semantic Analysis:

Semantic analysis is concerned with the meaning representation.

It mainly focuses on the literal meaning of words, phrases, and sentences.

The semantic analyzer disregards a sentence such as “hot ice-cream”.

Discourse Integration:

In discourse integration, the meaning of a sentence depends on the sentences that precede it, and it also invokes the meaning of the sentences that follow it.

Pragmatic Analysis:

In this phase, what was said is re-interpreted in terms of what was actually meant.

It involves deriving those aspects of language which require real-world knowledge.

Example: "Open the door" is interpreted as a request instead of an order.

Finding the Structure of Words

Human language is a complicated thing.

We use it to express our thoughts, and through language, we receive information and infer its

meaning.

Trying to understand language all together is not a viable approach.

Linguists have developed whole disciplines that look at language from different perspectives

and at different levels of detail.

The point of morphology, for instance, is to study the variable forms and functions of words.

Syntax is concerned with the arrangement of words into phrases, clauses, and sentences.

Word structure constraints due to pronunciation are described by phonology.

The conventions for writing constitute the orthography of a language.

The meaning of a linguistic expression is its semantics, and etymology and lexicology cover

especially the evolution of words and explain the semantic, morphological, and other links

among them.

Words are perhaps the most intuitive units of language, yet they are in general tricky to define.

Knowing how to work with them allows, in particular, the development of syntactic and

semantic abstractions and simplifies other advanced views on language.

Here, first we explore how to identify words of distinct types in human languages, and how the

internal structure of words can be modelled in connection with the grammatical properties and

lexical concepts the words should represent.

The discovery of word structure is morphological parsing.

In many languages, words are delimited in the orthography by whitespace and

punctuation.

But in many other languages, the writing system leaves it up to the reader to tell words

apart or determine their exact phonological forms.

Words and Their Components

Words are defined in most languages as the smallest linguistic units that can form a

complete utterance by themselves.

The minimal parts of words that deliver aspects of meaning to them are called

morphemes.

Tokens

Suppose, for a moment, that words in English are delimited only by whitespace and punctuation (marks such as the full stop, comma, and brackets).

Example: Will you read the newspaper? Will you read it? I won't read it.

If we confront our assumption with insights from syntax, we notice two problematic words here: newspaper and won't.

Being a compound word, newspaper has an interesting derivational structure.

In writing, newspaper and the associated concept is distinguished from the

isolated news and paper.

For reasons of generality, linguists prefer to analyze won't as two syntactic words, or tokens,

each of which has its independent role and can be reverted to its normalized form.

The structure of won’t could be parsed as will followed by not.

In English, this kind of tokenization and normalization may apply to just a limited set of

cases, but in other languages, these phenomena have to be treated in a less trivial manner.
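For English, the splitting and normalization of won't described above can be sketched in a few lines of Python. The contraction table is a small hand-written assumption: won't and can't are listed explicitly because their first part changes form, and any other word ending in n't is split by a general rule. Only the ASCII apostrophe is handled, and the names `split_contraction` and `tokenize` are hypothetical.

```python
import re
from typing import List

# Contractions whose first part changes form, so they cannot be split by a plain rule.
IRREGULAR = {"won't": ["will", "not"], "can't": ["can", "not"]}


def split_contraction(word: str) -> List[str]:
    """Map one written word to its normalized syntactic words (tokens)."""
    low = word.lower()
    if low in IRREGULAR:
        return IRREGULAR[low]
    if low.endswith("n't") and len(low) > 3:
        return [word[:-3], "not"]  # didn't -> did + not
    return [word]


def tokenize(text: str) -> List[str]:
    """Split text on whitespace/punctuation, then normalize contractions into separate tokens."""
    tokens: List[str] = []
    for chunk in re.findall(r"[\w']+|[^\w\s]", text):
        tokens.extend(split_contraction(chunk))
    return tokens
```

Note that newspaper stays a single token: recognizing it as a compound is a morphological question, not a tokenization one.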

In Arabic or Hebrew, certain tokens are concatenated in writing with the preceding or the

following ones, possibly changing their forms as well.

The underlying lexical or syntactic units are thereby blurred into one compact string of letters

and no longer appear as distinct words.

Tokens behaving in this way can be found in various languages and are often called clitics.

In the writing systems of Chinese, Japanese, and Thai, whitespace is not used to separate

words.

Lexemes

By the term word, we often denote not just the one linguistic form in the given context but also the concept behind the form and the set of alternative forms that can express it.

Such sets are called lexemes or lexical items, and they constitute the lexicon of a language.

Lexemes can be divided by their behaviour into the lexical categories of verbs, nouns, adjectives, conjunctions, particles, or other parts of speech.

The citation form of a lexeme, by which it is commonly identified, is also called its lemma.

When we convert a word into its other forms, such as turning the singular mouse into the plural mice or mouses, we say we inflect the lexeme.

When we transform a lexeme into another one that is morphologically related, regardless of its lexical category, we say we derive the lexeme: for instance, the nouns receiver and reception are derived from the verb to receive.

Example: Did you see him? I didn't see him. I didn't see anyone.

The example presents the problem of tokenizing didn't and of investigating the internal structure of anyone.

In the paraphrase I saw no one, the lexeme to see would be inflected into the form saw to reflect its grammatical function of expressing positive past tense.

Likewise, him is the oblique case form of he or even of a more abstract lexeme representing all personal pronouns.

In the paraphrase, no one can be perceived as the minimal word synonymous with nobody.

The difficulty of defining what counts as a word need not pose a problem for the syntactic description if we understand no one as two closely connected tokens treated as one fixed element.

Morphemes

Morphological theories differ on whether and how to associate the properties of word

forms with their structural components.

These components are usually called segments or morphs.

The morphs that by themselves represent some aspect of the meaning of a word are

called morphemes of some function.

Human languages employ a variety of devices by which morphs and morphemes are

combined into word forms.

Morphology

• Morphology is the domain of linguistics that analyses the internal structure of words.

• Morphological analysis: exploring the structure of words.

• Words are built up of minimal meaningful elements called morphemes:

played = play-ed

cats = cat-s

unfriendly = un-friend-ly

• Two types of morphemes:

i. Stems: play, cat, friend

ii. Affixes: -ed, -s, un-, -ly

• Two main types of affixes:

i. Prefixes precede the stem: un-

ii. Suffixes follow the stem: -ed, -s, -ly

• Stemming = find the stem by stripping off affixes:

play = play

replayed = re-play-ed

computerized = comput-er-ize-d
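Affix stripping can be sketched as a recursive search that peels prefixes and suffixes off a word until a known stem remains. The three small inventories (`PREFIXES`, `SUFFIXES`, `STEMS`) are assumptions chosen to cover the examples above. A practical stemmer, such as a rule-based suffix stripper, works without a stem list and handles spelling changes like the one in computerized, which this sketch does not.

```python
from typing import List, Optional

# Toy morpheme inventories (assumptions for illustration, not a real lexicon).
PREFIXES = ["un", "re", "dis"]
SUFFIXES = ["ed", "s", "ly", "ment"]
STEMS = {"play", "cat", "friend", "agree"}


def segment(word: str) -> Optional[List[str]]:
    """Split word into prefixes + a known stem + suffixes, or return None if no split exists."""
    if word in STEMS:
        return [word]
    for p in PREFIXES:
        if word.startswith(p):
            rest = segment(word[len(p):])
            if rest is not None:
                return [p] + rest
    for s in SUFFIXES:
        if word.endswith(s) and len(word) > len(s):
            rest = segment(word[:-len(s)])
            if rest is not None:
                return rest + [s]
    return None


def stem(word: str) -> Optional[str]:
    """Stemming: the stem is the segment left once every affix has been stripped."""
    parts = segment(word)
    if parts is None:
        return None
    return next(p for p in parts if p in STEMS)
```

For instance, `segment("unfriendly")` gives `["un", "friend", "ly"]`, and `stem("replayed")` gives `"play"`.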

Problems in morphological processing

Inflectional morphology: inflected forms are constructed from base forms and inflectional affixes.

Inflection relates different forms of the same word:

Lemma    Singular    Plural
cat      cat         cats
dog      dog         dogs
knife    knife       knives
sheep    sheep       sheep
mouse    mouse       mice
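The table above can be generated by a few general spelling rules together with an exception list for the forms that no rule captures. This Python sketch is simplified: the rule set and the contents of `IRREGULAR_PLURALS` are assumptions for illustration, and English has further exceptions (for example, chief becomes chiefs, not chieves).

```python
# Lexically dependent exceptions that no general rule captures (illustrative subset).
IRREGULAR_PLURALS = {"mouse": "mice", "sheep": "sheep", "knife": "knives", "child": "children"}


def pluralize(lemma: str) -> str:
    """Inflect an English noun lemma for plural number using simplified spelling rules."""
    if lemma in IRREGULAR_PLURALS:
        return IRREGULAR_PLURALS[lemma]
    if lemma.endswith(("s", "x", "z", "ch", "sh")):
        return lemma + "es"  # box -> boxes
    if len(lemma) > 1 and lemma.endswith("y") and lemma[-2] not in "aeiou":
        return lemma[:-1] + "ies"  # city -> cities, but boy -> boys
    return lemma + "s"  # cat -> cats
```

Keeping irregular forms in a lookup table, checked before the rules, mirrors the distinction between regular and lexically dependent inflection.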

Derivational morphology: words are constructed from roots (or stems) and derivational affixes:

inter+national = international

international+ize = internationalize

internationalize+ation = internationalization

The simplest morphological process concatenates morphs one by one, as in dis-agree-ment-s, where agree is a free lexical morpheme and the other elements are bound grammatical morphemes contributing some partial meaning to the whole word.

In a more complex scheme, morphs can interact with each other, and their forms may become subject to additional phonological changes, termed morphophonemic.

The alternative forms of a morpheme are termed allomorphs.
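Concatenation with one morphophonemic adjustment can be sketched as follows. The rule here is a simplified English spelling rule assumed for illustration: a stem-final "e" is dropped before a vowel-initial suffix, except that a stem ending in "ee" keeps it unless the suffix itself starts with "e". Prefixes are joined by plain concatenation. The function names `attach` and `derive` are hypothetical.

```python
from typing import Sequence

VOWELS = "aeiou"


def attach(stem: str, suffix: str) -> str:
    """Concatenate a suffix, applying a simplified e-deletion (morphophonemic) rule."""
    if suffix and suffix[0] in VOWELS and stem.endswith("e"):
        # agree + ing -> agreeing keeps "ee", but agree + ed -> agreed drops one "e"
        if not stem.endswith("ee") or suffix[0] == "e":
            stem = stem[:-1]
    return stem + suffix


def derive(stem: str, suffixes: Sequence[str] = (), prefixes: Sequence[str] = ()) -> str:
    """Build a word form: prefixes are simply concatenated, suffixes go through attach()."""
    word = "".join(prefixes) + stem
    for s in suffixes:
        word = attach(word, s)
    return word
```

With these definitions, `derive("agree", ["ment", "s"], ["dis"])` returns "disagreements" (pure concatenation), and `derive("national", ["ize", "ation"], ["inter"])` returns "internationalization". In the second case, the "e" of internationalize surfaces as an allomorph without it.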

Typology

Morphological typology divides languages into groups by characterizing the prevalent

morphological phenomena in those languages.

It can consider various criteria, and during the history of linguistics, different classifications

have been proposed.

Let us outline the typology that is based on quantitative relations between words, their

morphemes, and their features:

Isolating, or analytic, languages include no or relatively few words that would comprise more

than one morpheme (typical members are Chinese, Vietnamese, and Thai; analytic tendencies

are also found in English).

Synthetic languages can combine more morphemes in one word and are further

divided into agglutinative and fusional languages.

Agglutinative languages have morphemes associated with only a single function at a

time (as in Korean, Japanese, Finnish, and Tamil, etc.)

Fusional languages are defined by their feature-per-morpheme ratio higher than one

(as in Arabic, Czech, Latin, Sanskrit, German, etc.).

In accordance with the notions about word formation processes mentioned earlier, we can also distinguish between concatenative and nonlinear languages:

Concatenative languages link morphs and morphemes one after another.

Nonlinear languages allow structural components to merge nonsequentially, to apply tonal morphemes, or to change the consonantal or vocalic templates of words.
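Nonlinear (root-and-pattern) morphology can be illustrated by interdigitating a consonantal root with a vocalic template. The example uses the Arabic root k-t-b 'write', which is not in the notes and is included only for illustration. Its patterns yield kataba 'he wrote', kātib 'writer', and maktūb 'written', with long vowels written here as doubled letters. Templates are assumed to mark each root-consonant slot with an uppercase "C".

```python
def interdigitate(root: str, pattern: str) -> str:
    """Fill the consonant slots 'C' of a template with the root consonants, in order."""
    slots = pattern.count("C")
    if slots != len(root):
        raise ValueError(f"pattern {pattern!r} has {slots} slots but root {root!r} has {len(root)} consonants")
    consonants = iter(root)
    # Each 'C' consumes the next root consonant; every other character is copied as-is.
    return "".join(next(consonants) if ch == "C" else ch for ch in pattern)
```

The root never appears as a contiguous substring of the result, which is why purely concatenative models struggle with such languages.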

Morphological Typology

Morphological typology is a way of classifying the languages of the world that groups

languages according to their common morphological structures.

The field organizes languages on the basis of how those languages form words by

combining morphemes.

The morphological typology classifies languages into two broad classes of synthetic languages

and analytical languages.

The synthetic class is then further sub classified as either agglutinative languages or fusional

languages.

Analytic languages contain very little inflection, instead relying on features like word order and

auxiliary words to convey meaning.

Synthetic languages, ones that are not analytic, are divided into two

categories: agglutinative and fusional languages.

Agglutinative languages rely primarily on discrete particles (prefixes, suffixes, and infixes) for inflection, e.g., inter+national = international, international+ize = internationalize.

Fusional languages, by contrast, "fuse" inflectional categories together, often allowing one word ending to contain several categories, such that the original root can be difficult to extract (anybody, newspaper).

Issues and Challenges

Irregularity: word forms are not described by a prototypical linguistic model.

Ambiguity: word forms can be understood in multiple ways out of the context of their discourse.

Productivity: is the inventory of words in a language finite, or is it unlimited?

Morphological parsing tries to eliminate the variability of word forms to provide higher-

level linguistic units whose lexical and morphological properties are explicit and well

defined.

It attempts to remove unnecessary irregularity and give limits to ambiguity, both of

which are present inherently in human language.

By irregularity, we mean existence of such forms and structures that are not described

appropriately by a prototypical linguistic model.

Some irregularities can be understood by redesigning the model and improving its rules, but other lexically dependent irregularities often cannot be generalized.

Ambiguity is indeterminacy in the interpretation of expressions of language.

Morphological modelling also faces the problem of productivity and creativity in language, by

which unconventional but perfectly meaningful new words or new senses are coined.

Irregularity

Morphological parsing is motivated by the quest for generalization and abstraction in the

world of words.

Immediate descriptions of given linguistic data may not be the ultimate ones, due to either

their inadequate accuracy or inappropriate complexity, and better formulations may be

needed.

The design principles of the morphological model are therefore very important.

In Arabic, the deeper study of the morphological processes that are in effect during inflection

and derivation, even for the so-called irregular words, is essential for mastering the whole

morphological and phonological system.

With the proper abstractions made, irregular morphology can be seen as merely enforcing

some extended rules, the nature of which is phonological, over the underlying or prototypical

regular word forms.

[Table: Discovering the regularity of Arabic morphology using morphophonemic templates, where uniform structural operations apply to different kinds of stems.]

In rows, surface forms S of qaraʾa 'to read' and raʾā 'to see' and their inflections are analyzed into immediate I and morphophonemic M templates, in which dashes mark the structural boundaries where merge rules are enforced.

The outer columns of the table correspond to P perfective and I imperfective stems declared in the lexicon; the inner columns treat active verb forms of the following morphosyntactic properties: I indicative, S subjunctive, J jussive mood; 1 first, 2 second, 3 third person; M masculine, F feminine gender; S singular, P plural number.

The table illustrates differences between a naive model of word structure in Arabic and the model proposed in Smrž and in Smrž and Bielický, where morphophonemic merge rules and templates are involved.

Morphophonemic templates capture morphological processes by just organizing stem

patterns and generic affixes without any context-dependent variation of the affixes or ad hoc

modification of the stems.

The merge rules, which are very concise, then ensure that such structured representations can be converted into exactly the surface forms, both orthographic and phonological, used in the natural language.

Applying the merge rules is independent of and irrespective of any grammatical parameters

or information other than that contained in a template.

Most morphological irregularities are thus successfully removed.

Ambiguity

Morphological ambiguity is the possibility that word forms be understood in multiple

ways out of the context of their discourse (communication in speech or writing).

Word forms that look the same but have distinct functions or meanings are called homonyms.

Ambiguity is present in all aspects of morphological processing and language

processing at large.

[Table: Systematic homonyms arise as verbs combined with endings in Korean; the homonyms are arranged on the basis of their behaviour with different endings.]

• Arabic is a language of rich morphology, both derivational and inflectional.

• Because Arabic script usually does not encode short vowels and omits some other diacritical marks that would record the phonological form exactly, the degree of its morphological ambiguity is considerably increased.

• When inflected syntactic words are combined in an utterance, additional phonological and orthographic changes can take place, as shown in the figure.

• In Sanskrit, one such euphony rule is known as external sandhi.

[Figure: Phonological and orthographic changes in combined inflected words. In Arabic, different cases are expressed by the same word form with dirāsatī 'my study' and muʿallimīya 'my teachers', but the original case endings are distinct.]

Productivity

Is the inventory of words in a language finite, or is it unlimited?

This question leads directly to discerning two fundamental approaches to language, summarized in the distinction between langue and parole, or in the competence versus performance duality by Noam Chomsky.

In one view, language can be seen as simply a collection of utterances (parole) actually

pronounced or written (performance).

This ideal data set can in practice be approximated by linguistic corpora, which are finite collections of linguistic data that are studied with empirical methods and can be used for comparison when linguistic models are developed.

Yet, if we consider language as a system (langue), we discover in it structural devices like recursion, iteration, or compounding that allow us to produce (competence) an infinite set of concrete linguistic utterances.

This general potential holds for morphological processes as well and is called

morphological productivity.

We denote the set of word forms found in a corpus of a language as its vocabulary.

The members of this set are word types, whereas every original instance of a word form is a

word token.

The distribution of words or other elements of language follows the “80/20 rule,” also known

as the law of the vital few.

It says that most of the word tokens in a given corpus can be identified with just a couple of

word types in its vocabulary, and words from the rest of the vocabulary occur much less

commonly if not rarely in the corpus.

Furthermore, new, unexpected words will always appear as the collection of linguistic data is

enlarged.
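The vocabulary, the word types, the word tokens, and the share of tokens covered by the most frequent types can be computed directly. This Python sketch assumes lowercase words made of letters and apostrophes. The 20% cut-off is a parameter, and the name `vocabulary_stats` is hypothetical. On a realistic corpus, the coverage figure shows the skewed "vital few" distribution; on a toy sentence, it only demonstrates the arithmetic.

```python
import re
from collections import Counter
from typing import Dict


def vocabulary_stats(text: str, top_fraction: float = 0.2) -> Dict[str, float]:
    """Count word tokens and types, and the share of tokens covered by the most frequent types."""
    tokens = re.findall(r"[a-z']+", text.lower())
    ranked = Counter(tokens).most_common()  # word types sorted by decreasing frequency
    top_k = max(1, round(top_fraction * len(ranked))) if ranked else 0
    covered = sum(count for _, count in ranked[:top_k])
    return {
        "tokens": len(tokens),
        "types": len(ranked),
        "top_types": top_k,
        "coverage": covered / len(tokens) if tokens else 0.0,
    }
```

For "The cat saw the dog and the dog saw the cat.", there are 11 tokens and 5 types. The single most frequent type, "the", covers 4 of the 11 tokens.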

In Czech, negation is a productive morphological operation. Verbs, nouns, adjectives, and

adverbs can be prefixed with ne- to define the complementary lexical concept.

Morphological Models

There are many possible approaches to designing and implementing morphological models.

Over time, computational linguistics has witnessed the development of a number of

formalisms and frameworks, in particular grammars of different kinds and expressive power,

with which to address whole classes of problems in processing natural as well as formal

languages.

Let us now look at the most prominent types of computational approaches to morphology.

Dictionary Lookup

Morphological parsing is a process by which word forms of a language are associated with

corresponding linguistic descriptions.

Morphological systems that specify these associations by merely enumerating them case by case do not offer any generalization means.

Likewise for systems in which analyzing a word form is reduced to looking it up verbatim in

word lists, dictionaries, or databases, unless they are constructed by and kept in sync with

more sophisticated models of the language.

In this context, a dictionary is understood as a data structure that directly enables

obtaining some precomputed results, in our case word analyses.

The data structure can be optimized for efficient lookup, and the results can be

shared. Lookup operations are relatively simple and usually quick.

Dictionaries can be implemented, for instance, as lists, binary search trees, tries, hash

tables, and so on.
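As one of the data structures listed above, a trie can store precomputed analyses keyed by word form. The minimal Python sketch below stores a list of analyses at the node where each form ends, so an ambiguous form such as saw keeps all of its analyses. The class name and the sample analyses are assumptions for illustration.

```python
from typing import Dict, List


class Trie:
    """A character trie mapping word forms to lists of precomputed analyses."""

    _END = None  # key under which a node stores the analyses of the form ending there

    def __init__(self) -> None:
        self.root: Dict = {}

    def insert(self, form: str, analysis: str) -> None:
        node = self.root
        for ch in form:
            node = node.setdefault(ch, {})
        node.setdefault(self._END, []).append(analysis)

    def lookup(self, form: str) -> List[str]:
        """Return all analyses stored for form, or an empty list if it is not in the dictionary."""
        node = self.root
        for ch in form:
            if ch not in node:
                return []
            node = node[ch]
        return list(node.get(self._END, []))
```

After `t.insert("saw", "see +V +PAST")` and `t.insert("saw", "saw +N +SG")`, `t.lookup("saw")` returns both analyses. A lookup of an unlisted form returns nothing, which illustrates the finite coverage of an enumerative model.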

Because the set of associations between word forms and their desired descriptions is

declared by plain enumeration, the coverage of the model is finite and the generative

potential of the language is not exploited.

Despite all that, an enumerative model is often sufficient for the given purpose, deals easily

with exceptions, and can implement even complex morphology.

For instance, dictionary-based approaches to Korean depend on a large dictionary of all

possible combinations of allomorphs and morphological alternations.

These approaches do not allow development of reusable morphological rules, though.

Finite-State Morphology

By finite-state morphological models, we mean those in which the specifications written by

human programmers are directly compiled into finite-state transducers.

The two most popular tools supporting this approach are XFST (Xerox Finite-State Tool) and LexTools.

Finite-state transducers are computational devices extending the power of finite-state

automata.

They consist of a finite set of nodes connected by directed edges labeled with pairs of input

and output symbols.

In such a network or graph, nodes are also called states, while edges are called arcs.

Traversing the network from the set of initial states to the set of final states along the arcs is

equivalent to reading the sequences of encountered input symbols and writing the sequences

of corresponding output symbols.

The set of possible sequences accepted by the transducer defines the input

language; the set of possible sequences emitted by the transducer defines the output

language.

Input     Morphological parsed output
cats      cat +N +PL
cat       cat +N +SG
cities    city +N +PL
geese     goose +N +PL
goose     (goose +N +SG) or (goose +V)
gooses    goose +V +3SG
merging   merge +V +PRES-PART
caught    (catch +V +PAST-PART) or (catch +V +PAST)

[Figure 3.13: A schematic transducer for English nominal number inflection. The symbols above each arc represent elements of the morphological parse on the lexical tape; the symbols below each arc represent the surface (or intermediate) tape, using the morpheme-boundary symbol ^ and the word-boundary symbol #. The labels on the arcs leaving q0 are schematic and need to be expanded by individual words in the lexicon.]

• For example, a finite-state transducer could translate the infinite regular language consisting of the words vnuk, pravnuk, prapravnuk, ... to the matching words in the infinite regular language defined by grandson, great-grandson, great-great-grandson, ...
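The vnuk example can be written as a two-state transducer. One arc loops on the start state, reading pra and writing "great-", and another arc reads vnuk, writes "grandson", and enters the final state. In this Python sketch, arcs carry whole morphs rather than single characters, and the state names are assumptions made for brevity. Swapping the input and output labels of every arc would turn the same network into a generator.

```python
from typing import Dict, Optional, Tuple

# Arcs: (source state, input morph) -> (target state, output string).
ARCS: Dict[Tuple[str, str], Tuple[str, str]] = {
    ("q0", "pra"): ("q0", "great-"),    # loop: each "pra" adds one "great-"
    ("q0", "vnuk"): ("q1", "grandson"),
}
FINAL = {"q1"}


def transduce(word: str, state: str = "q0") -> Optional[str]:
    """Read word left to right along matching arcs; return the output if a final state is reached."""
    output = []
    i = 0
    while i < len(word):
        for (src, label), (dst, emit) in ARCS.items():
            if src == state and word.startswith(label, i):
                state, i = dst, i + len(label)
                output.append(emit)
                break
        else:
            return None  # no arc matches: the word is not in the input language
    return "".join(output) if state in FINAL else None
```

For example, `transduce("prapravnuk")` returns "great-great-grandson", while `transduce("pra")` returns `None` because the input ends outside a final state.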

• In finite-state computational morphology, it is common to refer to the input word forms as surface strings and to the output descriptions as lexical strings, if the transducer is used for morphological analysis, or vice versa, if it is used for morphological generation.

• In English, a finite-state transducer could analyze the surface string children into the lexical string child [+plural], for instance, or generate women from woman [+plural].

• Relations on languages can also be viewed as functions. Let us have a relation R, and let us denote by [Σ] the set of all sequences over some set of symbols Σ, so that the domain and the range of R are subsets of [Σ].

• We can then consider R as a function mapping an input string into a set of output strings, formally denoted by this type signature, where [Σ] equals String:

$$R :: [\Sigma] \to \{[\Sigma]\} \qquad R :: \mathrm{String} \to \{\mathrm{String}\} \tag{1.1}$$
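The function view in (1.1) can be sketched over a finite sample of the relation, using pairs taken from the parse table above. Each input maps to the set of all outputs paired with it. Inverting the pairs gives the generation direction. A real finite-state transducer represents a possibly infinite relation compactly, whereas this enumerated sample does not. The names `as_function`, `analyze`, and `generate` are hypothetical.

```python
from collections import defaultdict
from typing import Callable, Dict, Iterable, Set, Tuple

# A finite sample of the analysis relation (surface form, lexical string).
PAIRS = {
    ("cats", "cat +N +PL"),
    ("goose", "goose +N +SG"),
    ("goose", "goose +V"),
    ("caught", "catch +V +PAST-PART"),
    ("caught", "catch +V +PAST"),
}


def as_function(pairs: Iterable[Tuple[str, str]]) -> Callable[[str], Set[str]]:
    """View a relation R as a function R :: String -> {String}."""
    table: Dict[str, Set[str]] = defaultdict(set)
    for x, y in pairs:
        table[x].add(y)
    return lambda s: set(table.get(s, ()))


analyze = as_function(PAIRS)                      # surface -> lexical
generate = as_function((y, x) for x, y in PAIRS)  # lexical -> surface (inverse relation)
```

Ambiguity shows up as a set with more than one member: `analyze("goose")` yields both the noun and the verb analysis.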

• A theoretical limitation of finite-state models of morphology is the problem of capturing reduplication of words or their elements (e.g., to express plurality) found in several human languages.

• Finite-state technology can be applied to the morphological modeling of isolating and agglutinative languages in a quite straightforward manner. Korean finite-state models are discussed by Kim, Lee and Rim, and Han, to mention a few.

Unification-Based Morphology

The concepts and methods of these formalisms are often closely connected to those

of logic programming.

In finite-state morphological models, both surface and lexical forms are by themselves

unstructured strings of atomic symbols.

In higher-level approaches, linguistic information is expressed by more appropriate

data structures that can include complex values or can be recursively nested if

needed.

Morphological parsing P thus associates linear forms φ with alternatives of structured content ψ, cf.

$$P :: \phi \to \{\psi\} \qquad P :: \mathrm{form} \to \{\mathrm{content}\} \tag{1.2}$$

Erjavec argues that for morphological modelling, word forms are best captured by

regular expressions, while the linguistic content is best described through typed

feature structures.

Feature structures can be viewed as directed acyclic graphs.

A node in a feature structure comprises a set of attributes whose values can be feature structures again.

Nodes are associated with types, and atomic values are attributeless nodes distinguished by their type.

Instead of unique instances of values everywhere, references can be used to establish

value instance identity.

Feature structures are usually displayed as attribute-value matrices or as nested

symbolic expressions.

Unification is the key operation by which feature structures can be merged into a more

informative feature structure.

Unification of feature structures can also fail, which means that the information in them

is mutually incompatible.
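The following Python sketch illustrates unification on feature structures represented as nested dictionaries. It is a simplification under stated assumptions: there are no types and no reentrant references (value sharing), atomic values are plain strings, and the function name `unify` is illustrative.

```python
FAIL = None  # marks a unification failure

def unify(a, b):
    """Merge a and b into one structure holding the information of both,
    or return FAIL if they contain incompatible values."""
    if isinstance(a, dict) and isinstance(b, dict):
        result = dict(a)
        for key, b_val in b.items():
            if key in result:
                merged = unify(result[key], b_val)  # recurse into shared attributes
                if merged is FAIL:
                    return FAIL
                result[key] = merged
            else:
                result[key] = b_val                 # attribute only in b: just add it
        return result
    # atoms (or an atom against a structure) unify only if they are equal
    return a if a == b else FAIL

noun = {"cat": "noun", "agr": {"num": "pl"}}
plural_suffix = {"agr": {"num": "pl", "per": "3"}}
singular = {"agr": {"num": "sg"}}

print(unify(noun, plural_suffix))  # more informative structure
print(unify(noun, singular))       # None: num "pl" clashes with "sg"
```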

Morphological models of this kind are typically formulated as logic programs, and

unification is used to solve the system of constraints imposed by the model.

Advantages of this approach include better abstraction possibilities for developing a

morphological grammar as well as elimination of redundant information from it.

Unification-based models have been implemented for Russian, Czech, Slovene,

Persian, Hebrew, Arabic, and other languages.

Functional Morphology

Functional morphology defines its models using principles of functional programming

and type theory.

It treats morphological operations and processes as pure mathematical functions and

organizes the linguistic as well as abstract elements of a model into distinct types of

values and type classes.

Though functional morphology is not limited to modelling particular types of

morphologies in human languages, it is especially useful for fusional morphologies.

Linguistic notions like paradigms, rules and exceptions, grammatical categories and parameters, lexemes, morphemes, and morphs can be represented intuitively (in a natural way) and succinctly (briefly and clearly) in this approach.

Functional morphology implementations are intended to be reused as programming

libraries capable of handling the complete morphology of a language and to be

incorporated into various kinds of applications.

Morphological parsing is just one usage of the system, the others being

morphological generation, lexicon browsing, and so on.

Besides morphological parsing P, we can describe inflection I, derivation D, and lookup L as functions of generic types.
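To make the functional view concrete, here is a small Python sketch (the frameworks discussed in this section use OCaml and Haskell instead) in which a paradigm is a function from a lemma to its inflection table, and morphological parsing is obtained by inverting generation. The Latin first-declension endings are written without vowel-length marks; the two-word lexicon and the helper names `decl1` and `parse` are hypothetical.

```python
CASES = ["nom", "gen", "dat", "acc", "abl"]
SG_ENDINGS = ["a", "ae", "ae", "am", "a"]
PL_ENDINGS = ["ae", "arum", "is", "as", "is"]

def decl1(lemma: str) -> dict[tuple[str, str], str]:
    """Inflection: lemma -> table mapping (case, number) to a form (lemmas in -a)."""
    stem = lemma[:-1]  # strip the final -a
    table = {}
    for case, sg, pl in zip(CASES, SG_ENDINGS, PL_ENDINGS):
        table[(case, "sg")] = stem + sg
        table[(case, "pl")] = stem + pl
    return table

# Hypothetical lexicon: each lemma is paired with its paradigm function.
LEXICON = {"rosa": decl1, "puella": decl1}

def parse(form: str) -> set[tuple[str, str, str]]:
    """Parsing as inverted generation: form -> {(lemma, case, number)}."""
    return {
        (lemma, case, num)
        for lemma, paradigm in LEXICON.items()
        for (case, num), f in paradigm(lemma).items()
        if f == form
    }

print(decl1("rosa")[("gen", "pl")])
print(sorted(parse("rosae")))
```

The same table serves generation, parsing and lexicon browsing, which is the reuse the functional approach aims for.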

Many functional morphology implementations are embedded in a general-purpose

programming language, which gives programmers more freedom with advanced

programming techniques and allows them to develop full-featured, real-world

applications for their models.

The Zen toolkit for Sanskrit morphology is written in OCaml.

It influenced the functional morphology framework in Haskell, with which

morphologies of Latin, Swedish, Spanish, Urdu, and other languages have been

implemented.

In Haskell, in particular, developers can take advantage of its syntactic flexibility and

design their own notation for the functional constructs that model the given problem.

The notation then constitutes a so-called domain-specific embedded language, which makes programming even

more fun.

Even without the options provided by general-purpose programming languages, functional morphology models

achieve high levels of abstraction.

Morphological grammars in Grammatical Framework can be extended with descriptions of the syntax and

semantics of a language.

Grammatical Framework itself supports multilinguality, and models of more than a dozen languages are available in

it as open-source software.

2. Finding Structure of Documents

2.1 Introduction

In human language, words and sentences do not appear randomly but have structure.

For example, combinations of words form sentences: meaningful grammatical units such as statements, requests, and commands.

Automatic extraction of structure of documents helps subsequent NLP tasks: for example,

parsing, machine translation, and semantic role labelling use sentences as the basic

processing unit.

Sentence boundary annotation (labelling) is also important for aiding human readability of the output of automatic speech recognition (ASR) systems.

The task of deciding where sentences start and end, given a sequence of characters (made of words and typographical cues), is called sentence boundary detection.

Topic segmentation is the task of determining when a topic starts and ends in a sequence of sentences.

For segmentation, the statistical classification approaches described here try to find the presence of sentence and topic boundaries given human-annotated training data.

These methods base their predictions on features of the input: local characteristics that give evidence toward the presence or absence of a sentence boundary, such as a period (.), a question mark (?), an exclamation mark (!), or another type of punctuation.

Features are the core of classification approaches and require careful design and selection in order to be successful and to prevent overfitting and noise problems.

Although most statistical approaches described here are language independent, every language is challenging in its own way.

For example, for processing of Chinese documents, the processor may need to first segment

the character sequences into words, as the words usually are not separated by a space.

Similarly, for morphologically rich languages, the word structure may need to be analyzed to extract additional features.

Such processing is usually done in a pre-processing step, where a sequence of tokens is determined.

Tokens can be words or sub-word units, depending on the task and language.

These algorithms are then applied to tokens.

2.1.1 Sentence Boundary Detection

Sentence boundary detection (Sentence segmentation) deals with automatically segmenting

a sequence of word tokens into sentence units.

In written text in English and some other languages, the beginning of a sentence is usually

marked with an uppercase letter, and the end of a sentence is explicitly marked with a

period(.), a question mark(?), an exclamation mark(!), or another type of punctuation.

In addition to their role as sentence boundary markers, capitalized initial letters are used to distinguish proper nouns, periods are used in abbreviations, and numbers and punctuation marks are used inside proper names.

• The period at the end of an abbreviation can mark a sentence boundary at the same time.

Example: "I spoke with Dr. Smith." and "My house is on Mountain Dr."

In the first sentence, the abbreviation Dr. does not end a sentence, and in the second it does.

Quoted sentences are especially problematic, as the speakers may have uttered multiple sentences, and sentence boundaries inside the quotes are also marked with punctuation marks.

An automatic method that outputs word boundaries as ending sentences according to the

presence of such punctuation marks would result in cutting some sentences incorrectly.

Ambiguous abbreviations and capitalization are not the only problems of sentence segmentation in written text.

Spontaneously written texts, such as short message service (SMS) texts or instant messaging (IM) texts, tend to be nongrammatical and have poorly used or missing punctuation, which makes sentence segmentation even more challenging.

Similarly, if the text input to be segmented into sentences comes from an automatic system, such as optical character recognition (OCR) or ASR, that aims to translate images of handwritten, typewritten, or printed text or spoken utterances into machine-editable text, the finding of sentence boundaries must deal with the errors of those systems as well.

On the other hand, for conversational speech or text or multiparty meetings with

ungrammatical sentences and disfluencies, in most cases it is not clear where the boundaries

are.

Code switching, that is, the use of words, phrases, or sentences from multiple languages by multilingual speakers, is another problem that can affect the characteristics of sentences.

For example, when switching to a different language, the writer can either keep the

punctuation rules from the first language or resort to the code of the second language.

Conventional rule-based sentence segmentation systems in well-formed texts rely on patterns

to identify potential ends of sentences and lists of abbreviations for disambiguating them.

For example, if the word before the boundary is a known abbreviation, such as "Mr." or "Gov.," the text is not segmented at that position, even though some of these periods do in fact end a sentence.
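A minimal sketch of such a rule-based segmenter in Python, assuming whitespace tokenization and a small hypothetical abbreviation list. The second call shows the weakness discussed above: a sentence that ends with the abbreviation "Dr." is not split.

```python
import re

# Hypothetical abbreviation list; real systems use much larger lists.
ABBREVIATIONS = {"Mr.", "Mrs.", "Dr.", "Gov.", "Prof."}

def split_sentences(text: str) -> list[str]:
    """Split after '.', '?' or '!' unless the token ending there is a known abbreviation."""
    sentences, current = [], []
    for token in text.split():
        current.append(token)
        if re.search(r"[.?!]$", token) and token not in ABBREVIATIONS:
            sentences.append(" ".join(current))
            current = []
    if current:  # trailing text without final punctuation
        sentences.append(" ".join(current))
    return sentences

print(split_sentences("I spoke with Dr. Smith. It went well!"))
print(split_sentences("My house is on Mountain Dr. It is big."))  # boundary after "Dr." missed
```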

To improve on such a rule-based approach, sentence segmentation is stated as a classification

problem.

Given the training data where all sentence boundaries are marked, we can train a classifier to

recognize them.

2.1.2 Topic Boundary Detection

Topic segmentation (discourse or text segmentation) is the task of automatically dividing a stream of text or speech into topically homogeneous blocks.

That is, given a sequence of (written or spoken) words, the aim of topic segmentation is to find the boundaries where topics change.

Topic segmentation is an important task for various language understanding applications, such

as information extraction and retrieval and text summarization.

For example, in information retrieval, if a long document can be segmented into shorter, topically coherent segments, then only the segment that is about the user's query could be retrieved.

In the late 1990s, the U.S. Defense Advanced Research Projects Agency (DARPA) initiated the Topic Detection and Tracking (TDT) program to further the state of the art in finding and following new topics in a stream of broadcast news stories.

One of the tasks in the TDT effort was segmenting a news stream into individual stories.

2.2 Methods

Sentence segmentation and topic segmentation have been considered as a boundary

classification problem.

Given a boundary candidate (between two word tokens for sentence segmentation and between two sentences for topic segmentation), the goal is to predict whether or not the candidate is an actual boundary (sentence or topic boundary).

Formally, let $x \in X$ be the vector of features (the observation) associated with a candidate and $y \in Y$ be the label predicted for that candidate.

The label $y$ can be $b$ for boundary and $\bar{b}$ for nonboundary.

Classification problem: given a set of training examples $(x, y)_{train}$, find a function that will assign the most accurate possible label $y$ to unseen examples $x_{unseen}$.
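As an illustration of what a training example (x, y) might look like for sentence segmentation, the sketch below turns every token ending in '.', '?' or '!' into a candidate with a small feature dictionary. The feature names, the abbreviation list, and the label strings "b"/"nb" (standing in for b and b̄) are assumptions for illustration only.

```python
# Hypothetical abbreviation list used by one of the features.
ABBREVIATIONS = {"Dr.", "Mr.", "Gov."}

def candidates(tokens, gold_boundaries=None):
    """Yield (x, y) for every token ending in '.', '?' or '!'.

    x is a feature dict describing the candidate; y is "b" or "nb" when the
    indices of true boundary tokens are given (training data), else None.
    """
    for i, tok in enumerate(tokens):
        if tok[-1] not in ".?!":
            continue  # not a boundary candidate
        nxt = tokens[i + 1] if i + 1 < len(tokens) else ""
        x = {
            "punct": tok[-1],
            "is_abbrev": tok in ABBREVIATIONS,
            "next_capitalized": nxt[:1].isupper(),
            "at_end": nxt == "",
        }
        y = None
        if gold_boundaries is not None:
            y = "b" if i in gold_boundaries else "nb"
        yield x, y

tokens = "I spoke with Dr. Smith. He left.".split()
for x, y in candidates(tokens, gold_boundaries={4, 6}):
    print(y, x)
```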

Alternatively to the binary classification problem, it is possible to model boundary types using

finer-grained categories.

For example, sentence segmentation in text can be framed as a three-class problem: sentence boundary without an abbreviation $b^{\bar{a}}$, sentence boundary with an abbreviation $b^{a}$, and abbreviation not as a boundary $\bar{b}^{a}$.

Similarly, for spoken language, a three-way classification can be made between non-boundaries $\bar{b}$, statement boundaries $b^{s}$, and question boundaries $b^{q}$.

For sentence or topic segmentation, the problem is defined as finding the most probable

sentence or topic boundaries.

The natural unit of sentence segmentation is the word and that of topic segmentation is the sentence, as we can assume that topics typically do not change in the middle of a sentence.

The words or sentences are then grouped into stretches belonging to one sentence or topic; that is, word or sentence boundaries are classified into sentence or topic boundaries and non-boundaries.

The classification can be done at each potential boundary i (local modelling); then, the aim is to estimate the most probable boundary type $\hat{y}_i$ for each candidate $x_i$:

$$\hat{y}_i = \arg\max_{y_i \in Y} P(y_i \mid x_i) \qquad (2.1)$$

Here, the hat (^) is used to denote estimated categories, and a variable without a hat is used to show possible categories.

In this formulation, a category is assigned to each example in isolation; hence, the decision is made locally.

However, consecutive types can be related to each other. For example, in broadcast news speech, two consecutive sentence boundaries that form a single-word sentence are very infrequent.

In local modelling, features can be extracted from the context surrounding the candidate boundary to model such dependencies.

It is also possible to see the candidate boundaries as a sequence and search for the sequence of boundary types $\hat{Y} = \hat{y}_1, \ldots, \hat{y}_n$ that has the maximum probability given the candidate examples $X = x_1, \ldots, x_n$:

$$\hat{Y} = \arg\max_{Y} P(Y \mid X) \qquad (2.2)$$
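The difference between the local decision rule (2.1) and the sequence decision rule (2.2) can be seen in a toy Python example. The local posteriors and the pairwise factor that discourages two adjacent boundaries (the single-word-sentence case mentioned above) are made-up numbers, and the sequence score is an unnormalized product proportional to P(Y | X) in this toy model. Brute-force search over all label sequences is only feasible for very short inputs.

```python
from itertools import product

LABELS = ("b", "nb")

# Hypothetical local posteriors P(y_i = b | x_i) for three consecutive candidates.
p_boundary = [0.6, 0.7, 0.2]

def transition(prev, cur):
    """Hypothetical pairwise factor: two adjacent boundaries are rare."""
    return 0.1 if prev == cur == "b" else 1.0

def local_prob(i, y):
    return p_boundary[i] if y == "b" else 1.0 - p_boundary[i]

def local_decode():
    """Equation 2.1: choose the best label for each candidate in isolation."""
    return [max(LABELS, key=lambda y: local_prob(i, y)) for i in range(len(p_boundary))]

def sequence_score(ys):
    """Unnormalized score proportional to P(Y | X) in this toy model."""
    score = 1.0
    for i, y in enumerate(ys):
        score *= local_prob(i, y)
        if i > 0:
            score *= transition(ys[i - 1], y)
    return score

def sequence_decode():
    """Equation 2.2 by brute force over all 2^n label sequences."""
    return list(max(product(LABELS, repeat=len(p_boundary)), key=sequence_score))

print(local_decode())
print(sequence_decode())
```

Locally the first two candidates are both labelled b, but the sequence view trades the weaker of them away to avoid an implausible one-word sentence.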

We categorize the methods into local and sequence classification.

Another categorization of methods is done according to the type of the machine learning algorithm: generative versus

discriminative.

Generative sequence models estimate the joint distribution P(X, Y) of the observations X (words, punctuation) and the labels Y (sentence boundary, topic boundary).

Discriminative sequence models, however, focus on features that categorize the differences between the labellings of the examples.

2.2.1 Generative Sequence Classification Methods

• The most commonly used generative sequence classification method for topic and sentence segmentation is the hidden Markov model (HMM).

• The probability in equation 2.2 is rewritten as the following, using Bayes' rule:

$$\hat{Y} = \arg\max_{Y} P(Y \mid X) = \arg\max_{Y} \frac{P(X \mid Y)\,P(Y)}{P(X)} = \arg\max_{Y} P(X \mid Y)\,P(Y) \qquad (2.3)$$

Here $\hat{Y}$ = predicted sequence of class (boundary) labels

$Y = (y_1, y_2, \ldots, y_n)$ = sequence of class (boundary) labels

$X = (x_1, x_2, \ldots, x_n)$ = sequence of feature vectors

$P(Y \mid X)$ = the probability that, given the feature vectors X, the class (boundary) labels are Y

$P(X)$ = probability of the word (feature) sequence

$P(Y)$ = probability of the class (boundary) label sequence

$\hat{Y} = \arg\max_{Y} P(Y \mid X) = \arg\max_{Y} P(X \mid Y)\,P(Y)$

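• P(X) in the denominator is dropped because it is fixed for different Y and hence does not change the argmax.

• Under the HMM's first-order Markov and output-independence assumptions, P(X|Y) and P(Y) can be estimated as

$$P(X \mid Y) = \prod_{i=1}^{n} P(x_i \mid y_i) \qquad (2.4)$$

and

$$P(Y) = \prod_{i=1}^{n} P(y_i \mid y_{i-1}) \qquad (2.5)$$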
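The HMM decoding step can be sketched with the Viterbi algorithm, which finds the argmax over Y of P(X | Y) P(Y) in time linear in the sequence length instead of enumerating all sequences. This sketch assumes the first-order factorization P(X | Y) = ∏ P(x_i | y_i) and P(Y) = ∏ P(y_i | y_{i-1}), reduces each observation to a coarse token class ("word" or "period"), and uses made-up probabilities; it works in log space to avoid underflow.

```python
import math

STATES = ("nb", "b")  # nb = no boundary after the token, b = boundary
# Hypothetical parameters; in practice they are estimated from annotated data.
START = {"nb": 0.8, "b": 0.2}                 # P(y_1)
TRANS = {"nb": {"nb": 0.7, "b": 0.3},         # P(y_i | y_{i-1})
         "b":  {"nb": 0.9, "b": 0.1}}
EMIT = {"nb": {"word": 0.9, "period": 0.1},   # P(x_i | y_i)
        "b":  {"word": 0.2, "period": 0.8}}

def viterbi(obs):
    """Return the label sequence maximizing P(X|Y) P(Y) under the HMM."""
    # best[s] = log-probability of the best path ending in state s
    best = {s: math.log(START[s]) + math.log(EMIT[s][obs[0]]) for s in STATES}
    backptrs = []
    for x in obs[1:]:
        new_best, ptr = {}, {}
        for s in STATES:
            prev = max(STATES, key=lambda p: best[p] + math.log(TRANS[p][s]))
            new_best[s] = best[prev] + math.log(TRANS[prev][s]) + math.log(EMIT[s][x])
            ptr[s] = prev
        best = new_best
        backptrs.append(ptr)
    # follow the back-pointers from the best final state
    state = max(STATES, key=best.get)
    path = [state]
    for ptr in reversed(backptrs):
        state = ptr[state]
        path.append(state)
    return path[::-1]

print(viterbi(["word", "word", "period", "word", "period"]))
```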

2.2.2 Discriminative Local Classification Methods

Discriminative classifiers aim to model $P(y_i \mid x_i)$ in equation 2.1 directly.

The most important distinction is that whereas class densities P(x|y) are model assumptions

in generative approaches, such as naive Bayes, in discriminative methods, discriminant

functions of the feature space define the model.

A number of discriminative classification approaches, such as support vector machines, boosting, maximum entropy, and regression, are based on very different machine learning algorithms.

Discriminative approaches have been shown to outperform generative methods in many speech and language processing tasks.

For sentence segmentation, supervised learning methods have primarily been applied to

newspaper articles.

Stamatatos, Fakotakis and Kokkinakis used transformation based learning (TBL) to infer rules

for finding sentence boundaries.

Many classifiers have been tried for the task: regression trees, neural networks, classification

trees, maximum entropy classifiers, support vector machines, and naive Bayes classifiers.
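A discriminative local classifier can be sketched as binary logistic regression, which is the two-class case of a maximum entropy model: it models P(y | x) directly, with no model of how x is generated. The three binary features, the four training examples, and the learning-rate and epoch settings below are hypothetical; real systems use many more features and a library optimizer.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def p_boundary(w, x):
    """Model P(y = boundary | x) directly, as a discriminative classifier does."""
    return sigmoid(sum(wi * xi for wi, xi in zip(w, x)))

def train_logreg(data, lr=0.5, epochs=1000):
    """Batch gradient ascent on the average conditional log-likelihood of the labels."""
    w = [0.0] * len(data[0][0])
    for _ in range(epochs):
        grad = [0.0] * len(w)
        for x, y in data:
            err = y - p_boundary(w, x)  # derivative of log P(y | x) w.r.t. the linear score
            for j, xj in enumerate(x):
                grad[j] += err * xj
        w = [wj + lr * gj / len(data) for wj, gj in zip(w, grad)]
    return w

# Hypothetical features: [bias, known_abbreviation, next_token_capitalized]
# Labels: 1 = sentence boundary, 0 = no boundary
TRAIN = [
    ([1, 0, 1], 1),  # e.g. "... Smith. He ..."
    ([1, 1, 1], 0),  # e.g. "... Dr. Smith ..."
    ([1, 1, 0], 0),  # e.g. "... vs. the ..."
    ([1, 0, 0], 0),  # e.g. "... approx. ten ..." (abbreviation missing from the list)
]

w = train_logreg(TRAIN)
print(round(p_boundary(w, [1, 0, 1]), 2))  # estimated P(boundary) for a new candidate
```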

The TextTiling method of Hearst for topic segmentation uses a lexical cohesion metric in a word vector space as an indicator of topic similarity.

Figure 2-4 depicts a typical graph of similarity with respect to consecutive segmentation units.

Figure 2-4. TextTiling example (from [22])

• The document is chopped when the similarity is below some threshold.

Originally, two methods for computing the similarity scores were proposed: block

comparison and vocabulary introduction.

The first, block comparison, compares adjacent blocks of text to see how similar they are

according to how many words the adjacent blocks have in common.

Given two blocks, $b_1$ and $b_2$, each having k tokens (sentences or paragraphs), the similarity (or topical cohesion) score is computed by the formula:

$$\text{score}(b_1, b_2) = \frac{\sum_t w_{t,b_1}\, w_{t,b_2}}{\sqrt{\sum_t w_{t,b_1}^2 \; \sum_t w_{t,b_2}^2}}$$

where $w_{t,b}$ is the weight assigned to term t in block b.

The weights can be binary or may be computed using other information retrieval metrics such as term frequency.
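A simplified Python sketch of block comparison, assuming term-frequency weights and the cosine form score(b1, b2) = Σ_t w_{t,b1} w_{t,b2} / sqrt(Σ_t w_{t,b1}² · Σ_t w_{t,b2}²). Each gap between segmentation units is scored with k units on either side, and a gap is proposed as a boundary when the score falls below a fixed threshold, as described above; Hearst's full TextTiling algorithm additionally smooths the scores and uses depth scores rather than a raw threshold. The toy sentences, k, and the threshold are assumptions.

```python
import math
from collections import Counter

def block_similarity(b1, b2):
    """Cosine similarity of the term-frequency vectors of two token lists."""
    w1, w2 = Counter(b1), Counter(b2)
    num = sum(w1[t] * w2[t] for t in w1.keys() & w2.keys())
    den = math.sqrt(sum(v * v for v in w1.values()) * sum(v * v for v in w2.values()))
    return num / den if den else 0.0

def gap_scores(units, k):
    """Score the gap before unit i (i = 1..n-1) using up to k units on each side."""
    scores = []
    for i in range(1, len(units)):
        left = [tok for u in units[max(0, i - k):i] for tok in u]
        right = [tok for u in units[i:i + k] for tok in u]
        scores.append(block_similarity(left, right))
    return scores

def boundaries(units, k=2, threshold=0.1):
    """Indices i such that a topic boundary is proposed before unit i."""
    return [i + 1 for i, s in enumerate(gap_scores(units, k)) if s < threshold]

units = [s.split() for s in [
    "cats purr softly", "cats chase mice", "mice fear cats",
    "stocks fell sharply", "investors sold stocks", "stocks markets fell",
]]
print(boundaries(units))  # gap between the cat sentences and the stock sentences
```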

The second, the vocabulary introduction method, assigns a score to a token-sequence gap

on the basis of how many new words are seen in the interval in which it is the midpoint.

Similar to the block comparison formulation, given two consecutive blocks $b_1$ and $b_2$ of equal number of words w, the topical cohesion score is computed with the following formula:

$$\text{score}(b_1, b_2) = \frac{\text{NumNewTerms}(b_1) + \text{NumNewTerms}(b_2)}{2w}$$

where NumNewTerms(b) returns the number of terms in block b seen for the first time in the text.
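The vocabulary introduction score can be sketched the same way, assuming gap candidates every w tokens and scoring each gap from the w tokens on either side: score = (NumNewTerms(b1) + NumNewTerms(b2)) / (2w). A high score means many terms appear for the first time near the gap, which suggests a topic change. The token sequence below is a made-up example.

```python
def new_terms_scores(tokens, w):
    """Map each gap position (w, 2w, ...) to its vocabulary-introduction score."""
    seen, is_new = set(), []
    for tok in tokens:
        is_new.append(tok not in seen)  # True the first time a term appears in the text
        seen.add(tok)
    scores = {}
    for gap in range(w, len(tokens) - w + 1, w):
        b1, b2 = is_new[gap - w:gap], is_new[gap:gap + w]
        scores[gap] = (sum(b1) + sum(b2)) / (2 * w)
    return scores

scores = new_terms_scores("a b a b c d e f c d".split(), w=2)
print(scores)
print(max(scores, key=scores.get))  # gap with the most newly introduced vocabulary
```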

2.2.3 Discriminative Sequence Classification Methods

In segmentation tasks, the sentence or topic decision for a given example(word, sentence,

paragraph) highly depends on the decision for the examples in its vicinity.

Discriminative sequence classification methods are in general extensions of local discriminative models with additional decoding stages that find the best assignment of labels by looking at neighbouring decisions.

Conditional random fields (CRFs) are an extension of maximum entropy, SVM^struct is an extension of SVMs, and maximum margin Markov networks (M3N) are extensions of HMMs.

CRFs are a class of log-linear models for labelling structures.

Contrary to local classifiers that predict sentence or topic boundaries independently, CRFs can consider the whole sequence of boundary hypotheses to make their decisions.

Complexity of the Approaches

The approaches described here have advantages and disadvantages.

In a given context and under a set of observation features, one approach may be better than another.

These approaches can be rated in terms of complexity (time and memory) of their training

and prediction algorithms and in terms of their performance on real-world datasets.

In terms of complexity, training of discriminative approaches is more complex than training of generative ones because they require multiple passes over the training data to adjust feature weights.

However, generative models such as hidden event language models (HELMs) can handle training sets that are multiple orders of magnitude larger and benefit, for instance, from decades of newswire transcripts.

On the other hand, they work with only a few features (only words for HELMs) and do not cope well with unseen events.

1. List and explain the challenges of morphological models. Mar 2021 [7]

2. Discuss the importance and goals of Natural Language Processing. Mar 2021 [8]

3. List the applications and challenges in NLP. Sep 2021 [7]

4. Explain any one morphological model. Sep 2021 [8]

5. Discuss the challenging issues of morphological models. Sep 2021 [7]

6. Differentiate between surface and deep structure in NLP with suitable examples. Sep 2021 [8]

7. Give some examples of early NLP systems. Sep 2021 [7]

8. Explain the performance of approaches in finding the structure of documents. Sep 2021 [15]

9. With the help of a neat diagram, explain the representation of syntactic structure. Mar 2021 [8]

10. Elaborate the models for ambiguity resolution in parsing. Mar 2021 [7]

11. Explain various types of parsers in NLP. Sep 2021 [8]

12. Discuss multilingual issues in detail. Sep 2021 [7]

13. Given the grammar S->AB|BB, A->CC|AB|a, B->BB|CA|b, C->BA|AA|b, and the word w = 'aabb', apply top-down parsing to test whether the word can be generated or not. Sep 2021 [8]

14. Explain treebanks and their role in parsing. Sep 2021 [7]

Code No:    R18

JAWAHARLAL NEHRU TECHNOLOGICAL UNIVERSITY HYDERABAD

B.Tech III Year I Semester Examinations, March - 2021

NATURAL LANGUAGE PROCESSING

(Computer Science and Engineering)

Max. Marks: 75

List the applications in NLP.

Applications of NLP:

• Information retrieval & web search
• Grammar correction & question answering
• Sentiment analysis
• Text classification
• Chatbots & virtual assistants
• Text extraction
• Machine translation
• Text summarization
• Market intelligence
• Auto-correct

Discuss the importance and goals of Natural Language Processing.

Natural Language Processing

Unit-II

Syntax Analysis:

2.1 Parsing Natural Language

2.2 Treebanks: A Data-Driven Approach to Syntax

2.3 Representation of Syntactic Structure

2.4 Parsing Algorithms

2.5 Models for Ambiguity Resolution in Parsing, Multilingual Issues

• Parsing in NLP is the process of determining the syntactic structure of a text by analysing its constituent words based on an underlying grammar.

Example Grammar:

sentence -> noun_phrase, verb_phrase

noun_phrase -> proper_noun

noun_phrase -> determiner, noun

verb_phrase -> verb, noun_phrase

proper_noun -> [Tom]

noun -> [apple]

verb -> [ate]

determiner -> [an]

• Then, the outcome of parsing "Tom ate an apple" would be a parse tree, where sentence is the root, intermediate nodes such as noun_phrase, verb_phrase etc. have children, hence they are called non-terminals, and finally, the leaves of the tree 'Tom', 'ate', 'an', 'apple' are called terminals.

Parse Tree:

[sentence [noun_phrase [proper_noun 'Tom']] [verb_phrase [verb 'ate'] [noun_phrase [determiner 'an'] [noun 'apple']]]]
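A minimal top-down (recursive-descent) parser for the example grammar, written in Python under the assumption that the grammar is the one reflected in the tree (sentence -> noun_phrase verb_phrase, and so on). Trees are nested tuples (label, children) with words as leaves. Backtracking through generators returns every complete parse; this simple strategy would loop forever on left-recursive rules, which the example grammar does not have.

```python
# Non-terminal -> list of alternative right-hand sides; any symbol that is not
# a key of GRAMMAR is treated as a terminal word.
GRAMMAR = {
    "sentence": [["noun_phrase", "verb_phrase"]],
    "noun_phrase": [["proper_noun"], ["determiner", "noun"]],
    "verb_phrase": [["verb", "noun_phrase"]],
    "proper_noun": [["Tom"]],
    "noun": [["apple"]],
    "verb": [["ate"]],
    "determiner": [["an"]],
}

def parse(symbol, words, i):
    """Yield (tree, next_index) for every way `symbol` can derive words starting at i."""
    if symbol not in GRAMMAR:  # terminal: must match the next word
        if i < len(words) and words[i] == symbol:
            yield symbol, i + 1
        return
    for rhs in GRAMMAR[symbol]:  # expand each production top-down
        for children, j in parse_seq(rhs, words, i):
            yield (symbol, children), j

def parse_seq(symbols, words, i):
    """Yield (list_of_subtrees, next_index) for a sequence of symbols."""
    if not symbols:
        yield [], i
        return
    for first, j in parse(symbols[0], words, i):
        for rest, k in parse_seq(symbols[1:], words, j):
            yield [first] + rest, k

def parses(sentence):
    """All trees rooted in 'sentence' that cover the whole input."""
    words = sentence.split()
    return [tree for tree, j in parse("sentence", words, 0) if j == len(words)]

print(parses("Tom ate an apple"))
```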

A treebank can be defined as a linguistically annotated corpus that includes some kind of

syntactic analysis over and above part-of-speech tagging.

A sentence is parsed by relating each word to other words in the sentence which depend on it.

The syntactic parsing of a sentence consists of finding the correct syntactic structure of that

sentence in the given formalism/grammar.

Dependency grammar (DG) and phrase structure grammar(PSG) are two such formalisms.

PSG breaks a sentence into constituents (phrases), which are then broken into smaller constituents.

It describes phrase and clause structure, for example NP, PP, VP, etc.

DG: the syntactic structure consists of lexical items, linked by binary asymmetric relations called dependencies.

DG is interested in grammatical relations between individual words.

It does not propose a recursive structure but rather a network of relations.

These relations can also have labels.

Constituency tree vs. dependency tree

• Dependency structures explicitly represent
- Head-dependent relations (directed arcs)
- Functional categories (arc labels)
- Possibly some structural categories (POS)

• Phrase structures explicitly represent
- Phrases (non-terminal nodes)
- Structural categories (non-terminal labels)
- Possibly some functional categories (grammatical functions)

Dependency parsing involves:

• Defining candidate dependency trees for an input sentence
• Learning: scoring possible dependency graphs for a given sentence, usually by factoring the graphs into their component arcs
• Parsing: searching for the highest scoring graph for a given sentence
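The three steps can be sketched for "Tom ate an apple" with an arc-factored model, in which a tree's score is the sum of the scores of its arcs. The arc scores below are made up rather than learned, and the search is brute force over all head assignments, which is only feasible for very short sentences; practical parsers use dedicated algorithms such as Eisner's or Chu-Liu/Edmonds' instead.

```python
from itertools import product

words = ["<ROOT>", "Tom", "ate", "an", "apple"]  # index 0 is an artificial root

# Hypothetical arc scores for (head, dependent); a trained model would supply these.
SCORES = {
    (0, 2): 10,  # ROOT -> ate
    (2, 1): 8,   # ate -> Tom
    (2, 4): 8,   # ate -> apple
    (4, 3): 7,   # apple -> an
    (1, 2): 3, (4, 2): 2, (2, 3): 2, (0, 1): 1, (0, 4): 1, (1, 4): 1,
}

def arc_score(h, d):
    return SCORES.get((h, d), 0)

def is_tree(heads):
    """Candidate definition: heads[d-1] is the head of word d; exactly one word
    attaches to ROOT and every word reaches ROOT without a cycle."""
    if sum(1 for h in heads if h == 0) != 1:
        return False
    for d in range(1, len(heads) + 1):
        seen, node = set(), d
        while node != 0:
            if node in seen:
                return False
            seen.add(node)
            node = heads[node - 1]
    return True

def best_tree(n):
    """Parsing: search all head assignments for the highest arc-factored score."""
    candidates = (
        heads for heads in product(range(n + 1), repeat=n)
        if all(h != d for d, h in enumerate(heads, start=1)) and is_tree(heads)
    )
    return max(candidates, key=lambda hs: sum(arc_score(h, d) for d, h in enumerate(hs, start=1)))

heads = best_tree(len(words) - 1)
for d, h in enumerate(heads, start=1):
    print(f"{words[h]} -> {words[d]}")
```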

Syntax

In NLP, the syntactic analysis of natural language input can vary from being very low-level, such as simply tagging each word in the sentence with a part of speech (POS), to very high-level, such as full parsing.

In syntactic parsing, ambiguity is a particularly difficult problem because the most plausible analysis has to be chosen from an exponentially large number of alternative analyses.

From tagging to full parsing, algorithms that can handle such ambiguity have to be carefully chosen.

Here we explore the syntactic analysis methods from tagging to full parsing and the use of supervised machine learning to deal with ambiguity.

2.1 Parsing Natural Language

In a text-to-speech application, input sentences are to be converted to a spoken output that should sound like it was spoken by a native speaker of the language.

Example: He wanted to go for a drive in the country.

There is a natural pause between the words drive and in in this sentence that reflects an underlying hidden structure to the sentence.

Parsing can provide a structural description that identifies such a break in the intonation.

• A simpler case: The cat who lives dangerously had nine lives.

In this case, a text-to-speech system needs to know that the first instance of the word lives is

a verb and the second instance is a noun before it can begin to produce the natural

intonation for this sentence.

This is an instance of the part-of-speech (POS) tagging problem, where each word in the sentence is assigned its most likely part of speech.

Another motivation for parsing comes from the natural language task of summarization, in

which several documents about the same topic should be condensed down to a small digest

of information.

Such a summary may be in response to a question that is answered in the set of documents.

In this case, a useful subtask is to compress an individual sentence so that only the relevant portions of the sentence are included in the summary.

For example:

Beyond the basic level, the operations of the three products vary widely.

The operations of the products vary.

An elegant way to approach this task is to first parse the sentence to find the various constituents, where we recursively partition the words in the sentence into individual phrases such as a verb phrase or a noun phrase.

• The output of the parser for the input sentence is shown in Fig.


• Another example is paraphrasing.

• In the sentence fragment below, the capitalized phrase EUROPEAN COUNTRIES can be replaced with other phrases without changing the essential meaning of the sentence.

• A few examples of replacement phrases are shown in the sentence fragments:

Open borders imply increasing racial fragmentation in EUROPEAN COUNTRIES.

Open borders imply increasing racial fragmentation in the countries of Europe.

Open borders imply increasing racial fragmentation in European states.

Open borders imply increasing racial fragmentation in Europe.

Open borders imply increasing racial fragmentation in European nations.

Open borders imply increasing racial fragmentation in European countries.

• In contemporary NLP, syntactic parsers are routinely used in many applications, including but not limited to statistical machine translation, information extraction from text collections, language summarization, producing entity grids for language generation, and error correction in text.

2.2 Treebanks: A Data-Driven Approach to Syntax

Parsing recovers information that is not explicit in the input sentence.

This implies that a parser requires some knowledge (syntactic rules), in addition to the input sentence, about the kind of syntactic analysis that should be produced as output.

One method to provide such knowledge to the parser is to write down a grammar of the language: a set of rules of syntactic analysis, such as a CFG.

In natural language, it is far too complex to simply list all the syntactic rules in terms of a CFG.

There is also a second knowledge acquisition problem: not only do we need to know the syntactic rules for a particular language, but we also need to know which analysis is the most plausible (probable) for a given input sentence.

The construction of treebank is a data driven approach to syntax analysis that allows us to

address both of these knowledge acquisition bottlenecks in one stroke.

A treebank is simply a collection of sentences (also called a corpus of text), where each

sentence is provided a complete syntax analysis.

The syntactic analysis for each sentence has been judged by a human expert as the most plausible analysis for that sentence.

A lot of care is taken during the human annotation process to ensure that a consistent

treatment is provided across the treebank for related grammatical phenomena.

There is no set of syntactic rules or linguistic grammar explicitly provided by a treebank, and

typically there is no list of syntactic constructions provided explicitly in a treebank.

A detailed set of assumptions about the syntax is typically used as an annotation guideline

to help the human experts produce the single-most plausible syntactic analysis for each

sentence in the corpus.

Treebanks provide a solution to the two kinds of knowledge acquisition bottlenecks.

Treebanks solve the first knowledge acquisition problem of finding the grammar underlying

the syntax analysis because the syntactic analysis is directly given instead of a grammar.

In fact, the parser does not necessarily need any explicit grammar rules as long as it can

faithfully produce a syntax analysis for an input sentence.

Treebanks solve the second knowledge acquisition problem as well.

Because each sentence in a treebank has been given its most plausible (probable) syntactic analysis, supervised machine learning methods can be used to learn a scoring function over all possible syntax analyses.
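One simple way a treebank supplies both the rules and a scoring function is to read a probabilistic context-free grammar (PCFG) off the trees, estimating each rule probability by relative frequency: P(A -> β) = count(A -> β) / count(A). The two-tree treebank below is made up for illustration, and PCFG estimation is only one of many supervised approaches.

```python
from collections import Counter

# Hypothetical mini-treebank: trees are (label, child, ...) tuples; leaves are words.
TREEBANK = [
    ("S", ("NP", ("N", "John")), ("VP", ("V", "hit"), ("NP", ("D", "the"), ("N", "ball")))),
    ("S", ("NP", ("D", "the"), ("N", "ball")), ("VP", ("V", "hit"), ("NP", ("N", "John")))),
]

def rules(tree):
    """Yield every production (lhs, rhs) used in a tree."""
    label, *children = tree
    rhs = tuple(c if isinstance(c, str) else c[0] for c in children)
    yield label, rhs
    for c in children:
        if not isinstance(c, str):
            yield from rules(c)

def estimate_pcfg(treebank):
    """Relative-frequency estimate of P(lhs -> rhs) from rule counts."""
    rule_counts = Counter(r for t in treebank for r in rules(t))
    lhs_counts = Counter()
    for (lhs, _), c in rule_counts.items():
        lhs_counts[lhs] += c
    return {rule: c / lhs_counts[rule[0]] for rule, c in rule_counts.items()}

pcfg = estimate_pcfg(TREEBANK)
for (lhs, rhs), p in sorted(pcfg.items()):
    print(f"{lhs} -> {' '.join(rhs)}  {p:.2f}")
```

The grammar is never written by hand: the rules and their probabilities both come from the annotated trees.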

Two main approaches to syntax analysis are used to construct treebanks: dependency graphs and phrase structure trees.

These two representations are very closely related to each other and under some

assumptions, one representation can be converted to another.

Dependency analysis is typically favoured for languages such as Czech and Turkish that have free word order.

Phrase structure analysis is often used to provide additional information about long-distance dependencies, mostly for languages like English and French.

• NLP is the capability of computer software to understand natural language.

• There are a variety of languages in the world.

• Each language has its own structure (SVO or SOV), called its grammar, which has a certain set of rules that determine what is allowed and what is not allowed.

• English: S V O (I eat mango). Other languages: S O V or O S V.

• Grammar is defined as the rules for forming well-structured sentences.


• Different Types of Grammar in NLP

1. Context-Free Grammar (CFG)
2. Constituency Grammar (CG) or Phrase Structure Grammar
3. Dependency Grammar (DG)

Context-Free Grammar (CFG)

• Mathematically, a grammar G can be written as a 4-tuple (N, T, S, P)

• N or $V_N$ = set of non-terminal symbols, or variables.

• T or Σ = set of terminal symbols.

• S = start symbol, where S ∈ N.

• P = production rules for terminals as well as non-terminals.

• Each rule has the form α → β, where α and β are strings on $V_N \cup \Sigma$ and at least one symbol of α belongs to $V_N$. In a context-free grammar, α is a single non-terminal.

Example: John hit the ball

S -> NP VP

VP -> V NP

V -> hit

NP -> N | D N

D -> the

N -> John | ball
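To check mechanically that this grammar derives the example sentence, one standard option is the CYK algorithm, which requires Chomsky normal form. The sketch below assumes NP may also be a bare noun (needed to derive "John") and folds that unit rule into the lexical entries, so NP -> John | ball; the table names BINARY and LEXICAL are illustrative.

```python
from itertools import product

# CNF version of the example grammar (assumption: NP -> N folded into the lexicon).
BINARY = {("NP", "VP"): {"S"}, ("V", "NP"): {"VP"}, ("D", "N"): {"NP"}}
LEXICAL = {"John": {"N", "NP"}, "ball": {"N", "NP"}, "hit": {"V"}, "the": {"D"}}

def cyk(words, start="S"):
    """Return True if the grammar derives the word sequence (CYK recognition)."""
    n = len(words)
    # table[i][j] = set of non-terminals deriving words[i..j] (inclusive)
    table = [[set() for _ in range(n)] for _ in range(n)]
    for i, w in enumerate(words):
        table[i][i] = set(LEXICAL.get(w, ()))
    for span in range(2, n + 1):
        for i in range(n - span + 1):
            j = i + span - 1
            for k in range(i, j):  # split into words[i..k] and words[k+1..j]
                for left, right in product(table[i][k], table[k + 1][j]):
                    table[i][j] |= BINARY.get((left, right), set())
    return n > 0 and start in table[0][n - 1]

print(cyk("John hit the ball".split()))
print(cyk("hit John the ball".split()))
```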

