
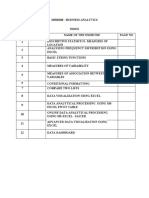

Unit 1 Learning Notes

The document provides an overview of Big Data Analytics, highlighting the challenges and opportunities created by the vast amounts of data generated across industries. It distinguishes Business Intelligence (BI) from Data Science, emphasizing the need for advanced analytical methods to derive actionable insights from complex data. It also discusses the current analytical architecture, the challenges that architecture faces, and the emerging Big Data ecosystem, underscoring the evolving role of data scientists in addressing business problems through analytics.

Uploaded by Krishnaprasanna M

Copyright © All Rights Reserved

