
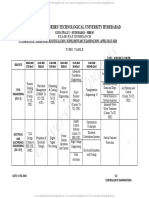

Unit II Search (37 pages)

The document discusses various search algorithms in Artificial Intelligence, including Random Search, Depth-First Search (DFS), Breadth-First Search (BFS), and heuristic search methods. It explains the features, advantages, and disadvantages of each algorithm, along with their applications and basic operational steps. Additionally, it provides pseudo-code and examples to illustrate how these algorithms work in practice.

Uploaded by charuumca

Copyright: © All Rights Reserved

