CLUSTERING

EE-671 Prof L. Behera, IITK

Presented By: Gagandeep Kaur, 10204069

�What Is Not Covered

Clustering The Concept

Goals of Clustering Possible Applications Requirements Problems How are the Clustering Algorithms Classified

�What Is Clustering

�The Goals of Clustering

To determine the intrinsic grouping in a set of unlabeled data.

�Possible Applications

Marketing: finding groups of customers with similar

behavior given a large database of customer data containing their properties and past buying records; Biology: classification of plants and animals given their features; Libraries: book ordering; Insurance: identifying groups of motor insurance policy holders with a high average claim cost; identifying frauds; City-planning: identifying groups of houses according to their house type, value and geographical location; Earthquake studies: clustering observed earthquake epicenters to identify dangerous zones; WWW: document classification; clustering weblog data to discover groups of similar access patterns.

�Requirements

Scalability; Dealing with different types of attributes; Discovering clusters with arbitrary shape; Minimal requirements for domain knowledge to determine input parameters; Ability to deal with noise and outliers; Insensitivity to order of input records; High dimensionality; Interpretability and usability.

�Problems

current clustering techniques do not address all the

requirements adequately (and concurrently); dealing with large number of dimensions and large number of data items can be problematic because of time complexity; the effectiveness of the method depends on the definition of distance (for distance-based clustering); if an obvious distance measure doesnt exist we must define it, which is not always easy, especially in multidimensional spaces; the result of the clustering algorithm (that in many cases can be arbitrary itself) can be interpreted in different ways.

�Finally! - To Be Covered Is

�Loading

�Four of the most used clustering algorithms:

K-means Fuzzy C-means Hierarchical clustering Mixture of Gaussians

Talk About This one !

�Hierarchical Clustering

Clustering is the assignment of data objects (records) into

groups (called clusters) so that data objects from the same cluster are more similar to each other than objects from different clusters . http://en.wikipedia.org/wiki/Data_clustering The Hierarchical method works by grouping data objects(records) into a tree of clusters.

Uses distance(similarity) matrix as clustering criteria. We

provide a termination condition

Classified Further as

Agglomerative Hierarchical Clustering Divisive Hierarchical Clustering

�Agglomerative Hierarchical Clustering

Data objects are grouped in a bottom-up fashion.

Initially each data object is in its own cluster.

Then merge these atomic clusters into larger and larger clusters, until all of the objects are in a single cluster or until certain termination

conditions are satisfied. Termination condition can be specified by the user, as the desired number of clusters.

�Divisive Hierarchical Clustering

In this data objects are grouped in a top down manner

Initially all objects are in one cluster

Then the cluster is subdivided into smaller and smaller pieces, until each object forms a cluster on its own or until it satisfies certain

termination conditions as the desired number of clusters is obtained.

�AGNES and DIANA

(AGglomerative NESting) (DIvisive ANAlysis) Agglomerative and divisive hierarchical clustering on data objects {a, b, c, d ,e }

Step 0 a b c d e

Step 1

Step 2 Step 3 Step 4

agglomerative (AGNES)

ab

abcde

cde de divisive (DIANA)

Step 4

Step 3

Step 2 Step 1 Step 0

�AGNES-Algorithm

1) Given a set of N objects to be clustered, and an N*N distance (or similarity) matrix, the basic process of hierarchical clustering (defined by S.C. Johnson in 1967) is this: Start by assigning each object to a cluster, so that for N objects, we have N clusters, each containing just one object. Let the distances (similarities) between the clusters the same as the distances (similarities) between the objects they contain. Find the closest (most similar) pair of clusters and merge them into a single cluster, so that now we have one cluster less. Compute distances (similarities) between the new cluster and each of the old clusters. Repeat steps 3 and 4 until all items are clustered into a single cluster of size N.

2)

3)

4) 5)

� Continue

Step 4 can be done in different ways, and this

distinguishes single-linkage, complete linkage and average-linkage clustering For Single Linkage: distance between one cluster and another cluster is equal to the shortest distance from any member of one cluster to any member of the other cluster For Complete Linkage: distance between one cluster and another cluster to be equal to the greatest distance from any member of one cluster to any member of the other cluster.

�Define Inter-Cluster Similarity

Similarity? distance

l l l

MIN MAX Group Average

�Define Inter-Cluster Similarity

distance = shortest distance, nearest

l

l l

neighbor clustering algorithm MIN Linkage MAX Linkage Group Average

�Define Inter-Cluster Similarity

distance = longest distance , farthest

l l l

neighbor clustering algorithm MIN Linkage MAX Linkage Group Average Linkage

�Define Inter-Cluster Similarity

average-link clustering, distance = average distance

l l l

MIN Linkage MAX Linkage Group Average Linkage

�Single Linkage Hierarchical Clustering Example

Initially Every point is its own cluster

�Single Linkage Example

1. Every point is its own cluster 2. Find most similar(distance) pair of

clusters

�Single Linkage Hierarchical Clustering Example

1. Every point is its own cluster 2. Find most similar pair of clusters 3. Merge it into a parent cluster

� CONT

1. Every point is its own cluster

2. Find most similar pair of clusters

3. Merge it into a parent cluster 4. Repeat

�Showing Below How a Hierarchical Tree is Formed

�Example 2 :A hierarchical clustering of distances in kilometers between some Italian cities. The method used is single-linkage.

BA

FI

MI

NA

RM

TO

BA

FI MI NA

0

662 877 255

662

0 295 468

877

295 0 754

255

468 754 0

412

268 564 219

996

400 138 869

RM

TO

412

996

268

400

564

138

219

869

0

669

669

0

�Procedure

The nearest pair of cities is MI and TO, at distance 138. These are merged into a single cluster called "MI/TO". The level of the new cluster is L(MI/TO) = 138 and the new sequence number is m = 1. Then we compute the distance from this new compound object to all other objects. In single link clustering the rule is that the distance from the compound object to another object is equal to the shortest distance from any member of the cluster to the outside object. So the distance from "MI/TO" to RM is chosen to be 564, which is the distance from MI to RM, and so on.

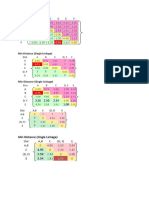

�Step-1, Input Distance Matrix L=0 for all clusters

BA BA FI 0 662

FI 662 0

MI/TO 877 295

NA 255 468

RM 412 268

MI/TO

NA RM

877

255 412

295

468 268

0

754 564

754

0 219

564

219 0

�Step-2,

min d(i,j) = d(NA,RM) = 219 => merge NA and RM into a new cluster called NA/RM L(NA/RM) = 219 m=2

BA BA FI MI/TO NA/RM 0 662 877 255

FI 662 0 295 268

MI/TO 877 295 0 564

NA/RM 255 268 564 0

�min d(i,j) = d(BA,NA/RM) = 255 => merge BA and NA/RM into a new cluster called BA/NA/RM L(BA/NA/RM) = 255 m = 3

BA/NA/RM

BA/NA/RM FI MI/TO 0 268 564

FI

268 0 295

MI/TO

564 295 0

�min d(i,j) = d(BA/NA/RM,FI) = 268 => merge BA/NA/RM and FI into a new cluster called BA/FI/NA/RM L(BA/FI/NA/RM) = 268 m = 4 BA/FI/NA/RM BA/FI/NA/RM MI/TO 0 295 MI/TO 295 0

�Finally, we merge the last two clusters at level 295. The process is summarized by the following hierarchical tree:

�Advantages:

Is simple and outputs a hierarchy, a structure that is

more informative It does not require us to pre-specify the number of clusters

Disadvantages:

Selection of merge or split points is critical as once a

group of objects is merged or split, it will operate on the newly generated clusters and will not undo what was done previously. Thus merge or split decisions if not well chosen may lead to low-quality clusters