100% found this document useful (1 vote)

133 views26 pagesDistributed System

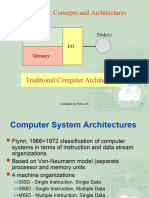

The document discusses distributed systems and parallel systems. It defines distributed systems as having loosely coupled distributed memory, with a focus on cost, scalability, reliability and resource sharing. Parallel systems are defined as having tightly coupled shared memory, with a focus on performance and scientific computing. The document outlines challenges in distributed systems like heterogeneity, transparency, openness, concurrency, security, scalability and failure handling. It then discusses the relation of distributed systems to computer system components and models of distributed execution. Finally, it covers differences between parallel and distributed systems, and concepts in parallel systems including parallel multiprocessor architectures, interconnection networks, and Flynn's taxonomy of parallelism.

Uploaded by

SMARTELLIGENTCopyright

© © All Rights Reserved

We take content rights seriously. If you suspect this is your content, claim it here.

Available Formats

Download as PPTX, PDF, TXT or read online on Scribd

100% found this document useful (1 vote)

133 views26 pagesDistributed System

The document discusses distributed systems and parallel systems. It defines distributed systems as having loosely coupled distributed memory, with a focus on cost, scalability, reliability and resource sharing. Parallel systems are defined as having tightly coupled shared memory, with a focus on performance and scientific computing. The document outlines challenges in distributed systems like heterogeneity, transparency, openness, concurrency, security, scalability and failure handling. It then discusses the relation of distributed systems to computer system components and models of distributed execution. Finally, it covers differences between parallel and distributed systems, and concepts in parallel systems including parallel multiprocessor architectures, interconnection networks, and Flynn's taxonomy of parallelism.

Uploaded by

SMARTELLIGENTCopyright

© © All Rights Reserved

We take content rights seriously. If you suspect this is your content, claim it here.

Available Formats

Download as PPTX, PDF, TXT or read online on Scribd

/ 26