0% found this document useful (0 votes)

8 views20 pagesClassification

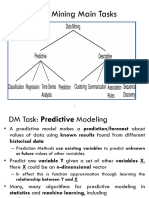

The document discusses classification and prediction in data mining, highlighting the differences between the two processes. Classification predicts categorical labels using models constructed from training data, while prediction focuses on continuous-valued functions. It also covers various classification methods, issues in data preparation, evaluation metrics, and algorithms like Bayesian classification and k-Nearest Neighbors.

Uploaded by

Kannan ThangaveluCopyright

© © All Rights Reserved

We take content rights seriously. If you suspect this is your content, claim it here.

Available Formats

Download as PPT, PDF, TXT or read online on Scribd

0% found this document useful (0 votes)

8 views20 pagesClassification

The document discusses classification and prediction in data mining, highlighting the differences between the two processes. Classification predicts categorical labels using models constructed from training data, while prediction focuses on continuous-valued functions. It also covers various classification methods, issues in data preparation, evaluation metrics, and algorithms like Bayesian classification and k-Nearest Neighbors.

Uploaded by

Kannan ThangaveluCopyright

© © All Rights Reserved

We take content rights seriously. If you suspect this is your content, claim it here.

Available Formats

Download as PPT, PDF, TXT or read online on Scribd

/ 20