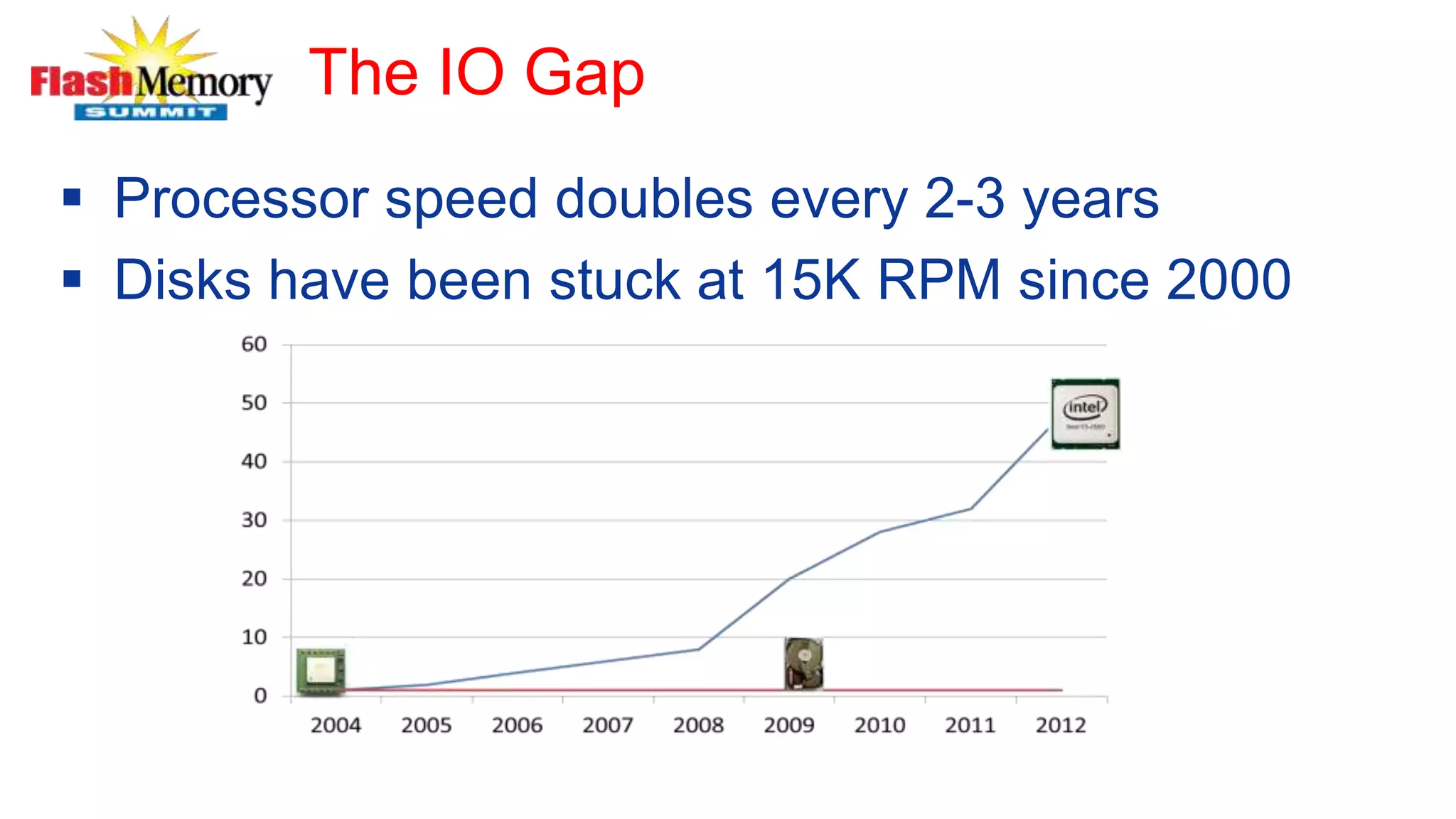

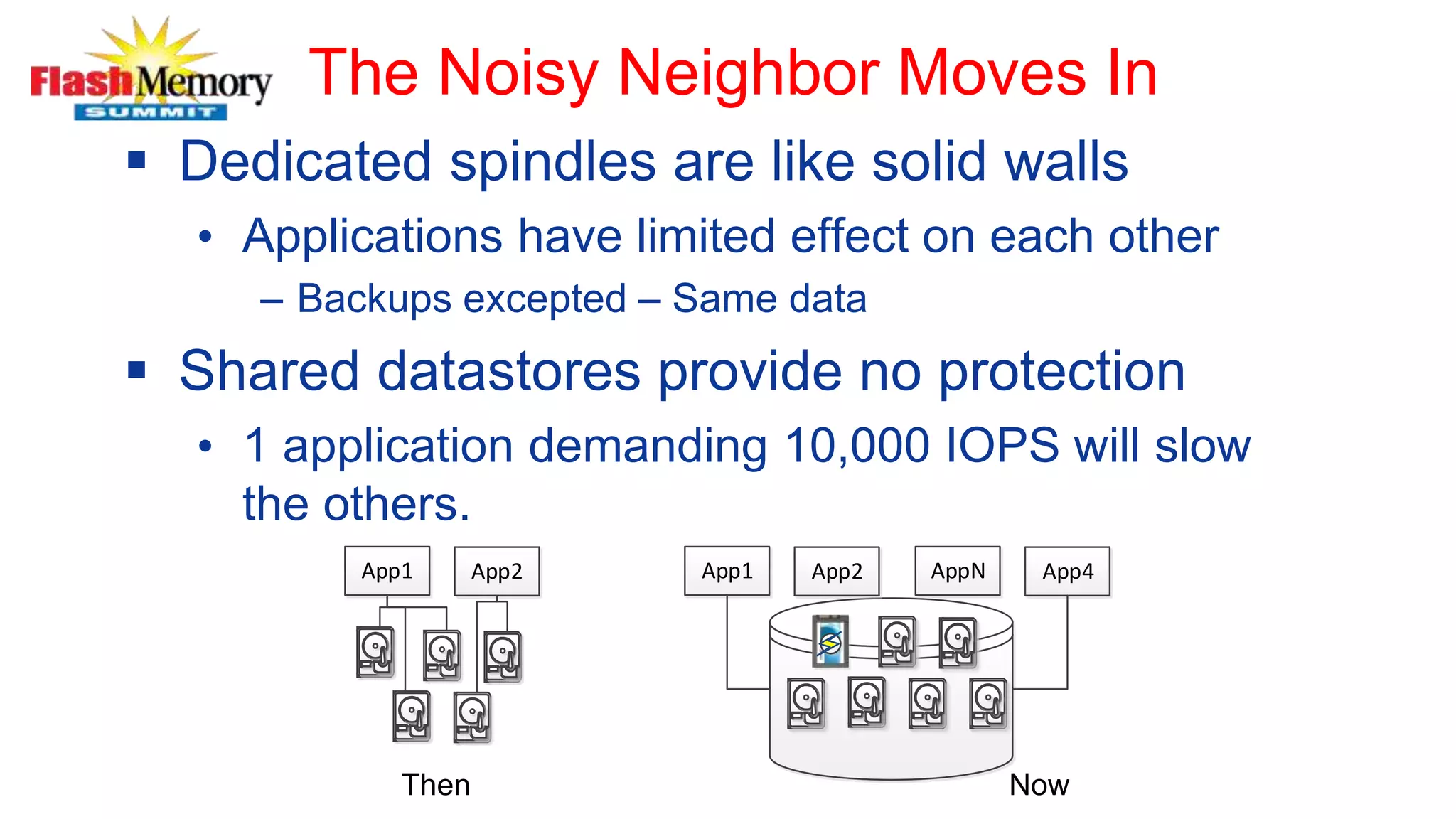

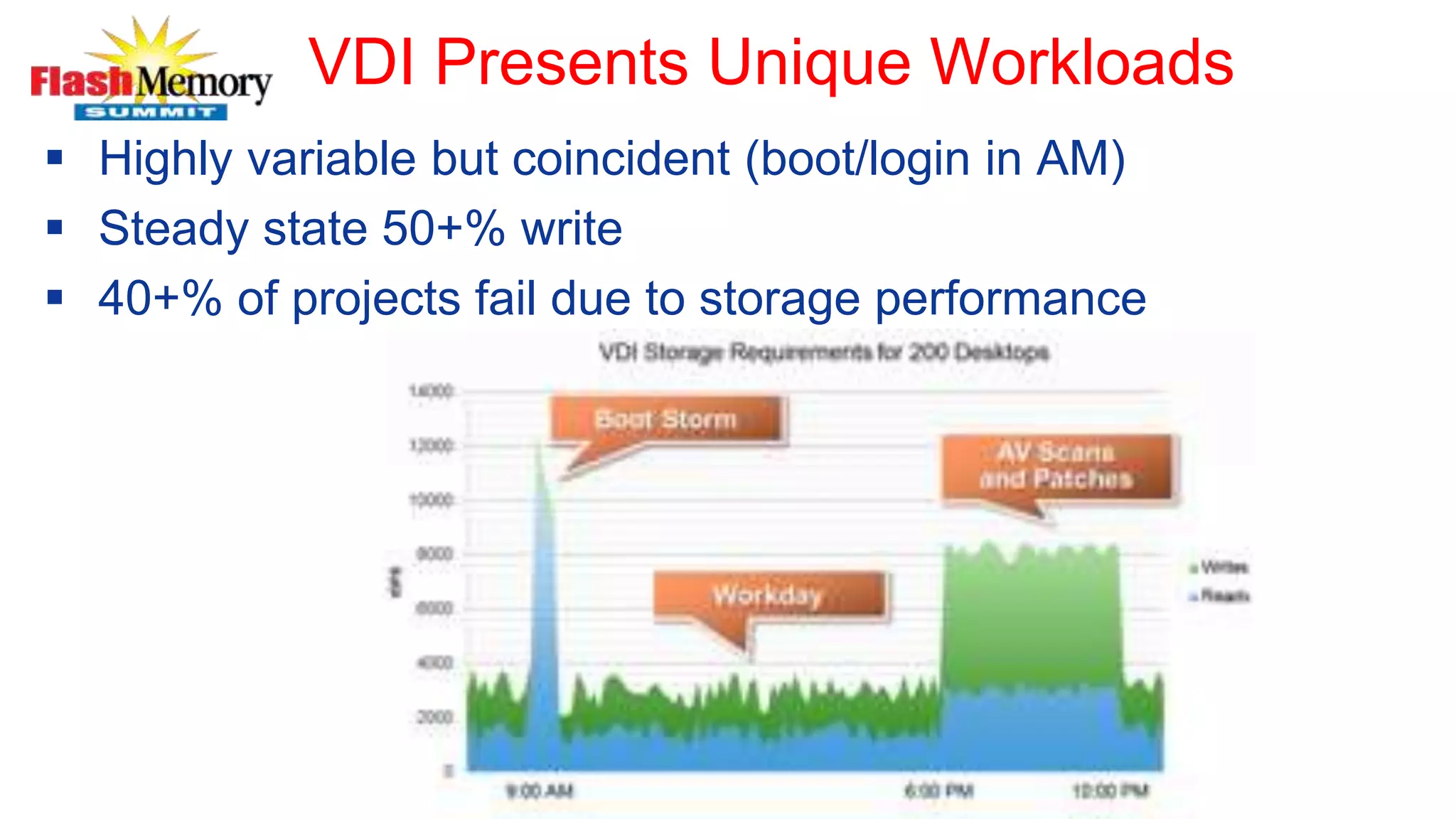

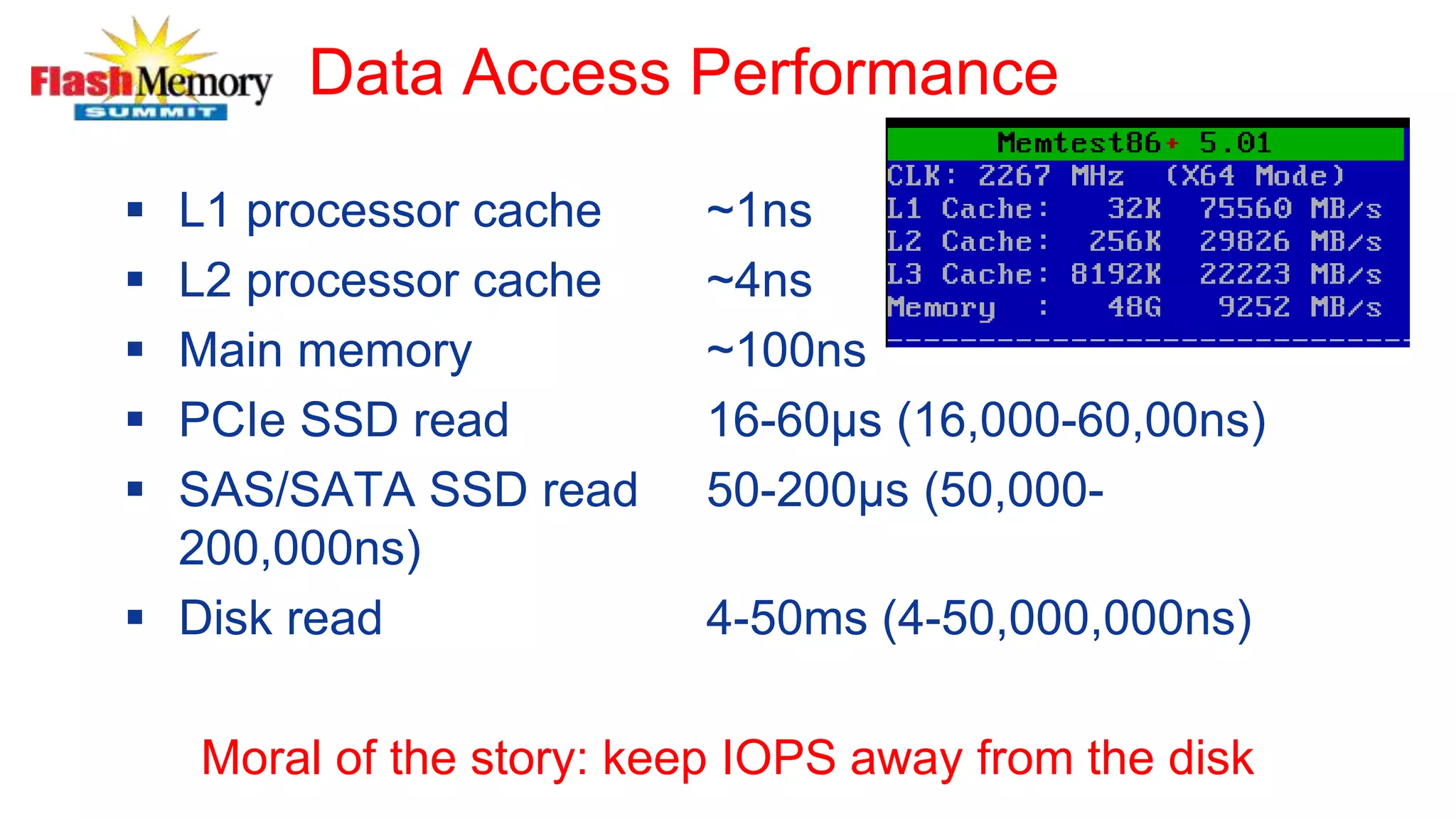

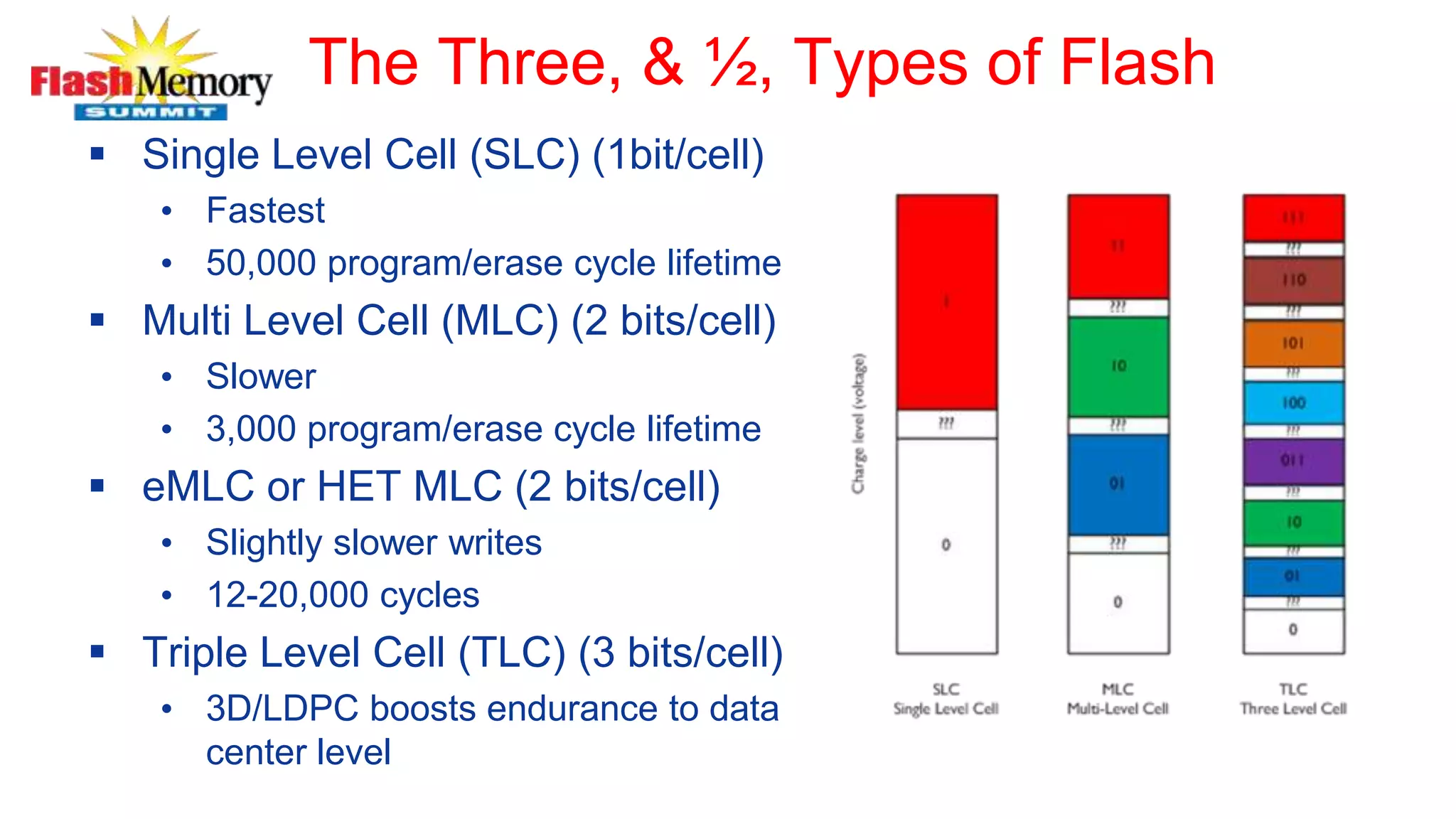

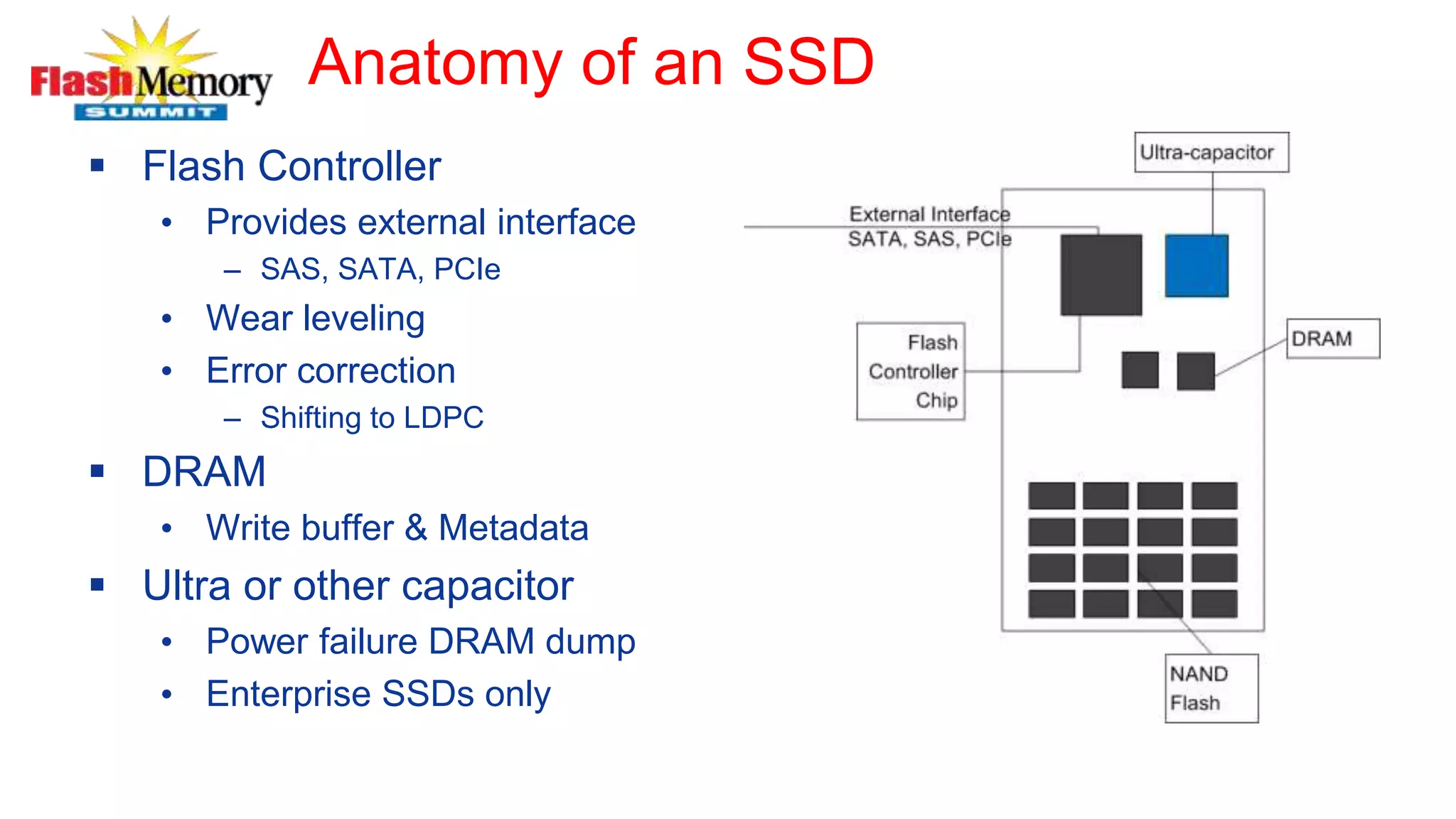

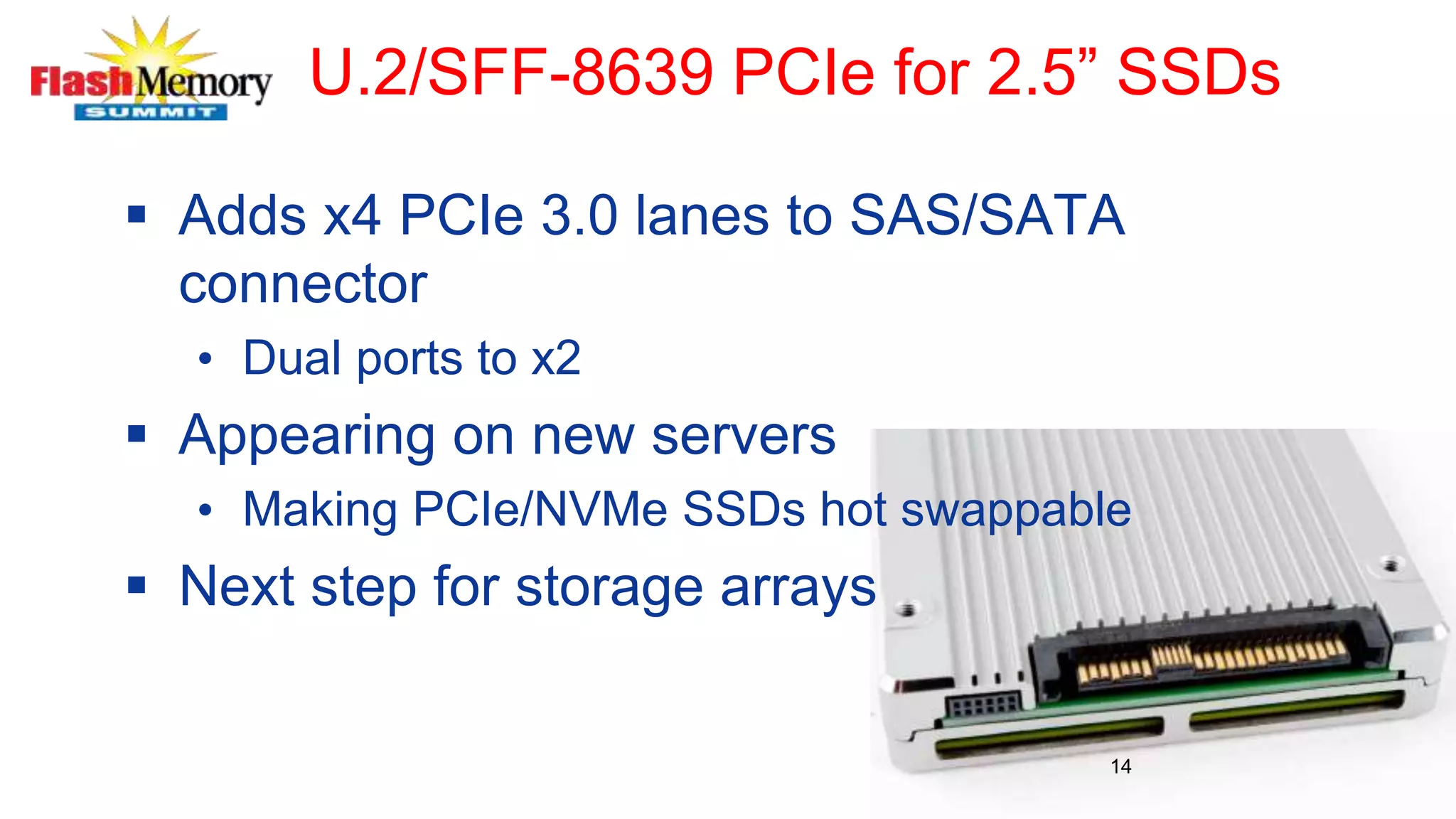

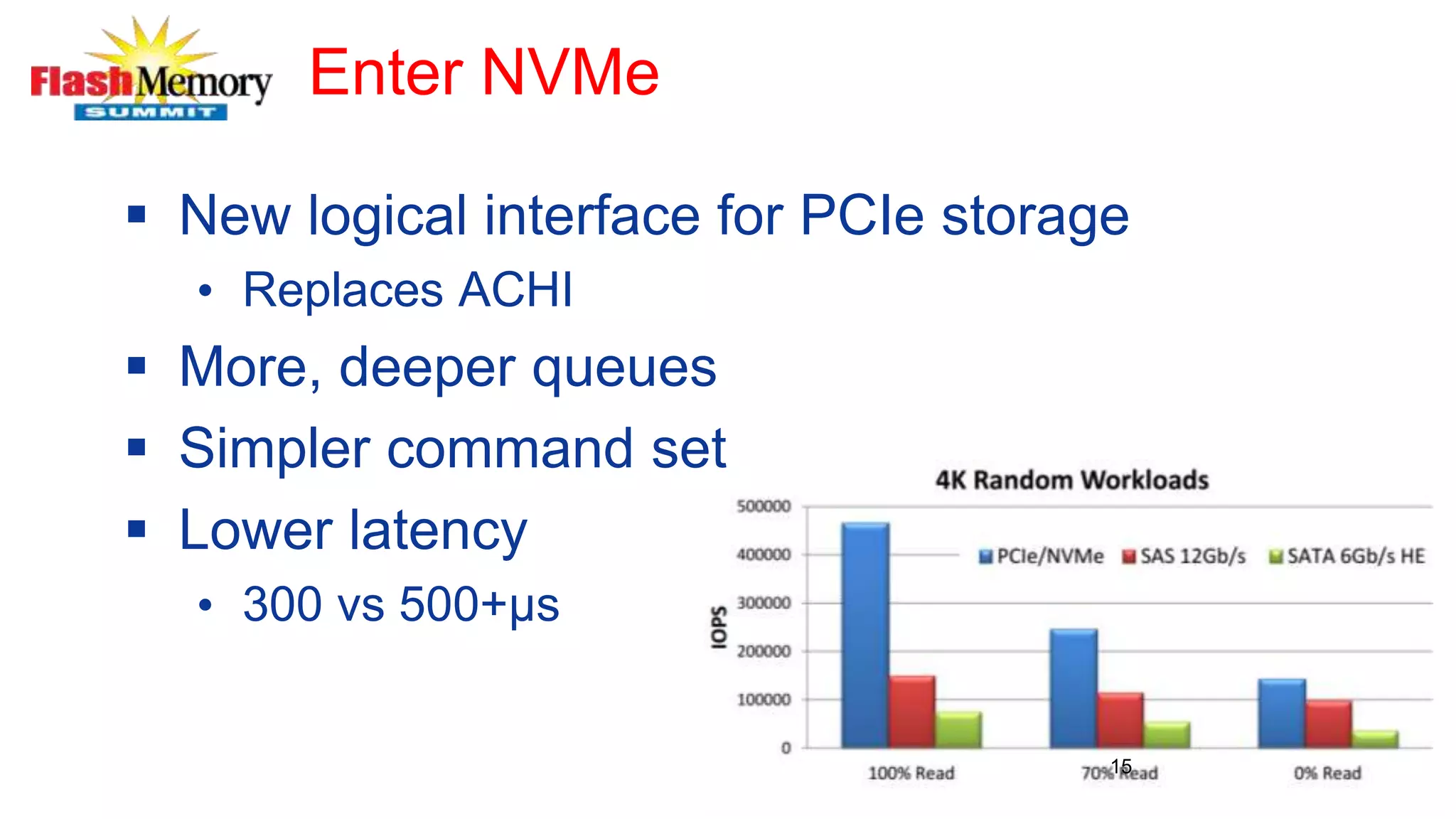

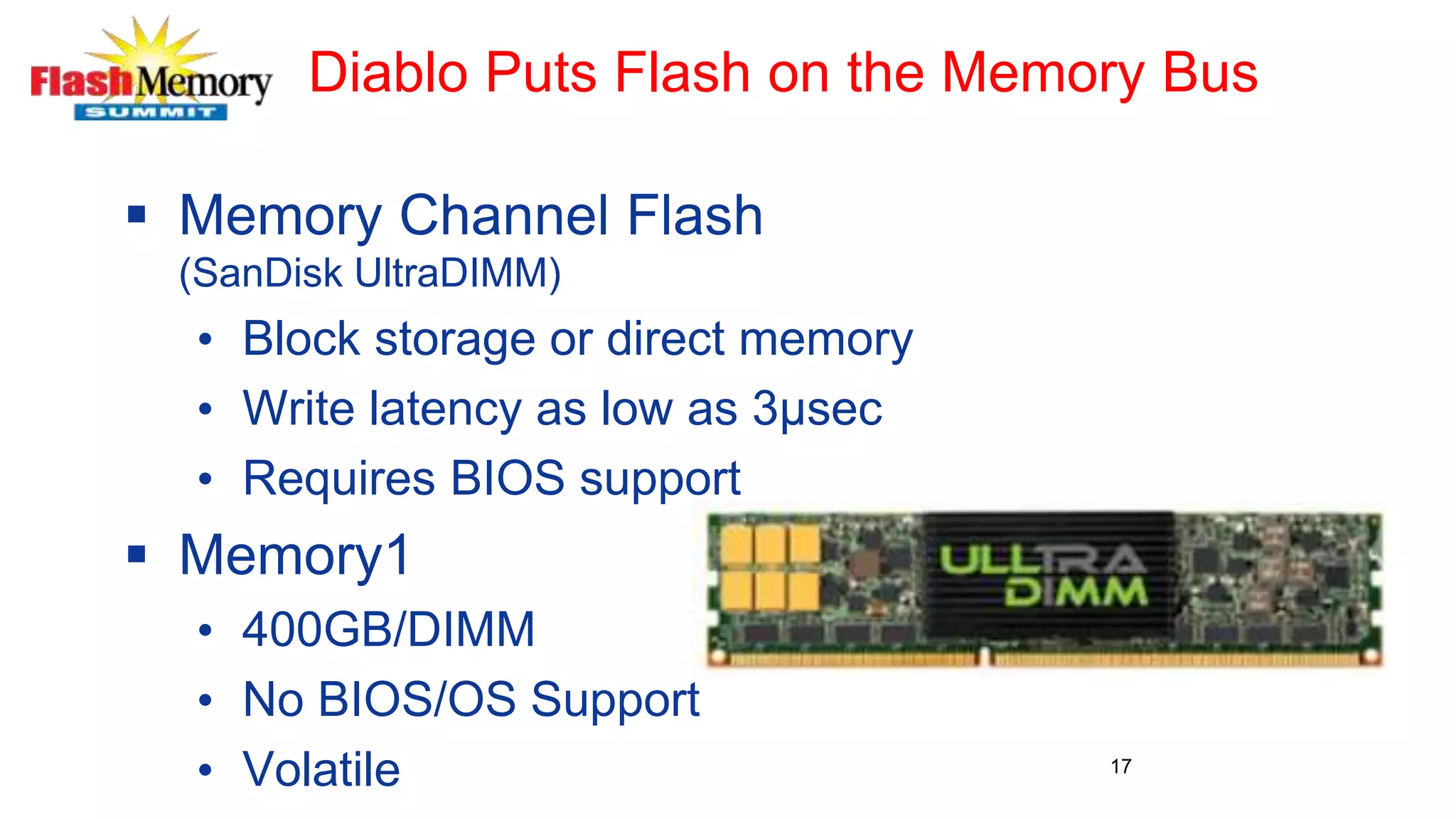

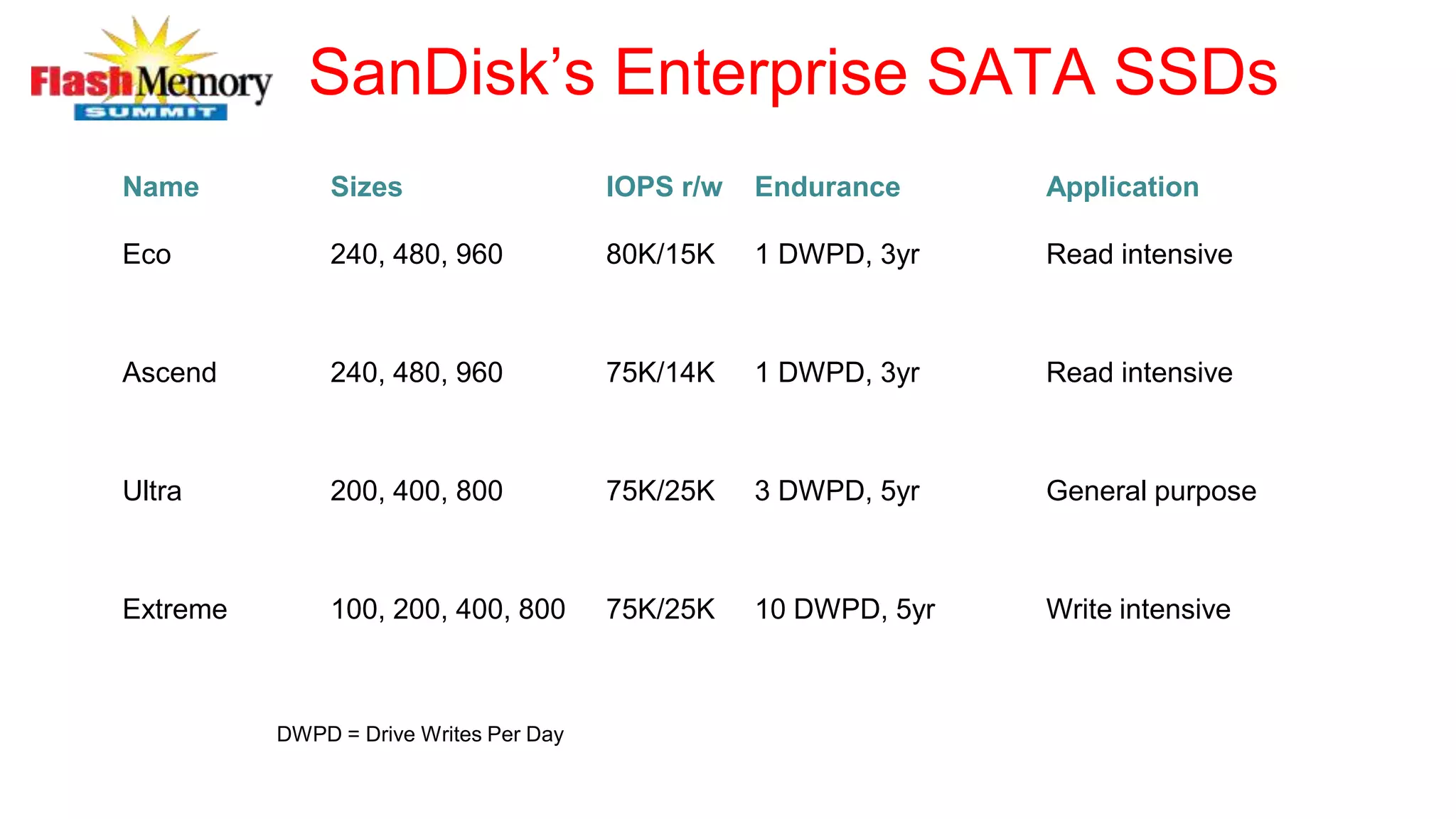

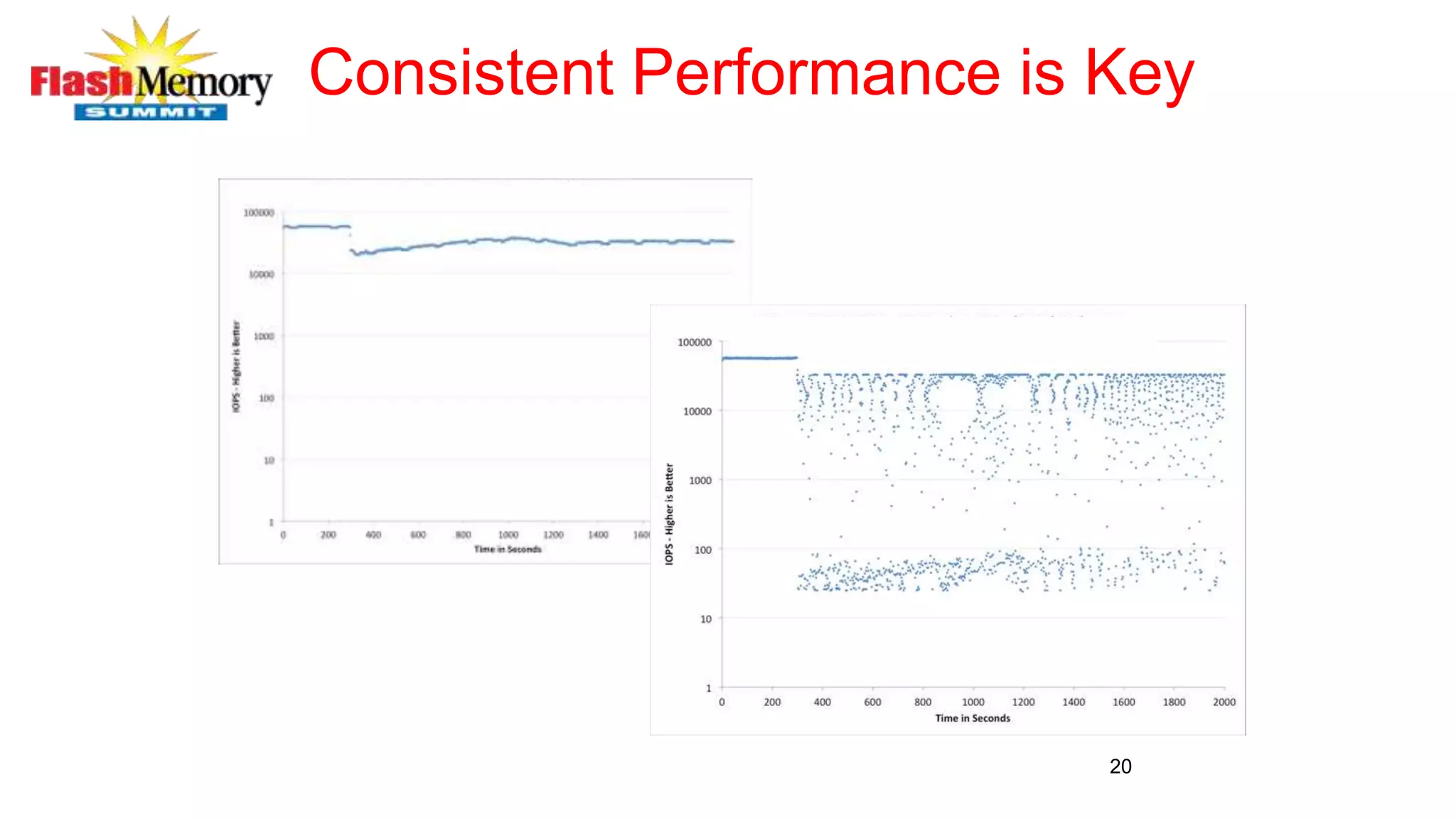

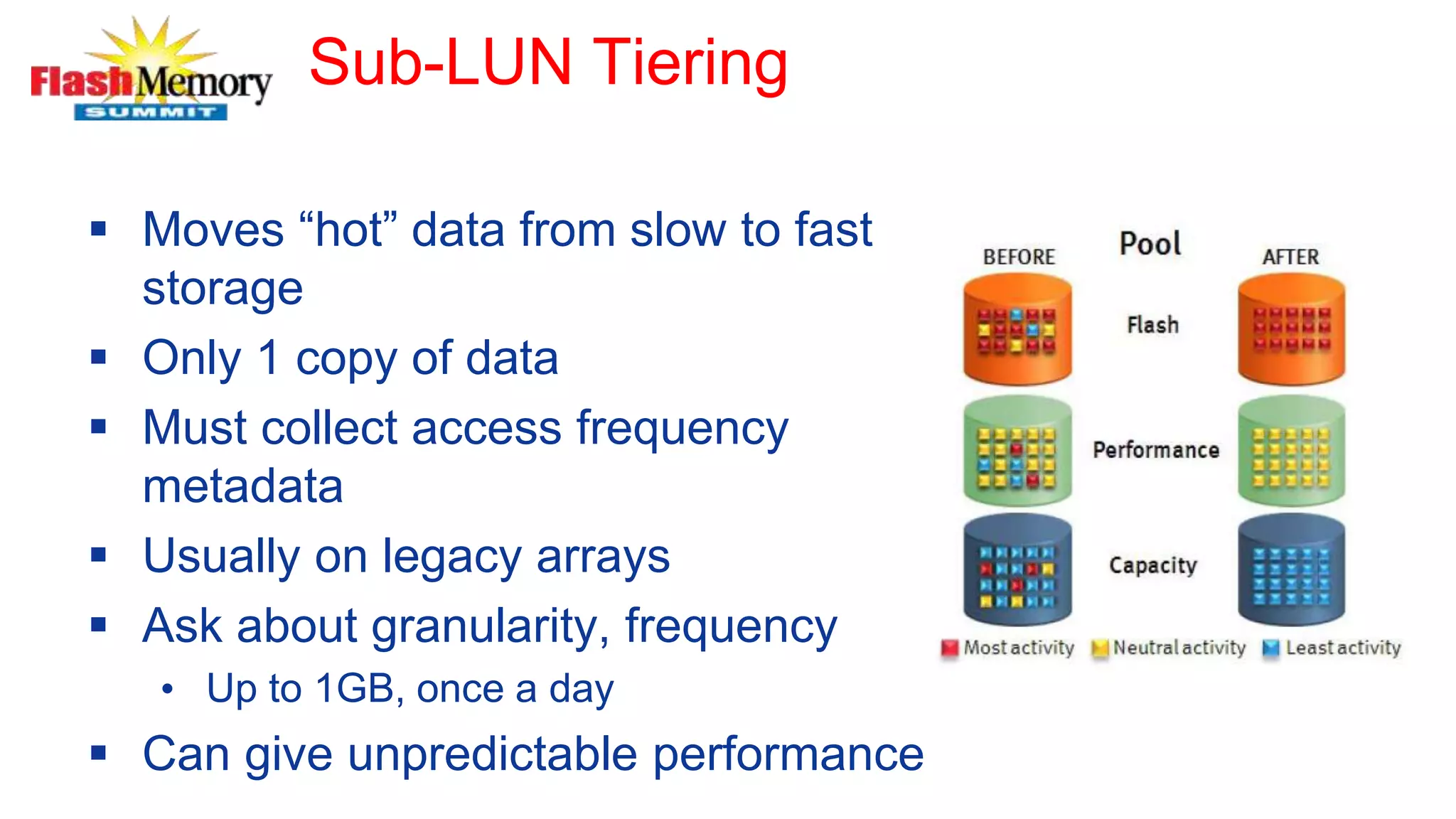

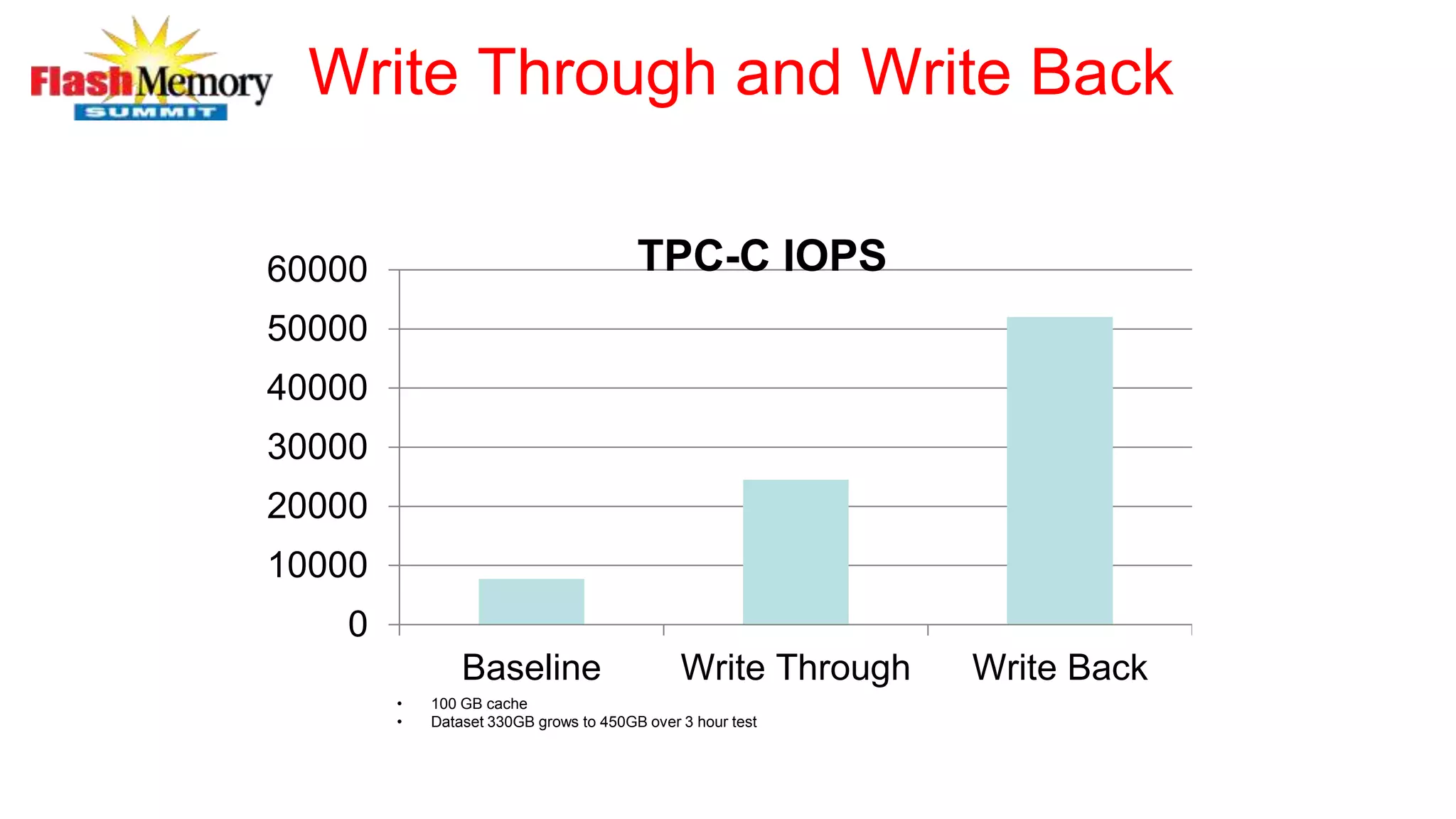

This document discusses deploying flash storage in the data center to improve storage performance. It begins with an overview of the performance gap between processors and disks. It then presents four ways to close that gap: all-flash arrays, hybrid arrays, server-side flash caching, and converged architectures. Finally, it covers flash memory types, form factors, and the considerations involved in choosing a flash solution.