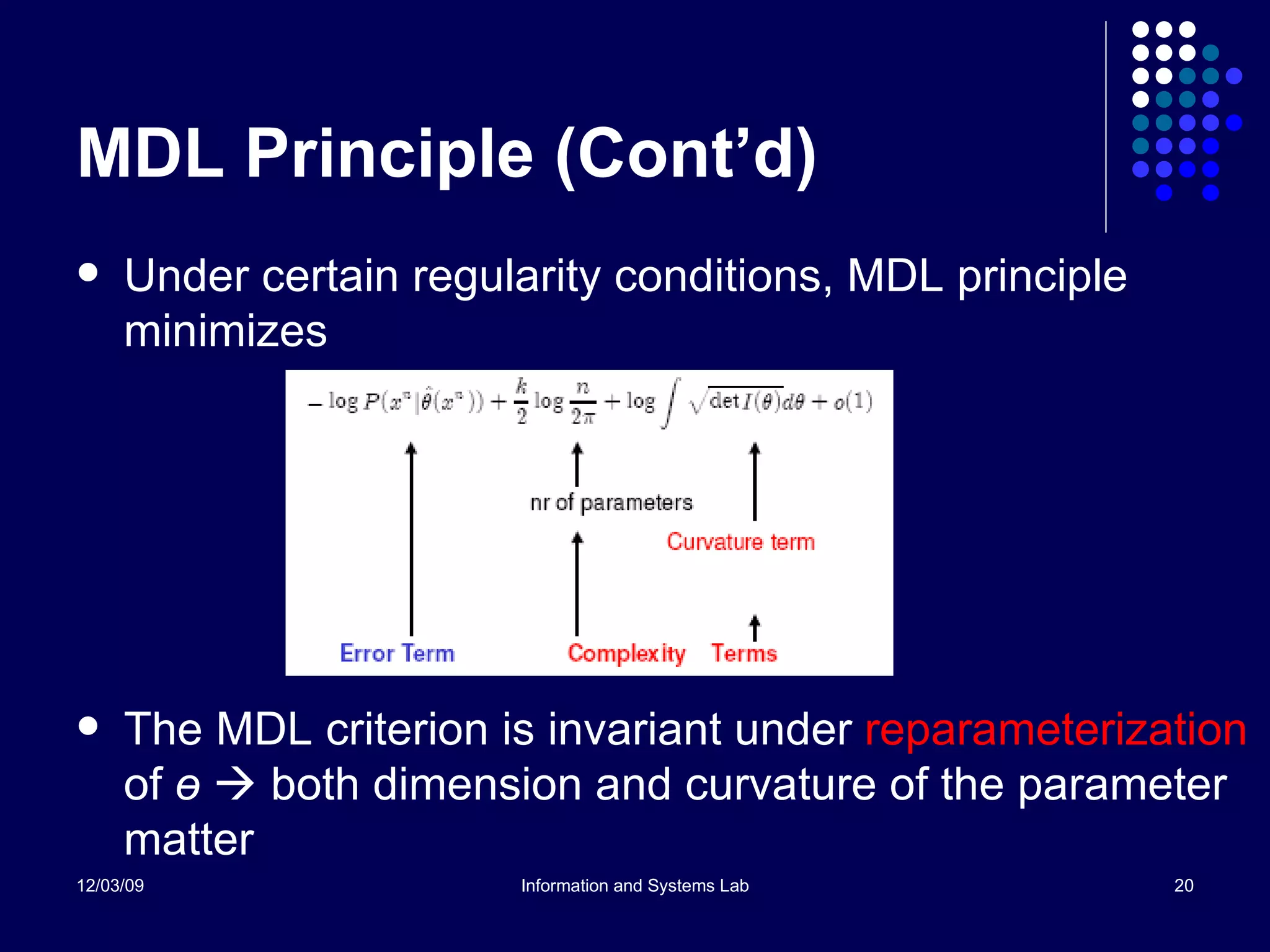

This document provides an introduction to statistical model selection. It discusses various approaches to model selection including predictive risk, Bayesian methods, information theoretic measures like AIC and MDL, and adaptive methods. The key goals of model selection are to understand the bias-variance tradeoff and select models that offer the best guaranteed predictive performance on new data. Model selection aims to find the right level of complexity to explain patterns in available data while avoiding overfitting.

![Predictive Risk (Cont’d) Calculate the residue squared sum RSS ( p ) and unbiased estimates σ ^2 = RSS ( p ) / ( n − p ) MSE = [ RSS ( p ) + 2 p σ ^2] / n Model complexity (Mallow) is C( p ) ≈ p Issues Consistency asymptotically overfit Effective bias estimate σ ^2 assuming p << n Hard to apply in problems other than regression](https://image.slidesharecdn.com/HChenIntroModelSelection-123207968268-phpapp03/75/Intro-to-Model-Selection-9-2048.jpg)

![Information Theoretic Measures (Cont’d) Encoding parameter in linear regression Bayesian Information Criterion (BIC) ( n /2)log RSS ( p ) + (p /2)log n Stochastic Information Criterion (SIC) ( n /2)log RSS ( p ) + ( p /2)log SIC ( p ) SIC ( p ) = [Y’Y −RSS( p )]/ p Akaike’s Information Criterion (AIC) ( n /2)log RSS ( p ) + p Minimum Description Length (MDL) ( n /2)log[ RSS ( p )/( n − p )] + ( p /2)log SIC ( p )](https://image.slidesharecdn.com/HChenIntroModelSelection-123207968268-phpapp03/75/Intro-to-Model-Selection-17-2048.jpg)