Backpropagation algorithm in Neural Network

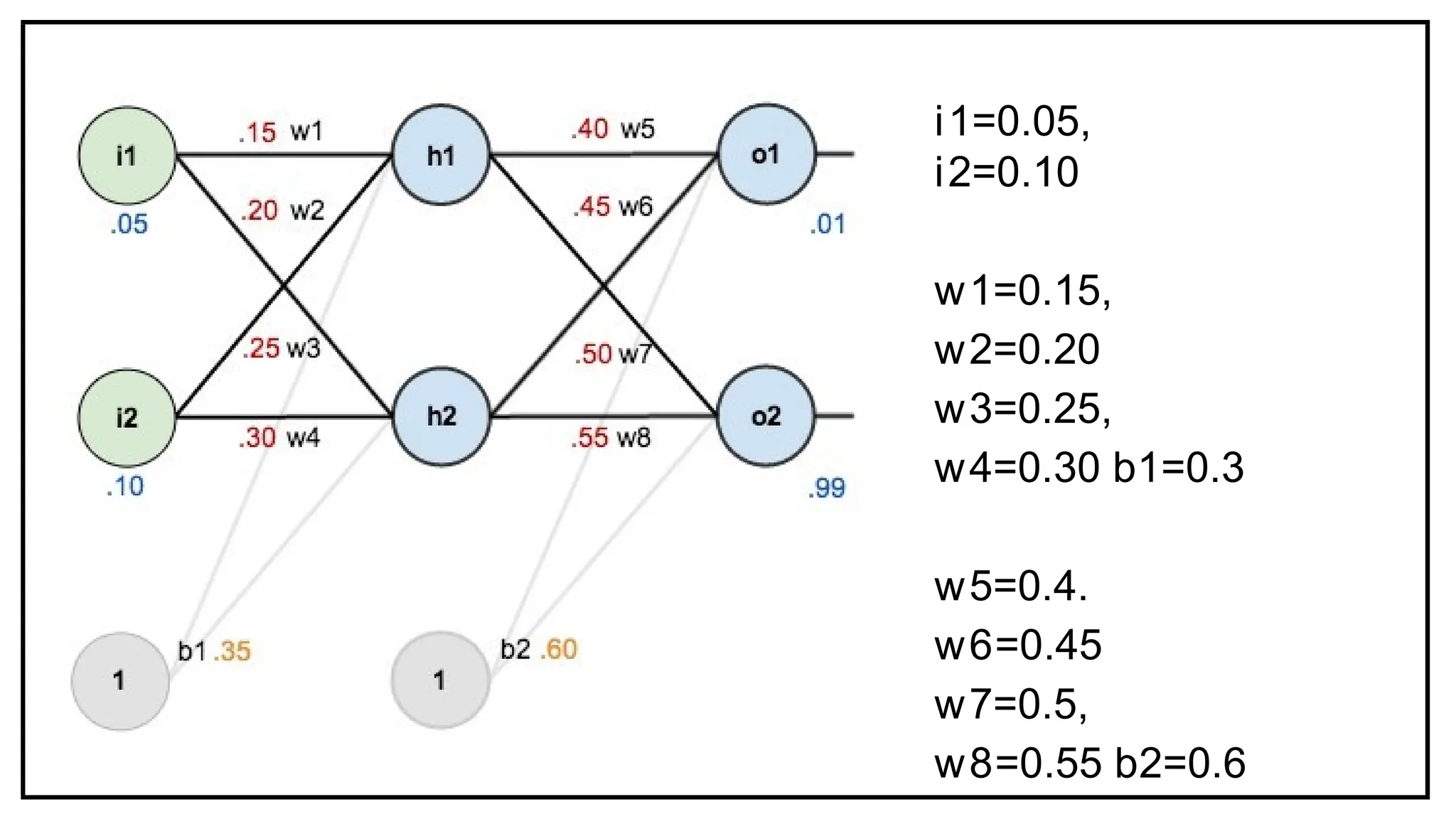

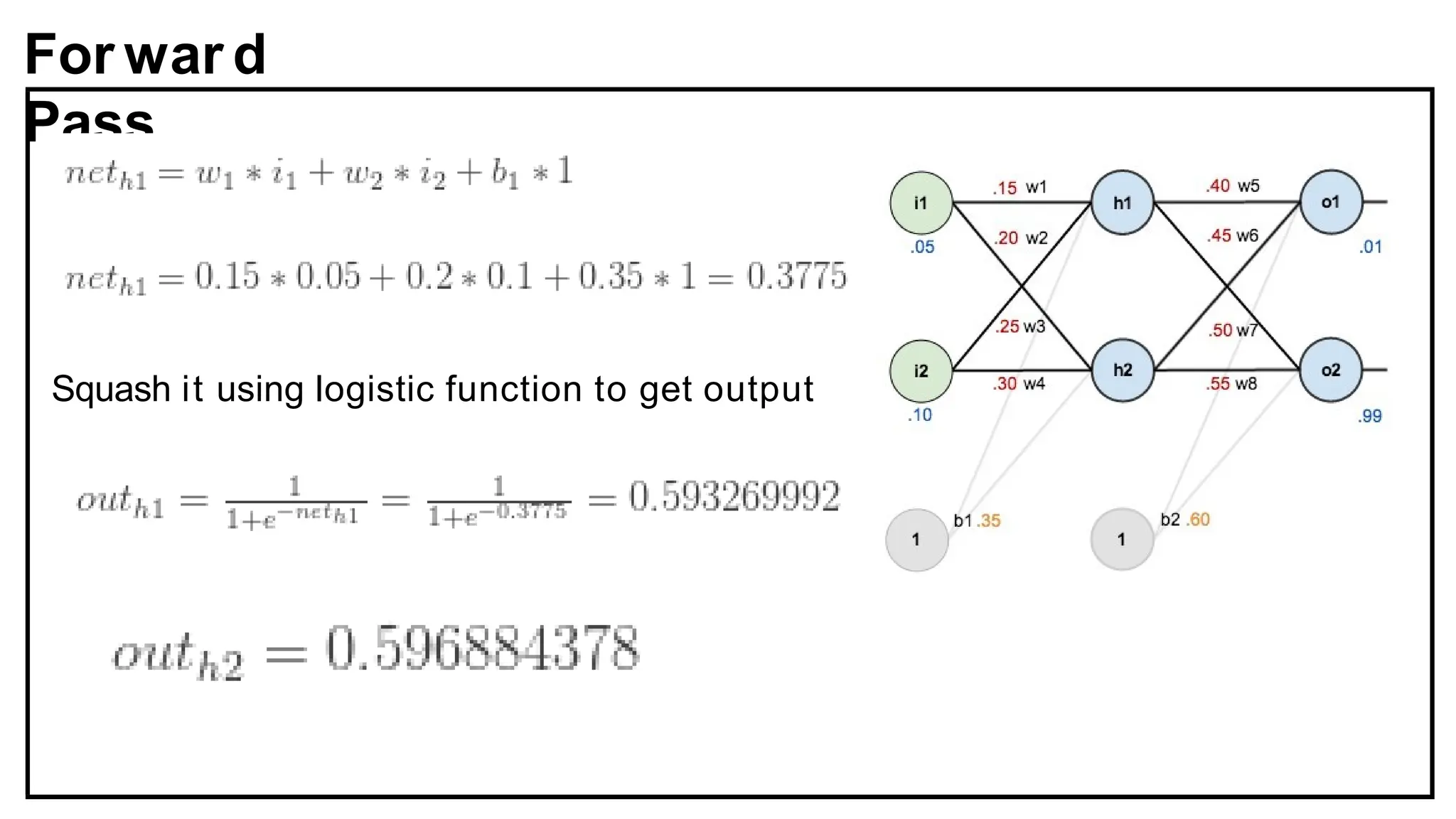

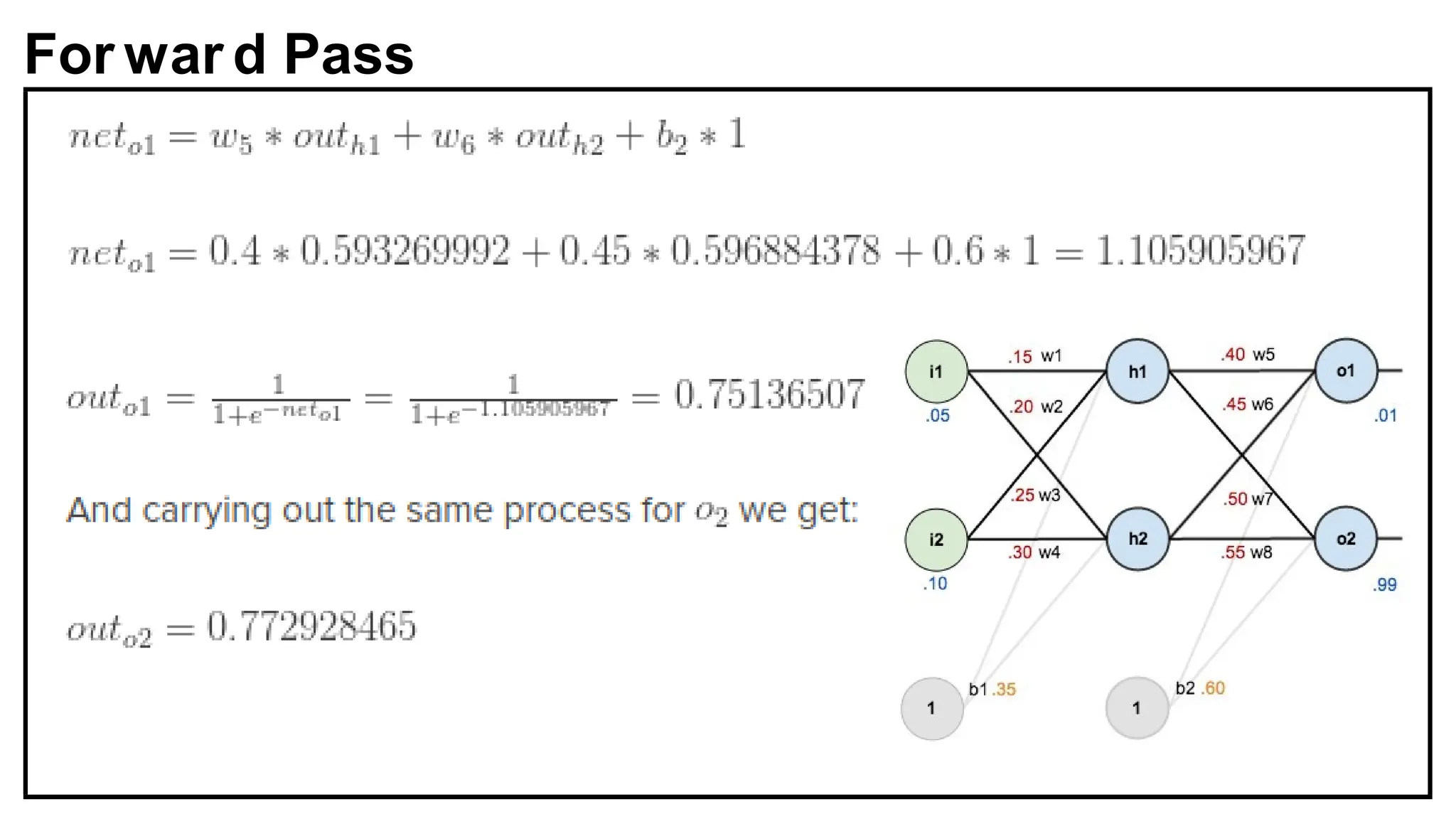

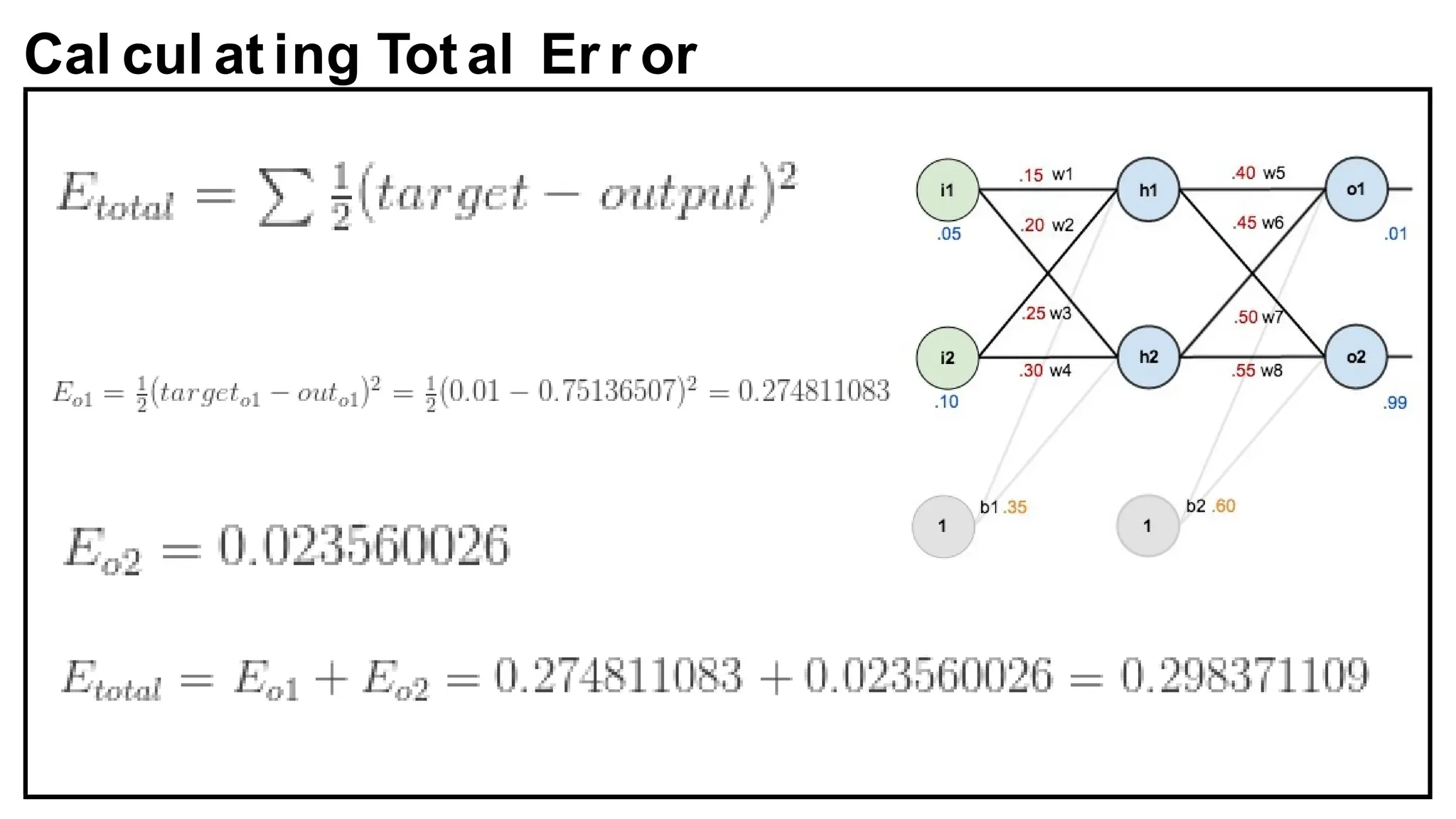

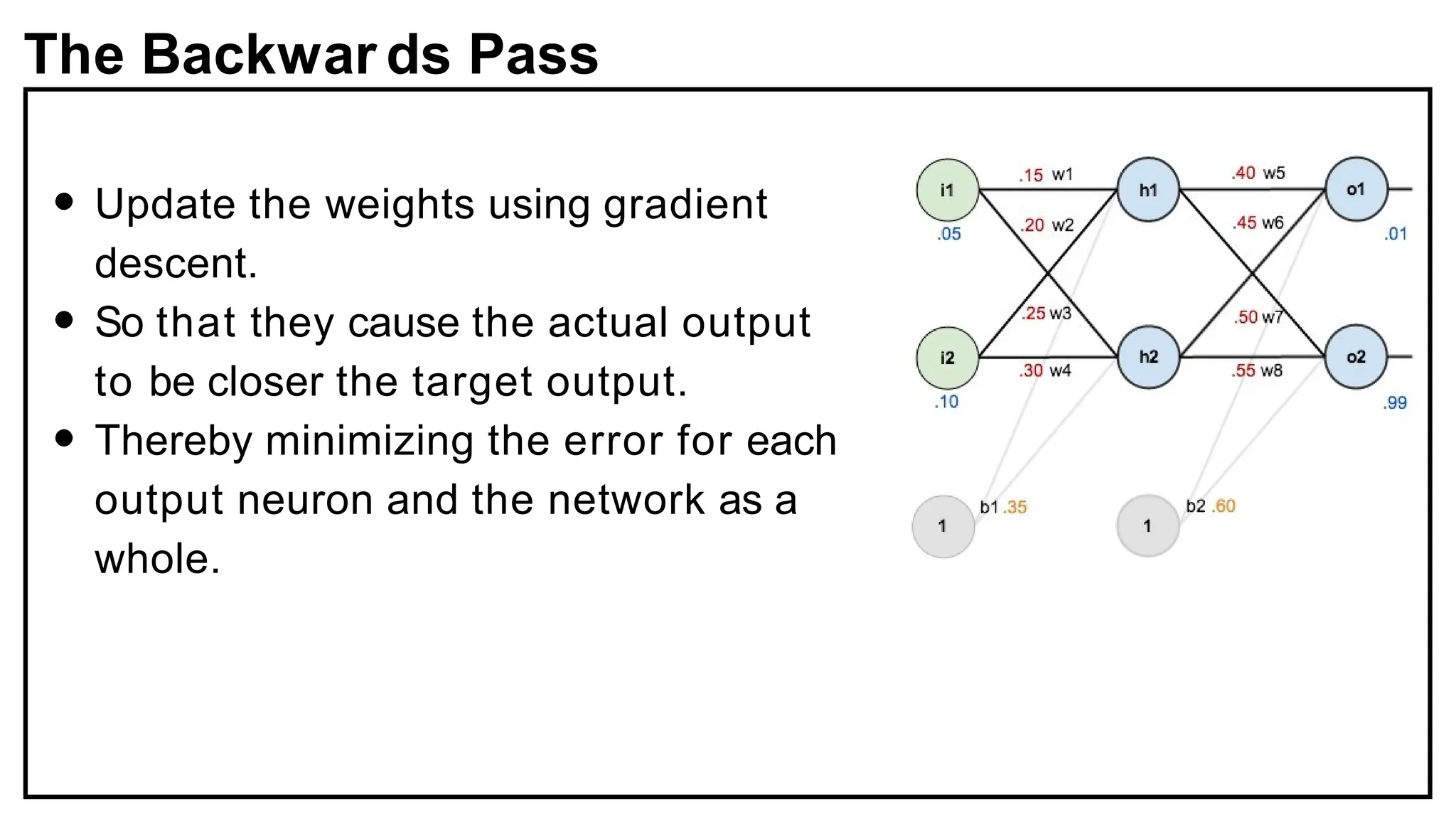

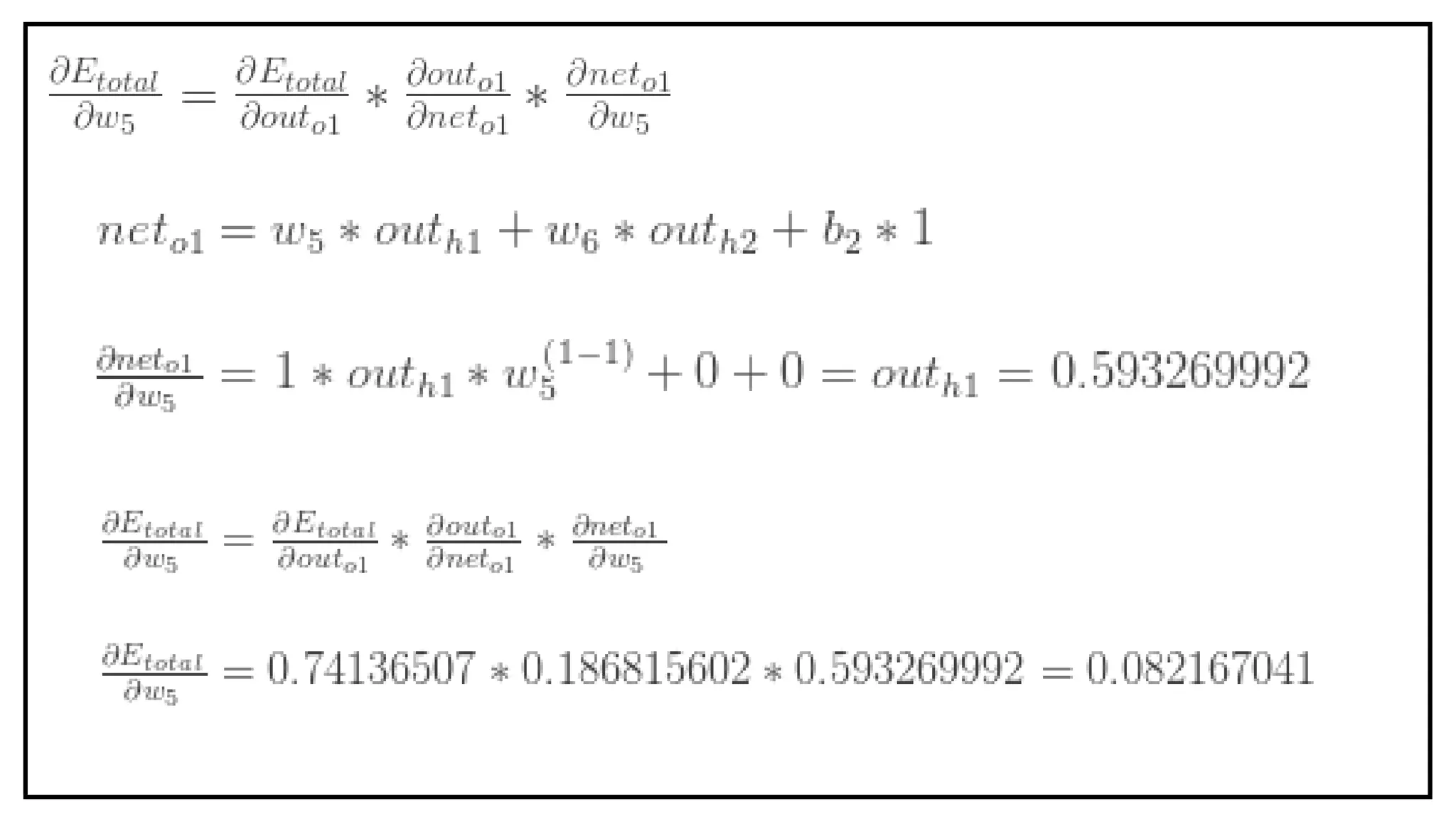

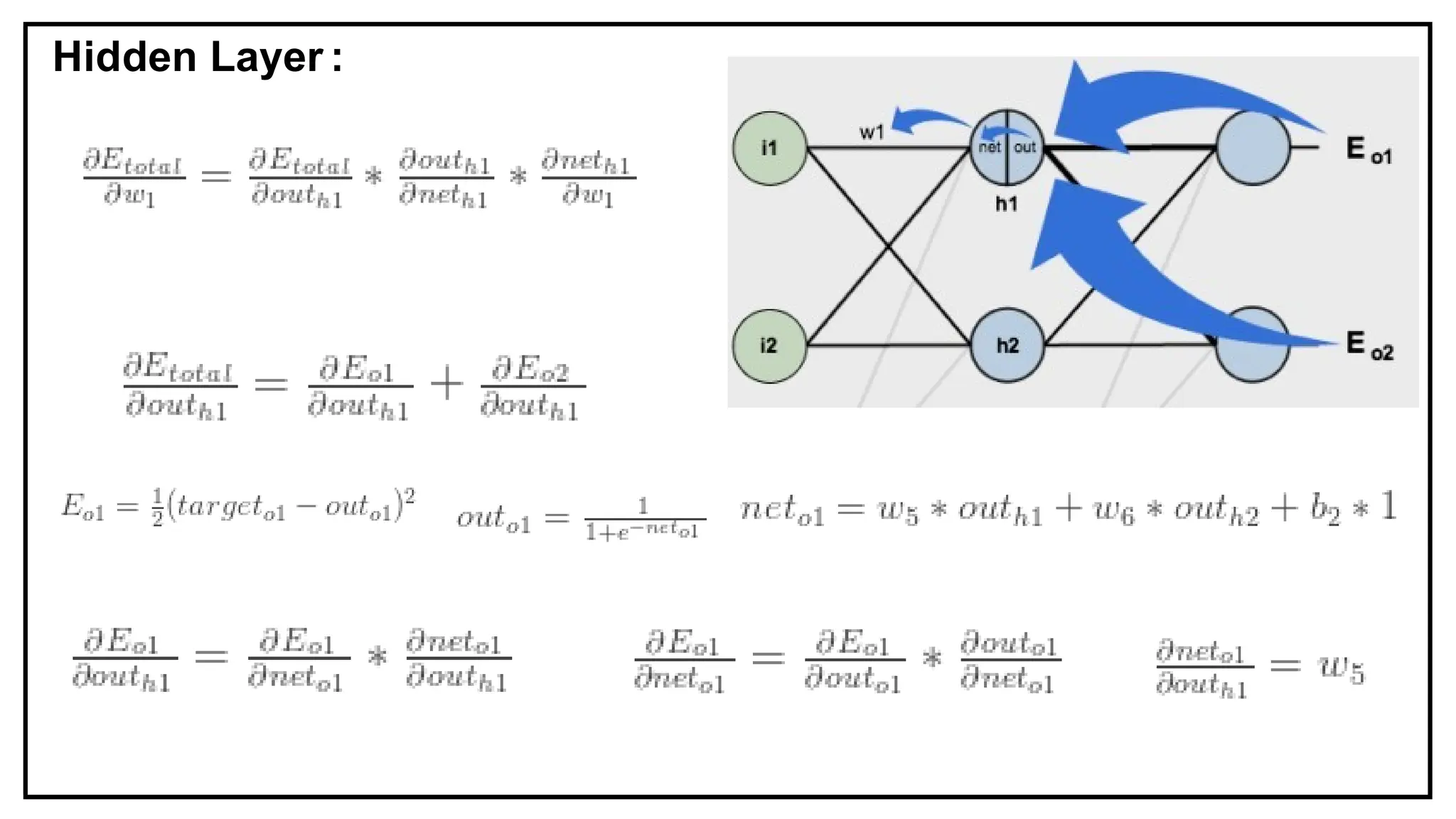

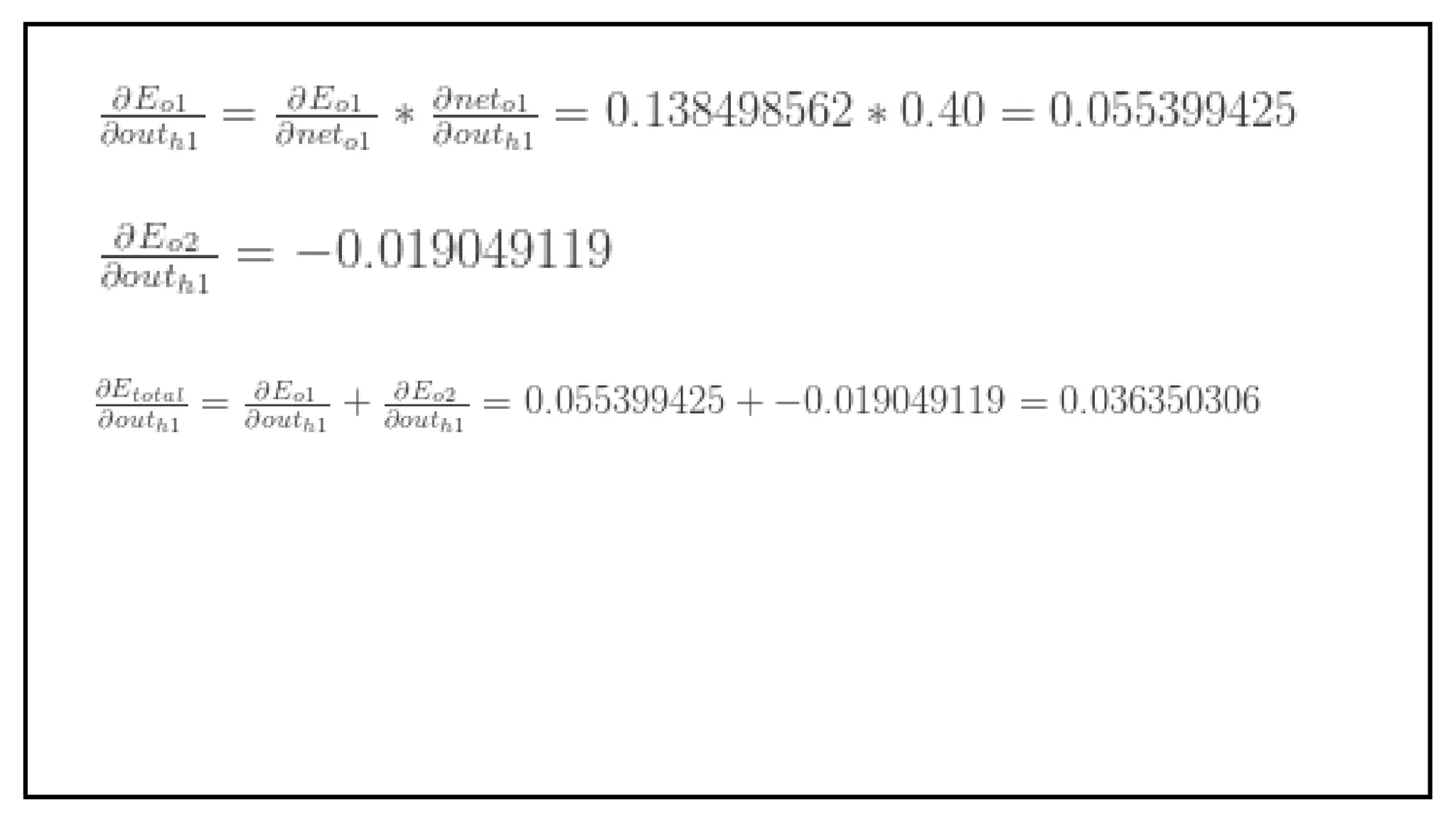

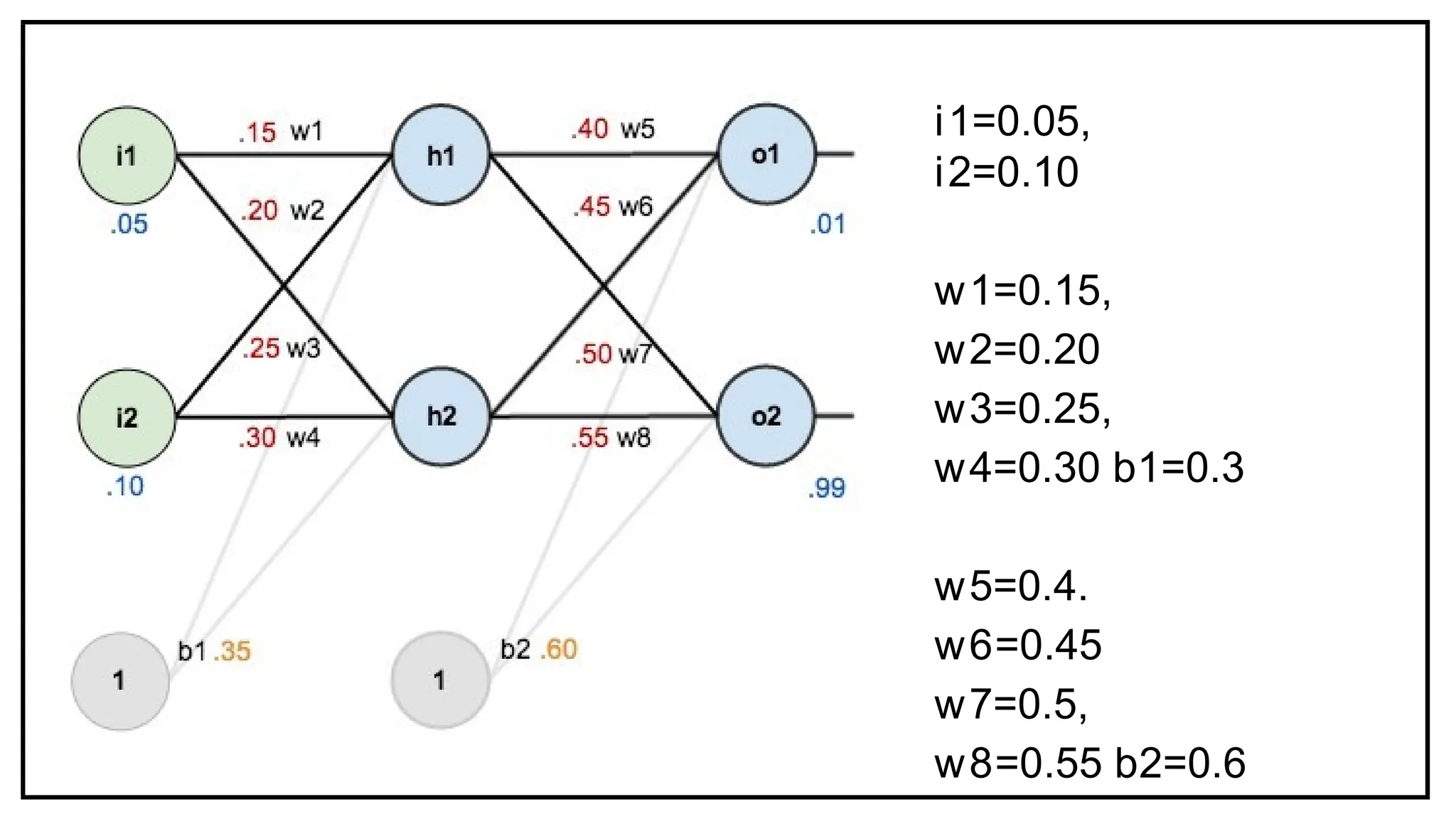

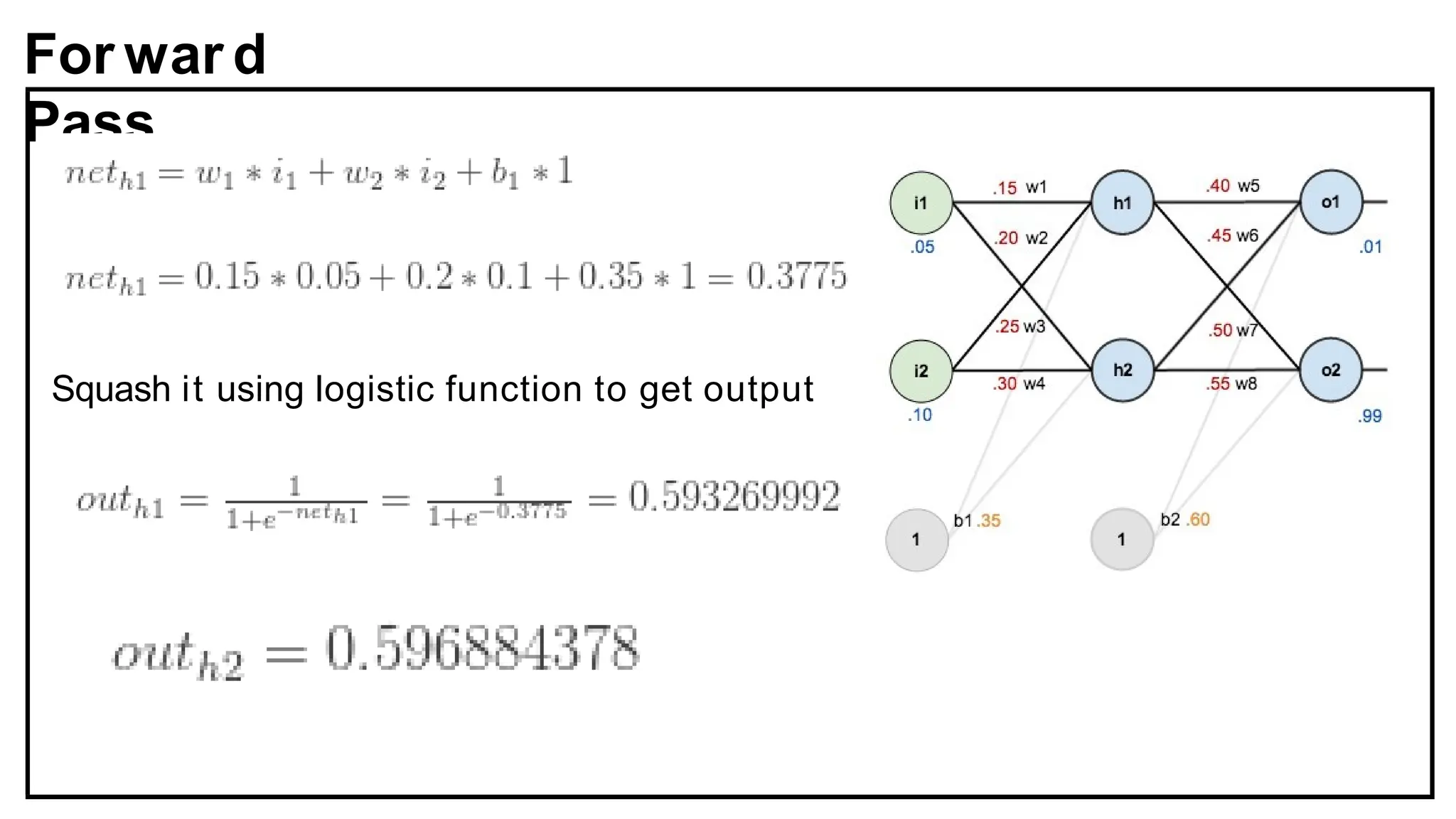

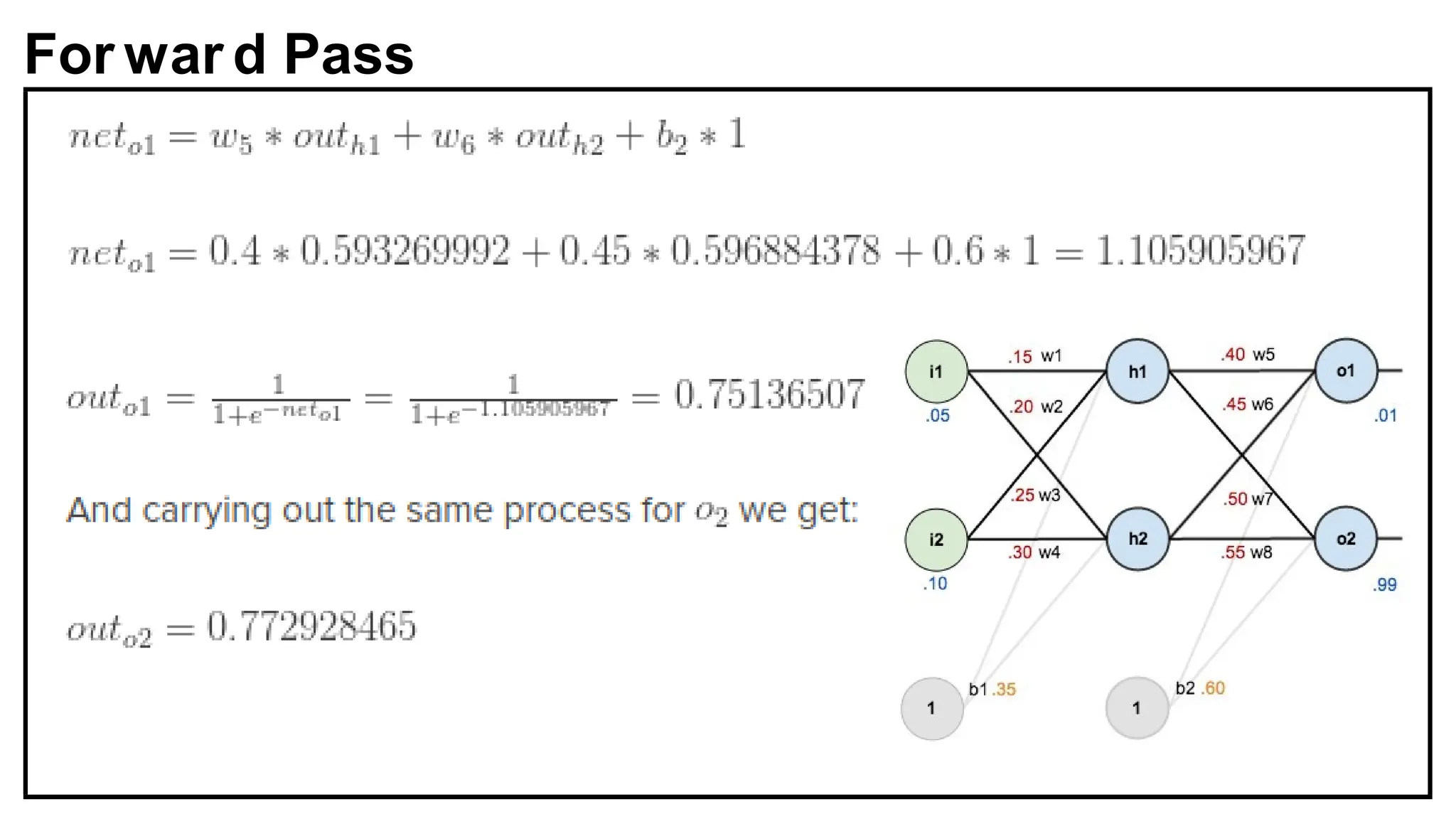

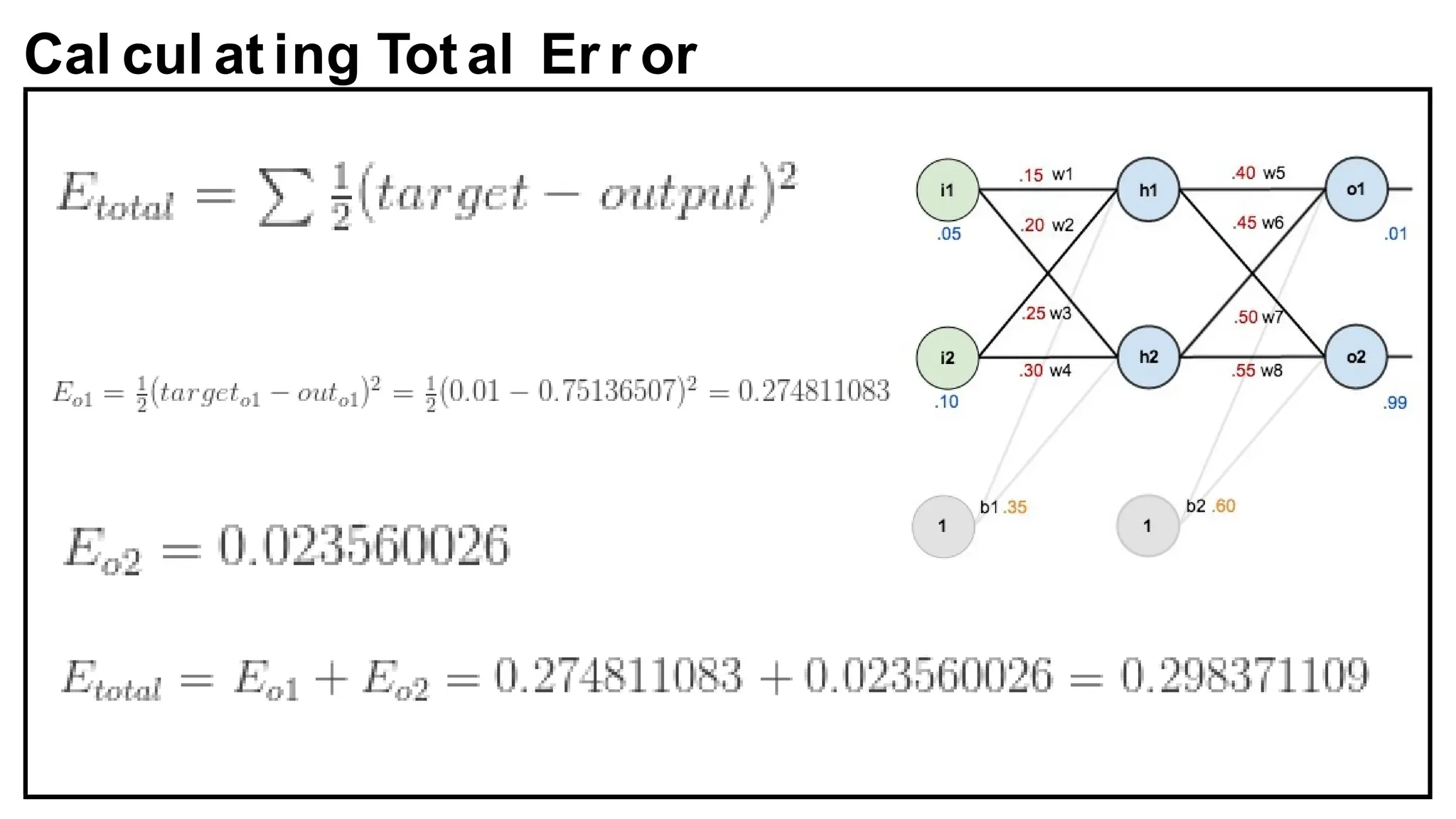

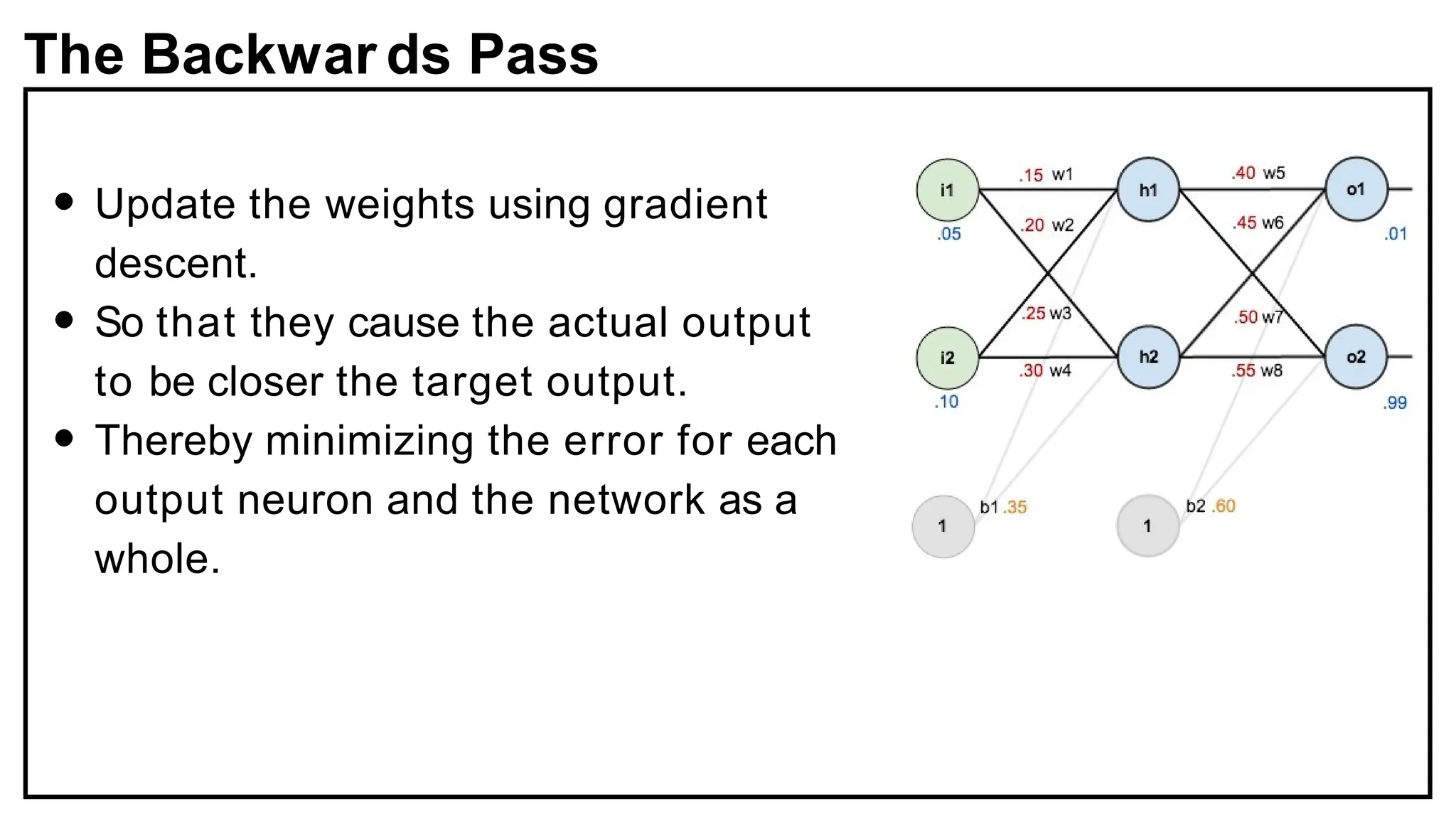

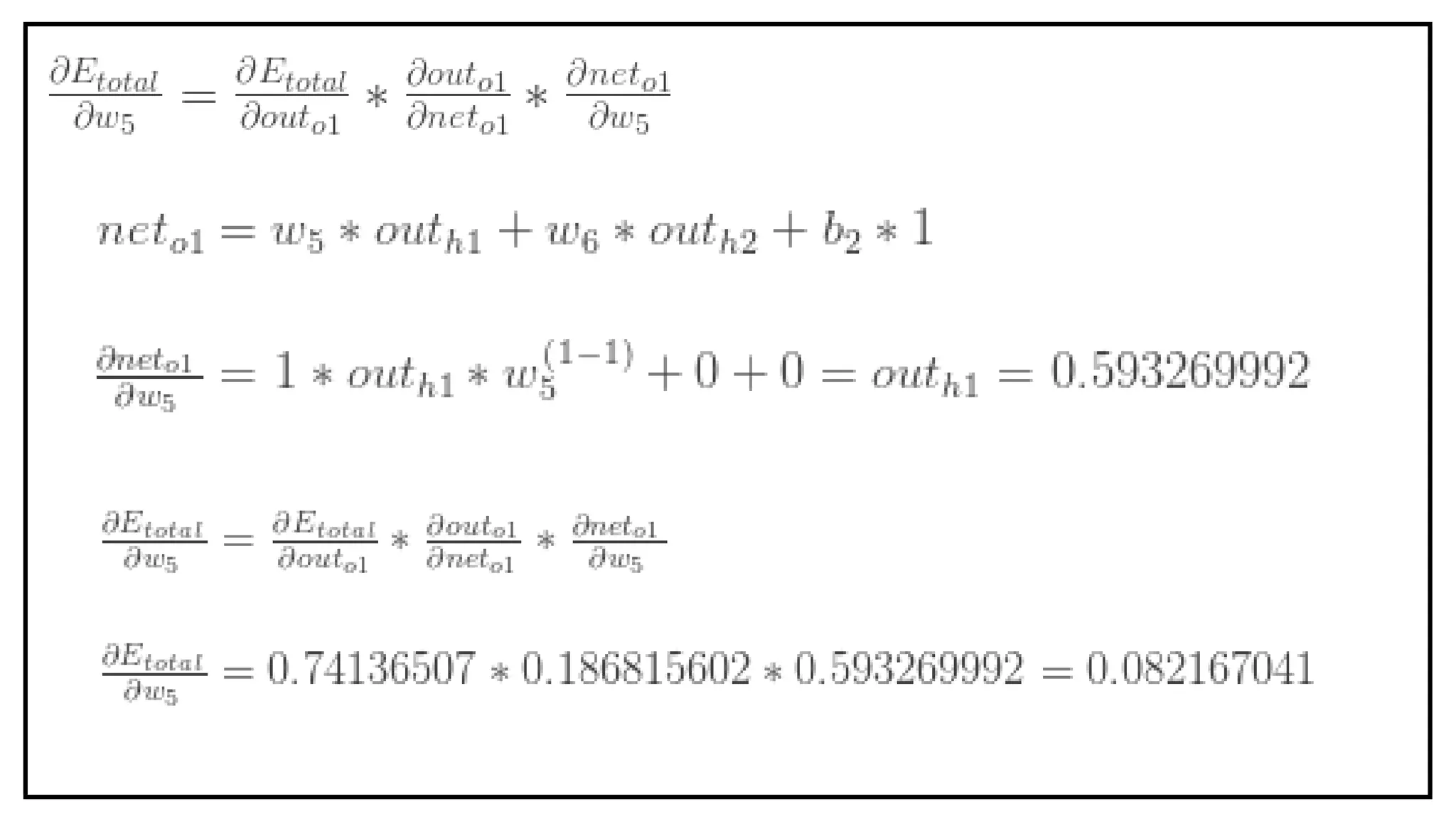

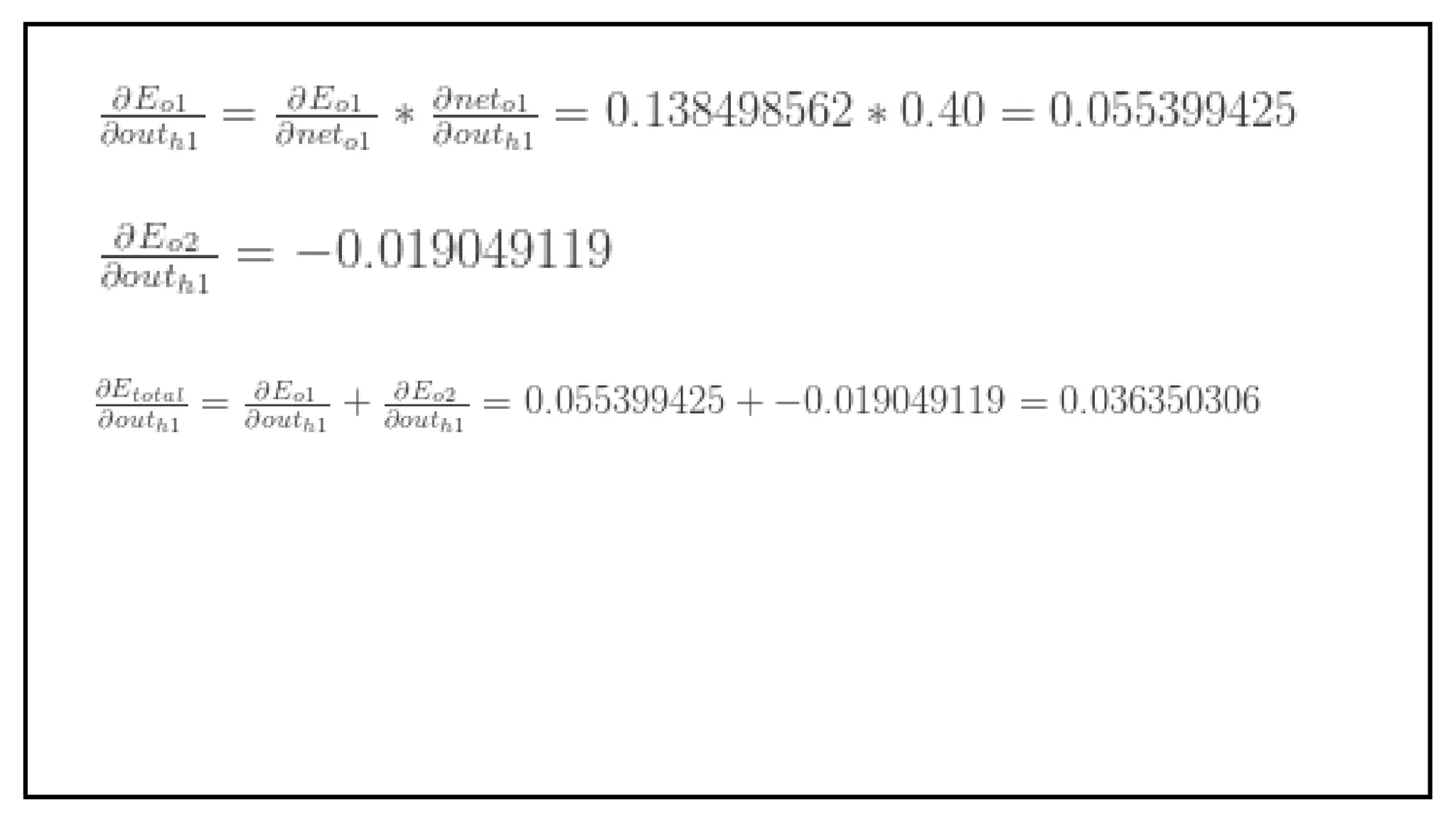

5. The Backwards Pass

Update the weights using gradient descent so that they cause the actual output to be closer to the target output, thereby minimizing the error for each output neuron and the network as a whole.

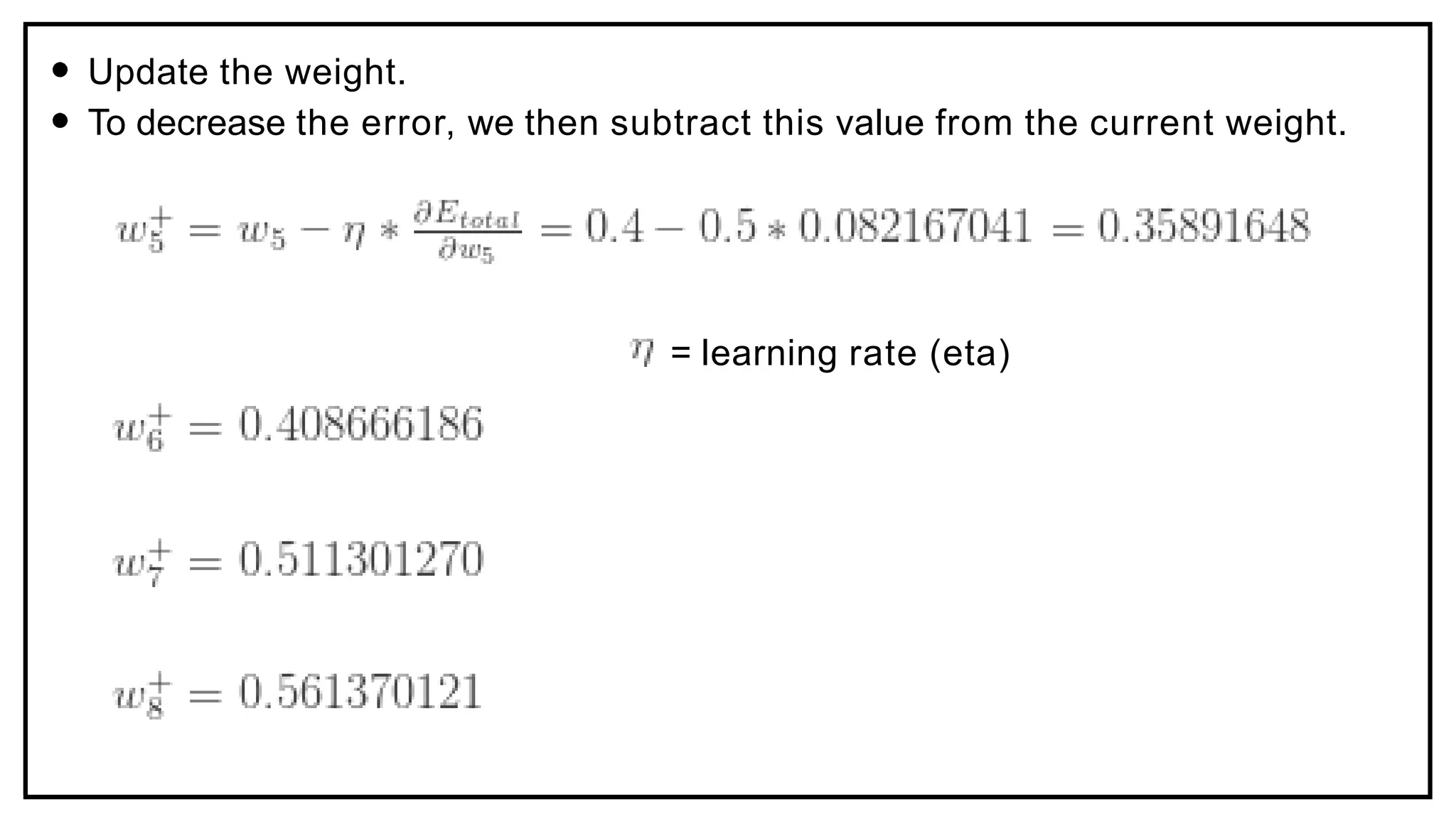

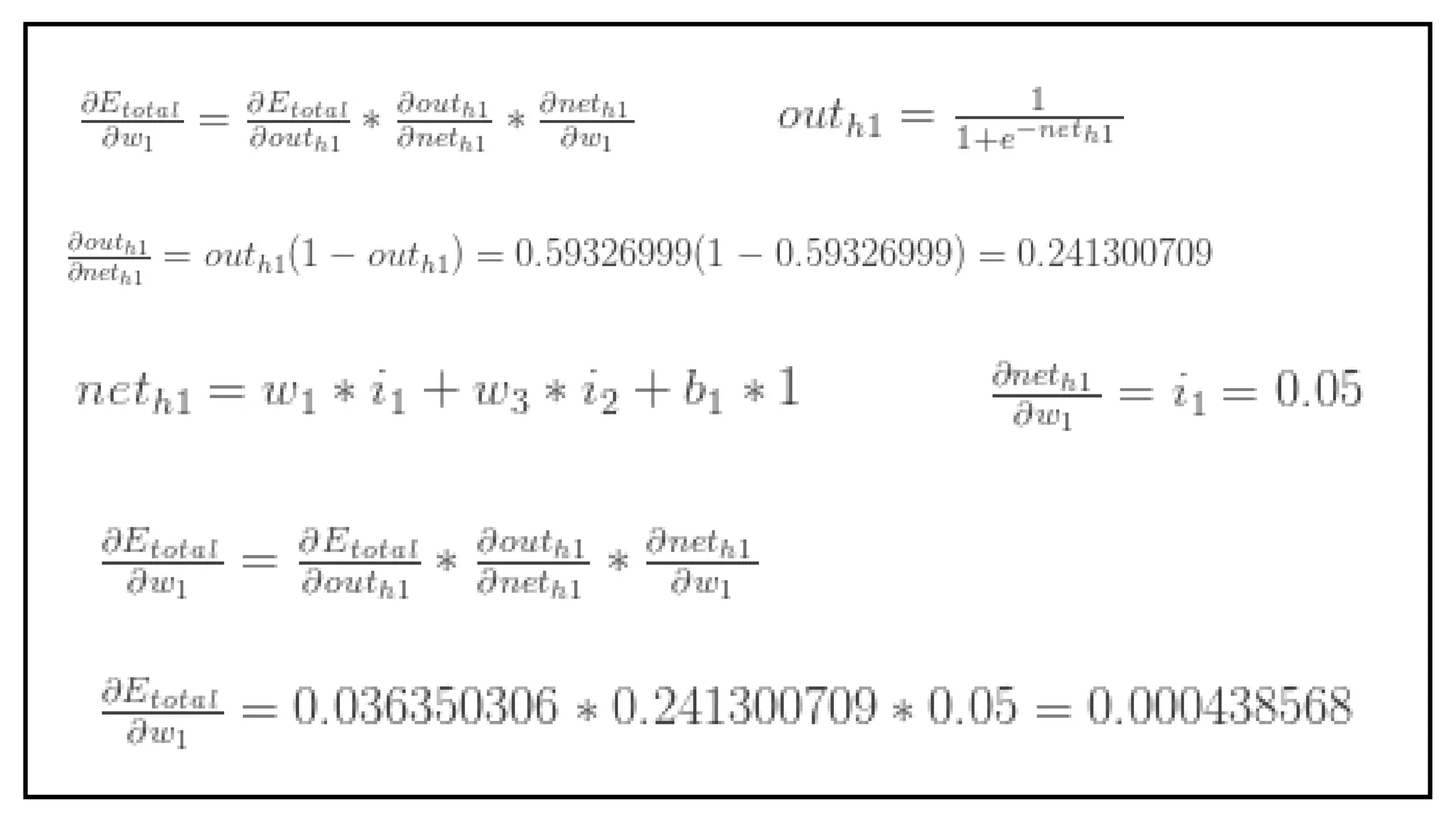

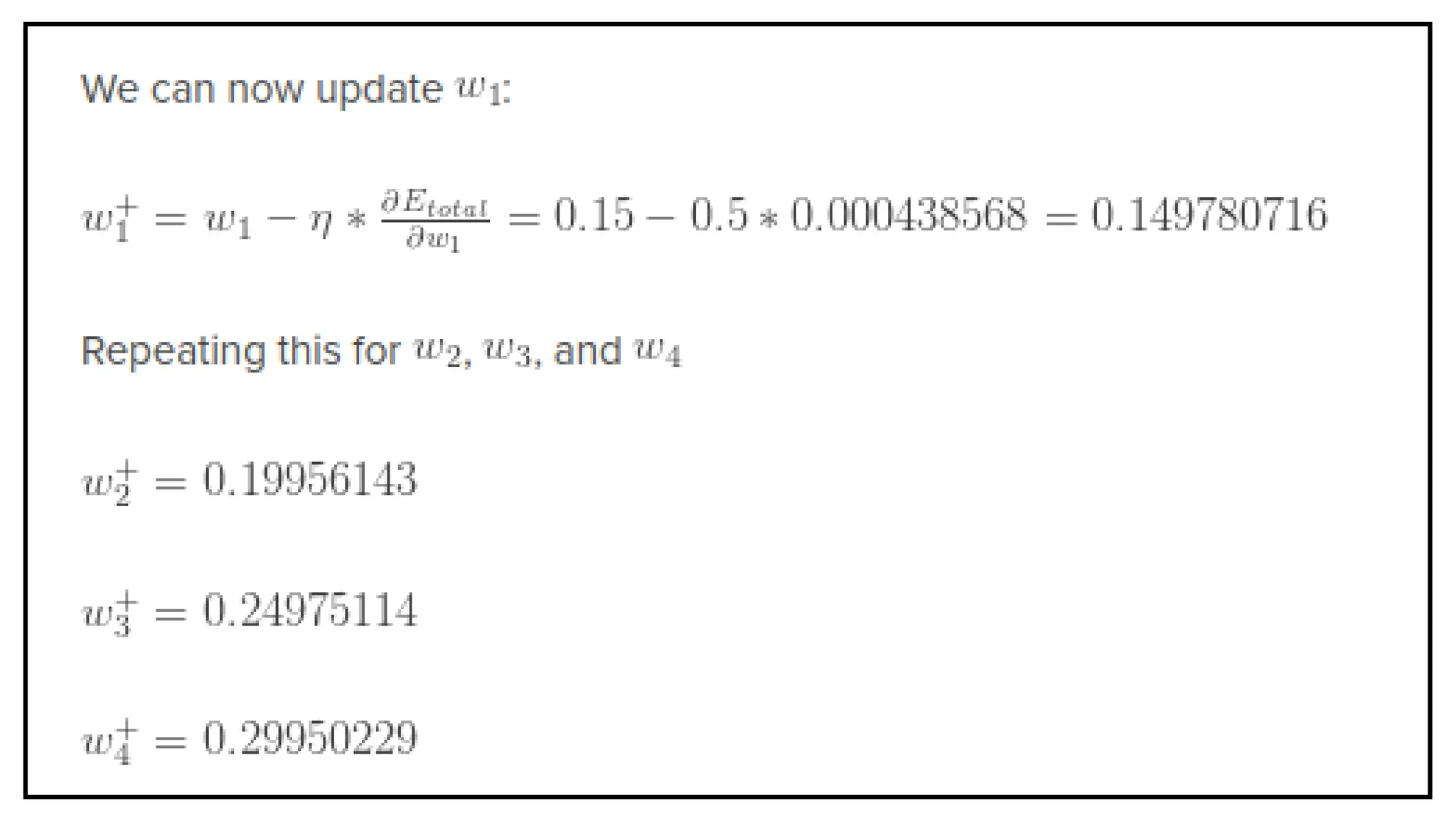

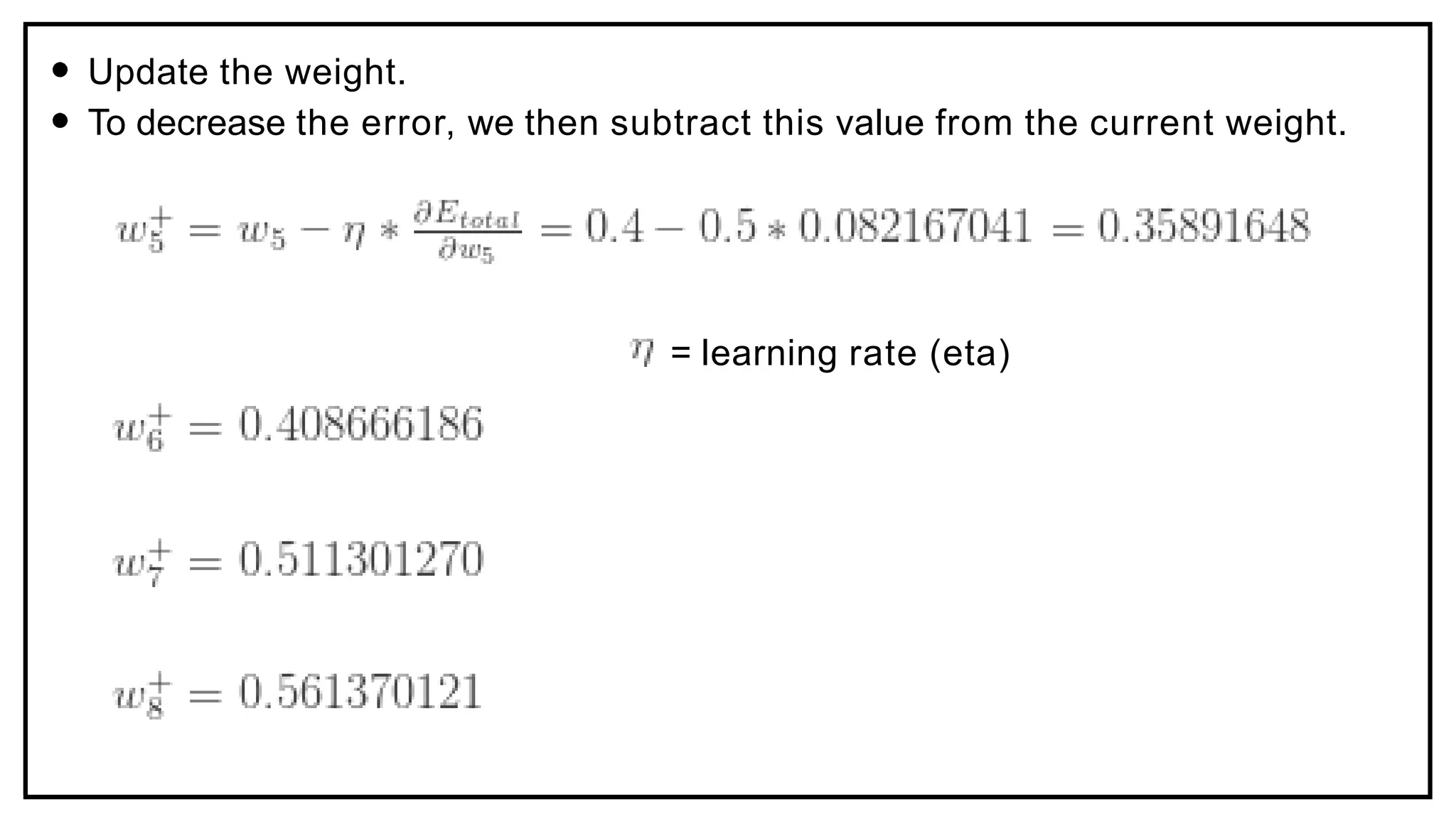

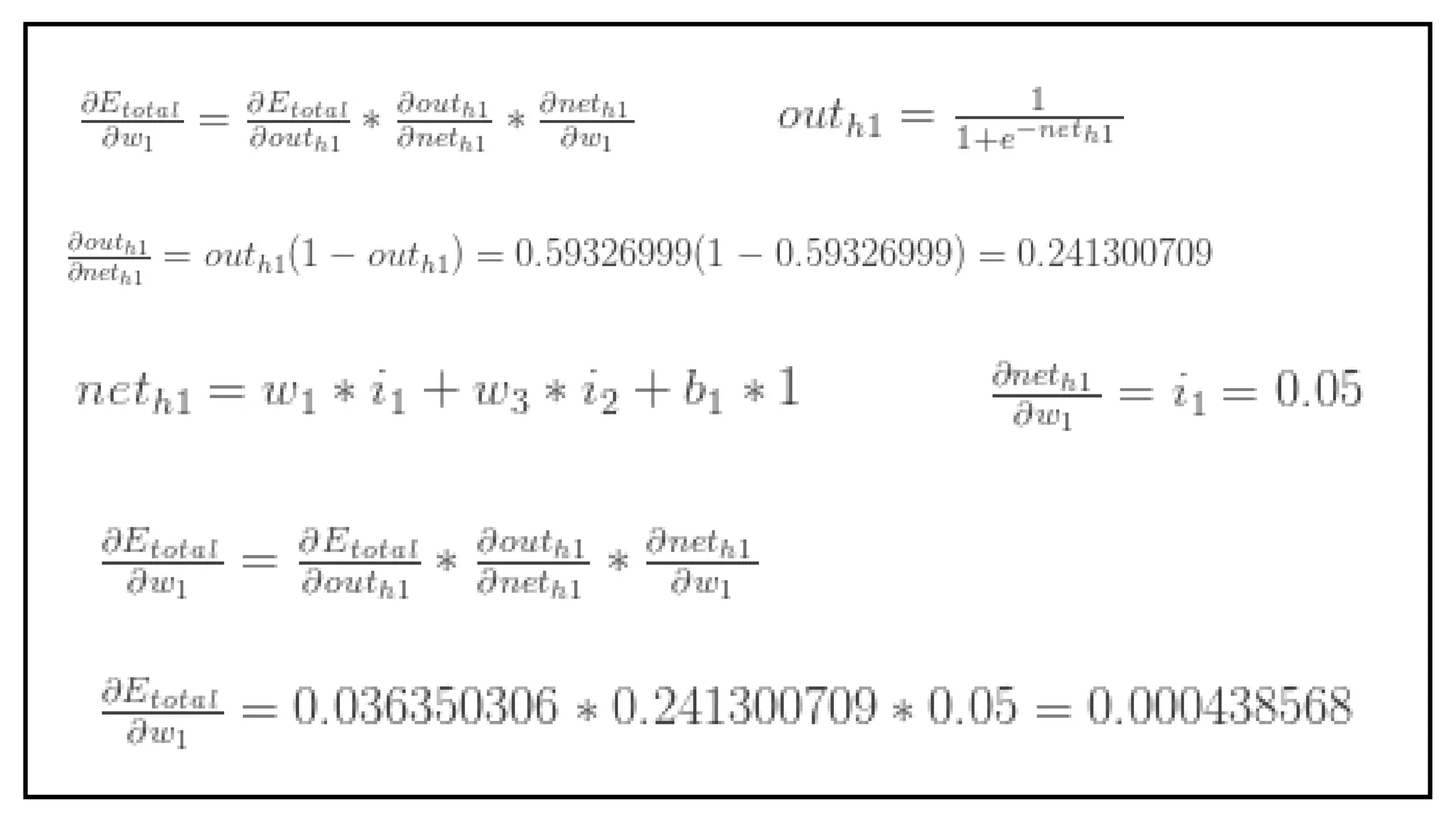

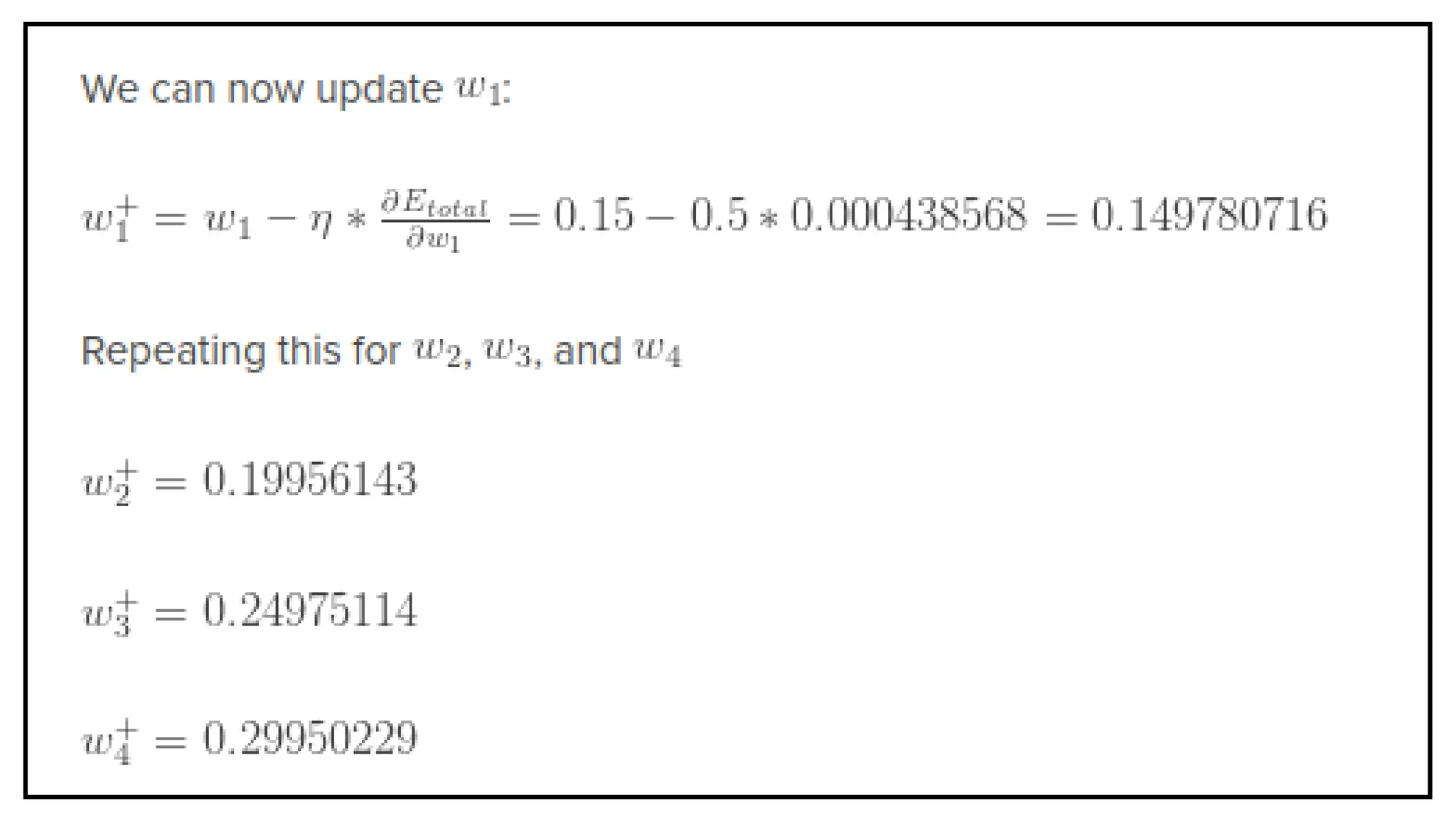

10. Update the weight.

To decrease the error, we then subtract this value, scaled by the learning rate, from the current weight.

η = learning rate (eta)
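The update step can be sketched in a few lines of Python. This is a minimal illustration, not the slide's own code: the function name `update_weight` is hypothetical, and the sample weight, gradient, and learning rate are assumed values chosen only to show the arithmetic.

```python
def update_weight(weight, gradient, learning_rate):
    """One gradient descent step: move the weight against the error gradient."""
    return weight - learning_rate * gradient


# Assumed illustrative values (not stated in the text above).
old_weight = 0.40          # current weight
gradient = 0.082167041     # dE_total / d(weight)
eta = 0.5                  # learning rate

new_weight = update_weight(old_weight, gradient, eta)
print(new_weight)
```

A positive gradient means the error grows as the weight grows, so subtracting it lowers the weight; a negative gradient raises it.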

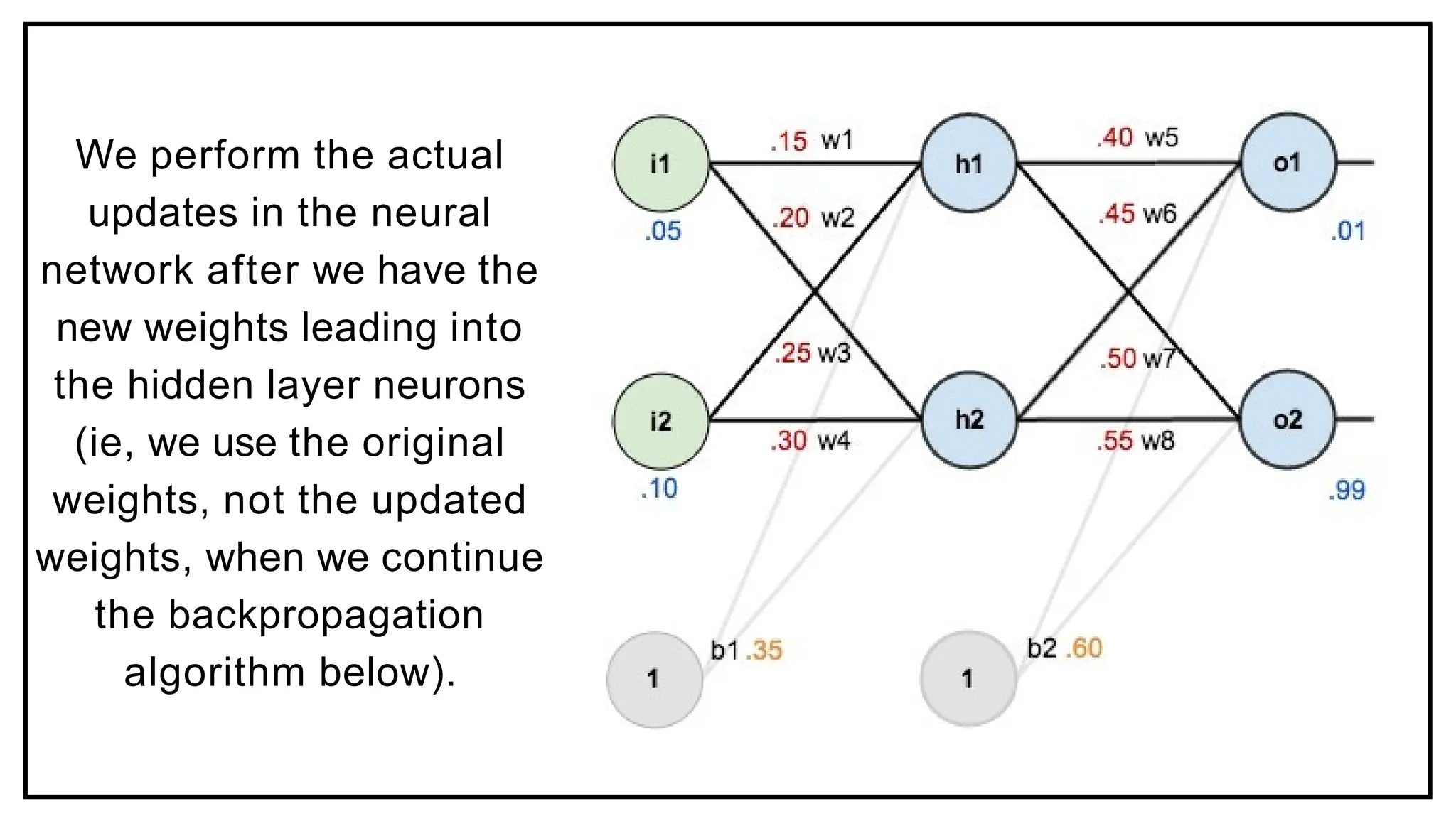

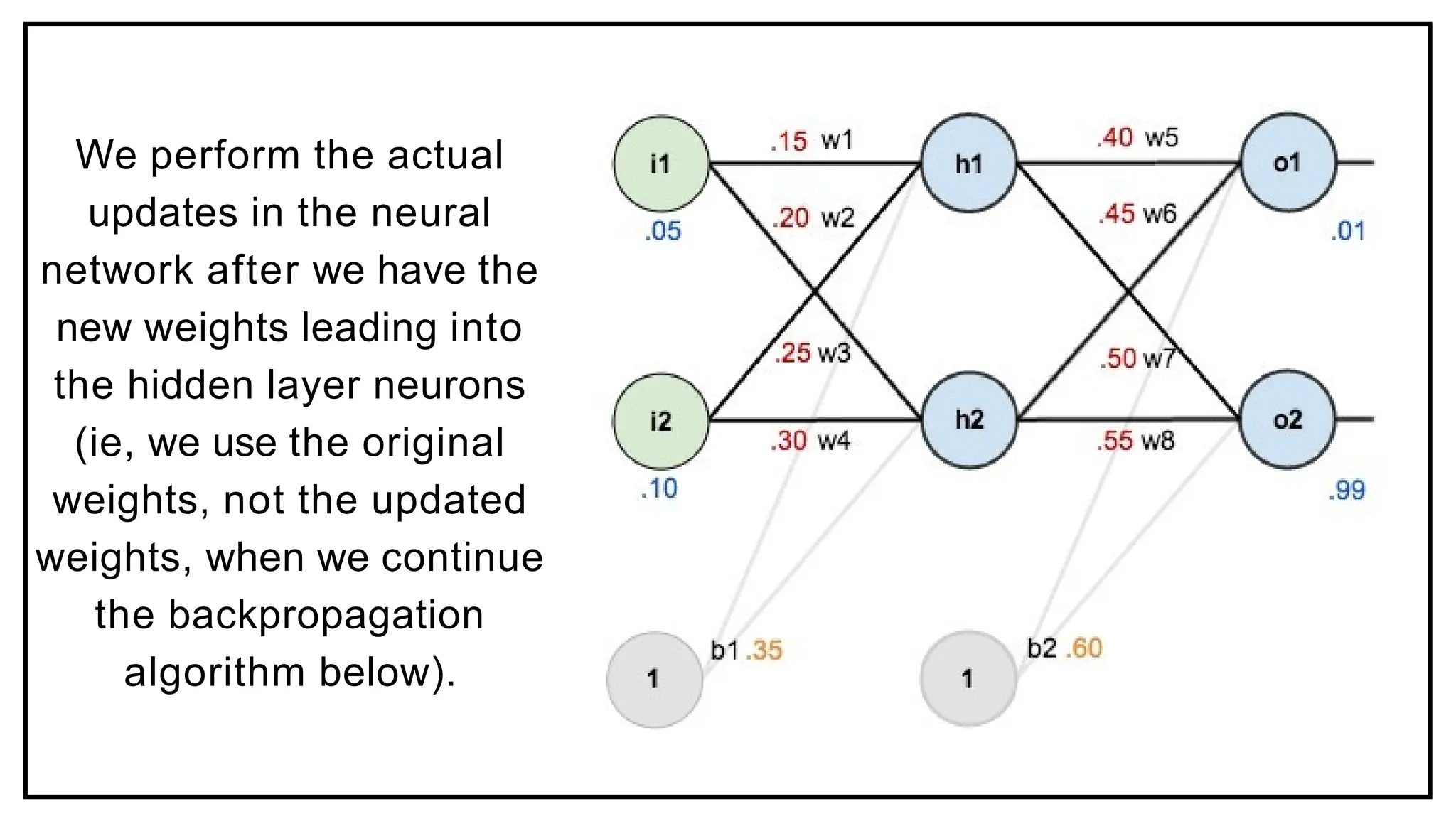

11. We perform the actual updates in the neural network after we have the new weights leading into the hidden layer neurons (i.e., we use the original weights, not the updated weights, when we continue the backpropagation algorithm below).
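The point about using the original weights can be shown with a small sketch. The two-weight linear chain below is an assumption made purely for illustration (it has no activation function and is not the network from the slides): every gradient is computed from the original weights first, and only then are the weights overwritten.

```python
# Toy chain, assumed for illustration: output = w2 * (w1 * x)
x, target = 1.0, 0.0
w1, w2 = 0.5, 2.0
learning_rate = 0.1

# Forward pass with the original weights.
hidden = w1 * x
output = w2 * hidden

# Backward pass for E = 0.5 * (output - target) ** 2.
delta = output - target        # dE / d(output)
grad_w2 = delta * hidden       # dE / d(w2)
grad_w1 = delta * w2 * x       # dE / d(w1), computed with the ORIGINAL w2

# Compute every new weight before overwriting any of them.
new_w1 = w1 - learning_rate * grad_w1
new_w2 = w2 - learning_rate * grad_w2
w1, w2 = new_w1, new_w2

print(w1, w2)
```

If `w2` were overwritten before `grad_w1` was computed, the gradient for `w1` would be taken at a point the forward pass never visited, and the step would no longer correspond to the measured error.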

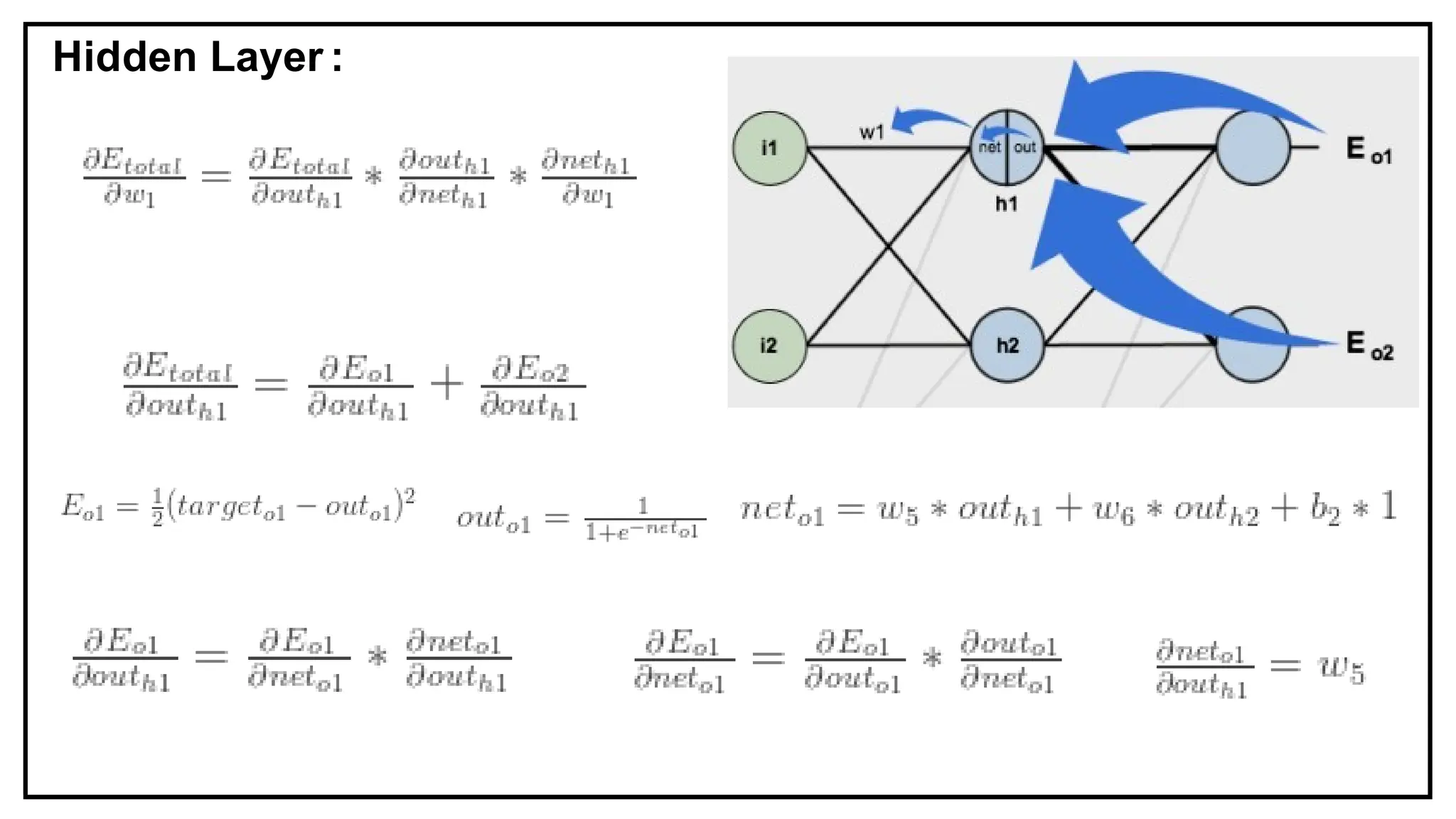

16. Finally, we’ve updated all of our weights!

When we fed forward the 0.05 and 0.1 inputs originally, the error on the network was 0.298371109.

After this first round of backpropagation, the total error is now down to 0.291027924.
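A full round can be sketched end to end in plain Python. Only the two inputs and the two error figures come from the text above; everything else here is an assumption: a 2-2-2 network with sigmoid activations, squared-error loss, the initial weights, biases, targets, and a learning rate of 0.5 are taken from a widely used worked example with the same inputs, and the biases are held fixed. Under those assumptions the sketch is intended to land on the quoted errors to within rounding.

```python
import math


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def forward(inputs, w_hidden, b_hidden, w_output, b_output):
    """Return (hidden activations, output activations)."""
    hidden = [sigmoid(sum(w * i for w, i in zip(row, inputs)) + b_hidden)
              for row in w_hidden]
    outputs = [sigmoid(sum(w * h for w, h in zip(row, hidden)) + b_output)
               for row in w_output]
    return hidden, outputs


def total_error(outputs, targets):
    return sum(0.5 * (t - o) ** 2 for o, t in zip(outputs, targets))


def train_step(inputs, targets, w_hidden, b_hidden, w_output, b_output, lr):
    """One round of backpropagation; biases are left unchanged."""
    hidden, outputs = forward(inputs, w_hidden, b_hidden, w_output, b_output)
    # dE/d(net) for each output neuron.
    delta_out = [(o - t) * o * (1 - o) for o, t in zip(outputs, targets)]
    # dE/d(net) for each hidden neuron, using the ORIGINAL output weights.
    delta_hid = [
        sum(delta_out[k] * w_output[k][j] for k in range(len(delta_out)))
        * hidden[j] * (1 - hidden[j])
        for j in range(len(hidden))
    ]
    new_w_output = [[w - lr * delta_out[k] * hidden[j]
                     for j, w in enumerate(row)]
                    for k, row in enumerate(w_output)]
    new_w_hidden = [[w - lr * delta_hid[j] * inputs[i]
                     for i, w in enumerate(row)]
                    for j, row in enumerate(w_hidden)]
    return new_w_hidden, new_w_output


# Inputs are from the text; all other values are assumptions (see lead-in).
inputs, targets = [0.05, 0.10], [0.01, 0.99]
w_hidden, b_hidden = [[0.15, 0.20], [0.25, 0.30]], 0.35
w_output, b_output = [[0.40, 0.45], [0.50, 0.55]], 0.60

_, out = forward(inputs, w_hidden, b_hidden, w_output, b_output)
error_before = total_error(out, targets)

w_hidden, w_output = train_step(inputs, targets, w_hidden, b_hidden,
                                w_output, b_output, lr=0.5)
_, out = forward(inputs, w_hidden, b_hidden, w_output, b_output)
error_after = total_error(out, targets)

print(error_before, error_after)
```

The drop after a single round is small; repeating `train_step` many times is what drives the error toward zero.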