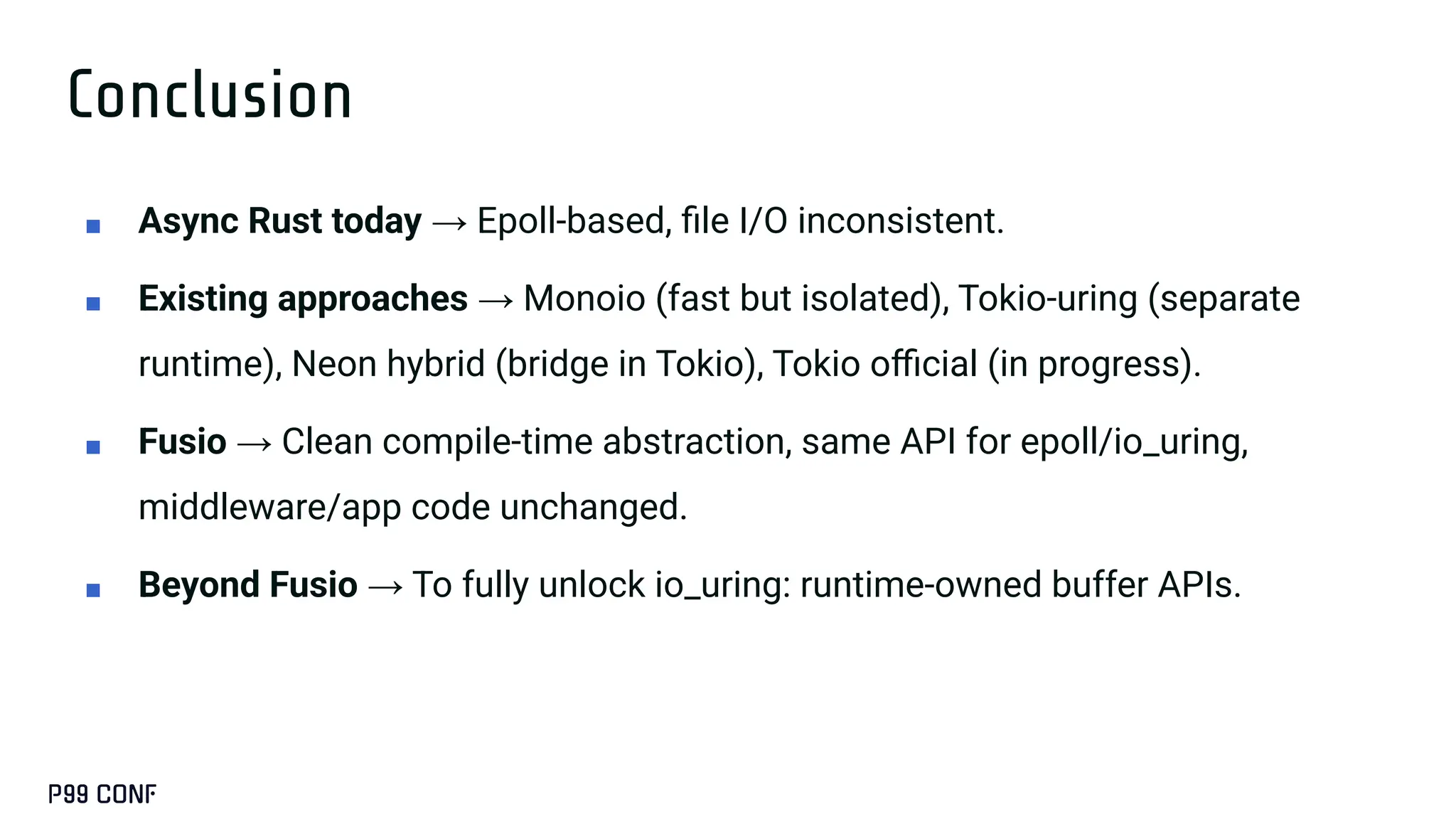

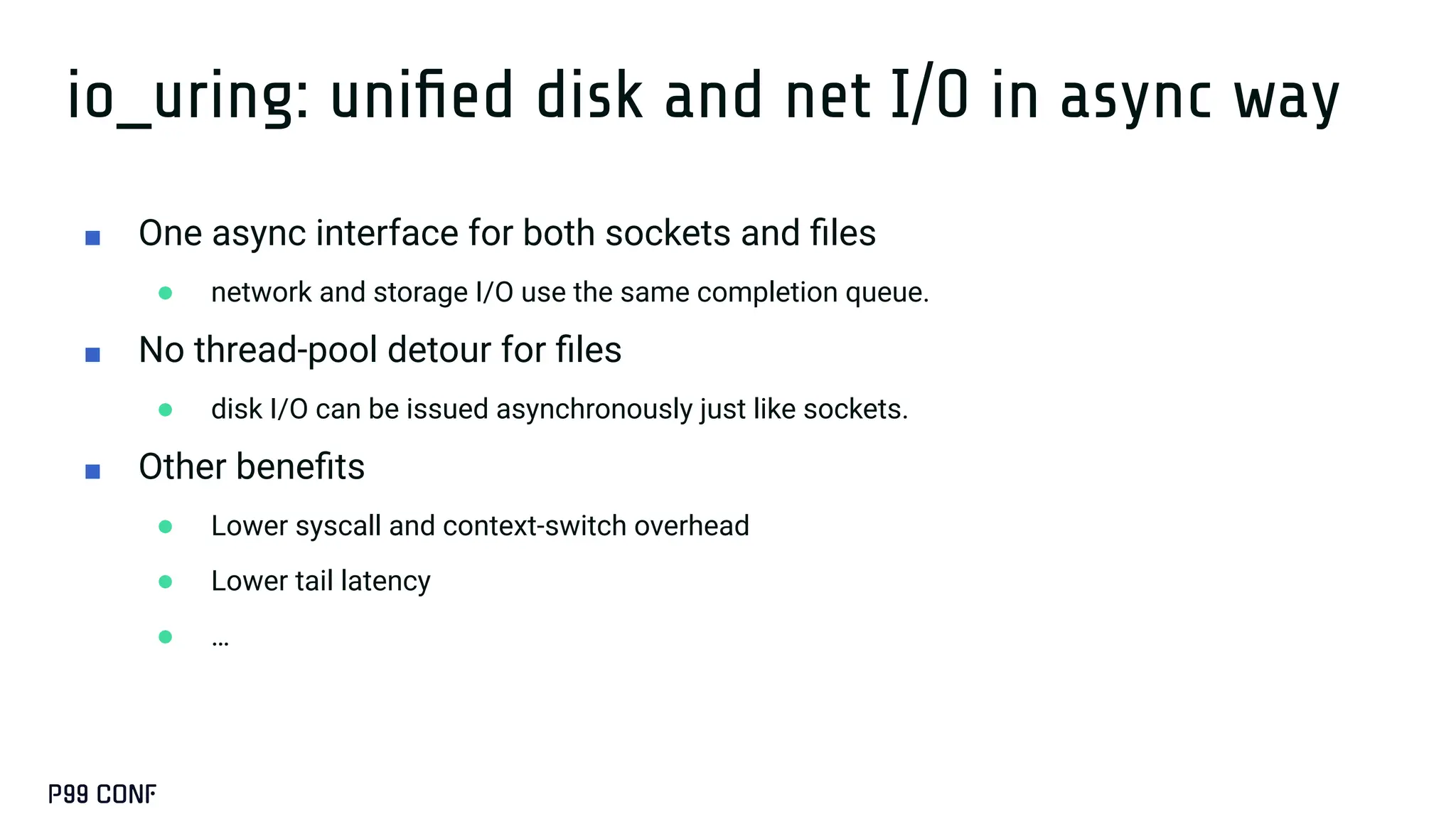

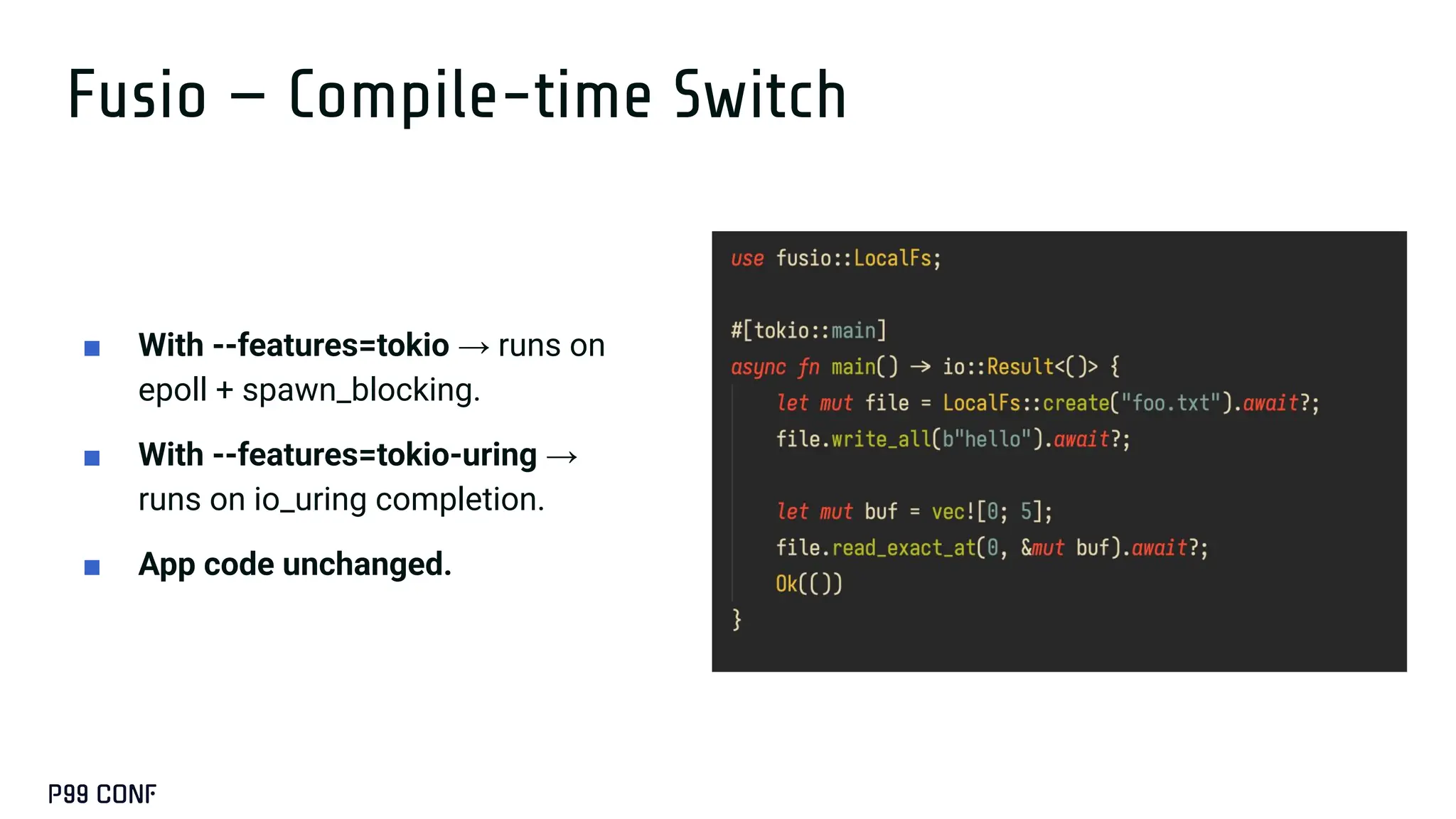

Tokio dominates async Rust, but its epoll-based model makes it hard to adopt io_uring. This talk explains why async Rust’s design creates that friction and introduces an approach to support both I/O models: switching runtimes at compile time. With this method, I/O middleware can work with epoll and io_uring without changing its code.
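A minimal sketch of what compile-time runtime switching could look like, assuming a Cargo feature named `uring` selects the backend. Every name here (`Backend`, `EpollBackend`, `UringBackend`, `Rt`, `middleware_uppercase`) is hypothetical and not taken from the talk, and both backends are stubs that only copy bytes so the example stays self-contained.

```rust
/// Hypothetical backend abstraction; the names are illustrative only.
trait Backend {
    const NAME: &'static str;
    /// Stand-in for a real read operation: returns the bytes it "read".
    fn read_all(input: &[u8]) -> Vec<u8>;
}

#[allow(dead_code)]
struct EpollBackend;
#[allow(dead_code)]
struct UringBackend;

impl Backend for EpollBackend {
    const NAME: &'static str = "epoll";
    fn read_all(input: &[u8]) -> Vec<u8> {
        input.to_vec()
    }
}

impl Backend for UringBackend {
    const NAME: &'static str = "io_uring";
    fn read_all(input: &[u8]) -> Vec<u8> {
        input.to_vec()
    }
}

// The backend is chosen once, at compile time. The `uring` feature name is an
// assumption; without it the epoll stub is selected.
#[cfg(feature = "uring")]
type Rt = UringBackend;
#[cfg(not(feature = "uring"))]
type Rt = EpollBackend;

/// Middleware is written once against the `Rt` alias and never names a backend.
fn middleware_uppercase(input: &[u8]) -> Vec<u8> {
    Rt::read_all(input).to_ascii_uppercase()
}

fn main() {
    let out = middleware_uppercase(b"ping");
    println!("backend = {}, output = {}", Rt::NAME, String::from_utf8_lossy(&out));
}
```

Because the choice is made through a type alias, the middleware function compiles unchanged against either backend, and there is no dynamic dispatch at run time.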

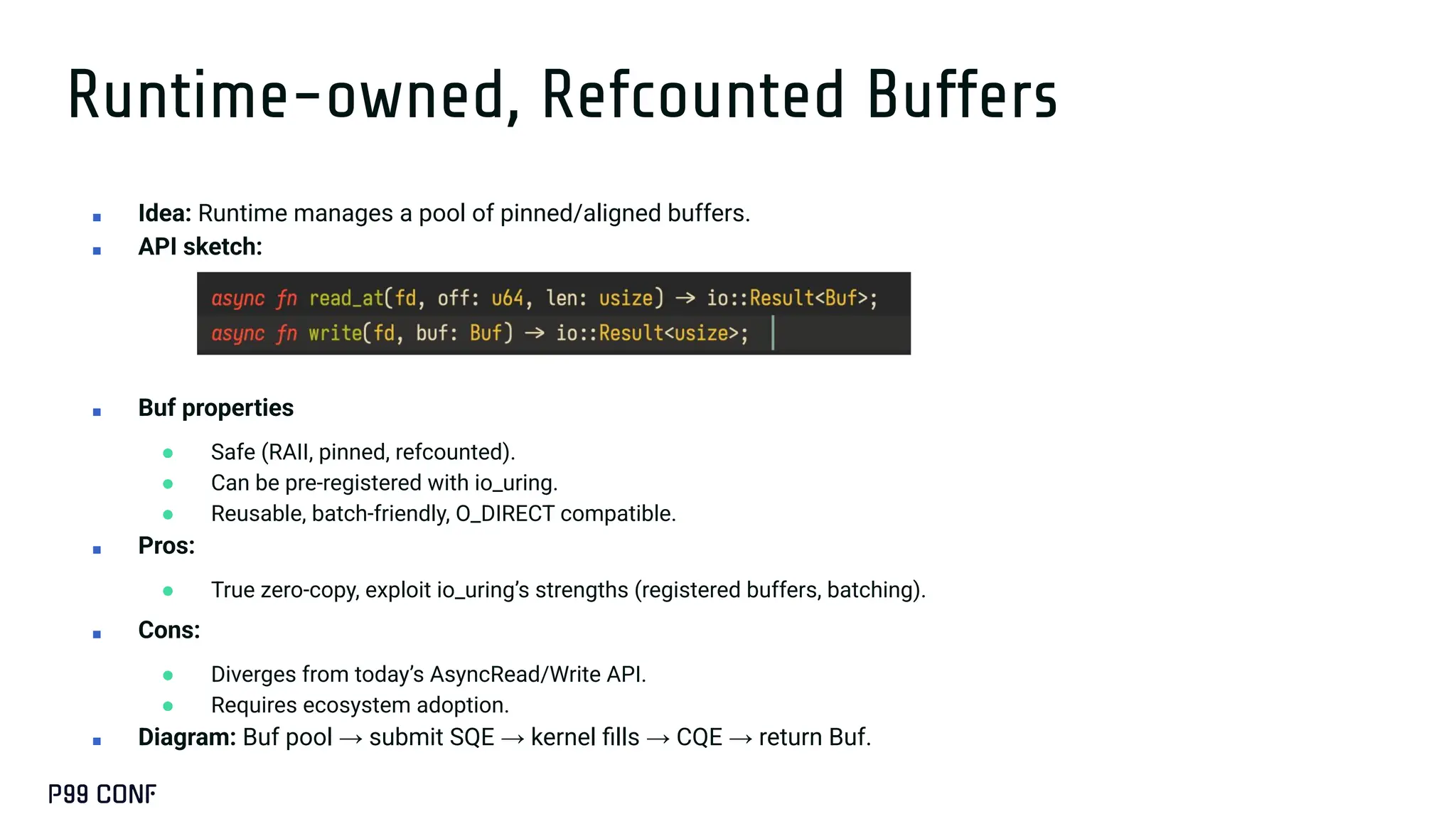

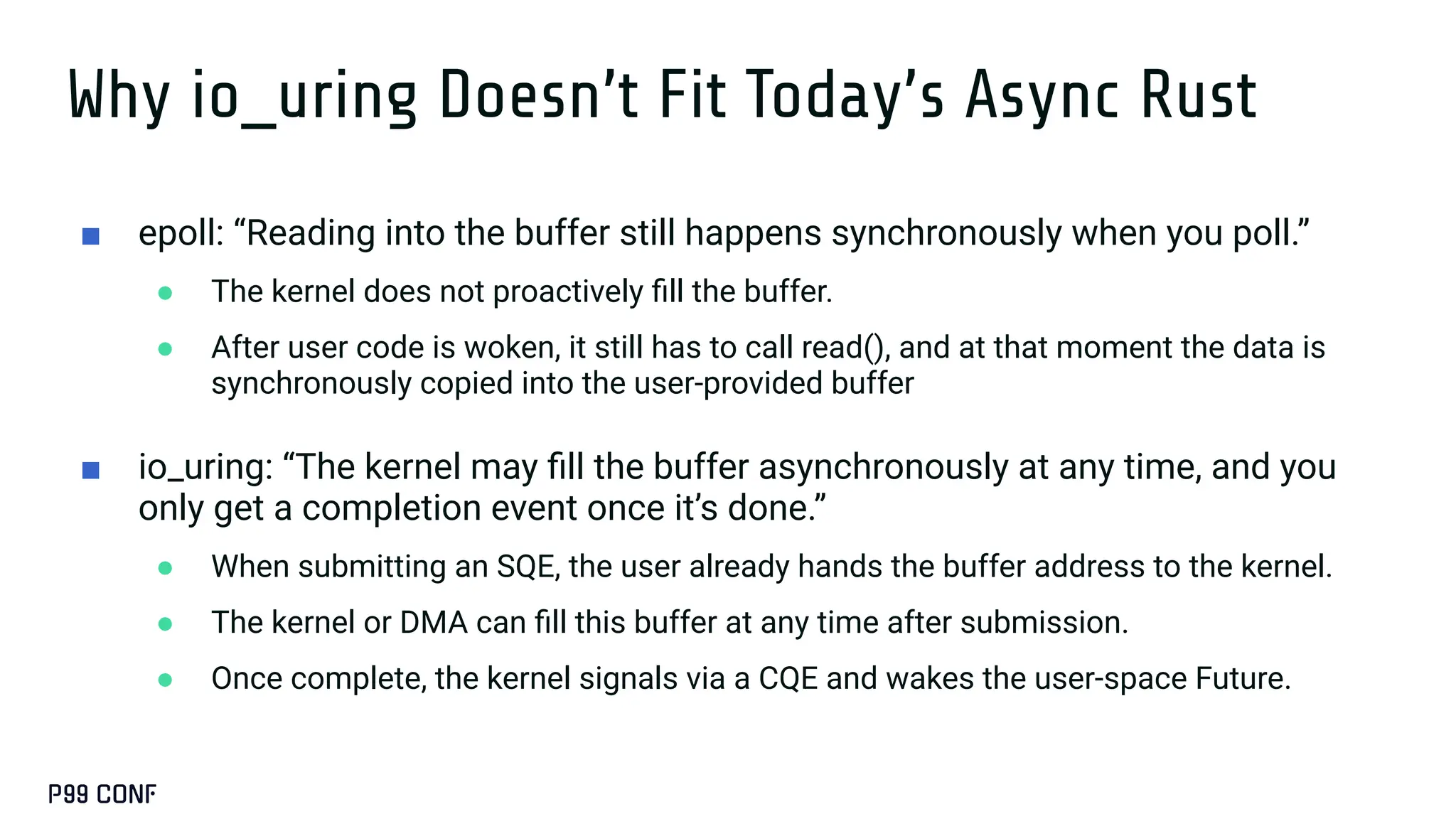

![The Buffer Problem

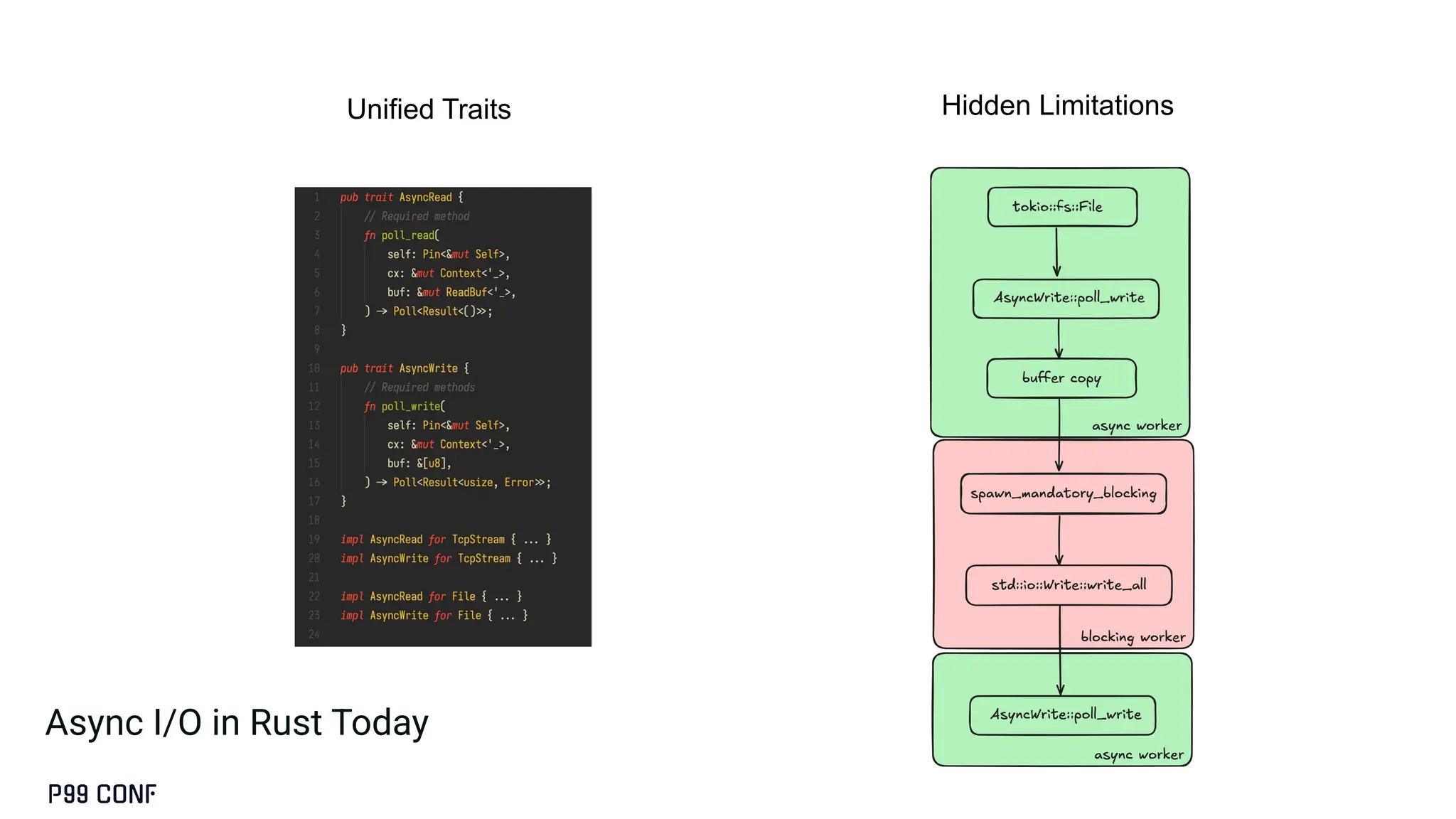

■ Current async traits

● AsyncRead/Write expect caller-provided &mut [u8].

● Works for epoll (readiness: copy happens synchronously).

■ Problem with io_uring

● Kernel may fill buffer at any time after submission.

● Runtime must guarantee buffer lifetime, alignment, pinning.

■ Implication

● If we stick to current API, runtime has to copy from its own buffer → extra overhead.

■ Takeaway: To fully unlock io_uring, we need new buffer semantics.](https://image.slidesharecdn.com/tzugwop99conf2025slide-251017221822-1fdeabf1/75/Bridging-epoll-and-io_uring-in-Async-Rust-by-Tzu-Gwo-19-2048.jpg)
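The difference between the two buffer models can be sketched with plain function signatures. This is an illustration under stated assumptions, not the API proposed in the talk: `read_borrowed` and `read_owned` are hypothetical names, and a synchronous `std::io::Read` source stands in for the kernel so the example runs without an async runtime. The owned variant passes the buffer by value and returns it with the result, the general shape used by completion-based Rust runtimes.

```rust
use std::io::{self, Read};

/// Readiness-style read: the caller lends the buffer only for the duration of
/// the call, so the copy is finished by the time the function returns.
fn read_borrowed<R: Read>(src: &mut R, buf: &mut [u8]) -> io::Result<usize> {
    src.read(buf)
}

/// Completion-style read (hypothetical shape): the caller gives up ownership of
/// the buffer for the whole operation and gets it back alongside the result.
/// In a real io_uring runtime the kernel would fill `buf` asynchronously; here
/// a synchronous read stands in for that step.
fn read_owned<R: Read>(src: &mut R, mut buf: Vec<u8>) -> (io::Result<usize>, Vec<u8>) {
    let res = src.read(&mut buf);
    (res, buf)
}

fn main() {
    let mut src: &[u8] = b"hello io_uring";

    // Borrowed buffer: a stack array is fine because the borrow ends on return.
    let mut small = [0u8; 5];
    let n = read_borrowed(&mut src, &mut small).unwrap();
    println!("borrowed read: {:?}", String::from_utf8_lossy(&small[..n]));

    // Owned buffer: the heap allocation moves in and comes back out.
    let (res, buf) = read_owned(&mut src, vec![0u8; 9]);
    let n = res.unwrap();
    println!("owned read: {:?}", String::from_utf8_lossy(&buf[..n]));
}
```

Moving the buffer into the operation keeps the memory valid for as long as the kernel may write to it, so the runtime does not need an intermediate buffer and an extra copy.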