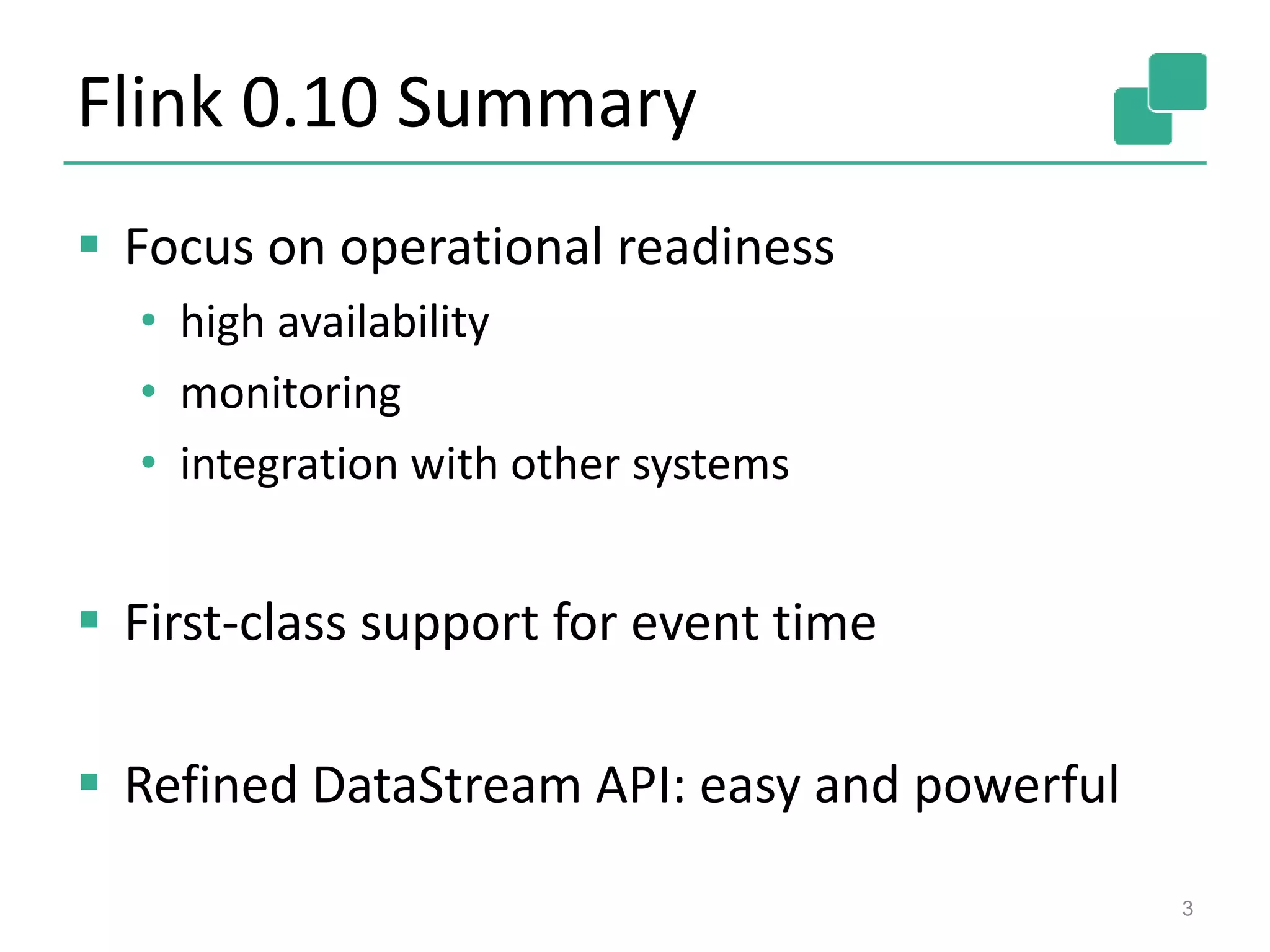

Flink 0.10 focuses on operational readiness with improvements to high availability, monitoring, and integration with other systems. It provides first-class support for event time processing and refines the DataStream API to be both easy to use and powerful for stream processing tasks.

![Improved DataStream API

case class Event(location: Location, numVehicles: Long)

val stream: DataStream[Event] = …;

stream

.filter { evt => isIntersection(evt.location) }](https://image.slidesharecdn.com/flink0-151030024902-lva1-app6892/75/Flink-0-10-Bay-Area-Meetup-October-2015-5-2048.jpg)

![Improved DataStream API

case class Event(location: Location, numVehicles: Long)

val stream: DataStream[Event] = …;

stream

.filter { evt => isIntersection(evt.location) }

.keyBy("location")

.timeWindow(Time.of(15, MINUTES), Time.of(5, MINUTES))

.sum("numVehicles")](https://image.slidesharecdn.com/flink0-151030024902-lva1-app6892/75/Flink-0-10-Bay-Area-Meetup-October-2015-6-2048.jpg)
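With a 15-minute window that slides every 5 minutes, each event falls into three overlapping windows. The following is a minimal plain-Scala sketch of that assignment and the per-key sum, using only the standard library. It is not Flink's implementation: the `String` location, the `timestampMs` field, and the names `windowStarts` and `sumPerWindow` are assumptions made for illustration.

```scala
// Plain-Scala illustration of sliding-window semantics; not Flink code.
object SlidingWindowSketch {
  // Hypothetical event shape: location simplified to a String, timestamp in milliseconds.
  final case class Event(location: String, numVehicles: Long, timestampMs: Long)

  val MinuteMs: Long = 60L * 1000L

  /** Start timestamps of every window [start, start + sizeMs) that contains `ts`.
    * Assumes ts >= 0 and that window starts are aligned to multiples of `slideMs`. */
  def windowStarts(ts: Long, sizeMs: Long, slideMs: Long): Seq[Long] = {
    val lastStart = ts - (ts % slideMs)        // latest aligned start that is <= ts
    lastStart until (ts - sizeMs) by -slideMs  // walk back while the window still covers ts
  }

  /** Sums numVehicles per (location, windowStart). */
  def sumPerWindow(events: Seq[Event], sizeMs: Long, slideMs: Long): Map[(String, Long), Long] =
    events
      .flatMap { e =>
        windowStarts(e.timestampMs, sizeMs, slideMs).map(start => ((e.location, start), e.numVehicles))
      }
      .groupBy(_._1)
      .map { case (key, values) => key -> values.map(_._2).sum }
}
```

An event at minute 7 lands in the windows starting at minutes 5, 0, and -5, which is why a single reading contributes to three sums.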

![Improved DataStream API

case class Event(location: Location, numVehicles: Long)

val stream: DataStream[Event] = …;

stream

.filter { evt => isIntersection(evt.location) }

.keyBy("location")

.timeWindow(Time.of(15, MINUTES), Time.of(5, MINUTES))

.trigger(new Threshold(200))

.sum("numVehicles")](https://image.slidesharecdn.com/flink0-151030024902-lva1-app6892/75/Flink-0-10-Bay-Area-Meetup-October-2015-7-2048.jpg)

![Improved DataStream API

case class Event(location: Location, numVehicles: Long)

val stream: DataStream[Event] = …;

stream

.filter { evt => isIntersection(evt.location) }

.keyBy("location")

.timeWindow(Time.of(15, MINUTES), Time.of(5, MINUTES))

.trigger(new Threshold(200))

.sum("numVehicles")

.keyBy( evt => evt.location.grid )

.mapWithState { (evt, state: Option[Model]) => {

val model = state.getOrElse(new Model())

(model.classify(evt), Some(model.update(evt)))

}}](https://image.slidesharecdn.com/flink0-151030024902-lva1-app6892/75/Flink-0-10-Bay-Area-Meetup-October-2015-8-2048.jpg)
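The `mapWithState` call on the slide pairs each element with the state stored for its key and returns both an output and the next state. A rough standard-library sketch of that contract is shown below. It only illustrates the semantics on an in-memory sequence: the object name `KeyedStateSketch` and the explicit `key` parameter are assumptions, and nothing here reflects how Flink stores or checkpoints state.

```scala
// Plain-Scala illustration of per-key stateful mapping; not Flink code.
object KeyedStateSketch {
  /** Applies `f` to each element, in order, together with the state held for its key.
    * `f` returns the output and the next state; returning None drops the key's state. */
  def mapWithState[T, K, S, R](elems: Seq[T], key: T => K)(f: (T, Option[S]) => (R, Option[S])): Seq[R] = {
    val states = scala.collection.mutable.Map.empty[K, S]
    elems.map { e =>
      val k = key(e)
      val (out, next) = f(e, states.get(k))
      next match {
        case Some(s) => states.update(k, s) // keep the updated state for this key
        case None    => states.remove(k)    // no state returned: forget the key
      }
      out
    }
  }
}
```

The first element seen for a key receives `None`, which is why the slide's function falls back to a fresh `Model` before classifying.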

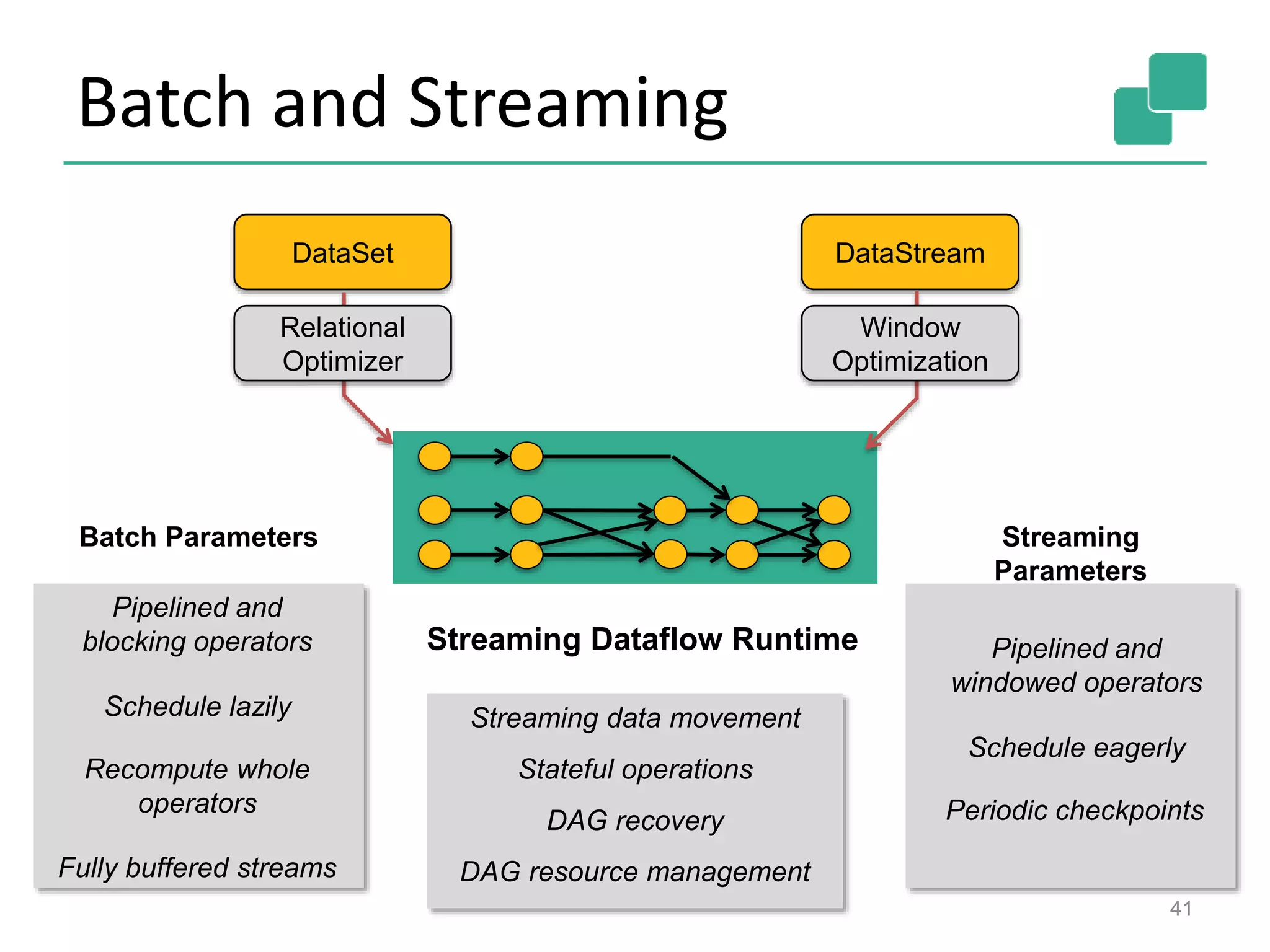

![Batch and Streaming

case class WordCount(word: String, count: Int)

val text: DataStream[String] = …;

text

.flatMap { line => line.split(" ") }

.map { word => new WordCount(word, 1) }

.keyBy("word")

.window(GlobalWindows.create())

.trigger(new EOFTrigger())

.sum("count")

Batch Word Count in the DataStream API](https://image.slidesharecdn.com/flink0-151030024902-lva1-app6892/75/Flink-0-10-Bay-Area-Meetup-October-2015-39-2048.jpg)

![Batch and Streaming

Batch Word Count in the DataStream API

case class WordCount(word: String, count: Int)

val text: DataStream[String] = …;

text

.flatMap { line => line.split(" ") }

.map { word => new WordCount(word, 1) }

.keyBy("word")

.window(GlobalWindows.create())

.trigger(new EOFTrigger())

.sum("count")

Batch Word Count in the DataSet API

val text: DataSet[String] = …;

text

.flatMap { line => line.split(" ") }

.map { word => new WordCount(word, 1) }

.groupBy("word")

.sum("count")](https://image.slidesharecdn.com/flink0-151030024902-lva1-app6892/75/Flink-0-10-Bay-Area-Meetup-October-2015-40-2048.jpg)
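Both variants on the slide compute the same result: split lines into words, pair each word with a count of 1, group by word, and sum. For readers without a Flink setup, here is a small sketch of the same pipeline on ordinary Scala collections. The name `countWords` and the filtering of empty tokens are assumptions added for illustration, not part of the slides.

```scala
// Word count on plain Scala collections, mirroring the flatMap / map / groupBy / sum steps.
object WordCountSketch {
  final case class WordCount(word: String, count: Int)

  def countWords(lines: Seq[String]): Map[String, Int] =
    lines
      .flatMap(_.split(" "))            // split each line into words
      .filter(_.nonEmpty)               // assumption: ignore empty tokens from repeated spaces
      .map(word => WordCount(word, 1))  // pair every word with an initial count of 1
      .groupBy(_.word)                  // collect all records for the same word
      .map { case (word, records) => word -> records.map(_.count).sum }
}
```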

![Monitoring

Live system metrics and

user-defined accumulators/statistics

GET http://flink-m:8081/jobs/7684be6004e4e955c2a558a9bc463f65/accumulators

Monitoring REST API for

custom monitoring tools

{ "id": "dceafe2df1f57a1206fcb907cb38ad97", "user-accumulators": [

{ "name":"avglen", "type":"DoubleCounter", "value":"123.03259440000001" },

{ "name":"genwords", "type":"LongCounter", "value":"75000000" } ] }](https://image.slidesharecdn.com/flink0-151030024902-lva1-app6892/75/Flink-0-10-Bay-Area-Meetup-October-2015-45-2048.jpg)
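A custom monitoring tool could build the accumulators request shown on the slide roughly as follows. This sketch uses the JDK's `java.net.http` client (Java 11 or later) and only constructs the request. The helper names `accumulatorsUri` and `accumulatorsRequest` are hypothetical, and the path layout `/jobs/<jobId>/accumulators` is taken from the slide's example URL rather than from a full API reference.

```scala
import java.net.URI
import java.net.http.HttpRequest

// Builds (but does not send) a GET request for a job's accumulators.
object AccumulatorsRequestSketch {
  /** URL layout copied from the slide: <base>/jobs/<jobId>/accumulators. */
  def accumulatorsUri(baseUrl: String, jobId: String): URI =
    URI.create(s"${baseUrl.stripSuffix("/")}/jobs/$jobId/accumulators")

  def accumulatorsRequest(baseUrl: String, jobId: String): HttpRequest =
    HttpRequest.newBuilder(accumulatorsUri(baseUrl, jobId)).GET().build()
}
```

Sending the request with `java.net.http.HttpClient.newHttpClient().send(request, java.net.http.HttpResponse.BodyHandlers.ofString())` would return the JSON body as a string, which a tool can then parse with the JSON library of its choice.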