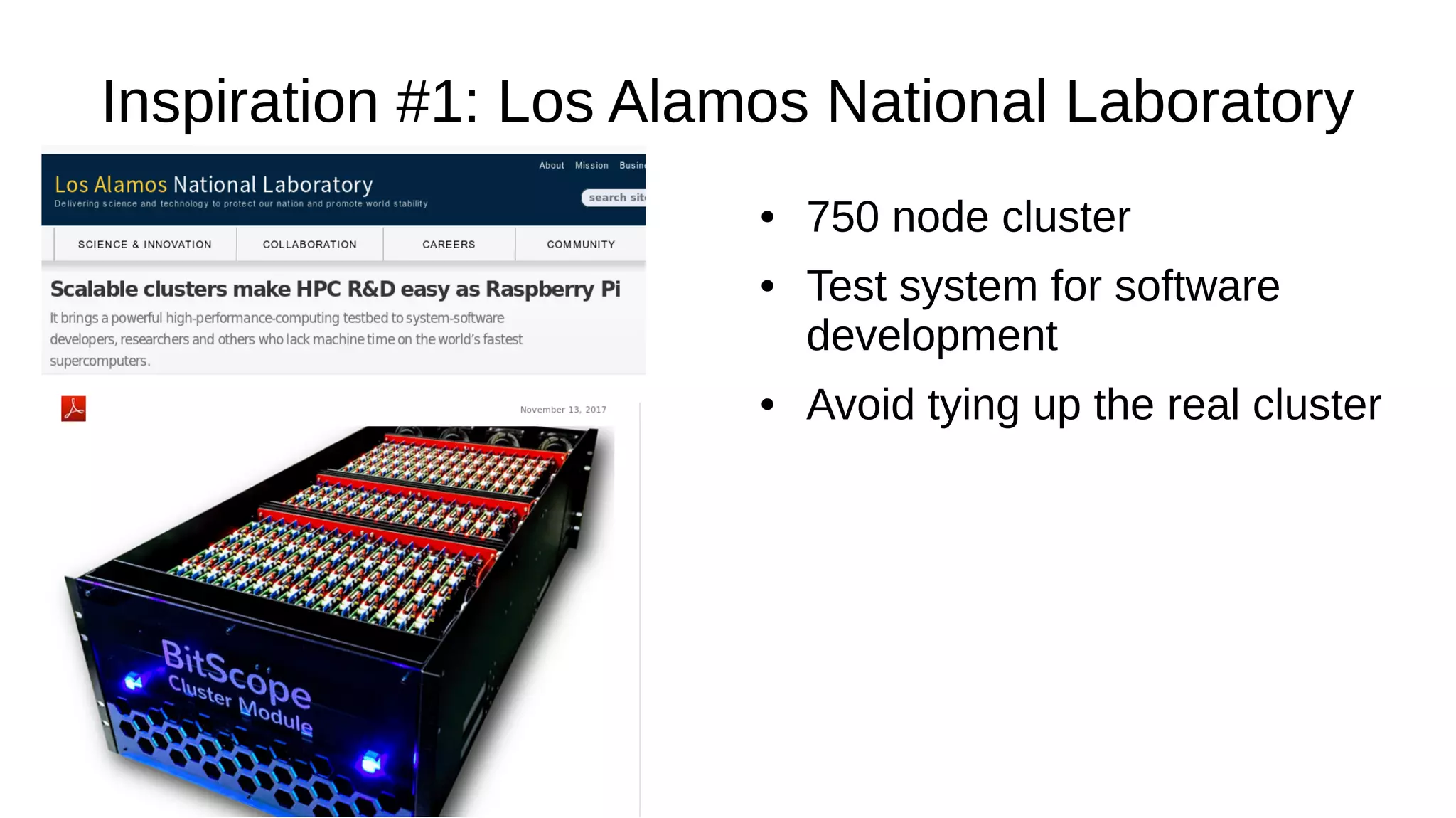

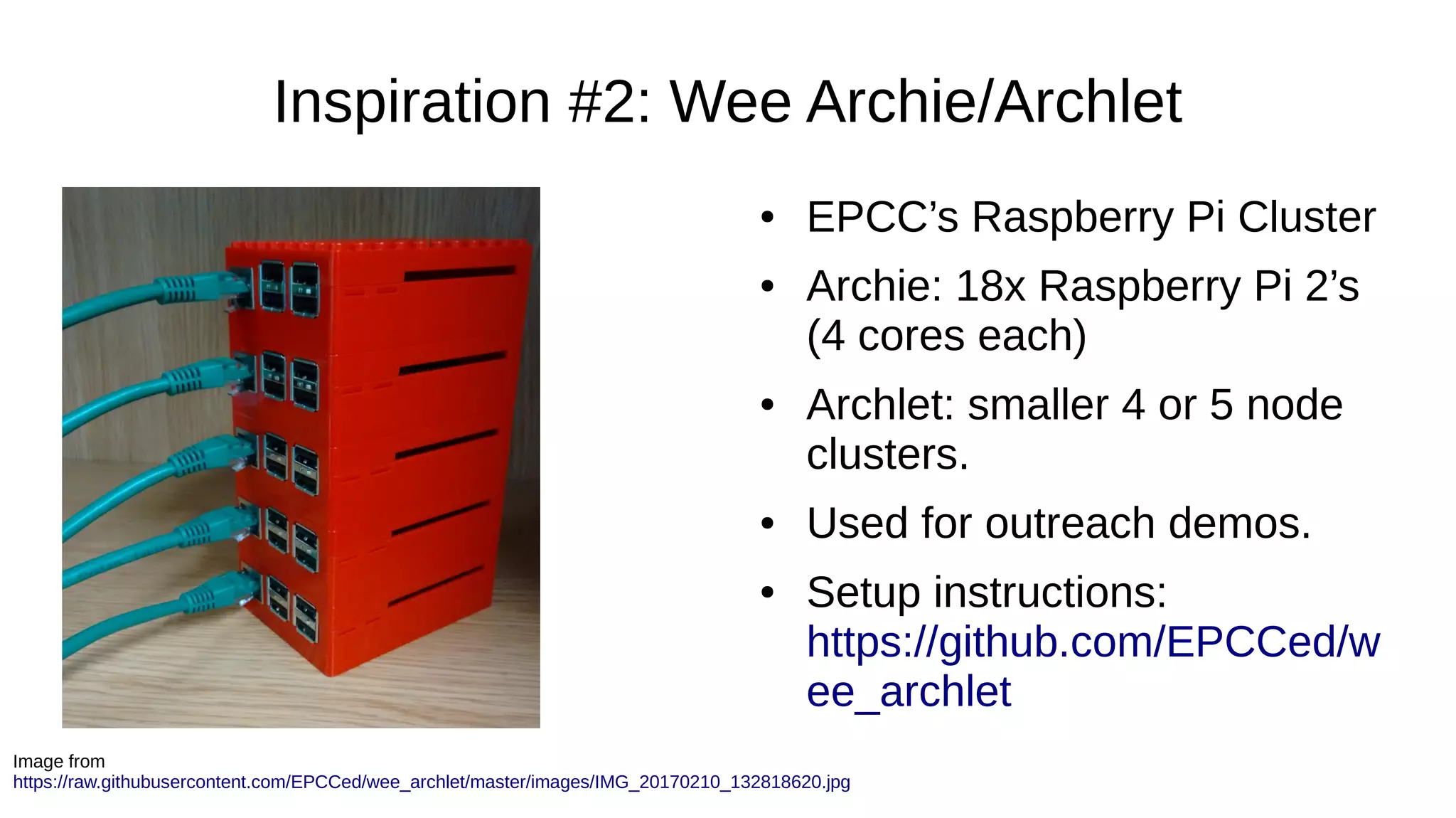

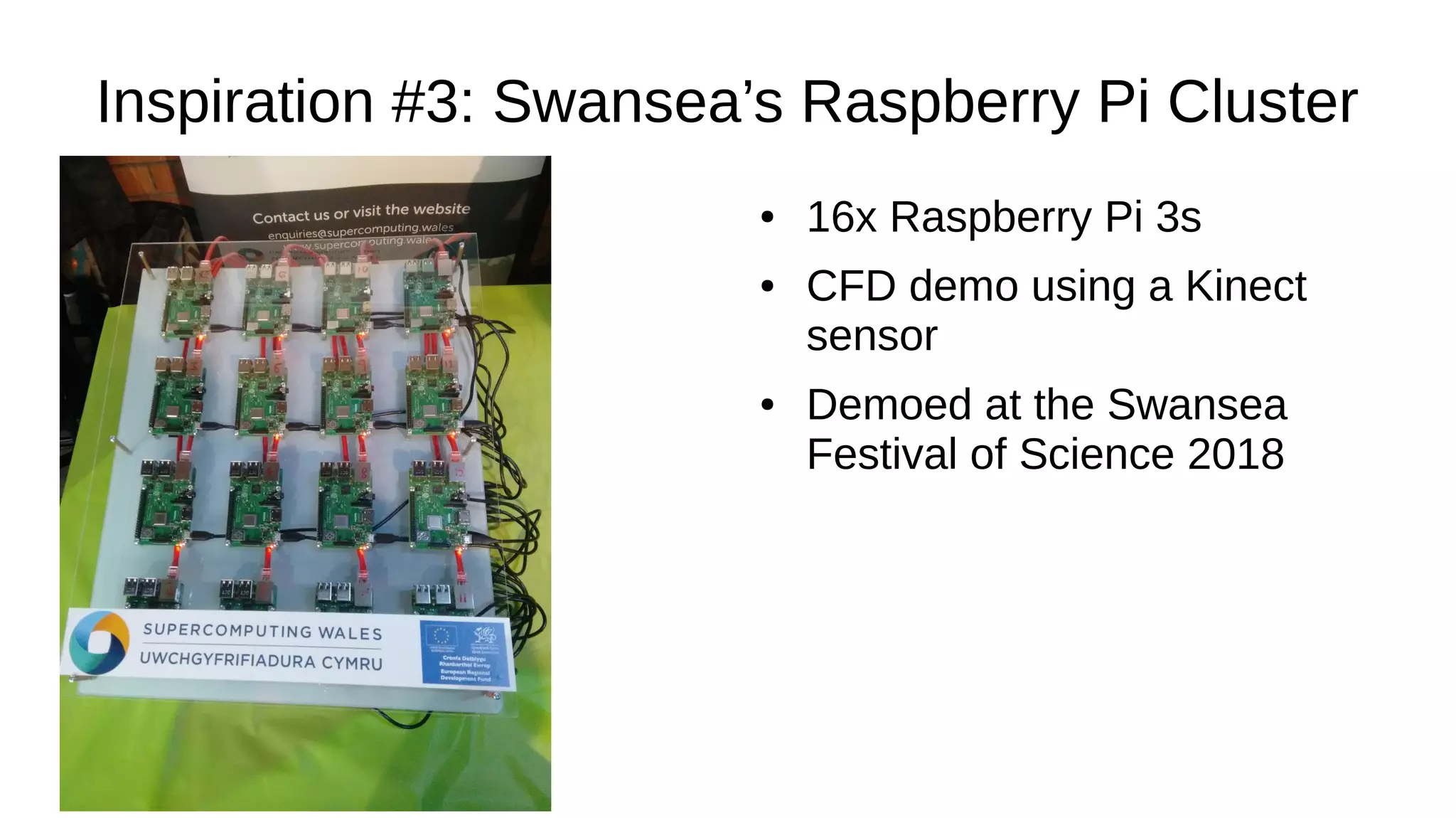

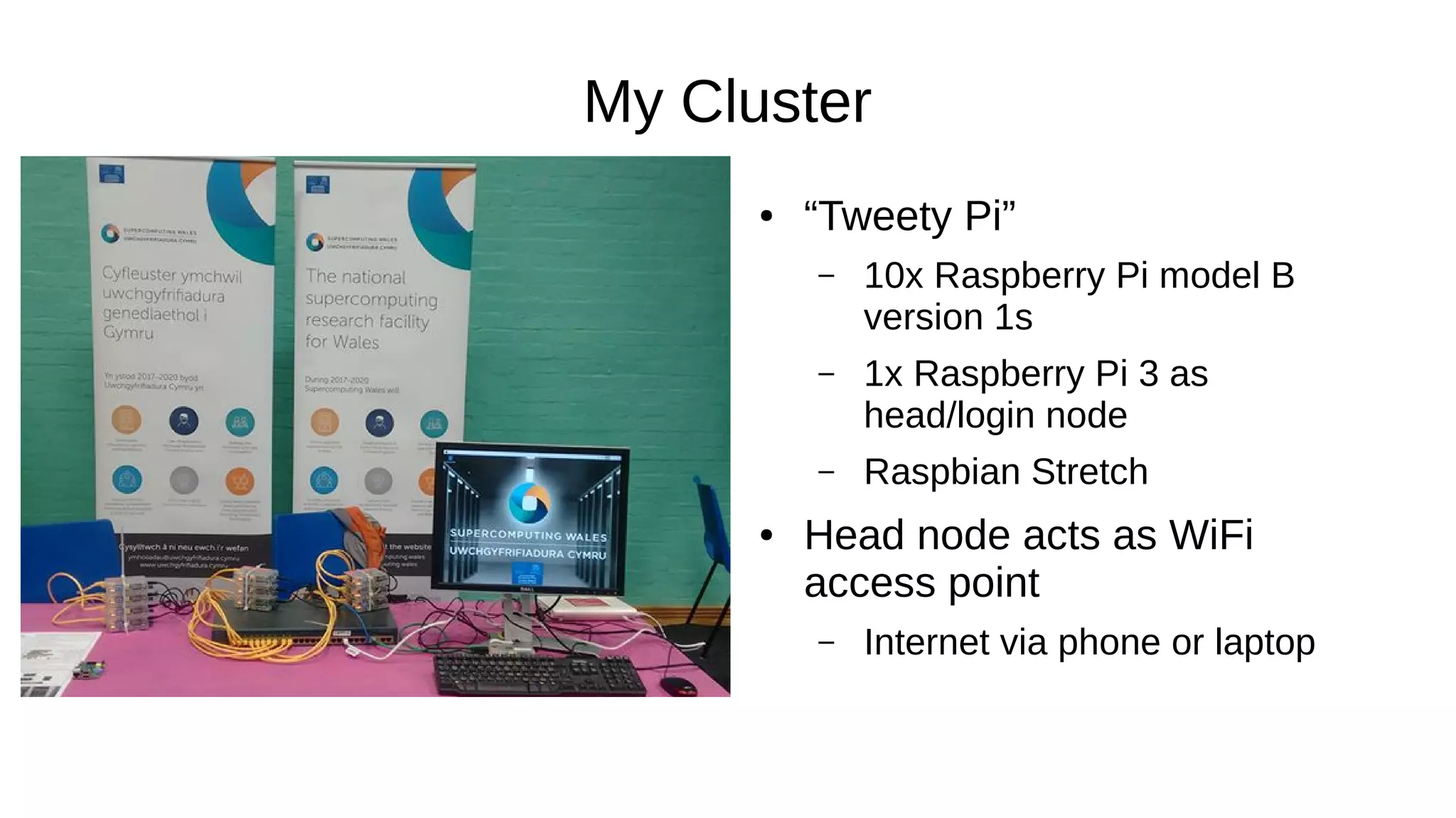

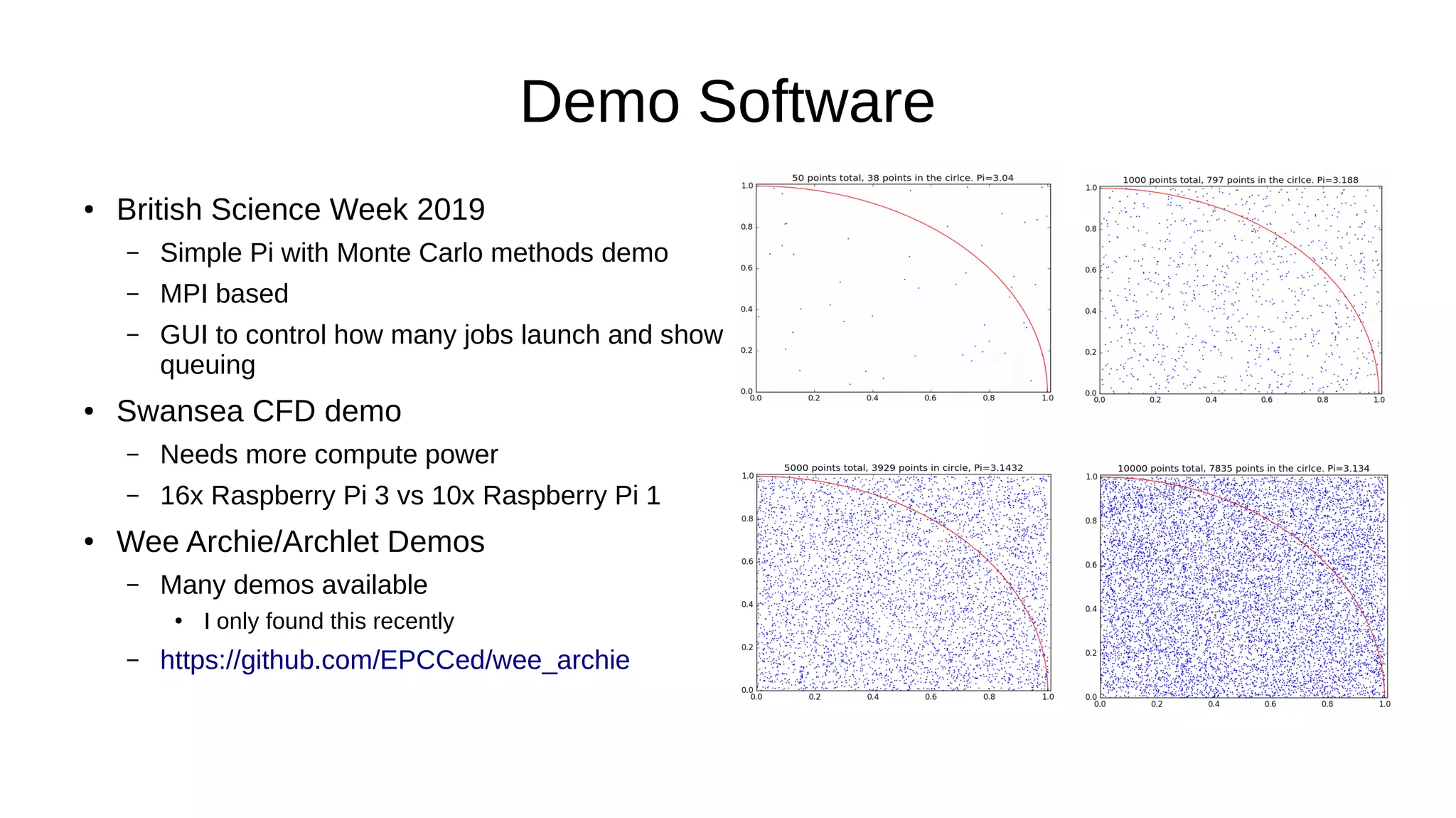

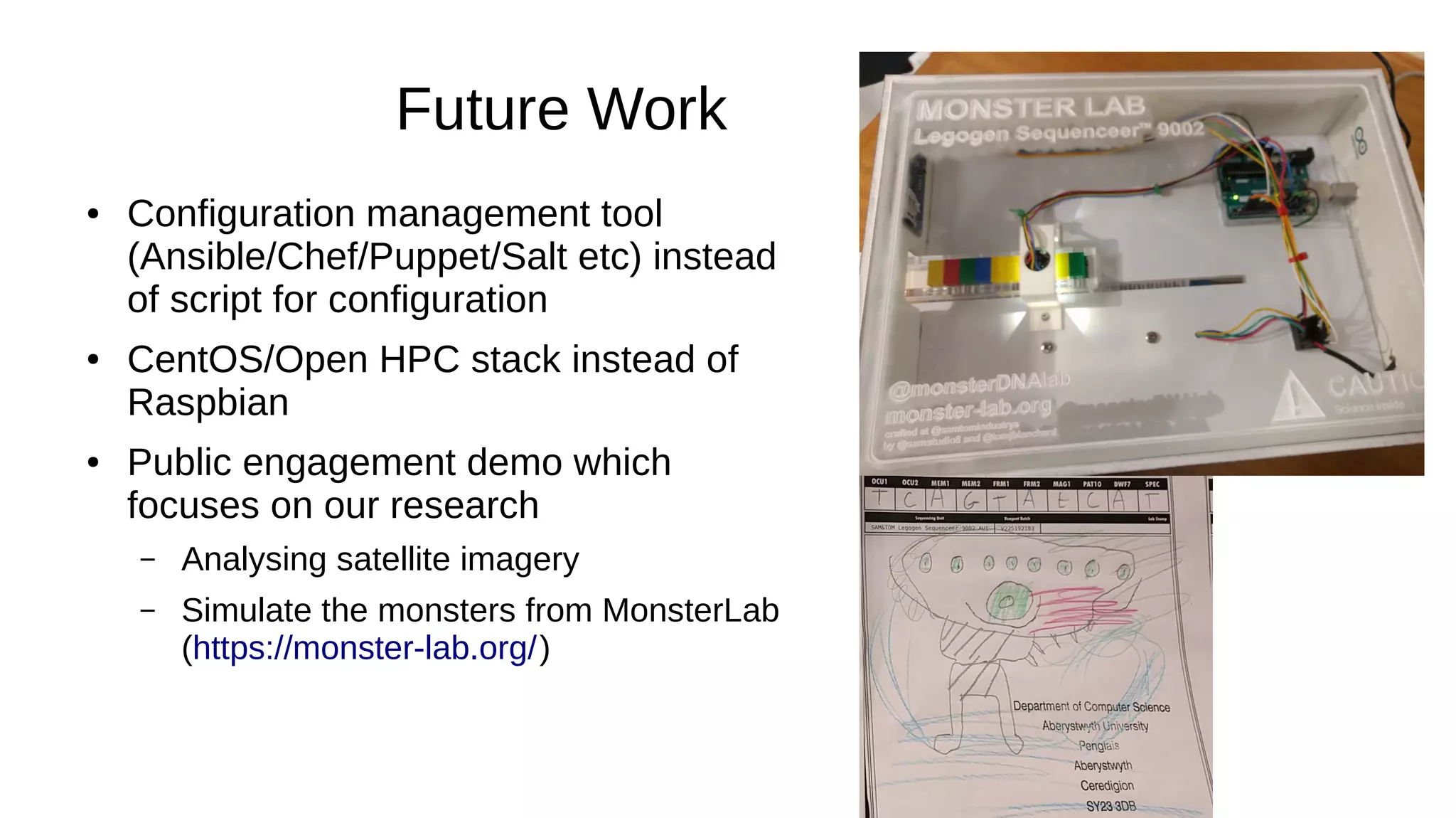

This document discusses teaching High-Performance Computing (HPC) with a Raspberry Pi cluster in educational settings, covering the author's background, inspirations, and teaching materials. It describes the author's personal project, 'Tweety Pi', and several demonstrations that use Raspberry Pi for HPC training, along with the challenges encountered and feedback received while teaching. Future work includes improvements to configuration management and public engagement initiatives using HPC for research applications.