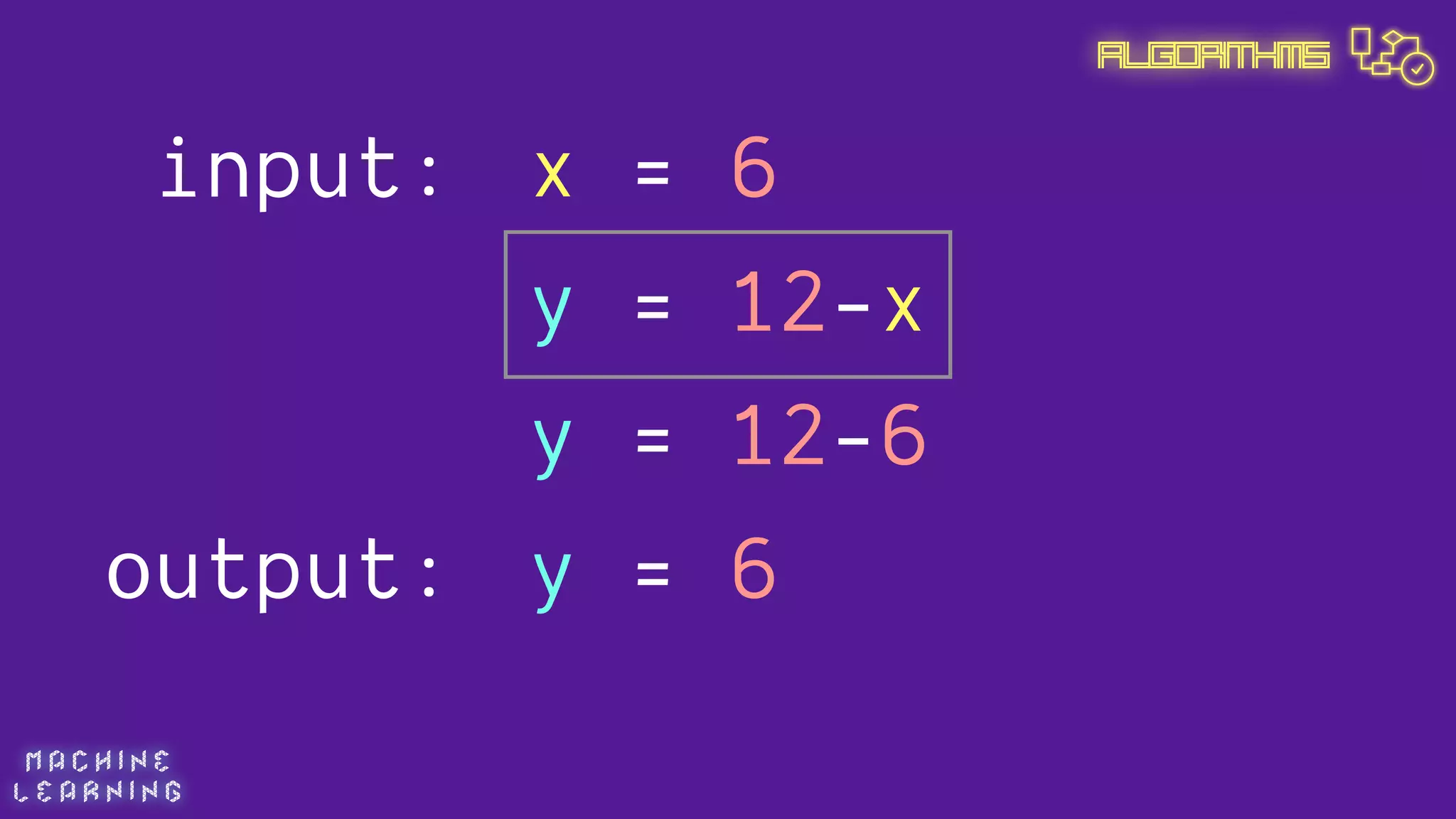

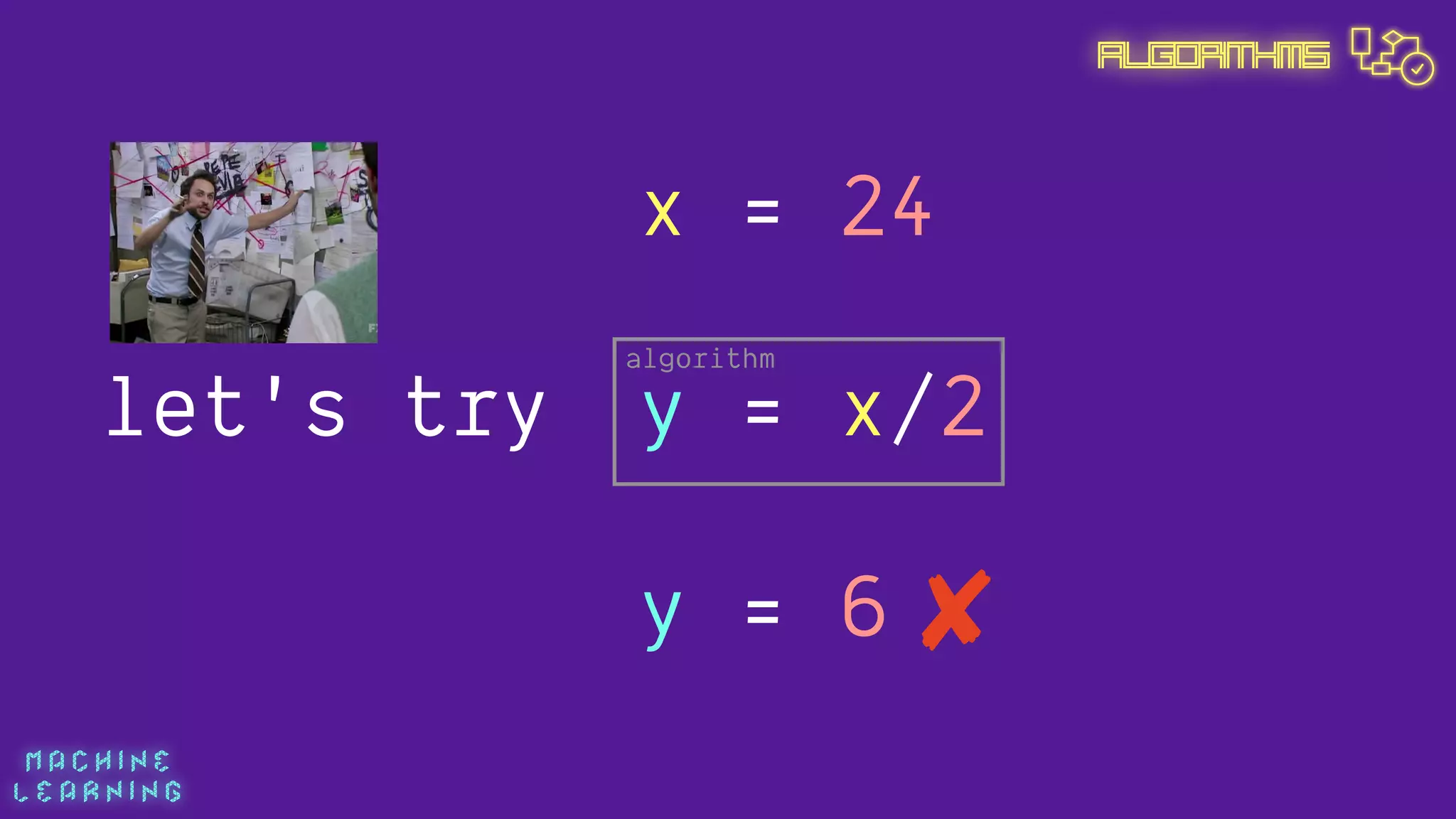

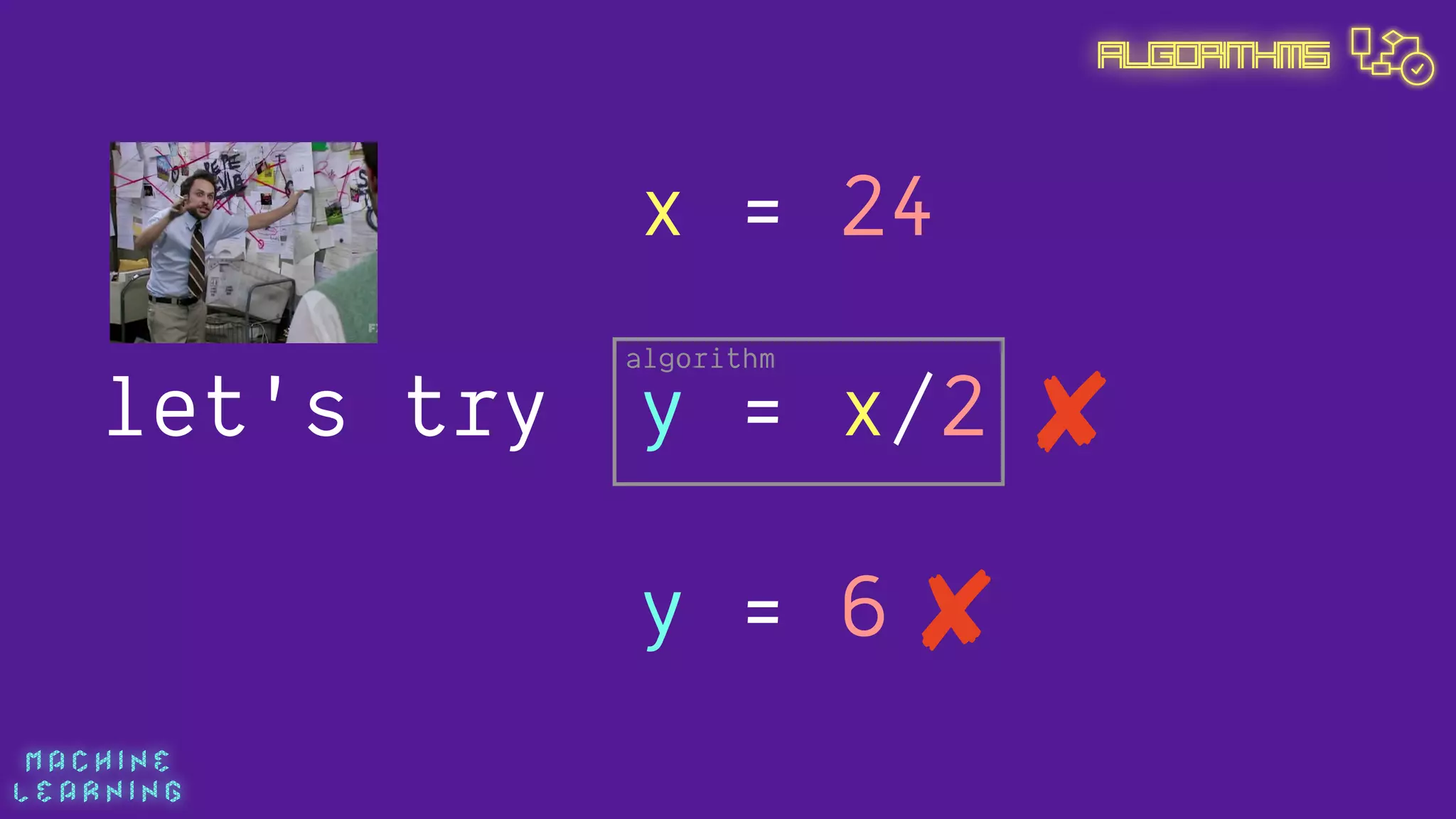

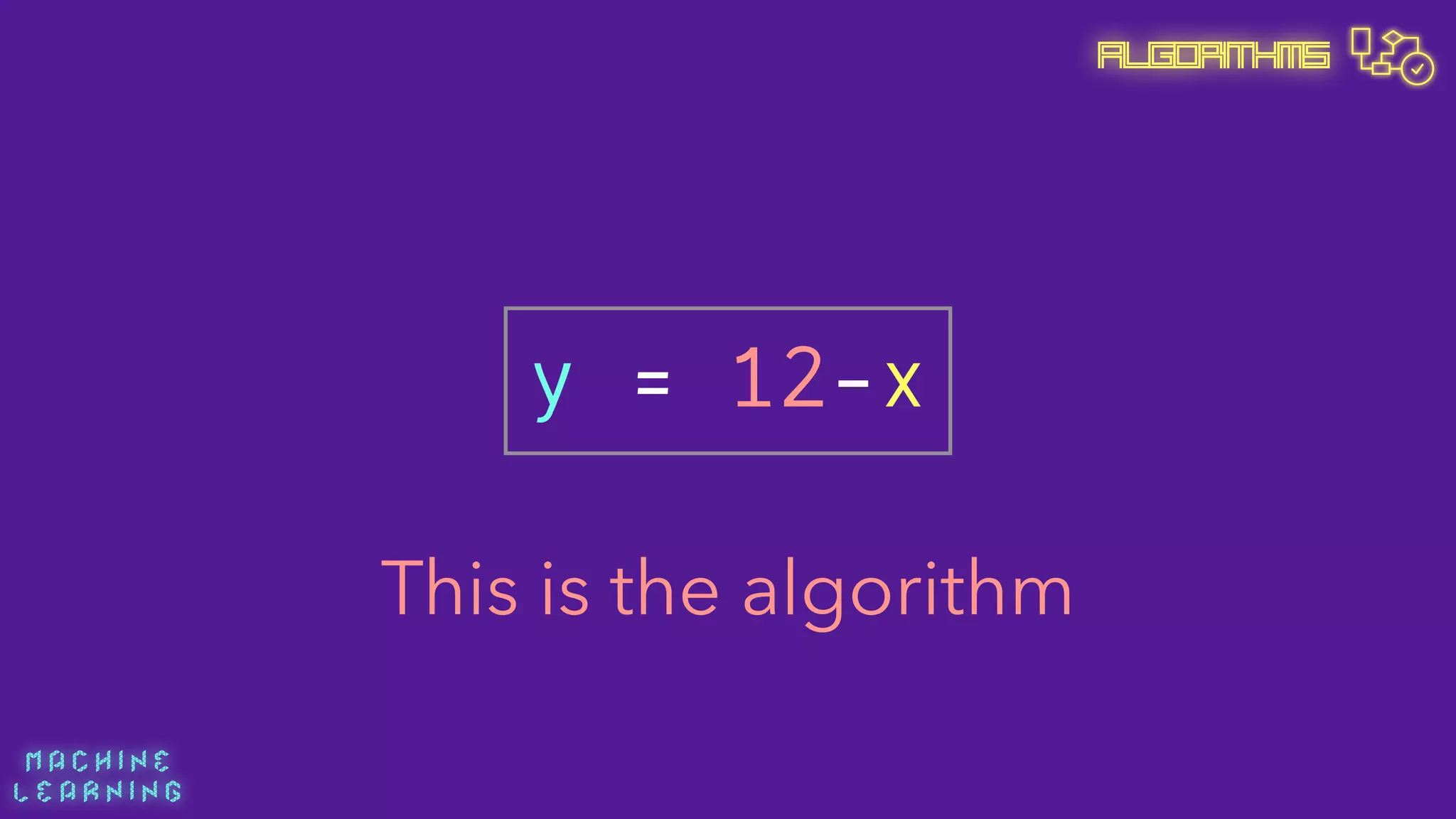

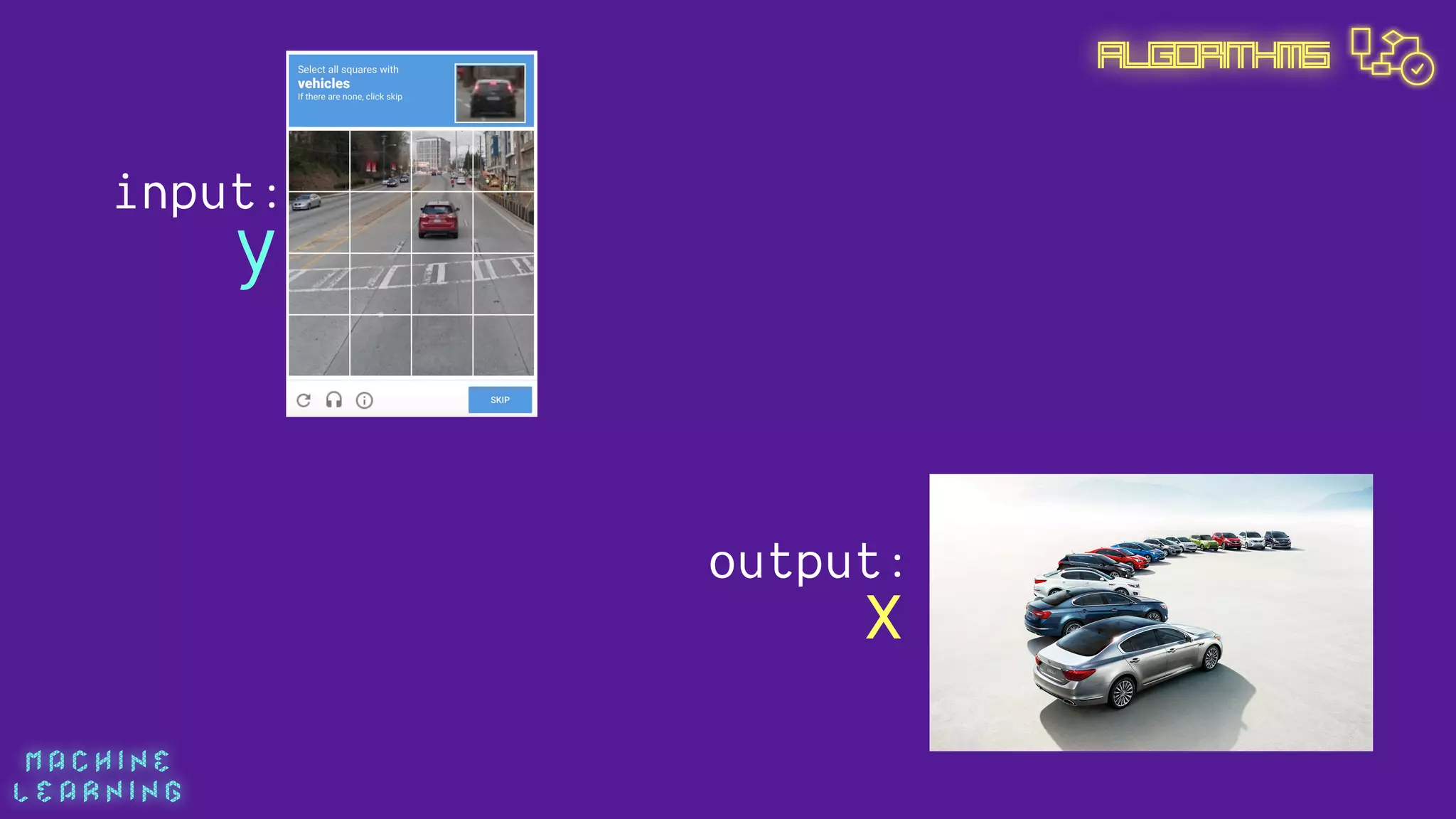

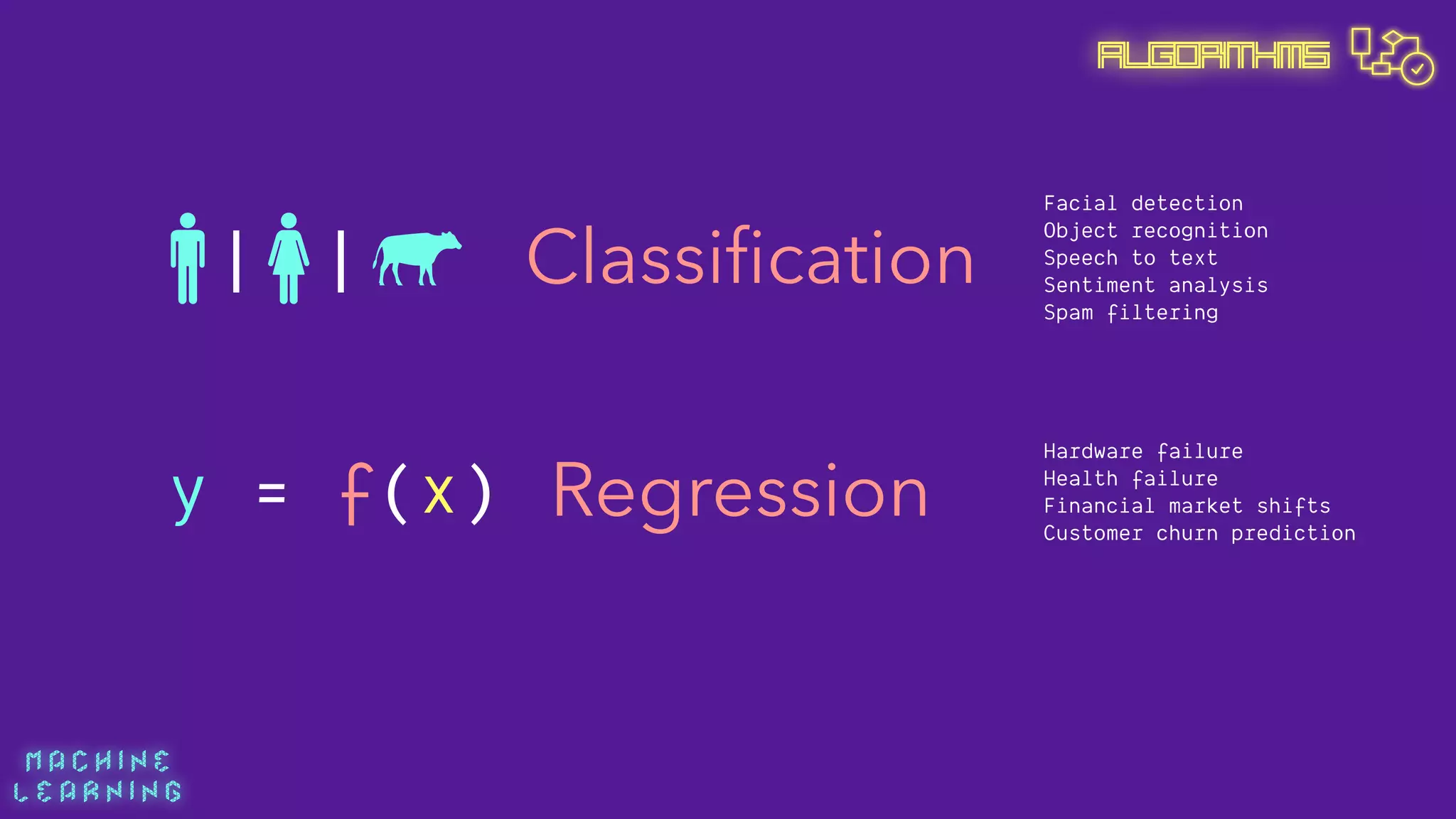

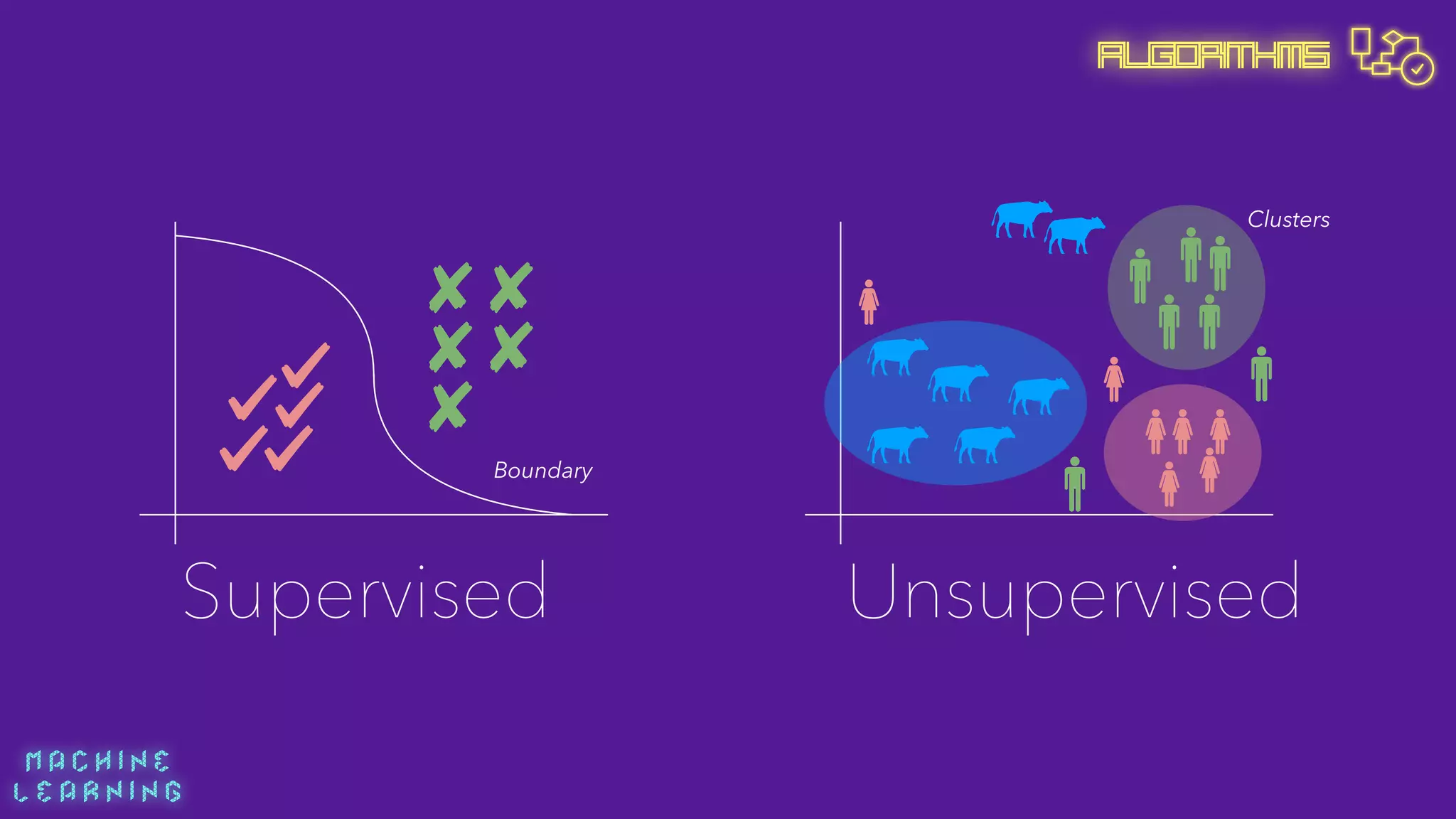

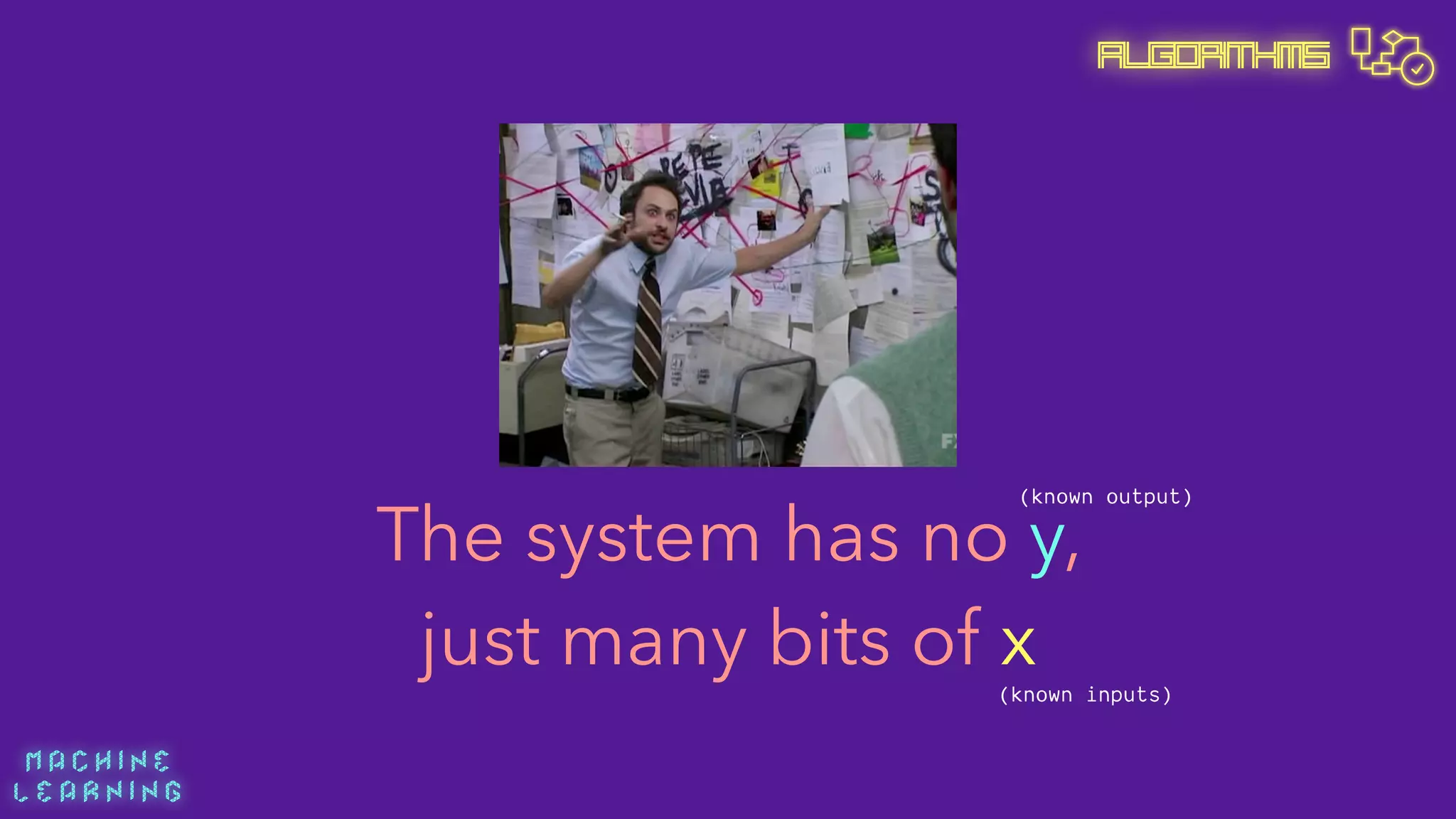

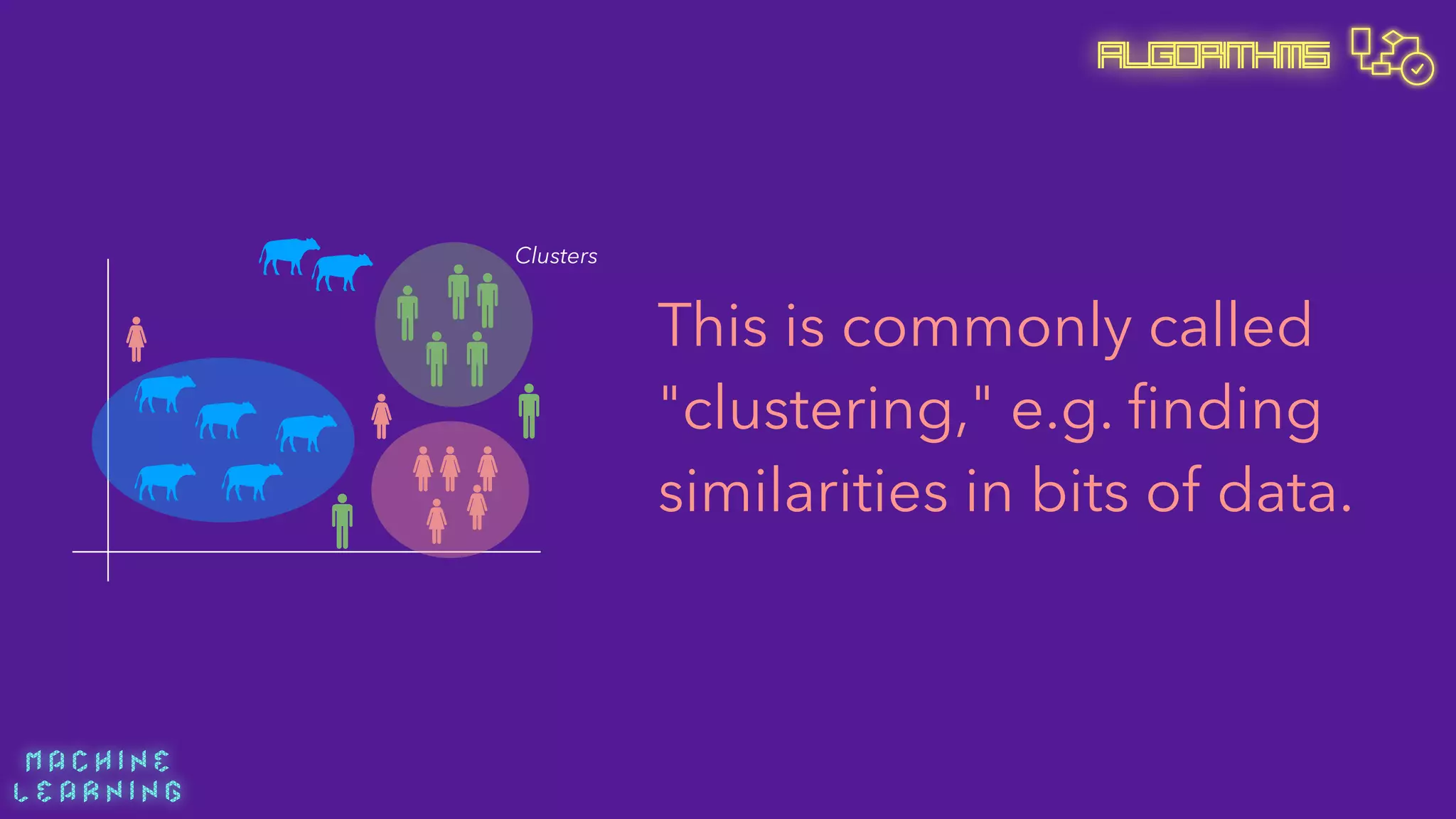

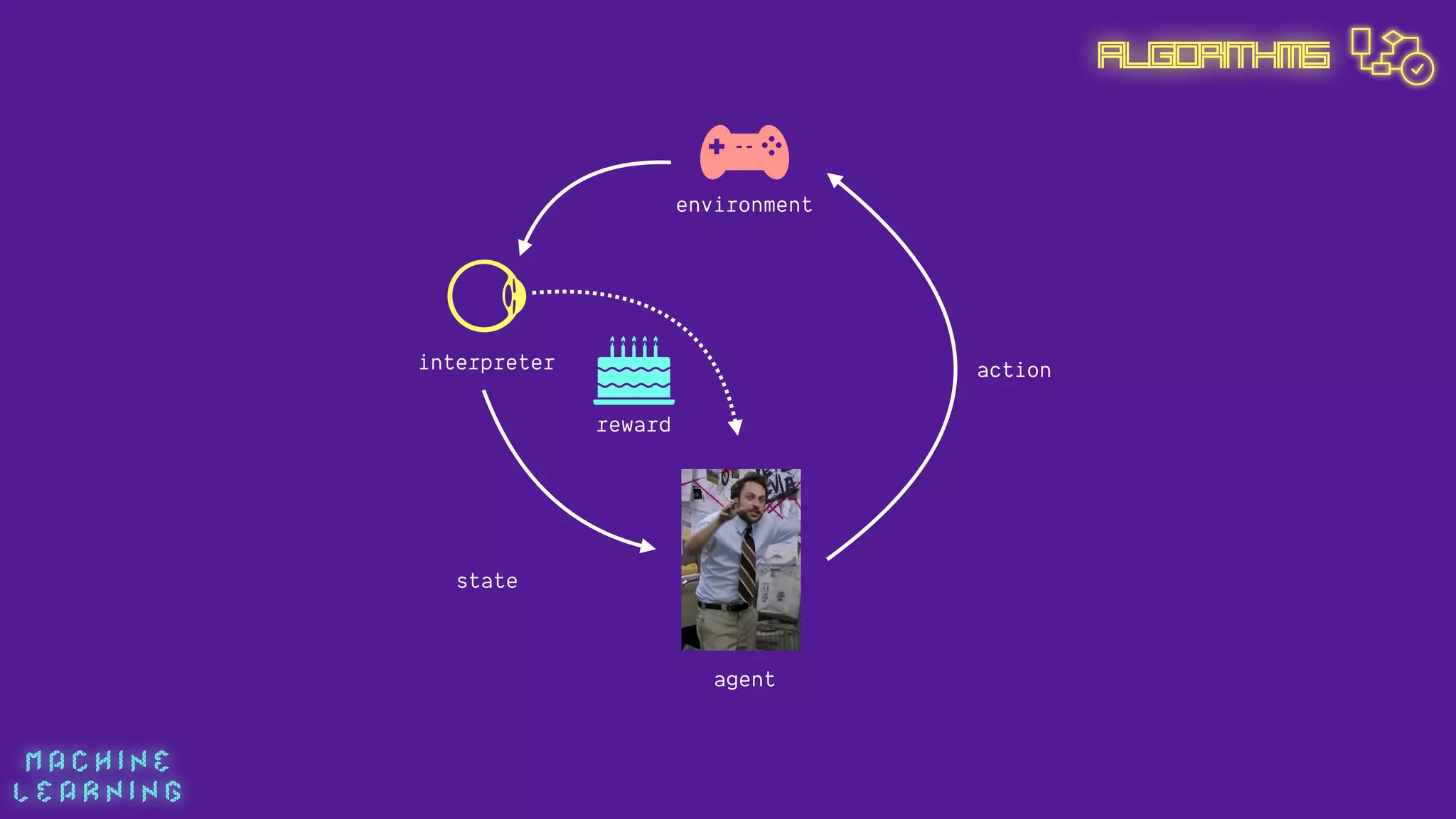

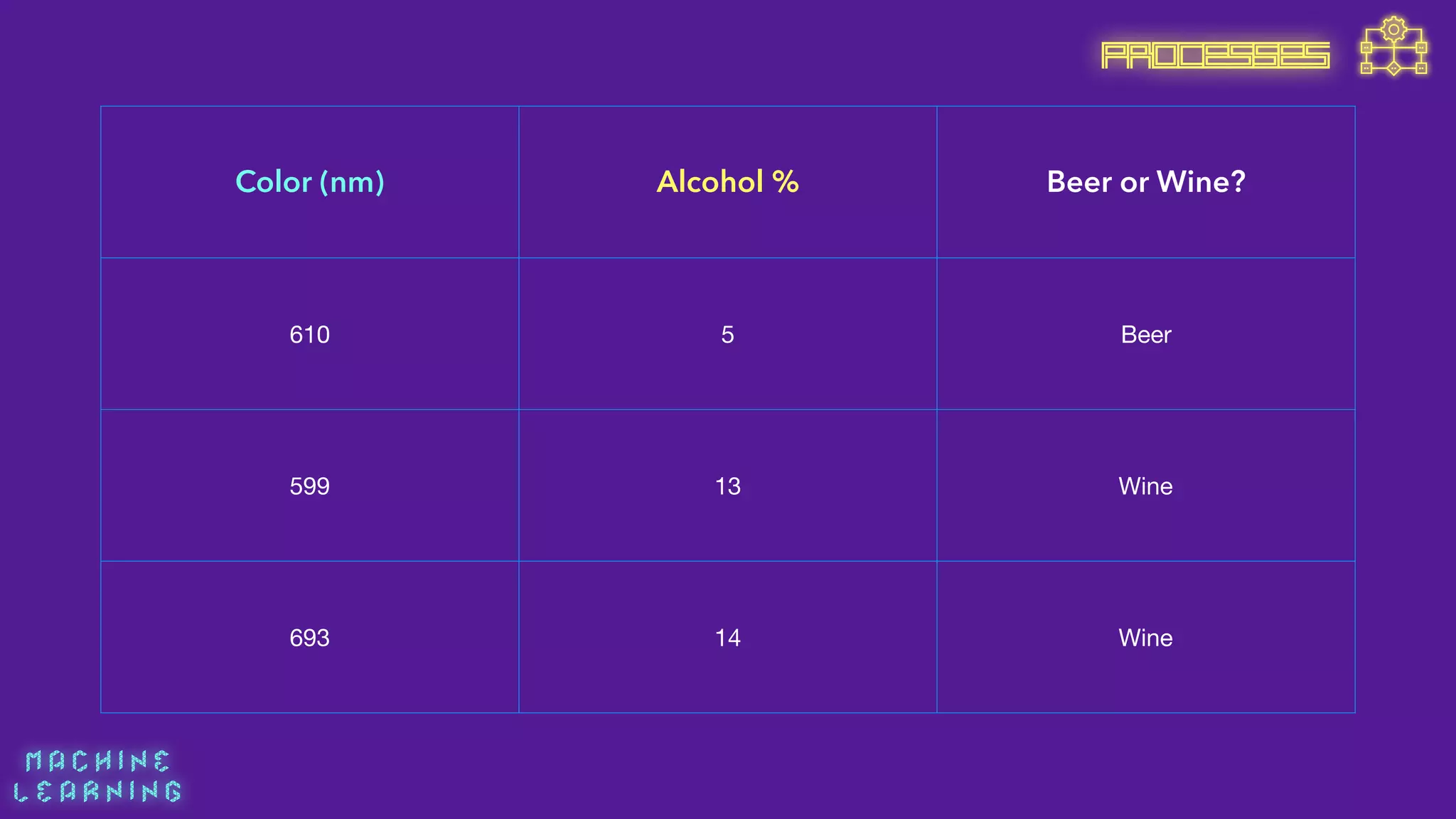

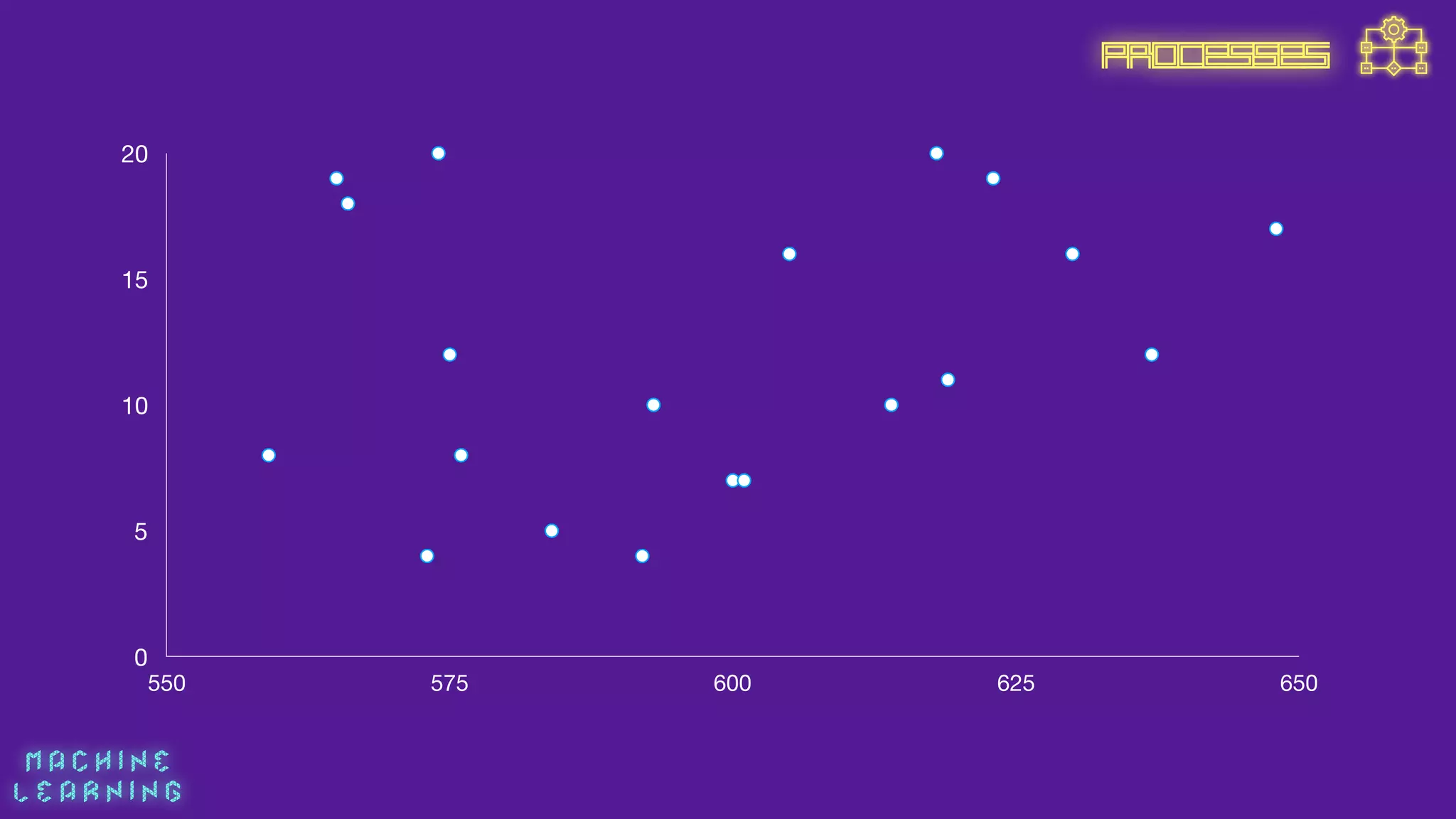

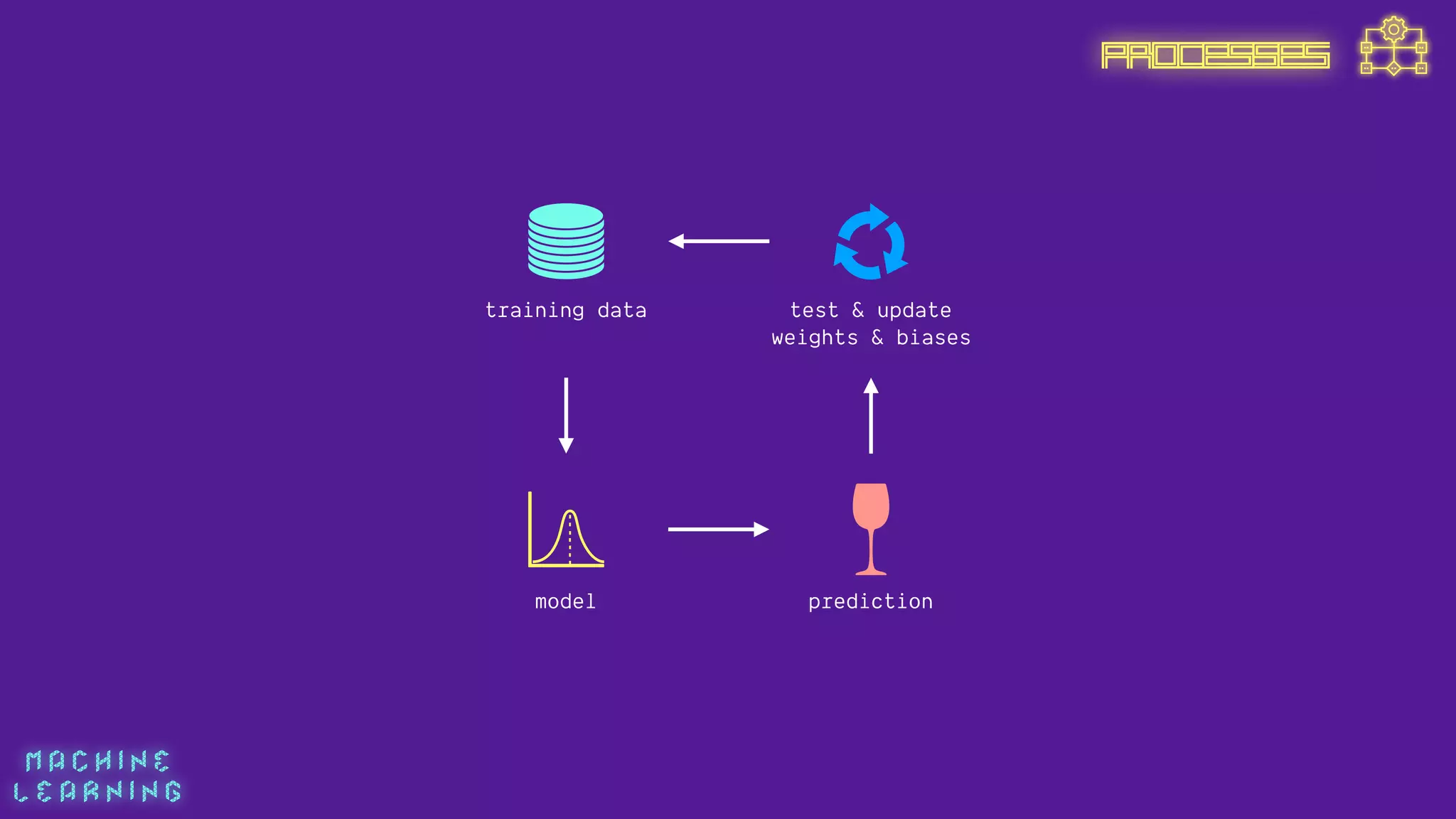

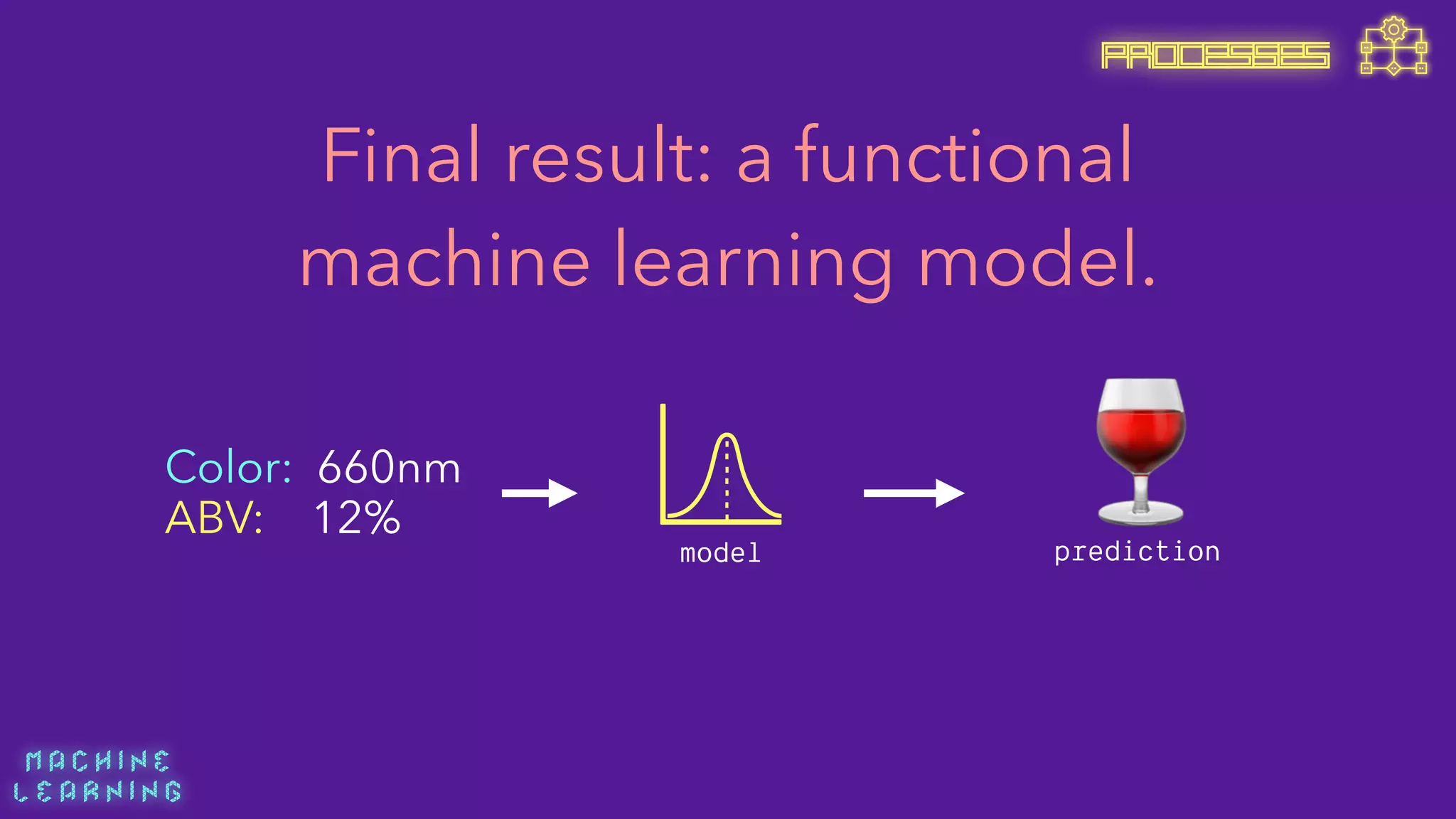

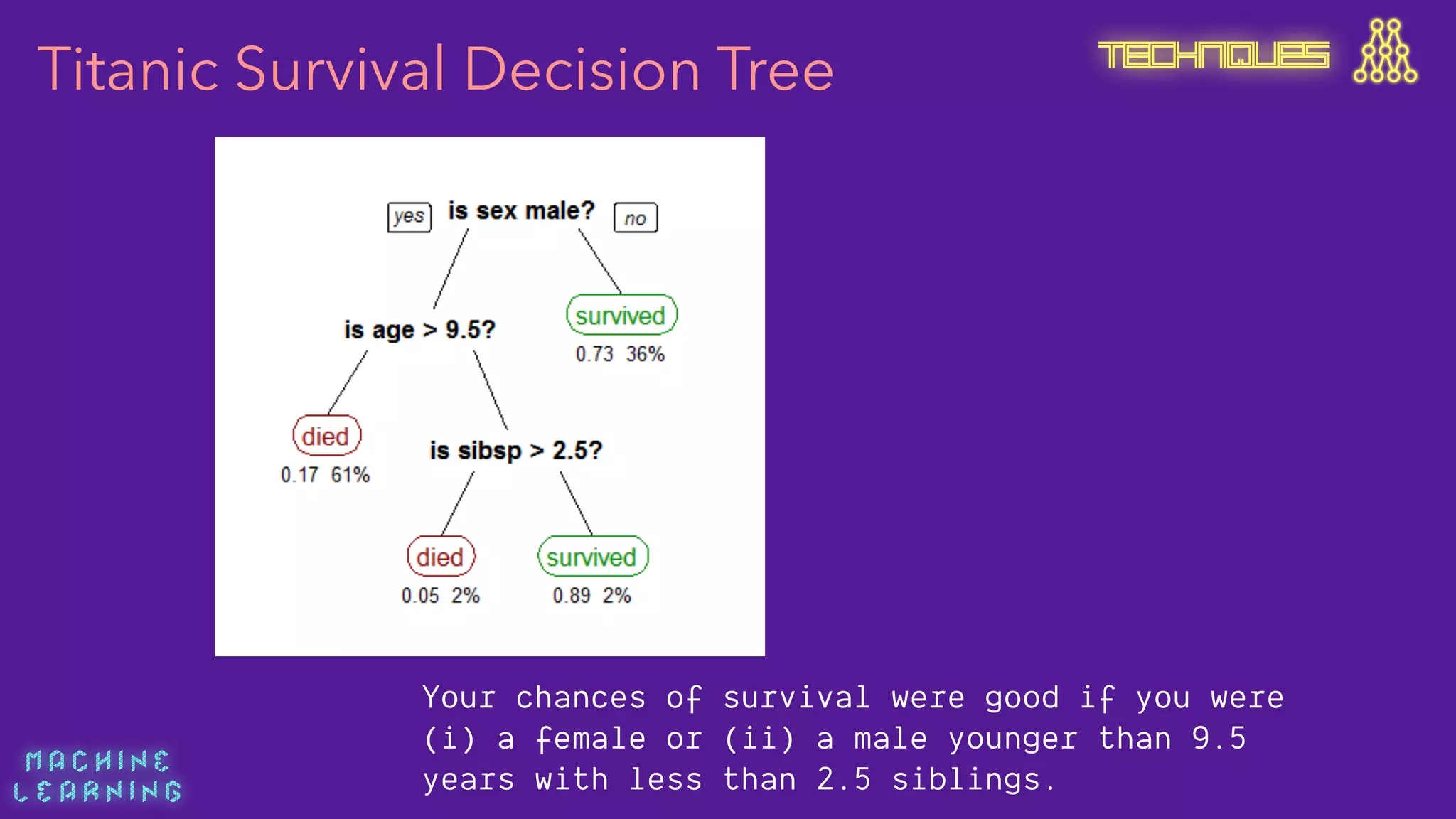

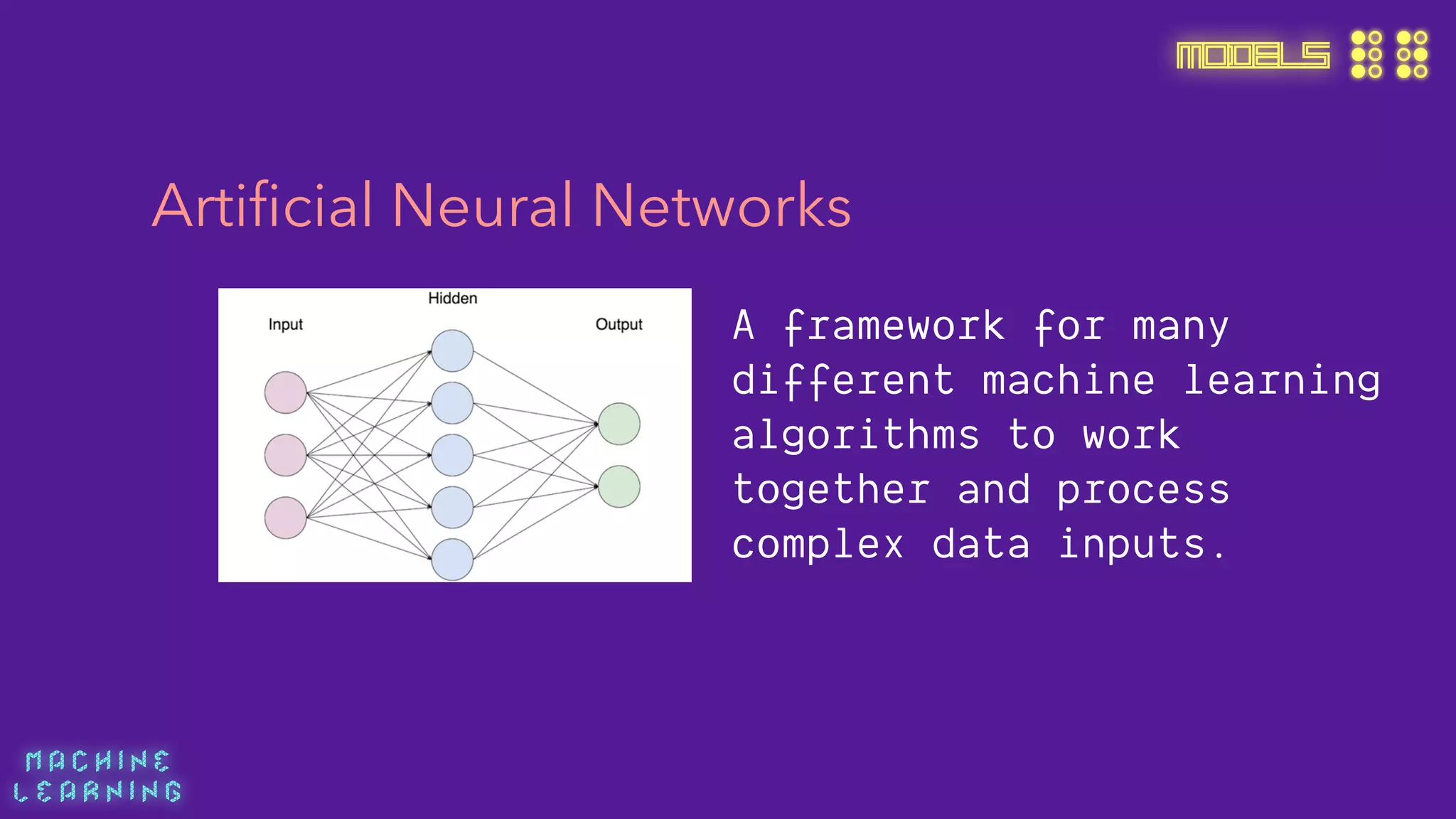

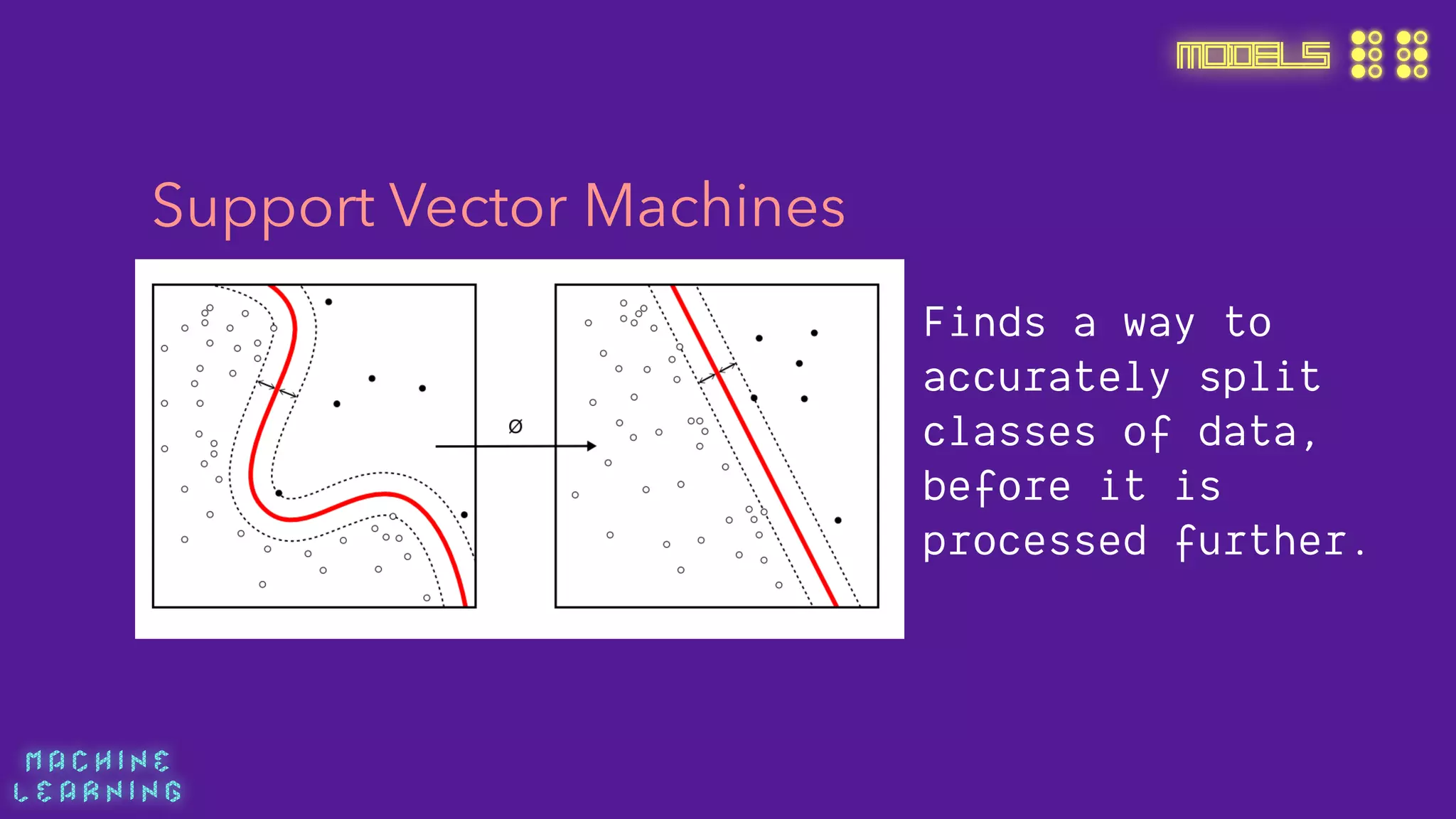

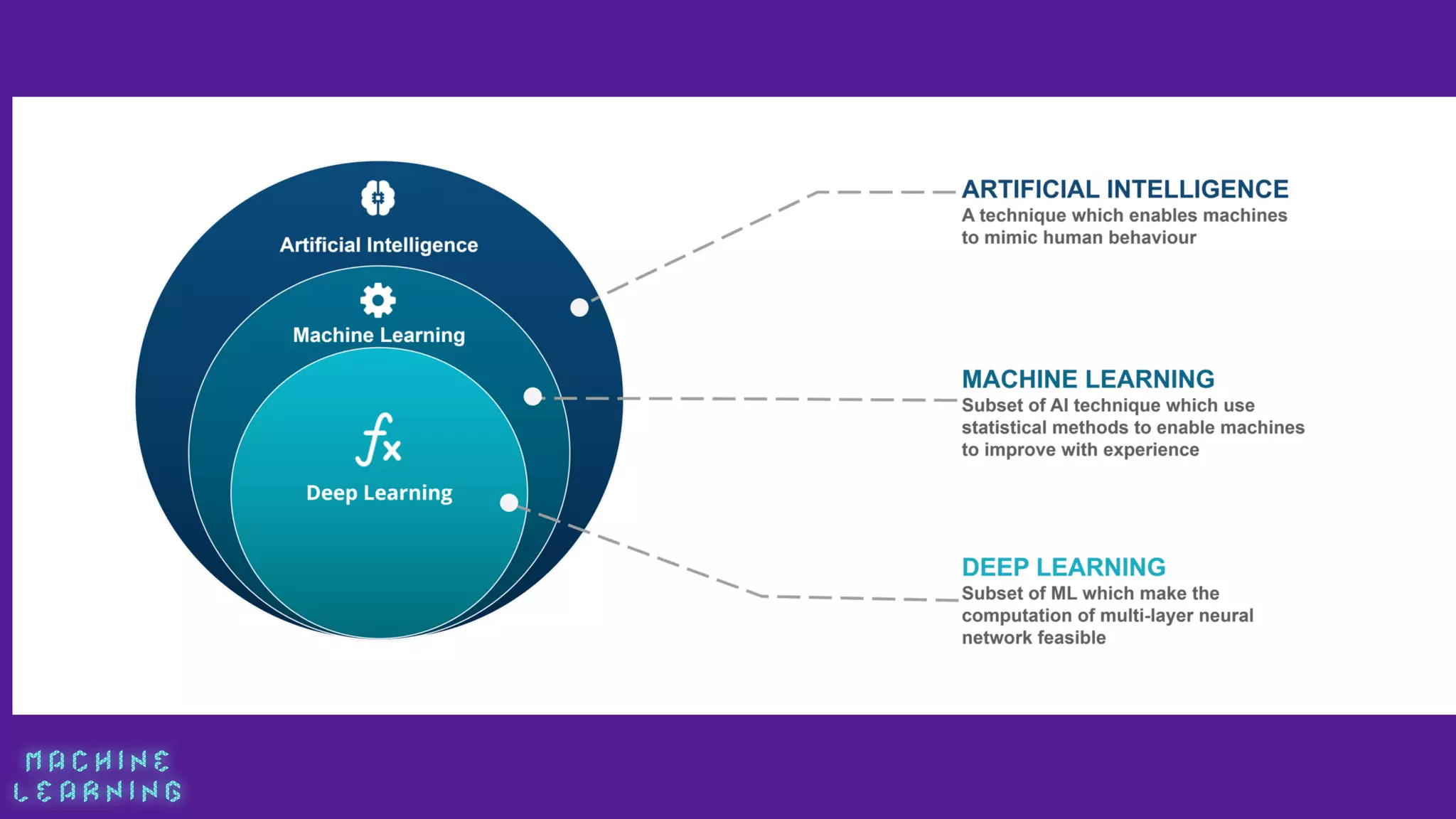

Machine learning is a field focused on enabling computers to analyze data and draw conclusions without being explicitly programmed for each task, using algorithms that perform tasks such as classification, regression, and clustering. The three main approaches are supervised, unsupervised, and reinforcement learning, each with its own techniques and applications. Building a working predictive model typically follows a sequence of steps: data collection, data preparation, algorithm selection, training, and evaluation.
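The five-step workflow can be sketched end to end in plain Python. This is a minimal illustration under stated assumptions, not a method taken from the source. The "collected" data is a synthetic, seeded set of two labelled 2-D clusters. Preparation is a train/test split plus standardization using training statistics only. The algorithm is a nearest-centroid classifier, a simple supervised classification method chosen purely for brevity. Evaluation reports accuracy on held-out data. All function and class names (`collect_data`, `prepare`, `NearestCentroid`, `evaluate`) are hypothetical.

```python
import random


def collect_data(n=200, seed=0):
    """Step 1 (collection): synthetic stand-in data, two Gaussian blobs labelled 0 and 1."""
    rng = random.Random(seed)
    data = []
    for label, center in ((0, (0.0, 0.0)), (1, (3.0, 3.0))):
        for _ in range(n // 2):
            x = (rng.gauss(center[0], 1.0), rng.gauss(center[1], 1.0))
            data.append((x, label))
    rng.shuffle(data)
    return data


def prepare(data, test_fraction=0.25):
    """Step 2 (preparation): split, then standardize with training-set statistics only."""
    cut = int(len(data) * (1 - test_fraction))
    train, test = data[:cut], data[cut:]
    dims = len(train[0][0])
    means = [sum(x[d] for x, _ in train) / len(train) for d in range(dims)]
    # Fall back to 1.0 if a feature is constant, to avoid dividing by zero.
    stds = [
        (sum((x[d] - means[d]) ** 2 for x, _ in train) / len(train)) ** 0.5 or 1.0
        for d in range(dims)
    ]

    def scale(x):
        return tuple((x[d] - means[d]) / stds[d] for d in range(dims))

    return [(scale(x), y) for x, y in train], [(scale(x), y) for x, y in test]


class NearestCentroid:
    """Step 3 (algorithm selection): predict the class whose mean point is closest."""

    def fit(self, train):
        """Step 4 (training): compute one centroid per class label."""
        sums, counts = {}, {}
        for x, y in train:
            s = sums.setdefault(y, [0.0] * len(x))
            for d, v in enumerate(x):
                s[d] += v
            counts[y] = counts.get(y, 0) + 1
        self.centroids = {y: [v / counts[y] for v in s] for y, s in sums.items()}
        return self

    def predict(self, x):
        def dist2(c):
            return sum((a - b) ** 2 for a, b in zip(x, c))

        return min(self.centroids, key=lambda y: dist2(self.centroids[y]))


def evaluate(model, test):
    """Step 5 (evaluation): fraction of held-out examples classified correctly."""
    correct = sum(model.predict(x) == y for x, y in test)
    return correct / len(test)


if __name__ == "__main__":
    data = collect_data()
    train, test = prepare(data)
    model = NearestCentroid().fit(train)
    print(f"held-out accuracy: {evaluate(model, test):.2f}")
```

Keeping evaluation on data the model never saw during training is the point of the split in step 2. Fitting the standardization on the training set alone avoids leaking test-set information into the model. In practice a library such as scikit-learn would usually replace these hand-written steps.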