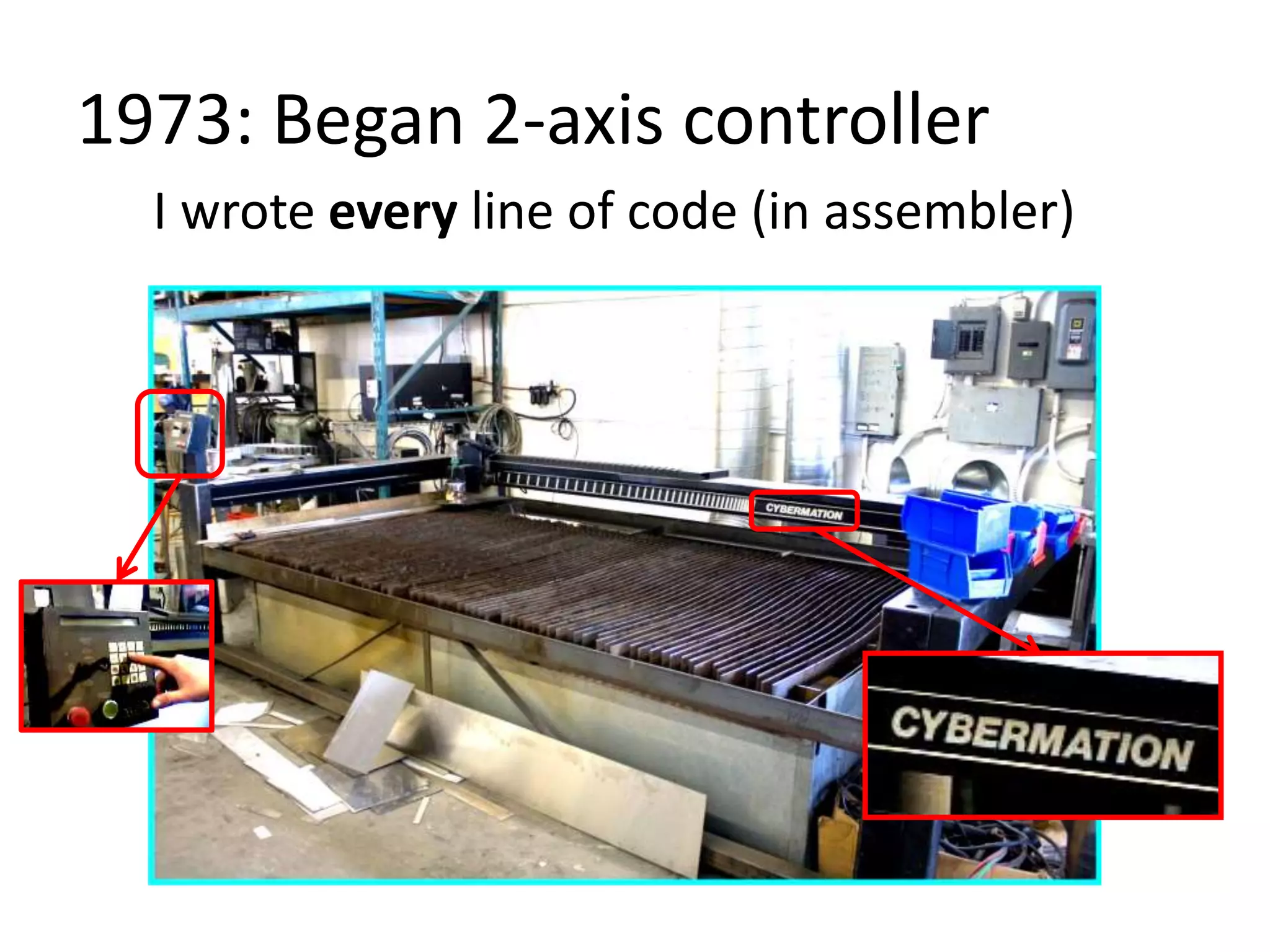

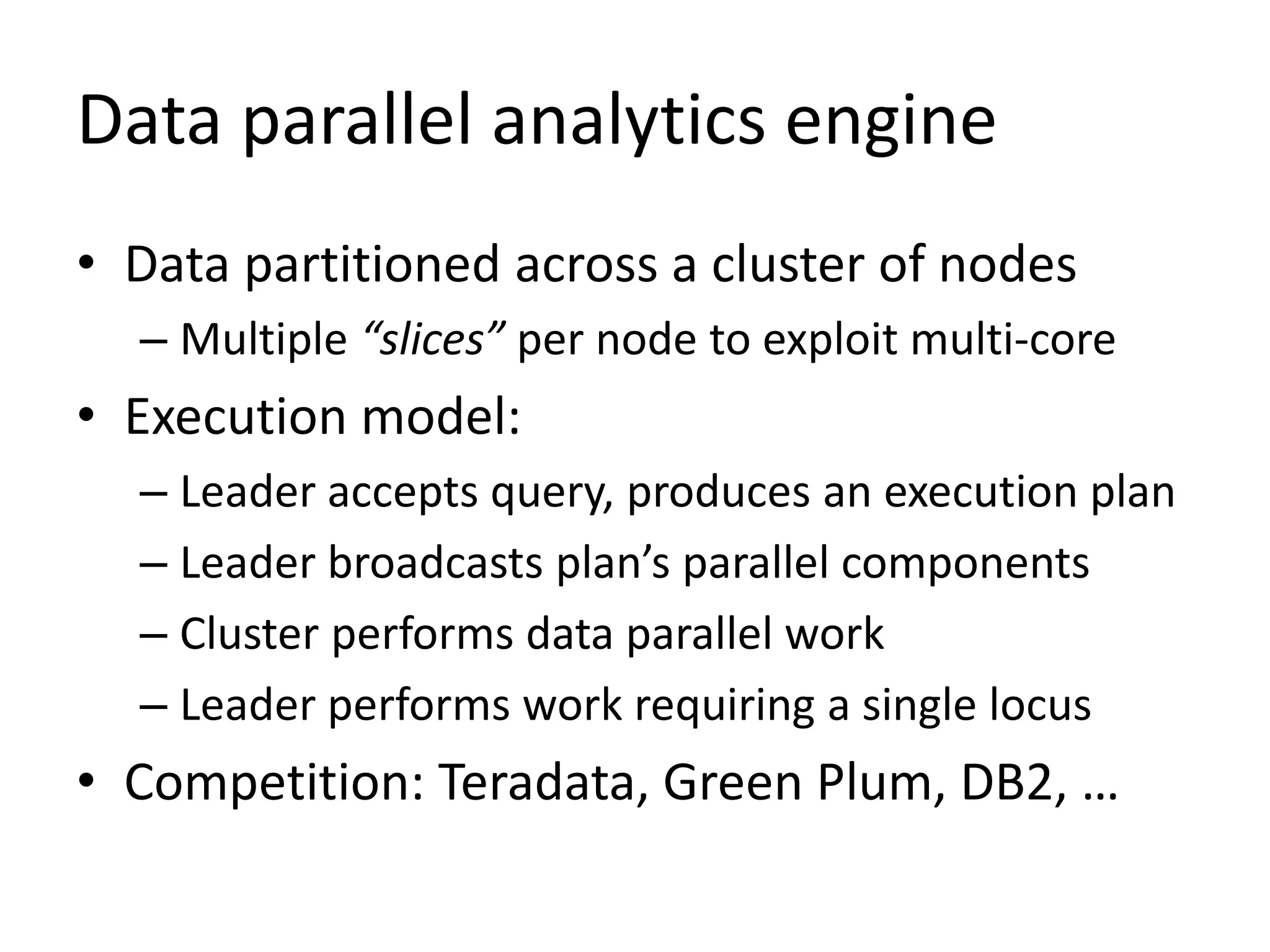

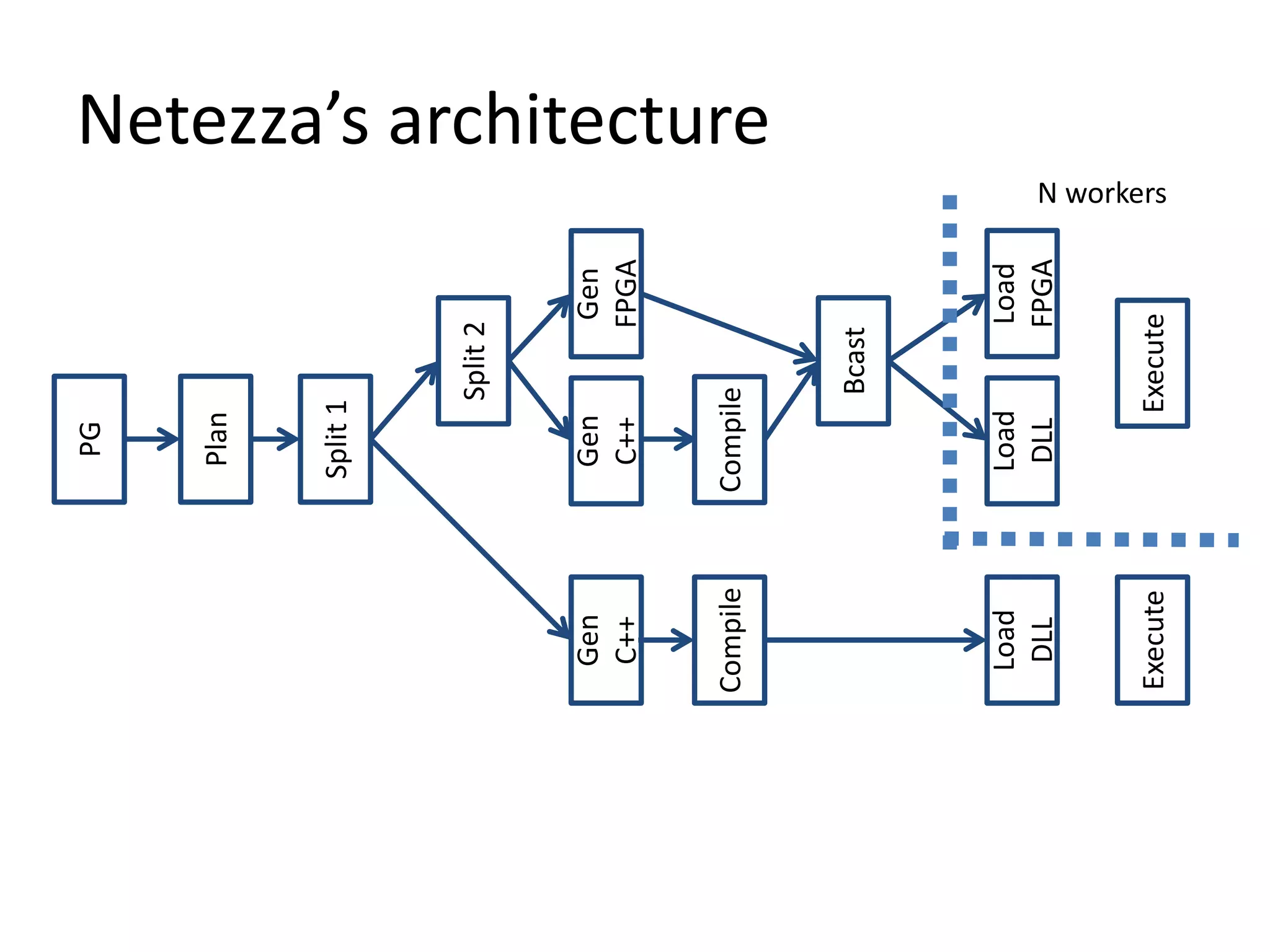

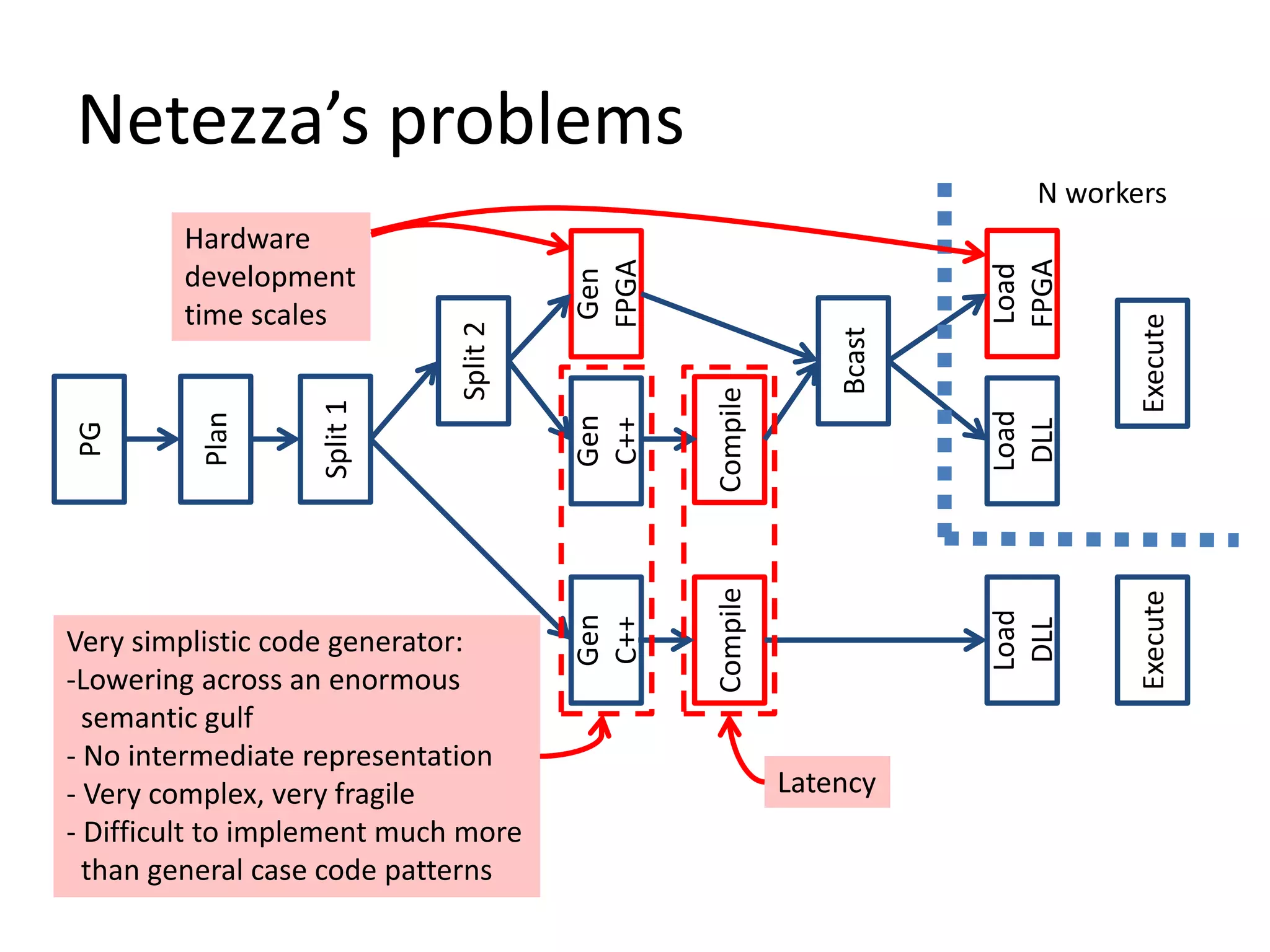

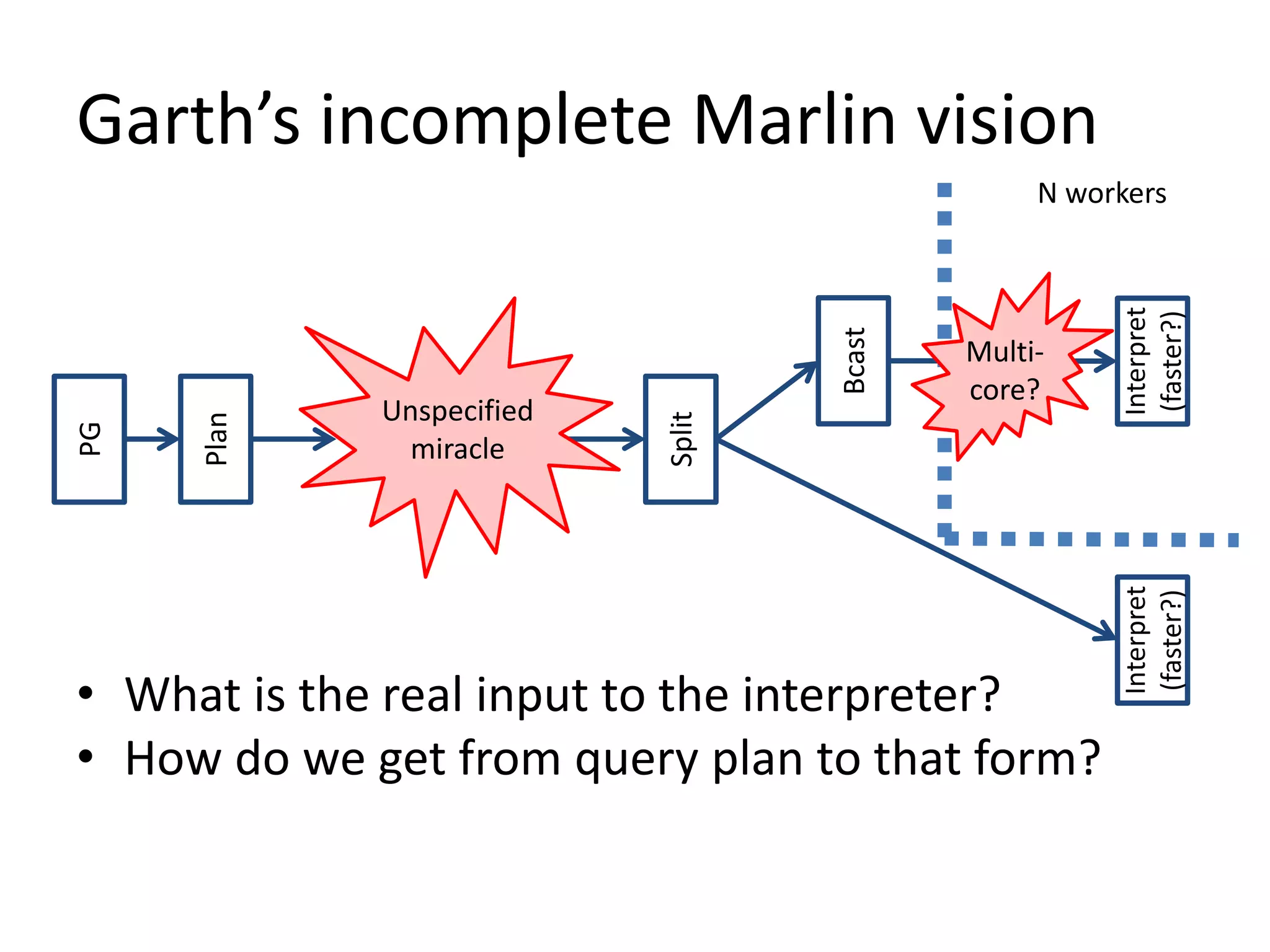

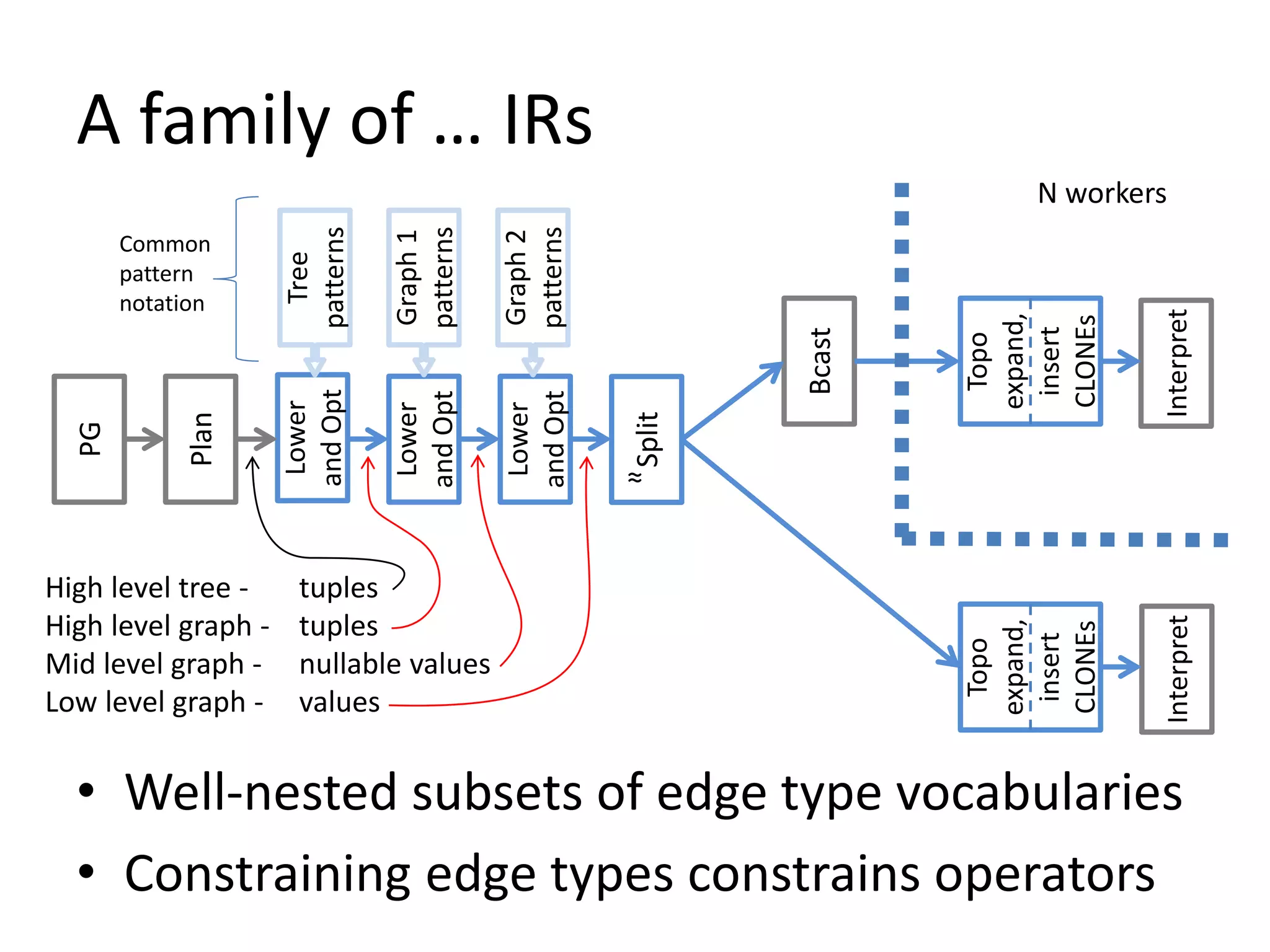

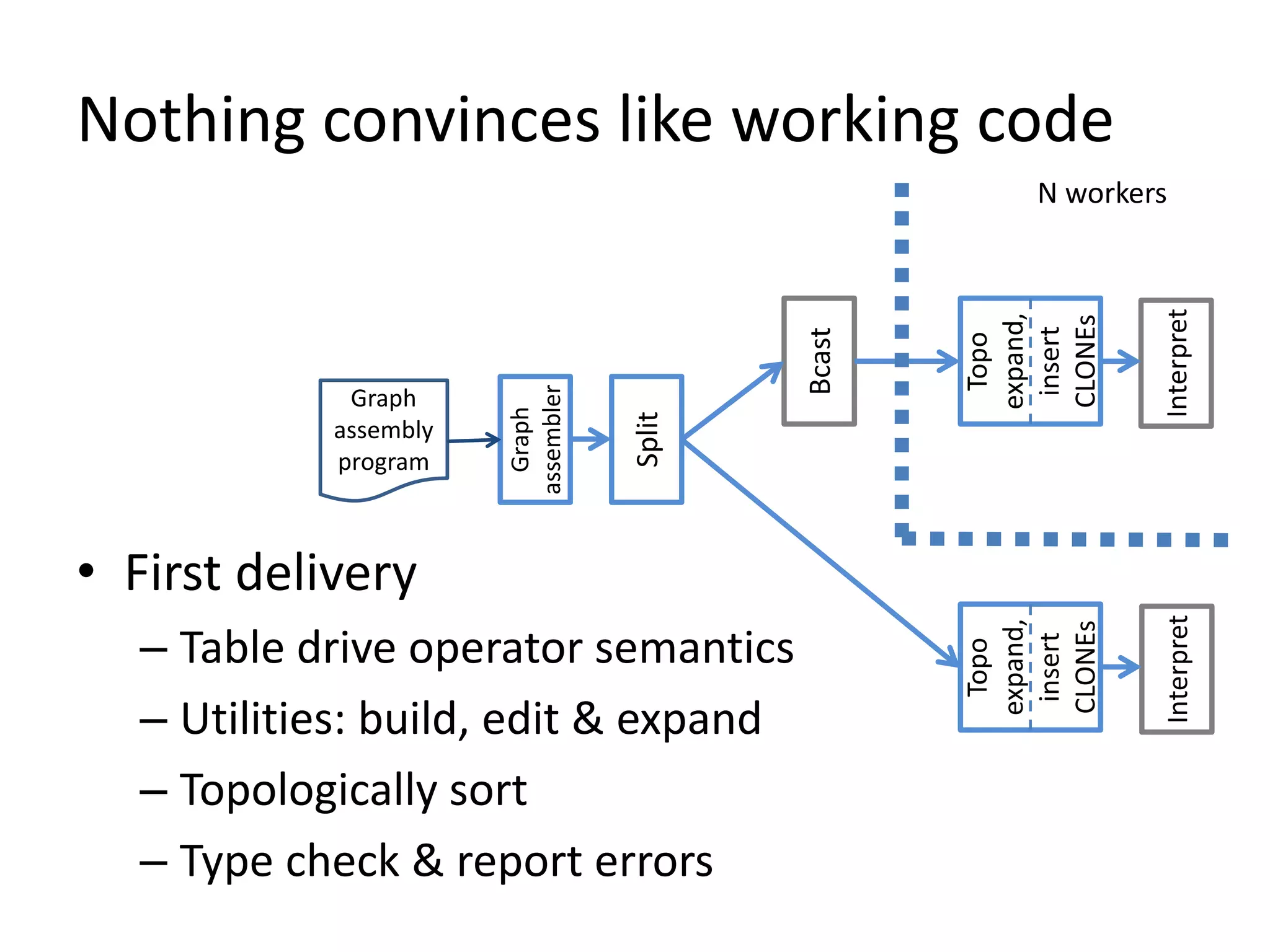

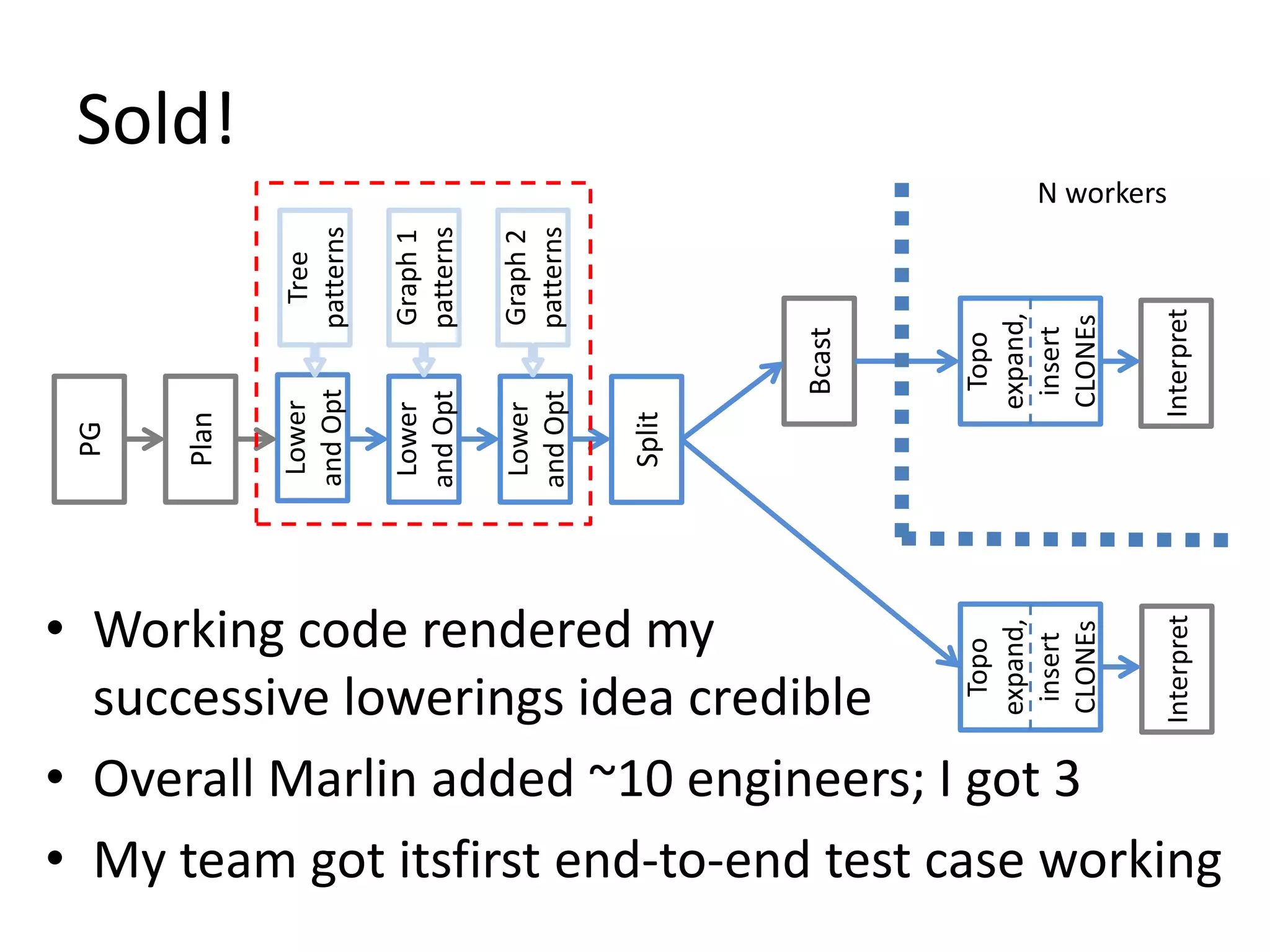

John Yates has been a programmer since 1970. In 2010 he was working at Netezza, which needed a new data analytics architecture. He proposed using a family of statically typed, acyclic dataflow-graph intermediate representations (IRs), in which a query plan is represented as a graph of operators with data flowing along the edges. The graph could then be optimized and executed in parallel across the nodes of a cluster. Yates built an initial proof-of-concept implementation, which helped convince Netezza to adopt the approach, and then led development of the new Marlin architecture based on these IRs.

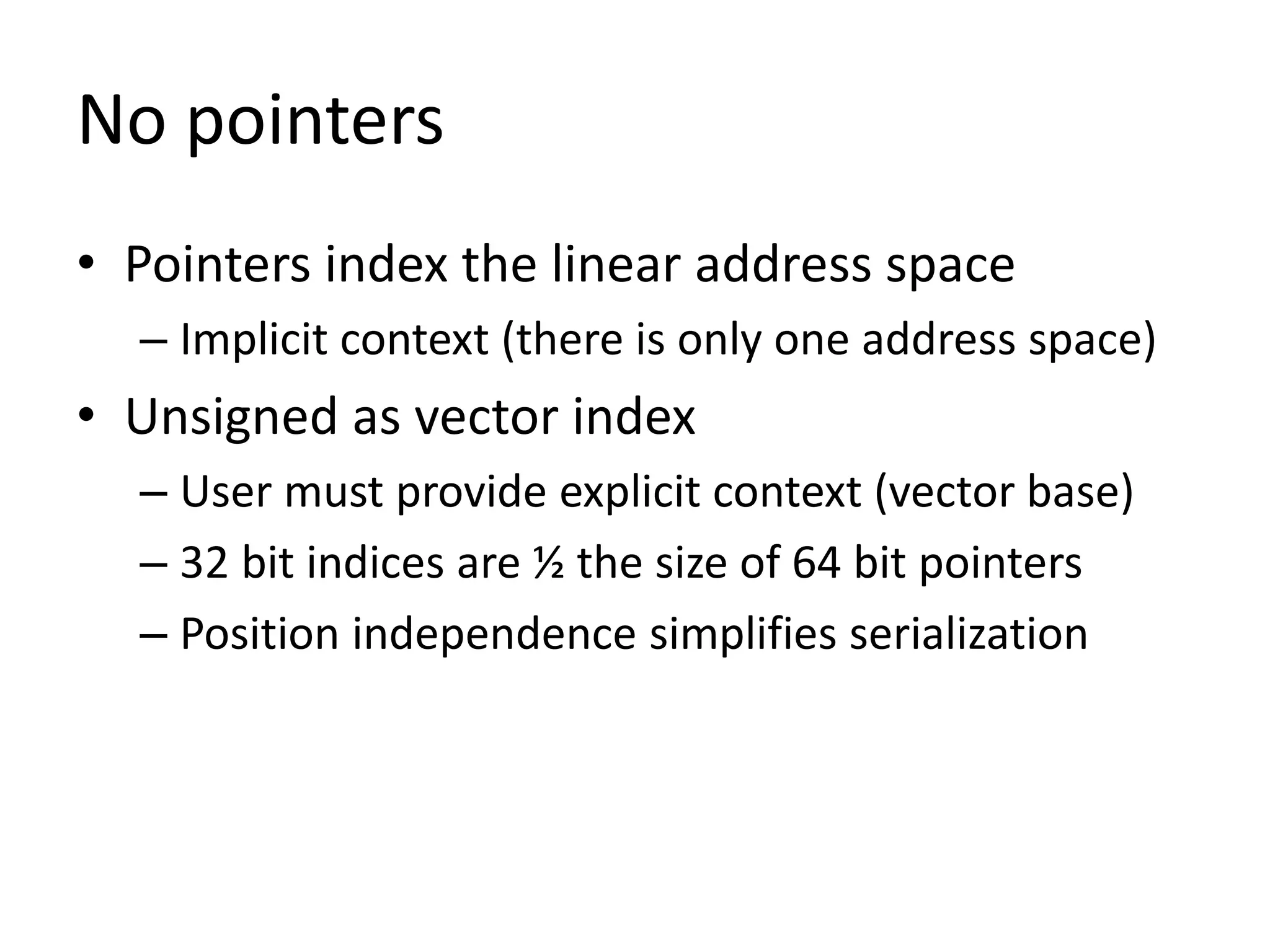

![Connectivity: Operator objects

• Operator private members

– Operator’s edges are sub-vectors of g.vecIn, g.vecOut

– Start of EdgeIn objects: EdgeInIndex baseIn_;

– Start of EdgeOut objects: EdgeOutIndex baseOut_;

• Number of connections

– Inputs: vecOp[x+1].baseIn_ - vecOp[x].baseIn_

– Outputs: vecOp[x+1].baseOut_ - vecOp[x].baseOut_](https://image.slidesharecdn.com/c67eb25a-2046-4ebf-9d6f-749fa4c8e517-160502211949/75/MathWorks-Interview-Lecture-29-2048.jpg)
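The counting rule on this slide only works if `vecOp[x+1]` exists for the last real operator. Below is a minimal C++ sketch, assuming a trailing sentinel `Operator` and `std::size_t` for the index types (the slides name `OperatorIndex`, `EdgeInIndex` and `EdgeOutIndex` but do not define them). The `Graph` layout and the `addOperator`, `numInputs` and `numOutputs` helpers are hypothetical illustrations, not the Marlin API.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Assumed index types; the slides name them but do not show their definitions.
using OperatorIndex = std::size_t;
using EdgeInIndex = std::size_t;
using EdgeOutIndex = std::size_t;

struct EdgeIn {};   // link fields omitted in this sketch
struct EdgeOut {};

struct Operator {
    EdgeInIndex baseIn_;    // first of this operator's EdgeIn objects in vecIn
    EdgeOutIndex baseOut_;  // first of this operator's EdgeOut objects in vecOut
};

struct Graph {
    std::vector<Operator> vecOp;  // ends with a sentinel Operator (assumption)
    std::vector<EdgeIn> vecIn;
    std::vector<EdgeOut> vecOut;

    Graph() : vecOp{Operator{0, 0}} {}  // only the sentinel: zero real operators

    std::size_t numOperators() const { return vecOp.size() - 1; }

    // Hypothetical helper: append an operator owning nIn inputs and nOut outputs.
    OperatorIndex addOperator(unsigned nIn, unsigned nOut) {
        OperatorIndex x = numOperators();  // the old sentinel becomes operator x
        vecIn.resize(vecIn.size() + nIn);
        vecOut.resize(vecOut.size() + nOut);
        vecOp.push_back(Operator{vecIn.size(), vecOut.size()});  // new sentinel
        return x;
    }

    // Number of connections, computed exactly as on the slide.
    std::size_t numInputs(OperatorIndex x) const {
        return vecOp[x + 1].baseIn_ - vecOp[x].baseIn_;
    }
    std::size_t numOutputs(OperatorIndex x) const {
        return vecOp[x + 1].baseOut_ - vecOp[x].baseOut_;
    }
};
```

Because each operator stores only two offsets, its edge counts cost nothing extra to keep: they fall out of the gap to the next operator's offsets.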

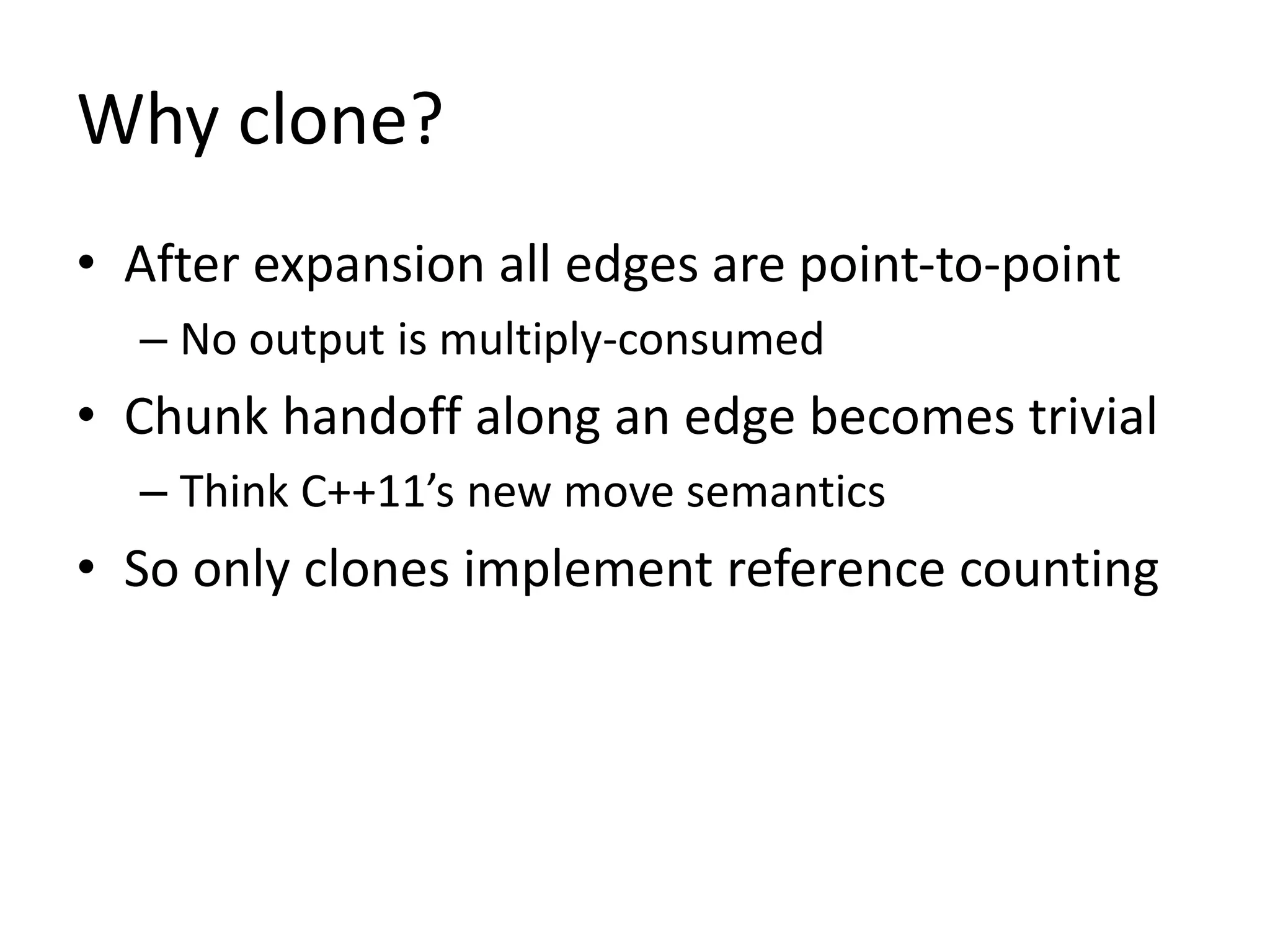

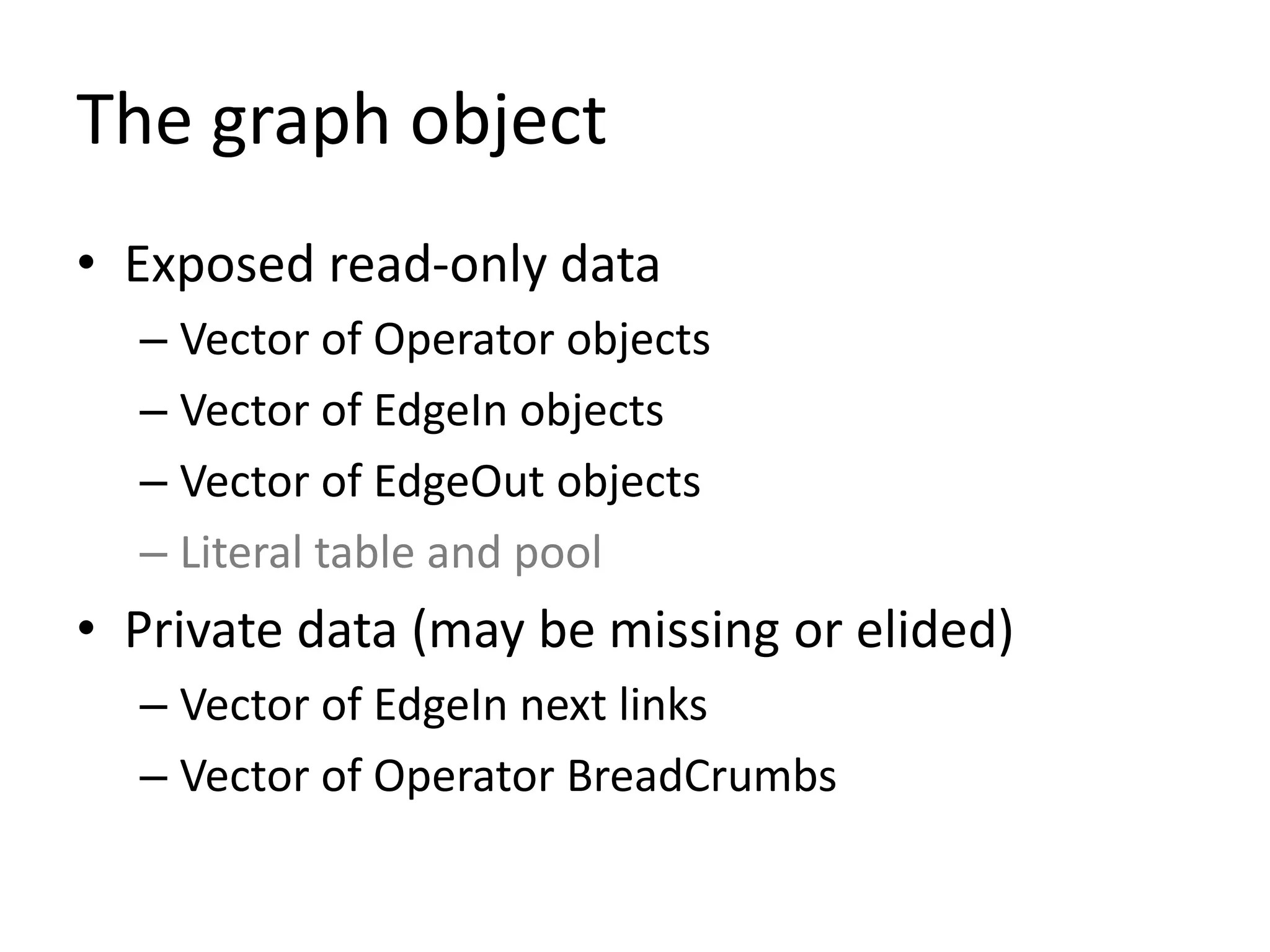

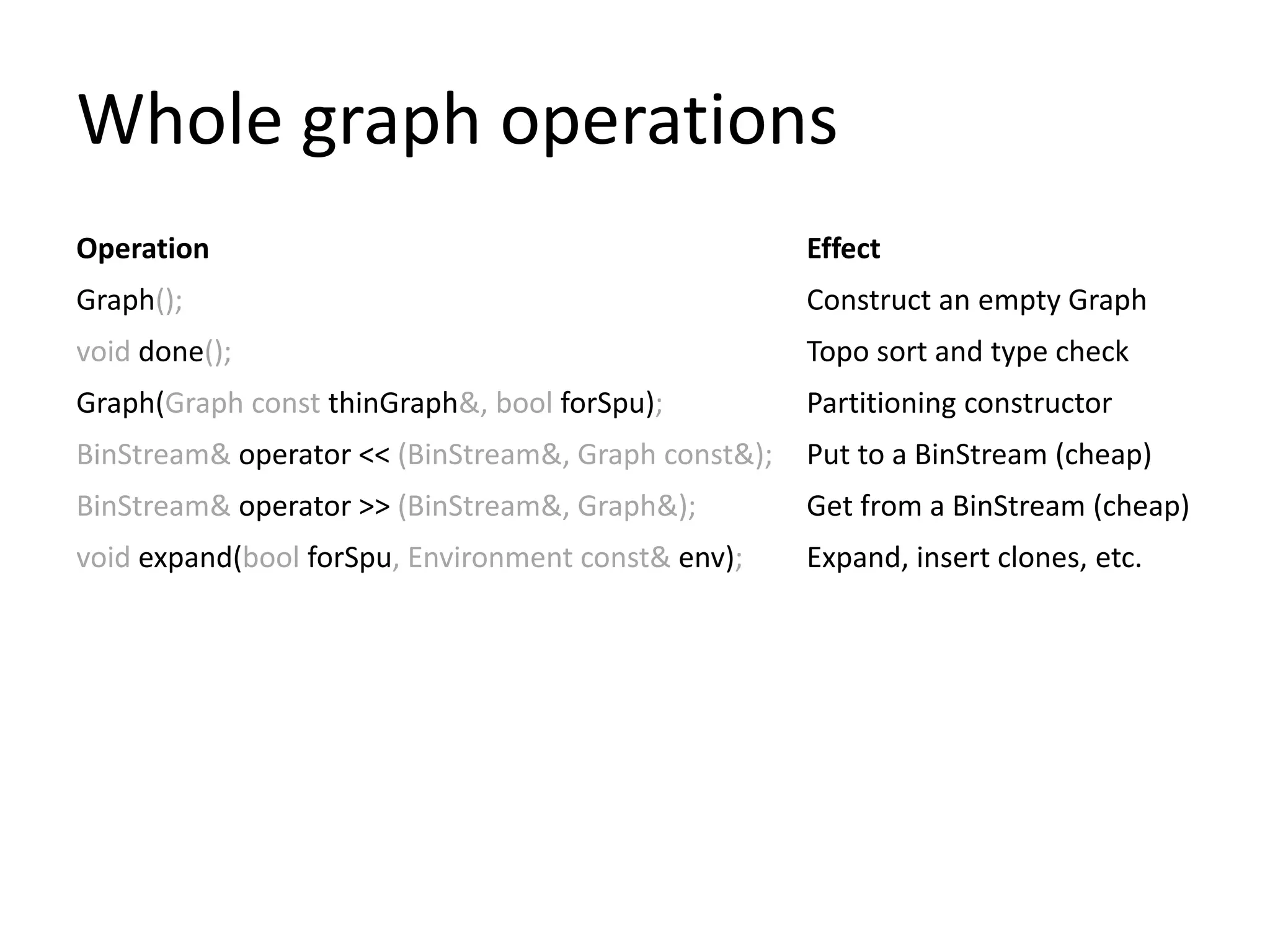

![Connectivity: EdgeIn objects

• EdgeIn private members

– Sink Operator: OperatorIndex dstOp_;

– Source EdgeOut: EdgeOutIndex src_;

• EdgeIn connection position

– Use pointer arithmetic:

this - (vecIn + vecOp[dstOp_].baseIn_);](https://image.slidesharecdn.com/c67eb25a-2046-4ebf-9d6f-749fa4c8e517-160502211949/75/MathWorks-Interview-Lecture-30-2048.jpg)
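A minimal C++ sketch of the EdgeIn position computation follows. The slide writes the expression as if `vecIn` were in scope. This sketch instead passes the graph explicitly and uses `g.vecIn.data()` as the base pointer; both choices are assumptions, as are the `std::size_t` index types and the `position`, `dstOp` and `src` accessors. The pointer subtraction is only valid because every `EdgeIn` lives in the same contiguous `std::vector`.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

using OperatorIndex = std::size_t;  // assumed index types
using EdgeInIndex = std::size_t;
using EdgeOutIndex = std::size_t;

struct Graph;  // forward declaration so EdgeIn can refer to it

class EdgeIn {
public:
    EdgeIn(OperatorIndex dstOp, EdgeOutIndex src) : dstOp_(dstOp), src_(src) {}
    OperatorIndex dstOp() const { return dstOp_; }
    EdgeOutIndex src() const { return src_; }
    // Which input slot (0-based) of dstOp_ this edge occupies.
    std::ptrdiff_t position(const Graph& g) const;
private:
    OperatorIndex dstOp_;  // sink Operator
    EdgeOutIndex src_;     // source EdgeOut
};

struct Operator {
    EdgeInIndex baseIn_;
    EdgeOutIndex baseOut_;
};

struct Graph {
    std::vector<Operator> vecOp;  // includes a trailing sentinel in the usage below
    std::vector<EdgeIn> vecIn;
};

std::ptrdiff_t EdgeIn::position(const Graph& g) const {
    // Address of this edge minus the address of the first EdgeIn owned by dstOp_.
    return this - (g.vecIn.data() + g.vecOp[dstOp_].baseIn_);
}
```

Storing the position implicitly keeps `EdgeIn` down to two indices. The trade-off is that `position` is only meaningful while the edge sits inside `vecIn`; a copied-out `EdgeIn` has no valid answer.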

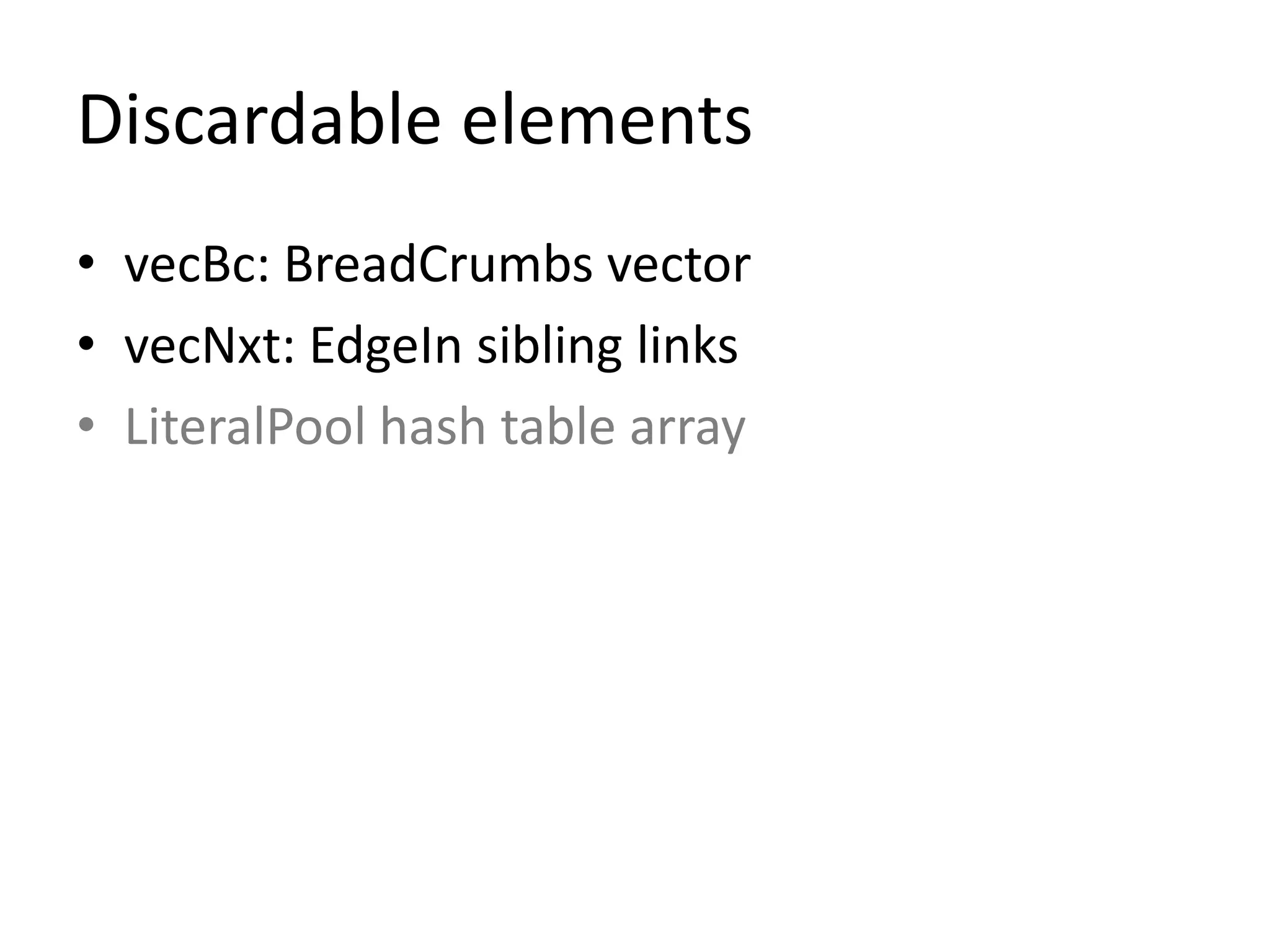

![Connectivity: EdgeOut objects

• EdgeOut private members

– Source Operator: OperatorIndex srcOp_;

– Sink EdgeIn: EdgeInIndex dst_;

• EdgeOut connection position

– Use pointer arithmetic:

this - (vecOut + vecOp[srcOp_].baseOut_);](https://image.slidesharecdn.com/c67eb25a-2046-4ebf-9d6f-749fa4c8e517-160502211949/75/MathWorks-Interview-Lecture-31-2048.jpg)
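The EdgeOut case mirrors the EdgeIn case. The C++ sketch below also shows how the two link fields cross-reference each other: an `EdgeOut`'s `dst_` names an `EdgeIn`, whose `src_` should name that same `EdgeOut`. As before, the graph is passed explicitly and the index types are assumed to be `std::size_t`. Here `dst_` is given the type `EdgeInIndex`, which is also an assumption. The `linksConsistent` check is a hypothetical helper. The single `dst_` member suggests that each `EdgeOut` links to exactly one `EdgeIn`, and this sketch relies on that.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

using OperatorIndex = std::size_t;  // assumed index types
using EdgeInIndex = std::size_t;
using EdgeOutIndex = std::size_t;

struct Graph;

class EdgeOut {
public:
    EdgeOut(OperatorIndex srcOp, EdgeInIndex dst) : srcOp_(srcOp), dst_(dst) {}
    EdgeInIndex dst() const { return dst_; }
    // Which output slot (0-based) of srcOp_ this edge occupies.
    std::ptrdiff_t position(const Graph& g) const;
private:
    OperatorIndex srcOp_;  // source Operator
    EdgeInIndex dst_;      // sink EdgeIn
};

struct EdgeIn {
    OperatorIndex dstOp_;  // sink Operator
    EdgeOutIndex src_;     // source EdgeOut
};

struct Operator {
    EdgeInIndex baseIn_;
    EdgeOutIndex baseOut_;
};

struct Graph {
    std::vector<Operator> vecOp;  // includes a trailing sentinel in the usage below
    std::vector<EdgeIn> vecIn;
    std::vector<EdgeOut> vecOut;
};

std::ptrdiff_t EdgeOut::position(const Graph& g) const {
    // Offset from the first EdgeOut owned by srcOp_.
    return this - (g.vecOut.data() + g.vecOp[srcOp_].baseOut_);
}

// Hypothetical check: every EdgeOut's sink EdgeIn must point back at it.
bool linksConsistent(const Graph& g) {
    for (EdgeOutIndex i = 0; i < g.vecOut.size(); ++i) {
        if (g.vecIn[g.vecOut[i].dst()].src_ != i) return false;
    }
    return true;
}
```

Keeping both directions as plain indices lets a pass walk the graph forward (EdgeOut to EdgeIn) or backward (EdgeIn to EdgeOut) without any pointer chasing through heap objects.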

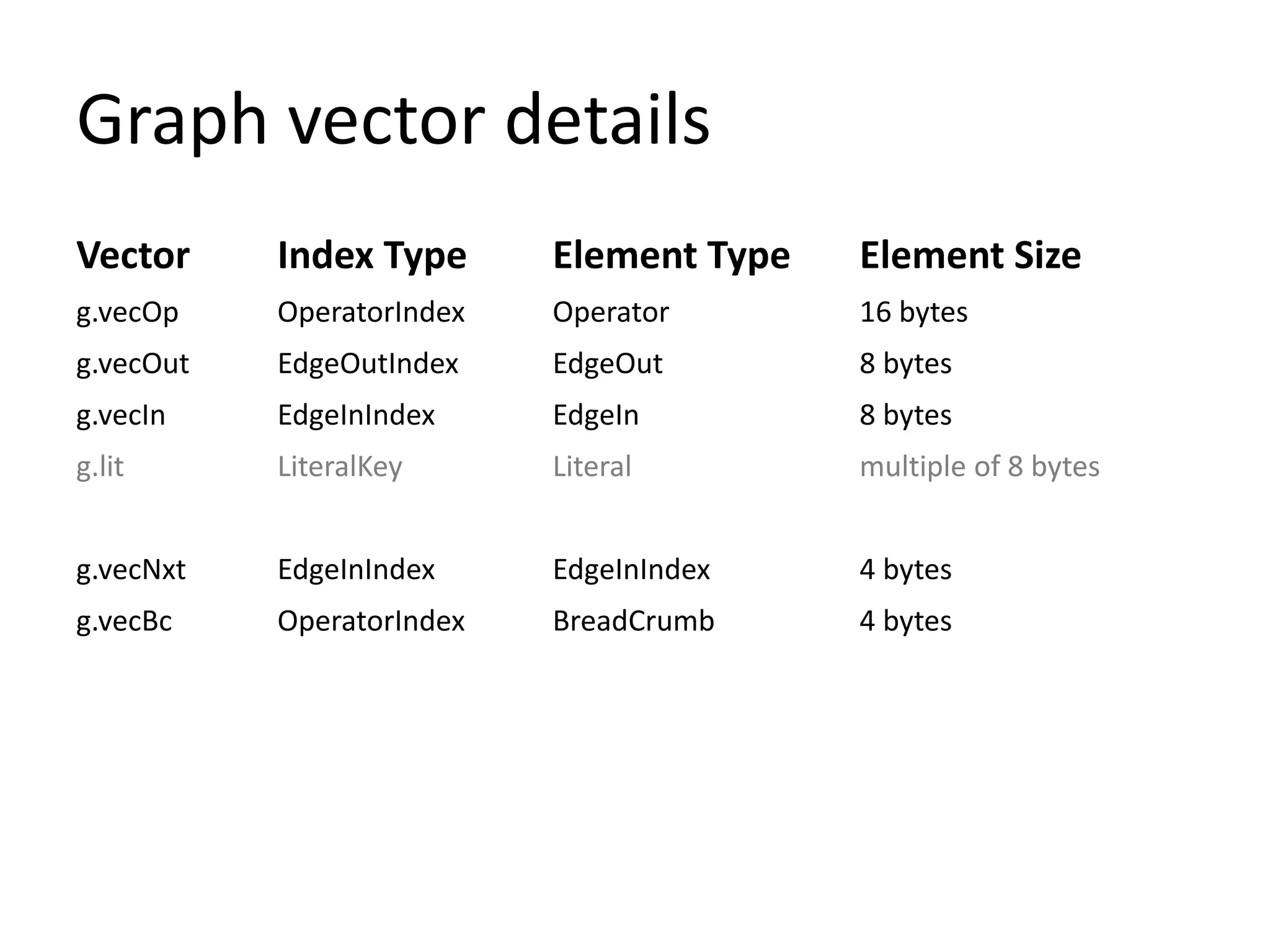

![Thin graph construction

• graph.add(BreadCrumb, Op, Locus, Expansion, unsigned nVarIn = 0, unsigned nVarOut = 0);

– Effect: add an Operator and its Edge resources

• graph.connect(OperatorIndex srcOp, unsigned srcPos, OperatorIndex dstOp, unsigned dstPos);

– Effect: guarantee that a srcOp[srcPos] to dstOp[dstPos] edge exists](https://image.slidesharecdn.com/c67eb25a-2046-4ebf-9d6f-749fa4c8e517-160502211949/75/MathWorks-Interview-Lecture-33-2048.jpg)
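The following is a simplified C++ sketch of the two construction calls. It is not the original signature. The slide's `BreadCrumb`, `Op`, `Locus` and `Expansion` parameters are not described, so this hypothetical `add` takes only edge counts. `connect` is written to be idempotent, matching the "guarantee an edge exists" wording. The `kUnconnected` marker, the sentinel operator, the refusal to rewire an occupied slot, and the `sourceOf` query are all assumptions made for illustration.

```cpp
#include <cassert>
#include <cstddef>
#include <limits>
#include <vector>

using OperatorIndex = std::size_t;  // assumed index types
using EdgeInIndex = std::size_t;
using EdgeOutIndex = std::size_t;

// Hypothetical "no link yet" marker.
constexpr std::size_t kUnconnected = std::numeric_limits<std::size_t>::max();

struct Operator { EdgeInIndex baseIn_; EdgeOutIndex baseOut_; };
struct EdgeIn { OperatorIndex dstOp_; EdgeOutIndex src_; };
struct EdgeOut { OperatorIndex srcOp_; EdgeInIndex dst_; };

class Graph {
public:
    Graph() : vecOp{Operator{0, 0}} {}  // trailing sentinel operator

    // Simplified stand-in for graph.add(...): only edge counts are modeled.
    OperatorIndex add(unsigned nIn, unsigned nOut) {
        OperatorIndex x = vecOp.size() - 1;
        for (unsigned i = 0; i < nIn; ++i) vecIn.push_back(EdgeIn{x, kUnconnected});
        for (unsigned i = 0; i < nOut; ++i) vecOut.push_back(EdgeOut{x, kUnconnected});
        vecOp.push_back(Operator{vecIn.size(), vecOut.size()});  // new sentinel
        return x;
    }

    // Guarantee that a srcOp[srcPos] -> dstOp[dstPos] edge exists (idempotent).
    void connect(OperatorIndex srcOp, unsigned srcPos, OperatorIndex dstOp, unsigned dstPos) {
        assert(srcPos < numOutputs(srcOp) && dstPos < numInputs(dstOp));
        EdgeOutIndex o = vecOp[srcOp].baseOut_ + srcPos;
        EdgeInIndex i = vecOp[dstOp].baseIn_ + dstPos;
        if (vecOut[o].dst_ == i && vecIn[i].src_ == o) return;  // already present
        // This sketch refuses to silently rewire a slot that is already linked.
        assert(vecOut[o].dst_ == kUnconnected && vecIn[i].src_ == kUnconnected);
        vecOut[o].dst_ = i;
        vecIn[i].src_ = o;
    }

    std::size_t numInputs(OperatorIndex x) const { return vecOp[x + 1].baseIn_ - vecOp[x].baseIn_; }
    std::size_t numOutputs(OperatorIndex x) const { return vecOp[x + 1].baseOut_ - vecOp[x].baseOut_; }

    // EdgeOut index feeding input dstPos of dstOp, or kUnconnected.
    EdgeOutIndex sourceOf(OperatorIndex dstOp, unsigned dstPos) const {
        return vecIn[vecOp[dstOp].baseIn_ + dstPos].src_;
    }

private:
    std::vector<Operator> vecOp;
    std::vector<EdgeIn> vecIn;
    std::vector<EdgeOut> vecOut;
};
```

For example, two single-output operators can feed the two inputs of a third. Calling `connect` a second time with the same arguments leaves the graph unchanged, which is what makes the call safe to use from code that cannot easily tell whether the edge was already added.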

![Graph overlay

• Template object publicly derived from Graph

• Macro hides lots of template boilerplate

• User-supplied types for parallel vectors

– MyOperator ovOp[OperatorIndex]

– MyEdgeIn ovIn[EdgeInIndex]

– MyEdgeOut ovOut[EdgeOutIndex]

• Constructor shares vectors and LiteralTable](https://image.slidesharecdn.com/c67eb25a-2046-4ebf-9d6f-749fa4c8e517-160502211949/75/MathWorks-Interview-Lecture-36-2048.jpg)
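The following is a minimal C++ sketch of the overlay idea. A class template derives publicly from `Graph`, shares the base graph's connectivity vectors and literal table, and adds user-supplied per-operator and per-edge data in parallel vectors indexed by the same indices. The slide does not say how sharing is implemented. This sketch assumes `std::shared_ptr` to a hypothetical `GraphStorage`, and the `GraphOverlay`, `addOperator`, `storage` and `literals` names are hypothetical too. The boilerplate-hiding macro is not shown because its contents are not given.

```cpp
#include <cassert>
#include <cstddef>
#include <memory>
#include <string>
#include <vector>

using OperatorIndex = std::size_t;  // assumed index types
using EdgeInIndex = std::size_t;
using EdgeOutIndex = std::size_t;

struct Operator { EdgeInIndex baseIn_; EdgeOutIndex baseOut_; };
struct EdgeIn { OperatorIndex dstOp_; EdgeOutIndex src_; };
struct EdgeOut { OperatorIndex srcOp_; EdgeInIndex dst_; };
struct LiteralTable { std::vector<std::string> literals; };  // hypothetical contents

// Hypothetical shared connectivity storage.
struct GraphStorage {
    std::vector<Operator> vecOp{Operator{0, 0}};  // trailing sentinel
    std::vector<EdgeIn> vecIn;
    std::vector<EdgeOut> vecOut;
};

class Graph {
public:
    Graph() : g_(std::make_shared<GraphStorage>()), lits_(std::make_shared<LiteralTable>()) {}

    OperatorIndex addOperator(unsigned nIn, unsigned nOut) {
        OperatorIndex x = numOperators();
        g_->vecIn.resize(g_->vecIn.size() + nIn);     // links left unset in this sketch
        g_->vecOut.resize(g_->vecOut.size() + nOut);
        g_->vecOp.push_back(Operator{g_->vecIn.size(), g_->vecOut.size()});
        return x;
    }
    std::size_t numOperators() const { return g_->vecOp.size() - 1; }
    std::size_t numEdgeIns() const { return g_->vecIn.size(); }
    std::size_t numEdgeOuts() const { return g_->vecOut.size(); }
    const std::shared_ptr<GraphStorage>& storage() const { return g_; }
    const std::shared_ptr<LiteralTable>& literals() const { return lits_; }

protected:
    std::shared_ptr<GraphStorage> g_;
    std::shared_ptr<LiteralTable> lits_;
};

// Same connectivity as the base graph, plus user data in parallel vectors.
template <typename MyOperator, typename MyEdgeIn, typename MyEdgeOut>
class GraphOverlay : public Graph {
public:
    // Copying the Graph base copies the shared_ptrs: storage is shared, not cloned.
    explicit GraphOverlay(const Graph& base)
        : Graph(base),
          ovOp(base.numOperators()), ovIn(base.numEdgeIns()), ovOut(base.numEdgeOuts()) {}

    std::vector<MyOperator> ovOp;  // indexed by OperatorIndex
    std::vector<MyEdgeIn> ovIn;    // indexed by EdgeInIndex
    std::vector<MyEdgeOut> ovOut;  // indexed by EdgeOutIndex
};
```

Here the overlay's parallel vectors are sized once, at construction. Connectivity added to the base graph afterwards is visible through the overlay (the storage is shared), but the `ov*` vectors do not grow with it. How the original design keeps them in step with later edits is not shown on the slide.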