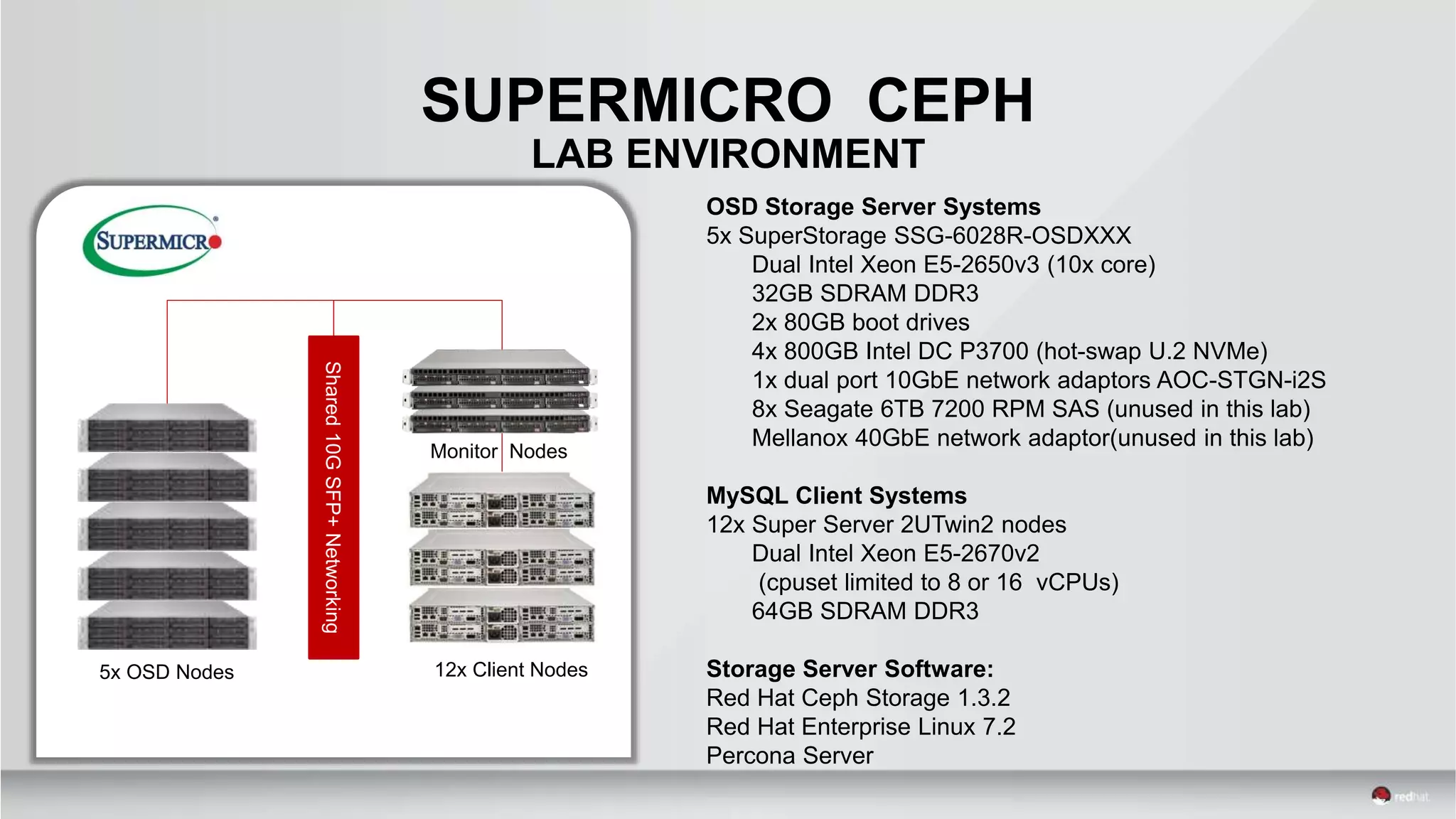

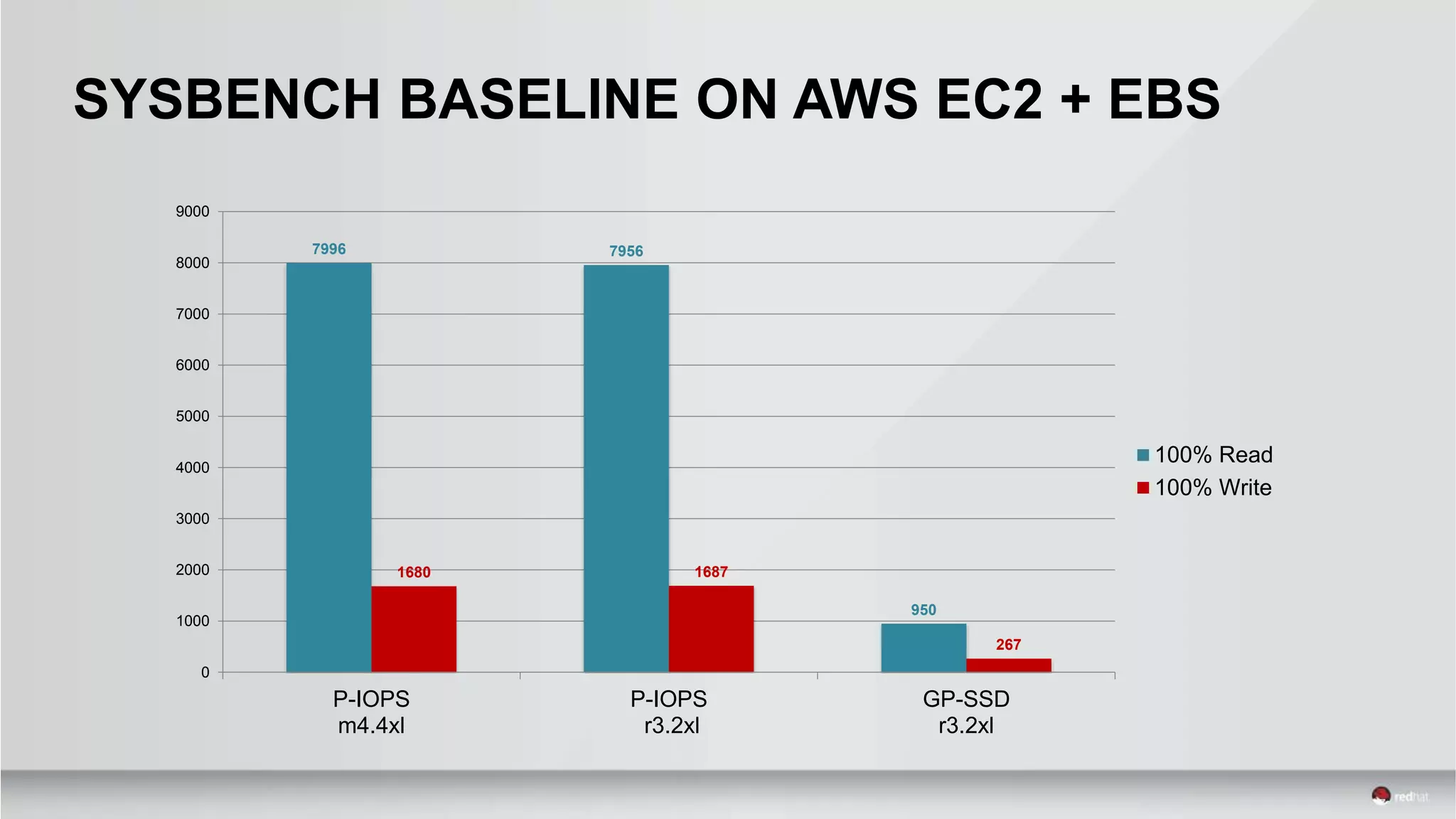

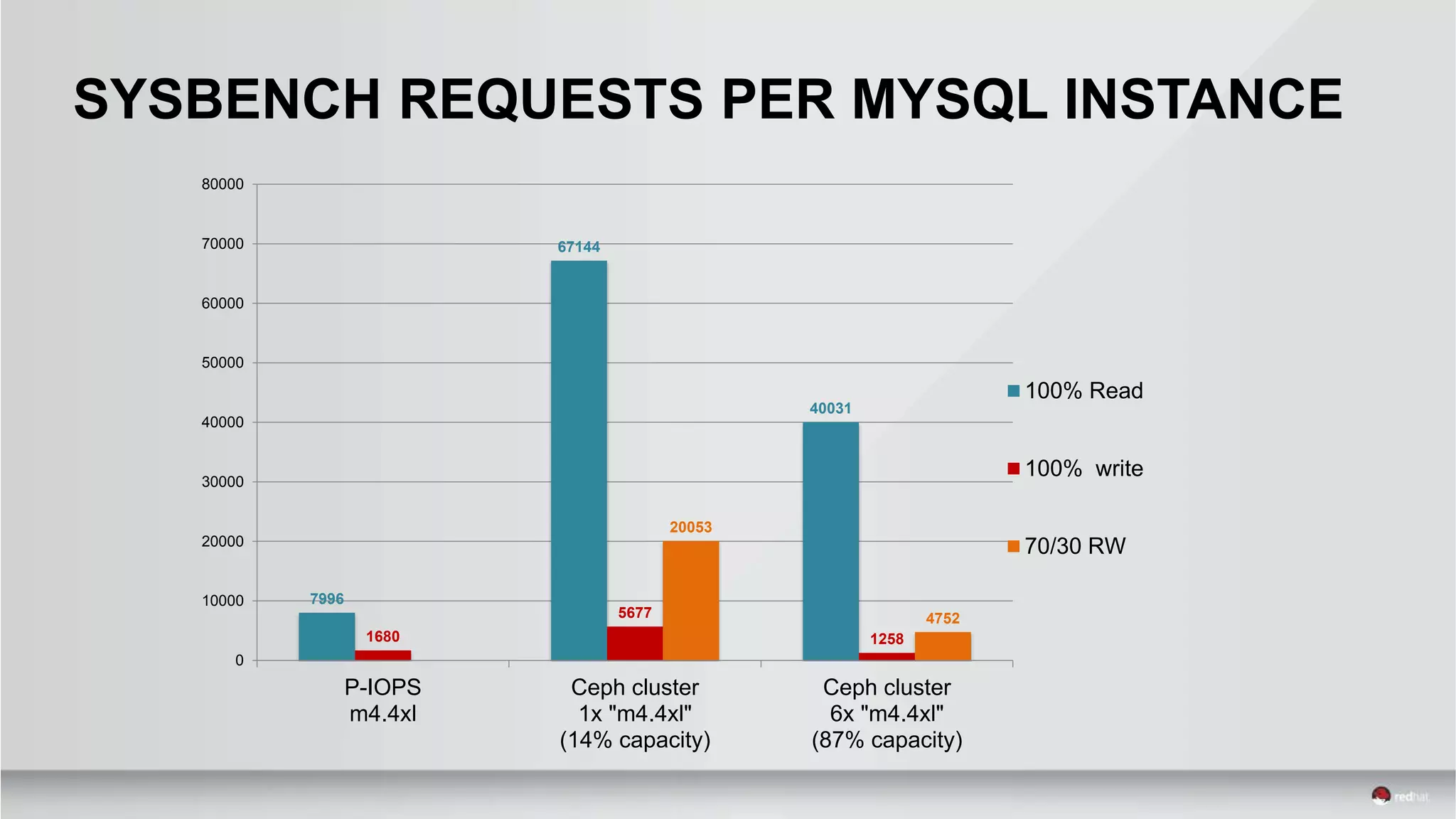

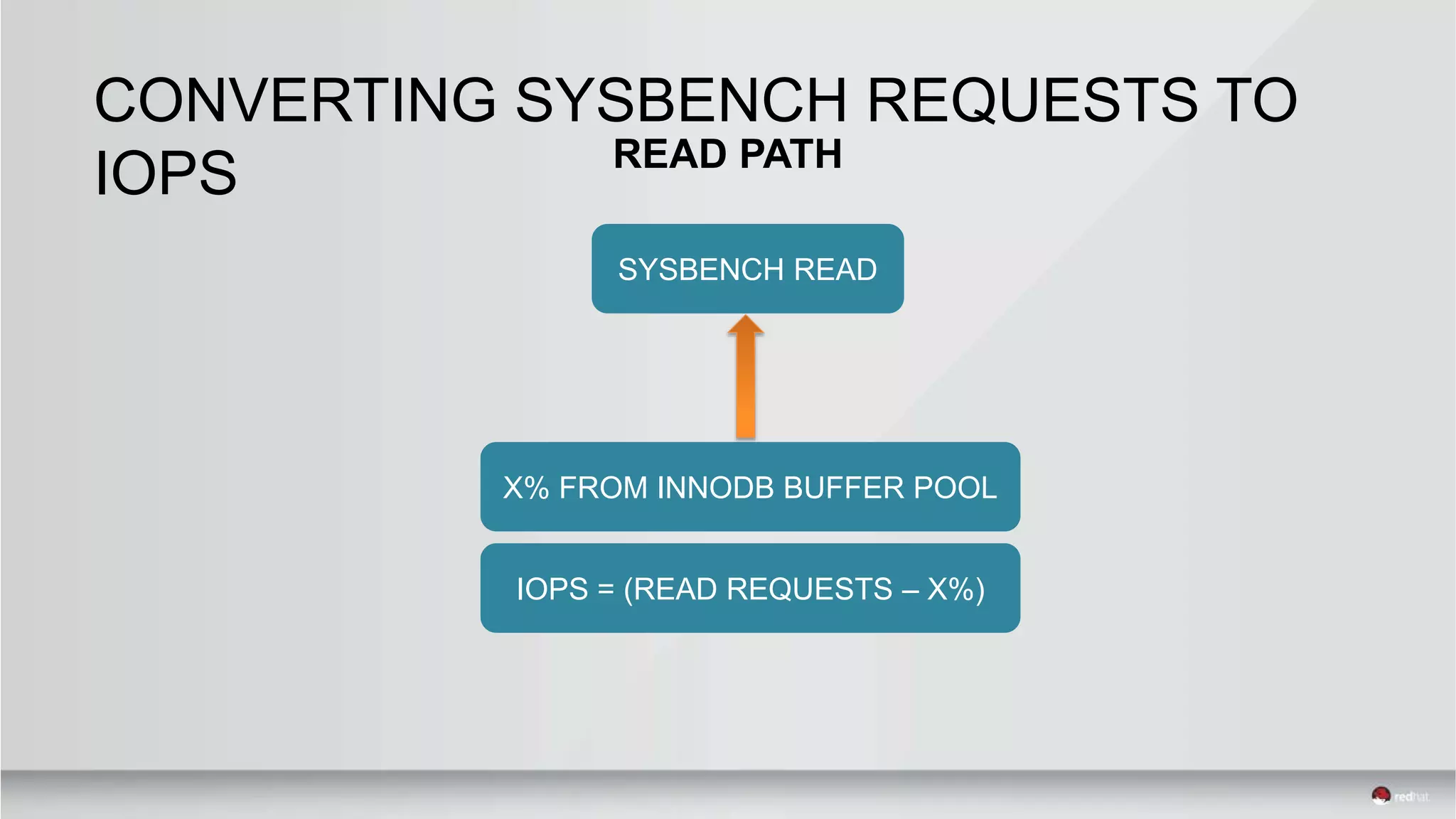

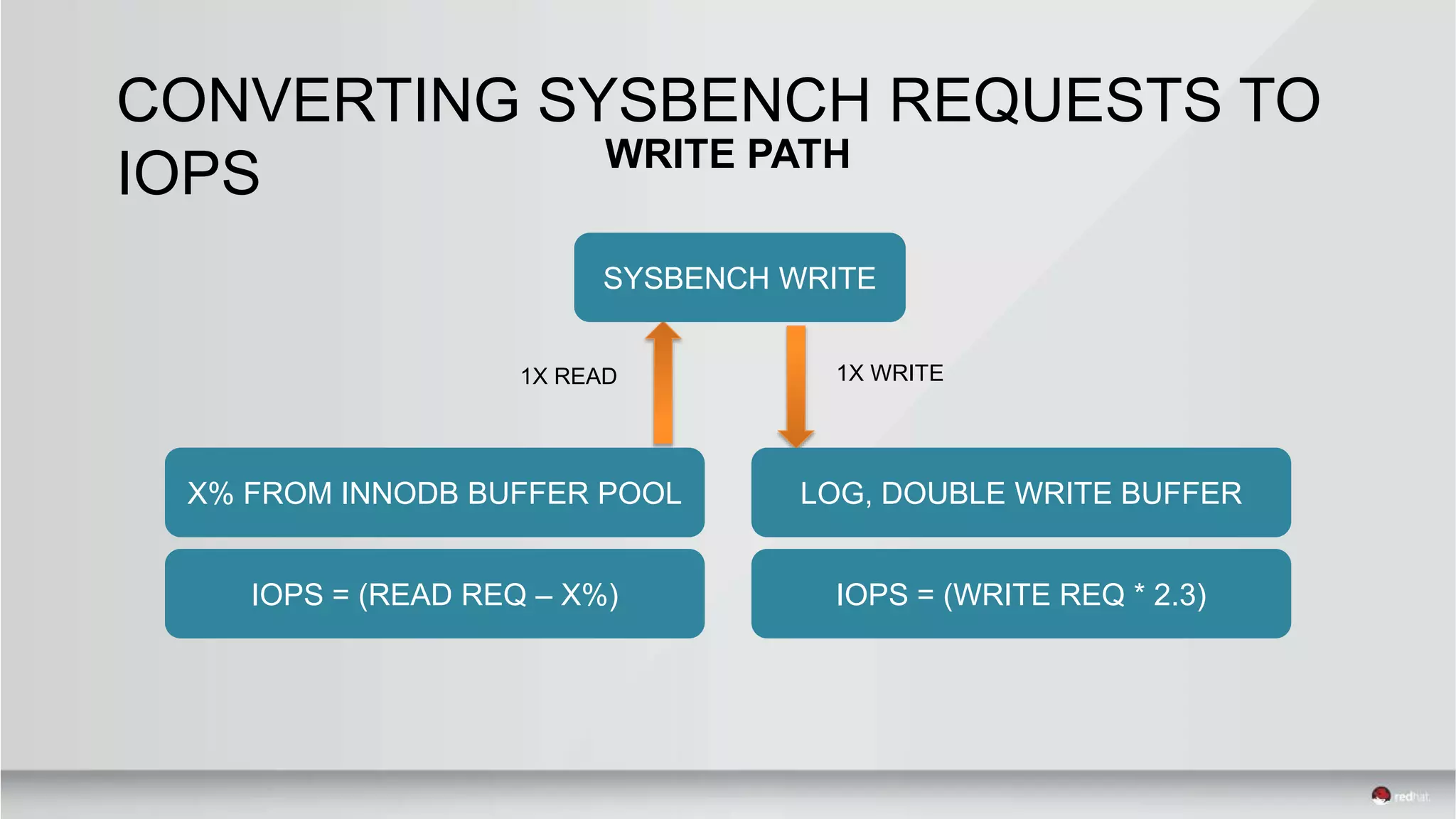

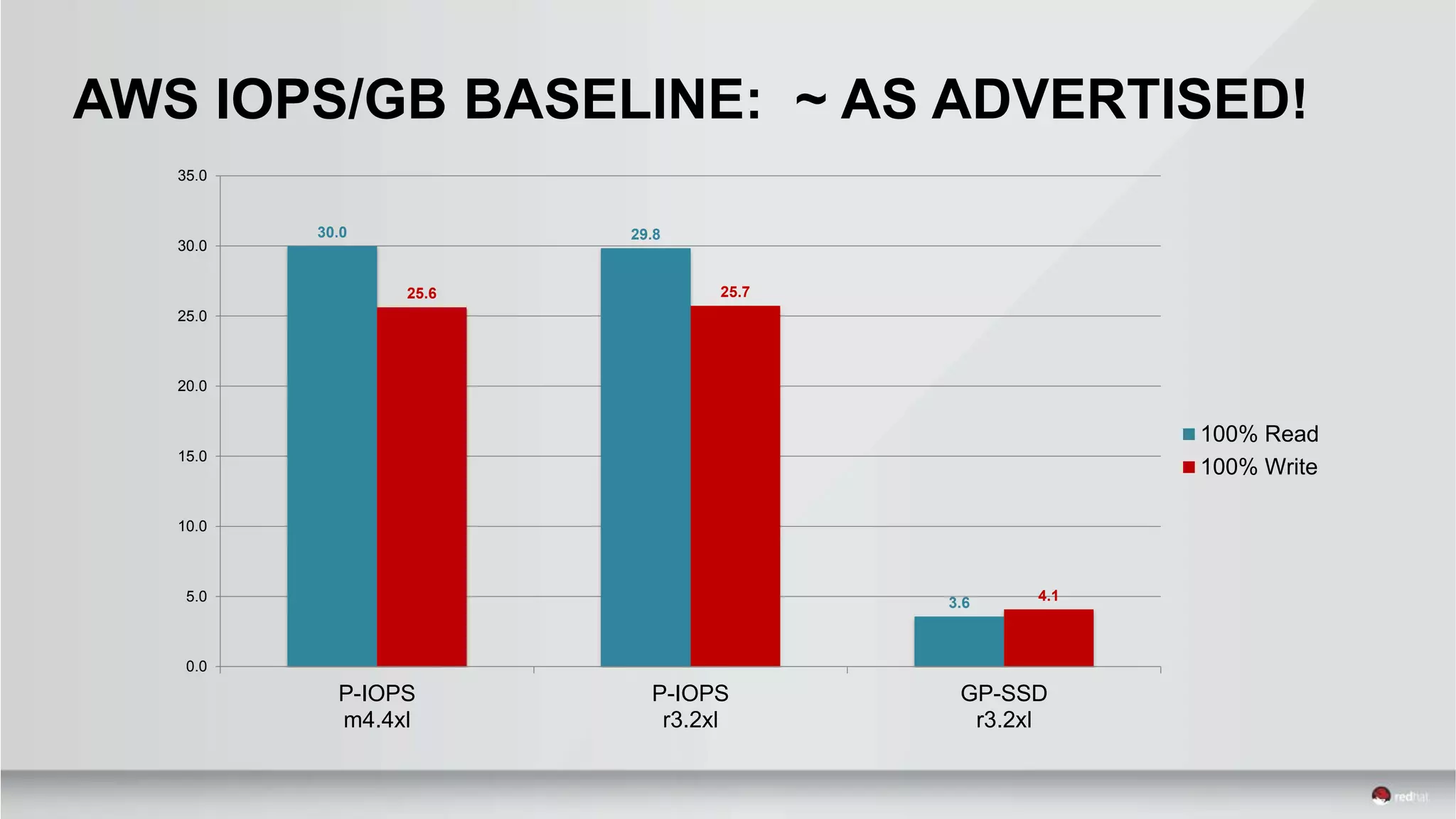

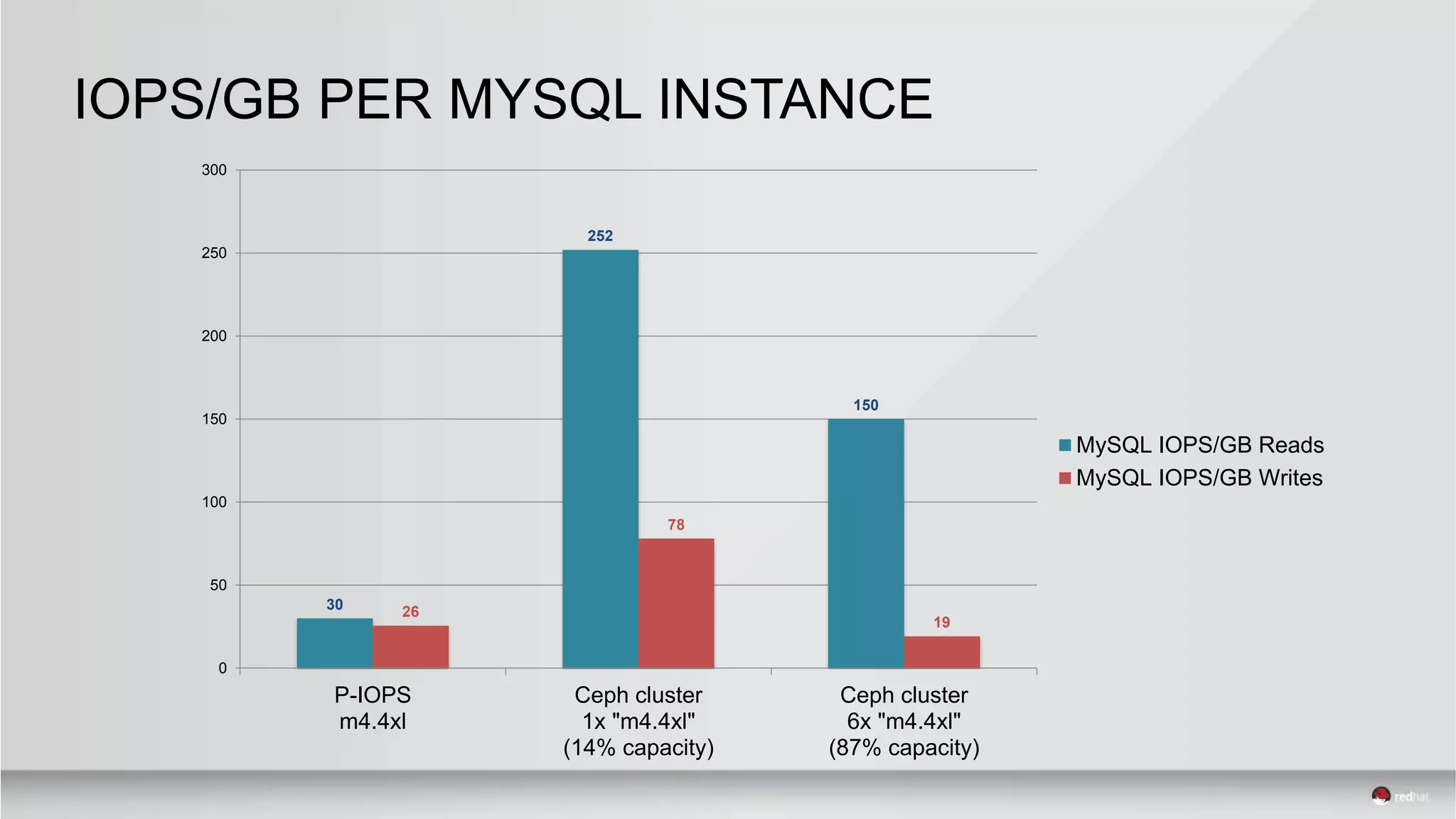

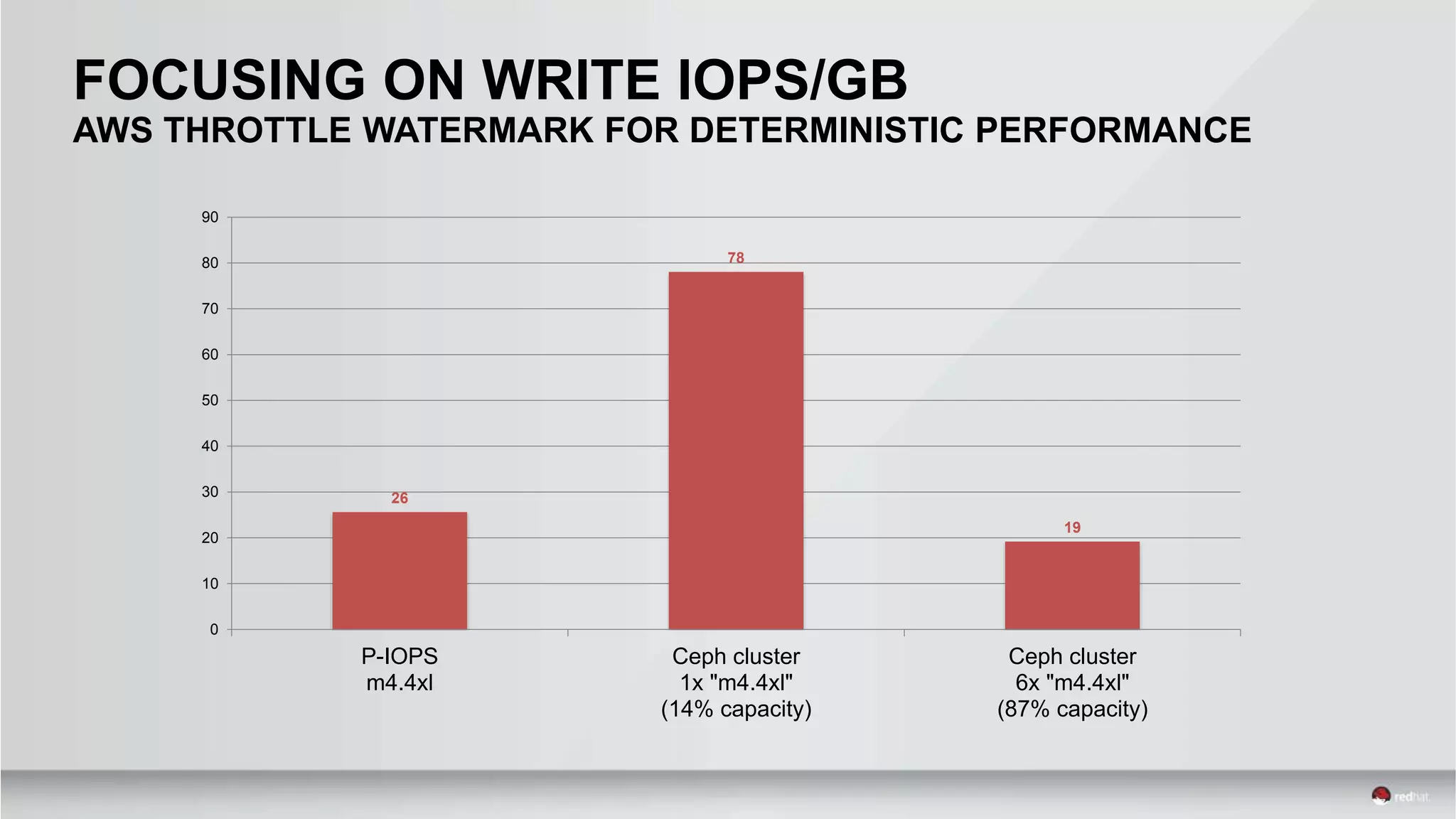

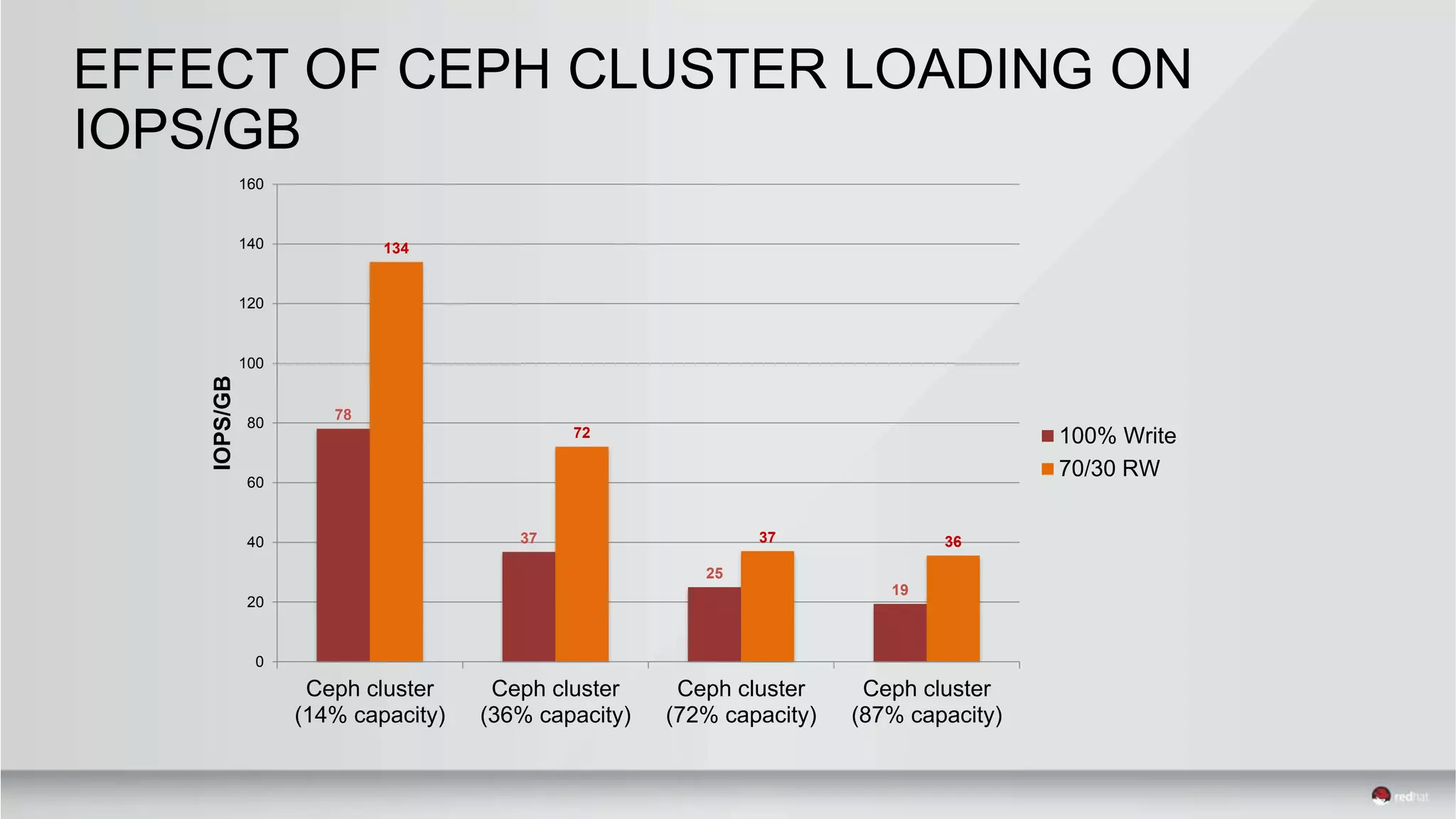

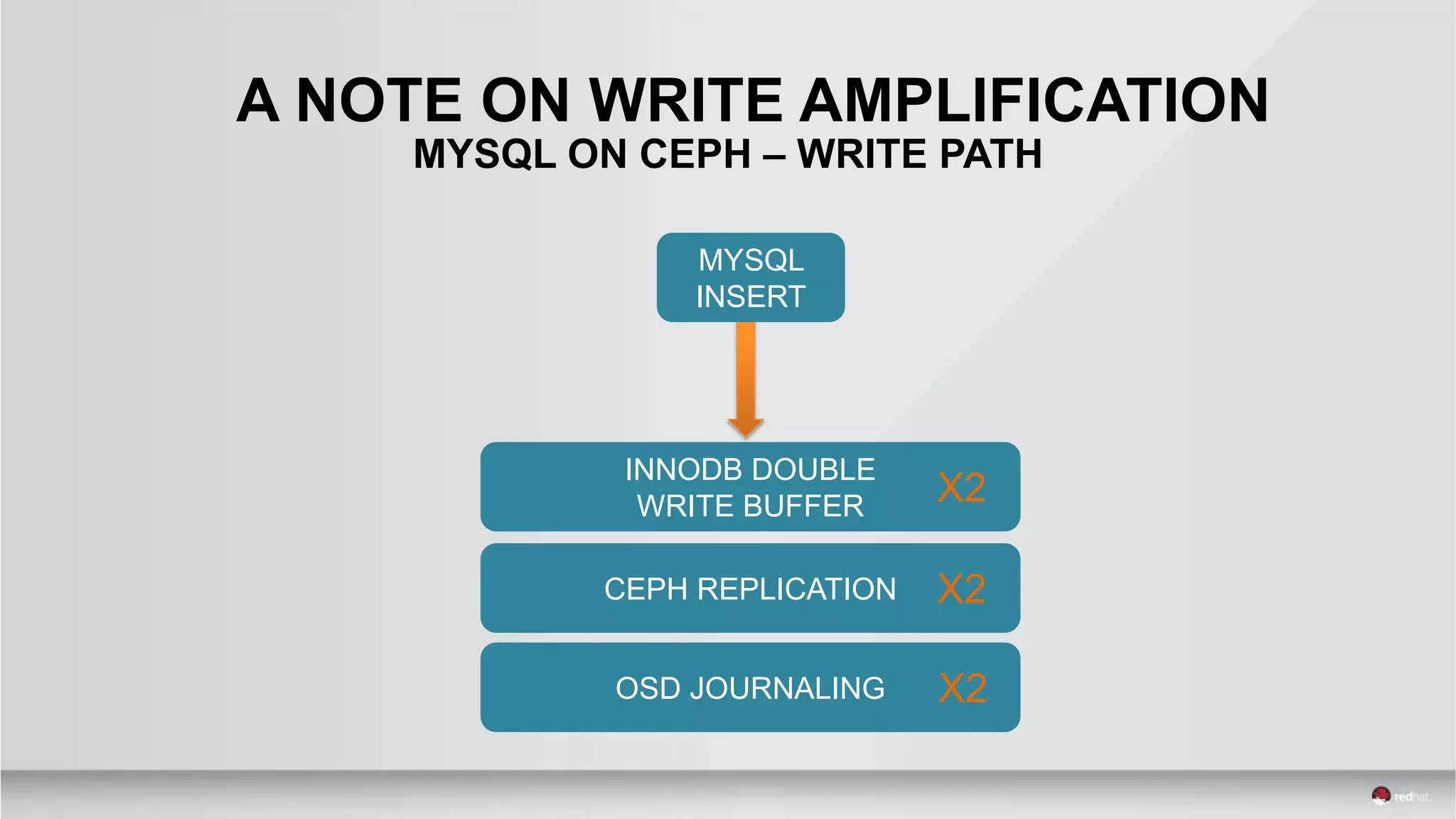

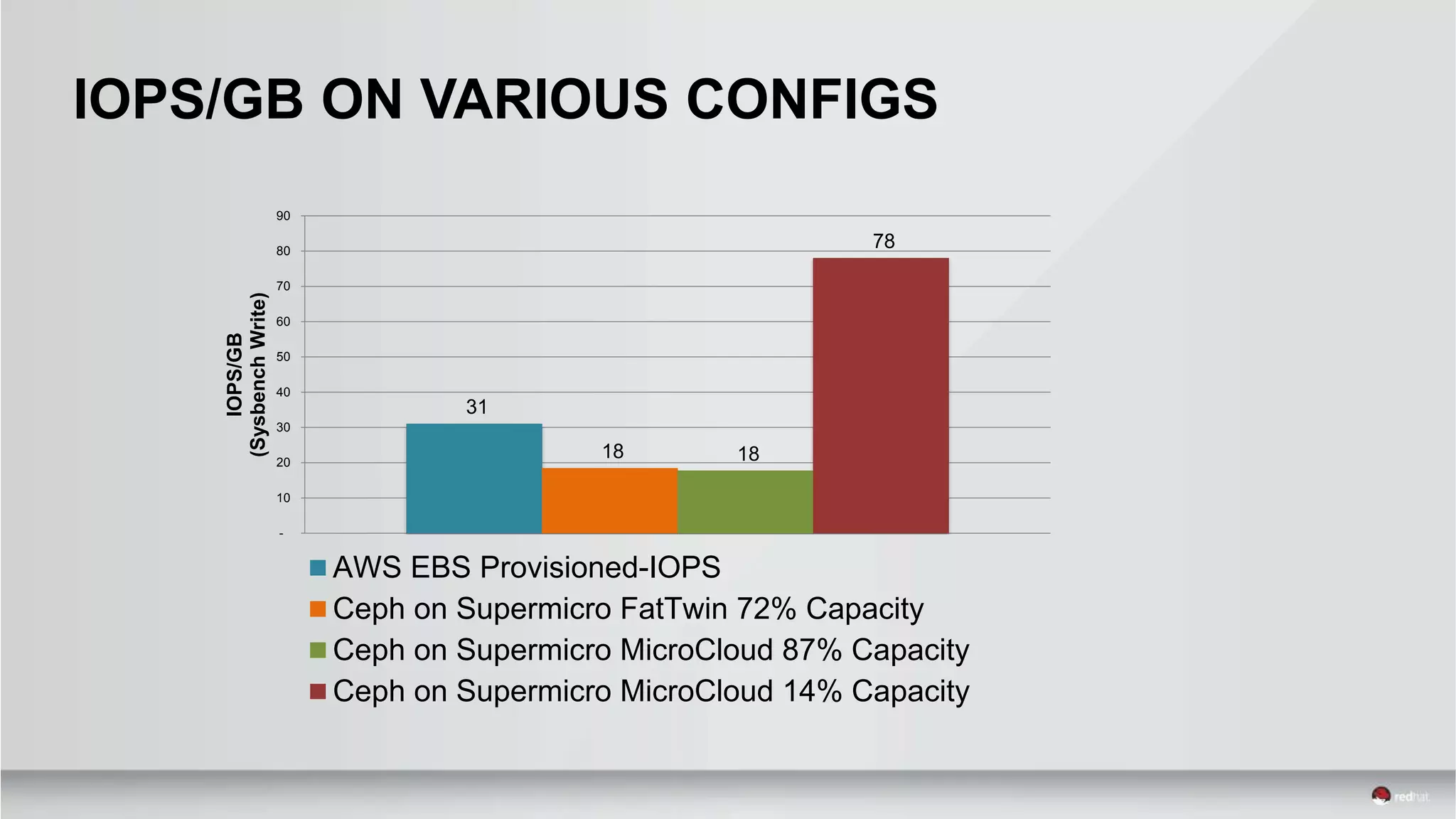

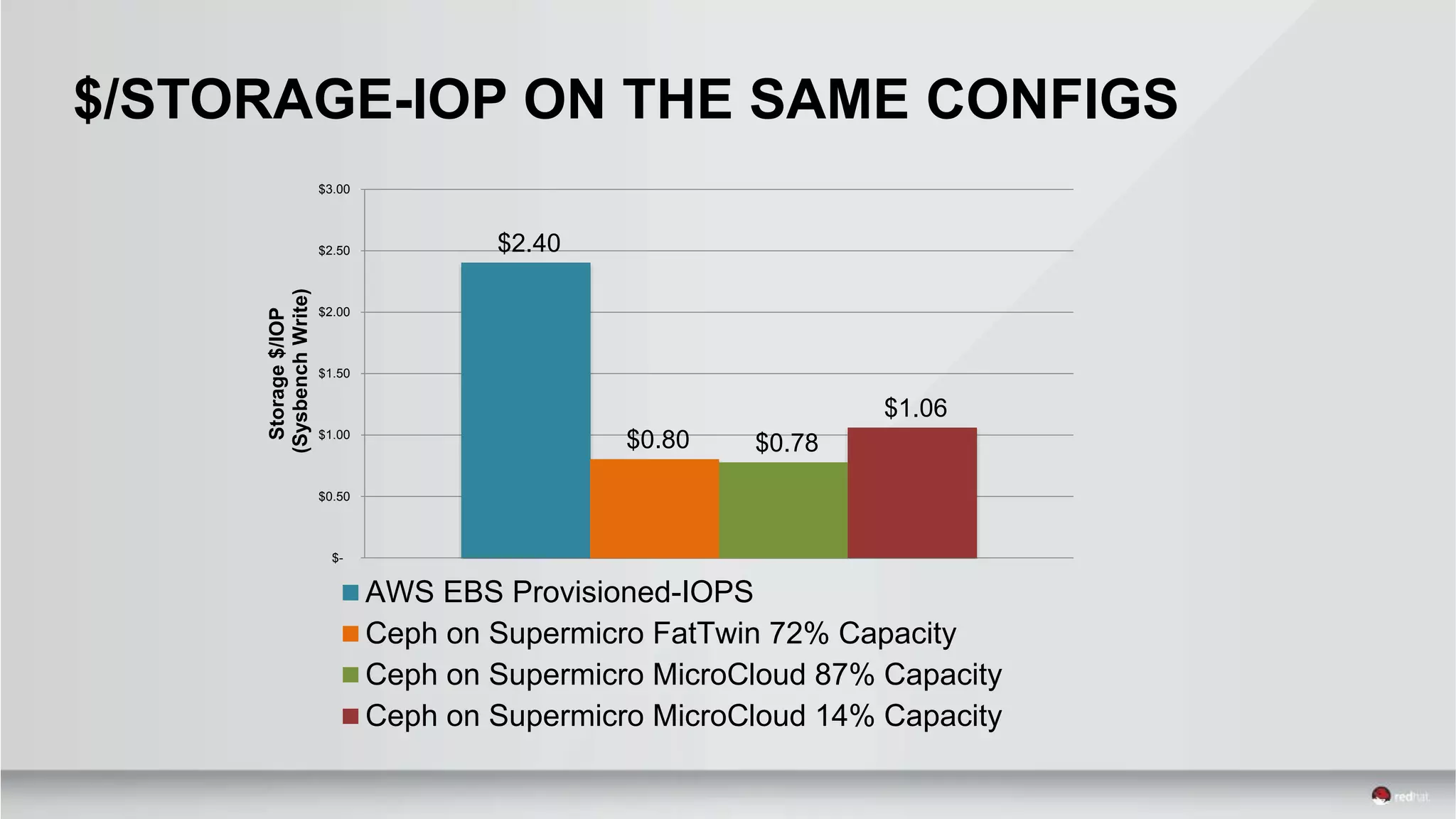

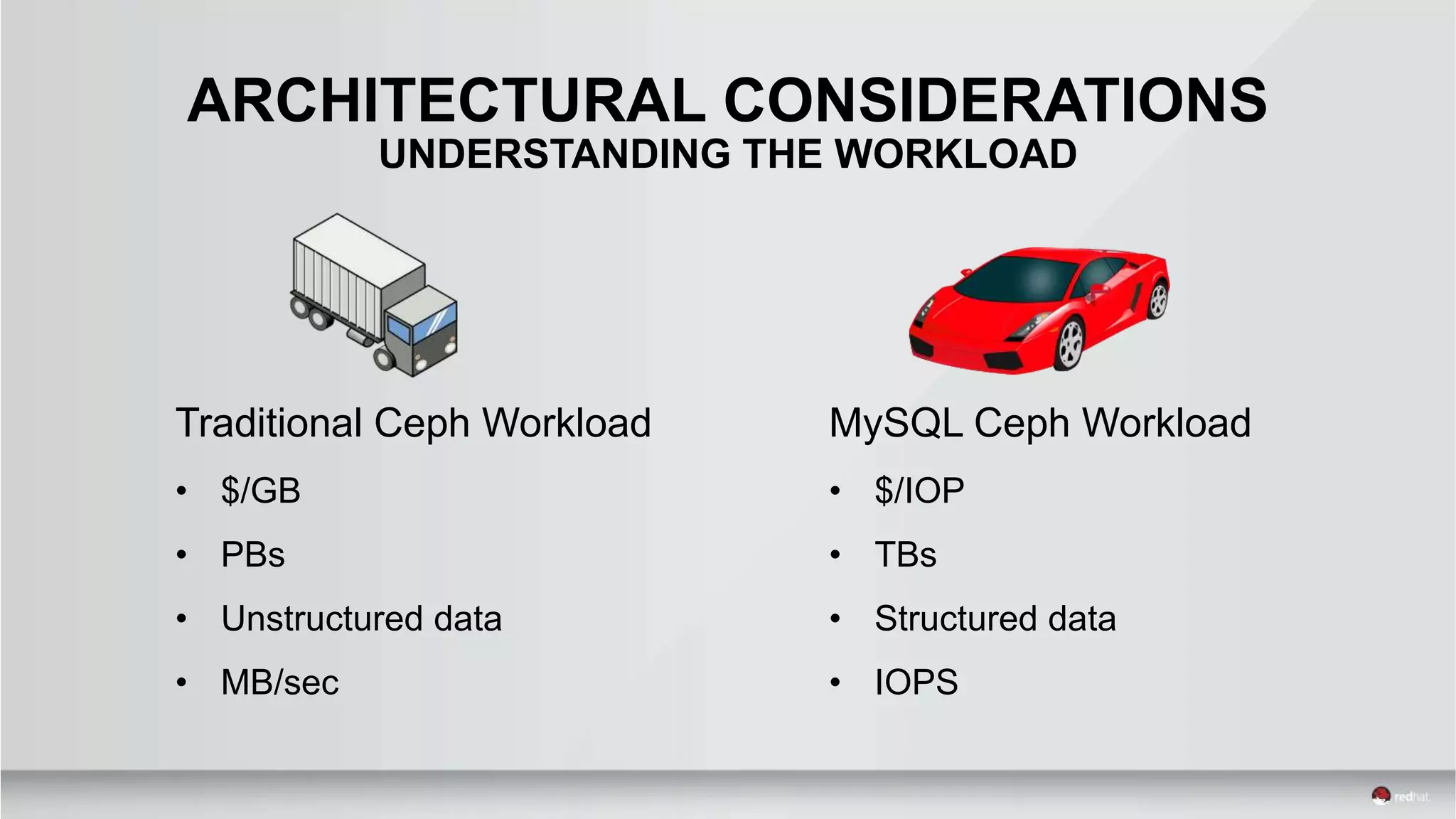

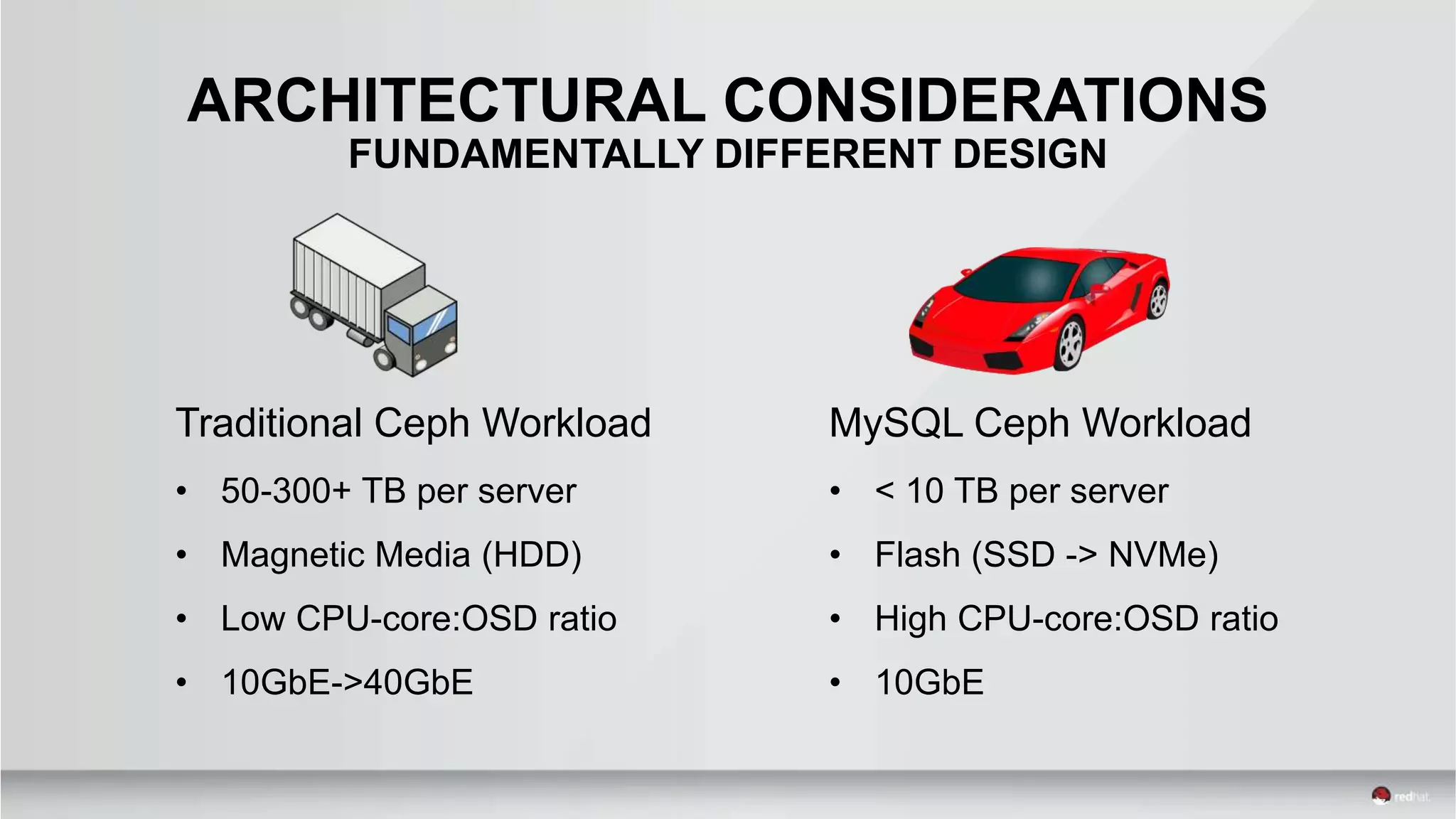

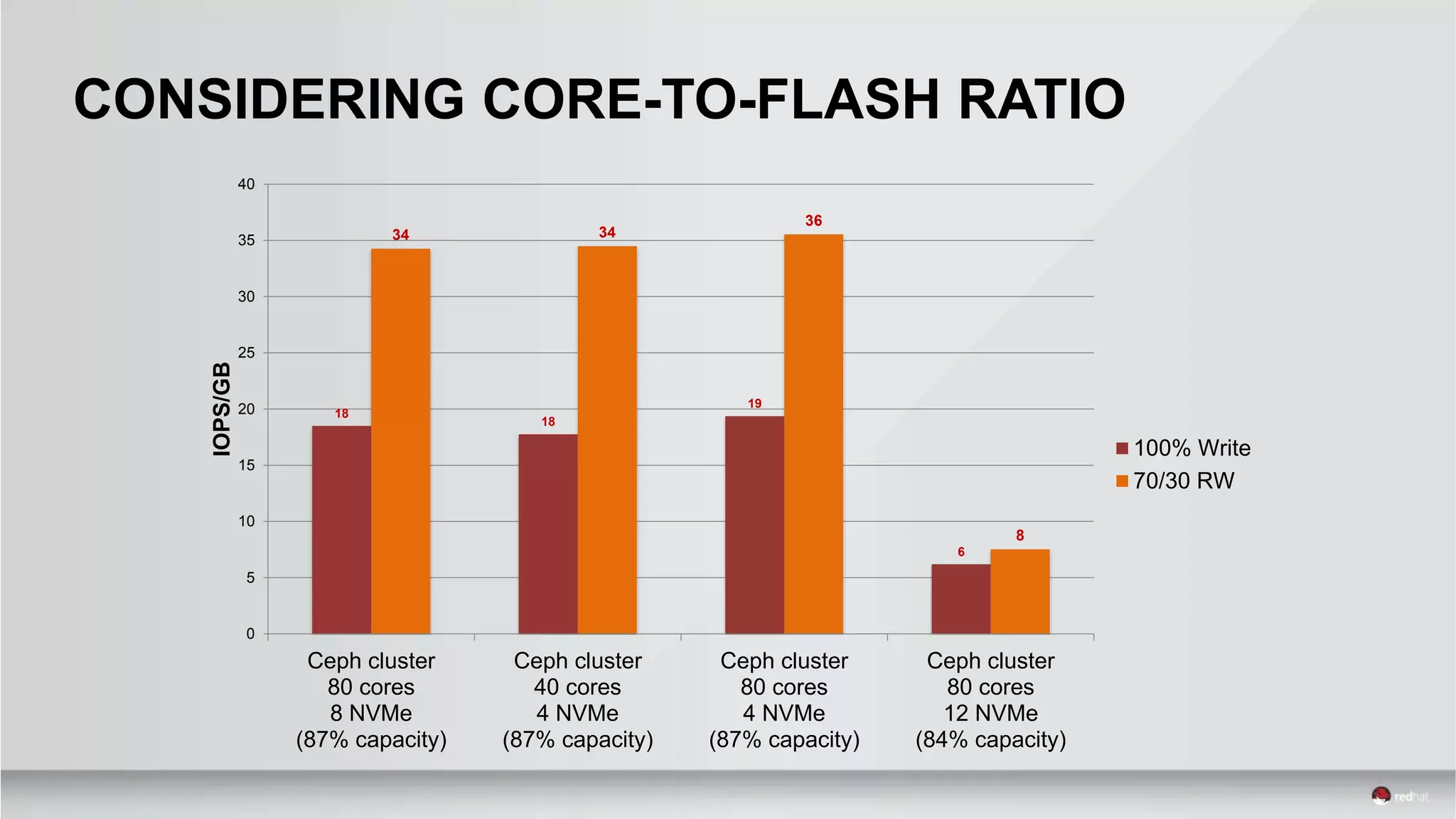

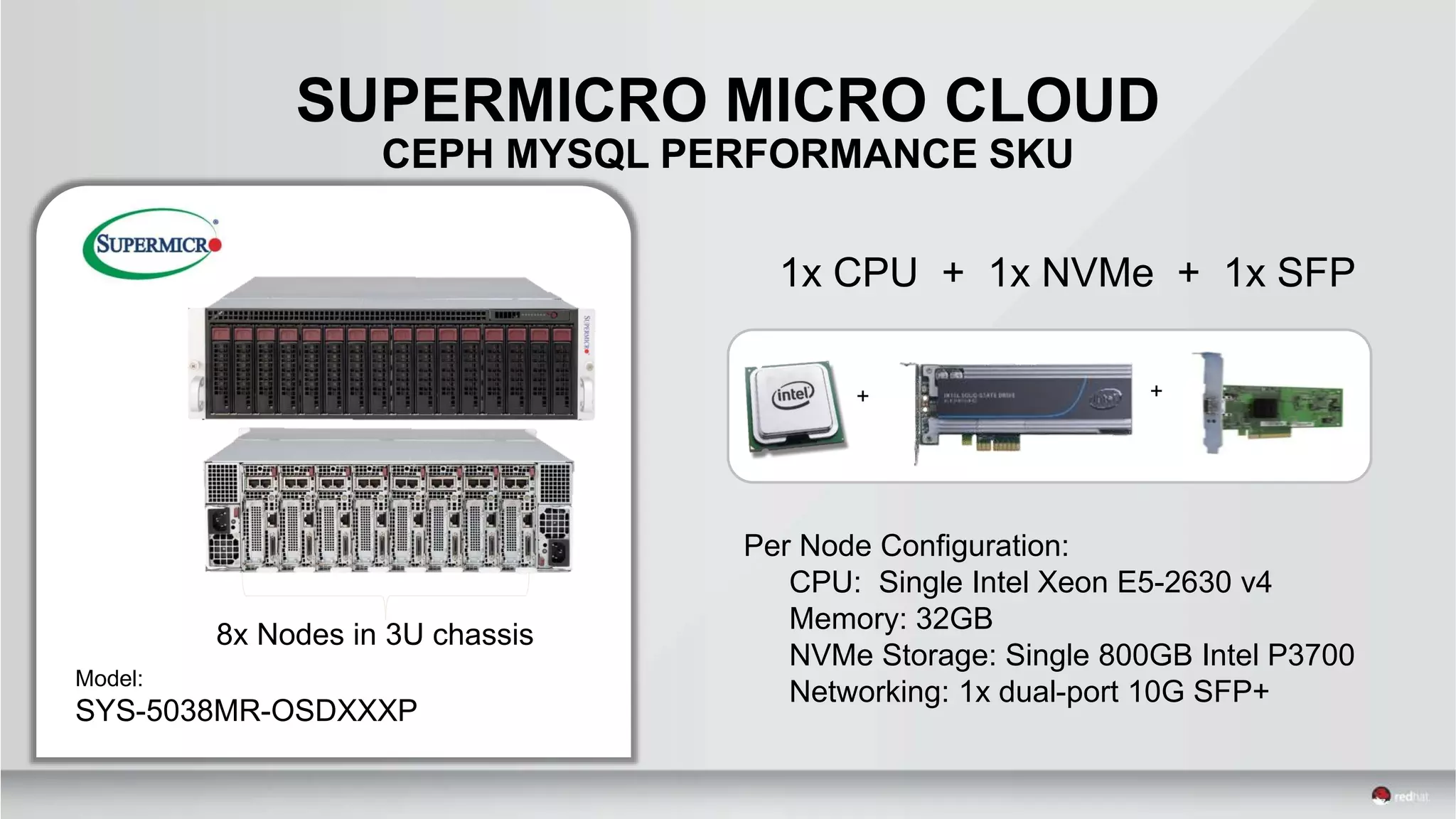

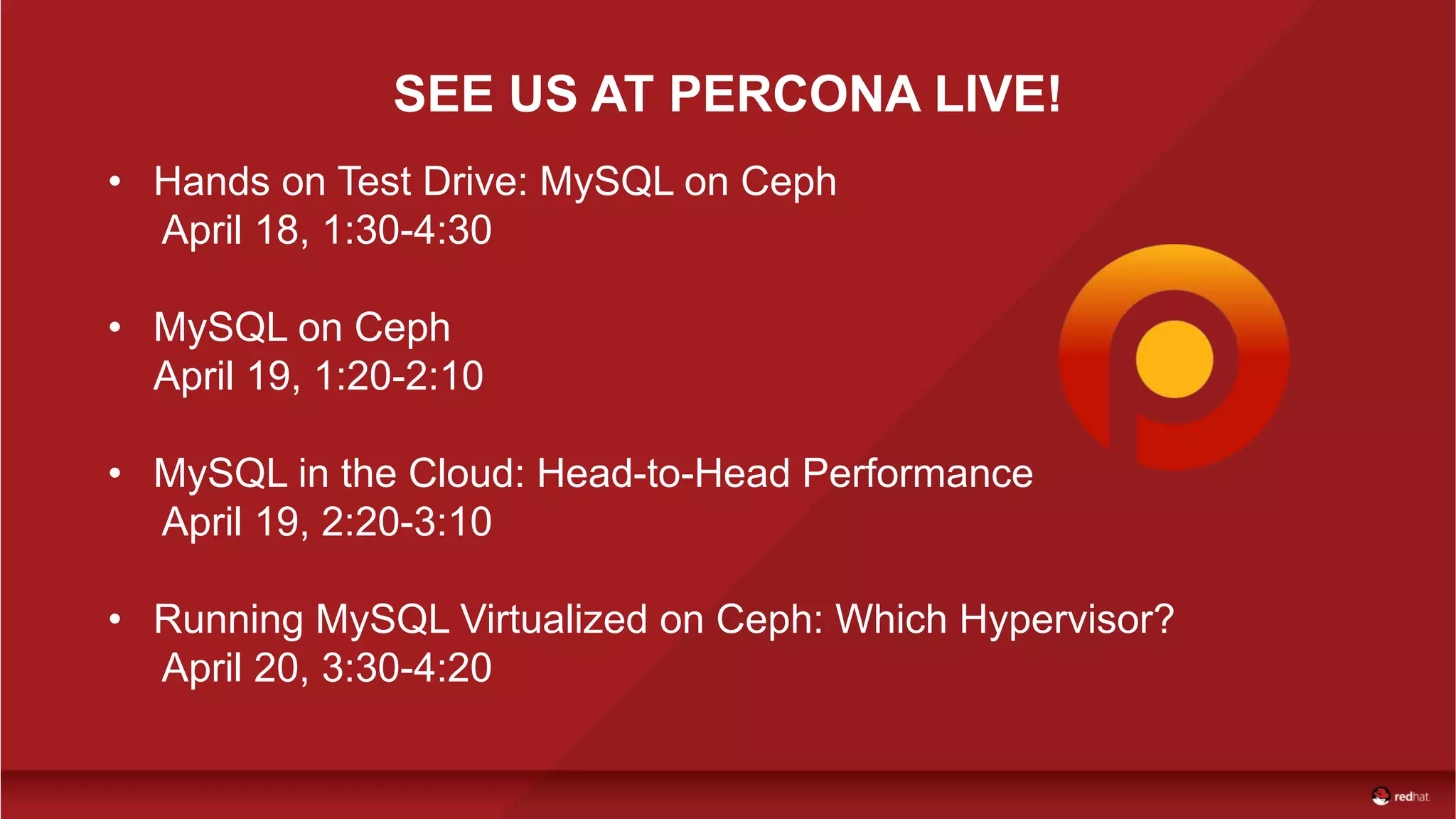

This document outlines an agenda for a conference on MySQL and Ceph storage solutions. The agenda includes sessions on MySQL performance on Ceph versus AWS, a head-to-head performance lab comparing the two platforms, and architectural considerations for optimizing MySQL on Ceph. Specific topics include MySQL and Ceph capabilities such as live migration and snapshots; keeping the developer experience consistent between a private Ceph deployment and the public cloud; sysbench results showing that Ceph can match or exceed AWS on price per IOPS; and how Ceph node configuration, such as CPU core count and flash storage, affects MySQL workload performance.
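
The price-per-IOPS comparison mentioned above can be illustrated with a minimal sketch. It assumes the metric is simply the cost of a configuration divided by the IOPS it sustains; the slides may define it differently. The function name and the figures in the usage lines are hypothetical placeholders, not results from the sysbench tests.

```python
def price_per_iops(monthly_cost_usd: float, sustained_iops: float) -> float:
    """Return cost per IOPS for one storage configuration.

    Assumption: the metric is monthly cost divided by sustained IOPS.
    The source does not spell out the exact definition.
    """
    if sustained_iops <= 0:
        raise ValueError("sustained_iops must be positive")
    return monthly_cost_usd / sustained_iops


# Hypothetical inputs for illustration only; these are NOT measured values.
config_a = price_per_iops(monthly_cost_usd=1000.0, sustained_iops=20000.0)
config_b = price_per_iops(monthly_cost_usd=1500.0, sustained_iops=20000.0)

# The configuration with the lower cost per IOPS is the cheaper one at equal load.
cheaper = "A" if config_a < config_b else "B"
print(f"A: {config_a:.4f} USD/IOPS, B: {config_b:.4f} USD/IOPS, cheaper: {cheaper}")
```

Normalizing by IOPS lets platforms with different raw throughput and pricing models be compared on one axis, which is presumably why the lab reports it alongside raw performance.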