This series is your gateway to understanding the WHY, HOW, and WHAT of agentic automation. Over six sessions, we will explore what agentic automation can do and give you the information and skills you need to succeed with it.

![9

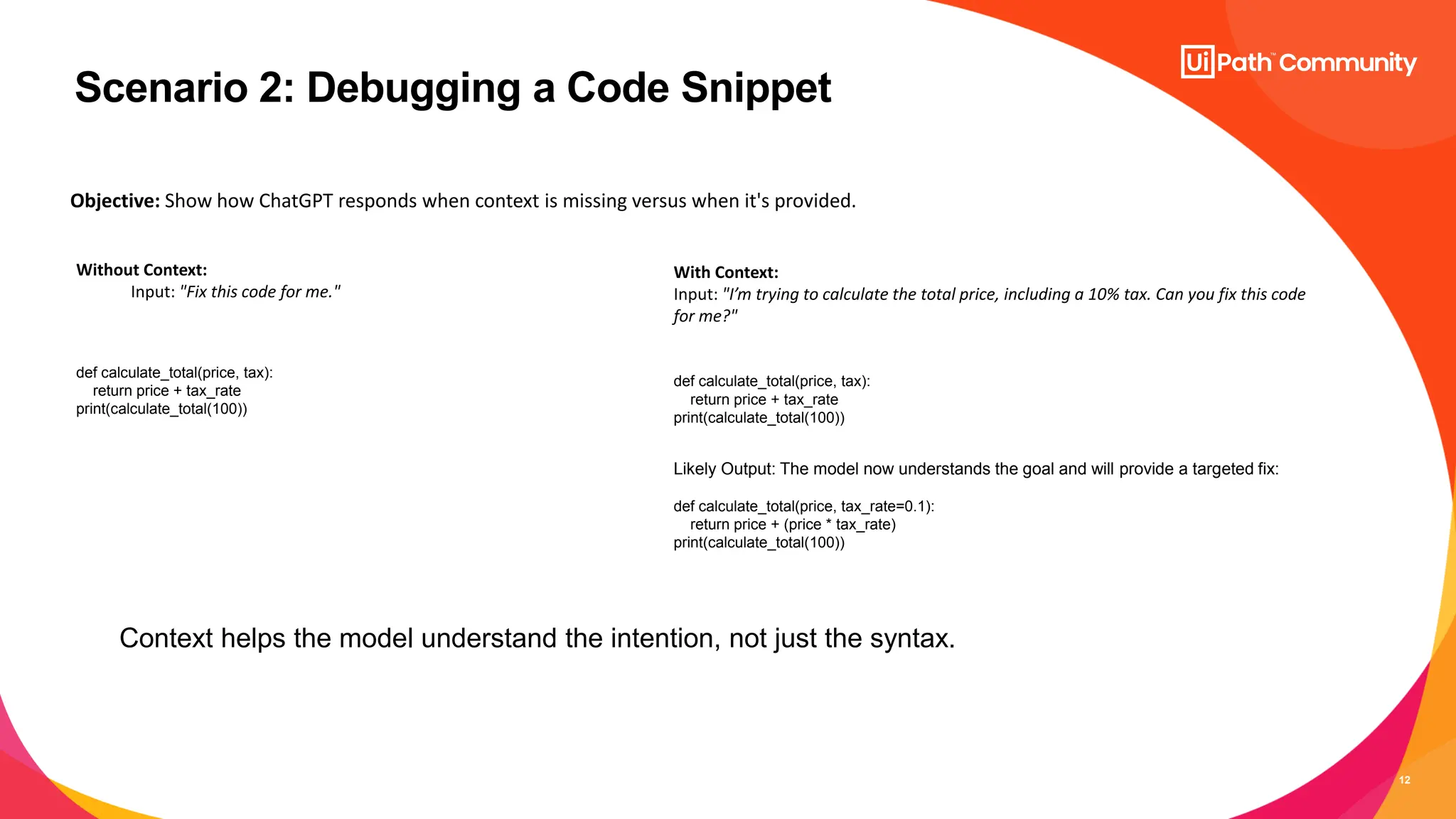

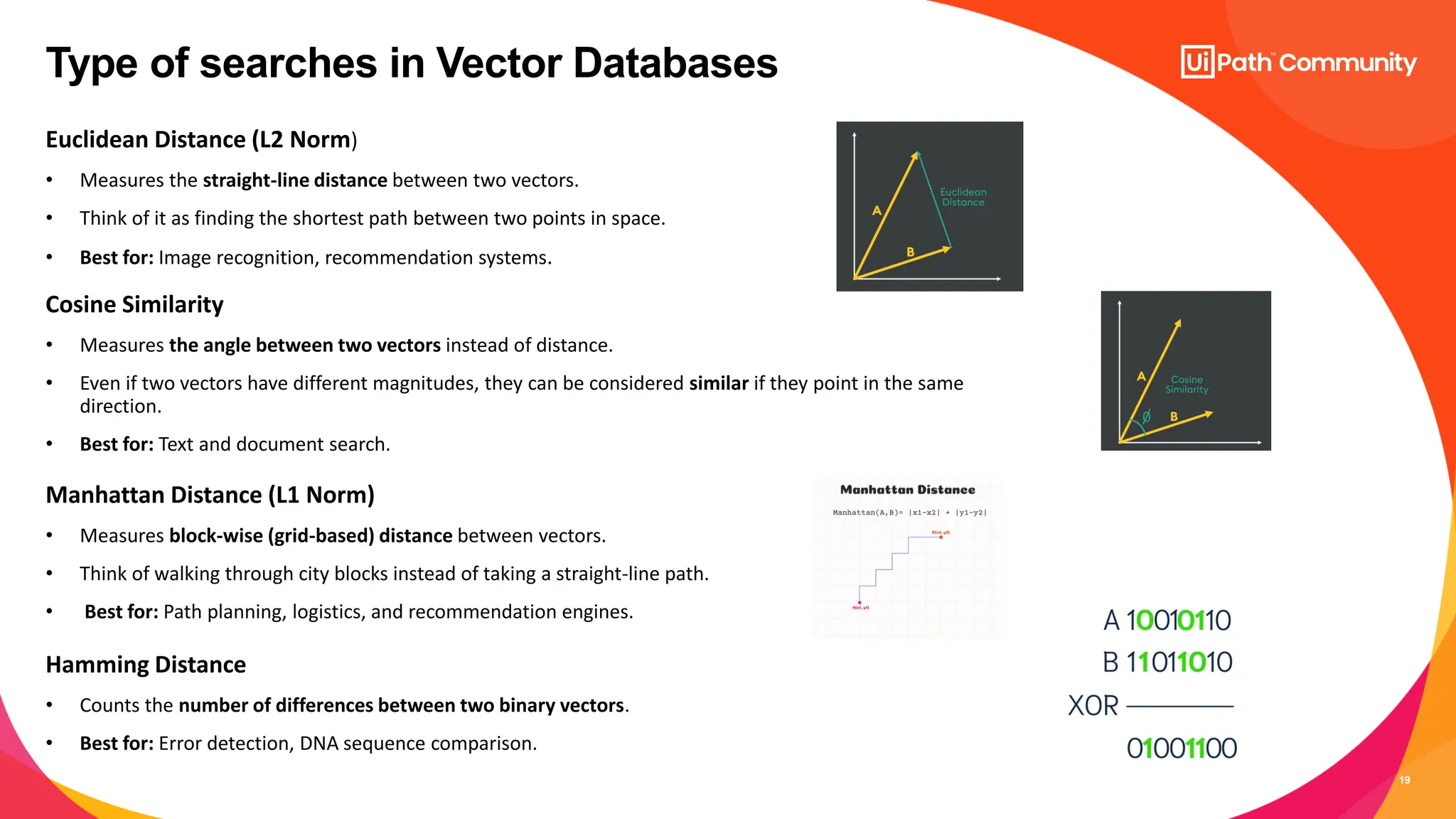

How LLMs "Understand" Context

Breaking Text into Tokens:

• LLMs divide the input text into small chunks called tokens. These tokens can be words, parts of words, or even characters.

• For example, the sentence "I love apples" might be split into tokens like ["I", "love", "apples"].

Context Window:

• LLMs process the tokens in a context window. This window represents how much recent information the model can "remember" while

generating a response.

• If the input is too long, only the most recent tokens might be considered.

Predicting the Next Word:

• Based on the tokens in the context window, the LLM predicts the next word or phrase that fits best.

• Example: If the input is "I love apples and bananas, but my favorite fruit is...", the model predicts something like "mangoes" because it

fits the context.](https://image.slidesharecdn.com/presentationsession2-contextgrounding-250327163041-b20bcece/75/Presentation-Session-2-Context-Grounding-pdf-9-2048.jpg)
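The three steps above (tokenize, keep only what fits in the context window, predict what comes next) can be sketched in a few lines of Python. This is a toy illustration under loud assumptions, not how a production LLM works: it splits on whitespace instead of using a learned subword tokenizer, and it stands in for the neural network with simple bigram counts over a tiny made-up corpus. The names `tokenize`, `truncate_to_window`, `train_bigrams`, `predict_next`, and `CORPUS` are all hypothetical.

```python
from collections import Counter, defaultdict


def tokenize(text):
    """Toy tokenizer: split on whitespace (real LLMs use learned subword tokenizers)."""
    return text.split()


def truncate_to_window(tokens, window_size):
    """Keep only the most recent `window_size` tokens, dropping older ones."""
    return tokens[-window_size:] if window_size > 0 else []


def train_bigrams(corpus):
    """Count which token follows which in the made-up training sentences."""
    follows = defaultdict(Counter)
    for sentence in corpus:
        tokens = tokenize(sentence)
        for current, nxt in zip(tokens, tokens[1:]):
            follows[current][nxt] += 1
    return follows


def predict_next(follows, context_tokens):
    """Predict the token most often seen after the last token in the context."""
    if not context_tokens:
        return None
    candidates = follows.get(context_tokens[-1])
    if not candidates:
        return None
    return candidates.most_common(1)[0][0]


# Hypothetical mini-corpus, invented for this illustration only.
CORPUS = [
    "my favorite fruit is mangoes",
    "my favorite fruit is mangoes",
    "my favorite color is blue",
]

tokens = tokenize("I love apples")
window = truncate_to_window(
    tokenize("I love apples and bananas but my favorite fruit is"), 4
)
model = train_bigrams(CORPUS)
prediction = predict_next(model, window)

print(tokens)      # ['I', 'love', 'apples']
print(window)      # ['my', 'favorite', 'fruit', 'is']
print(prediction)  # mangoes
```

With a window of 4, the earlier tokens ("I love apples and bananas but") are dropped before prediction, which mirrors the "only the most recent tokens" behavior described above. A real model conditions on the whole window rather than just the last token, which is why context changes its answers.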

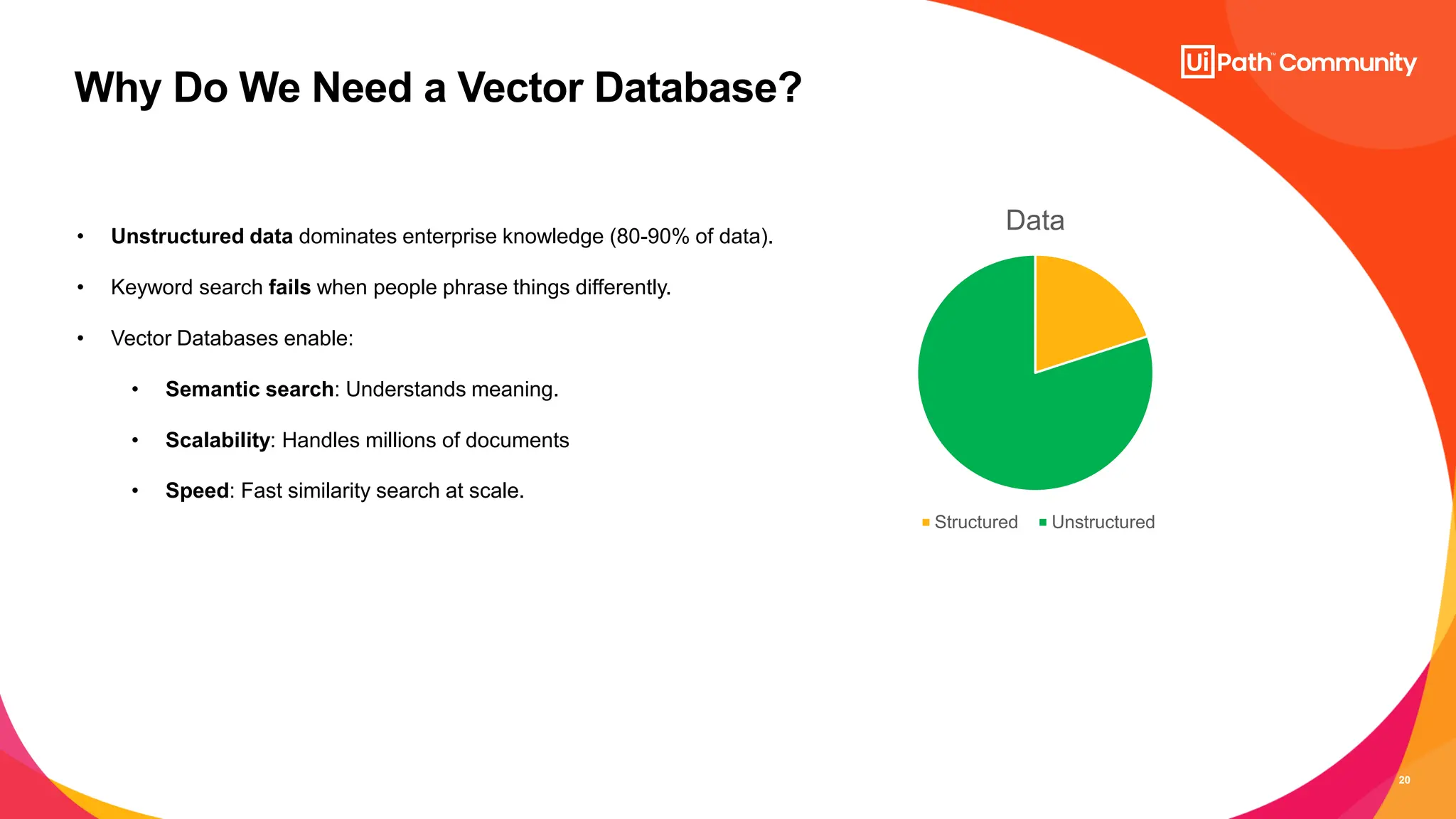

![11

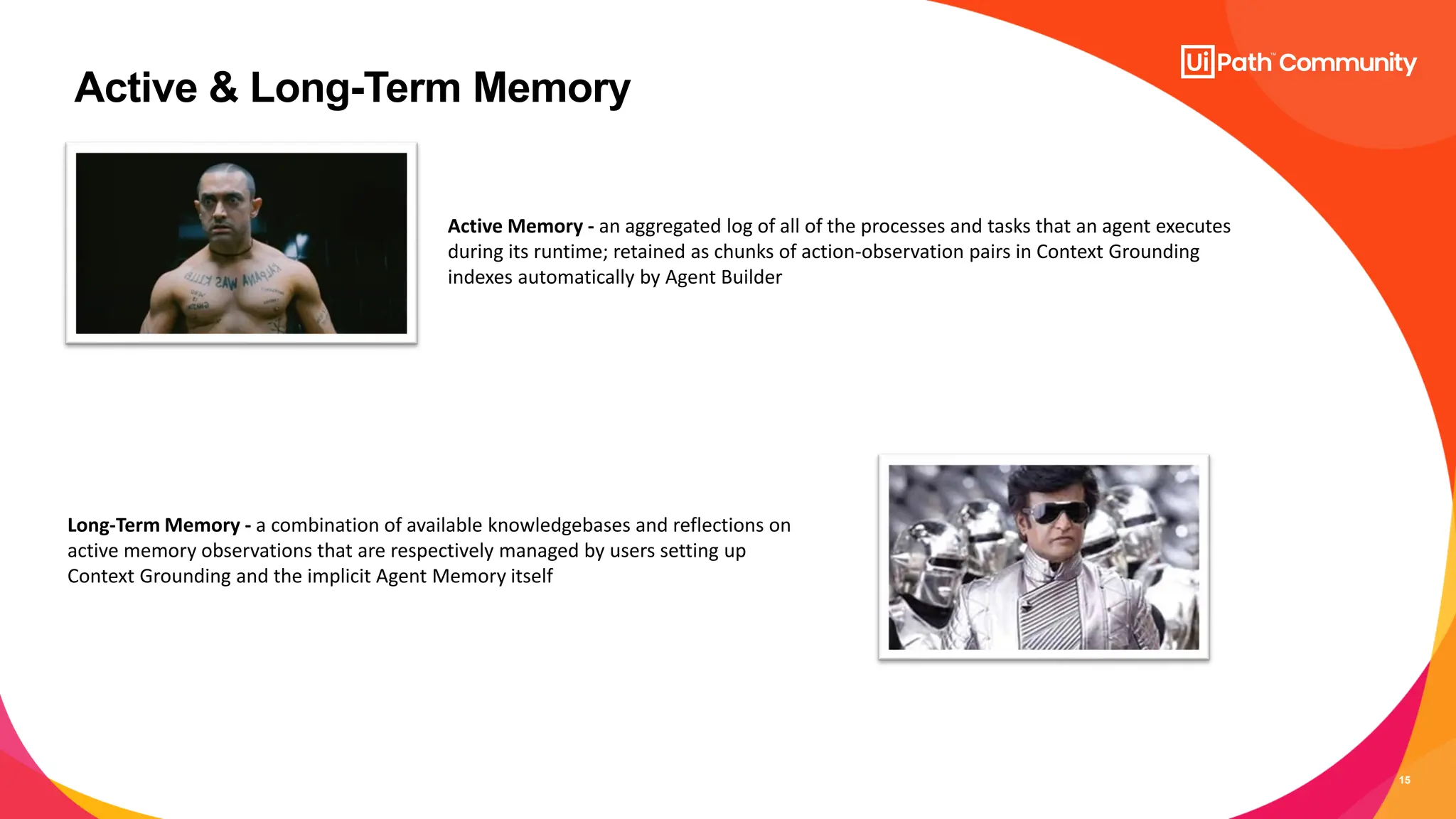

Scenario 1: Responding to a Helpdesk Query

Without Context: Prompt:

"How do I reset my password?"

Expected Output:

generates a generic response: "You can reset your password by

going to the login page and clicking on 'Forgot Password.' Follow

the instructions provided."

With Context:

Prompt:

"A corporate user is unable to log into their Active Directory account due to a forgotten

password. Provide a step-by-step guide for resetting it using the organization's self-service

password reset (SSPR) portal, ensuring security compliance and minimal downtime."

Expected Output:

Steps to Reset Active Directory Password via SSPR:

• Access the SSPR Portal: Open a browser and navigate to the organization's Self-Service

Password Reset Portal at [URL].

• Enter Credentials: Input your Active Directory username or corporate email address.

• Verify Identity: Complete the authentication process using Multi-Factor Authentication

(MFA) (e.g., OTP via SMS/email, security questions, or biometric verification).

• Set a New Password: Choose a compliant password following corporate security policies

(e.g., minimum 12 characters, uppercase, lowercase, numbers, special characters, and no

previous passwords).

• Confirm and Apply Changes: Click "Submit" to reset the password. If successful, you will

receive a confirmation message.

• Login & Sync: Log in using the new password on your workstation. If you use multiple

devices, ensure password synchronization by logging into VPN or a domain-joined system.

Troubleshooting:

If the reset fails, check network connectivity, MFA device availability, or contact IT Support at

[Helpdesk Contact].](https://image.slidesharecdn.com/presentationsession2-contextgrounding-250327163041-b20bcece/75/Presentation-Session-2-Context-Grounding-pdf-11-2048.jpg)
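The difference between the two prompts in this scenario can be made concrete with a small prompt-building helper. The Python sketch below is only an illustration under stated assumptions: `build_prompt` and the context labels (`User`, `Cause`, `Method`, `Constraints`) are hypothetical and not part of any product or library. It only assembles a string and does not call a model.

```python
def build_prompt(question, context=None):
    """Return the bare question, or the question preceded by labeled context lines."""
    if not context:
        return question
    # Each context entry becomes a "Label: value" line the model can ground its answer in.
    lines = [f"{label}: {value}" for label, value in context.items()]
    return "Context:\n" + "\n".join(lines) + "\n\nTask: " + question


# Without context: the model only sees the generic question.
generic_prompt = build_prompt("How do I reset my password?")

# With context: the same kind of request, enriched with the details from the scenario.
grounded_prompt = build_prompt(
    "Provide a step-by-step guide for resetting the password.",
    context={
        "User": "corporate user locked out of their Active Directory account",
        "Cause": "forgotten password",
        "Method": "the organization's self-service password reset (SSPR) portal",
        "Constraints": "ensure security compliance and minimal downtime",
    },
)

print(generic_prompt)
print("---")
print(grounded_prompt)
```

Keeping the context as labeled fields, rather than one free-form sentence, is a design choice made for this sketch: it makes it easy to see which details are present and to swap them per request.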