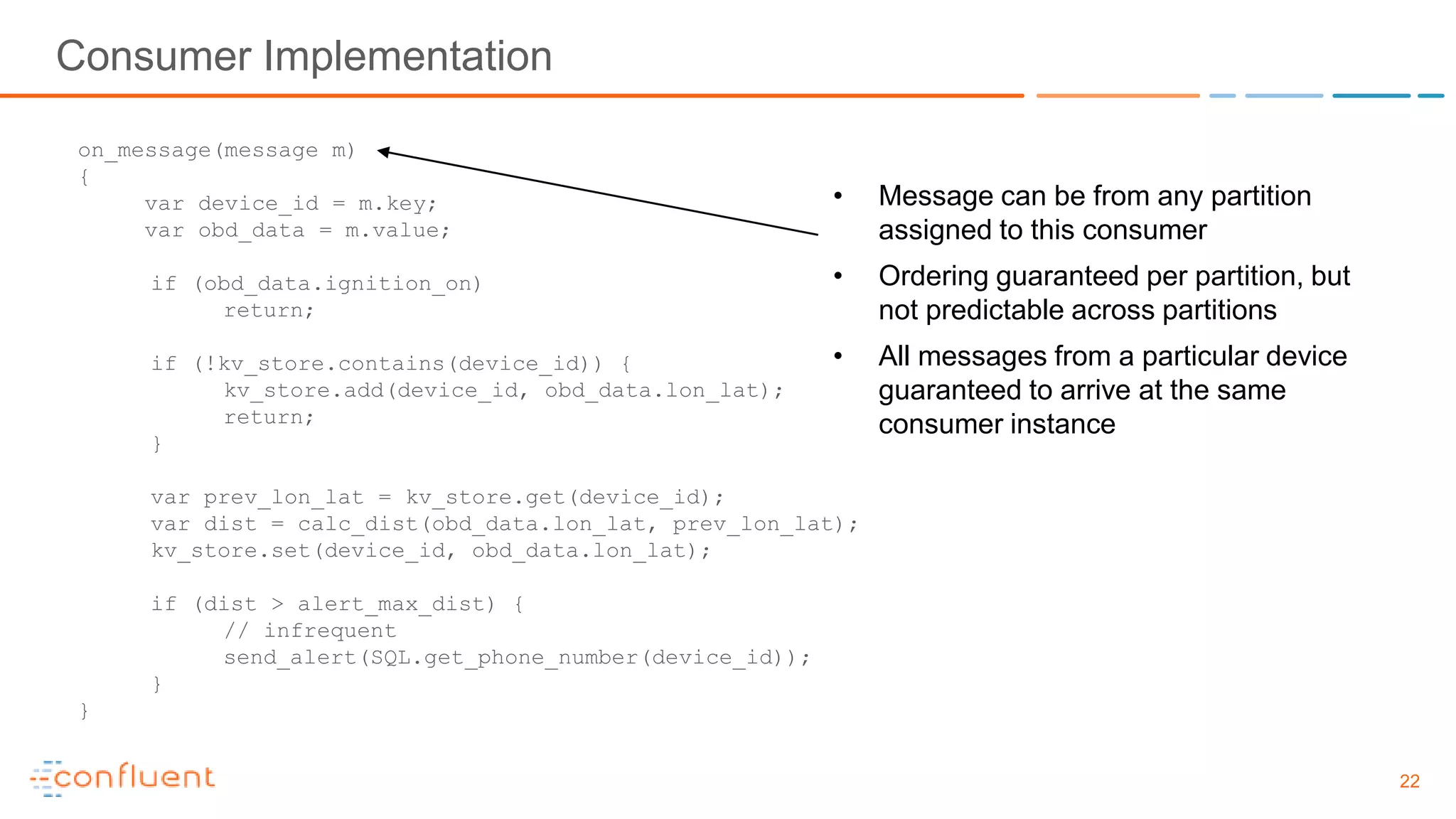

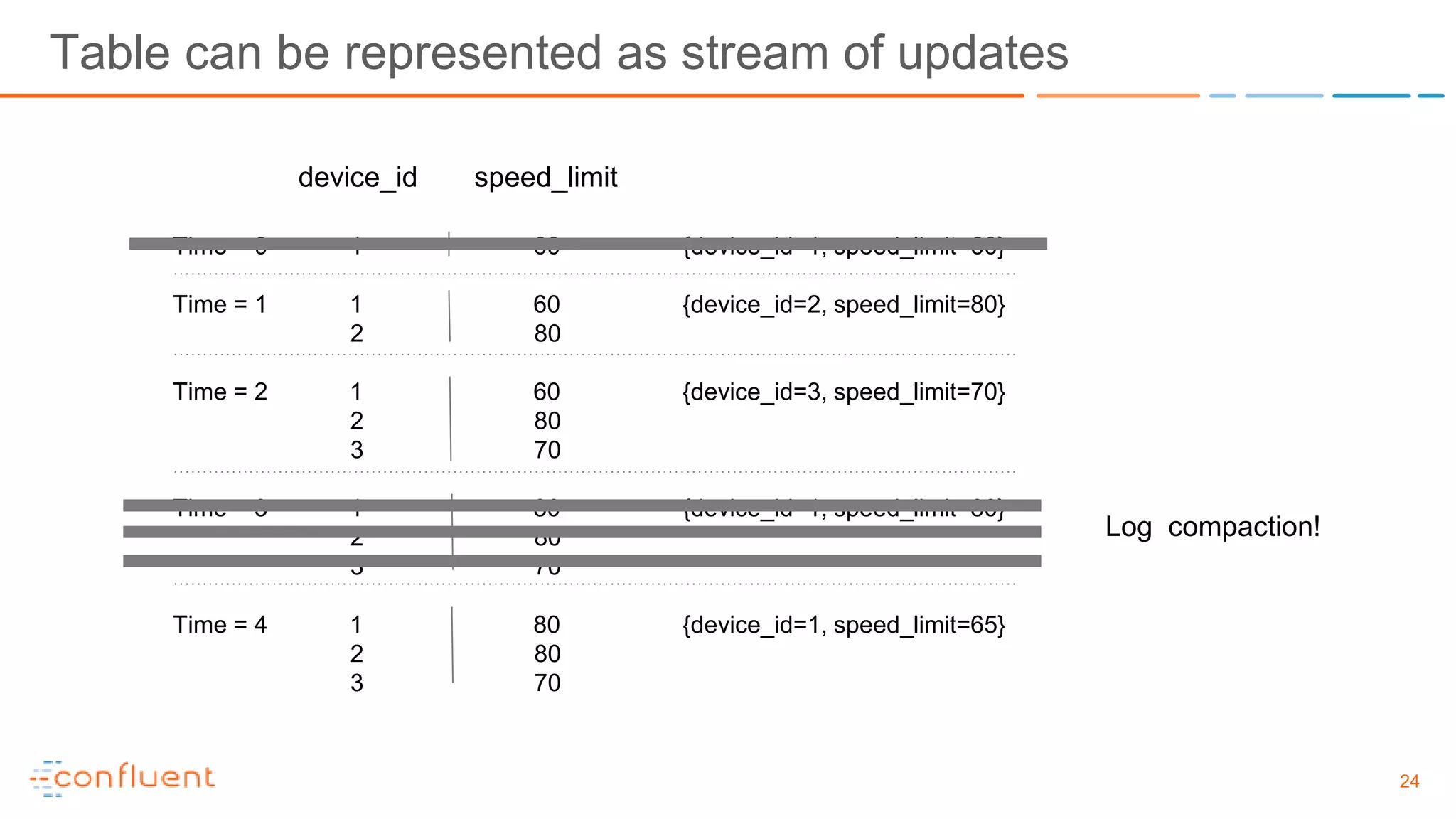

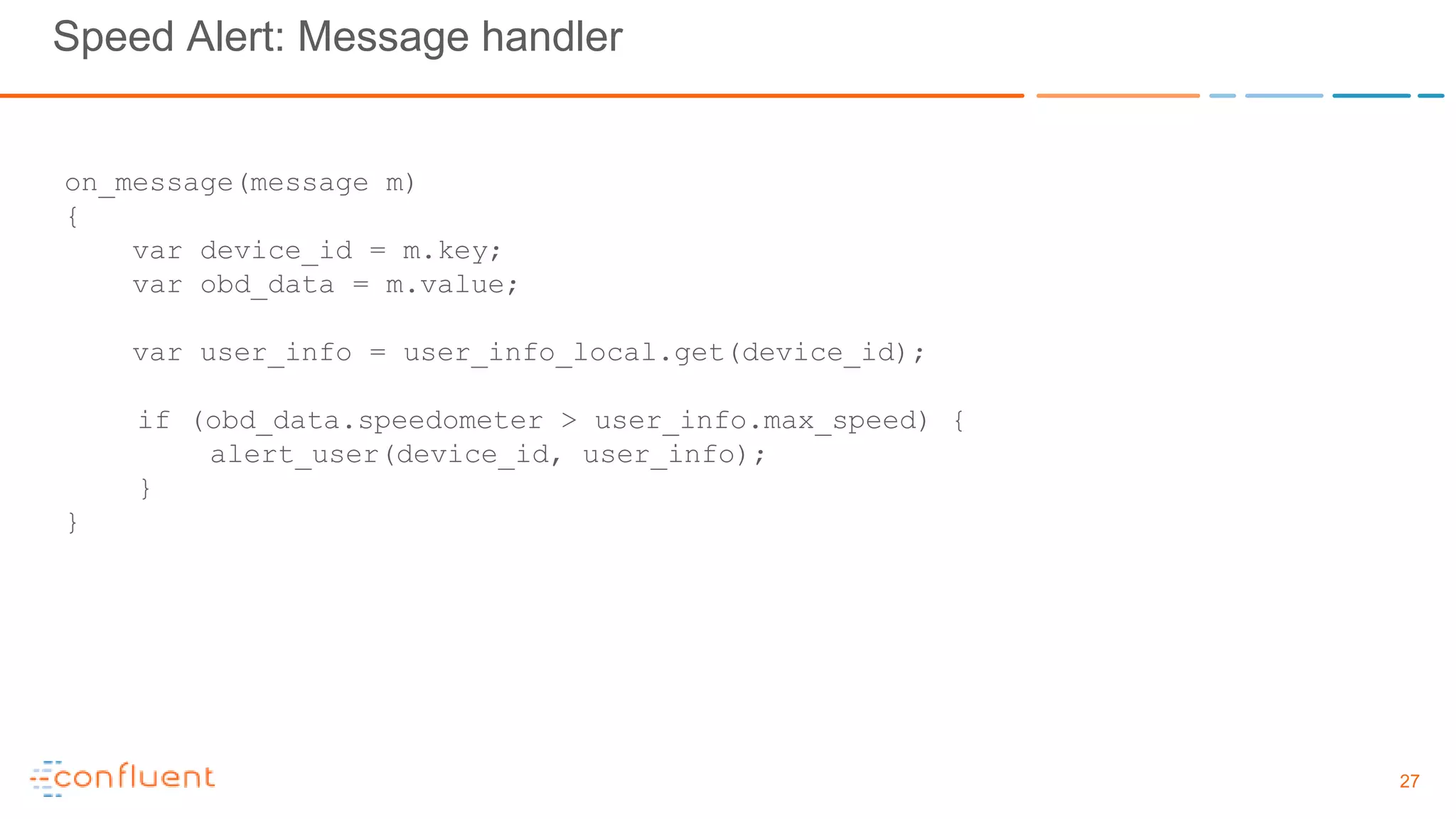

The presentation outlines a system for processing OBD-II data from millions of vehicles using MQTT and Apache Kafka, focusing on real-time data transport and event processing. It discusses the strengths of Kafka as a distributed streaming platform, including data durability, stream reprocessing, and absorbing surges in usage. It then walks through implementation scenarios, including speed alerts and location-based offers, that use Kafka to build a multi-stage data pipeline.

![6

• Simple API

• Hierarchical topics

• myhome/kitchen/door/front/battery/level

• wildcard subscription: myhome/+/door/+/battery/level (in MQTT, + matches exactly one topic level and # matches all remaining levels)

• 3 qualities of service (on both produce and consume)

• At most once (QoS 0)

• At least once (QoS 1)

• Exactly once (QoS 2) [not universally supported]

• Persistent consumer sessions

• Important for QoS 1, QoS 2

• Last will and testament

• Last known good value

• Authorization, SSL/TLS

MQTT Features](https://image.slidesharecdn.com/iotkafka2-170118202043/75/Processing-IoT-Data-with-Apache-Kafka-6-2048.jpg)
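To make the hierarchical topics and wildcard subscriptions concrete, here is a minimal sketch of how a broker might match a topic name against a subscription filter. It uses the standard MQTT wildcards (`+` for one level, `#` for all remaining levels); the function name `topic_matches` is made up for illustration, and the sketch ignores special cases such as `$`-prefixed system topics.

```python
def topic_matches(topic_filter: str, topic: str) -> bool:
    """Return True if an MQTT topic name matches a subscription filter."""
    filter_levels = topic_filter.split("/")
    topic_levels = topic.split("/")
    for i, level in enumerate(filter_levels):
        if level == "#":
            # Multi-level wildcard: only valid as the last level,
            # and it matches everything from here on.
            return i == len(filter_levels) - 1
        if i >= len(topic_levels):
            # The filter is longer than the topic.
            return False
        if level != "+" and level != topic_levels[i]:
            # A literal level that does not match.
            return False
    # Without a trailing '#', both must have the same number of levels.
    return len(filter_levels) == len(topic_levels)


print(topic_matches("myhome/+/door/+/battery/level",
                    "myhome/kitchen/door/front/battery/level"))
```

A subscriber using the filter above would receive battery levels for every door in every room, without having to know the room or door names in advance.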

![7

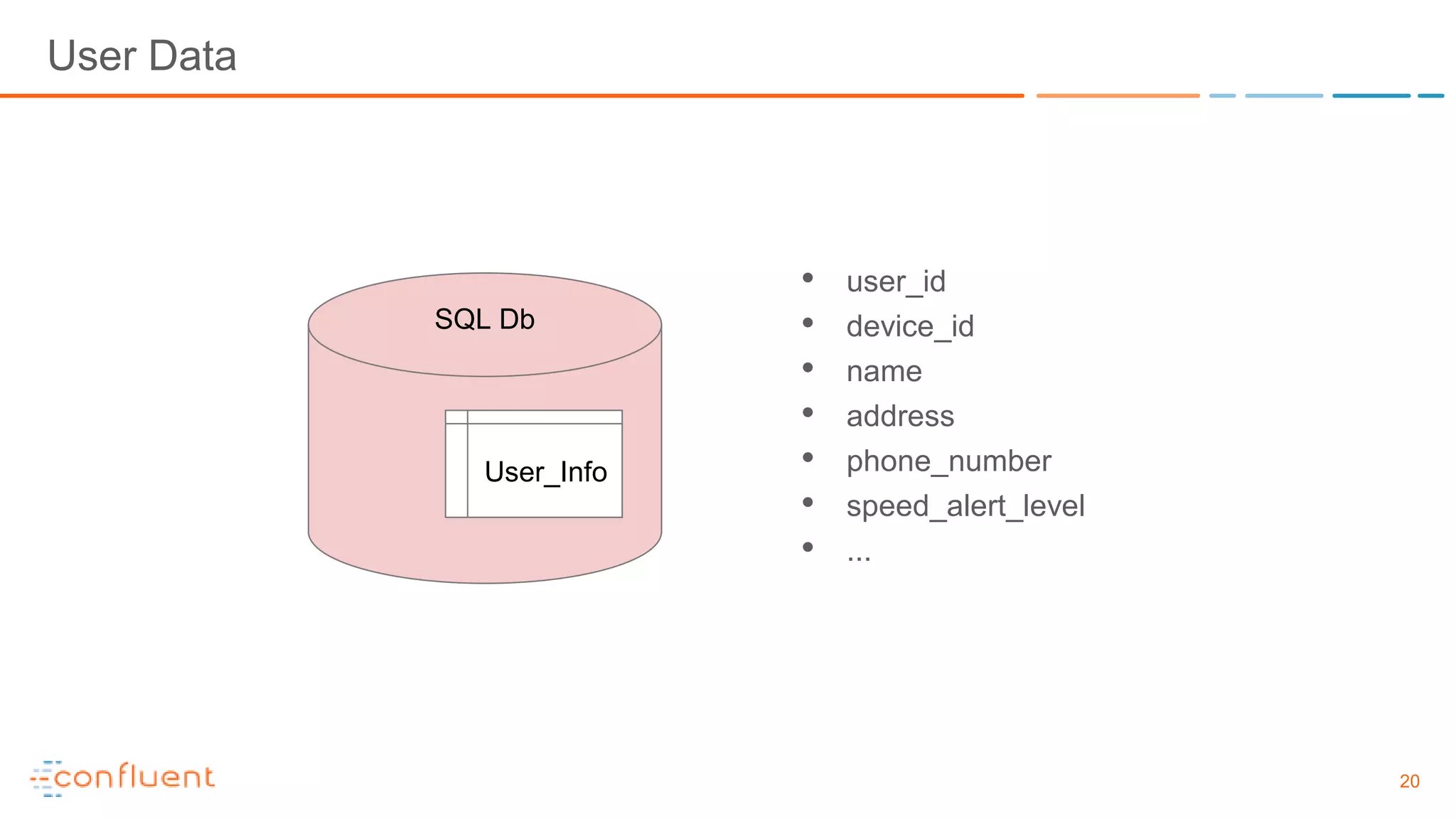

• Device Id

• GPS Location [lon, lat]

• Ignition on / off

• Speedometer reading

• Timestamp

• …plus a lot more

Assume: data sent via 3G wireless connection at ~30 second interval

OBD-II Data](https://image.slidesharecdn.com/iotkafka2-170118202043/75/Processing-IoT-Data-with-Apache-Kafka-7-2048.jpg)
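The fields listed on the slide could be modeled roughly as follows. This is only a sketch: the field names, the JSON encoding, the epoch-seconds timestamp, and the speed unit are all assumptions, since the slides do not specify a wire format.

```python
import json
from dataclasses import dataclass, asdict


@dataclass
class ObdRecord:
    device_id: str
    lon: float          # GPS longitude
    lat: float          # GPS latitude
    ignition_on: bool
    speed: float        # speedometer reading; unit not stated on the slide
    timestamp: int      # assumed: seconds since the Unix epoch


def encode(record: ObdRecord) -> bytes:
    """Serialize a record to a JSON payload (assumed encoding)."""
    return json.dumps(asdict(record)).encode("utf-8")


def decode(payload: bytes) -> ObdRecord:
    """Parse a JSON payload back into a record."""
    return ObdRecord(**json.loads(payload.decode("utf-8")))


sample = ObdRecord("device-1", -122.41, 37.77, True, 62.0, 1484770843)
print(decode(encode(sample)) == sample)
```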

![8

Deficiencies:

• Single MQTT server can handle maybe ~100K connections

• Can’t handle usage surges (no buffering)

• No storage of events or reprocess capability

MQTT Server 1

Processor 1 Processor 2 ...

Ingest Architecture V1

topic: [deviceid]/obd](https://image.slidesharecdn.com/iotkafka2-170118202043/75/Processing-IoT-Data-with-Apache-Kafka-8-2048.jpg)
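A quick back-of-the-envelope calculation shows why a single server falls short. The sketch below uses the slide figures (about 100K connections per server, one report every ~30 seconds); the fleet size of 3 million vehicles is an assumed example value.

```python
import math

CONNECTIONS_PER_SERVER = 100_000   # rough per-server limit from the slide
REPORT_INTERVAL_S = 30             # reporting interval from the OBD-II slide


def servers_needed(vehicles: int) -> int:
    """Minimum number of MQTT servers to hold one connection per vehicle."""
    return math.ceil(vehicles / CONNECTIONS_PER_SERVER)


def messages_per_second(vehicles: int) -> float:
    """Average ingest rate if every vehicle reports once per interval."""
    return vehicles / REPORT_INTERVAL_S


fleet = 3_000_000  # assumed fleet size, for illustration only
print(servers_needed(fleet), messages_per_second(fleet))
```

These are averages; the lack of buffering matters most when many vehicles report at once and the instantaneous rate exceeds the mean.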

![9

MQTT Server Coordinator

MQTT Server 1

MQTT Server 2

MQTT Server 3

MQTT Server 4

topic: [deviceid]/obd

http / REST

...

• Easily Shardable

• Treat MQTT server as commodity service

Ingest Architecture V2](https://image.slidesharecdn.com/iotkafka2-170118202043/75/Processing-IoT-Data-with-Apache-Kafka-9-2048.jpg)
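One way a coordinator could shard devices across servers is to hash the device id. The slides do not describe the coordinator's actual logic, so the function name, server names, and hashing scheme below are assumptions.

```python
import hashlib
from typing import Sequence


def assign_server(device_id: str, servers: Sequence[str]) -> str:
    """Pick an MQTT server for a device using a stable hash of its id."""
    digest = hashlib.sha256(device_id.encode("utf-8")).digest()
    # Use the first 8 bytes of the digest as an unsigned integer.
    index = int.from_bytes(digest[:8], "big") % len(servers)
    return servers[index]


# Hypothetical server names, for illustration only.
servers = ["mqtt-1", "mqtt-2", "mqtt-3", "mqtt-4"]
print(assign_server("device-42", servers))
```

Plain modulo hashing reassigns most devices whenever the server count changes; a consistent-hashing ring or a lookup table would reduce that churn. Each device would ask the coordinator over HTTP/REST for its server and then connect to it over MQTT.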

![10

MQTT Server Coordinator

MQTT Server 1

MQTT Server 2

MQTT Server 3

MQTT Server 4

topic: [deviceid]/obd

Kafka Connect

OBD_Data

Stream processing

kafka

OBD -> MQTT -> Kafka](https://image.slidesharecdn.com/iotkafka2-170118202043/75/Processing-IoT-Data-with-Apache-Kafka-10-2048.jpg)
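The bridge from MQTT to Kafka essentially maps each MQTT message on `[deviceid]/obd` to a record in the `OBD_Data` topic. The sketch below illustrates only that mapping and is not the Kafka Connect API; the `KafkaRecord` type and `to_kafka_record` function are hypothetical, and keying by device id is an assumption consistent with the later slides.

```python
from typing import NamedTuple


class KafkaRecord(NamedTuple):
    topic: str
    key: str
    value: bytes


def to_kafka_record(mqtt_topic: str, payload: bytes) -> KafkaRecord:
    """Map an MQTT message on '<deviceid>/obd' to an OBD_Data record."""
    parts = mqtt_topic.split("/")
    if len(parts) != 2 or parts[1] != "obd" or not parts[0]:
        raise ValueError(f"unexpected MQTT topic: {mqtt_topic!r}")
    device_id = parts[0]
    # Keying by device id keeps each device's records on one partition,
    # which preserves their order.
    return KafkaRecord(topic="OBD_Data", key=device_id, value=payload)


print(to_kafka_record("device-42/obd", b'{"speed": 62.0}'))
```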

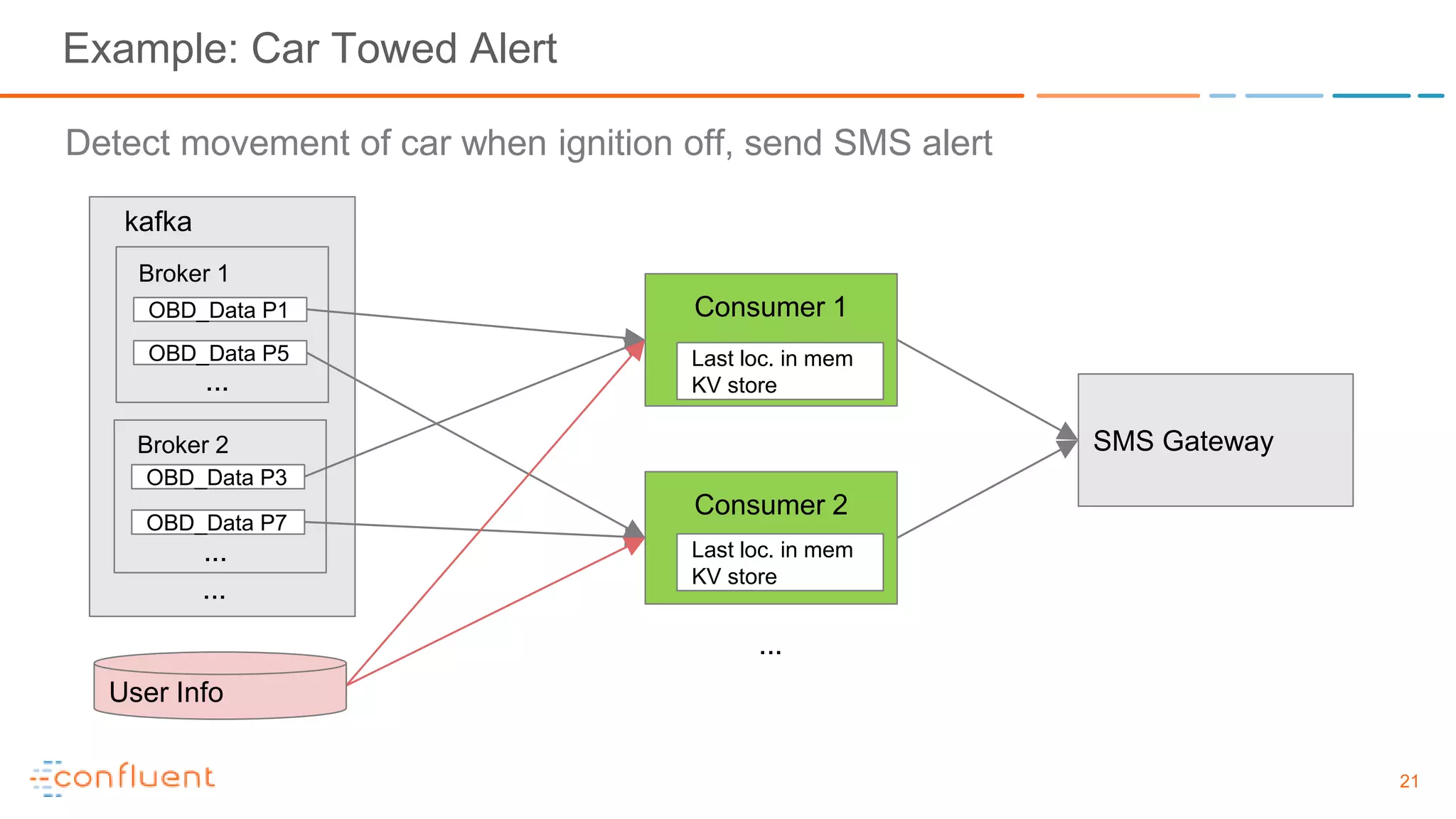

![23

Example: Speed Alert

• Scenario: A parent wants to monitor a son's or daughter's driving and be alerted if they exceed a specified speed.

• In the Tow Alert example, User_Info only needs to be queried in the event of an alert.

• In this example, the table needs to be queried for every OBD data record in every partition.

OBD_data

[can update at any time]

User Info table

Not scalable! Cache?

...

High frequency

P1](https://image.slidesharecdn.com/iotkafka2-170118202043/75/Processing-IoT-Data-with-Apache-Kafka-23-2048.jpg)

![25

Debezium

Kafka Connector that turns database tables into streams of update records.

debezium

Partitions 1-6

...

MySQL

User Info

[key: userId]

User_Info

[changelog topic]

Partition by device_id](https://image.slidesharecdn.com/iotkafka2-170118202043/75/Processing-IoT-Data-with-Apache-Kafka-25-2048.jpg)
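A consumer can rebuild the current table contents by replaying the stream of update records. The sketch below assumes a simplified form of Debezium's change-event envelope, keeping only `op`, `before`, and `after` (real events carry more metadata); the row fields `user_id`, `device_id`, and `speed_limit` are illustrative.

```python
def apply_change(table: dict, event: dict) -> dict:
    """Apply one simplified change event to an in-memory view keyed by device_id."""
    op = event["op"]
    if op in ("c", "u", "r"):      # create, update, or snapshot read
        row = event["after"]
        table[row["device_id"]] = row
    elif op == "d":                # delete
        table.pop(event["before"]["device_id"], None)
    return table


user_info = {}
apply_change(user_info, {"op": "c", "before": None,
                         "after": {"user_id": 10, "device_id": 1, "speed_limit": 80}})
apply_change(user_info, {"op": "u",
                         "before": {"user_id": 10, "device_id": 1, "speed_limit": 80},
                         "after": {"user_id": 10, "device_id": 1, "speed_limit": 75}})
print(user_info)
```

Keying the view by `device_id` rather than the table's `userId` primary key mirrors the slide's "Partition by device_id" note, so the changelog lines up with the OBD data stream.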

![26

Stream / Table Join

Partitions 1-7

...

Partitions 1-5

...

Consumer 1

Relevant subset of User_Info:

| device_id | speed_limit |
|---|---|
| 1 | 80 |
| 3 | 70 |

User_Info

[ChangeLog, compacted]

OBD_Data

[Record Stream]

...

debezium

key:device_id

key:device_id](https://image.slidesharecdn.com/iotkafka2-170118202043/75/Processing-IoT-Data-with-Apache-Kafka-26-2048.jpg)
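Because both topics are keyed by `device_id`, each consumer only needs the slice of `User_Info` for the devices on its own partitions, and the join becomes a local lookup. Here is a minimal sketch using the example table from the slide; the record field names are assumptions.

```python
from typing import Dict, Iterable, Iterator


def speed_alerts(obd_records: Iterable[dict],
                 speed_limits: Dict[int, float]) -> Iterator[dict]:
    """Join each OBD record with the local speed-limit table and emit alerts."""
    for record in obd_records:
        limit = speed_limits.get(record["device_id"])
        if limit is None:
            continue  # no limit configured for this device
        if record["speed"] > limit:
            yield {"device_id": record["device_id"],
                   "speed": record["speed"],
                   "speed_limit": limit}


# Local, materialized subset of User_Info (values from the slide).
limits = {1: 80, 3: 70}
stream = [{"device_id": 1, "speed": 85},
          {"device_id": 3, "speed": 65},
          {"device_id": 2, "speed": 120}]
print(list(speed_alerts(stream, limits)))
```

Only device 1 triggers an alert here: device 3 is under its limit, and device 2 has no entry in this consumer's table.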

![28

MQTT Phone Client Connectivity

MQTT Server Coordinator

MQTT Server 1

MQTT Server 2

[deviceid]/alert

...

Consumer 1 ...

MQTT Server 3

...

[deviceid]/obd](https://image.slidesharecdn.com/iotkafka2-170118202043/75/Processing-IoT-Data-with-Apache-Kafka-28-2048.jpg)

![29

Speed Limit Alert: Rate limiting

Partitions 1-7

...

app_state kafka topic

• Prefer to rate limit on the server to minimize network overhead.

• Create a new Kafka topic, app_state, partitioned on device_id.

• When an alert is triggered, store the alert time in this topic.

• [This topic can also serve as a general store for other per-device state.]

• Materialize this change-log stream on consumers as necessary.](https://image.slidesharecdn.com/iotkafka2-170118202043/75/Processing-IoT-Data-with-Apache-Kafka-29-2048.jpg)
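The rate-limiting steps above can be sketched as a check against the materialized `app_state` view. In this sketch a plain dictionary stands in for that view, and the ten-minute minimum interval is an assumed value; in a real deployment the updated alert time would be produced back to the `app_state` topic rather than written to a local dict.

```python
from typing import Dict

MIN_ALERT_INTERVAL_S = 600  # assumed: at most one alert per device every 10 minutes


def should_send_alert(app_state: Dict[str, int], device_id: str, now: int) -> bool:
    """Return True and record the alert time if the device is not rate limited."""
    last_alert = app_state.get(device_id)
    if last_alert is not None and now - last_alert < MIN_ALERT_INTERVAL_S:
        return False  # an alert was sent too recently; suppress this one
    app_state[device_id] = now  # stands in for producing to app_state
    return True


state: Dict[str, int] = {}
print(should_send_alert(state, "device-1", 1000))   # first alert goes out
print(should_send_alert(state, "device-1", 1030))   # suppressed, 30 s later
print(should_send_alert(state, "device-1", 1700))   # allowed again after 700 s
```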

![30

Partitions 1-7

...

Partitions 1-3

...

Consumer 1

Relevant subset of User_Info

...

OBD_Data

[Record Stream]

User_Info

[ChangeLog, compacted]

Partition 4

Partition 1

Partition 2

Partition 3

...

Partition 4

App_State

[compacted]

Relevant subset of App_State](https://image.slidesharecdn.com/iotkafka2-170118202043/75/Processing-IoT-Data-with-Apache-Kafka-30-2048.jpg)

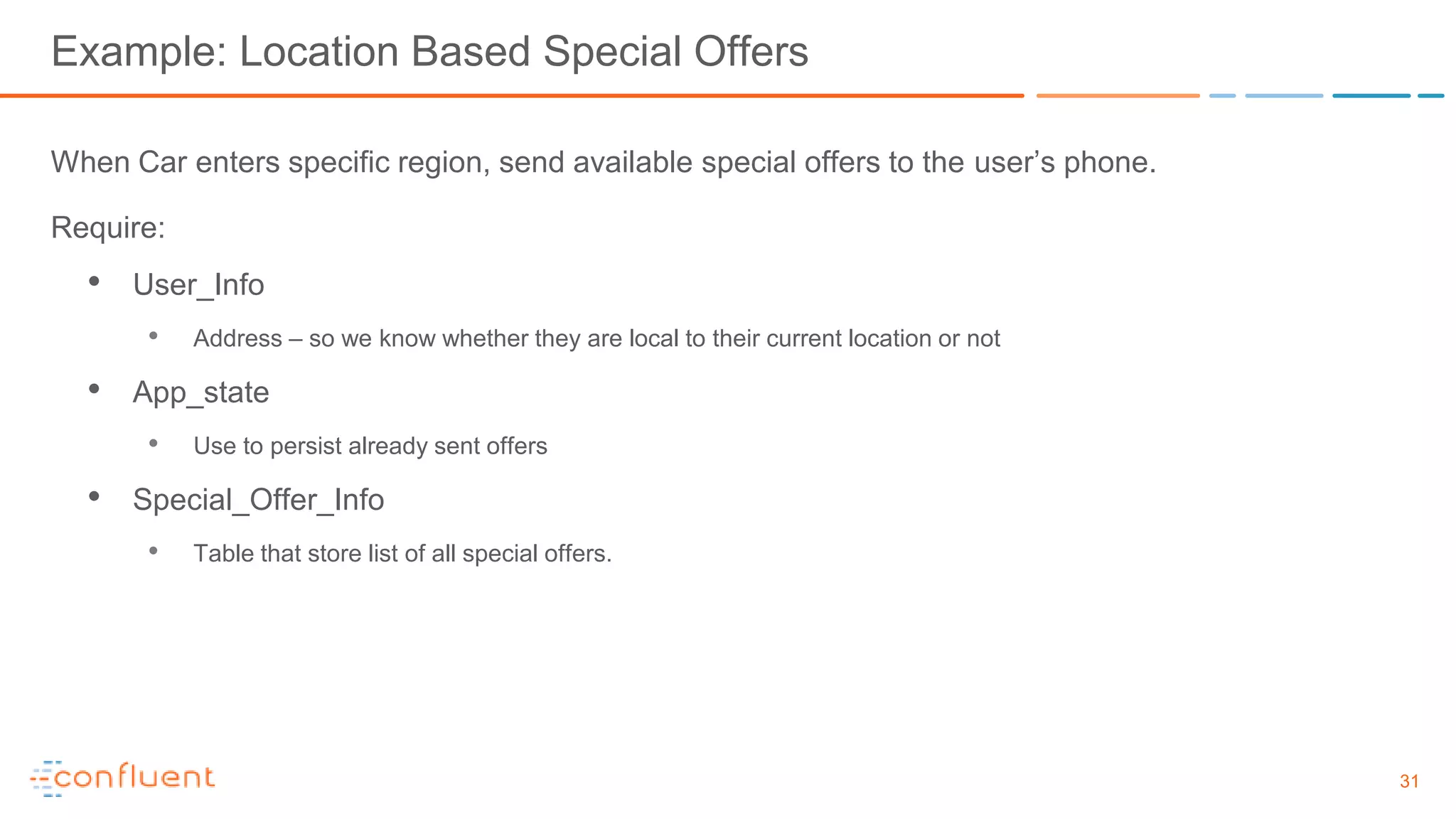

![33

Special Offer Change-log Stream

debezium

Partitions 1-6

...

MySQL

Special Offer Info

Special_Offers

[changelog, compacted]

Partition by location_id](https://image.slidesharecdn.com/iotkafka2-170118202043/75/Processing-IoT-Data-with-Apache-Kafka-33-2048.jpg)
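A compacted topic retains only the most recent record for each key, which keeps a changelog such as `Special_Offers` bounded in size. The sketch below shows the idea on an in-memory list. It is a simplification: Kafka compacts in the background and keeps delete markers (tombstones) for a configurable period before dropping them. The offer values are made up.

```python
from typing import List, Optional, Tuple

Record = Tuple[str, Optional[str]]  # (key, value); a None value marks a delete


def compact(log: List[Record]) -> List[Record]:
    """Keep only the latest record per key, in original log order."""
    latest = {}
    for offset, (key, value) in enumerate(log):
        latest[key] = (offset, value)
    survivors = sorted(
        (offset, key, value)
        for key, (offset, value) in latest.items()
        if value is not None  # drop keys whose latest record is a delete
    )
    return [(key, value) for _, key, value in survivors]


log = [("loc-1", "10% off"), ("loc-2", "free coffee"),
       ("loc-1", "20% off"), ("loc-2", None)]
print(compact(log))
```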

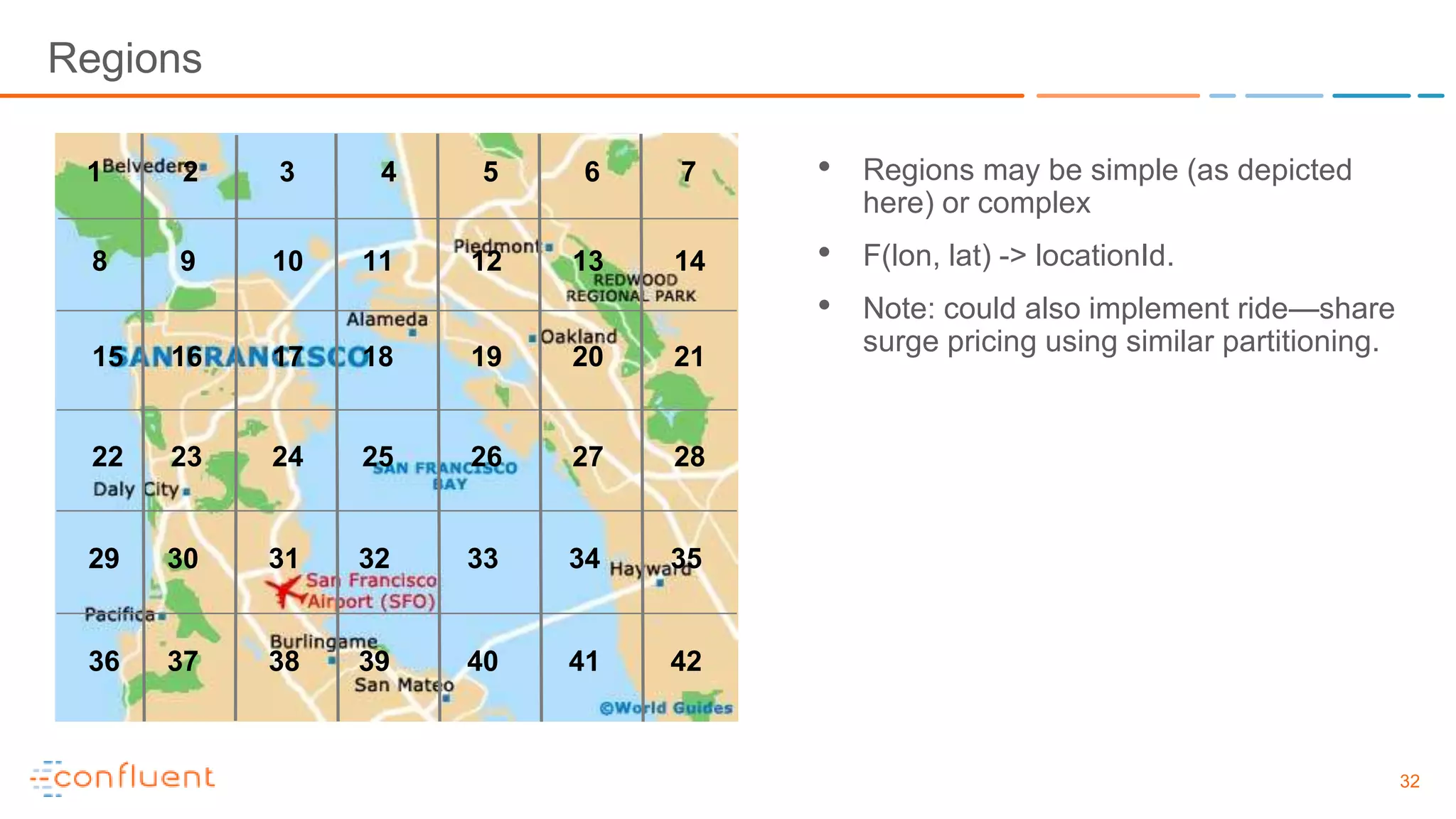

![34

Multi-stage Data Pipeline

OBD_Data App_State

[offers already sent]

User_Info

[address]

K: device_id

V: OBD record

consume enrich

K: device_id

V: OBD record

address

K: device_id

V: OBD record

Address

offers_sent

enrich](https://image.slidesharecdn.com/iotkafka2-170118202043/75/Processing-IoT-Data-with-Apache-Kafka-34-2048.jpg)
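The two enrich stages each look up per-device data and attach it to the OBD record while keeping `device_id` as the key. Here is a minimal sketch, with dictionaries standing in for the materialized `User_Info` and `App_State` views; the field names are assumptions.

```python
from typing import Dict


def enrich_with_address(record: dict, user_info: Dict[str, dict]) -> dict:
    """Stage 1: attach the user's address from User_Info."""
    user = user_info.get(record["device_id"], {})
    return {**record, "address": user.get("address")}


def enrich_with_offers_sent(record: dict, app_state: Dict[str, dict]) -> dict:
    """Stage 2: attach the offers already sent, from App_State."""
    state = app_state.get(record["device_id"], {})
    return {**record, "offers_sent": list(state.get("offers_sent", []))}


user_info = {"device-1": {"address": "12 Main St"}}
app_state = {"device-1": {"offers_sent": ["offer-7"]}}
record = {"device_id": "device-1", "speed": 40.0}
enriched = enrich_with_offers_sent(enrich_with_address(record, user_info), app_state)
print(enriched)
```

Each stage returns a new dictionary rather than mutating its input, which keeps the stages independent and easy to test.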

![35

Multi-stage Data Pipeline (continued)

K: [device_id]

V: OBD record

Address

offers_sent

K: location_id

V: OBD record

Address

offers_sent

OBD_Data_By_Location

P1

……

…

Repartition by location_id

P2

P1

P3

Data from a given device will still all be on the same partition (except when region changes)](https://image.slidesharecdn.com/iotkafka2-170118202043/75/Processing-IoT-Data-with-Apache-Kafka-35-2048.jpg)
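Repartitioning amounts to choosing a new key and letting the partitioner place the record. The slides do not say how `location_id` is derived, so the grid-cell scheme below is hypothetical, and CRC32 is used as a stand-in for Kafka's own key hashing.

```python
import math
import zlib
from typing import Tuple


def location_id(lon: float, lat: float, cell_deg: float = 0.1) -> str:
    """Hypothetical location id: the grid cell containing the GPS point."""
    return f"{math.floor(lon / cell_deg)}:{math.floor(lat / cell_deg)}"


def partition_for(key: str, num_partitions: int) -> int:
    """Stable key-to-partition mapping (stand-in for Kafka's partitioner)."""
    return zlib.crc32(key.encode("utf-8")) % num_partitions


def rekey_by_location(record: dict, num_partitions: int) -> Tuple[str, int, dict]:
    """Return the new key, target partition, and unchanged record value."""
    key = location_id(record["lon"], record["lat"])
    return key, partition_for(key, num_partitions), record


a = rekey_by_location({"device_id": "device-1", "lon": -122.41, "lat": 37.77}, 3)
b = rekey_by_location({"device_id": "device-1", "lon": -122.45, "lat": 37.75}, 3)
print(a[0] == b[0], a[1] == b[1])
```

While a device stays within one cell, its records keep the same key and so the same partition; when it crosses into another cell the key changes and later records may land elsewhere.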

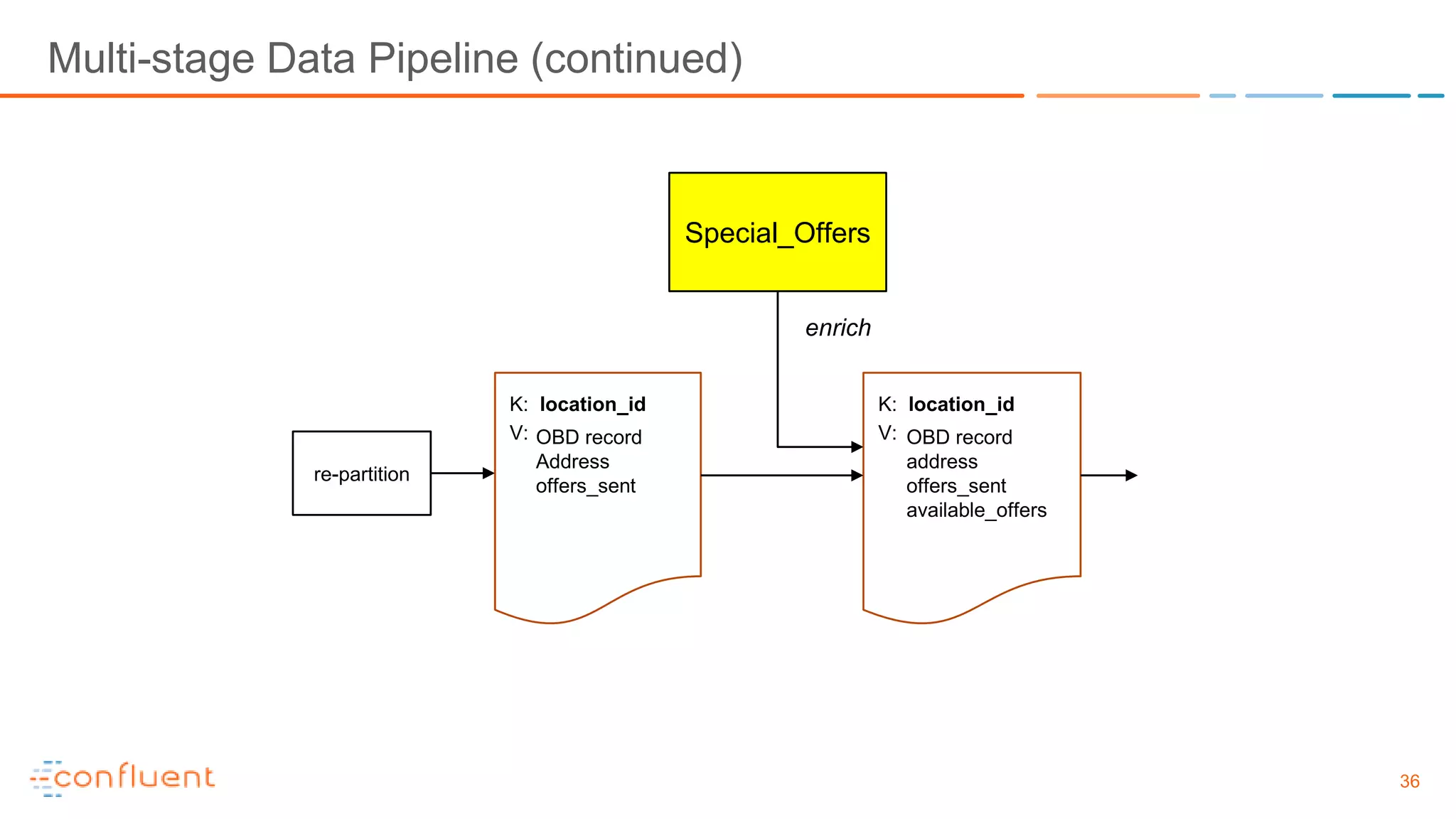

![37

Multi-stage Data Pipeline (continued)

Special offer available in location

Special offer not already sent

User address near location?

MQTT Server

filter

filter

filter

...

[deviceId]/alert](https://image.slidesharecdn.com/iotkafka2-170118202043/75/Processing-IoT-Data-with-Apache-Kafka-37-2048.jpg)
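The three filters can be read as successive conditions a record must pass before an offer is published to the device's alert topic. In the sketch below the offer structure, the `is_near` predicate, and the field names are assumptions; the slides do not define how "near" is computed.

```python
from typing import Callable, Dict, Iterator, List, Tuple


def offers_to_send(record: dict,
                   offers_by_location: Dict[str, List[dict]],
                   is_near: Callable[[str, dict], bool]) -> Iterator[Tuple[str, dict]]:
    """Yield (mqtt_topic, offer) pairs for offers that pass all three filters."""
    # Filter 1: is any special offer available in this location?
    for offer in offers_by_location.get(record["location_id"], []):
        # Filter 2: skip offers already sent to this device.
        if offer["offer_id"] in record["offers_sent"]:
            continue
        # Filter 3: skip offers that are not near the user's address.
        if not is_near(record["address"], offer):
            continue
        yield f'{record["device_id"]}/alert', offer


offers = {"loc-1": [{"offer_id": "offer-7", "city": "Springfield"},
                    {"offer_id": "offer-8", "city": "Springfield"}]}
record = {"device_id": "device-1", "location_id": "loc-1",
          "address": "Springfield", "offers_sent": ["offer-7"]}
# Toy proximity check, for illustration only: same city means "near".
near = lambda address, offer: address == offer["city"]
print(list(offers_to_send(record, offers, near)))
```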