This document provides an overview and introduction to programming Google App Engine. It discusses the runtime environment, data storage via the datastore, available services, task queues, and developer tools. It is intended to help developers get started with building applications on App Engine and includes information about setting up development environments, developing apps, and registering applications.

![Programming Google App Engine

by Dan Sanderson

Copyright © 2010 Dan Sanderson. All rights reserved.

Printed in the United States of America.

Published by O’Reilly Media, Inc., 1005 Gravenstein Highway North, Sebastopol, CA 95472.

O’Reilly books may be purchased for educational, business, or sales promotional use. Online editions

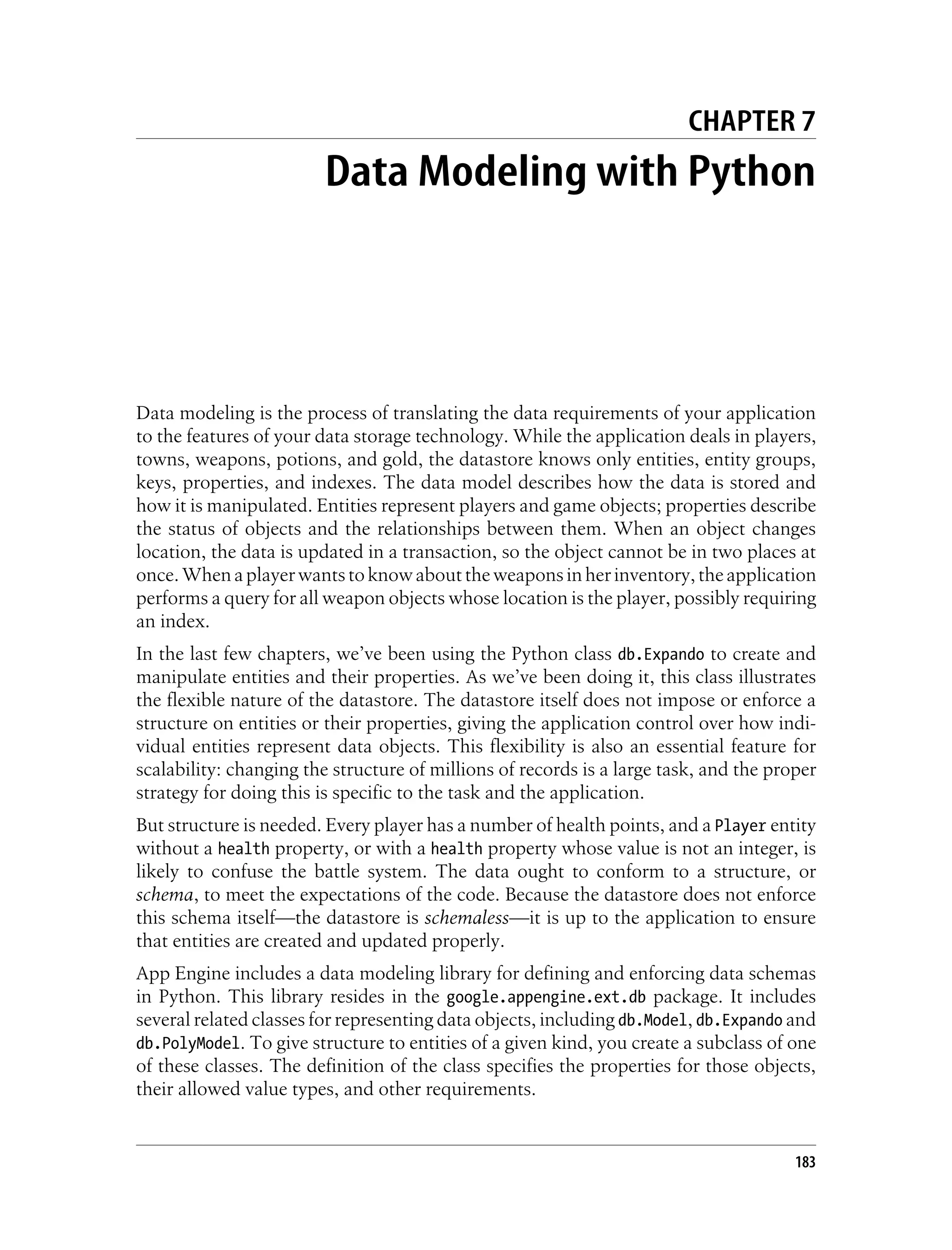

are also available for most titles (http://my.safaribooksonline.com). For more information, contact our

corporate/institutional sales department: (800) 998-9938 or corporate@oreilly.com.

Editor: Mike Loukides Indexer: Ellen Troutman Zaig

Production Editor: Sumita Mukherji Cover Designer: Karen Montgomery

Proofreader: Sada Preisch Interior Designer: David Futato

Illustrator: Robert Romano

Printing History:

November 2009: First Edition.

Nutshell Handbook, the Nutshell Handbook logo, and the O’Reilly logo are registered trademarks of

O’Reilly Media, Inc. Programming Google App Engine, the image of a waterbuck, and related trade dress

are trademarks of O’Reilly Media, Inc.

Many of the designations used by manufacturers and sellers to distinguish their products are claimed as

trademarks. Where those designations appear in this book, and O’Reilly Media, Inc., was aware of a

trademark claim, the designations have been printed in caps or initial caps.

While every precaution has been taken in the preparation of this book, the publisher and author assume

no responsibility for errors or omissions, or for damages resulting from the use of the information con-

tained herein.

TM

This book uses RepKover™, a durable and flexible lay-flat binding.

ISBN: 978-0-596-52272-8

[M]

1257864694](https://image.slidesharecdn.com/programminggoogleappengine-100608200404-phpapp01/75/Programming-google-app-engine-5-2048.jpg)

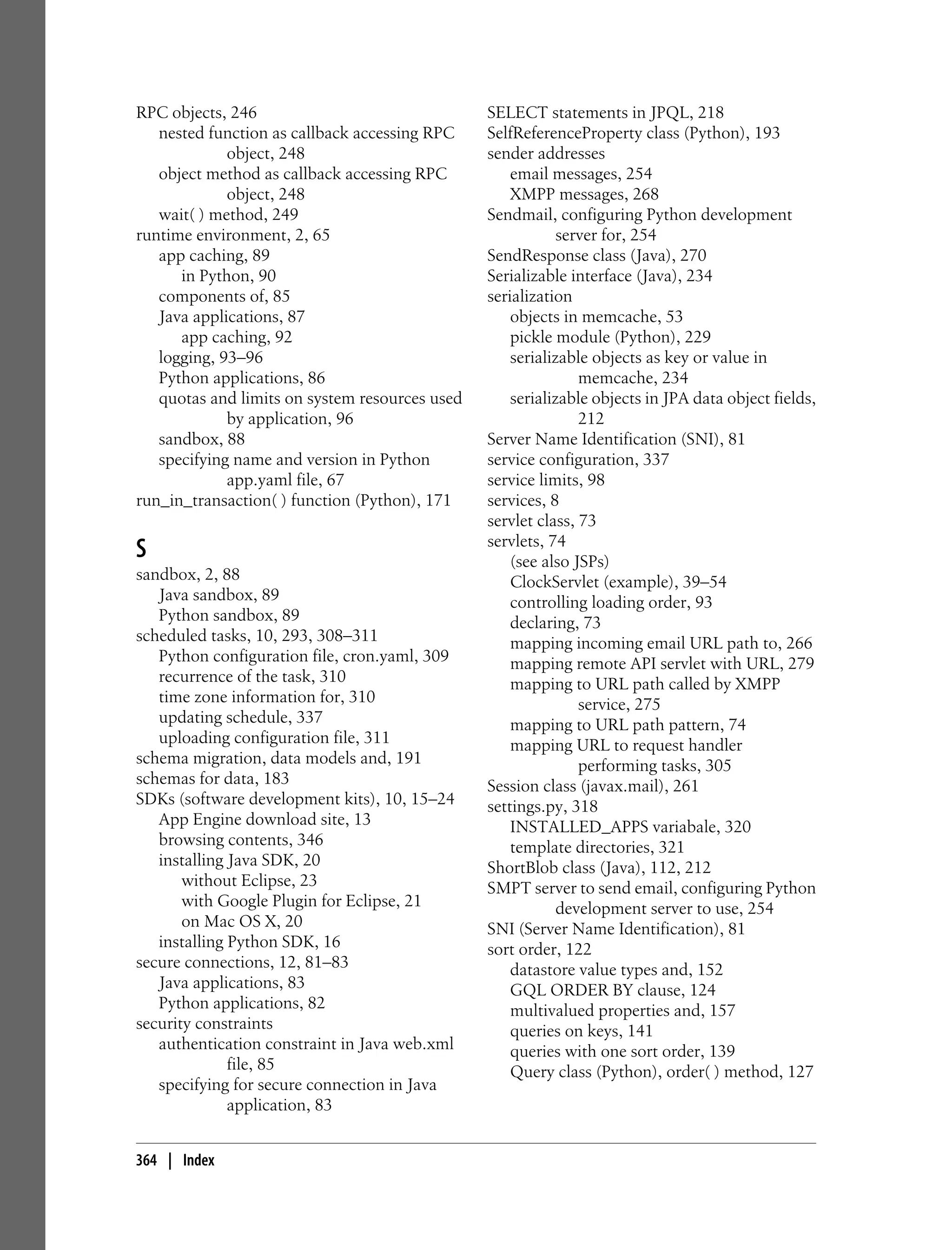

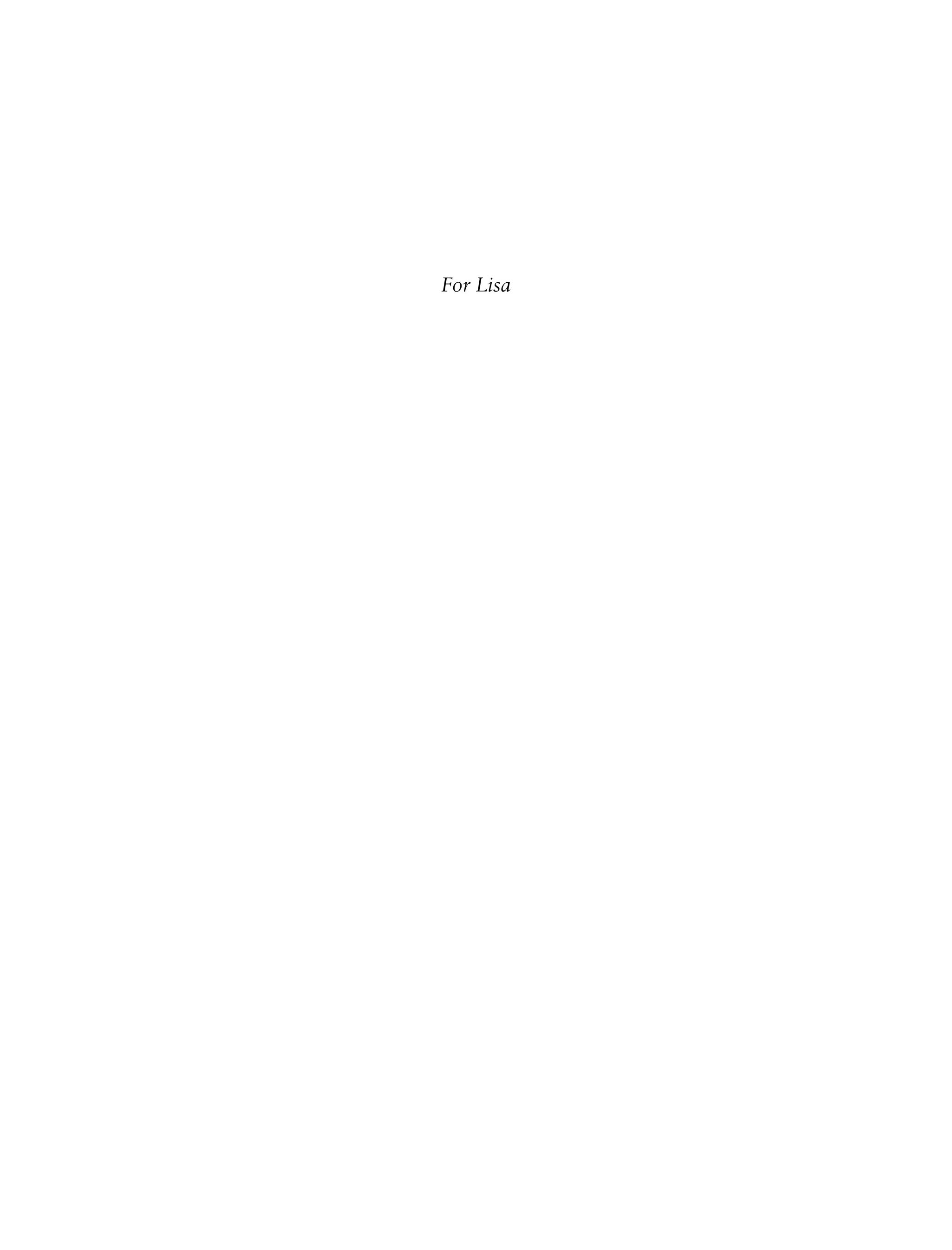

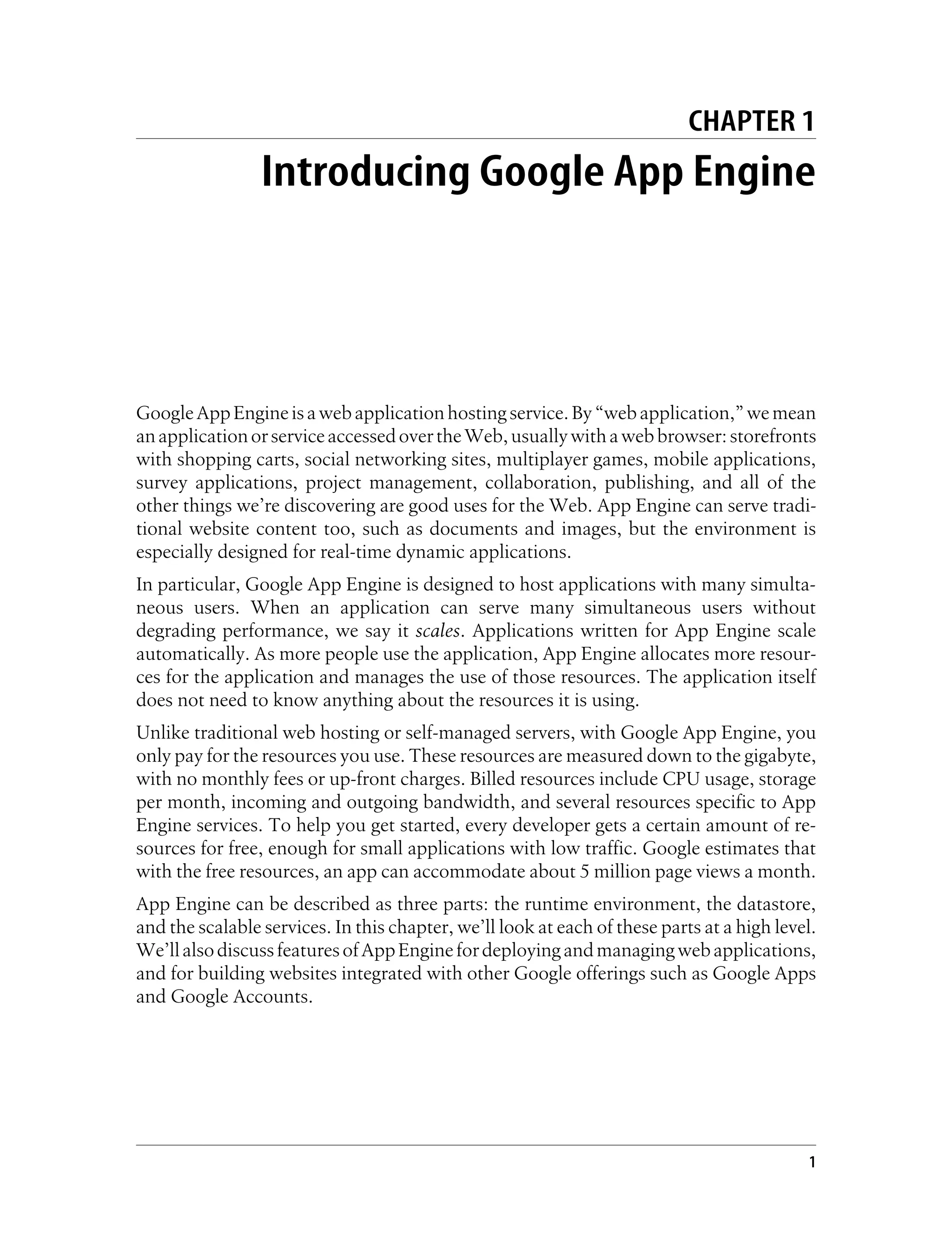

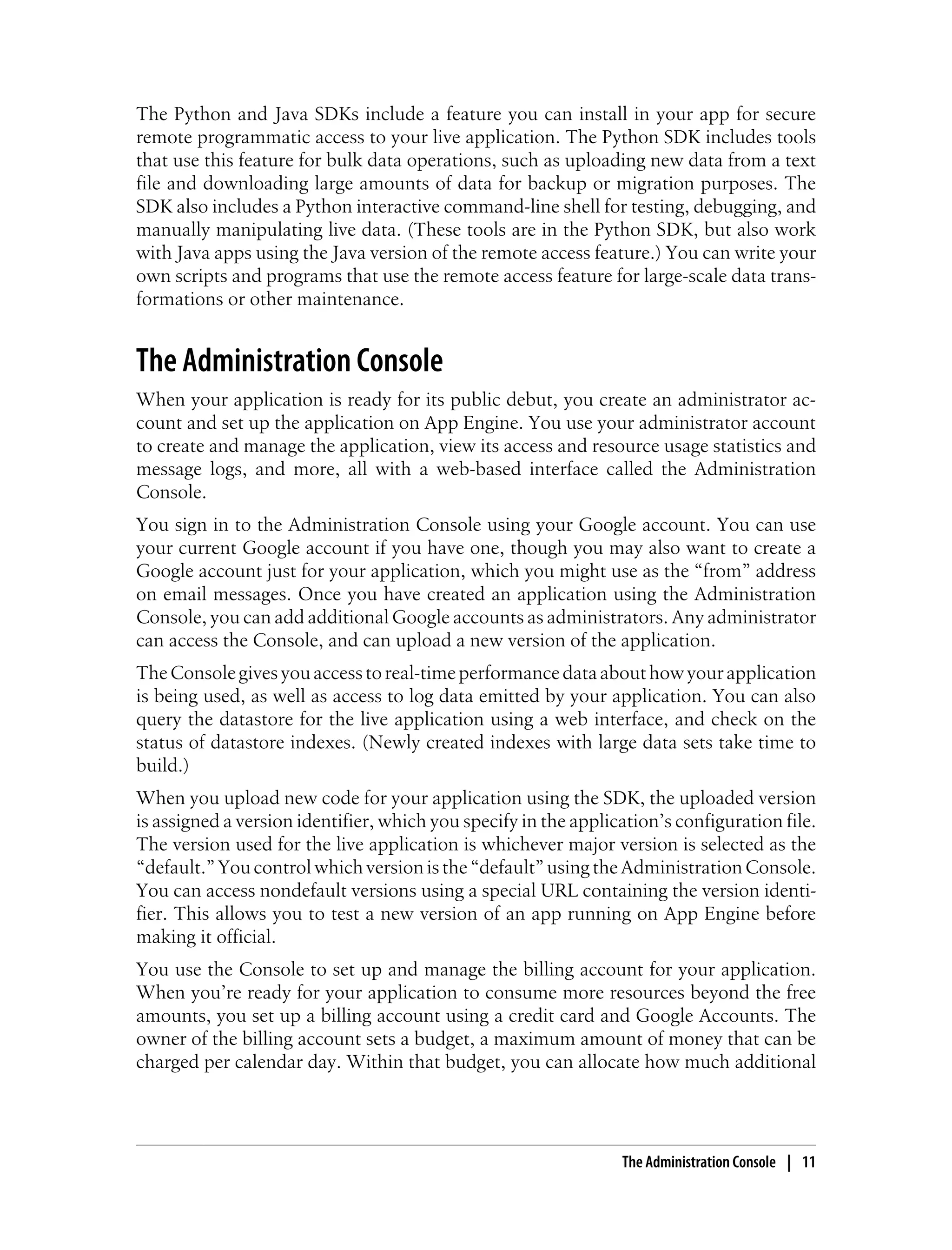

![Let’s upgrade the clock app to use the webapp framework. Replace the contents of

main.py with the version shown in Example 2-3. Reload the page in your browser to

see the new version in action. (You won’t notice a difference other than an updated

time. This example is equivalent to the previous version.)

Example 2-3. A simple request handler using the webapp framework

from google.appengine.ext import webapp

from google.appengine.ext.webapp.util import run_wsgi_app

import datetime

class MainPage(webapp.RequestHandler):

def get(self):

time = datetime.datetime.now()

self.response.headers['Content-Type'] = 'text/html'

self.response.out.write('<p>The time is: %s</p>' % str(time))

application = webapp.WSGIApplication([('/', MainPage)],

debug=True)

def main():

run_wsgi_app(application)

if __name__ == '__main__':

main()

Example 2-3 imports the module google.appengine.ext.webapp, then defines a request

handler class called MainPage, a subclass of webapp.RequestHandler. The class defines

methods for each HTTP method supported by the handler, in this case one method for

HTTP GET called get(). When the application handles a request, it instantiates the

handler class, sets self.request and self.response to values the handler method can

access and modify, then calls the appropriate handler method, in this case get(). When

the handler method exits, the application uses the value of self.response as the HTTP

response.

The application itself is represented by an instance of the class

webapp.WSGIApplication. The instance is initialized with a list of mappings of URLs to

handler classes. The debug parameter tells the application to print error messages to the

browser window when a handler returns an exception if the application is running

under the development web server. webapp detects whether it is running under the

development server or running as a live App Engine application, and will not print

errors to the browser when running live even if debug is True. You can set it to False to

have the development server emulate the live server when errors occur.

The script defines a main() function that runs the application using a utility method.

Lastly, the script calls main() using the Python idiom of if __name__ ==

'__main__': ..., a condition that is always true when the script is run by the web server.

This idiom allows you to import the script as a module for other code, including the

classes and functions defined in the script, without running the main() routine.

Developing the Application | 29](https://image.slidesharecdn.com/programminggoogleappengine-100608200404-phpapp01/75/Programming-google-app-engine-50-2048.jpg)

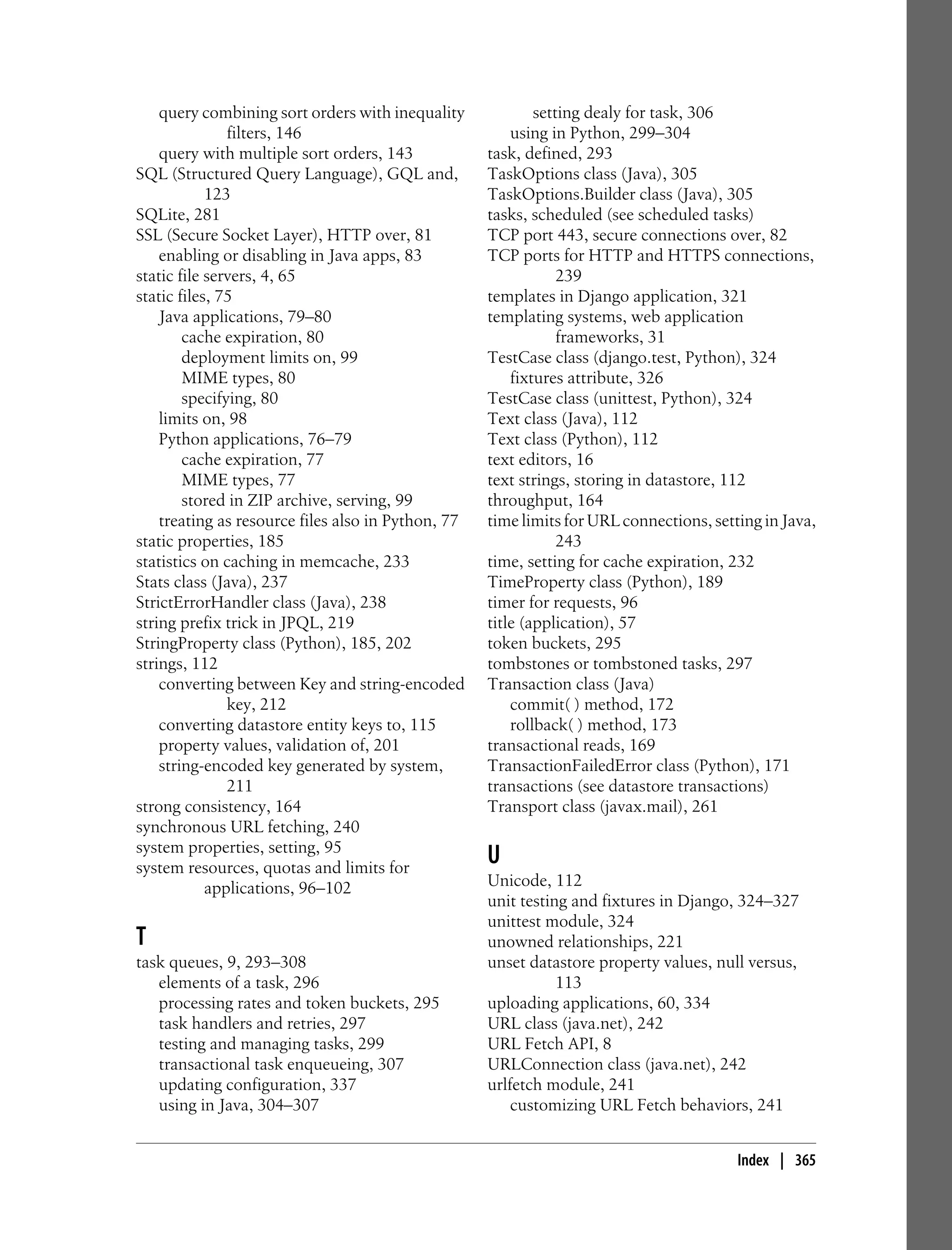

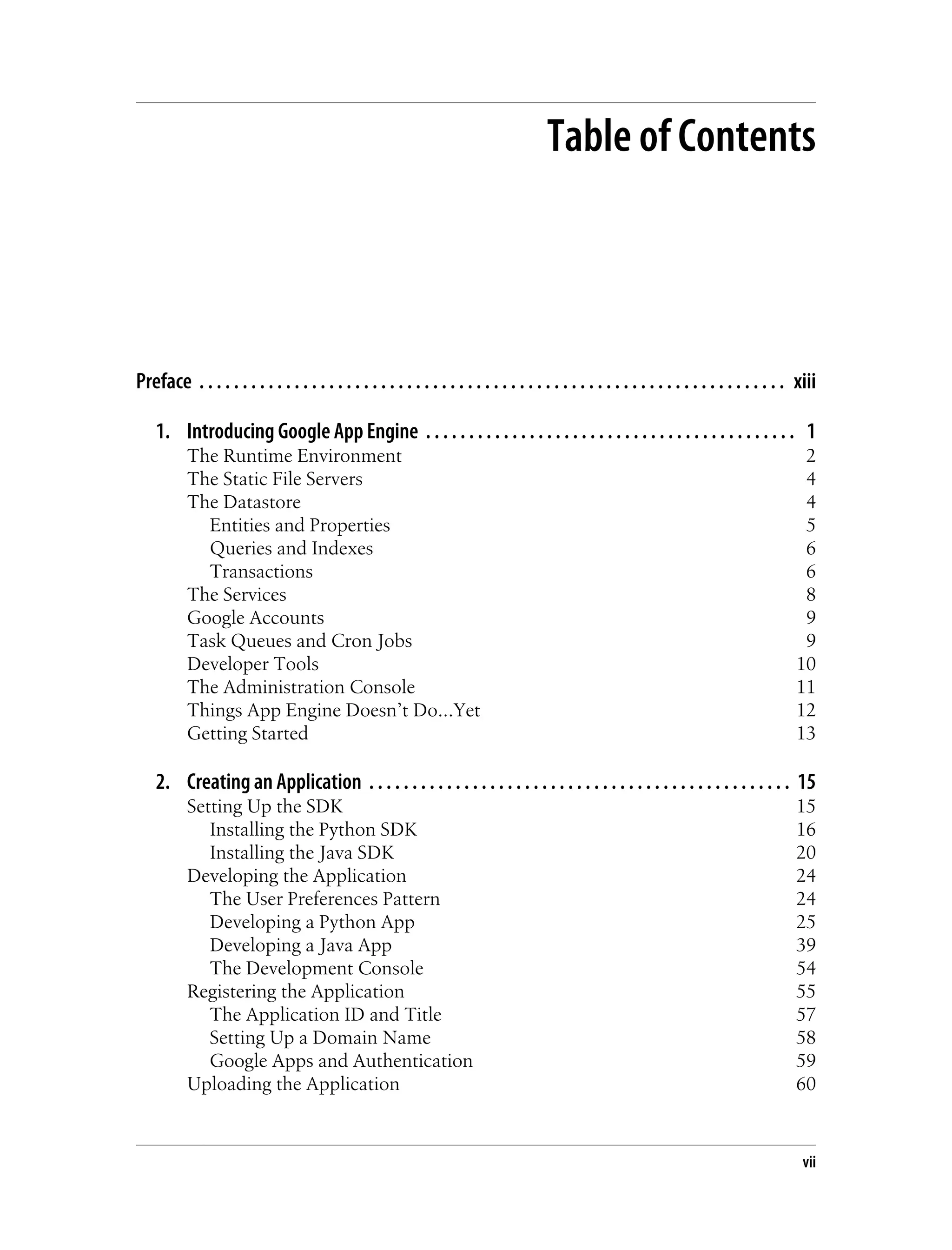

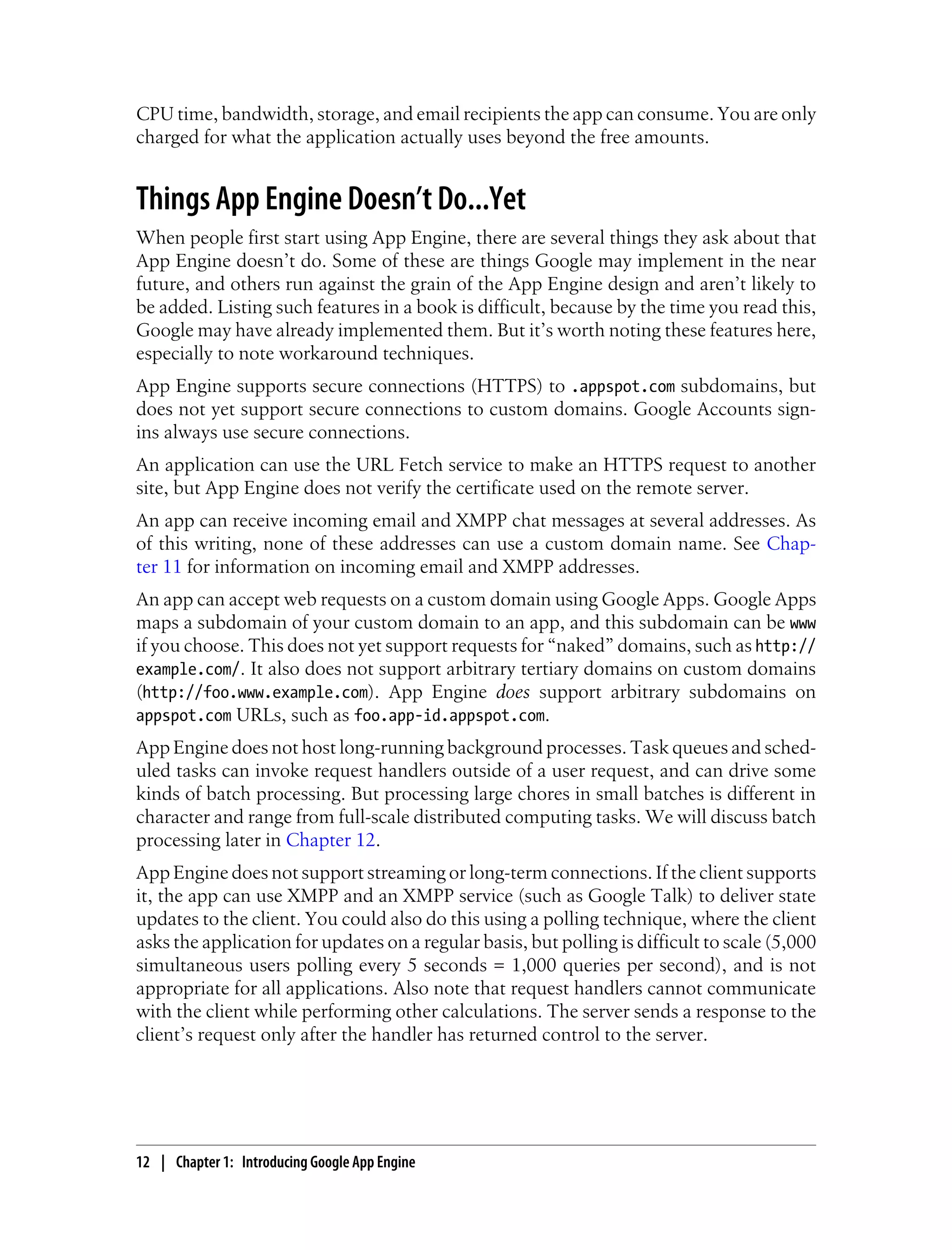

![Defining a main() function this way allows App Engine to cache the

compiled handler script, making subsequent requests faster to execute.

For more information on app caching, see Chapter 3.

A single WSGIApplication instance can handle multiple URLs, routing the request to

different RequestHandler classes based on the URL pattern. But we’ve already seen that

the app.yaml file maps URL patterns to handler scripts. So which URL patterns should

appear in app.yaml, and which should appear in the WSGIApplication? Many web

frameworks include their own URL dispatcher logic, and it’s common to route all dy-

namic URLs to the framework’s dispatcher in app.yaml. With webapp, the answer

mostly depends on how you’d like to organize your code. For the clock application, we

will create a second request handler as a separate script to take advantage of a feature

of app.yaml for user authentication, but we could also put this logic in main.py and

route the URL with the WSGIApplication object.

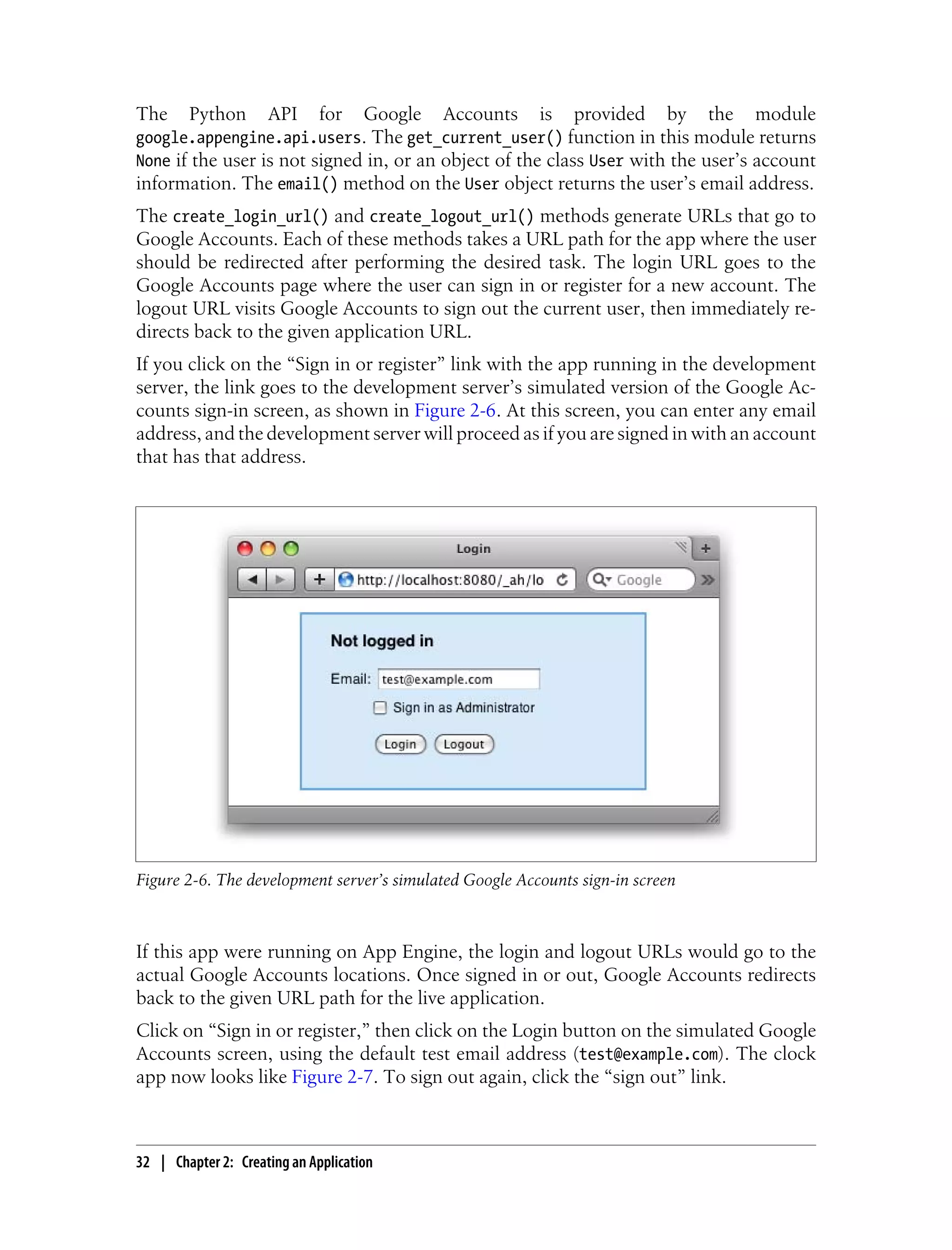

Users and Google Accounts

So far, our clock shows the same display for every user. To allow each user to customize

the display and save her preferences for future sessions, we need a way to identify the

user making a request. An easy way to do this is with Google Accounts.

Let’s add something to the page that indicates whether the user is signed in, and pro-

vides links for signing in and signing out of the application. Edit main.py to resemble

Example 2-4.

Example 2-4. A version of main.py that displays Google Accounts information and links

from google.appengine.api import users

from google.appengine.ext import webapp

from google.appengine.ext.webapp.util import run_wsgi_app

import datetime

class MainPage(webapp.RequestHandler):

def get(self):

time = datetime.datetime.now()

user = users.get_current_user()

if not user:

navbar = ('<p>Welcome! <a href="%s">Sign in or register</a> to customize.</p>'

% (users.create_login_url(self.request.path)))

else:

navbar = ('<p>Welcome, %s! You can <a href="%s">sign out</a>.</p>'

% (user.email(), users.create_logout_url(self.request.path)))

self.response.headers['Content-Type'] = 'text/html'

self.response.out.write('''

<html>

<head>

<title>The Time Is...</title>

</head>

30 | Chapter 2: Creating an Application](https://image.slidesharecdn.com/programminggoogleappengine-100608200404-phpapp01/75/Programming-google-app-engine-51-2048.jpg)

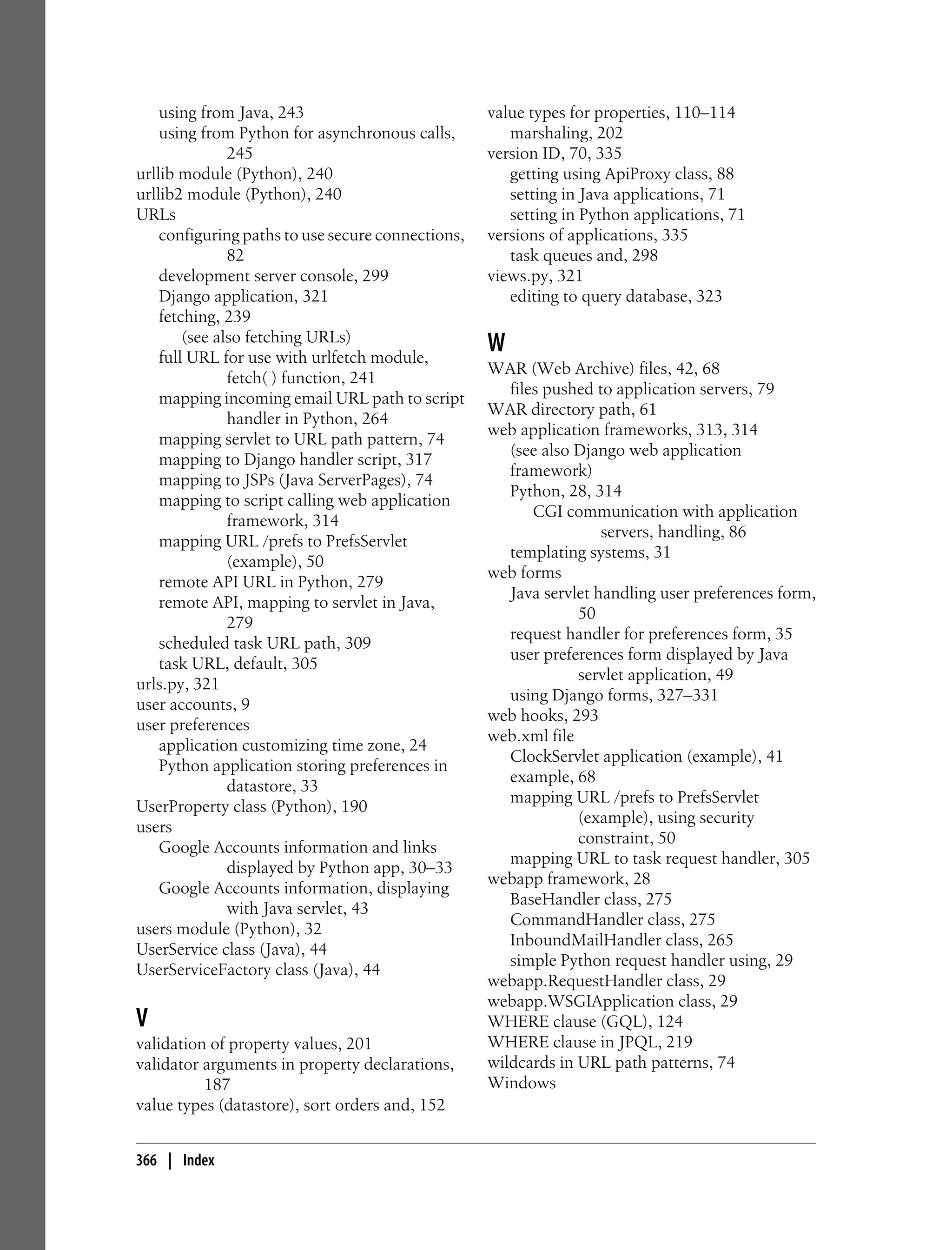

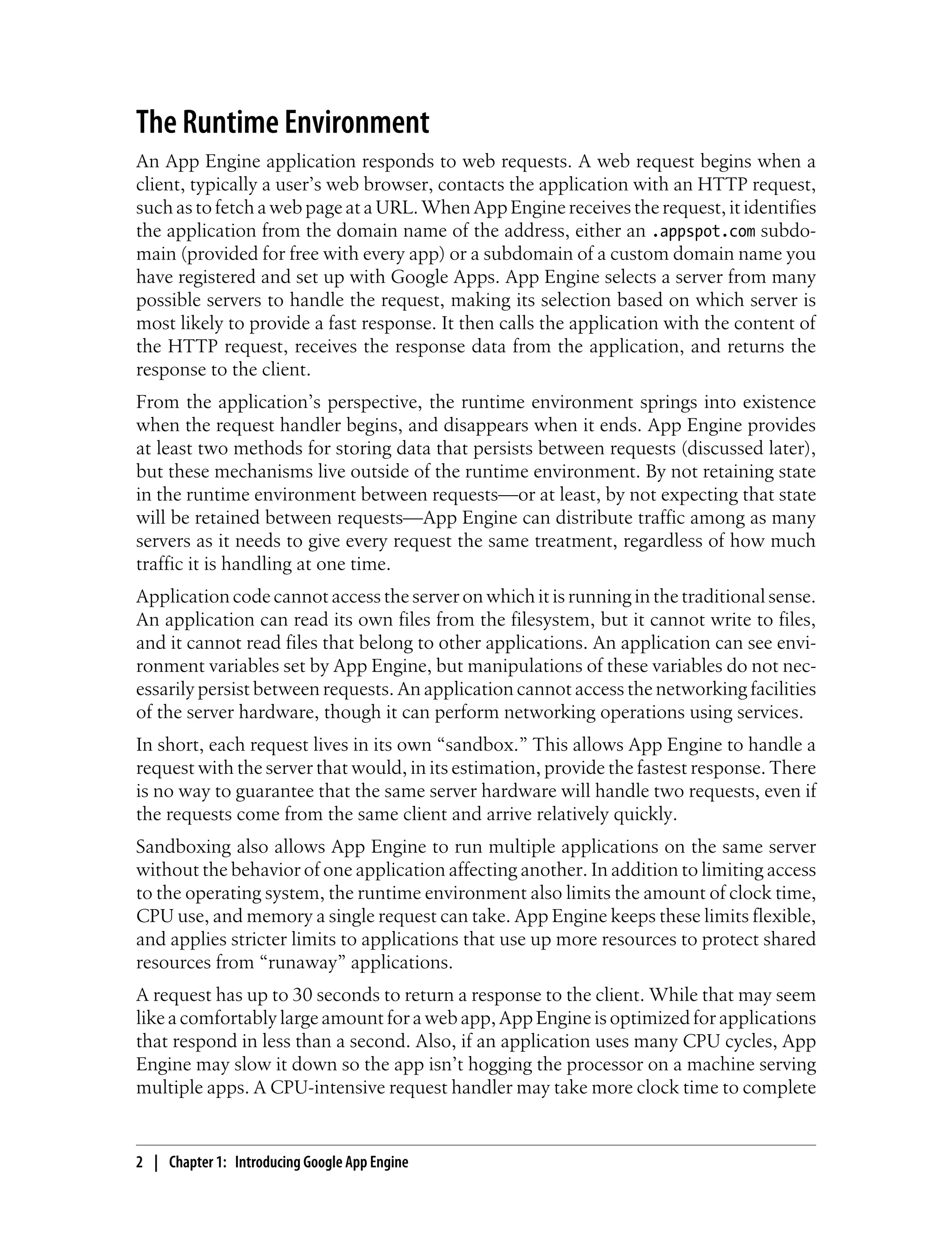

![<body>

%s

<p>The time is: %s</p>

</body>

</html>

''' % (navbar, str(time)))

application = webapp.WSGIApplication([('/', MainPage)],

debug=True)

def main():

run_wsgi_app(application)

if __name__ == '__main__':

main()

In a real application, you would use a templating system for the output, separating the

HTML and display logic from the application code. While many web application

frameworks include a templating system, webapp does not. Since the clock app only

has one page, we’ll put the HTML in the handler code, using Python string formatting

to keep things organized.

The Python runtime environment includes a version of Django, whose

templating system can be used with webapp. When Google released

version 1 of the Python runtime environment, the latest version of

Django was 0.96, so this is what the runtime includes. For more infor-

mation on using Django templates with webapp, see the App Engine

documentation.

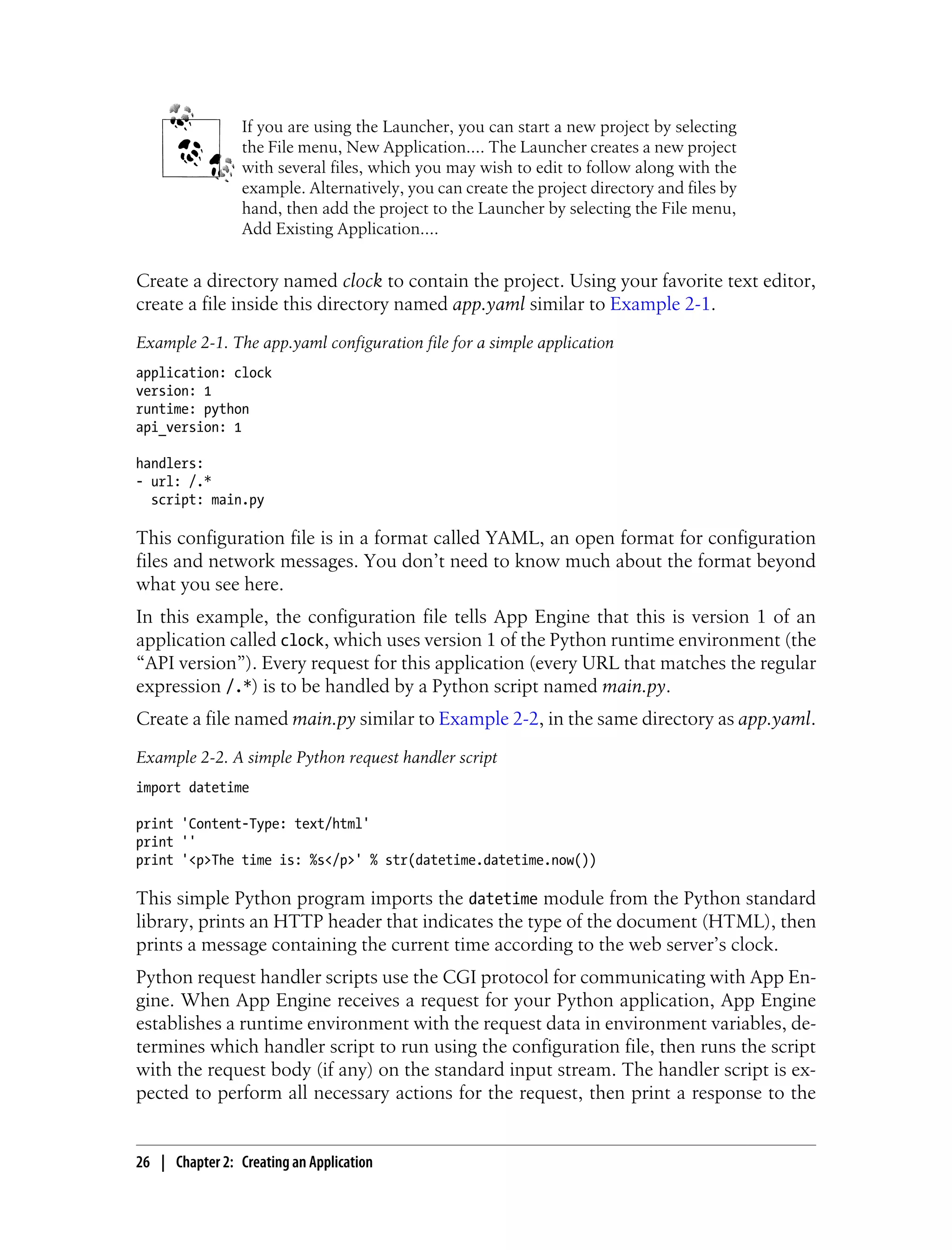

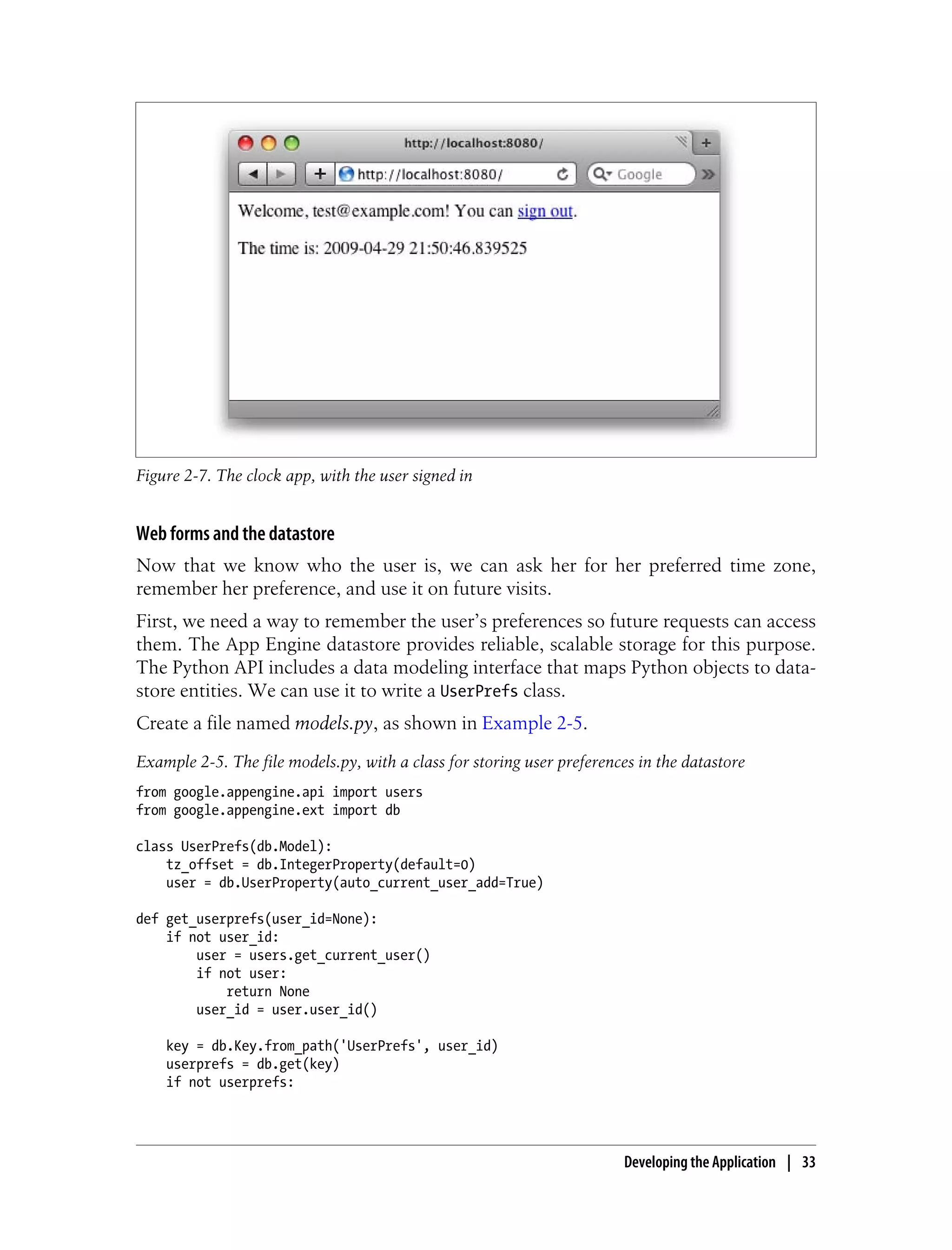

Reload the page in your browser. The new page resembles Figure 2-5.

Figure 2-5. The clock app with a link to Google Accounts when the user is not signed in

Developing the Application | 31](https://image.slidesharecdn.com/programminggoogleappengine-100608200404-phpapp01/75/Programming-google-app-engine-52-2048.jpg)

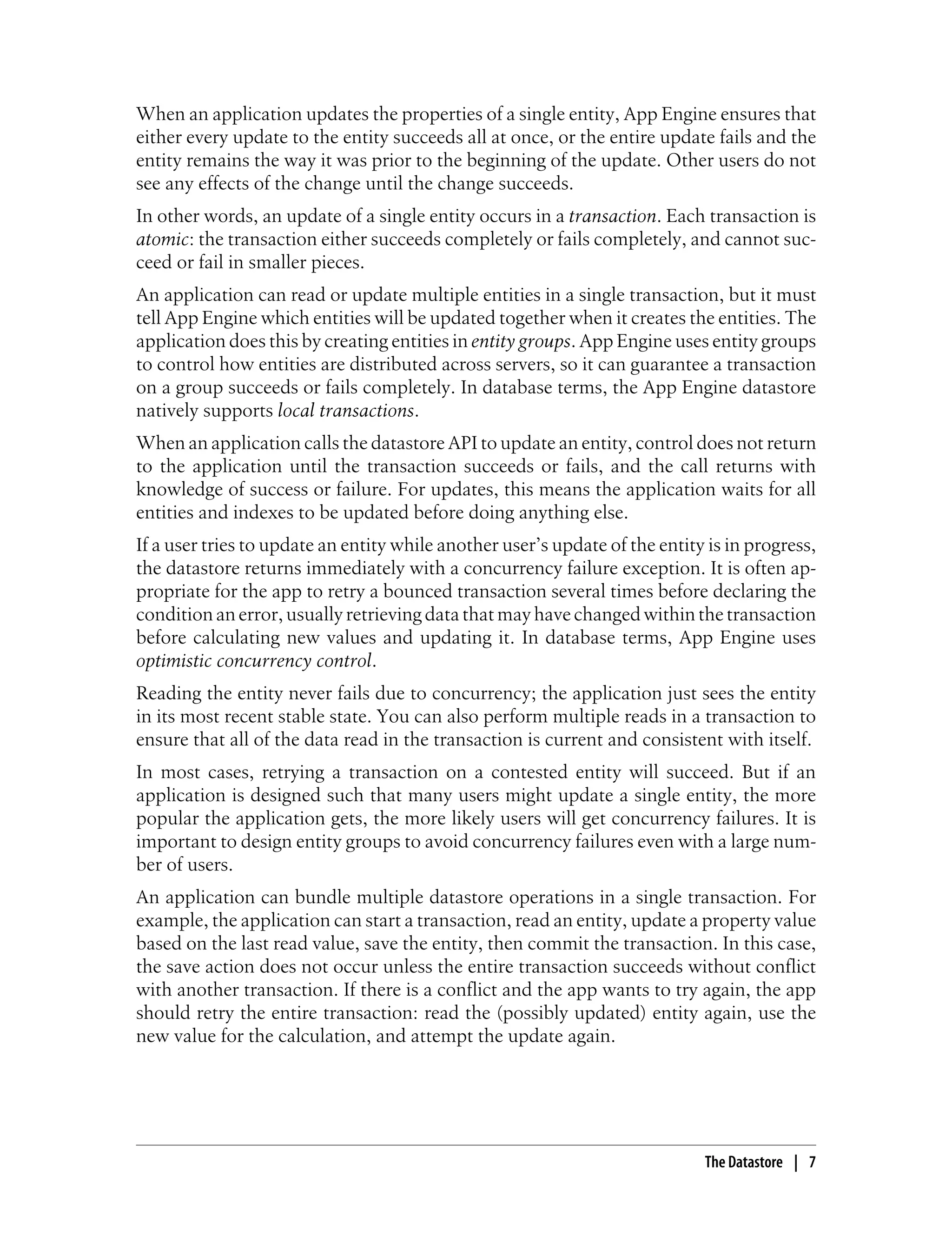

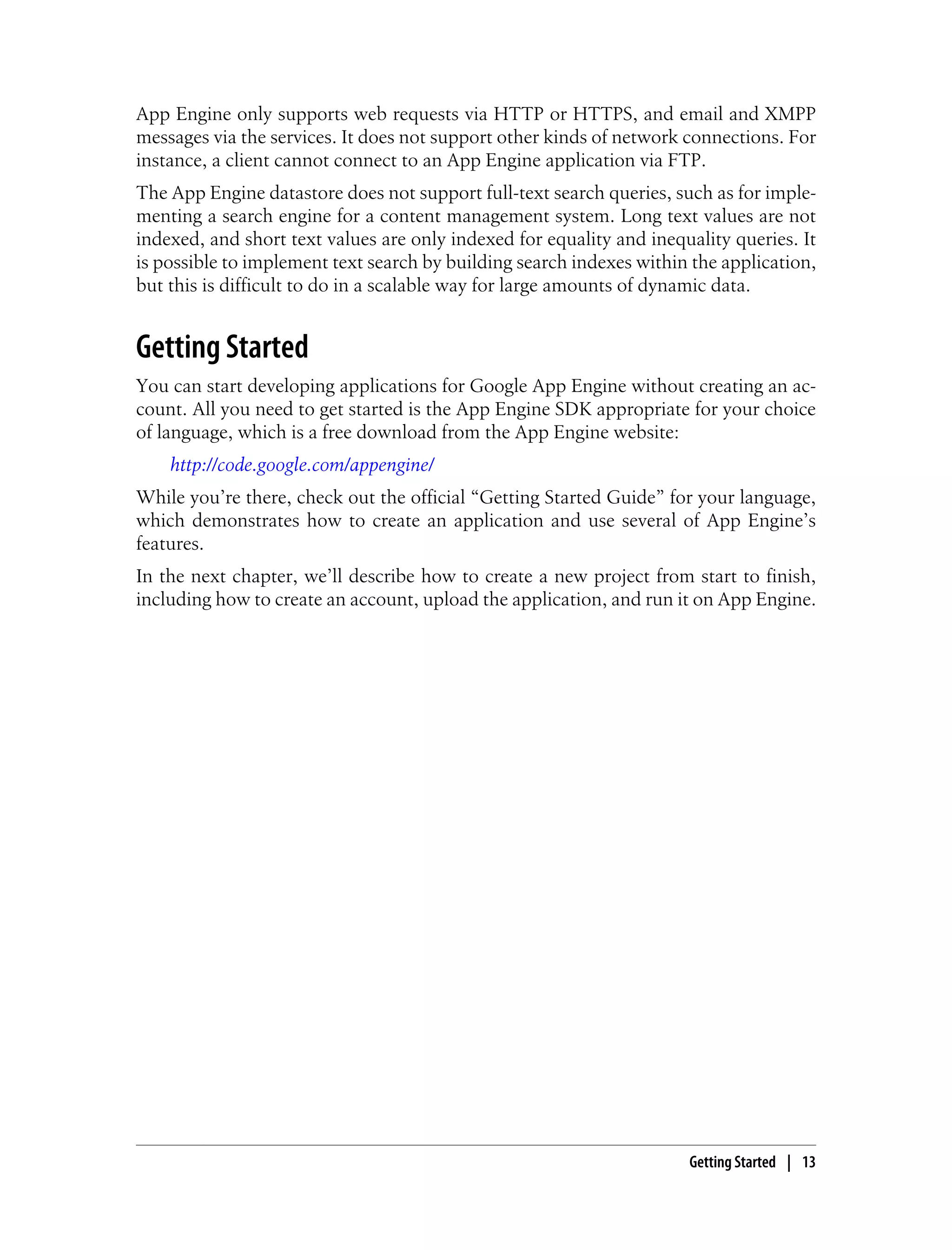

![if not user:

navbar = ('<p>Welcome! <a href="%s">Sign in or register</a> to customize.</p>'

% (users.create_login_url(self.request.path)))

tz_form = ''

else:

userprefs = models.get_userprefs()

navbar = ('<p>Welcome, %s! You can <a href="%s">sign out</a>.</p>'

% (user.nickname(), users.create_logout_url(self.request.path)))

tz_form = '''

<form action="/prefs" method="post">

<label for="tz_offset">

Timezone offset from UTC (can be negative):

</label>

<input name="tz_offset" id="tz_offset" type="text"

size="4" value="%d" />

<input type="submit" value="Set" />

</form>

''' % userprefs.tz_offset

time += datetime.timedelta(0, 0, 0, 0, 0, userprefs.tz_offset)

self.response.headers['Content-Type'] = 'text/html'

self.response.out.write('''

<html>

<head>

<title>The Time Is...</title>

</head>

<body>

%s

<p>The time is: %s</p>

%s

</body>

</html>

''' % (navbar, str(time), tz_form))

application = webapp.WSGIApplication([('/', MainPage)],

debug=True)

def main():

run_wsgi_app(application)

if __name__ == '__main__':

main()

To enable the preferences form, we need a request handler to parse the form data and

update the datastore. Let’s implement this as a new request handler script. Create a file

named prefs.py with the contents shown in Example 2-7.

Example 2-7. A new handler script, prefs.py, for the preferences form

from google.appengine.ext import webapp

from google.appengine.ext.webapp.util import run_wsgi_app

import models

class PrefsPage(webapp.RequestHandler):

def post(self):

Developing the Application | 35](https://image.slidesharecdn.com/programminggoogleappengine-100608200404-phpapp01/75/Programming-google-app-engine-56-2048.jpg)

![userprefs = models.get_userprefs()

try:

tz_offset = int(self.request.get('tz_offset'))

userprefs.tz_offset = tz_offset

userprefs.put()

except ValueError:

# User entered a value that wasn't an integer. Ignore for now.

pass

self.redirect('/')

application = webapp.WSGIApplication([('/prefs', PrefsPage)],

debug=True)

def main():

run_wsgi_app(application)

if __name__ == '__main__':

main()

This request handler handles HTTP POST requests to the URL /prefs, which is the

URL (“action”) and HTTP method used by the form. The handler calls the

get_userprefs() function from models.py to get the UserPrefs object for the current

user, which is either a new unsaved object with default values, or the object for an

existing entity. The handler parses the tz_offset parameter from the form data as an

integer, sets the property of the UserPrefs object, then saves the object to the datastore

by calling its put() method. The put() method creates the object if it doesn’t exist, or

updates the existing object.

If the user enters a noninteger in the form field, we don’t do anything. It’d be appro-

priate to return an error message, but we’ll leave this as is to keep the example simple.

Finally, edit app.yaml to map the handler script to the URL /prefs in the handlers:

section, as shown in Example 2-8.

Example 2-8. A new version of app.yaml mapping the URL /prefs, with login required

application: clock

version: 1

runtime: python

api_version: 1

handlers:

- url: /prefs

script: prefs.py

login: required

- url: /.*

script: main.py

The login: required line says that the user must be signed in to Google Accounts to

access the /prefs URL. If the user accesses the URL while not signed in, App Engine

automatically directs the user to the Google Accounts sign-in page, then redirects her

36 | Chapter 2: Creating an Application](https://image.slidesharecdn.com/programminggoogleappengine-100608200404-phpapp01/75/Programming-google-app-engine-57-2048.jpg)

![class MainHandler(webapp.RequestHandler):

def get(self):

host = self.request.headers['Host']

self.response.out.write('Host: %s' % host)

App IDs and Versions

Every App Engine application has an application ID that uniquely distinguishes the

app from all other applications. As described in Chapter 2, you can register an ID for

a new application using the Administration Console. Once you have an ID, you add it

to the app’s configuration so the developer tools know that the files in the app root

directory belong to the app with that ID.

The app’s configuration also includes a version identifier. Like the app ID, the version

identifier is associated with the app’s files when the app is uploaded. App Engine retains

one set of files and frontend configuration for each distinct version identifier used dur-

ing an upload. If you do not change the app version in the configuration when you

upload, the existing files for that version of the app are replaced.

Each distinct version of the app is accessible at its own domain name, of the following

form:

version-id.latest.app-id.appspot.com

When you have multiple versions of an app uploaded to App Engine, you can use the

Administration Console to select which version is the one you want the public to access.

The Console calls this the “default” version. When a user visits your Google Apps

domain (and configured subdomain), or the appspot.com domain without the version

ID, he sees the default version.

The appspot.com domain containing the version ID supports an additional domain part,

just like the default appspot.com domain:

anything.version-id.latest.app-id.appspot.com

Unless you explicitly prevent it, anyone who knows your application ID

and version identifiers can access any uploaded version of your appli-

cation using the appspot.com URLs. You can restrict access to nondefault

versions of the application using code that checks the domain of the

request and only allows authorized users to access the versioned do-

mains. You can’t restrict access to static files this way.

Another way to restrict access to nondefault versions is to use Google

Accounts authorization, described later in this chapter. You can restrict

access to app administrators while a version is in development, then

replace the configuration to remove the restriction just before making

that version the default version.

70 | Chapter 3: Handling Web Requests](https://image.slidesharecdn.com/programminggoogleappengine-100608200404-phpapp01/75/Programming-google-app-engine-91-2048.jpg)

![If you’d like browsers to reload a static file resource automatically every

time you launch a new major version of the app, you can use the mul-

tiversion URL handler just discussed, then use the CURRENT_VERSION_ID

environment variable as the “version” in the static file URLs:

self.response.out('<script src="/js/' +

os.environ['CURRENT_VERSION_ID'] +

'/code.js" />')

Static files in Java

As we saw earlier, the WAR directory structure for a Java web application keeps all

application code, JARs, and configuration in a subdirectory named WEB-INF/. Typi-

cally, files outside of WEB-INF/ represent resources that the user can access directly,

including static files and JSPs. The URL paths to these resources are equivalent to the

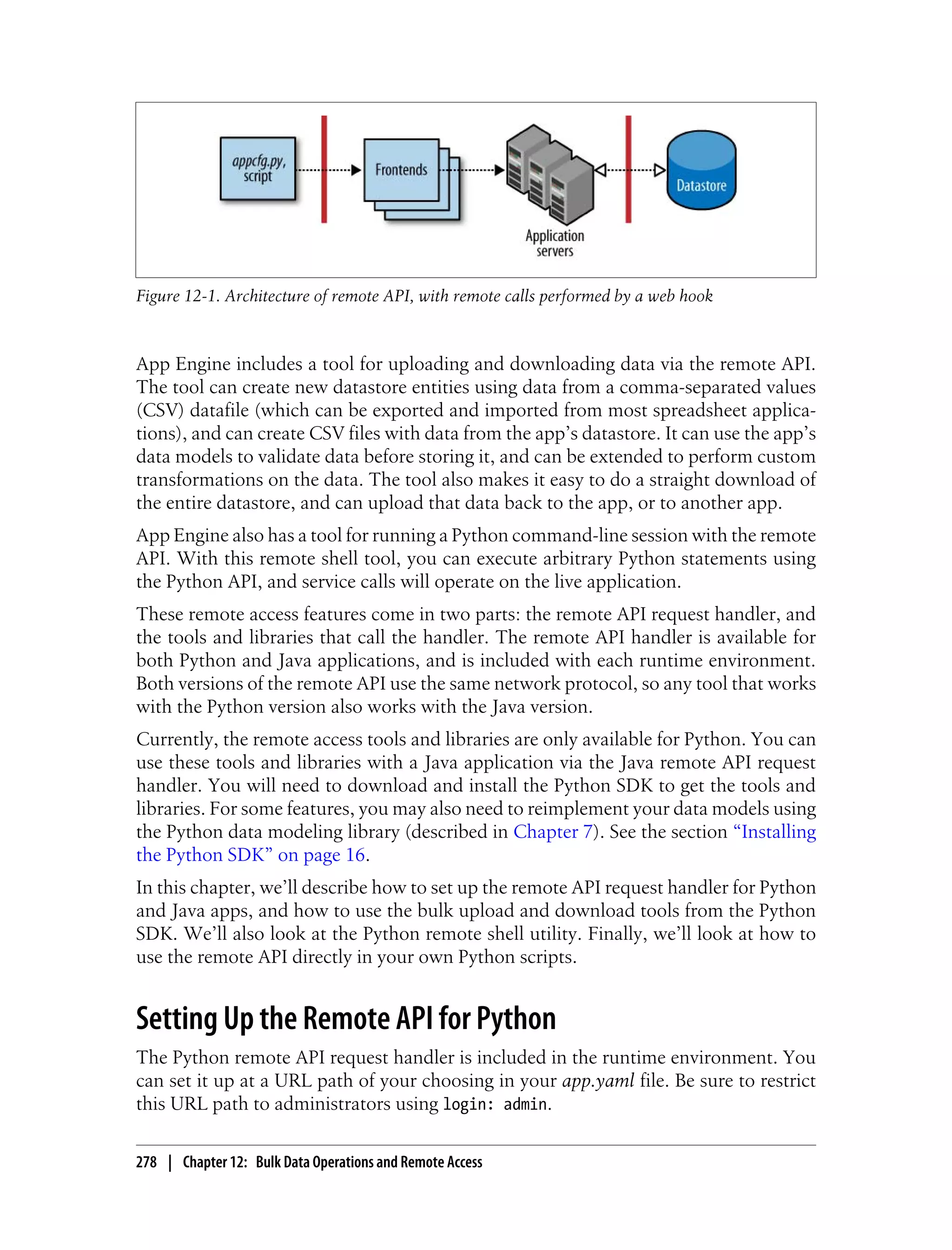

paths to these files within the WAR.

Say an app’s WAR has the following files:

main.jsp

forum/home.jsp

images/logo.png

images/cancelbutton.png

images/okbutton.png

terms.html

WEB-INF/classes/com/example/Forum.class

WEB-INF/classes/com/example/MainServlet.class

WEB-INF/classes/com/example/Message.class

WEB-INF/classes/com/example/UserPrefs.class

WEB-INF/lib/appengine-api.jar

This app has four static files: three PNG images and an HTML file named terms.html.

When the app is uploaded, these four files are pushed to the static file servers. The

frontends know to route requests for URL paths equivalent to these file paths (such

as /images/logo.png) to the static file servers.

The two .jsp files are assumed to be JSPs, and are compiled to servlet classes and

mapped to the URL paths equivalent to their file paths. Since these are application

code, they are handled by the application servers. The JSP source files themselves are

not pushed to the static file servers.

By default, all files in the WAR are pushed to the application servers, and are accessible

by the application code via the filesystem. This includes the files that are identified as

static files and pushed to the static file servers. In other words, all files are considered

resource files, and all files except for JSPs and the WEB-INF/ directory are considered

static files.

Configuring the Frontend | 79](https://image.slidesharecdn.com/programminggoogleappengine-100608200404-phpapp01/75/Programming-google-app-engine-100-2048.jpg)

![In practice, neither method of importing offers much advantage. Late importing may

actually be slower if the import is inside a loop or frequent code path, since importing

an already-imported module takes some time, even if it doesn’t load files or evaluate

module code. It’s best to import modules in the way that makes the most sense for code

clarity, typically at the top of each module (source file) that uses the module. Once

you’ve developed your app, if you notice you have a significant slowdown during im-

ports of the first request, you could consider importing selected modules in their code

paths if you can demonstrate that doing so would skip a lot of unneeded code in a

significant number of cases.

App Engine includes a feature similar to import caching to achieve similar savings for

the handler scripts themselves. In general, when the app server invokes a handler script,

it evaluates all of the script’s code, regardless of whether the script has been invoked

by the app instance in the past. But if the script defines a function named main() that

takes no arguments (or arguments with default values), App Engine treats the handler

script like a module. The first invocation evaluates the complete script as usual, but for

all subsequent invocations, App Engine just calls the main() function.

Consider an early version of the clock application we built in Chapter 2, repeated here

as Example 3-4. This handler script imports three modules, defines three global ele-

ments (a class, a global variable, and the main() function), then calls the main() function.

Example 3-4. A simple request handler illustrating the use of app caching

from google.appengine.ext import webapp

from google.appengine.ext.webapp.util import run_wsgi_app

import datetime

class MainPage(webapp.RequestHandler):

def get(self):

time = datetime.datetime.now()

self.response.headers['Content-Type'] = 'text/html'

self.response.out.write('<p>The time is: %s</p>' % str(time))

application = webapp.WSGIApplication([('/', MainPage)],

debug=True)

def main():

run_wsgi_app(application)

if __name__ == '__main__':

main()

The first time an app instance invokes this script, the full script is evaluated, thereby

importing the modules and defining the global elements in the script’s namespace

within the app instance. Evaluating the script also calls the main() function.

How the App Is Run | 91](https://image.slidesharecdn.com/programminggoogleappengine-100608200404-phpapp01/75/Programming-google-app-engine-112-2048.jpg)

![A property with the null value is not the same as an unset property. Consider the fol-

lowing Python code:

class Entity(db.Expando):

pass

a = Entity()

a.prop1 = 'abc'

a.prop2 = None

a.put()

b = Entity()

b.prop1 = 'def'

b.put()

This creates two entities of the kind Entity. Both entities have a property named

prop1. The first entity has a property named prop2; the second does not.

Of course, an unset property can be set later:

b.prop2 = 123

b.put()

# b now has a property named "prop2."

Similarly, a set property can be made unset. In the Python API, you delete the property

by deleting the attribute from the object, using the del keyword:

del b.prop2

b.put()

# b no longer has a property named "prop2."

In Java, the low-level datastore API’s Entity class has methods to set properties

(setProperty()) and unset properties (removeProperty()).

Multivalued Properties

As we mentioned earlier, a property can have multiple values. We’ll discuss the more

substantial aspects of multivalued properties when we talk about queries and data

modeling. But for now, it’s worth a brief mention.

A property can have one or more values. A property cannot have zero values; a property

without a value is simply unset. Each value for a property can be of a different type,

and can be the null value.

The datastore preserves the order of values as they are assigned. The Python API returns

the values in the same order as they were set.

In Python, a property with multiple values is represented as a single Python list value:

e.prop = [1, 2, 'a', None, 'b']

Property Values | 113](https://image.slidesharecdn.com/programminggoogleappengine-100608200404-phpapp01/75/Programming-google-app-engine-134-2048.jpg)

![Because a property must have at least one value, it is an error to assign

an empty list ([] in Python) to a property on an entity whose Python

class is based on the Expando class.

class Entity(db.Expando):

pass

e = Entity()

e.prop = [] # ERROR

In contrast, the Model base class includes a feature that automatically

translates between the empty list value and “no property set.” You’ll see

this feature in Chapter 7.

In the Java low-level datastore API, you can store multiple values for a property using

a Collection type. The low-level API returns the values as a java.util.List. The items

are stored in the order provided by the Collection type’s iterator. For many types, such

as SortedSet or TreeSet, this order is deterministic. For others, such as HashSet, it is

not. If the app needs the original data structure, it must convert the List returned by

the datastore to the appropriate type.

Keys and Key Objects

The key for an entity is a value that can be retrieved, passed around, and stored like

any other value. If you have the key for an entity, you can retrieve the entity from the

datastore quickly, much more quickly than with a datastore query. Keys can be stored

as property values, as an easy way for one entity to refer to another.

The Python API represents an entity key value as an instance of the Key class, in

the db package. To get the key for an entity, you call the entity object’s key() method.

The Key instance provides access to its several parts using accessor methods, including

the kind, key name (if any), and system-assigned ID (if the entity does not have a key

name).

The Java low-level API is similar: the getKey() method of the Entity class returns an

instance of the Key class.

When you construct a new entity object and do not provide a key name, the entity

object has a key, but the key does not yet have an ID. The ID is populated when the

entity object is saved to the datastore for the first time. You can get the key object prior

to saving the object, but it will be incomplete.

e = Entity()

e.prop = 123

k = e.key() # key is incomplete, has neither key name nor ID

kind = k.kind() # 'Entity'

e.put() # ID is assigned

114 | Chapter 4: Datastore Entities](https://image.slidesharecdn.com/programminggoogleappengine-100608200404-phpapp01/75/Programming-google-app-engine-135-2048.jpg)

![k_str = str(k)

# ...

k = db.Key(k_str)

And in Java:

String k_str = KeyFactory.keyToString(k);

// ...

Key k = KeyFactory.stringToKey(k_str);

The Java Key class’s toString() method does not return the key’s string encoding. You

must use KeyFactory.keyToString() to get the string encoding of a key.

Using Entities

Let’s look briefly at how to retrieve entities from the datastore using keys, how to inspect

the contents of entities, and how to update and delete entities. The API methods for

these features are straightforward.

Getting Entities Using Keys

Given a complete key for an entity, you can retrieve the entity from the datastore.

In the Python API, you can call the get() function in the db package with the Key object

as an argument:

from google.appengine.ext import db

k = db.Key('Entity', 'alphabeta')

e = db.get(k)

If you know the kind of the entity you are fetching, you can also use the get() class

method on the appropriate entity class. This does a bit of type checking, ensuring that

the key you provide is of the appropriate kind:

class Entity(db.Expando):

pass

e = Entity.get(k)

To fetch multiple entities in a batch, you can pass the keys to get() as a list. Given a

list, the method returns a list containing entity objects, with None values for keys that

do not have a corresponding entity in the datastore.

entities = db.get([k1, k2, k3])

116 | Chapter 4: Datastore Entities](https://image.slidesharecdn.com/programminggoogleappengine-100608200404-phpapp01/75/Programming-google-app-engine-137-2048.jpg)

![complete key (such as a key with a kind and a key name) and an entity already exists

with that key, the datastore replaces the existing entity with the new one.

If you want to test that an entity with a given key does not exist before

you create it, you can do so using the transaction API. You must use a

transaction to ensure that another process doesn’t create an entity with

that key after you test for it and before you create it. For more informa-

tion on transactions, see Chapter 6.

If you have several entity objects to save, you can save them all in one call using the

put() function in the db package. The put() function can also take a single entity object.

db.put(e)

db.put([e1, e2, e3])

As with a batch get, a batch put performs a single call to the service. The total size of

the call is subject to the API call limits for the datastore. The entity count is also subject

to a limit.

In Java, you can save entities using the put() method of a DatastoreService

instance. As with get(), the method takes a single Entity for a single put, or an

Iterable<Entity> for a batch put.

When the call to put() returns, the datastore is up to date, and all future queries in the

current request handler and other handlers will see the new data. The specifics of how

the datastore gets updated are discussed in detail in Chapter 6.

Deleting Entities

Deleting entities works similarly to putting entities. In Python, you can call the

delete() method on the entity object, or you can pass entity objects or Key objects to

the delete() function.

e = db.get('Entity', 'alphabeta')

e.delete()

db.delete(e)

db.delete([e1, e2, e3])

# Deleting without first fetching the entity:

k = db.Key('Entity', 'alphabeta')

db.delete(k)

In Java, you call the delete() method of the DatastoreService with either a single Key

or an Iterable<Key>.

As with gets and puts, a delete of multiple entities occurs in a single batch call to the

service, and is faster than making multiple service calls.

Using Entities | 119](https://image.slidesharecdn.com/programminggoogleappengine-100608200404-phpapp01/75/Programming-google-app-engine-140-2048.jpg)

![impossible to get the list of players for a particular trophy. (A query filter can’t match

patterns within string values.)

Another option is to record each trophy win in a separate property named after the

trophy. To get the list of players with a trophy, you just query for the existence of the

corresponding property. However, getting the list of trophies for a given player would

require either coding the names of all of the trophies in the display logic, or iterating

over all of the Player entity’s properties looking for trophy names.

With multivalued properties, we can store each trophy name as a separate value for the

trophies property. To access a list of all trophies for a player, we simply access the

property of the entity. To get a list of all players with a trophy, we use a query with an

equality filter on the property.

Here’s what this example looks like in Python:

p = Player.get_by_key_name(user_id)

p.trophies = ['Lava Polo Champion',

'World Building 2008, Bronze',

'Glarcon Fighter, 2nd class']

p.put()

# List all trophies for a player.

for trophy in p.trophies:

# ...

# Query all players that have a trophy.

q = Player.gql("WHERE trophies = 'Lava Polo Champion'")

for p in q:

# ...

MVPs in Python

The Python API represents the values of a multivalued property as a Python list. Each

member of the list must be of one of the supported datastore types.

class Entity(db.Expando):

pass

e = Entity()

e.prop = [ 'value1', 123, users.get_current_user() ]

Remember that list is not a datastore type; it is only the mechanism for manipulating

multivalued properties. A list cannot contain another list.

A property must have at least one value, otherwise the property does not exist. To

enforce this, the Python API does not allow you to assign an empty list to a property.

Notice that the API can’t do otherwise: if a property doesn’t exist, then the API cannot

know to represent the missing property as an empty list when the entity is retrieved

from the datastore. (This being Python, the API could return the empty list whenever

a nonexistent property is accessed, but that might be more trouble than it’s worth.)

154 | Chapter 5: Datastore Queries](https://image.slidesharecdn.com/programminggoogleappengine-100608200404-phpapp01/75/Programming-google-app-engine-175-2048.jpg)

![Because it is often useful for lists to behave like lists, including the ability to contain

zero items, the Python data modeling API provides a mechanism that supports assign-

ing the empty list to a property. We’ll look at this mechanism in Chapter 7.

MVPs and Equality Filters

As you’ve seen, when a multivalued property is the subject of an equality filter in a

query, the entity matches if any of the property’s values are equal to the filter value.

e1 = Entity()

e1.prop = [ 3.14, 'a', 'b' ]

e1.put()

e2 = Entity()

e2.prop = [ 'a', 1, 6 ]

e2.put()

# Returns e1 but not e2:

q = Entity.gql('WHERE prop = 3.14')

# Returns e2 but not e1:

q = Entity.gql('WHERE prop = 6')

# Returns both e1 and e2:

q = Entity.gql("WHERE prop = 'a'")

Recall that a query with a single equality filter uses an index that contains the keys of

every entity of the given kind with the given property and the property values. If an

entity has a single value for the property, the index contains one row that represents

the entity and the value. If an entity has multiple values for the property, the index

contains one row for each value. The index for this example is shown in Figure 5-14.

This brings us to the first of several odd-looking queries that nonetheless make sense

for multivalued properties. Since an equality filter is a membership test, it is possible

for multiple equality filters to use the same property with different values and still return

a result. An example in GQL:

SELECT * FROM Entity WHERE prop = 'a' AND prop = 'b'

App Engine uses the “merge join” algorithm described earlier for multiple equality

filters to satisfy this query using the prop single-property index. This query returns the

e1 entity because the entity key appears in two places in the index, once for each value

requested by the filters.

The way multivalued properties appear in an index gives us another way of thinking

about multivalued properties: an entity has one or more properties, each with a name

and a single value, and an entity can have multiple properties with the same name. The

API represents the values of multiple properties with the same name as a list of values

associated with that name.

Queries and Multivalued Properties | 155](https://image.slidesharecdn.com/programminggoogleappengine-100608200404-phpapp01/75/Programming-google-app-engine-176-2048.jpg)

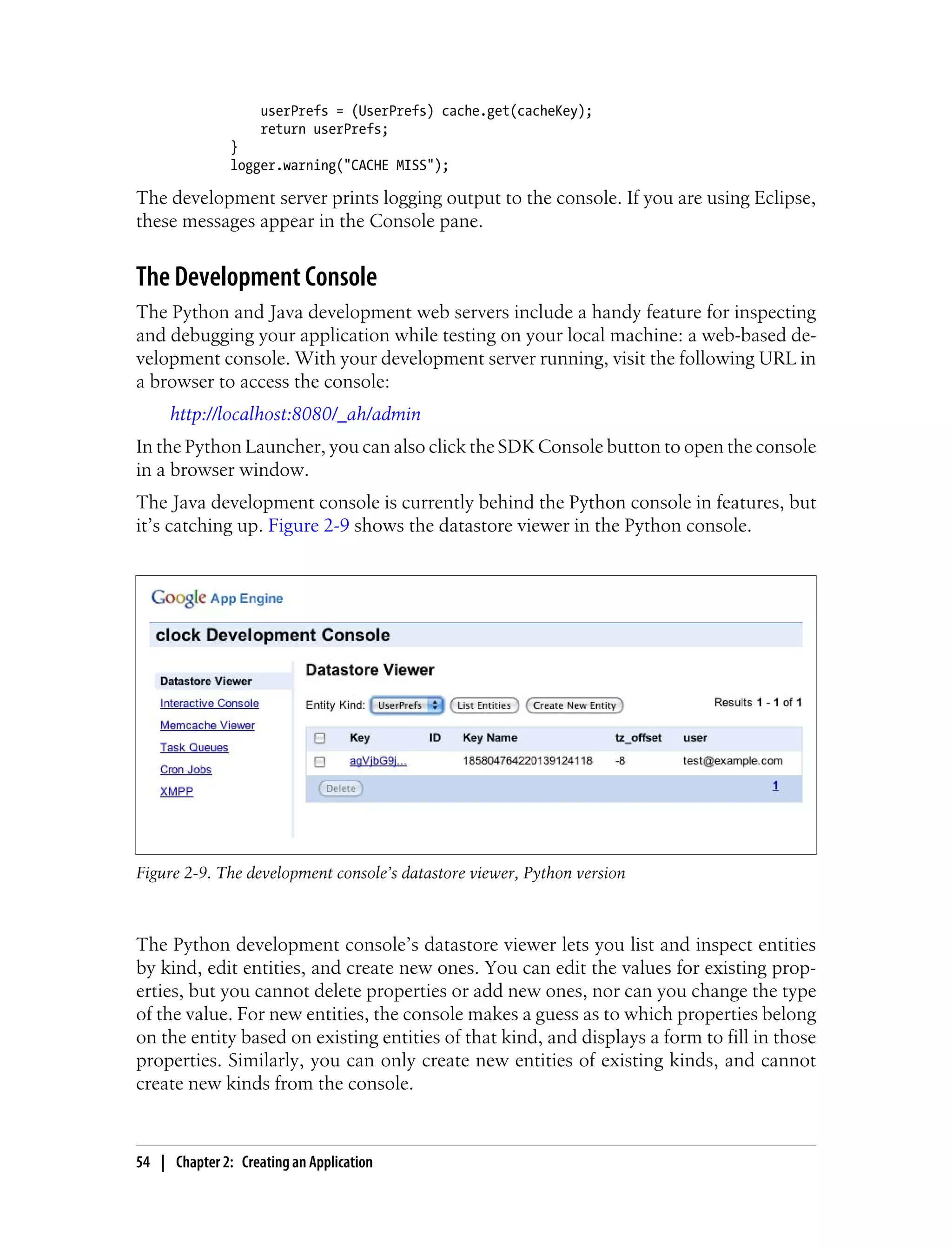

![Figure 5-14. An index of two entities with multiple values for the “prop” property, with results for

WHERE prop=‘a’

The datastore does not have a way to query for the exact set of values in a multivalued

property. You can use multiple equality filters to test that each of several values belongs

to the list, but there is no filter that ensures that those are the only values that belong

to the list, or that each value appears only once.

MVPs and Inequality Filters

Just as an equality filter tests that any value of the property is equal to the filter value,

an inequality filter tests that any value of the property meets the filter criterion.

e1 = Entity()

e1.prop = [ 1, 3, 5 ]

e1.put()

e2 = Entity()

e2.prop = [ 4, 6, 8 ]

e2.put()

# Returns e1 but not e2:

q = Entity.gql("WHERE prop < 2")

# Returns e2 but not e1:

q = Entity.gql("WHERE prop > 7")

# Returns both e1 and e2:

q = Entity.gql("WHERE prop > 3")

Figure 5-15 shows the index for this example, with the results of prop > 3 highlighted.

In the case of an inequality filter, it’s possible for the index scan to match rows for a

single entity multiple times. When this happens, the first occurrence of each key in the

156 | Chapter 5: Datastore Queries](https://image.slidesharecdn.com/programminggoogleappengine-100608200404-phpapp01/75/Programming-google-app-engine-177-2048.jpg)

![Figure 5-15. An index of two entities with multiple values for the “prop” property, with results for

WHERE prop > 3

index determines the order of the results. If the index used for the query sorts the

property in ascending order, the first occurrence is the smallest matching value. For

descending, it’s the largest. In the example above, prop > 3 returns e2 before e1 because

4 appears before 5 in the index.

MVPs and Sort Orders

To summarize things we know about how multivalued properties are indexed:

• A multivalued property appears in an index with one row per value.

• All rows in an index are sorted by the values, possibly distributing property values

for a single entity across the index.

• The first occurrence of an entity in an index scan determines its place in the result

set for a query.

Together, these facts explain what happens when a query orders its results by a mul-

tivalued property. When results are sorted by a multivalued property in ascending or-

der, the smallest value for the property determines its location in the results. When

results are sorted in descending order, the largest value for the property determines its

location.

This has a counterintuitive—but consistent—consequence:

e1 = Entity()

e1.prop = [ 1, 3, 5 ]

e1.put()

e2 = Entity()

Queries and Multivalued Properties | 157](https://image.slidesharecdn.com/programminggoogleappengine-100608200404-phpapp01/75/Programming-google-app-engine-178-2048.jpg)

![e2.prop = [ 2, 3, 4 ]

e2.put()

# Returns e1, e2:

q = Entity.gql("ORDER BY prop ASC")

# Also returns e1, e2:

q = Entity.gql("ORDER BY prop DESC")

Because e1 has both the smallest value and the largest value, it appears first in the result

set in ascending order and in descending order. See Figure 5-16.

Figure 5-16. Indexes of two entities with multiple values for the “prop” property, one ascending and

one descending

MVPs and the Query Planner

The query planner tries to be smart by ignoring aspects of the query that are redundant

or contradictory. For instance, a = 3 AND a = 4 would normally return no results, so

the query planner catches those cases and doesn’t bother doing work it doesn’t need

to do. However, most of these normalization techniques don’t apply to multivalued

properties. In this case, the query could be asking, “Does this MVP have a value that

is equal to 3 and another value equal to 4?” The datastore remembers which properties

are MVPs (even those that end up with one or zero values), and never takes a shortcut

that would produce incorrect results.

But there is one exception. A query that has both an equality filter and a sort order will

drop the sort order. If a query asks for a = 3 ORDER BY a DESC and a is a single-value

property, the sort order has no effect because all of the values in the result are identical.

For an MVP, however, a = 3 tests for membership, and two MVPs that meet that

condition are not necessarily identical.

158 | Chapter 5: Datastore Queries](https://image.slidesharecdn.com/programminggoogleappengine-100608200404-phpapp01/75/Programming-google-app-engine-179-2048.jpg)

![SELECT * FROM Message

WHERE ANCESTOR IS KEY(MessageBoard, 'The_Archonville_Times')

ORDER BY post_date DESC

LIMIT 10

Most queries that use an ancestor filter need custom indexes. There is one unusual

exception: a query does not need a custom index if the query also contains equality

filters on properties (and no inequality filters or sort orders). In this exceptional case,

the “merge join” algorithm can use a built-in index of keys along with the built-in

property indexes. In cases where the query would need a custom index anyway, the

query can match the ancestor to the keys in the custom index.

As we mentioned in Chapter 5, the datastore supports queries over entities of all kinds.

Kindless queries are limited to key filters and ancestor filters. Since ancestors can have

children of disparate kinds, kindless queries are useful for getting every child of a given

ancestor, regardless of kind:

SELECT * WHERE ANCESTOR IS KEY('MessageBoard', 'The_Archonville_Times')

While ancestor queries can be useful, don’t get carried away building

large ancestor trees. Remember that every entity with the same root

belongs to the same entity group, and more simultaneous users that

need to write to a group mean a greater likelihood of concurrency

failures.

If you want to model hierarchical relationships between entities without

the consequences of entity groups, consider using multivalued proper-

ties to store paths. For example, if there’s an entity whose path in your

hierarchy can be represented as /A/B/C/D, you can store this path as:

e.parents = ['/A', '/A/B', '/A/B/C'] Then you can perform a query

similar to an ancestor query on this property: ... WHERE parents = '/

A/B'.

What Can Happen in a Transaction

Entity groups ensure that the operations performed within a transaction see a consistent

view of the entities in a group. For this to work, a single transaction must limit its

operations to entities in a single group. The entity group determines the scope of the

transaction.

Within a transaction, you can fetch, update, or delete an entity using the entity’s key.

You can also create a new entity that either is a root entity of a new group that becomes

the subject of the transaction, or that has a member of the transaction’s entity group

as its parent.

You can perform queries over the entities of a single entity group in a transaction. A

query in a transaction must have an ancestor filter that matches the transaction’s entity

group. The results of the query, including both the indexes that provide the results as

168 | Chapter 6: Datastore Transactions](https://image.slidesharecdn.com/programminggoogleappengine-100608200404-phpapp01/75/Programming-google-app-engine-189-2048.jpg)

![p = Entity()

e = Entity(parent=p)

The parent key does not have to represent an entity that exists. You can construct a

fake key using db.Key.from_path() and use it as the parent:

p_key = db.Key.from_path('Entity', 'fake_parent')

e = Entity(parent=p_key)

You can use methods on the model object to get the key for the entity’s parent, or fetch

the entity’s parent from the datastore. These methods return None if the entity is a root

entity for its entity group.

p = e.parent()

p_key = e.parent_key()

Similarly, the Key object can return the Key of its parent, or None:

e_key = e.key()

p_key = e_key.parent()

The Python API uses function objects to handle transactions. To perform multiple

operations in a transaction, you define a function that executes the operations, then

you pass it to the run_in_transaction() function (in the ...ext.db module).

import datetime

from google.appengine.ext import db

class MessageBoard(db.Expando):

pass

class Message(db.Expando):

pass

def create_message_txn(board_name, message_name, message_title, message_text):

board = db.get(db.Key.from_path('MessageBoard', board_name))

if not board:

board = MessageBoard(key_name=board_name)

board.count = 0

message = Message(key_name=message_name, parent=board)

message.title = message_title

message.text = message_text

message.post_date = datetime.datetime.now()

board.count += 1

db.put([board, message])

# ...

try:

db.run_in_transaction(create_message_txn,

170 | Chapter 6: Datastore Transactions](https://image.slidesharecdn.com/programminggoogleappengine-100608200404-phpapp01/75/Programming-google-app-engine-191-2048.jpg)

![Batch Updates

When you read, create, update, or delete an entity, the runtime environment makes a

service call to the datastore. Each service call has some overhead, including serializing

and deserializing parameters and transmitting them between machines in the data cen-

ter. If you need to update multiple entities, you can save time by performing the updates

together as a batch in one service call.

We introduced batch calls in Chapter 4. Here’s a quick example of the Python batch

API:

# Creating multiple entities:

e1 = Message(key_name='m1', text='...')

e2 = Message(key_name='m2', text='...')

e3 = Message(key_name='m3', text='...')

message_keys = db.put([e1, e2, e3])

# Getting multiple entities using keys:

message_keys = [db.Key('Message', 'm1'),

db.Key('Message', 'm2'),

db.Key('Message', 'm2')]

messages = db.get(message_keys)

for message in messages:

# ...

# Deleting multiple entities:

db.delete(message_keys)

When the datastore receives a batch call, it bundles the keys or entities by their entity

groups, which it can determine from the keys. Then it dispatches calls to the datastore

machines responsible for each entity group. The datastore returns results to the app

when it has received all results from all machines.

If the call includes changes for multiple entities in a single entity group, those changes

are performed in a single transaction. There is no way to control this behavior, but

there’s no reason to do it any other way. It’s faster to commit multiple changes to a

group at once than to commit them individually, and no less likely to result in concur-

rency failures.

Each entity group involved in a batch update may fail to commit due to a concurrency

failure. If a concurrency failure occurs for any update, the API raises the concurrency

failure exception—even if updates to other groups were committed successfully.

Batch updates in disparate entity groups are performed in separate threads, possibly

by separate datastore machines, executed in parallel to one another. This can make

batch updates especially fast compared to performing each update one at a time.

Remember that if you use the batch API during a transaction, every entity or key in the

batch must use the same entity group as the rest of the transaction. Also, be aware that

App Engine puts a maximum size on service calls and responses, and the total size of

the call or its responses must not exceed these limits. The number of items in a batch

Batch Updates | 179](https://image.slidesharecdn.com/programminggoogleappengine-100608200404-phpapp01/75/Programming-google-app-engine-200-2048.jpg)

![s.song_key = 'C# min'

# The song_key attribute is stored as the

# datastore property named 'key'.

s.put()

You can declare that a property should contain only one of a fixed set of values by

providing a list of possible values as the choices argument. If None is not one of the

choices, this acts as a more restrictive form of required: the property must be set to one

of the valid choices using a keyword argument to the constructor.

_KEYS = ['C', 'C min', 'C 7',

'C#', 'C# min', 'C# 7',

# ...

]

class Song(db.Model):

song_key = db.StringProperty(choices=_KEYS)

s = Song(song_key='H min') # db.BadValueError

s = Song() # db.BadValueError, None is not an option

s = Song(song_key='C# min') # OK

All of these features validate the value assigned to a property, and raise a

db.BadValueError if the value does not meet the appropriate conditions. For even

greater control over value validation, you can define your own validation function and

assign it to a property declaration as the validator argument. The function should take

the value as an argument, and raise a db.BadValueError (or an exception of your choos-

ing) if the value should not be allowed.

def is_recent_year(val):

if val < 1923:

raise db.BadValueError

class Book(db.Model):

copyright_year = db.IntegerProperty(validator=is_recent_year)

b = Book(copyright_year=1922) # db.BadValueError

b = Book(copyright_year=1924) # OK

Nonindexed Properties

In Chapter 5, we mentioned that you can set properties of an entity in such a way that

they are available on the entity, but are considered unset for the purposes of indexes.

In the Python API, you establish a property as nonindexed using a property declaration.

If the property declaration is given an indexed argument of False, entities created with

that model class will set that property as nonindexed.

188 | Chapter 7: Data Modeling with Python](https://image.slidesharecdn.com/programminggoogleappengine-100608200404-phpapp01/75/Programming-google-app-engine-209-2048.jpg)

![is saved for the first time, and left untouched thereafter. If the current user is not signed

in, the value is set to None.

class BookReview(db.Model):

created_by_user = db.UserProperty(auto_current_user_add=True)

last_edited_by_user = db.UserProperty(auto_current_user=True)

br = BookReview()

br.put() # created_by_user and last_edited_by_user set

# ...

br.put() # last_edited_by_user set again

At first glance, it might seem reasonable to set a default for a

db.UserProperty this way:

from google.appengine.api import users

class BookReview(db.Model):

created_by_user = db.UserProperty(

default=users.get_current_user())

# WRONG

This would set the default value to be the user who is signed in when

the class is imported. Requests that use a cached instance of the appli-

cation may end up using a previous user instead of the current user as

the default.

To guard against this mistake, db.UserProperty does not accept

the default argument. You can use only auto_current_user or

auto_current_user_add to set an automatic value.

List Properties

The data modeling API provides a property declaration class for multivalued properties,

called db.ListProperty. This class ensures that every value for the property is of the

same type. You pass this type to the property declaration, like so:

class Book(db.Model):

tags = db.ListProperty(basestring)

b = Book()

b.tags = ['python', 'app engine', 'data']

The type argument to the db.ListProperty constructor must be the Python represen-

tation of one of the native datastore types. Refer back to Table 4-1 for a complete list.

The datastore does not distinguish between a multivalued property with no elements

and no property at all. As such, an undeclared property on a db.Expando object can’t

store the empty list. If it did, when the entity is loaded back into an object, the property

simply wouldn’t be there, potentially confusing code that’s expecting to find an empty

190 | Chapter 7: Data Modeling with Python](https://image.slidesharecdn.com/programminggoogleappengine-100608200404-phpapp01/75/Programming-google-app-engine-211-2048.jpg)

![list. To avoid confusion, db.Expando disallows assigning an empty list to an undeclared

property.

The db.ListProperty declaration makes it possible to keep an empty list value on a

multivalued property. The declaration interprets the state of an entity that doesn’t have

the declared property as the property being set to the empty list, and maintains that

distinction on the object. This also means that you cannot assign None to a declared list

property—but this isn’t of the expected type for the property anyway.

The datastore does distinguish between a property with a single value and a multivalued

property with a single value. An undeclared property on a db.Expando object can store

a list with one element, and represent it as a list value the next time the entity is loaded.

The example above declares a list of string values. (basestring is the Python base

type for str and unicode.) This case is so common that the API also provides

db.StringListProperty.

You can provide a default value to db.ListProperty using the default argument. If you

specify a nonempty list as the default, a shallow copy of the list value is made for each

new object that doesn’t have an initial value for the property.

db.ListProperty does not support the required validator, since every list property tech-

nically has a list value (possibly empty). If you wish to disallow the empty list, you can

provide your own validator function that does so:

def is_not_empty(lst):

if len(lst) == 0:

raise db.BadValueError

class Book(db.Model):

tags = db.ListProperty(basestring, validator=is_not_empty)

b = Book(tags=[]) # db.BadValueError

b = Book() # db.BadValueError, default "tags" is empty

b = Book(tags=['awesome']) # OK

db.ListProperty does not allow None as an element in the list because it doesn’t match

the required value type. It is possible to store None as an element in a list for an unde-

clared property.

Models and Schema Migration

Property declarations prevent the application from creating an invalid data object, or

assigning an invalid value to a property. If the application always uses the same model

classes to create and manipulate entities, then all entities in the datastore will be con-

sistent with the rules you establish using property declarations.

Property Declarations | 191](https://image.slidesharecdn.com/programminggoogleappengine-100608200404-phpapp01/75/Programming-google-app-engine-212-2048.jpg)

![b.put()

br = BookReview()

br.book = b # sets br's 'book' property to b's key

br.book = b.key() # same thing

We’ll explain what collection_name does in a moment.

The referenced object must have a “complete” key before it can be assigned to a refer-

ence property. A key is complete when it has all of its parts, including the string name

or the system-assigned numeric ID. If you create a new object without a key name, the

key is not complete until you save the object. When you save the object, the system

completes the key with a numeric ID. If you create the object (or a db.Key) with a key

name, the key is already complete, and you can use it for a reference without saving it

first.

b = Book()

br = BookReview()

br.book = b # db.BadValueError, b's key is not complete

b.put()

br.book = b # OK, b's key has system ID

b = Book(key_name='The_Grapes_of_Wrath')

br = BookReview()

br.book = b # OK, b's key has a name

db.put([b, br])

A model class must be defined before it can be the subject of a db.ReferenceProperty.

To declare a reference property that can refer to another instance of the same class, you

use a different declaration, db.SelfReferenceProperty.

class Book(db.Model):

previous_edition = db.SelfReferenceProperty()

b1 = Book()

b2 = Book()

b2.previous_edition = b1

Reference properties have a powerful and intuitive syntax for accessing referenced ob-

jects. When you access the value of a reference property, the property fetches the entity

from the datastore using the stored key, then returns it as an instance of its model class.

A referenced entity is loaded “lazily”: it is not fetched from the datastore until the

property is dereferenced.

br = db.get(book_review_key)

# br is a BookReview instance

title = br.book.title # fetches book, gets its title property

Modeling Relationships | 193](https://image.slidesharecdn.com/programminggoogleappengine-100608200404-phpapp01/75/Programming-google-app-engine-214-2048.jpg)

![The key list method is best suited for situations where there are fewer objects on one

side of the relationship than on the other, and the short list is small enough to store

directly on an entity. In this example, many players each belong to a few guilds; each

player has a short list of guilds, while each guild may have a long list of players. We

put the list property on the Player side of the relationship to keep the entity small, and

use queries to produce the long list when it is needed.

The link model method

The link model method represents each relationship as an entity. The relationship entity

has reference properties pointing to the related classes. You traverse the relationship

by going through the relationship entity via the back-references.

class Player(db.Model):

name = db.StringProperty()

class Guild(db.Model):

name = db.StringProperty()

class GuildMembership(db.Model):

player = db.ReferenceProperty(Player, collection_name='guild_memberships')

guild = db.ReferenceProperty(Guild, collection_name='player_memberships')

p = Player()

g = Guild()

db.put([p, g])

gm = GuildMembership(player=p, guild=g)

db.put(gm)

# Guilds to which a player belongs:

for gm in p.guild_memberships:

guild_name = gm.guild.name

# ...

# Players that belong to a guild:

for gm in g.player_memberships:

player_name = gm.player.name

# ...

This technique is similar to how you’d use “join tables” in a SQL database. It’s a good

choice if either side of the relationship may get too large to store on the entity itself.

You can also use the relationship entity to store metadata about the relationship (such

as when the player joined the guild), or model more complex relationships between

multiple classes.

The link model method is more expensive than the key list method. It requires fetching

the relationship entity to access the related object.

Modeling Relationships | 197](https://image.slidesharecdn.com/programminggoogleappengine-100608200404-phpapp01/75/Programming-google-app-engine-218-2048.jpg)

![self.first_name = first_name

self.surname = surname

def is_valid(self):

return (isinstance(self.first_name, unicode)

and isinstance(self.surname, unicode)

and len(self.surname) >= 6)

class PlayerNameProperty(db.Property):

data_type = basestring

def validate(self, value):

value = super(PlayerNameProperty, self).validate(value)

if value is not None:

if not isinstance(value, PlayerName):

raise db.BadValueError('Property %s must be a PlayerName.' %

(self.name))

# Let the data class have a say in validity.

if not value.is_valid():

raise db.BadValueError('Property %s must be a valid PlayerName.' %

self.name)

# Disallow the serialization delimiter in the first field.

if value.surname.find('|') != -1:

raise db.BadValueError(('PlayerName surname in property %s cannot ' +

'contain a "|".') % self.name)

return value

def get_value_for_datastore(self, model_instance):

# Convert the data object's PlayerName to a unicode.

return (getattr(model_instance, self.name).surname + u'|'

+ getattr(model_instance, self.name).first_name)

def make_value_for_datastore(self, value):

# Convert a unicode to a PlayerName.

i = value.find(u'|')

return PlayerName(first_name=value[i+1:],

surname=value[:i])

And here’s how you’d use it:

p = Player()

p.player_name = PlayerName('Ned', 'Nederlander')

p.player_name = PlayerName('Ned', 'Neder|lander')

# db.BadValueError, surname contains serialization delimiter

p.player_name = PlayerName('Ned', 'Neder')

# db.BadValueError, PlayerName.is_valid() == False

Here, the application value type is a PlayerName instance, and the datastore value type

is that value encoded as a Unicode string. The encoding format is the surname field,

followed by a delimiter, followed by the first_name field. We disallow the delimiter

character in the surname using the validate() method. (Instead of disallowing

Creating Your Own Property Classes | 203](https://image.slidesharecdn.com/programminggoogleappengine-100608200404-phpapp01/75/Programming-google-app-engine-224-2048.jpg)

![selects the requested fields and assembles the results. This is only true if one of the

requested fields is a datastore property, and is not true if the only field is a key field (@Id).

If the query is for one field, each result is a value of the type of that field. If the query

is for multiple fields, each result is an Object[] whose elements are the field values in

the order specified in the query.

Query query = em.createQuery("SELECT isbn, title, author FROM Book");

// Fetch complete Book objects, then

// produce result objects from 3 fields

// of each result

@SuppressWarnings("unchecked")

List<Object[]> results = (List<Object[]>) query.getResultList();

for (Object[] result : results) {

String isbn = result[0];

String title = result[1];

String author = result[2];

// ...

}

You specify filters on fields using a WHERE clause and one or more conditions:

SELECT b FROM Book b WHERE author = "John Steinbeck"

AND copyrightYear >= 1940

To filter on the entity key, refer to the field that represents the key in the data class (the

@Id field):

SELECT b FROM Book b WHERE author = "John Steinbeck"

AND isbn > :firstKeyToFetch

You can perform an ancestor filter by establishing a parent key field (as we did in the

previous section) and referring to that field in the query:

SELECT br FROM BookReview br WHERE bookKey = :pk

As with find(), you can use any of the four key types with parameterized queries, but

the most portable way is to use the type used in the class.

You specify sort orders using an ORDER BY clause. Multiple sort orders are comma-

delimited. Each sort order can have a direction of ASC (the default) or DESC.

SELECT b FROM Book b ORDER BY rating DESC title

The App Engine implementation of JPQL includes a couple of additional tricks that

the datastore can support natively. One such trick is the string prefix trick:

SELECT b FROM Book b WHERE title LIKE 'The Grape%'

The implementation translates this to WHERE title >= 'The Grape', which does the

same thing: it returns all books with a title that begins with the string The Grape, in-

cluding "The Grape", "The Grapefruit", and "The Grapes of Wrath".

Queries and JPQL | 219](https://image.slidesharecdn.com/programminggoogleappengine-100608200404-phpapp01/75/Programming-google-app-engine-240-2048.jpg)

![Keys are strings. The API also accepts a memcached-style tuple of a hash code and a string

as a key for compatibility, but it ignores the hash code. The string can be any size;

strings larger than 250 bytes are hashed to 250 bytes.

Values can be any Python value that can be serialized using the pickle module. This

includes most simple values, and data structures and objects whose members are

pickleable values. Pretty much any db.Model instance falls into this category. You can

check the documentation for the pickle module for a more complete description.

Setting and Getting Values in Python

To store a value in memcache, you call the set() function (or method, if you’re using

the Client class) with the key and the value:

from google.appengine.api import memcache

# headlines is a pickle-able object

headlines = ['...', '...', '...']

success = memcache.set("headlines", headlines)

if not success:

# Problem accessing memcache...

set() returns True on success, or False if the value could not be stored.

If there already exists a value with the given key, set() overwrites it. If there is no value

for the key, set() sets it.

To set a value only if there is no value for the key, you call the add() function with

similar arguments:

success = memcache.add("headlines", headlines)

if not success:

# Key already set, or another problem setting...

add() works like set(), but does not update the value and returns False if a value is

currently set for the given key.

To update a value only if it’s already set, you call the replace() function, also with

similar arguments:

success = memcache.replace("headlines", headlines)

if not success:

# Key not set, or another problem setting...

To get a value, you call the get() method with the key:

headlines = memcache.get("headlines")

if headlines is None:

# Key not set, or another problem getting...

get() returns the value, or None if there is no value set for the given key.

The Python Memcache API | 229](https://image.slidesharecdn.com/programminggoogleappengine-100608200404-phpapp01/75/Programming-google-app-engine-250-2048.jpg)

![Setting and Getting Multiple Values

You can set and get multiple values at a time using the batch interface. A batch call to

memcache is performed as a single call to the service, which is faster than multiple

individual calls for many items. Like the datastore batch operations, the total amount

of data sent to or received from memcache in a single call must not exceed one

megabyte.

set_multi(), add_multi(), and replace_multi() are similar to their singular counter-

parts, except instead of taking a key and a value as arguments, they take a Python

mapping (such as a dict) of keys to values.

article_summaries = {'article00174': '...',

'article05234': '...',

'article15820': '...',

}

failed_keys = memcache.set_multi(article_summaries)

if failed_keys:

# One or more keys failed to update...

These methods return a list of keys that failed to be set. A completely successful batch

update returns the empty list.

To get multiple values in a single batch, you call the get_multi() method with a list of

keys:

article_summary_keys = ['article00174',

'article05234',

'article15820',

]

article_summaries = memcache.get_multi(article_summary_keys)

get_multi() returns a dict of keys and values that were present and retrieved

successfully.

All of the batch operations accept a key_prefix keyword argument that prepends every

key with the given prefix string. When used with get_multi(), the keys on the returned

values do not include the prefix.

article_summaries = {'00174': '...',

'05234': '...',

'15820': '...',

}

failed_keys = memcache.set_multi(article_summaries,

key_prefix='article')

if failed_keys:

# ...

# ...

article_summary_keys = ['00174',

230 | Chapter 9: The Memory Cache](https://image.slidesharecdn.com/programminggoogleappengine-100608200404-phpapp01/75/Programming-google-app-engine-251-2048.jpg)

!['05234',

'15820',

]

article_summaries = memcache.get_multi(article_summary_keys,

key_prefix='article')

Batch calls to the memcache service are not atomic actions.

set_multi() may be successful for some items and not others.

get_multi() will return the data seen at the time of each individual get,

and does not guarantee that all retrieved data is read at the same time.

Memcache Namespaces

You can specify an optional namespace for a memcache key with any setter or getter

call. Namespaces let you segment the key space by the kind of thing you are storing,

so you don’t have to worry about key collisions between disparate uses of the cache.

All of the setter and getter functions accept a namespace argument, a string that declares

the namespace for all keys involved in the call. The batch functions use one namespace

for all keys in the batch.

article_summaries = {'article00174': '...',

'article05234': '...',

'article15820': '...',

}

failed_keys = memcache.set_multi(article_summaries,

namespace='News')

if failed_keys:

# ...

# ...

summary = memcache.get('article05234',

namespace='News')

Namespaces are different from key prefixes in batch calls. Key prefixes merely prepend

keys in a batch with a common string, as a convenience for batch processing. The

namespace is a separate part of the key, and must be specified with an explicit

namespace argument in all calls.

Cache Expiration

You can tell the memcache to delete (or evict) a value automatically after a period of

time when you set or update the value.

All of the setter methods accept a time argument. Its value is either a number of seconds

from the current time up to one month (up to 2,678,400 seconds), or it is a Unix epoch

time (seconds since January 1, 1970, such as returned by time.time()) in the future.

The Python Memcache API | 231](https://image.slidesharecdn.com/programminggoogleappengine-100608200404-phpapp01/75/Programming-google-app-engine-252-2048.jpg)

![The callback function is called without arguments. But a common use for a callback

function is to process the results of the fetch, so the function needs access to the RPC

object. There are several ways to give the callback function access to the object.

One way is to use a bound method, a feature of Python that lets you refer to a method

of an instance of a class as a callable object. Define a class with a method that processes

the results of the fetch, using an RPC object stored as a member of the class. Create an

instance of the class, then create the RPC object, assigning the bound method as the

callback by passing it as the callback argument to the create_rpc() function. Exam-

ple 10-6 demonstrates this technique.

Example 10-6. Using an object method as a callback to access the RPC object

from google.appengine.api import urlfetch

# ...

class CatalogUpdater(object):

def prepare_urlfetch_rpc(self):

self.rpc = urlfetch.create_rpc(callback=self.process_results)

urlfetch.make_fetch_call(self.rpc, 'http://api.example.com/catalog_feed')

return self.rpc

def process_results(self):

try:

results = self.rpc.get_result()

# Process results.content...

except urlfetch.Error, e:

# Handle urlfetch errors...

class MainHandler(webapp.RequestHandler):

def get(self):

rpcs = []

catalog_updater = CatalogUpdater(self.response)

rpcs.append(catalog_updater.prepare_urlfetch_rpc())

# ...

for rpc in rpcs:

rpc.wait()

Another way to give the callback access to the RPC object is to use a nested function

(sometimes called a closure). If the callback function is defined in the same scope as a

variable whose value is the RPC object, the function can access the variable when it is

called. To allow for this style of calling, the URL Fetch API lets you assign the callback

function to the RPC object’s callback property after the object has been created.

Example 10-7 demonstrates the use of a nested function as a callback. The

create_callback() function creates a function object, a lambda expression, that calls

another function with the RPC object as an argument. This function object is assigned

to the callback property of the RPC object.

248 | Chapter 10: Fetching URLs and Web Resources](https://image.slidesharecdn.com/programminggoogleappengine-100608200404-phpapp01/75/Programming-google-app-engine-269-2048.jpg)

![Example 11-1. An example of sending an email message in Python, using several features

from google.appengine.api import mail

def send_registration_key(user_addr, software_key_data):

message_body = '''

Thank you for purchasing The Example App, the best

example on the market! Your registration key is attached

to this email.

To install your key, download the attachment, then select

"Register..." from the Help menu. Select the key file, then

click "Register".

You can download the app at any time from:

http://www.example.com/downloads/

Thanks again!

The Example Team

'''

html_message_body = '''

<p>Thank you for purchasing The Example App, the best

example on the market! Your registration key is attached

to this email.</p>

<p>To install your key, download the attachment, then select

<b>Register...</b> from the <b>Help</b> menu. Select the key file, then

click <b>Register</b>.</p>

<p>You can download the app at any time from:</p>

<p>

<a href="http://www.example.com/downloads/">

http://www.example.com/downloads/

</a>

</p>

<p>Thanks again!</p>

<p>The Example Team<br />

<img src="http://www.example.com/images/logo_email.gif" /></p>

'''

message = mail.EmailMessage(

sender='The Example Team <admin@example.com>',

to=user_addr,

subject='Your Example Registration Key',

body=message_body,

html=html_message_body,

attachments=[('example_key.txt', software_key_data)])

message.send()

260 | Chapter 11: Sending and Receiving Mail and Instant Messages](https://image.slidesharecdn.com/programminggoogleappengine-100608200404-phpapp01/75/Programming-google-app-engine-281-2048.jpg)

![// There was an error contacting the Mail service.

// ...

}

As shown here, you call methods on the MimeMessage to set fields and to add recipients

and content. The simplest message has a sender (setFrom()), one “To” recipient

(addRecipient()), a subject (setSubject()), and a plain-text message body (setText()).

The setFrom() method takes an InternetAddress. You can create an InternetAddress

with just the email address (a String) or the address and a human-readable name as

arguments to the constructor. The email address of the sender must meet the require-

ments described earlier. You can use any string for the human-readable name.

The addRecipient() method takes a recipient type and an InternetAddress. The

allowed recipient types are Message.RecipientType.TO (“To,” a primary recipient),

Message.RecipientType.CC (“Cc” or “carbon-copy,” a secondary recipient), and

Message.RecipientType.BCC (“Bcc” or “blind carbon-copy,” where the recipient is sent

the message but the address does not appear in the message content). You can call

addRecipient() multiple times to add multiple recipients of any type.

The setText() method sets the plain-text body for the message. To include an

HTML version of the message body for mail readers that support HTML, you create a

MimeMultipart object, then create a MimeBodyPart for the plain-text body and another

for the HTML body and add them to the MimeMultipart. You then make the

MimeMultipart the content of the MimeMessage:

import javax.mail.Multipart;

import javax.mail.internet.MimeBodyPart;

import javax.mail.internet.MimeMultipart;

// ...

String textBody = "...text...";

String htmlBody = "...HTML...";

Multipart multipart = new MimeMultipart();

MimeBodyPart textPart = new MimeBodyPart();

textPart.setContent(textBody, "text/plain");

multipart.addBodyPart(htmlPart);

MimeBodyPart htmlPart = new MimeBodyPart();

htmlPart.setContent(htmlBody, "text/html");

multipart.addBodyPart(htmlPart);

message.setContent(multipart);

You attach files to the email message in a similar way:

Multipart multipart = new MimeMultipart();

// ...

byte[] fileData = getBrochureData();

String fileName = "brochure.pdf";

262 | Chapter 11: Sending and Receiving Mail and Instant Messages](https://image.slidesharecdn.com/programminggoogleappengine-100608200404-phpapp01/75/Programming-google-app-engine-283-2048.jpg)

![The development server console (http://localhost:8080/_ah/admin/) includes a fea-

ture for simulating incoming email by submitting a web form. The development server

cannot receive actual email messages.

If the app has the incoming mail service enabled but does not have a

request handler for the appropriate URL, or if the request handler re-

turns an HTTP response code other than 200 for the request, the mes-

sage gets “bounced” and the sender receives an error email message.

Receiving Email in Python

To receive email in Python, you map the incoming email URL path to a script handler

in app.yaml file:

handlers:

- url: /_ah/mail/.+

script: handle_email.py

The app address used for the message is included in the URL path, so you can set up

separate handlers for different addresses directly in the configuration:

handlers:

- url: /_ah/mail/support%40.*app-id.appspotmail.com

script: support_contact.py

- url: /_ah/mail/.+

script: handle_email.py

Email addresses are URL-encoded in the final URL, so this pattern uses %40 to represent

an @ symbol. Also notice you must include a .* before the application ID when using

this technique, so the pattern works for messages sent to version-specific addresses

(such as support@dev.latest.app-id.appspotmail.com).

The Python SDK includes a class for parsing the POST content into a convenient object,

called InboundEmailMessage (in the google.appengine.api.mail package). It takes the

multipart MIME data (the POST body) as an argument to its constructor. Here’s an

example using the webapp framework:

from google.appengine.api import mail

from google.appengine.ext import webapp

from google.appengine.ext.webapp.util import run_wsgi_app

class IncomingMailHandler(webapp.RequestHandler):

def post(self):

message = mail.InboundEmailMessage(self.request.body)

# sender = message.sender

# recipients = message.to

# body = list(message.bodies(content_type='text/plain'))[0]

# ...

application = webapp.WSGIApplication([('/_ah/mail/.+', IncomingMailHandler)],

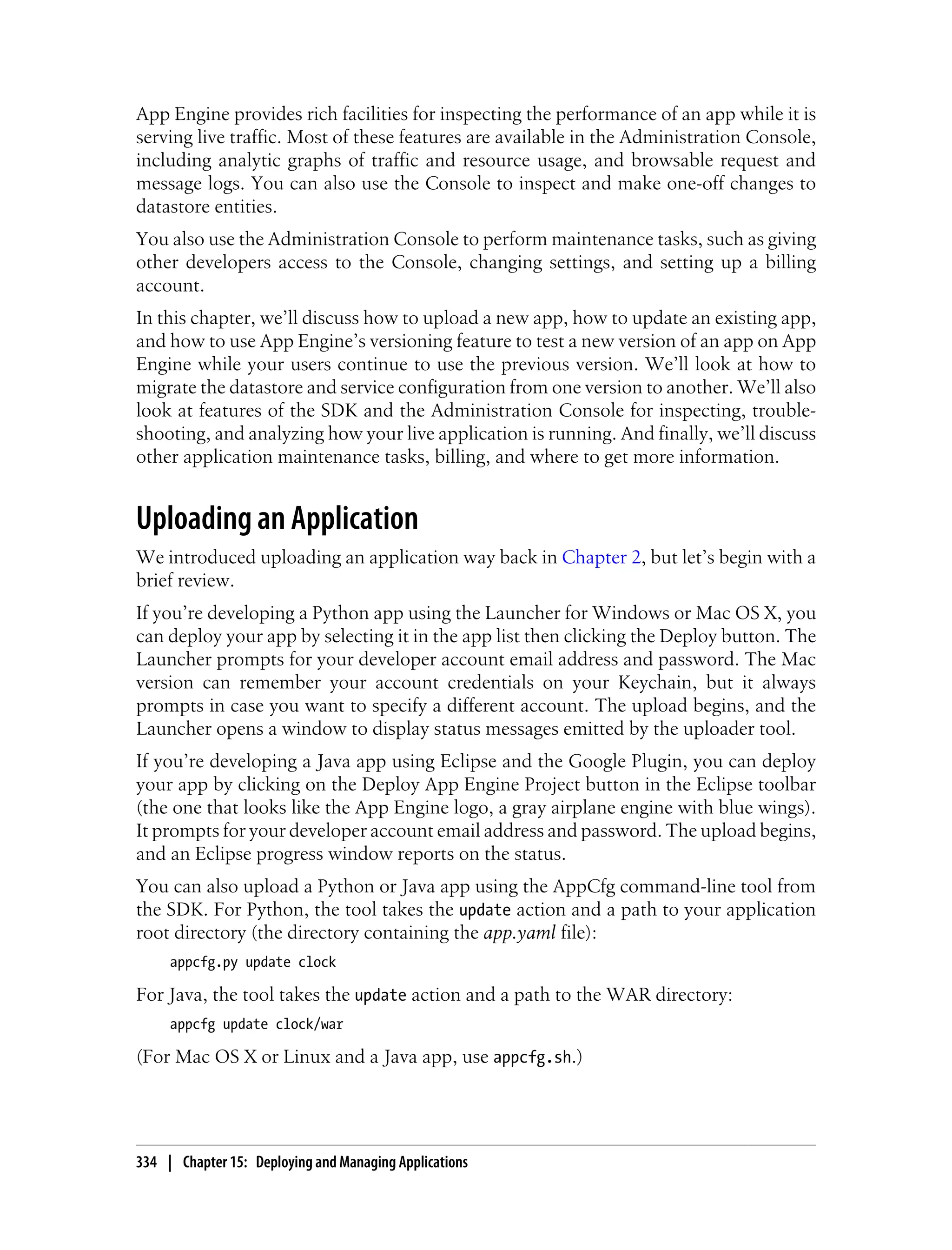

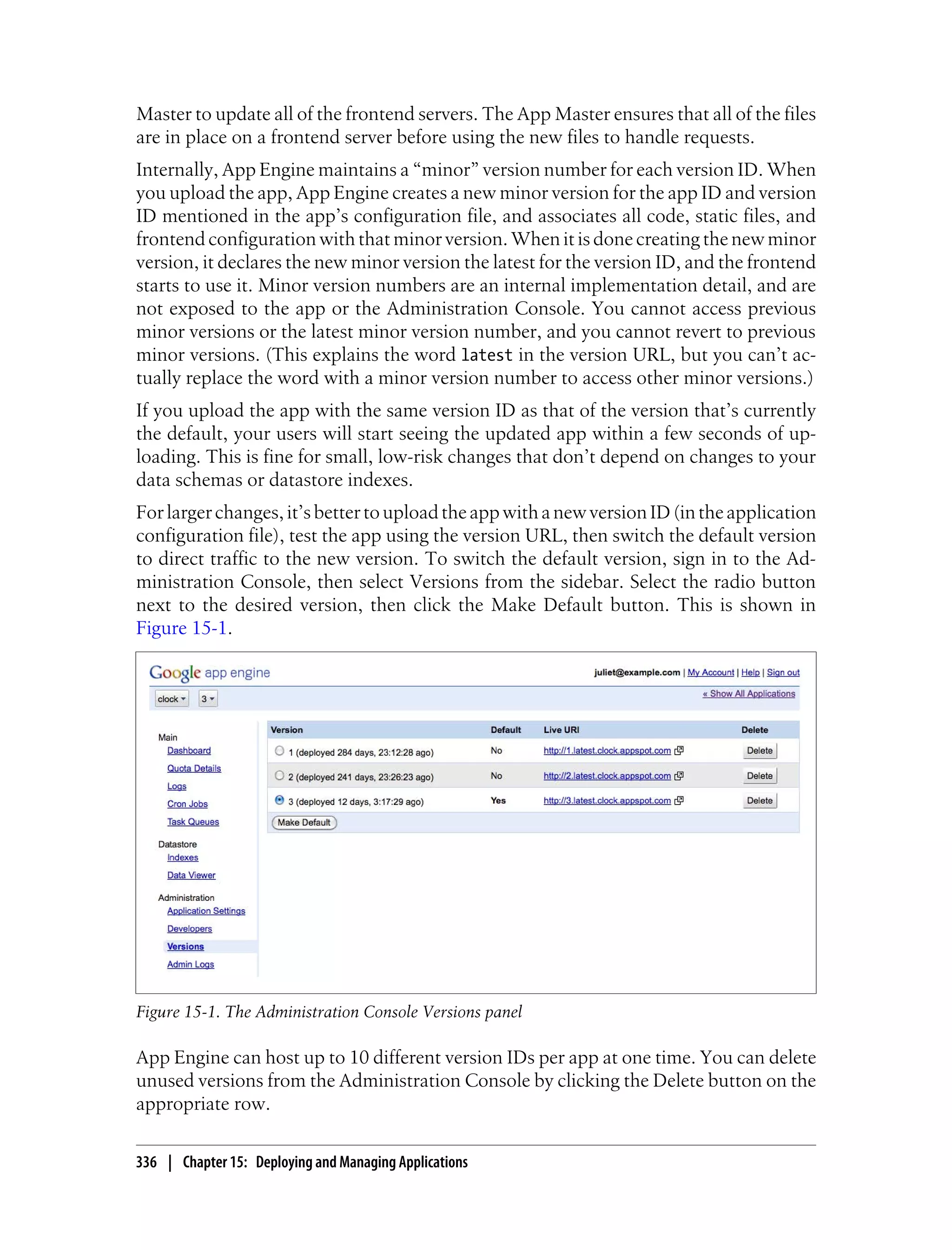

debug=True)