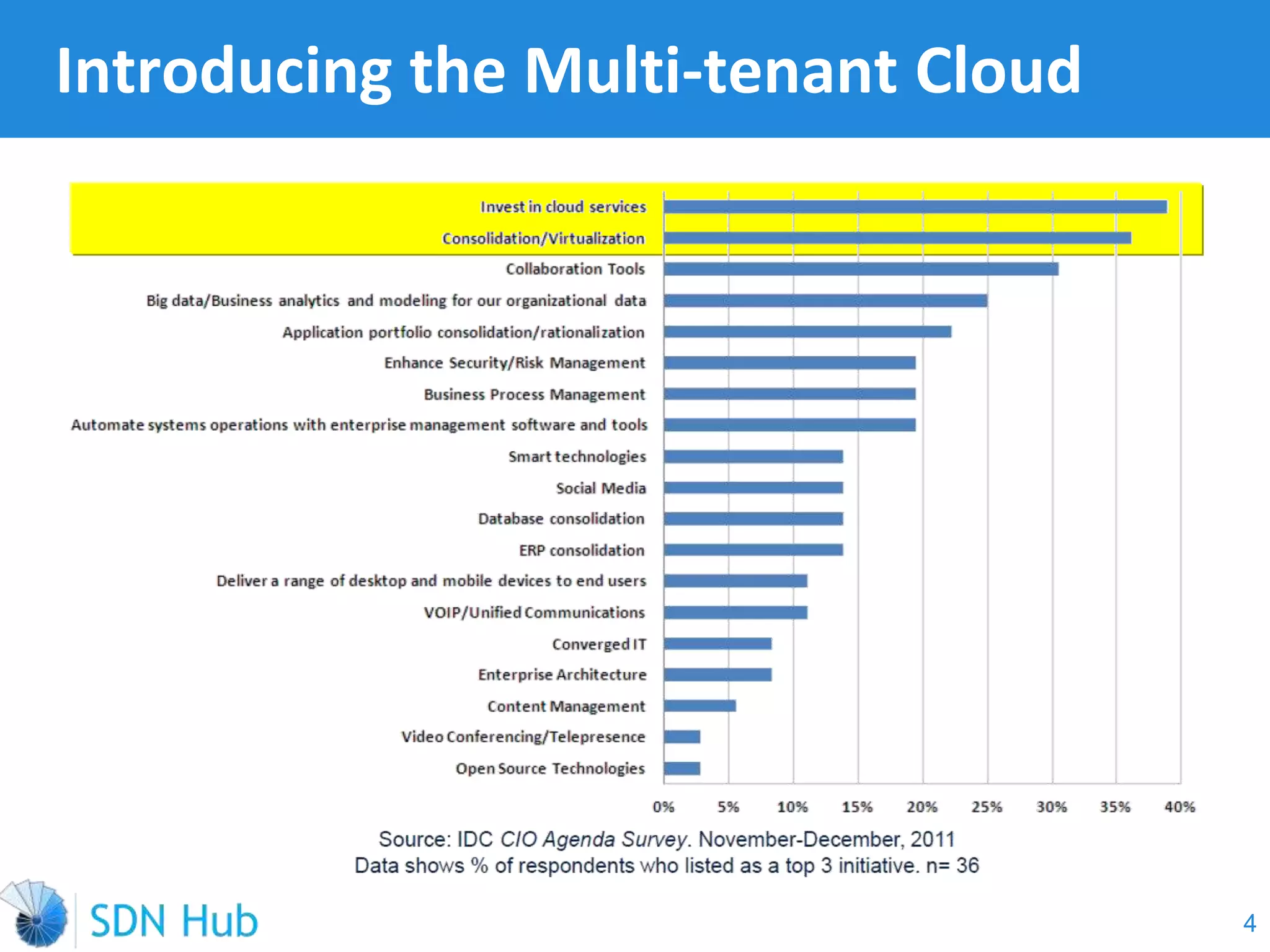

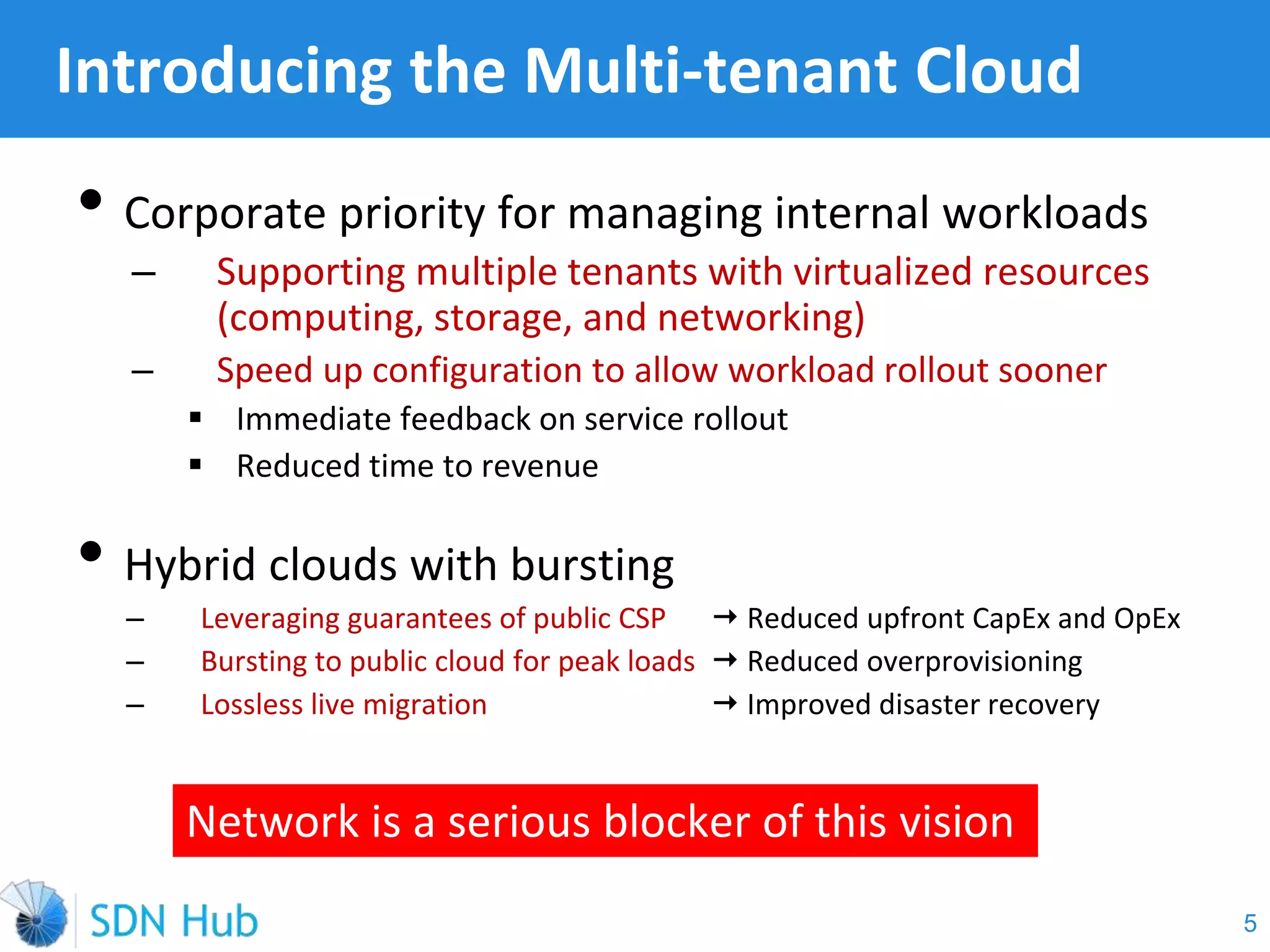

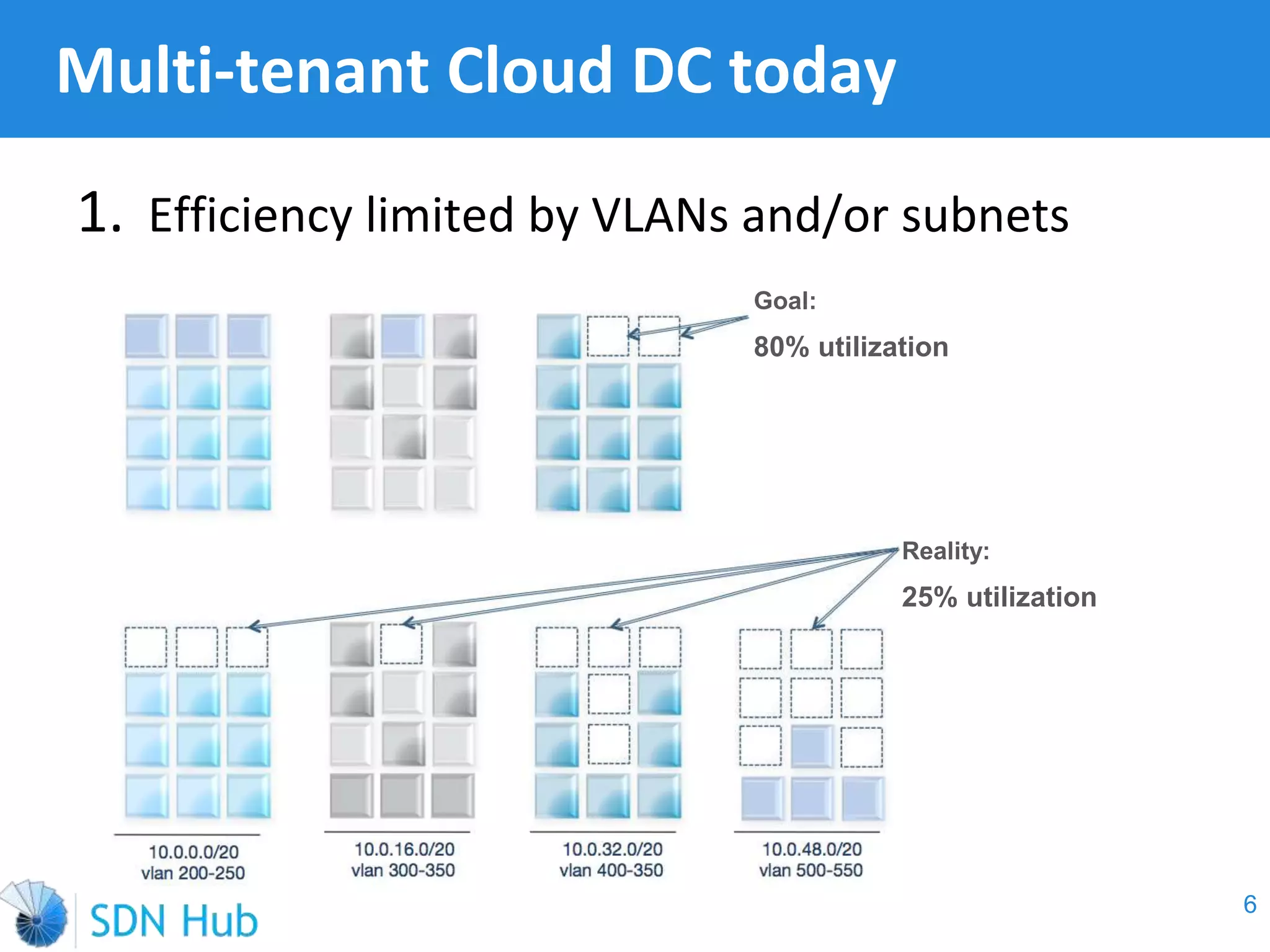

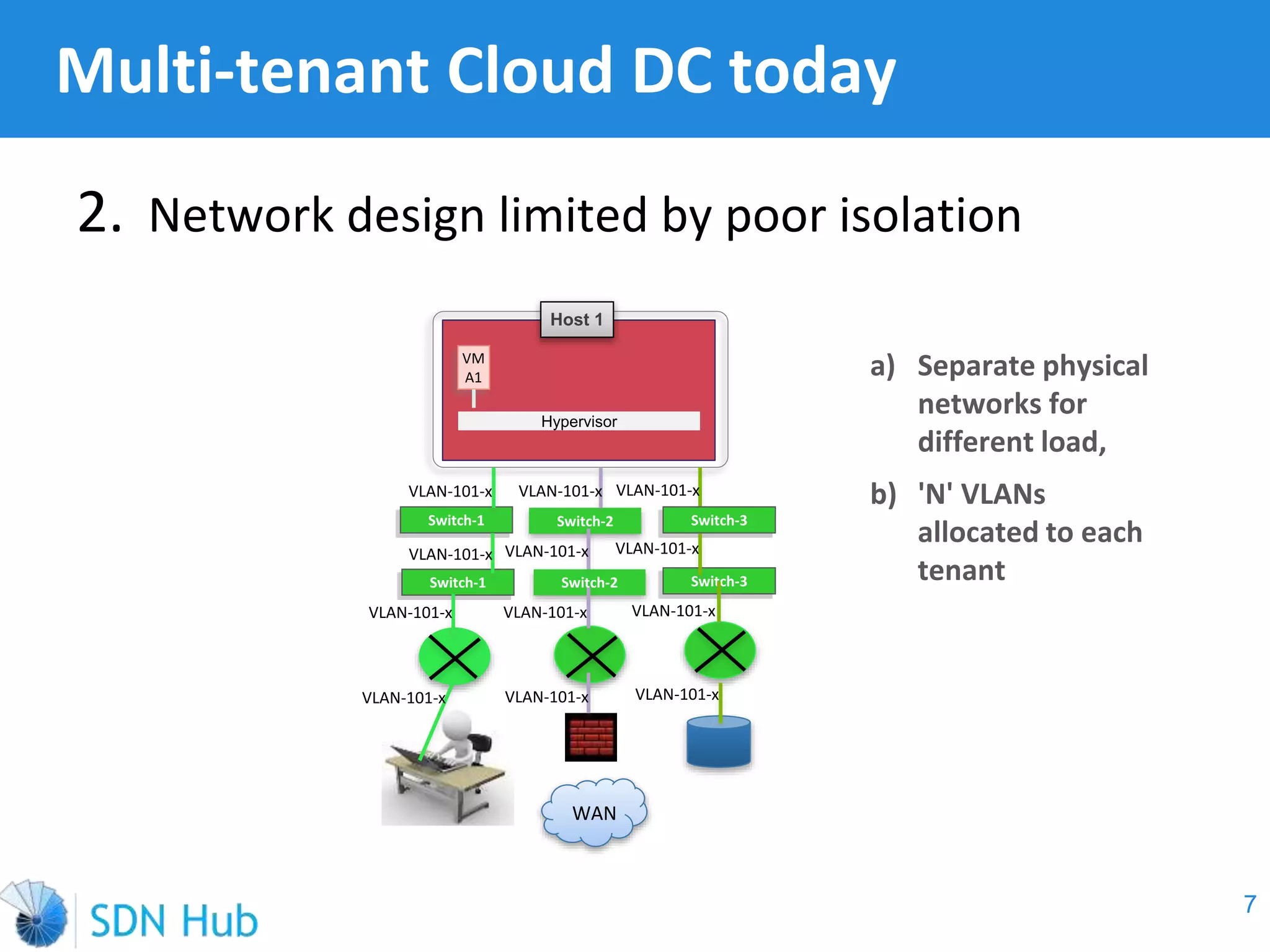

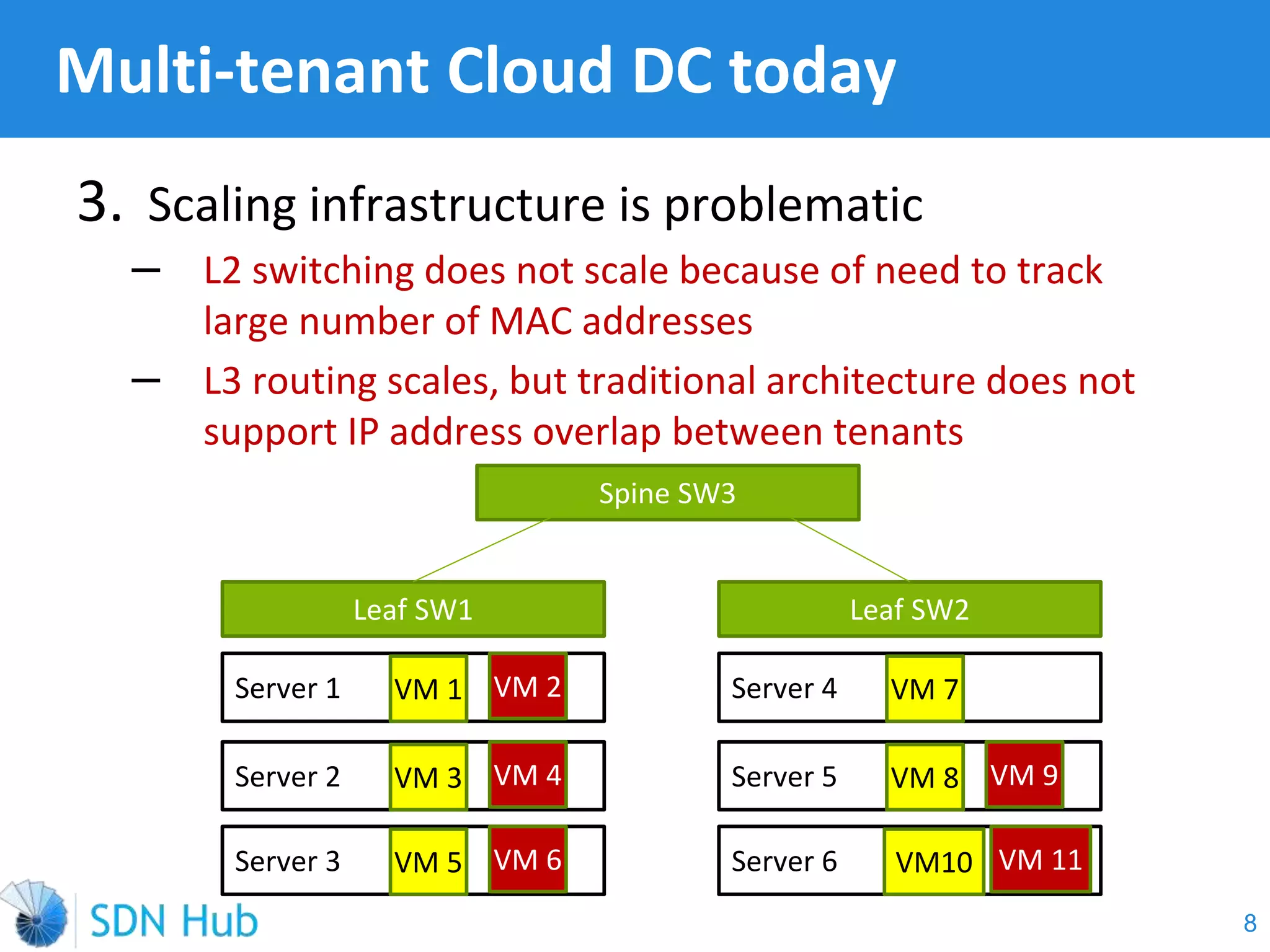

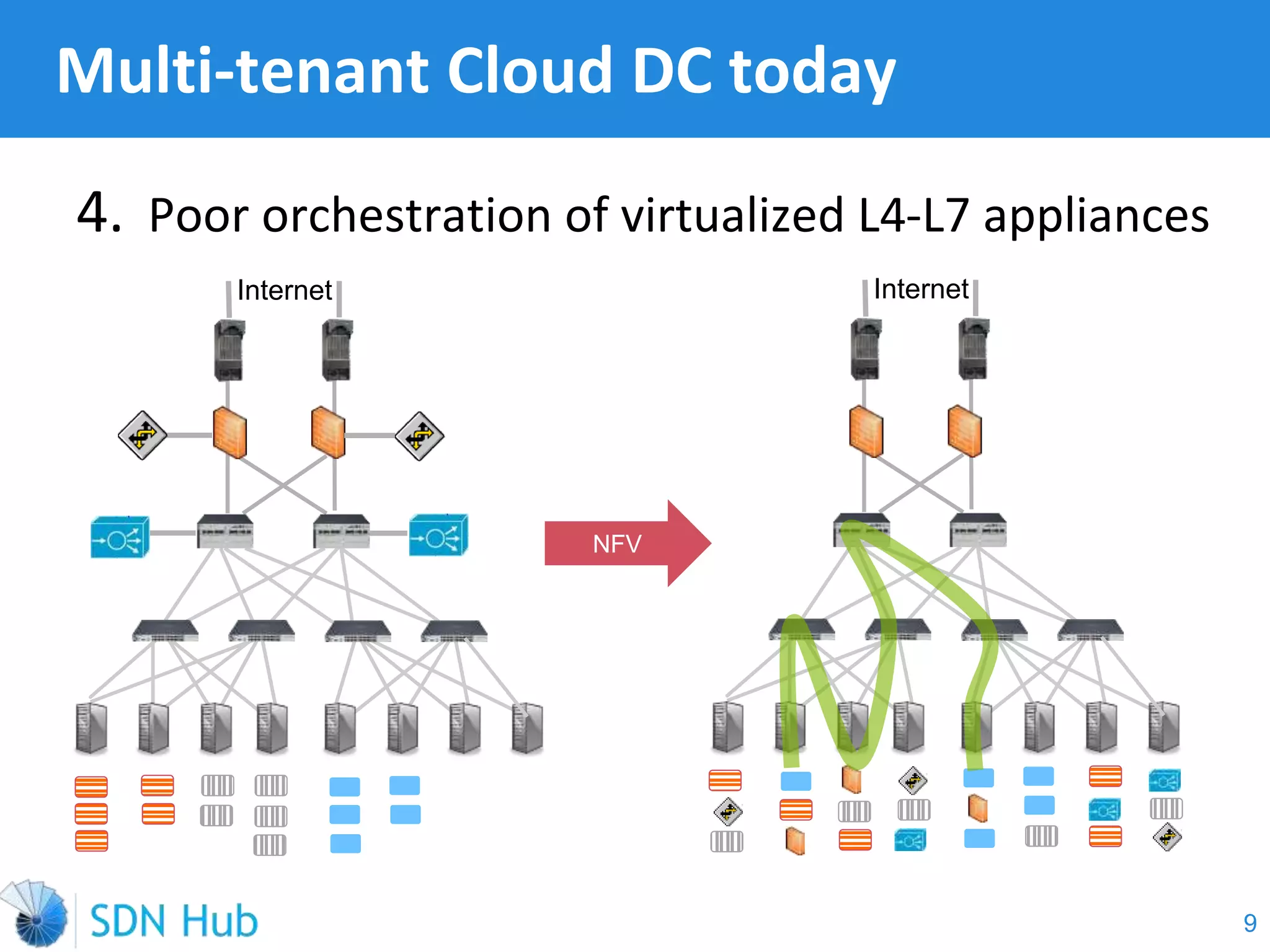

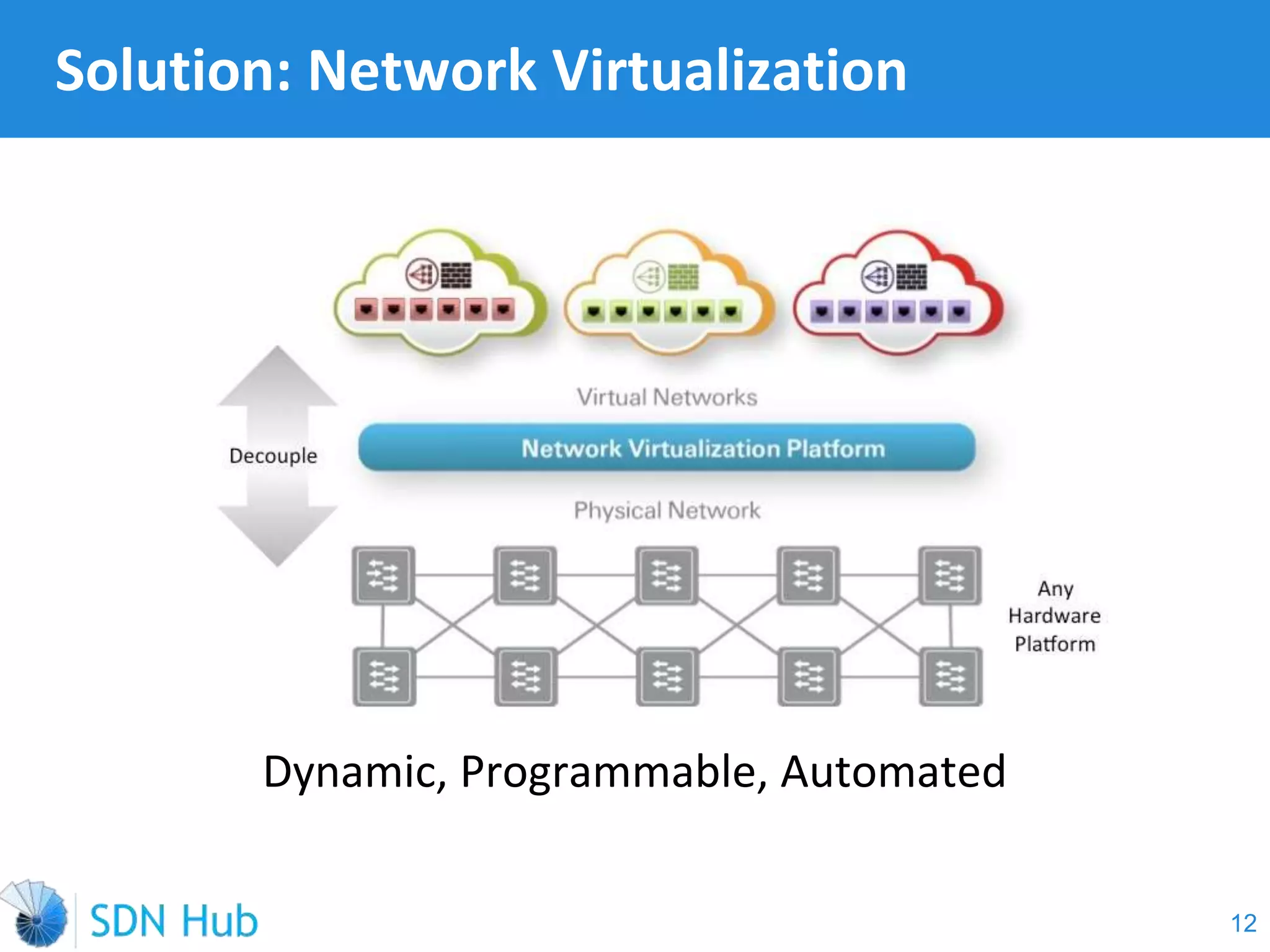

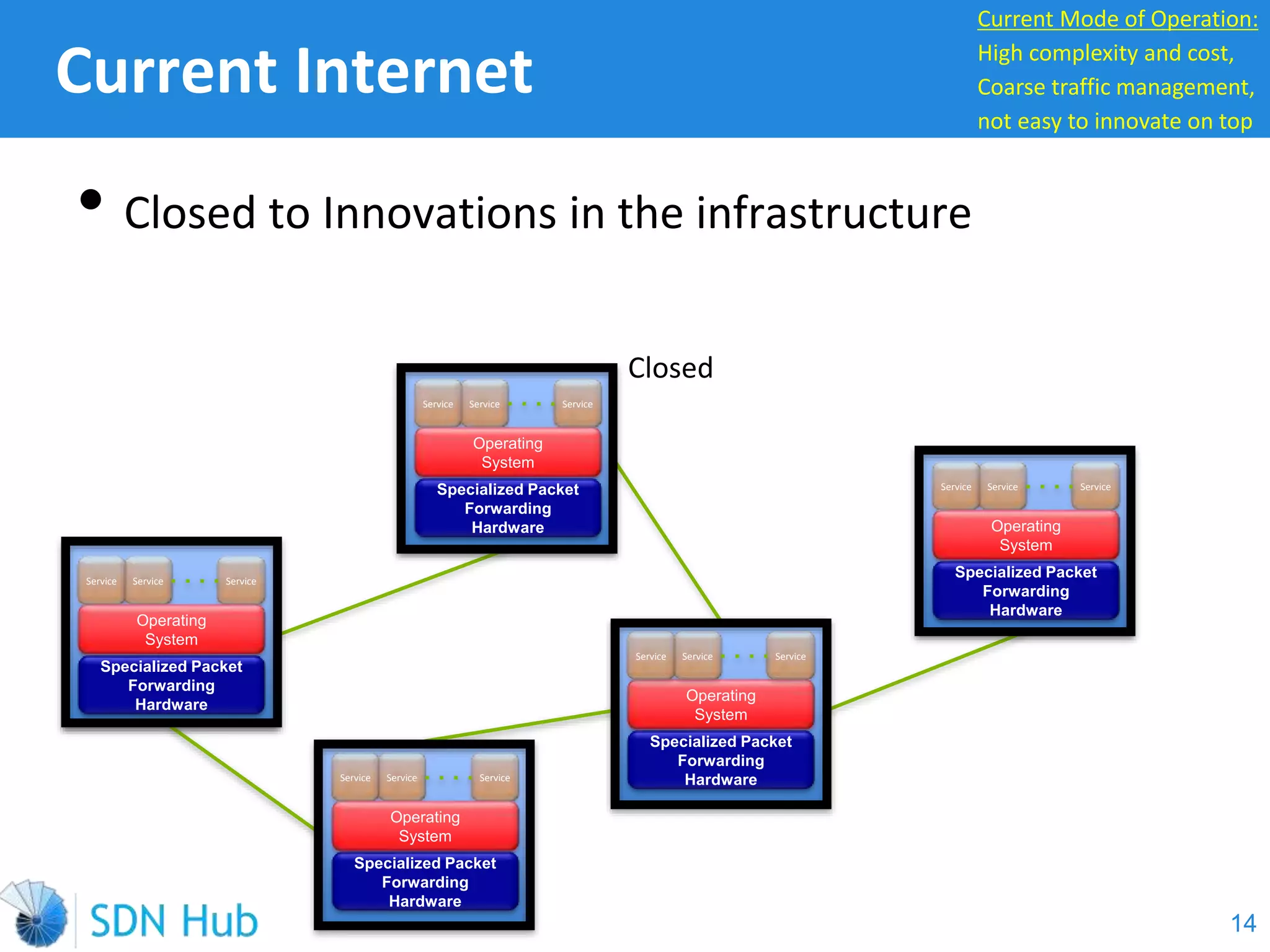

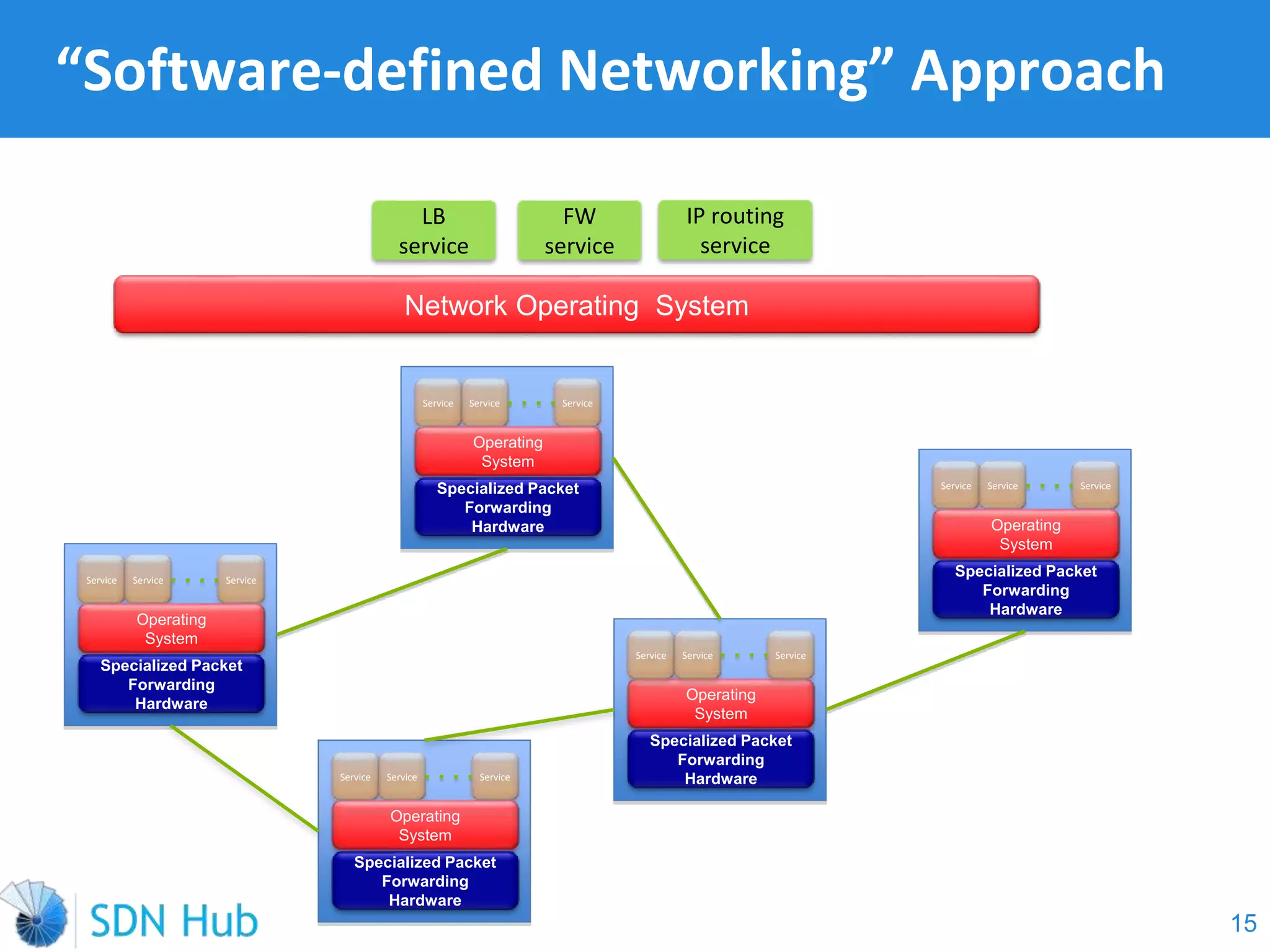

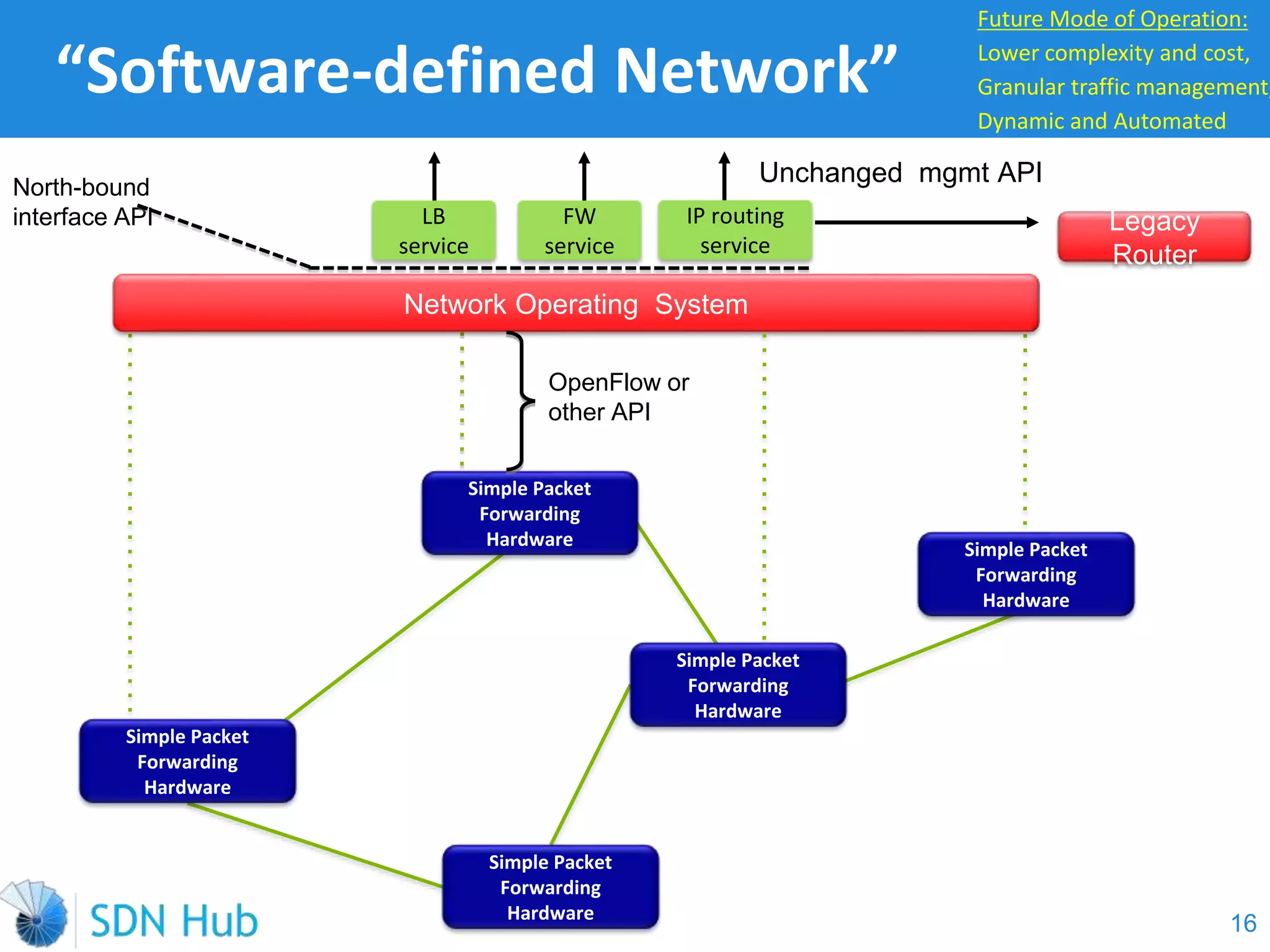

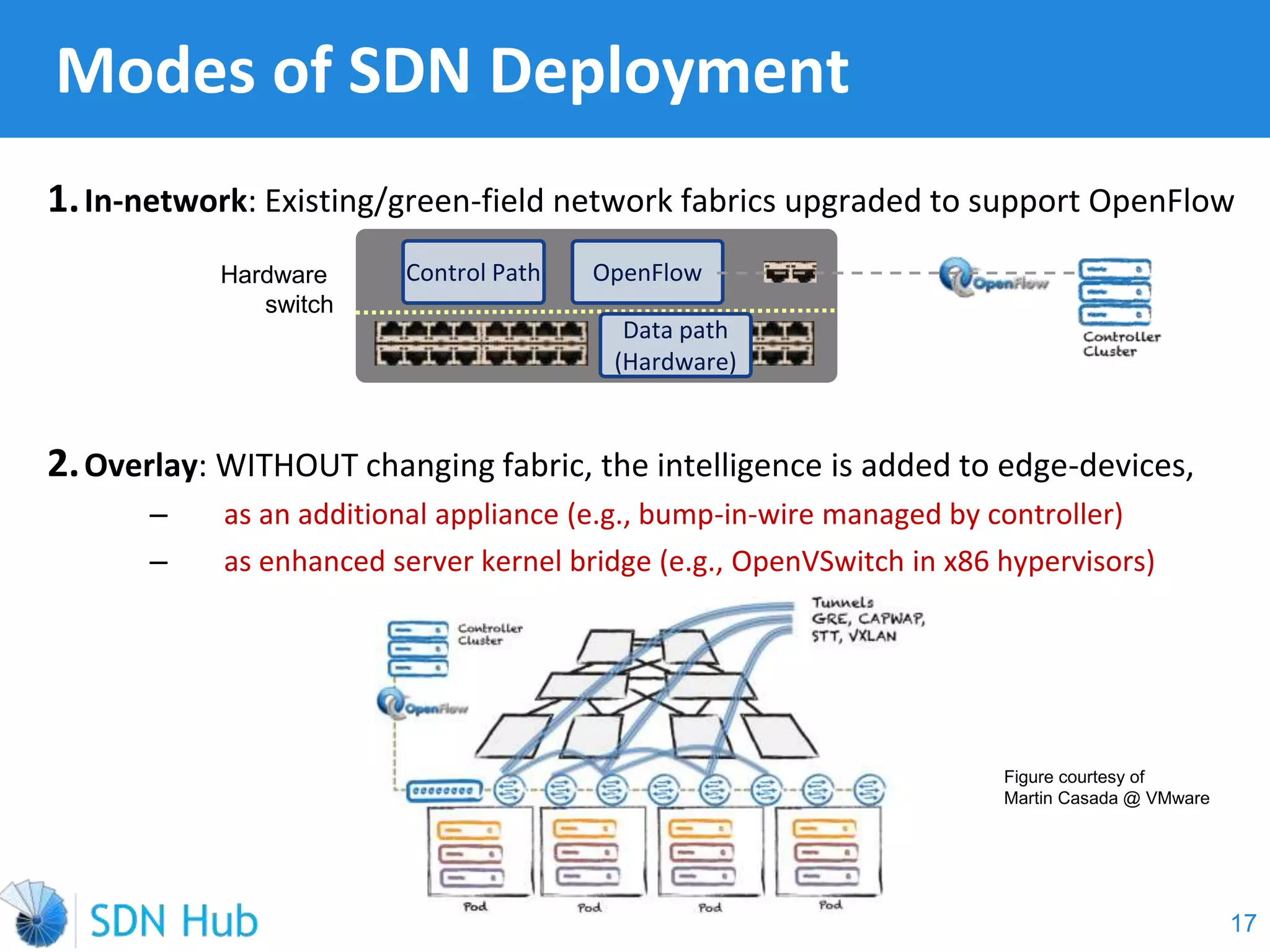

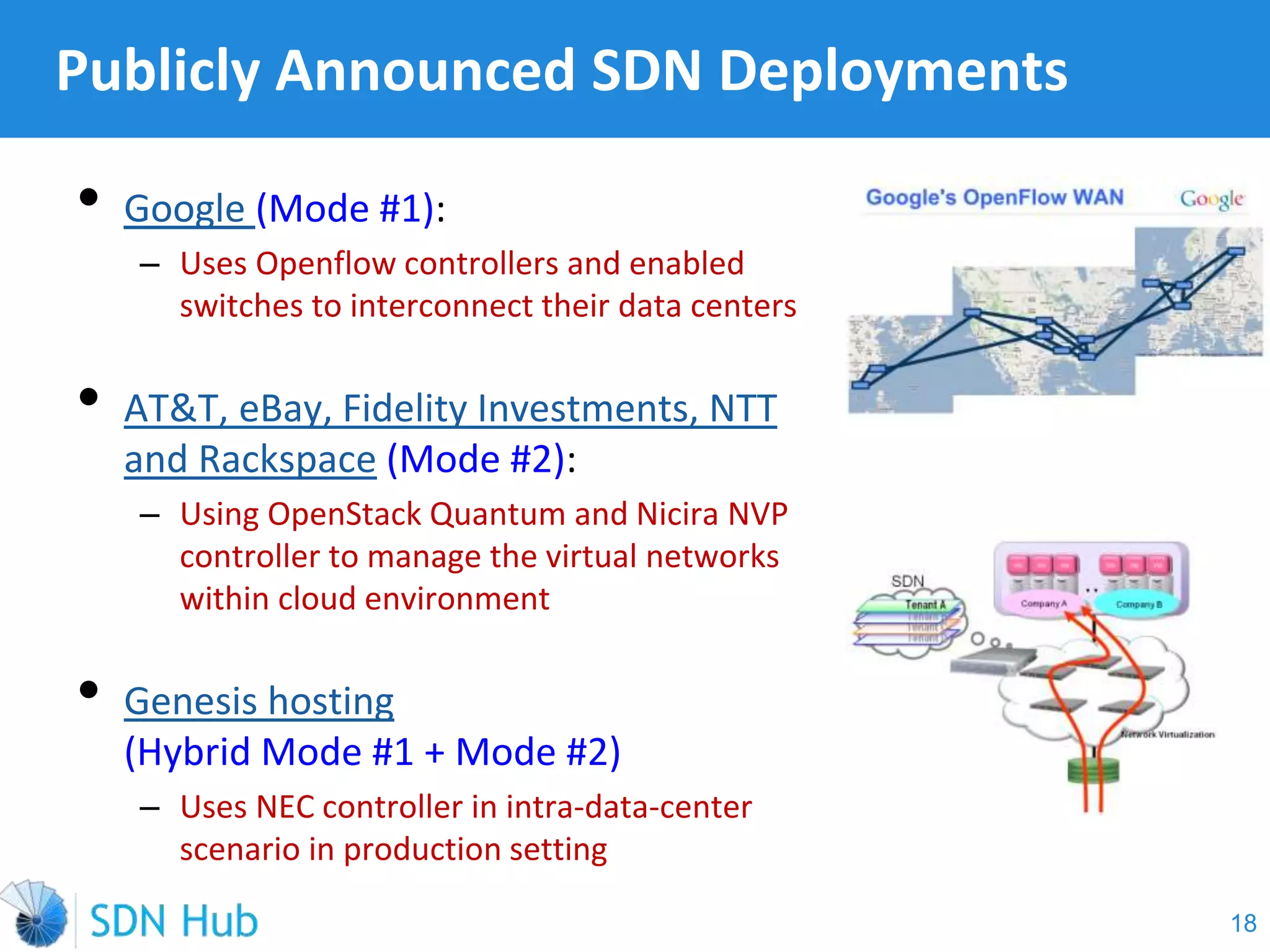

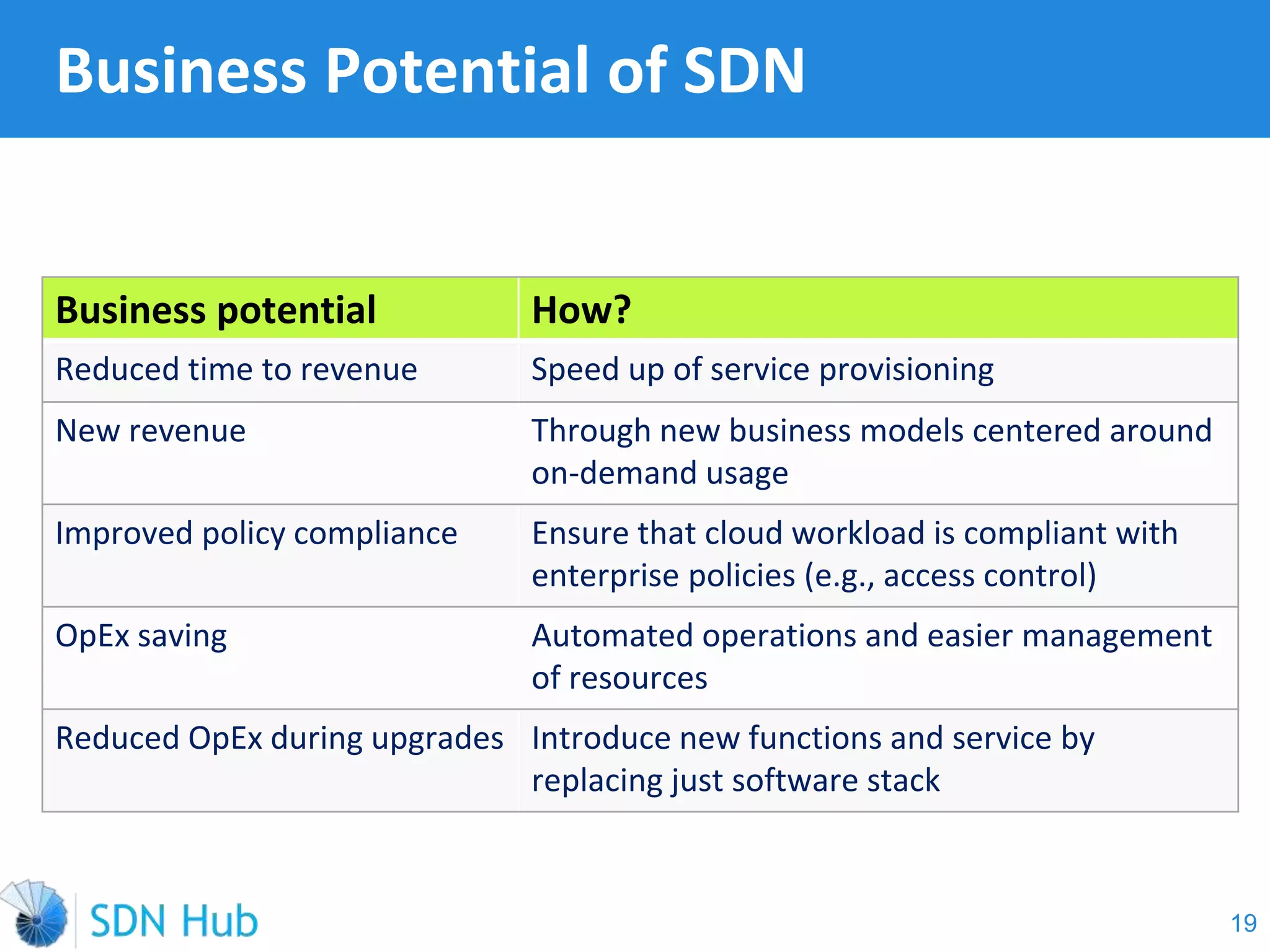

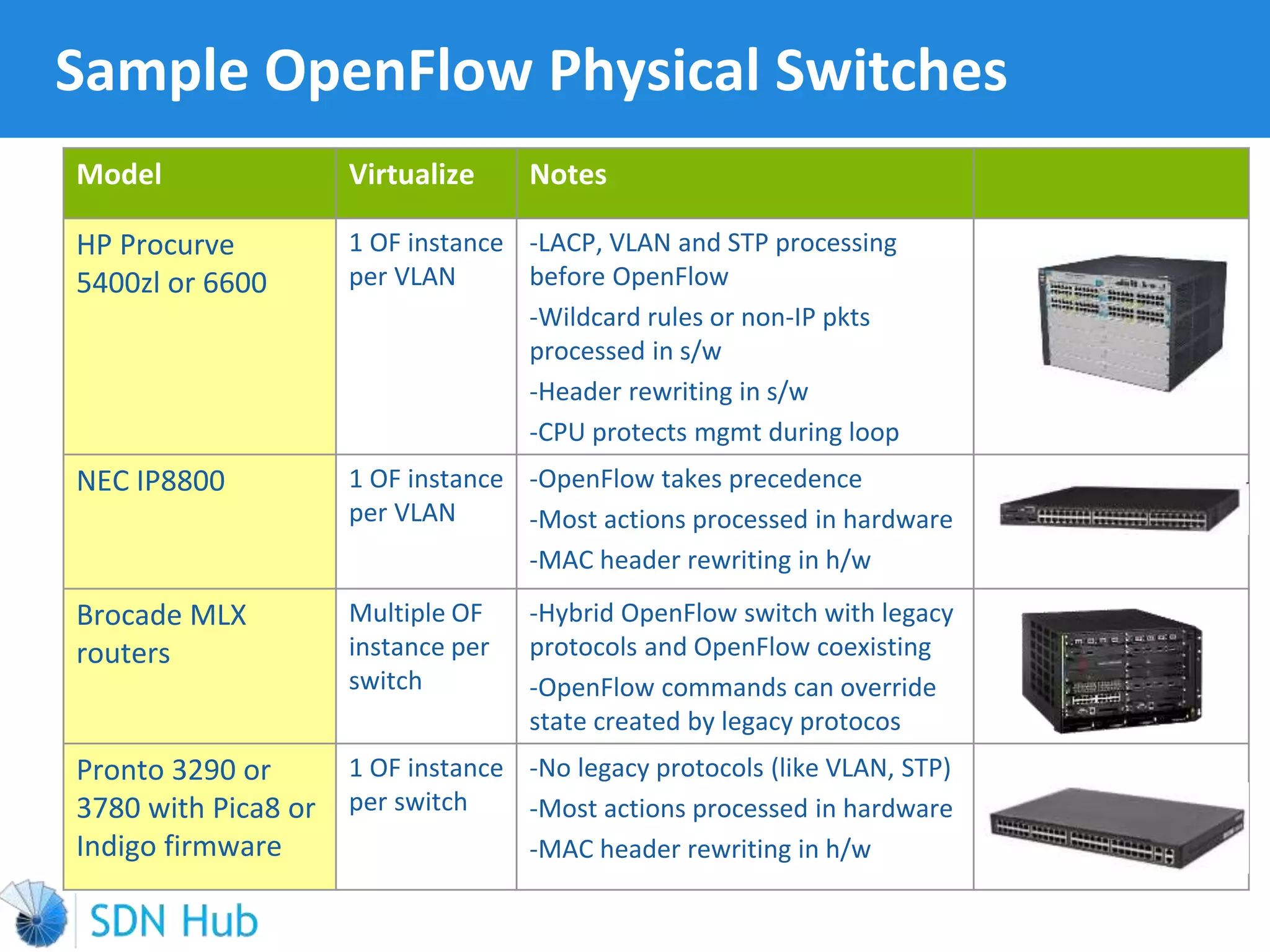

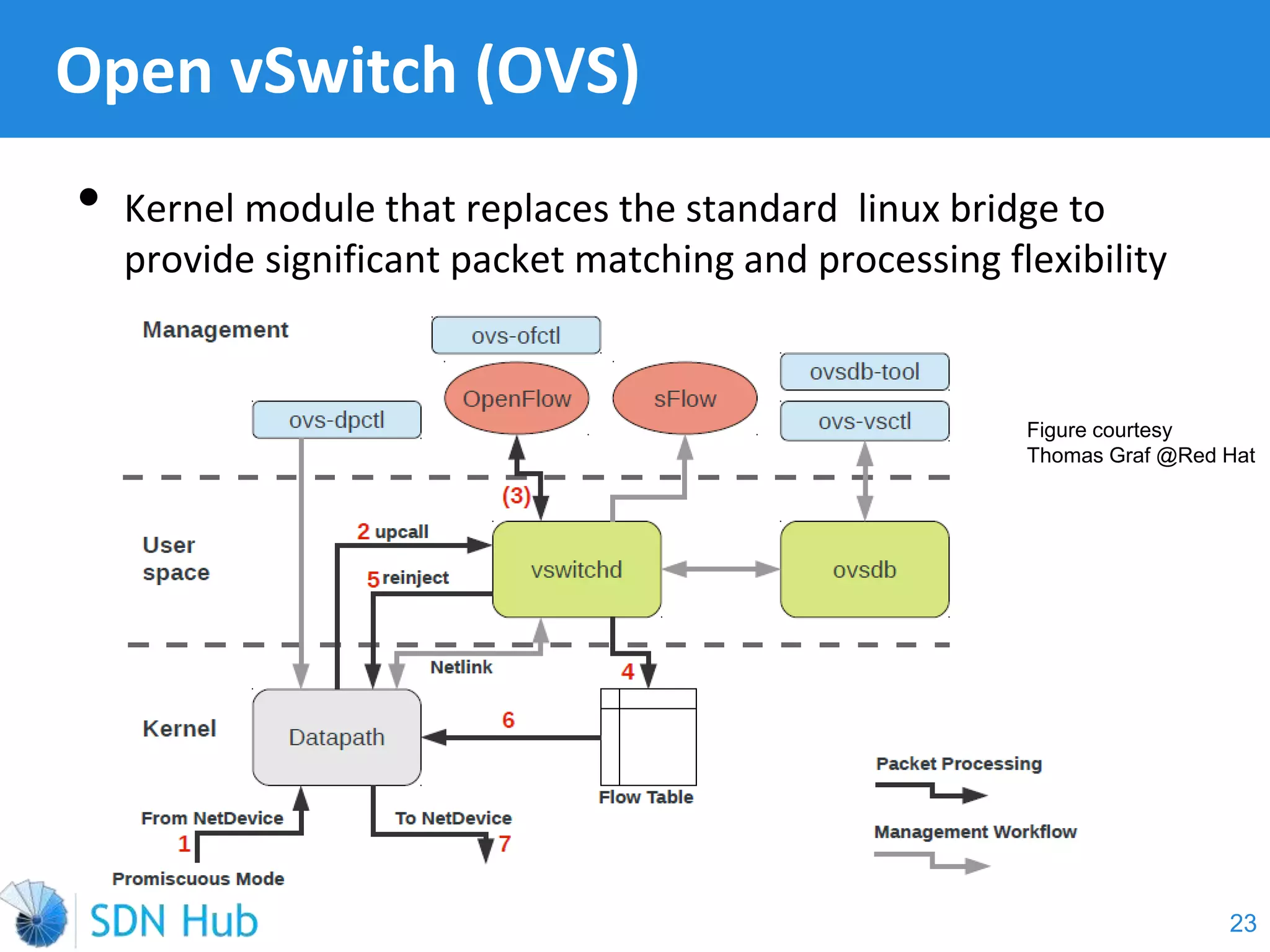

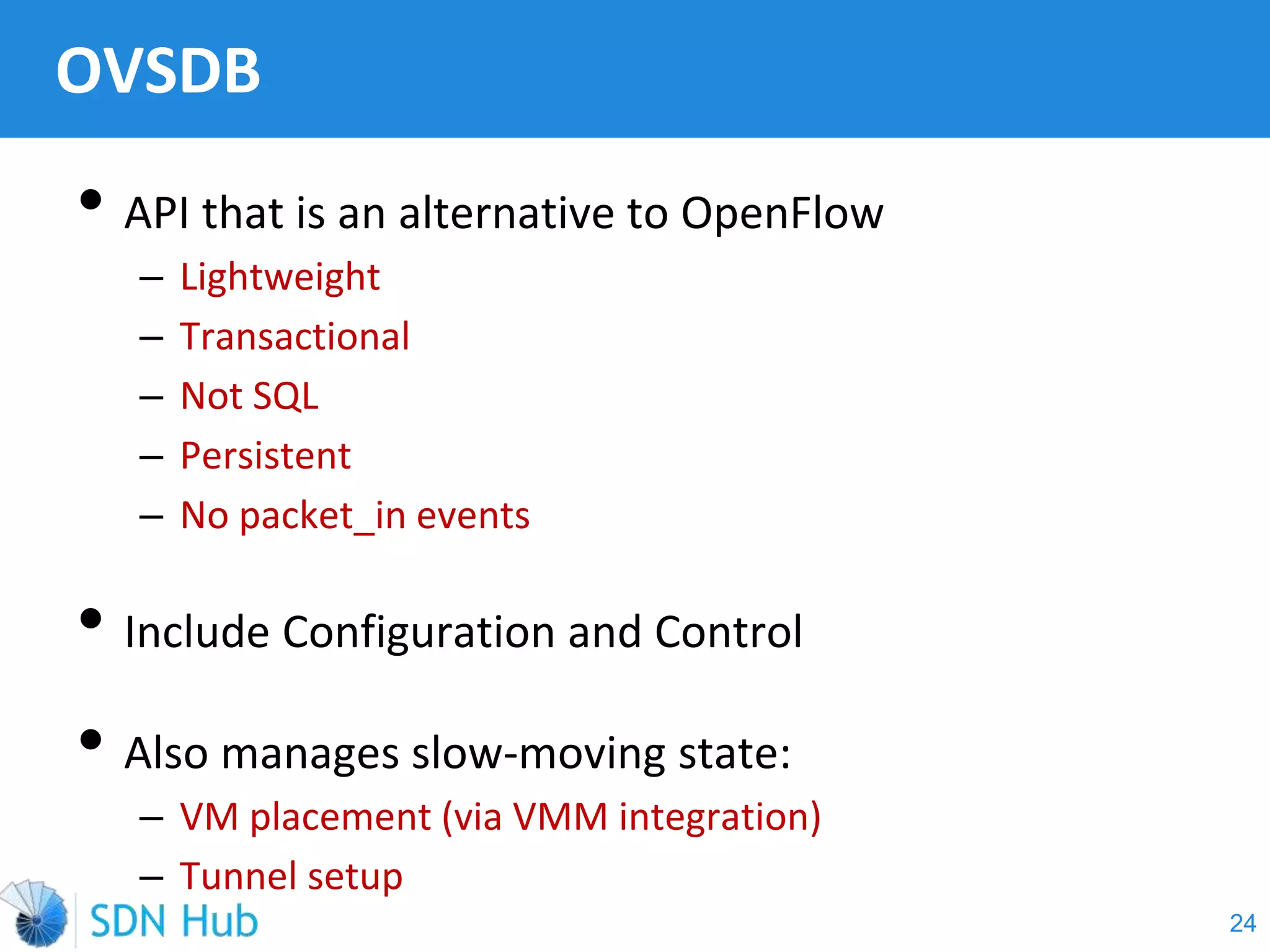

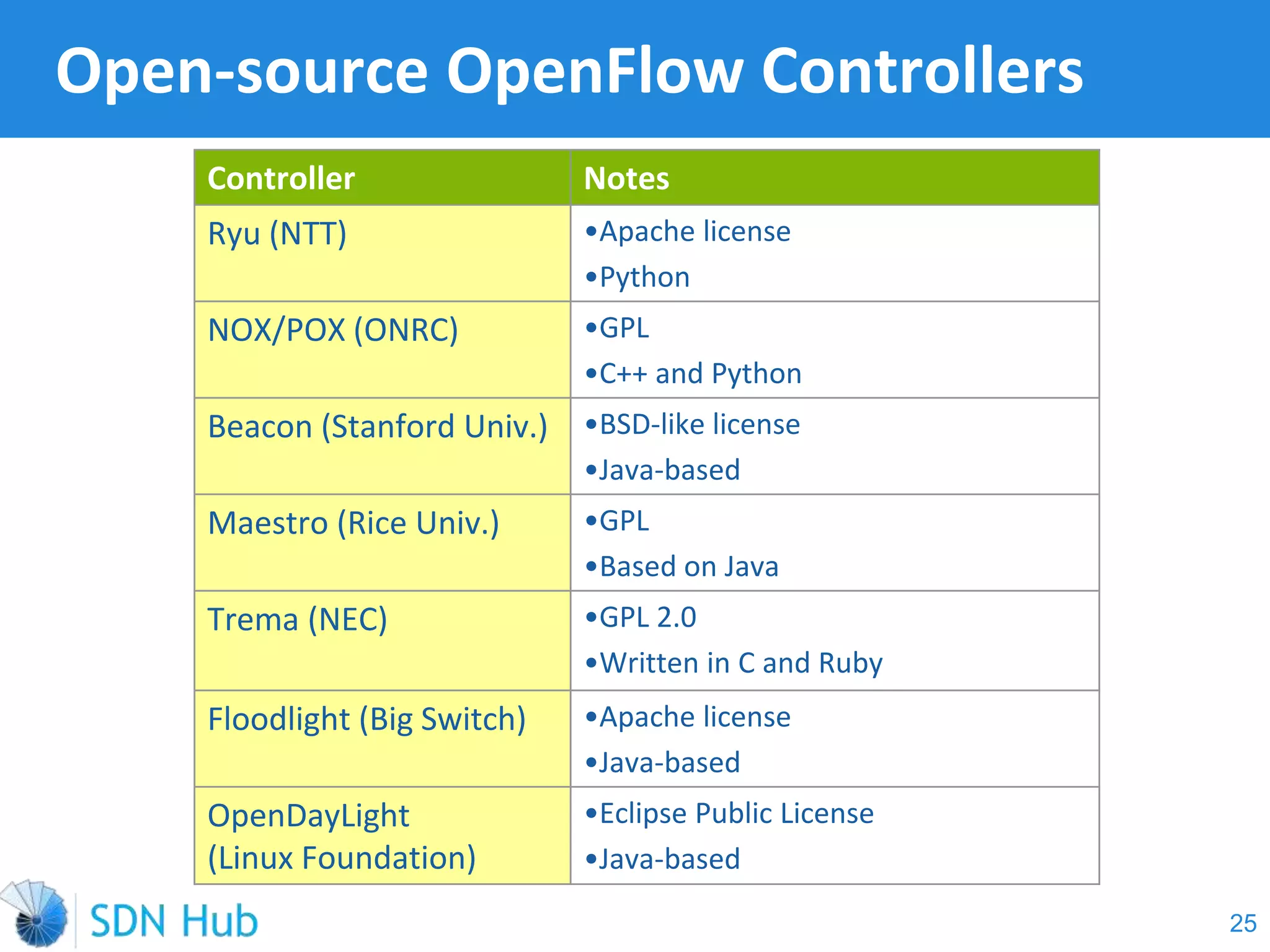

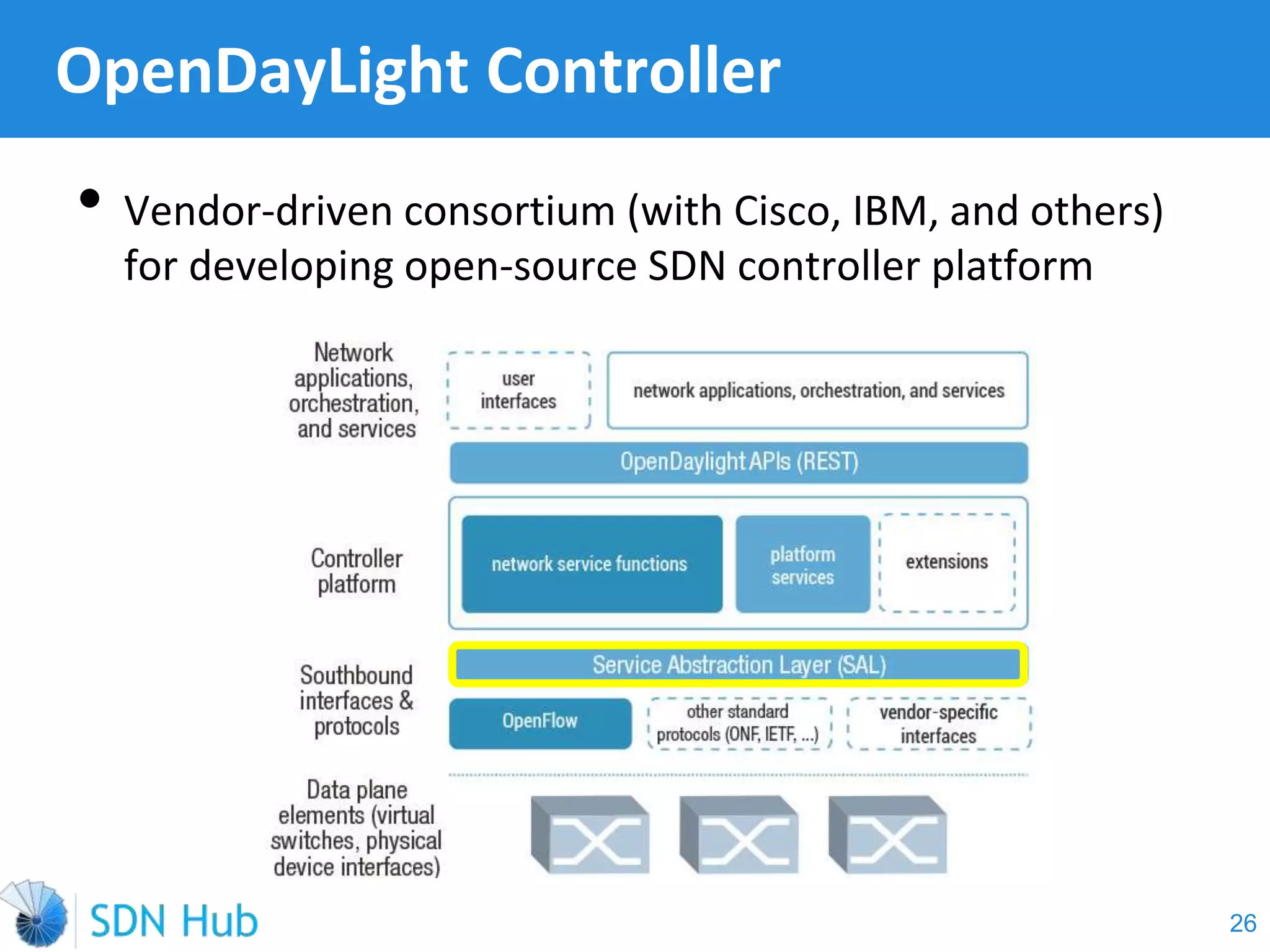

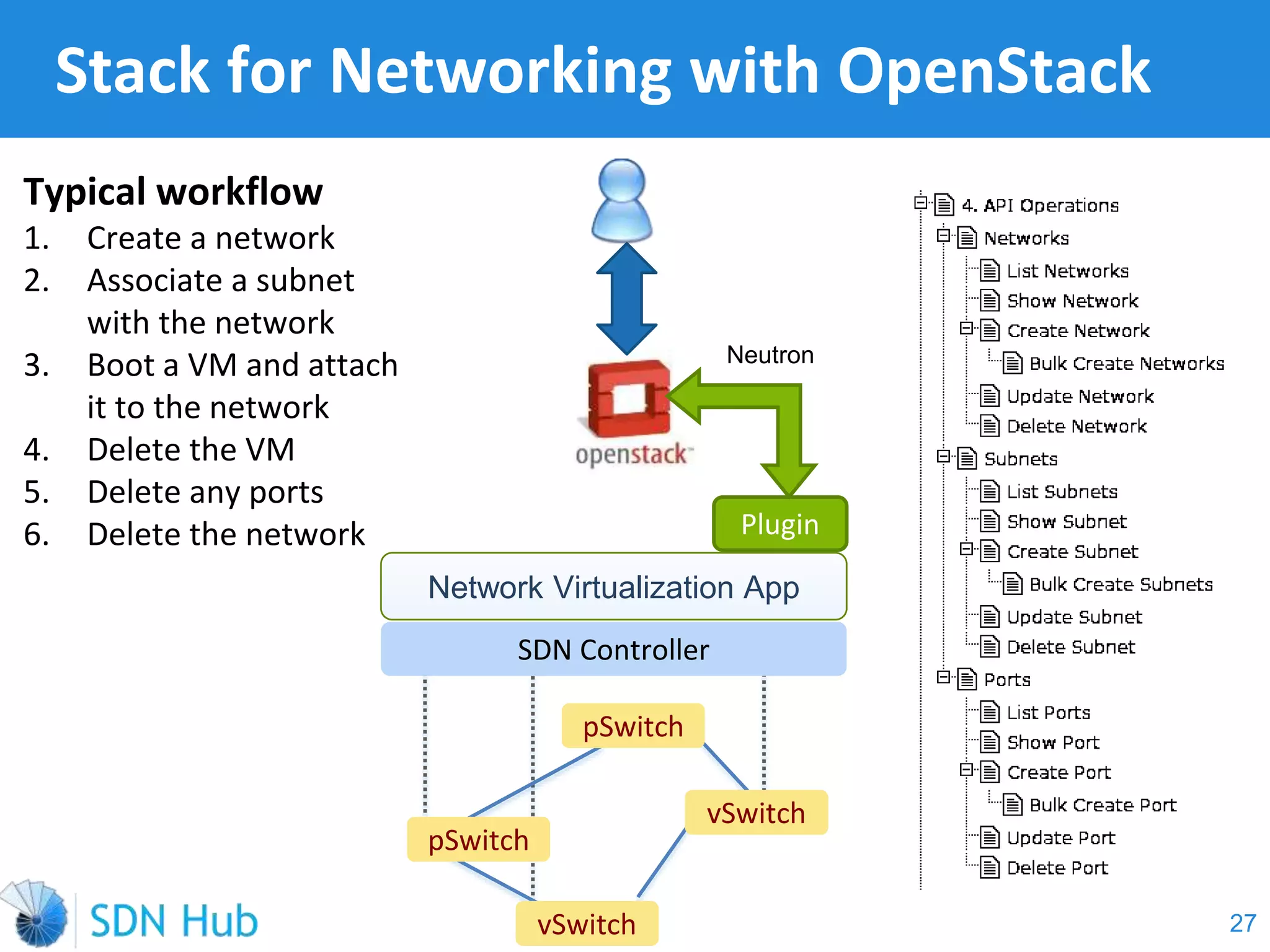

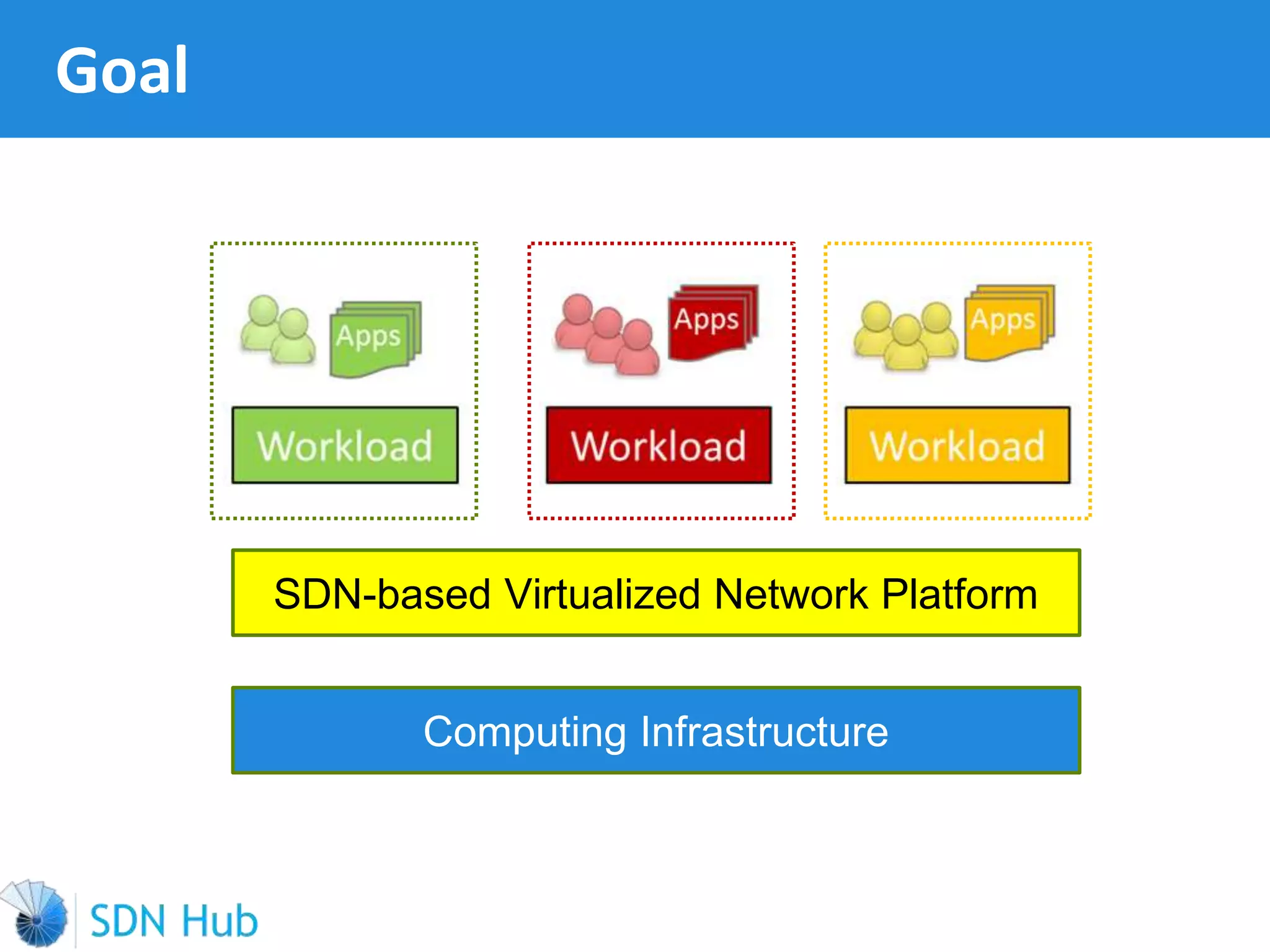

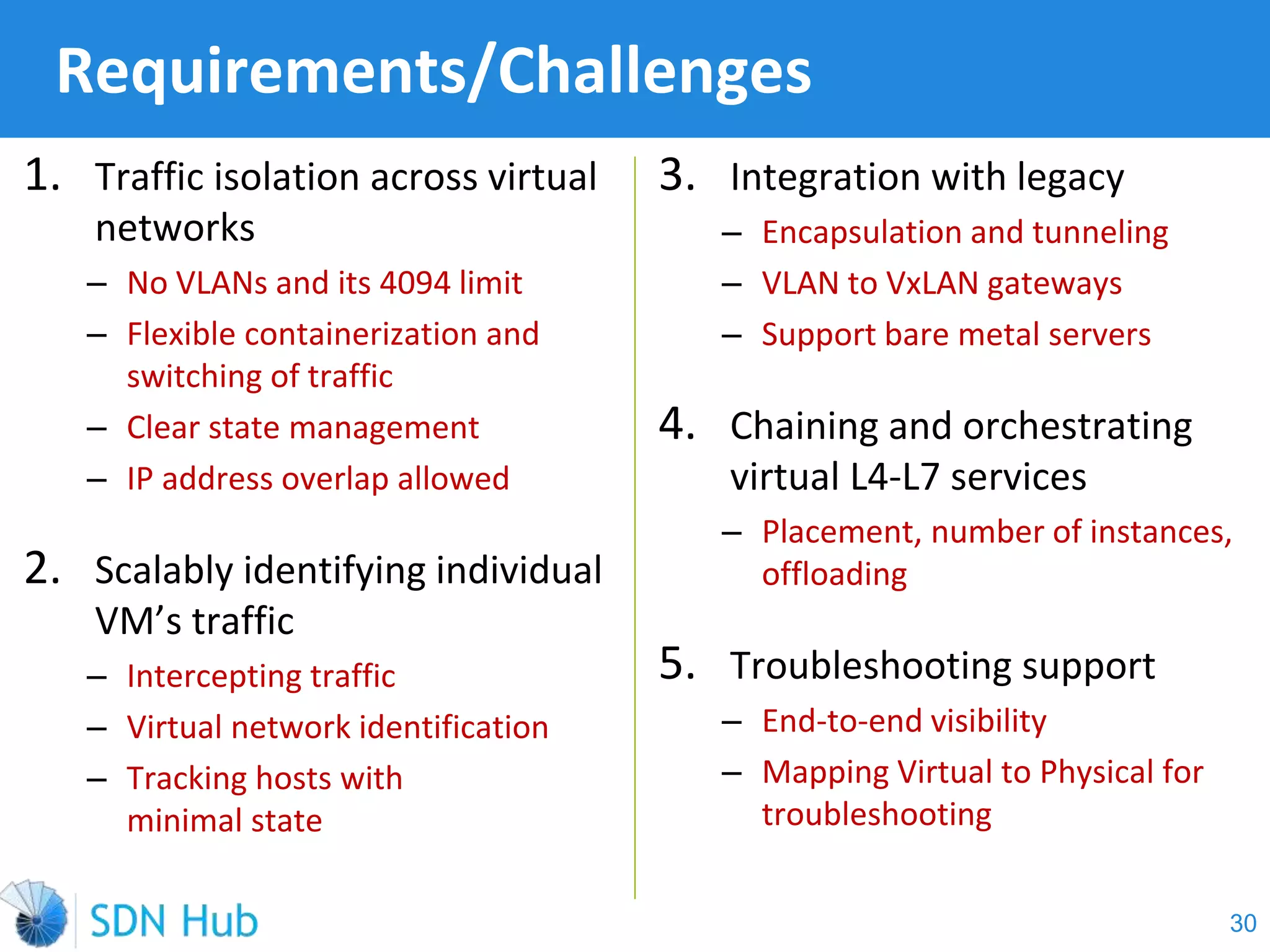

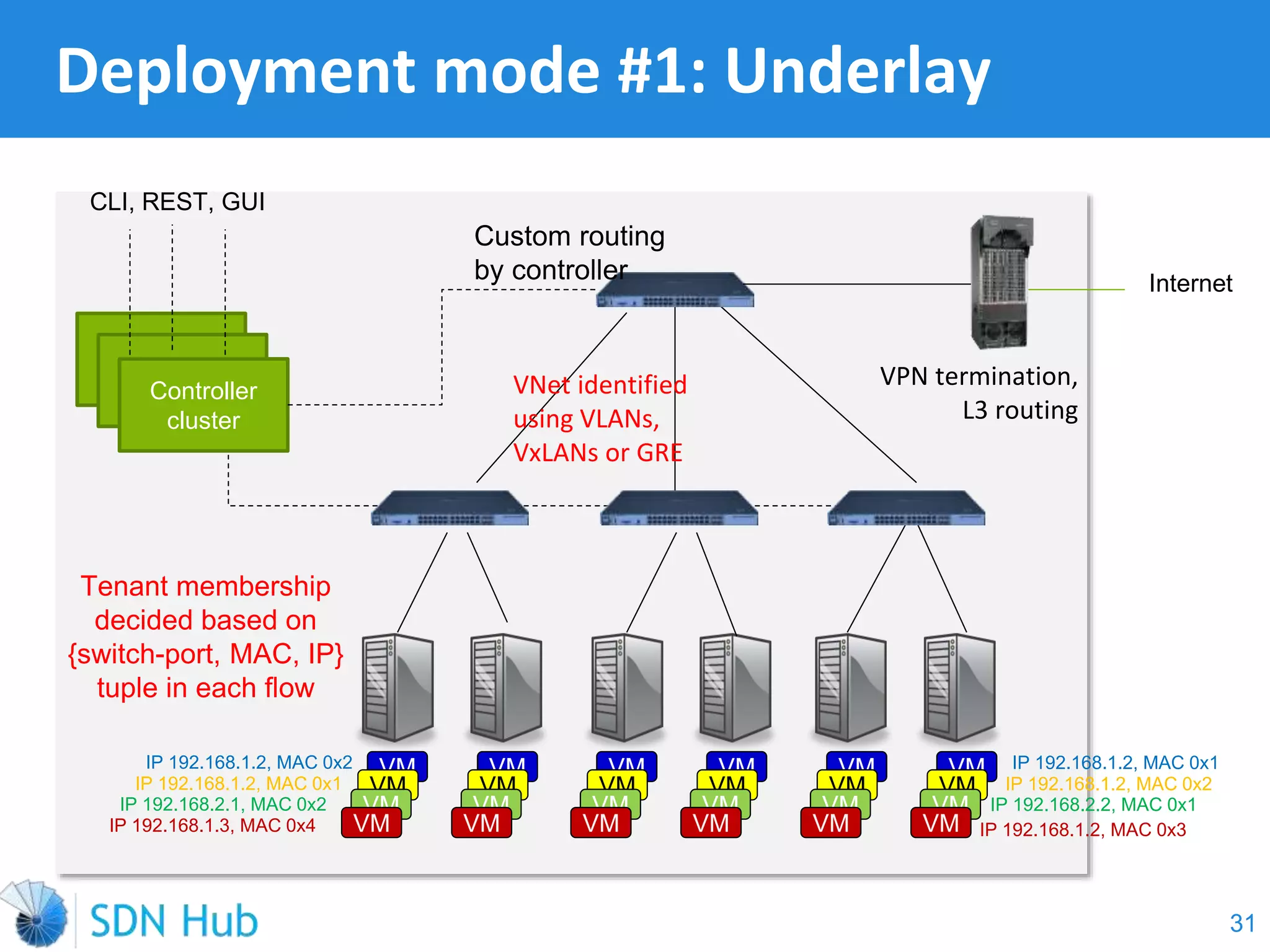

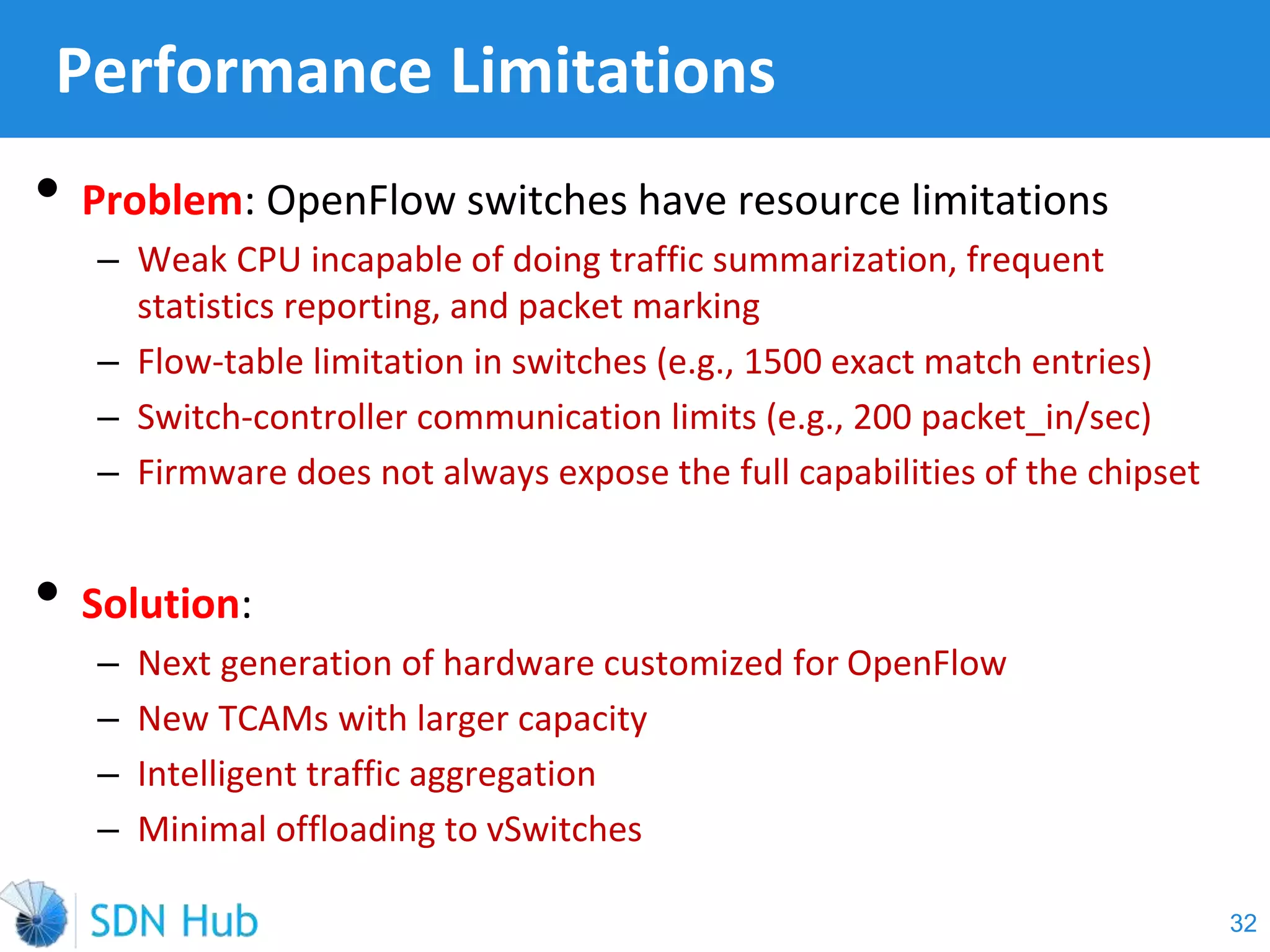

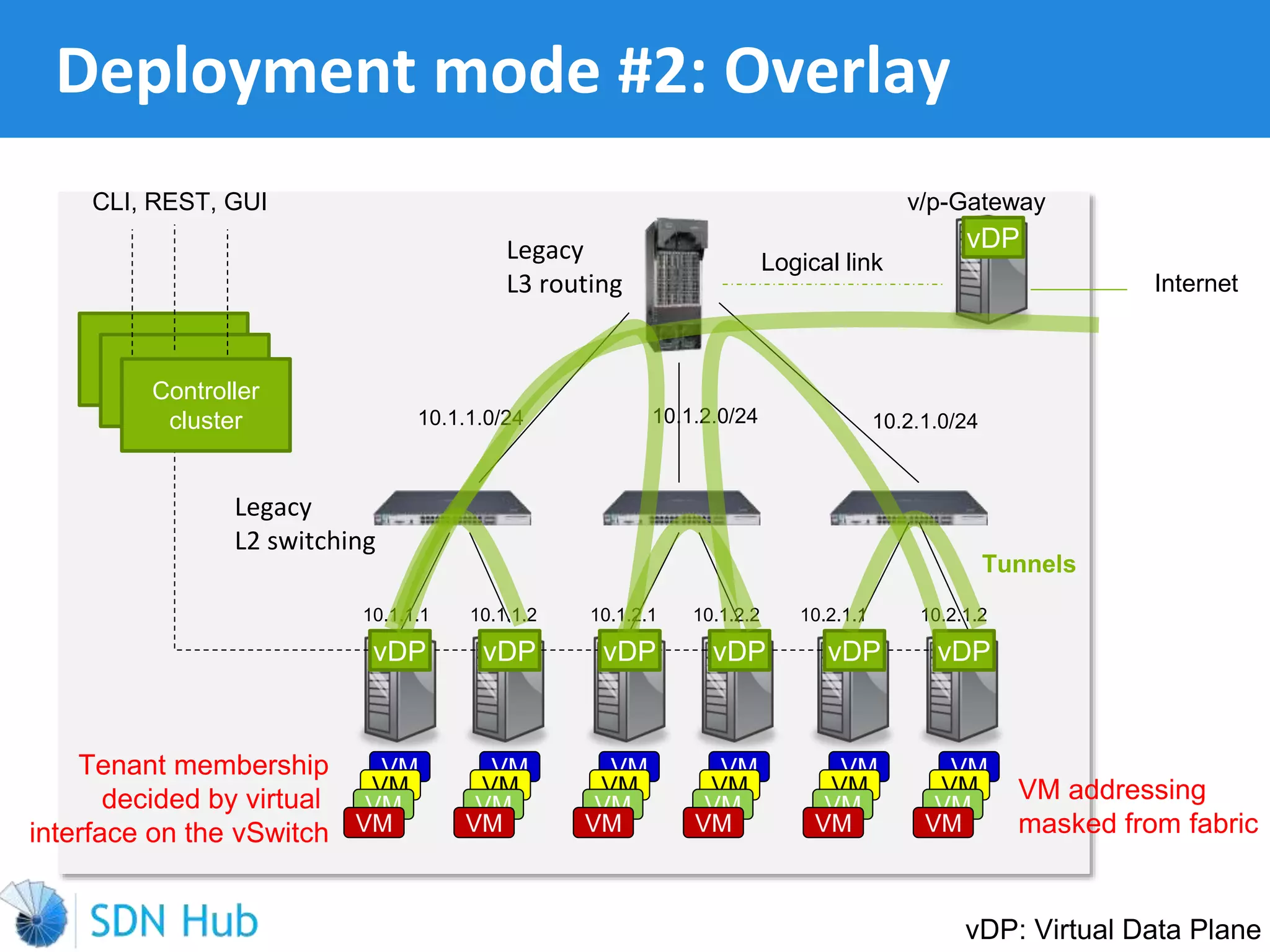

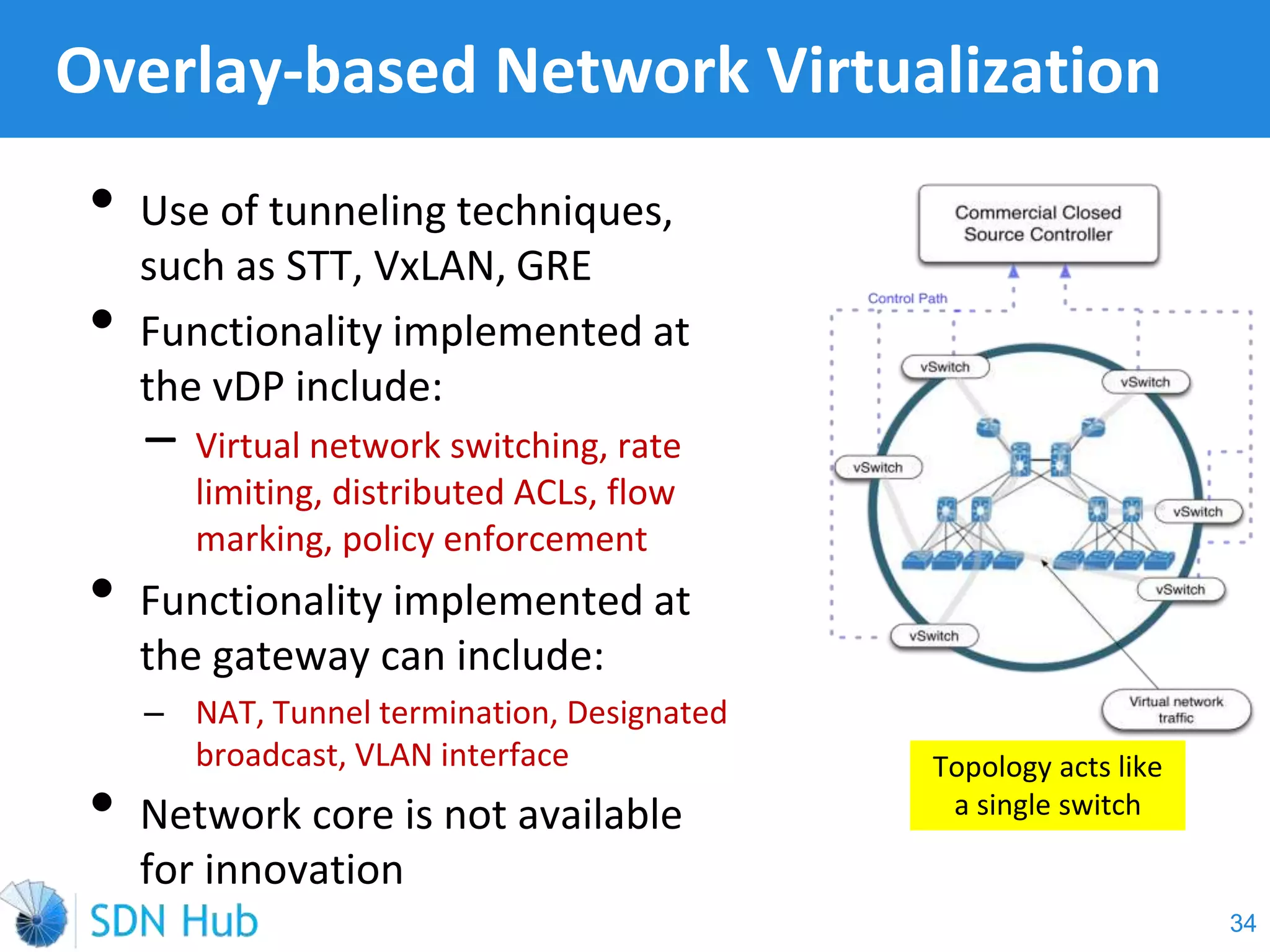

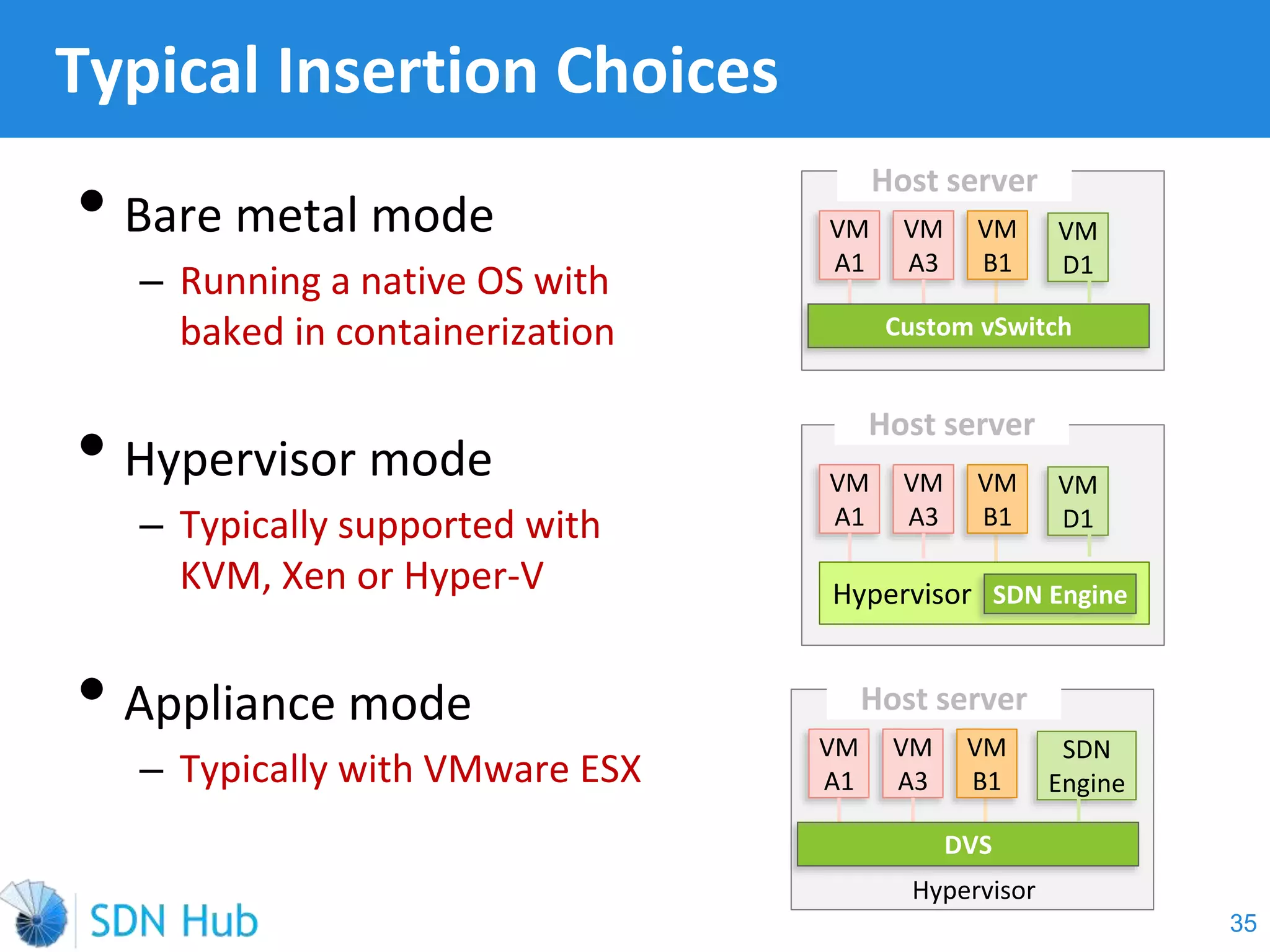

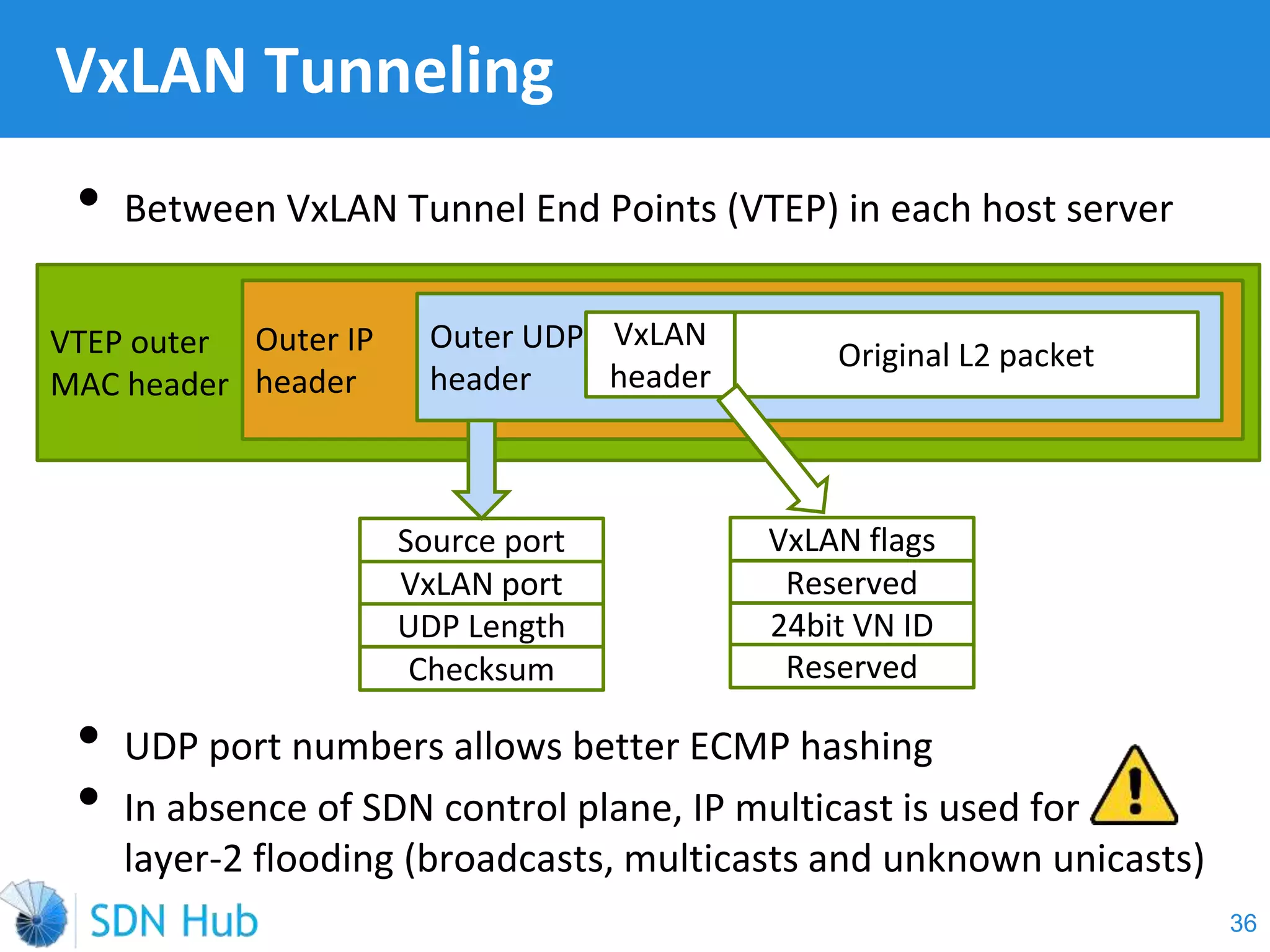

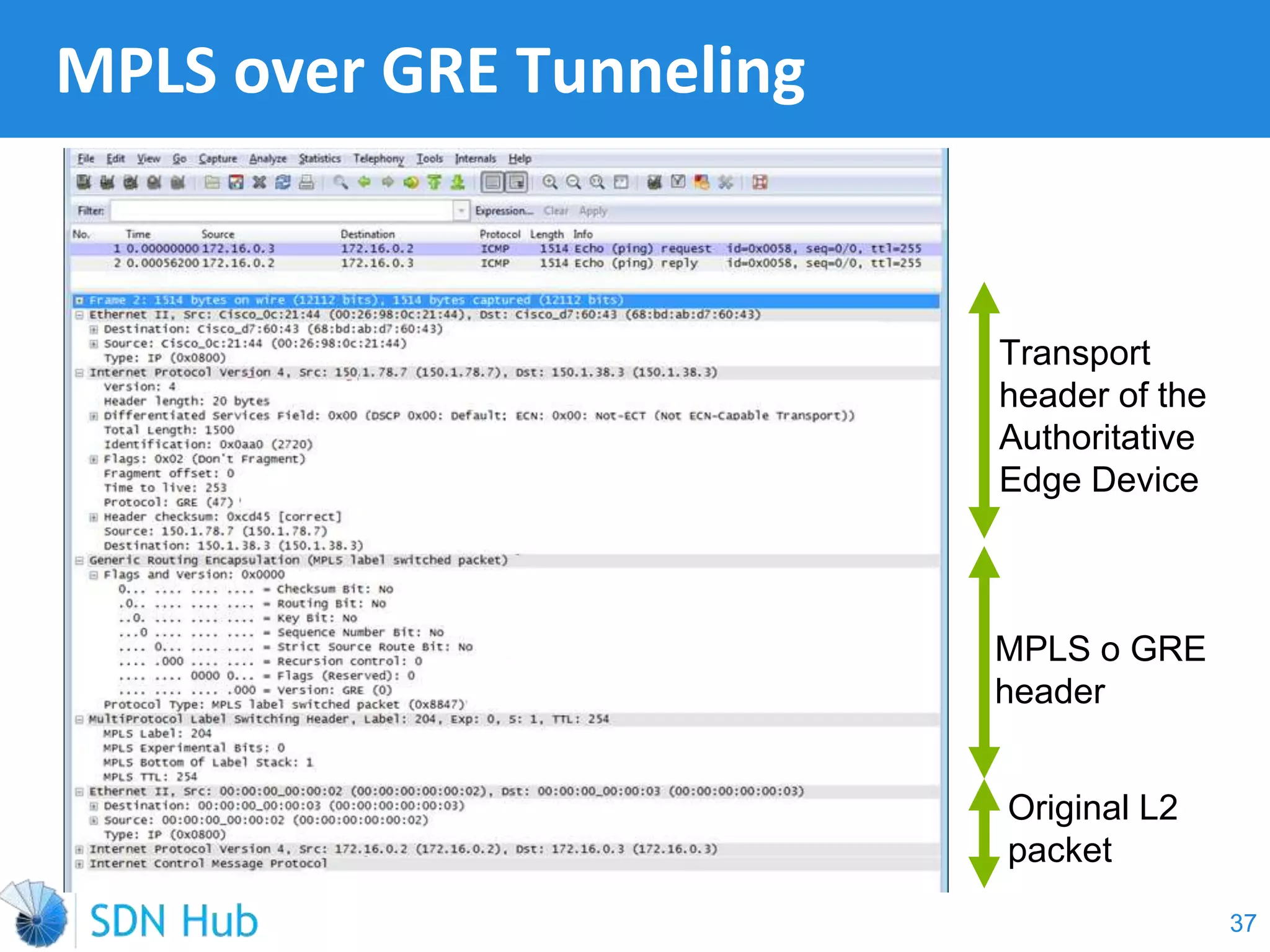

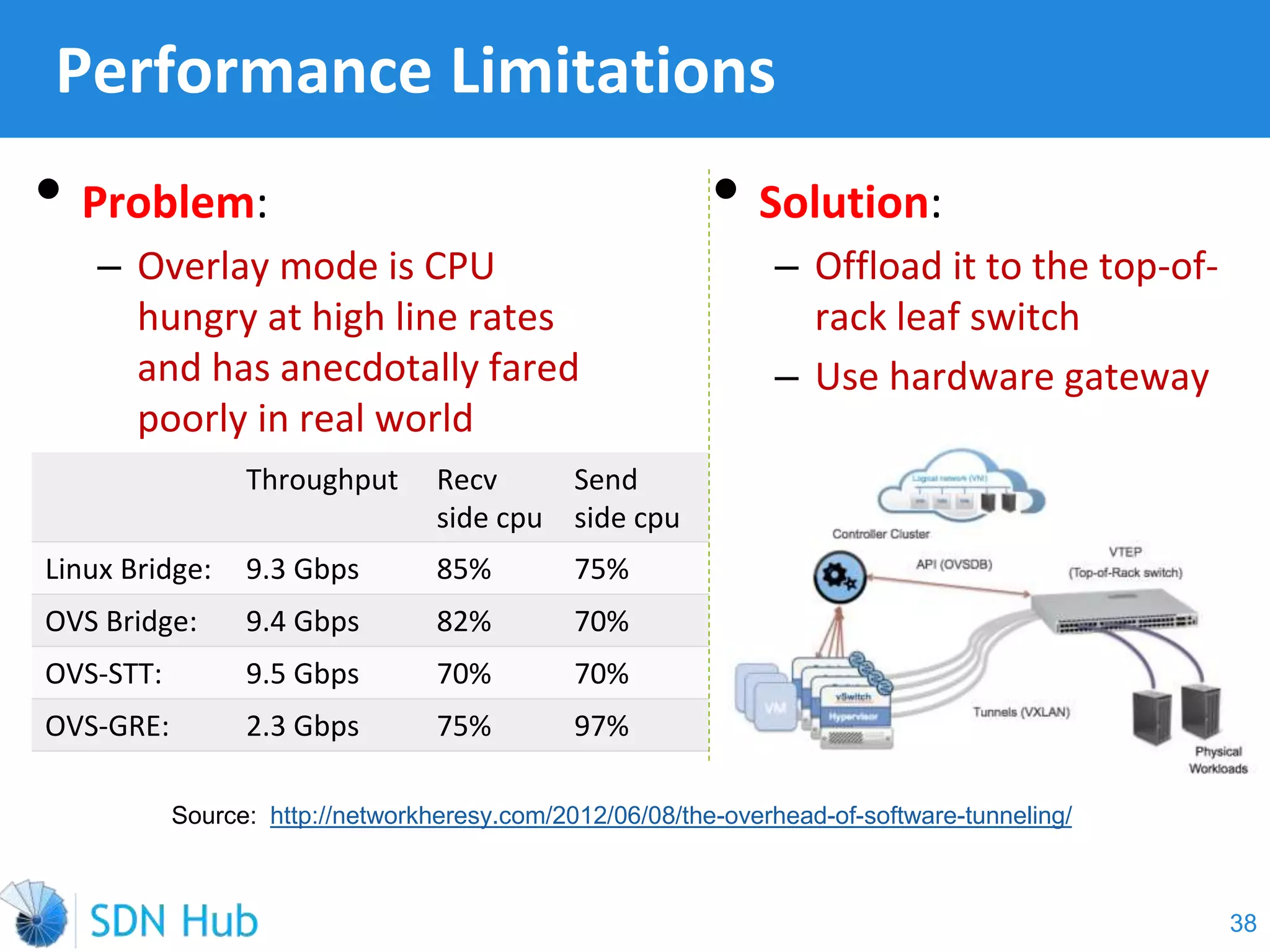

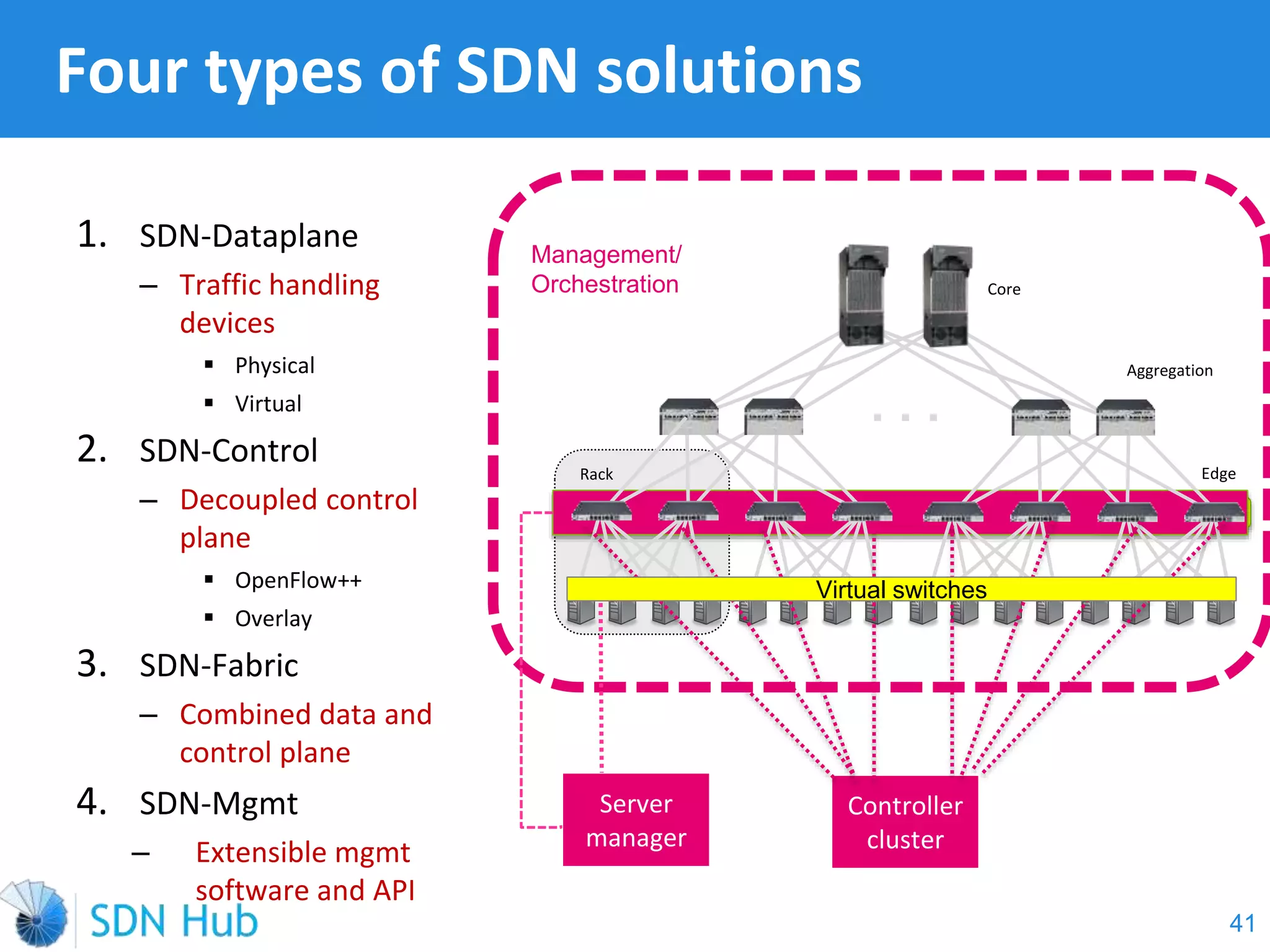

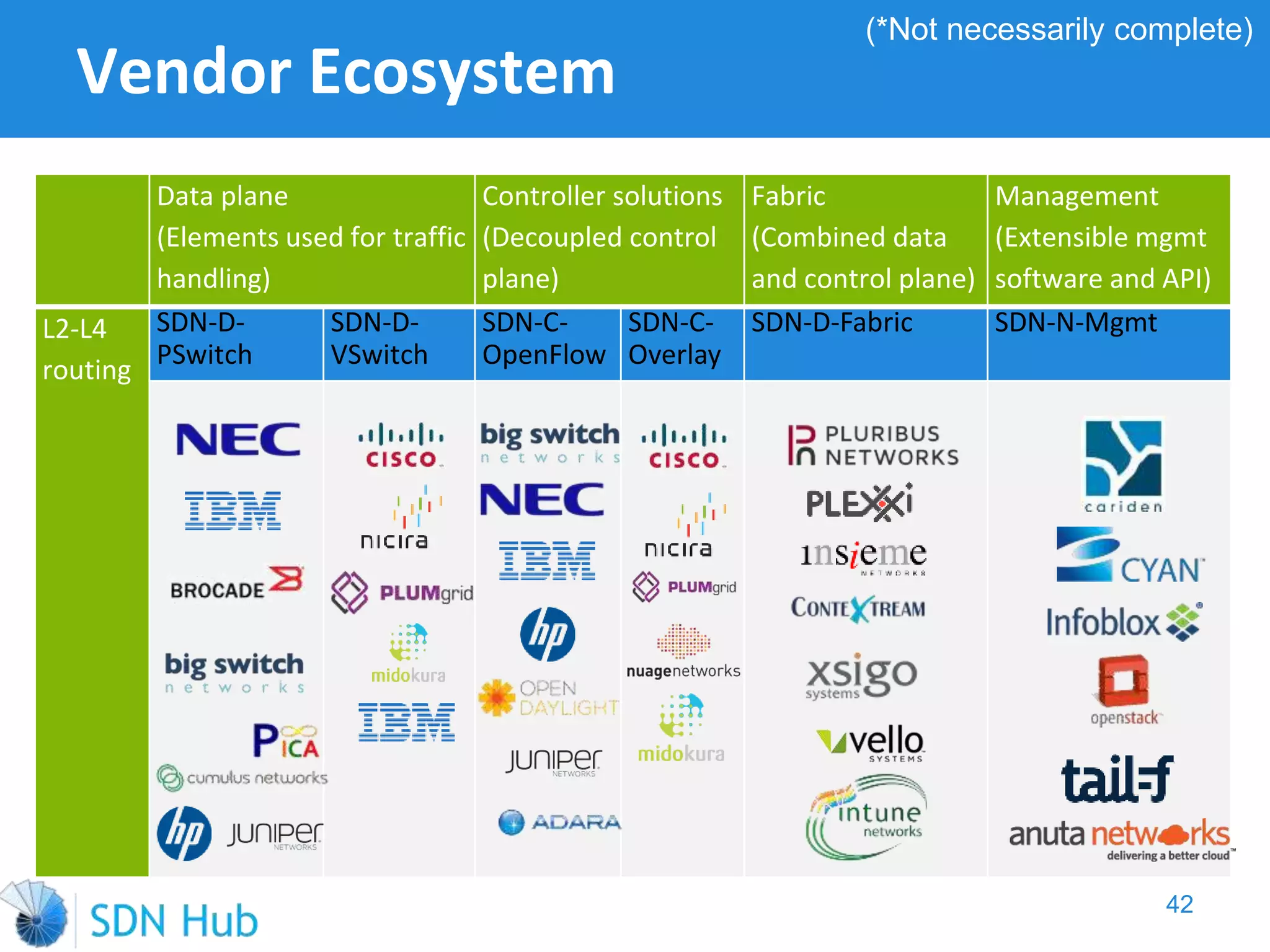

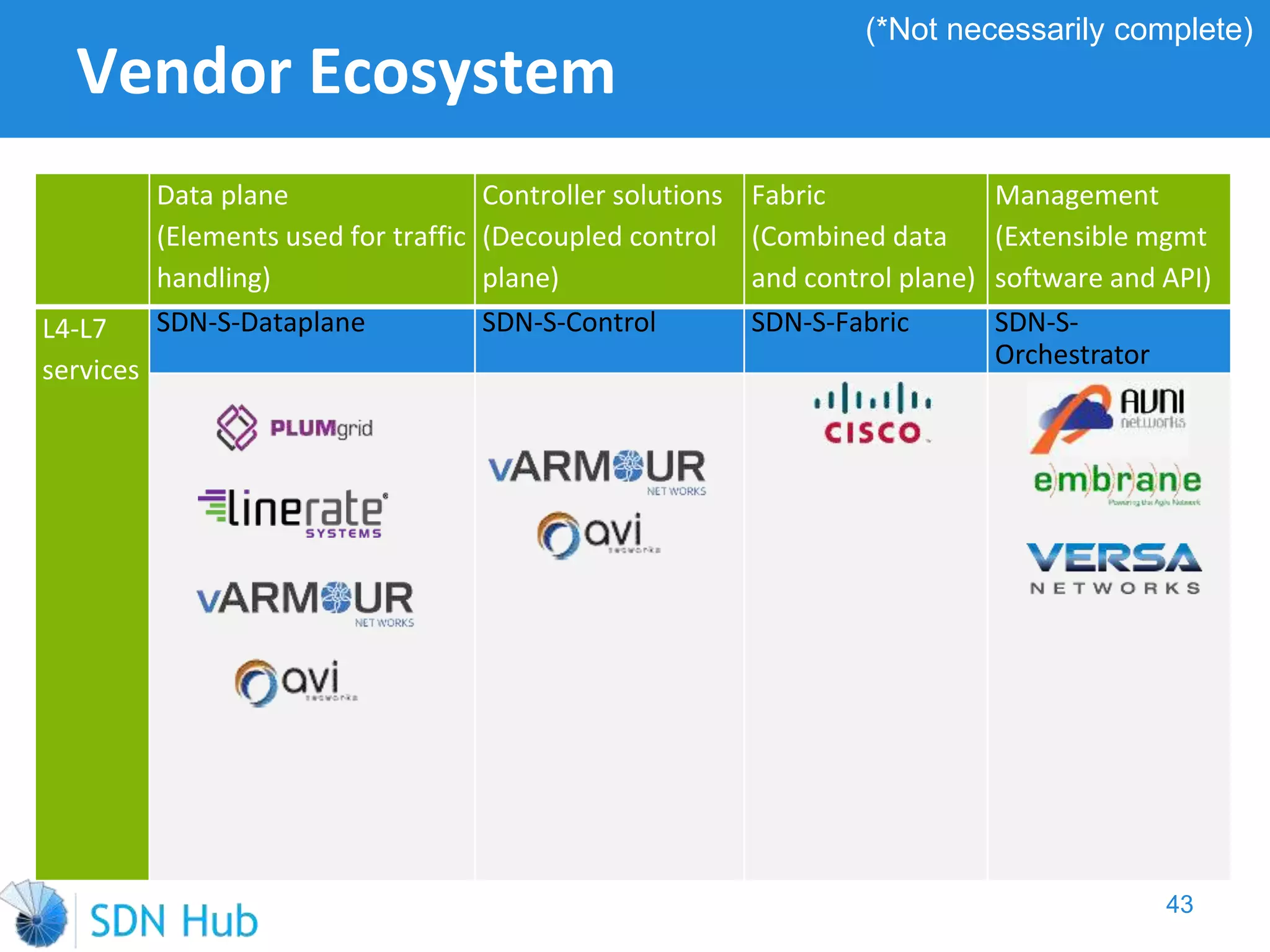

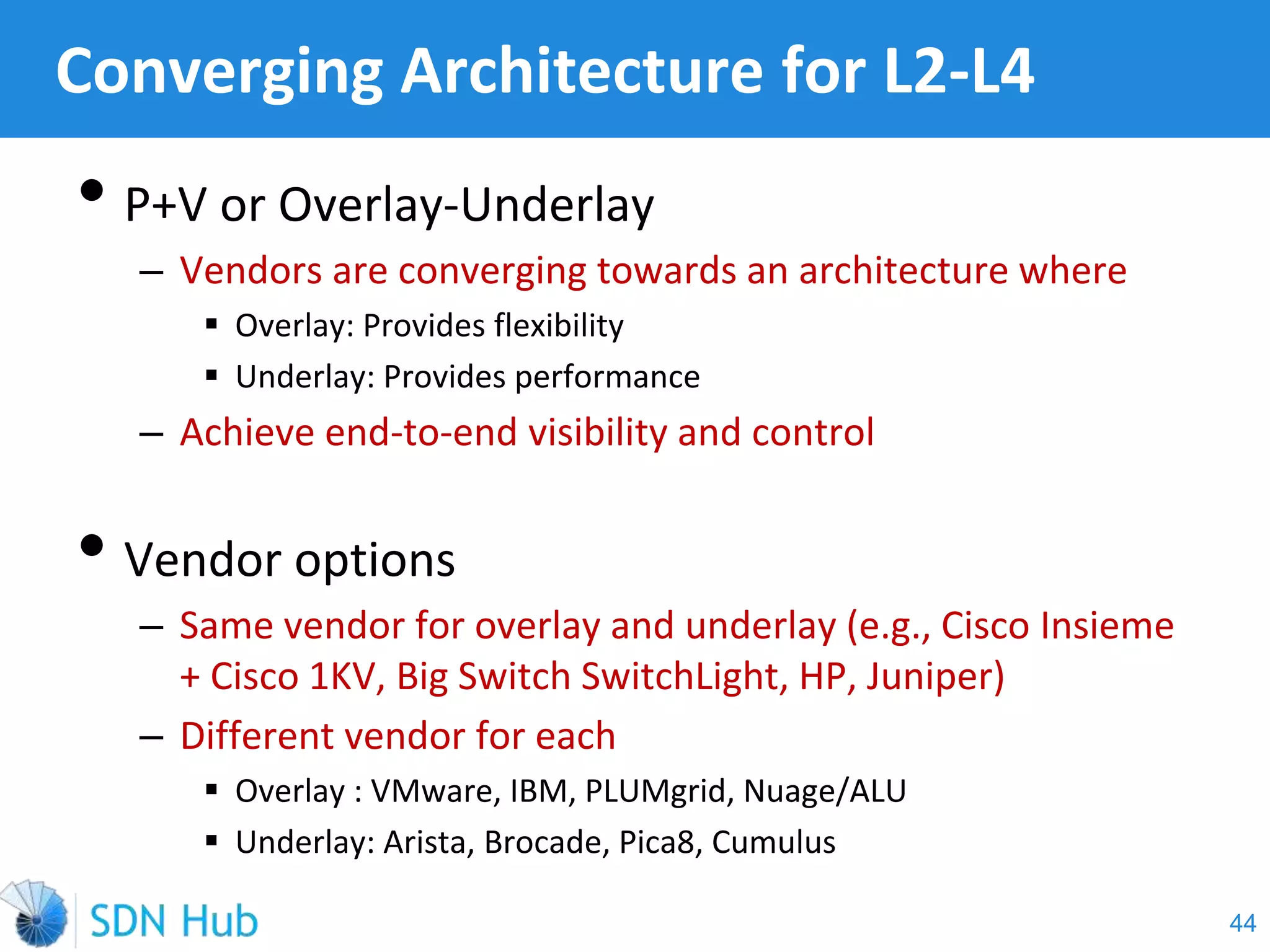

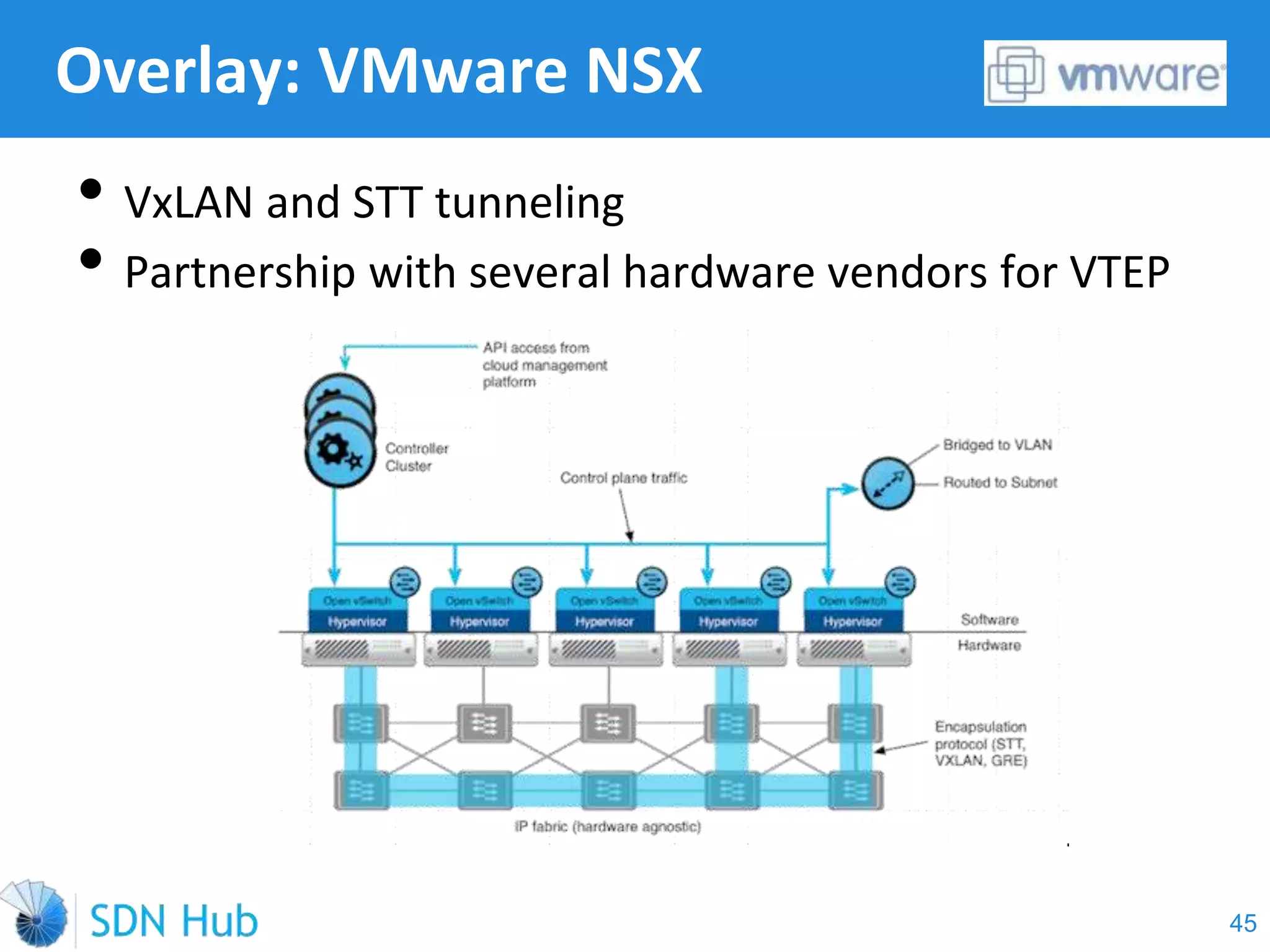

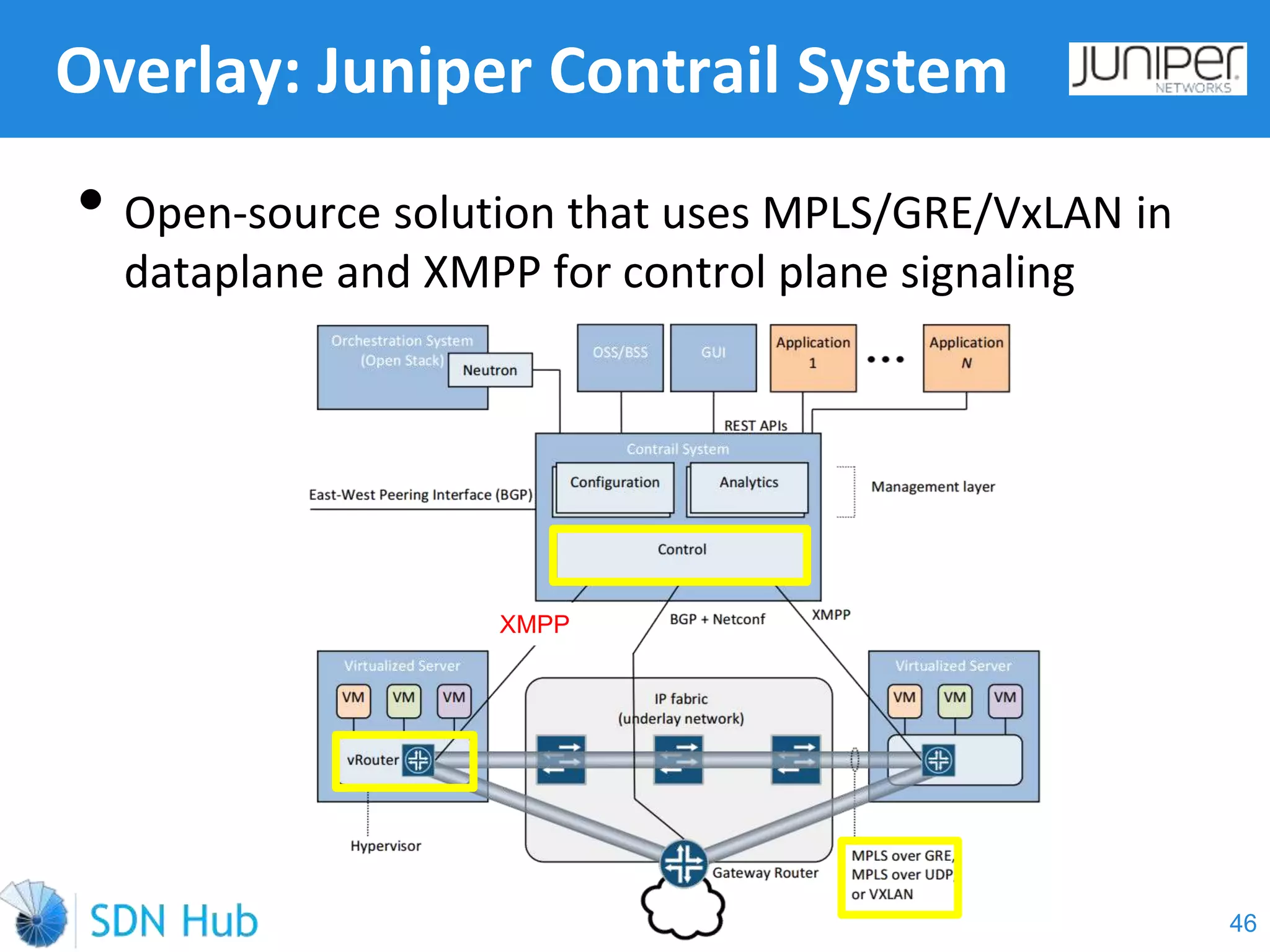

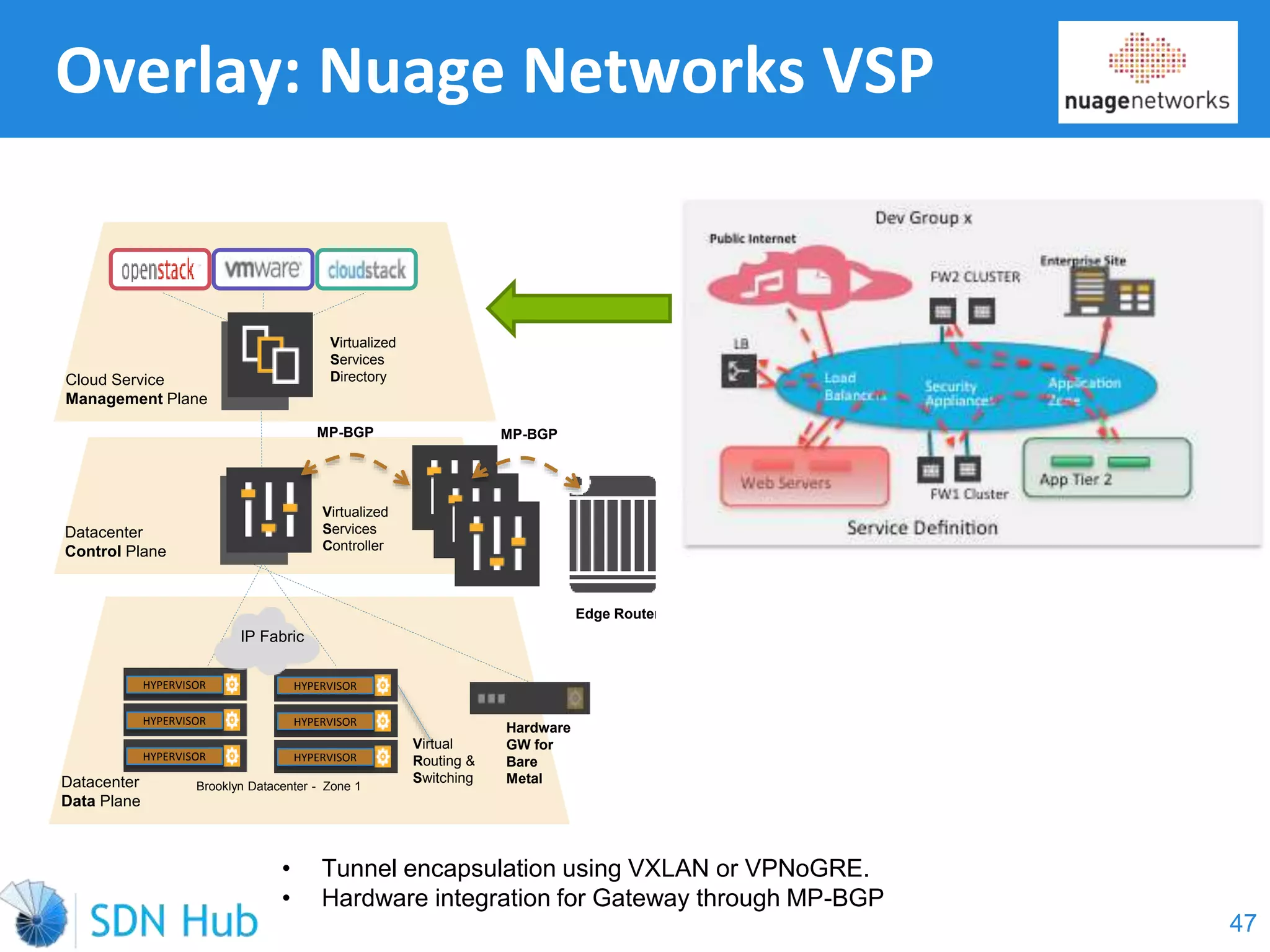

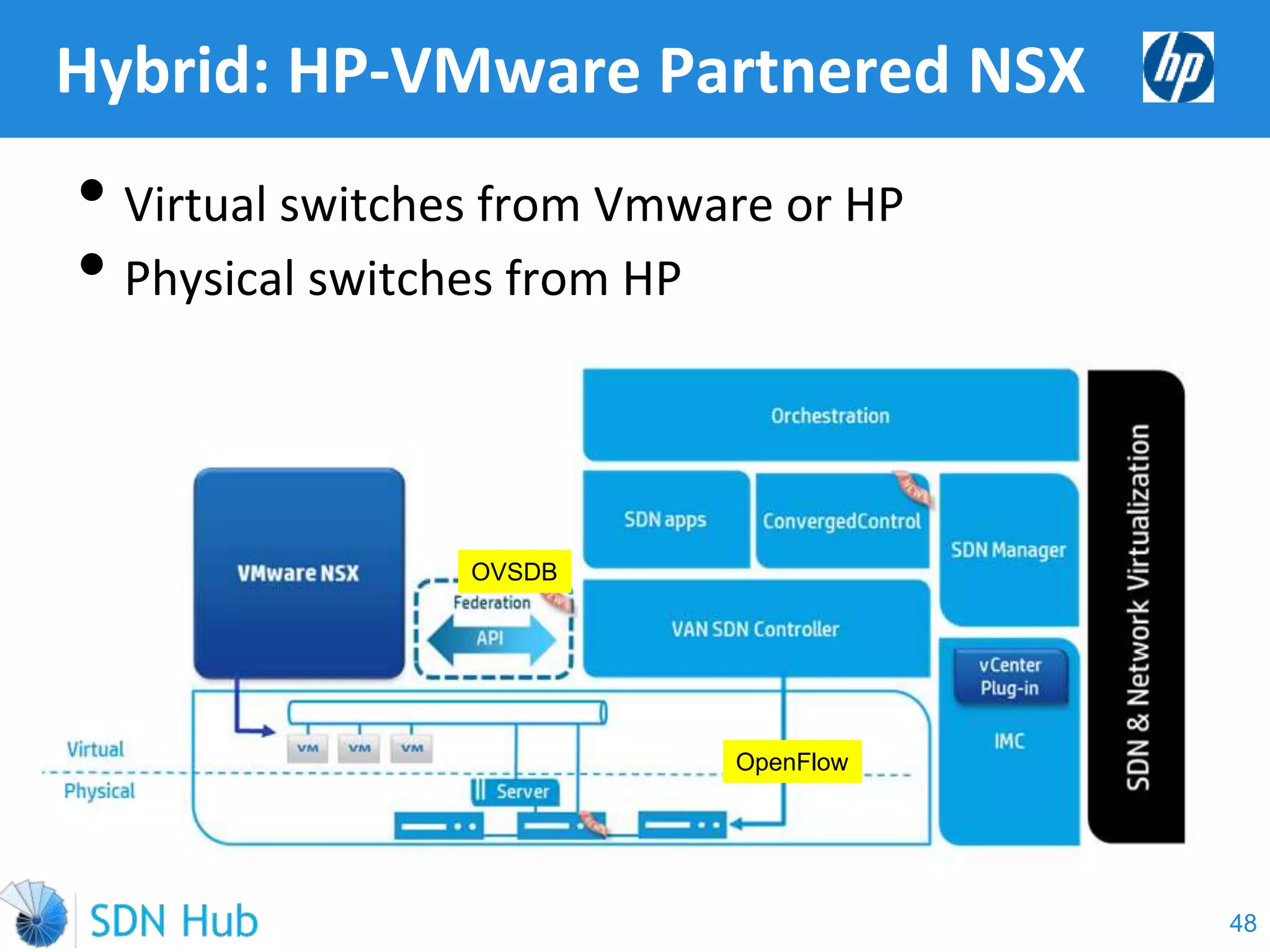

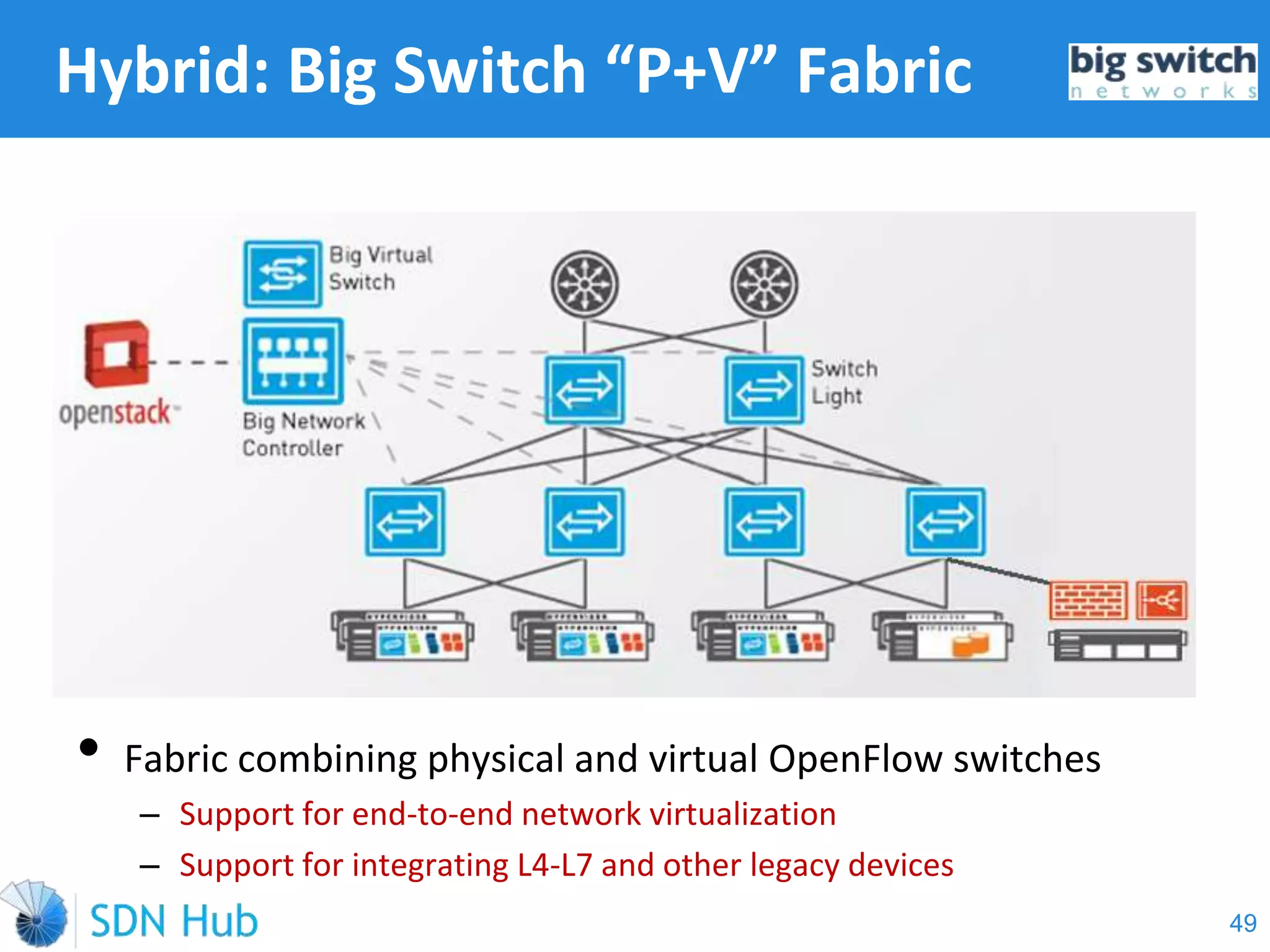

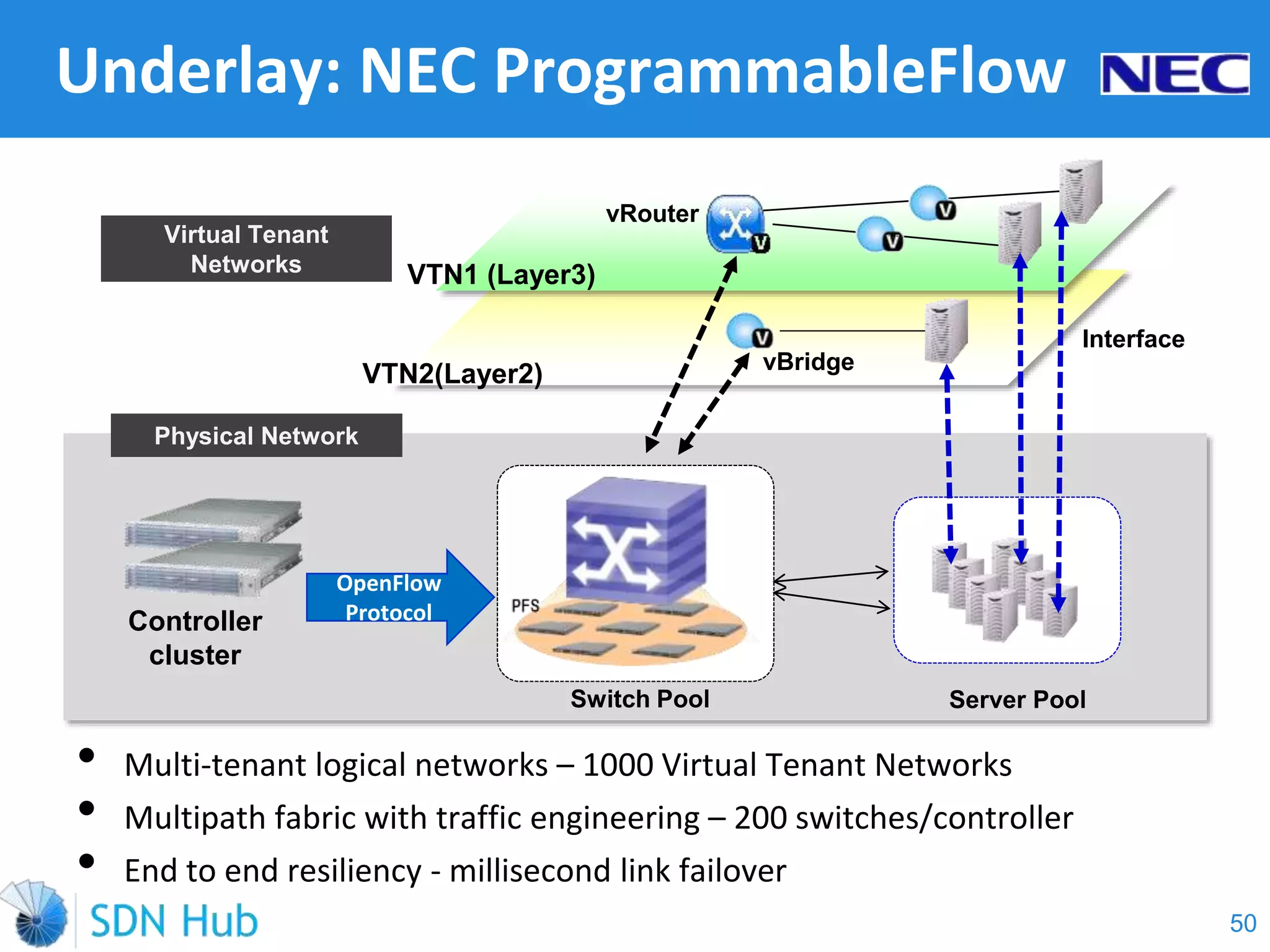

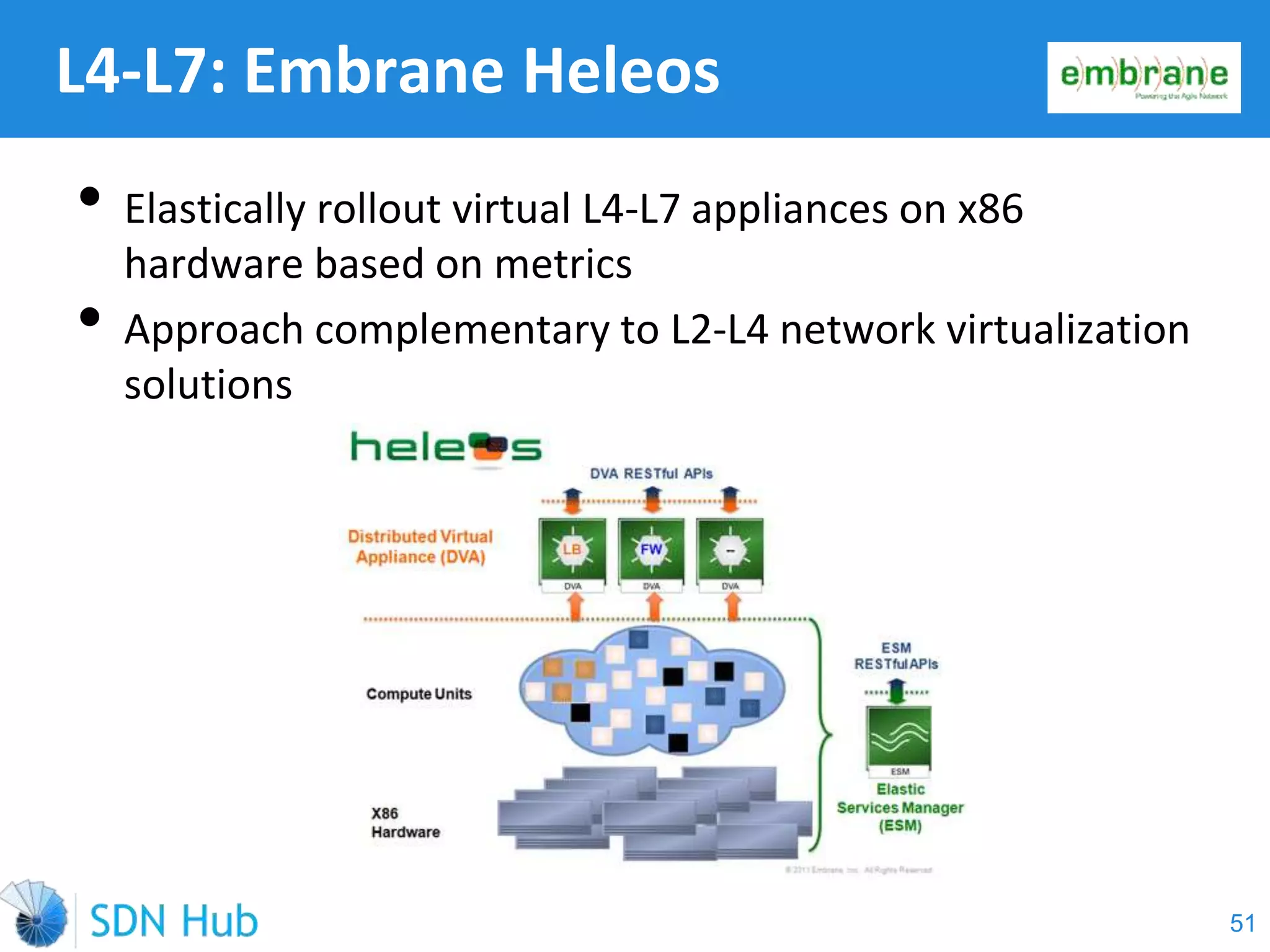

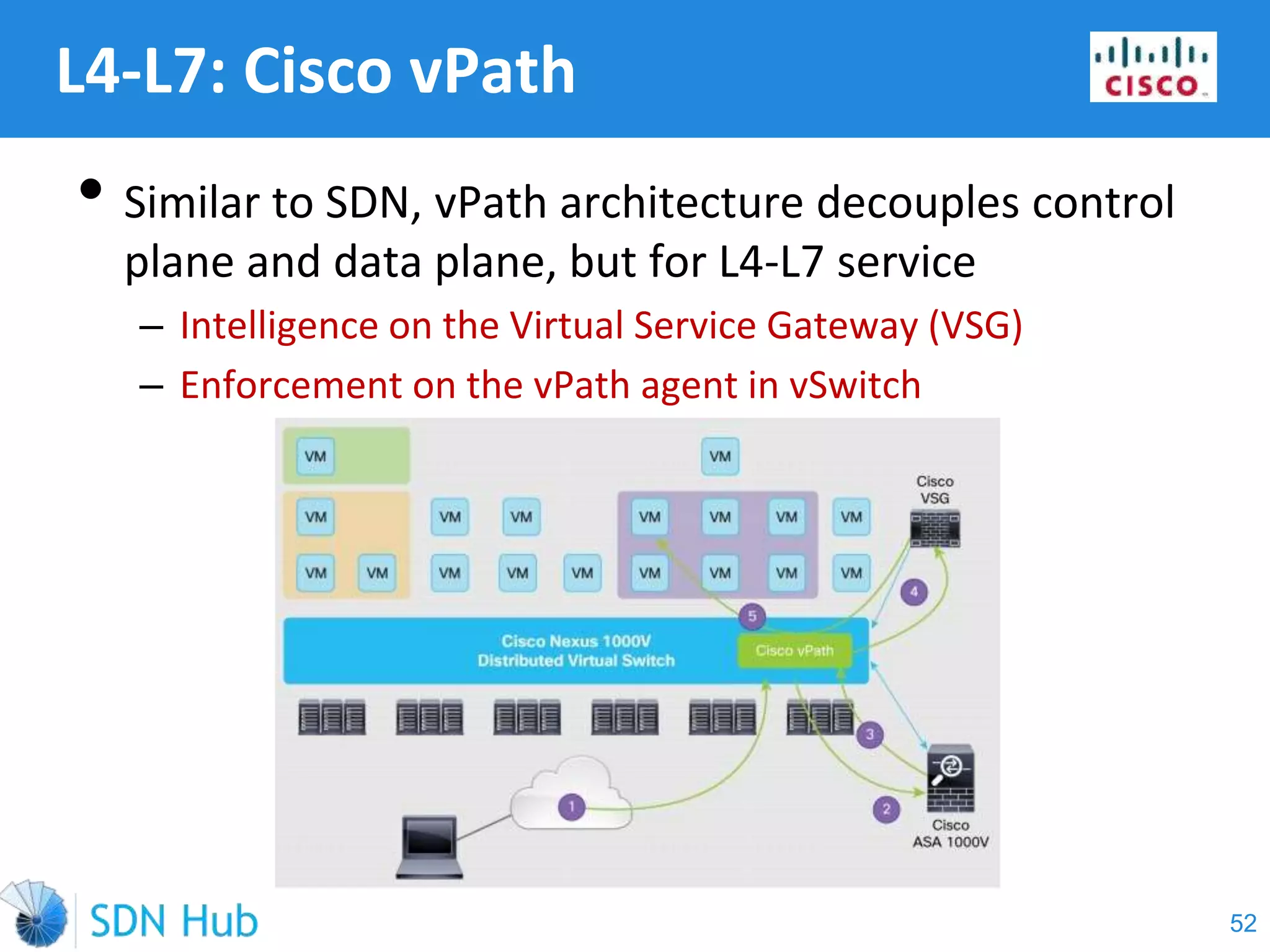

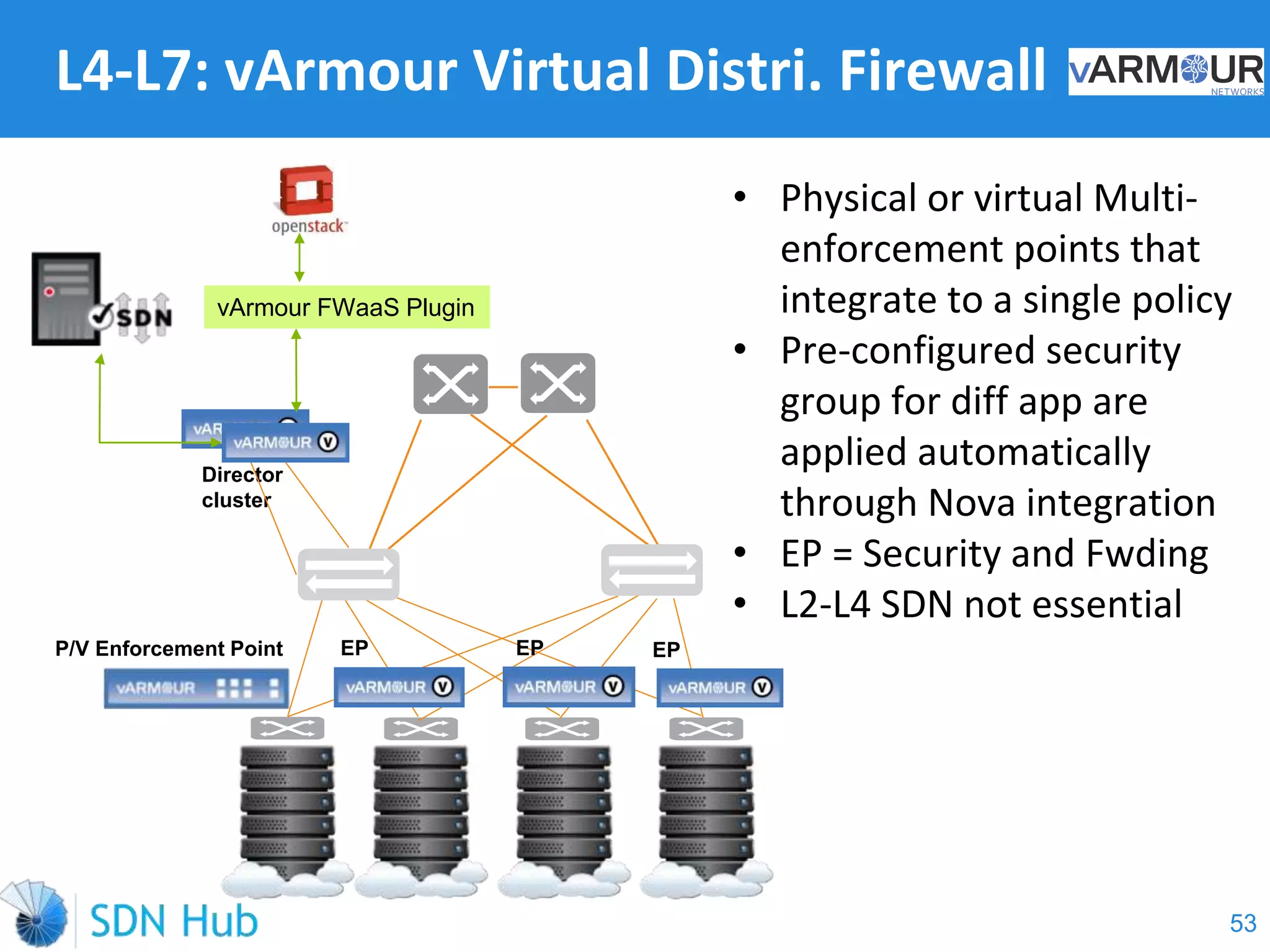

The document discusses the theory and implementation of network virtualization, emphasizing the benefits of software-defined networking (SDN) for managing multi-tenant cloud environments. It details the shortcomings of existing network infrastructures, including inefficient resource utilization and poor tenant isolation, and presents SDN as a way to improve the efficiency and scalability of network resources and to simplify their orchestration. The document also surveys vendor solutions and emerging technologies in the network virtualization landscape.