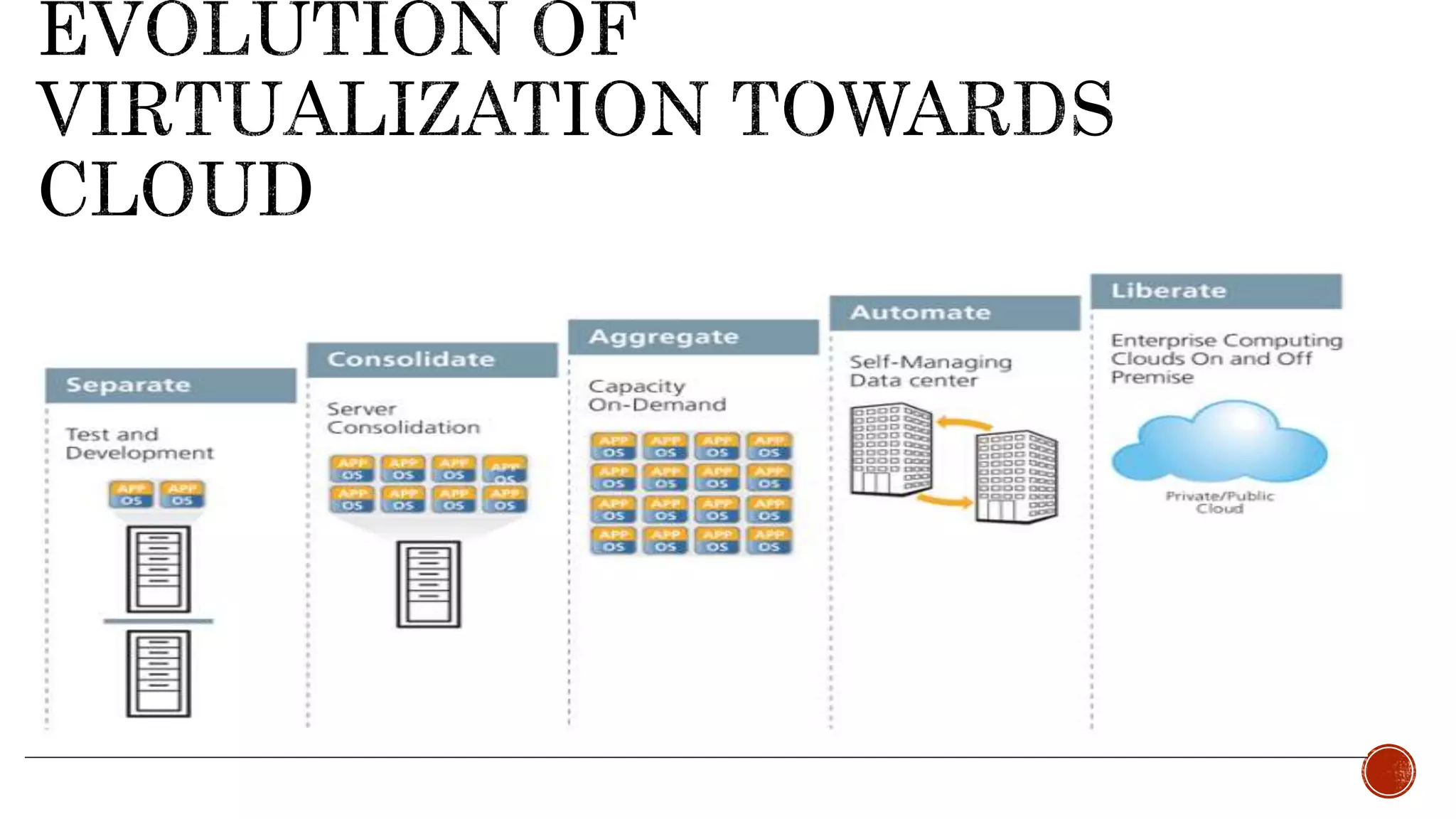

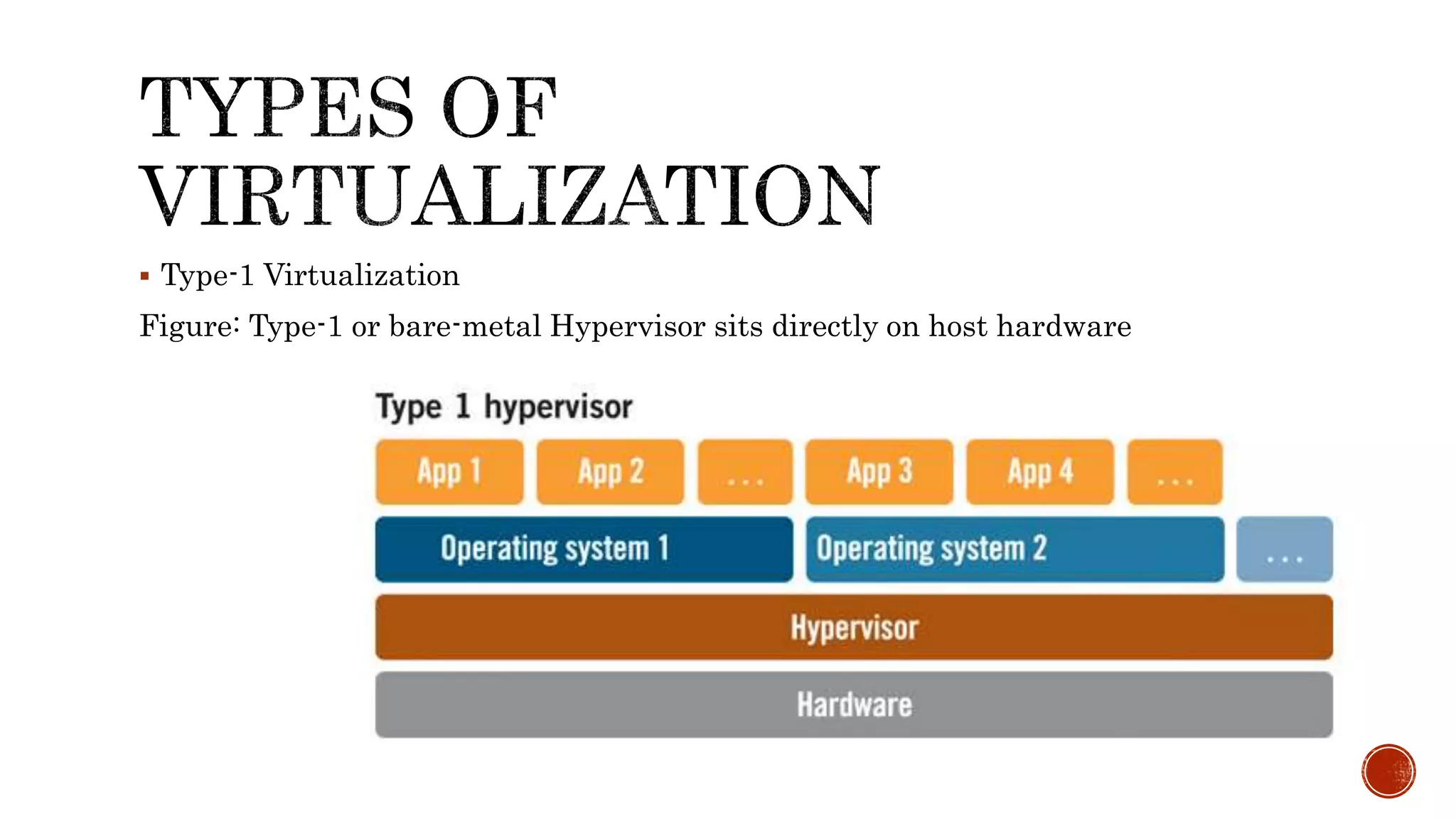

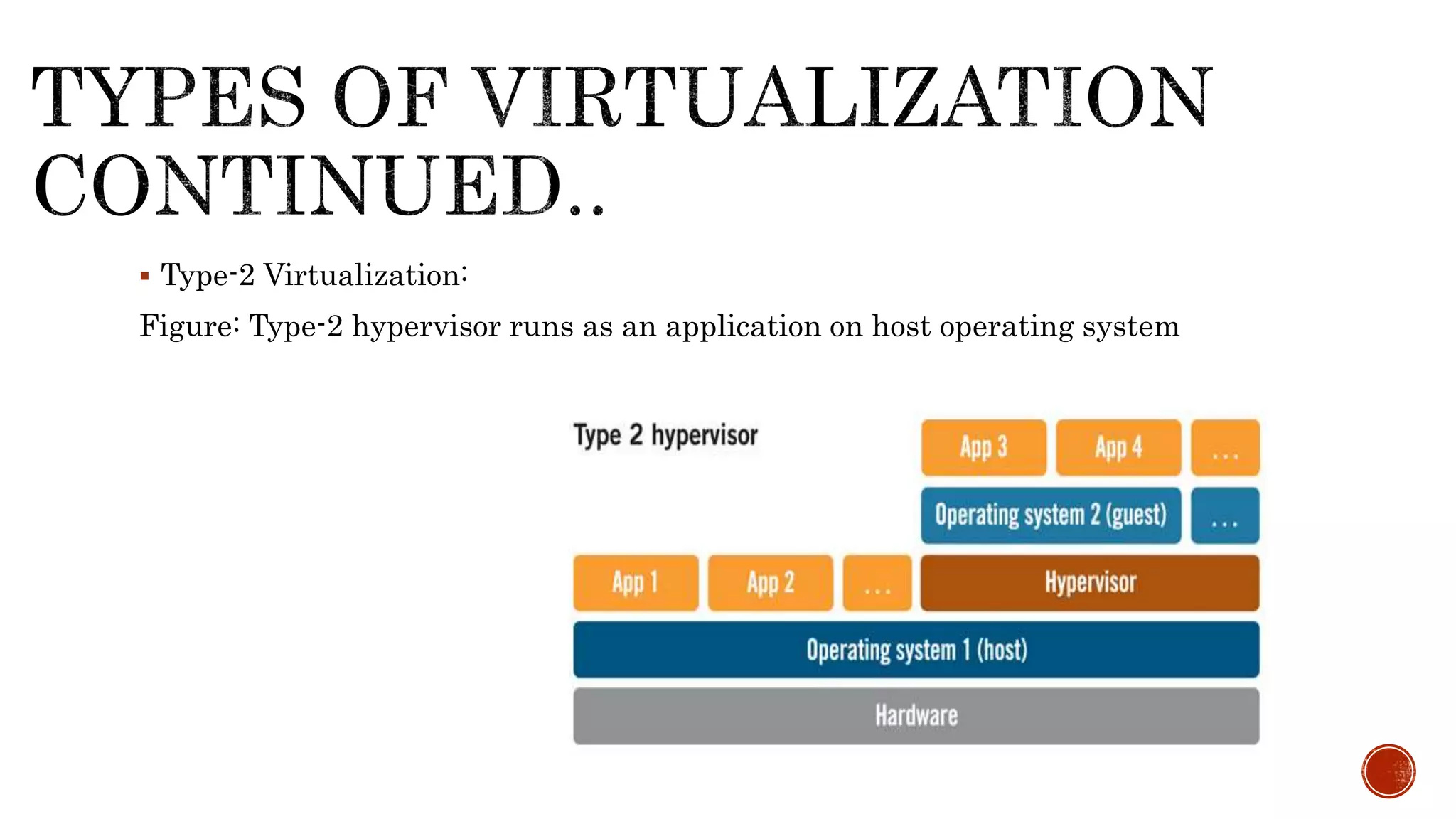

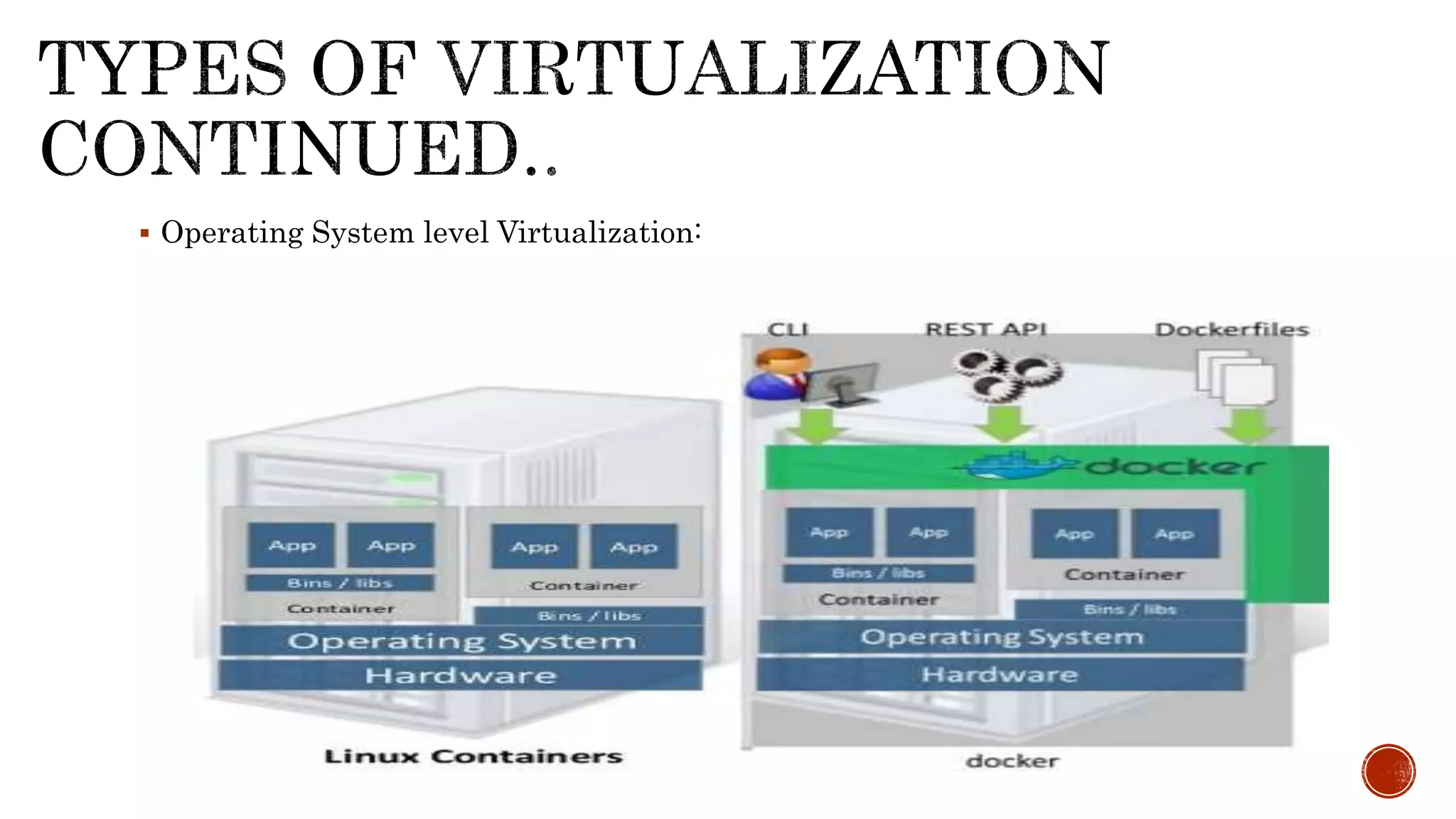

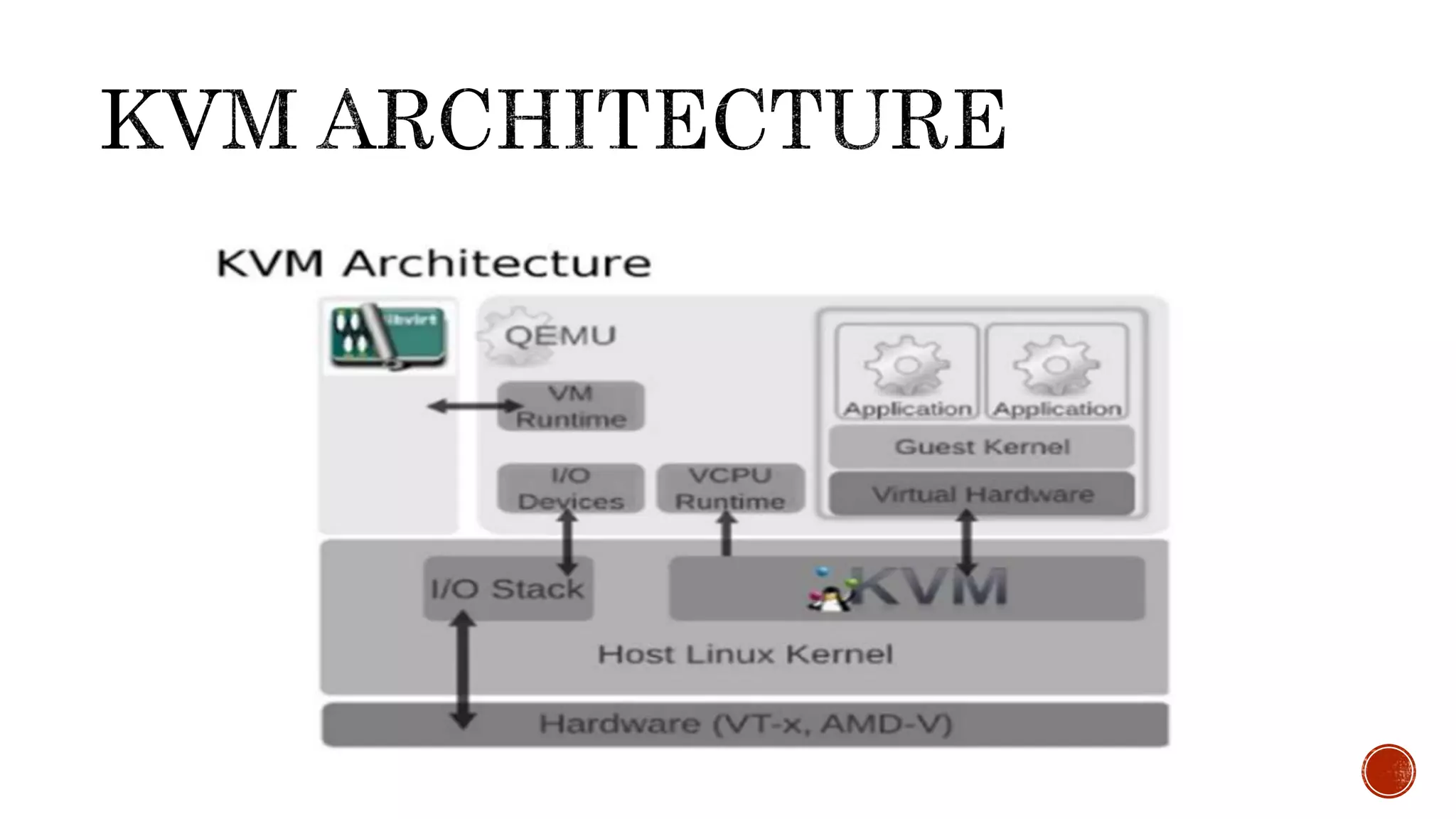

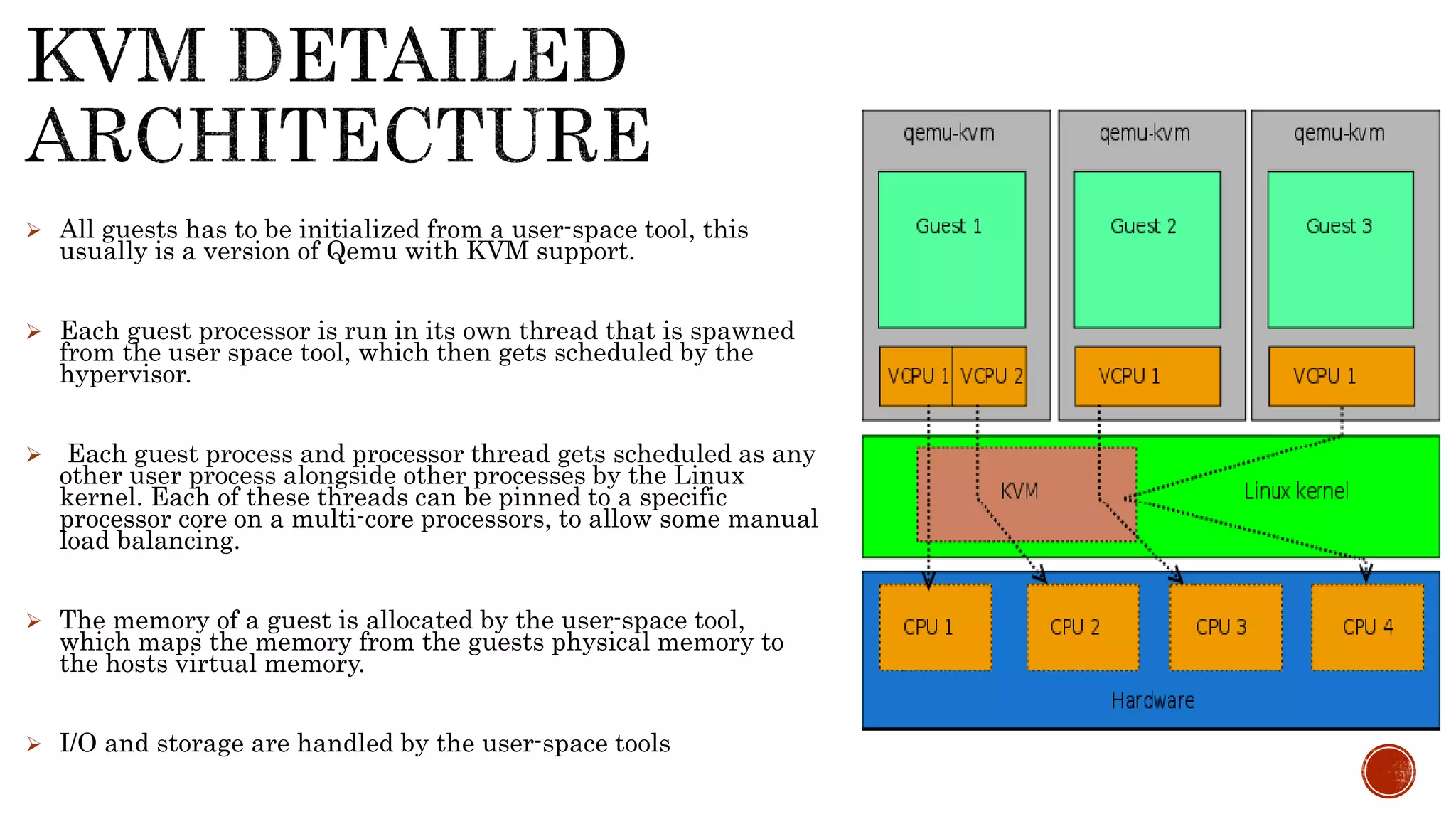

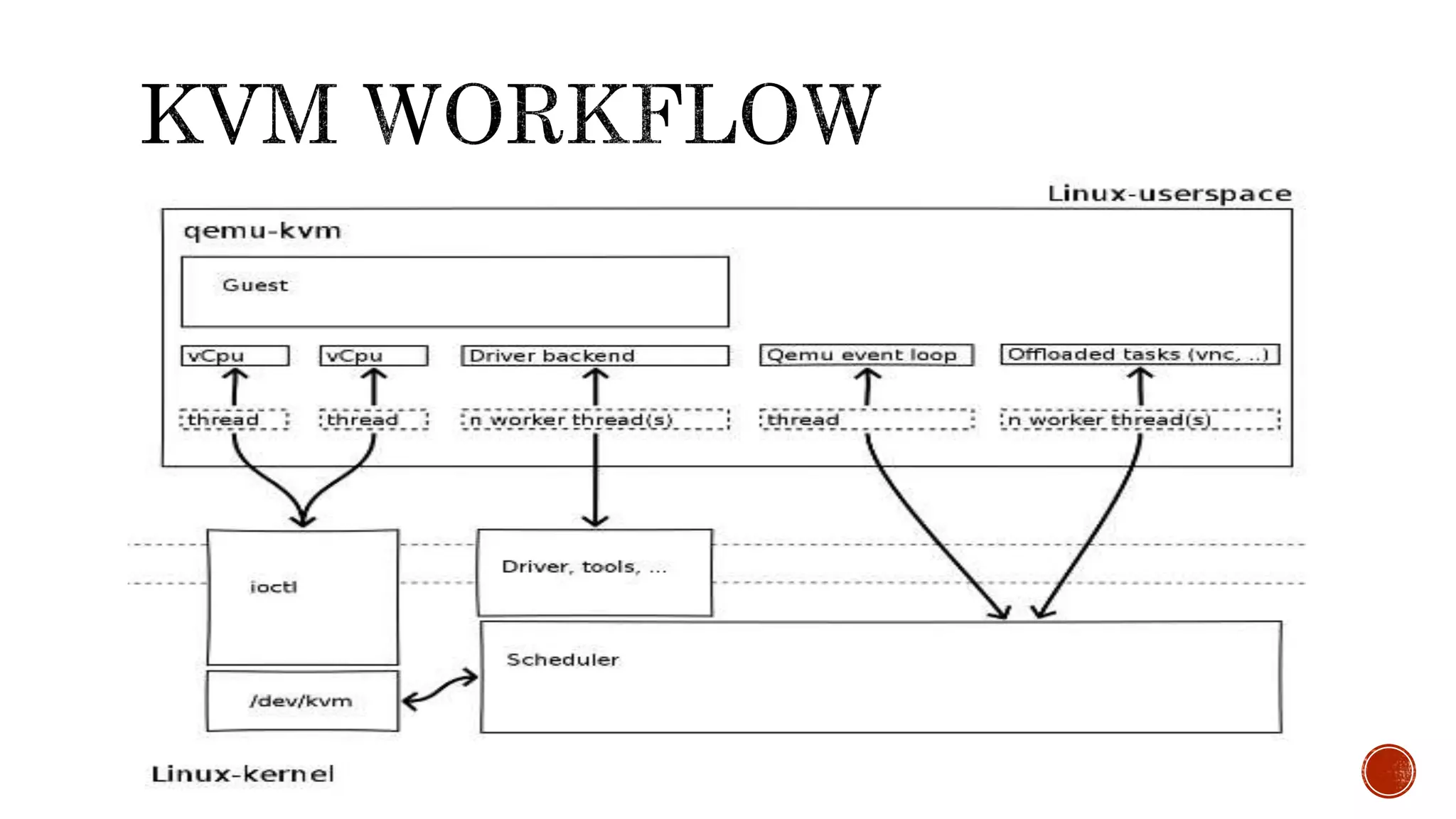

This document gives an overview of virtualization, focusing on hypervisors and their two types: Type-1, which runs directly on the hardware, and Type-2, which runs on top of a host operating system. It also covers the virtualization tools KVM and QEMU. It highlights the benefits of virtualization, such as server consolidation and easier management, alongside its drawbacks, including performance overhead and the host becoming a single point of failure for every guest it runs. Finally, it explains how KVM reuses existing Linux kernel features, such as the scheduler and memory management, so that each guest runs as a regular Linux process.
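As a small illustration of KVM being exposed through ordinary Linux kernel interfaces, the sketch below asks the kernel for its KVM API version. It assumes a Linux host where the KVM module publishes the `/dev/kvm` device node; the helper name `kvm_api_version` is hypothetical, and the request number is the value of `KVM_GET_API_VERSION` from `<linux/kvm.h>`. This is only a probe under those assumptions, not how QEMU or any other tool is actually implemented.

```python
import fcntl
import os

# Device node exposed by the KVM kernel module (assumed default path).
KVM_DEVICE = "/dev/kvm"

# KVM_GET_API_VERSION is _IO(KVMIO, 0x00) with KVMIO = 0xAE in <linux/kvm.h>.
KVM_GET_API_VERSION = 0xAE00


def kvm_api_version(device=KVM_DEVICE):
    """Return the KVM API version, or None if KVM is not usable here.

    Hypothetical helper for illustration only.
    """
    try:
        # An ordinary user-space process opens the device like any other file.
        fd = os.open(device, os.O_RDWR | os.O_CLOEXEC)
    except OSError:
        # Missing module, missing device node, or insufficient permissions.
        return None
    try:
        # The ioctl's return value is the API version number.
        return fcntl.ioctl(fd, KVM_GET_API_VERSION)
    except OSError:
        return None
    finally:
        os.close(fd)


if __name__ == "__main__":
    version = kvm_api_version()
    if version is None:
        print("KVM is not available on this host")
    else:
        print(f"KVM API version: {version}")
```

The point of the sketch is that nothing special is needed to talk to KVM: a normal process opens a device file and issues `ioctl` calls, which is the same mechanism a user-space tool such as QEMU builds on when it creates guests.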