-

Notifications

You must be signed in to change notification settings - Fork 30.9k

Allow saved_model export of TFCLIPModel in save_pretrained #16886

New issue

Have a question about this project? Sign up for a free GitHub account to open an issue and contact its maintainers and the community.

By clicking “Sign up for GitHub”, you agree to our terms of service and privacy statement. We’ll occasionally send you account related emails.

Already on GitHub? Sign in to your account

Conversation

|

The documentation is not available anymore as the PR was closed or merged. |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Thank you @seanmor5 for these valuable changes 🙏 We know that serialization (and compiling into graphs) is dodgy at the moment, and we are planning to improve it soon -- perhaps borrowing some changes from this PR.

Added a few minor notes.

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Converting to a tuple would break the assumption in the type hint. Can we remove this line?

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Updated, but unfortunately the test fails because TFCLIPOutput is a nested dataclass---I'm not 100% if TF can trace through nested dataclasses or not, or if the reason it's failing lies somewhere else

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Uhmmm that is inconvinient. Changing the output type would mean a different TF-PT API, which we would like to avoid, but failing at serving time is also very undesirable 🤔

Can you share the stack trace of the error?

|

Tagging @ydshieh -- this PR adds several tests |

bcbb239 to

177684a

Compare

|

@gante No problem, glad to help now and if you have plans to improve graph/saved_model serialization in the future I will be glad to help then as well :D |

|

@seanmor5 Thank you for this PR 🚀 ! @gante Let me take a look before merge. I haven't checked yet, but from the author @seanmor5 's description (regarding Therefore I would like to check a bit more on my side 😄 |

I think we will have to touch a significant number of models for XLA/Saved Model, to be honest 😅 |

|

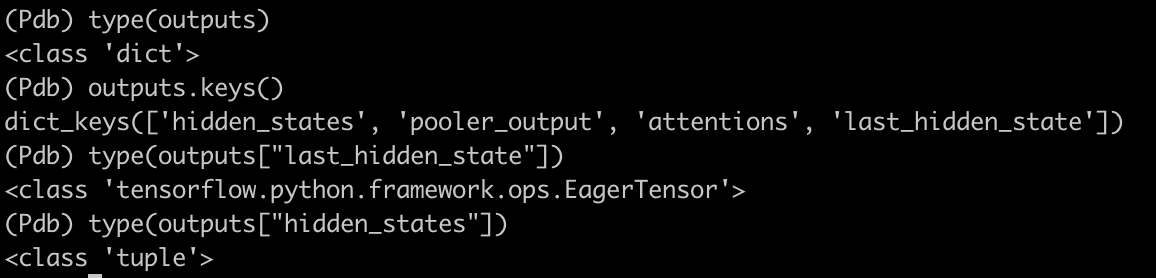

Hi, I could confirm the issue from If I change the signature of I will check running the model in |

|

Regarding Code snippetfrom transformers import TFCLIPTextModel, TFCLIPVisionModel, TFCLIPModel, CLIPConfig

import os

import tensorflow as tf

ckpt = "openai/clip-vit-base-patch32"

config = CLIPConfig.from_pretrained(ckpt)

text_config = config.text_config

model = TFCLIPTextModel.from_config(text_config)

def get_inputs(batch_size, seq_len):

input_ids = tf.constant(1, shape=[batch_size, seq_len], dtype=tf.int32)

attention_mask = tf.constant(1, shape= [batch_size, seq_len], dtype=tf.int32)

inputs = {"input_ids": input_ids, "attention_mask": attention_mask}

return inputs

inputs_1 = get_inputs(3, 5)

inputs_2 = get_inputs(4, 7)

outputs = model(**inputs_1)

# print(outputs)

@tf.function

def foo(inputs):

outputs = model(**inputs)

return outputs

outputs = foo(inputs_1)

outputs = foo(inputs_2) |

|

Sorry for being a bit picky, but I would prefer to get better context in order to decide such changes. In particular, if this issue only occurs during saving to saved_model, I think we can do some more research first to see if there is better solution. |

|

@ydshieh No problem! You are right, I was assuming there might be issues with EDIT: This is a failing case for I am open to exploring whatever other options you think might be better |

|

OK. Maybe doing some more research is good. I will find time to get some more ideas. I always feel that these limitations not easy to handle, but so far (my own) use cases could use a fixed shape (other than batch dim). |

|

For context, we have been suggesting to users to pad and set the second dimension of the shape to the model's maximum sequence length, in this sort of situation. However, it's unoptimized, a manual process, and it doesn't work well in all situations (e.g. in auto-regressive text generation with models like GPT-2, the defined padded input length has to be smaller than the max sequence length, to allow new tokens to come in, but big enough to handle all prompts). |

|

I understand, and that's what I will do although not the best ideally. One question is: if we use |

|

@ydshieh So I applied the patch with One thing to note is that without the input signature to relax the sequence length constraint, the function is retraced which can be a performance hit. With But this version is retraced without an input signature: |

|

@seanmor5 Thank you for all the effort providing the information. I will take a look (you know a lot about the TF graph thing 🚀 ! |

|

I could confirm all @seanmor5 states, and also find the TF doc here I start to think that's the design. Not sure why TF decides to do so, maybe there are reasons to separate the static constants and dynamic constants (performance consideration?). I am in favor to approve. I will take some time to check the added tests, and think about it a bit more in the meantime. |

|

@ydshieh Thank you! I've had to debug way too much TF code in my life so I've gotten use to it :) So unfortunately the last thing that needs to be addressed is the failing |

|

@seanmor5 that exception is raised here -- i.e. on the TF serving side, so outside our control. It means we have to change something about our API if we want to support serving for all outputs. I'm calling in for second opinions: @sgugger, as he's more experienced in these situations, and @Rocketknight1, my fellow TF MLE. @sgugger @Rocketknight1 some context:

A few additional notes:

Potential solutions:

|

|

For more information, is it possible to convert the nested fields (here |

|

I haven't tried TF serving yet (probably just once). For HF models, while |

@sgugger Yes, it is possible, and it should solve the problem ( |

I guess @sgugger doesn't necessarily mean we should use I would much prefer using dictionary though. Maybe let us check what really gives as outputs when we use HF's TF serving model to make the decision? |

@ydshieh Can confirm that the output of a loaded model using (output types of a reloaded I experimented with converting the problematic variables to multiple formats:

All of them result in the same exception. I also tried to look into documentation on how to cast into The only thing that seems to work is a flat serving structure, without nested components. @seanmor5, I got the exact same exception when attempting your original solution, with |

|

Are those composite outputs really useful for the serving? Can't we just remove them entirely? |

|

Probably. We can leave them as a TODO and wait for an issue :) @seanmor5 would you be okay with that? (I'm afraid we are hitting a hard problem for a feature we probably don't need) |

|

Thank you for the work, @gante ! Surprised that Could you share the code you use 🙏 ? |

|

@ydshieh Thank you for the review! I believe I addressed all comments |

|

@seanmor5 Thank you. I will take a look. Regarding the tests:

|

|

@ydshieh Thank you! I was running black locally but it seemed for some reason it was not catching the formatting issues. I have fixed the issues, though it seems the code quality is still failing but from the |

|

Regarding the style, could you follow this comment, please? And I just merged a (big) PR, so you will need to move a bit the test file, please see this comment: Thank you so much! |

Co-authored-by: Joao Gante <joaofranciscocardosogante@gmail.com>

This reverts commit a4abfa6ba3b7875a13538dbc2ddc4eb17dfcca8d.

b41515f to

eb2a2a7

Compare

|

@ydshieh Beautiful! That worked great! |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

LGTM. Let's wait @sgugger approval too, and see if @Rocketknight1 has any further comment :-)

Co-authored-by: Yih-Dar <2521628+ydshieh@users.noreply.github.com>

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Thanks for all the work on this!

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Quick review: Replacing tf.constant with tf.fill for potentially variable sizes is completely correct, good job! The added test is also very valuable, so I'm happy to approve this.

|

Merged. Thank you again, @seanmor5 . Let's see if we could find a way to make |

…ce#16886) * CLIP Serving * Add type hints per code review * Use black, flake8, and isort * Update src/transformers/models/clip/modeling_tf_clip.py Co-authored-by: Joao Gante <joaofranciscocardosogante@gmail.com> * Rollback serving_output and add TODO * Remove irrelevant portions of failing tests * Revert "Rollback serving_output and add TODO" This reverts commit a4abfa6ba3b7875a13538dbc2ddc4eb17dfcca8d. * Rollback to original test/serving_output * Fix unused var * Apply suggestions from code review * Update formatting with black * Fix style again from rebase * Update tests/models/clip/test_modeling_tf_clip.py Co-authored-by: Yih-Dar <2521628+ydshieh@users.noreply.github.com> Co-authored-by: Joao Gante <joaofranciscocardosogante@gmail.com> Co-authored-by: Sean Moriarity <sean.l.moriarity.mil@army.mil> Co-authored-by: Yih-Dar <2521628+ydshieh@users.noreply.github.com>

What does this PR do?

I apologize if this is out of scope. There were a few bugs in TFCLIPModel which prevented the model from being exported using the TensorFlow SavedModel format:

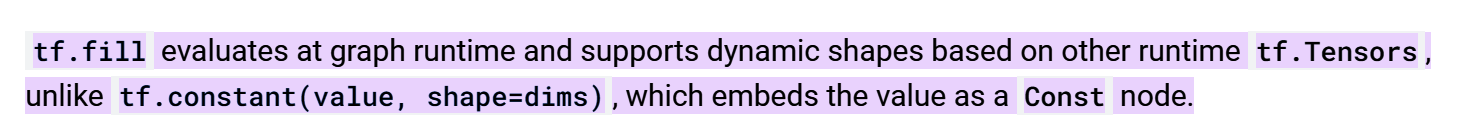

_build_causal_attention_maskmakes use oftf.constantwith a runtime dynamic value. It seemsshape_listmakes use oftf.shapewhich returns a symbolic tensor (inside autograph), which prevents the graph from being fully traced.tf.constantdoes not allow runtime dynamic values, buttf.filldoes, so I replacedtf.constantwith atf.castandtf.fillcombo. I don't even thinkTFCLIPModelwould run inside atf.functionwithout this change because the autograph trace fails.TFCLIPTextModelneeds to override theservingdefault implementation. The default implementation expectstoken_type_idswhich is not a valid input here.serving_outputfor TFCLIPModel has some issue with tracing through nested dataclasses, which I can't seem to get right just quite yet. Ideally it should be as easy as callingserving_outputontext_model_outputandvision_model_output(since there is someconvert_to_tensorstuff going on in each output). I was having problems with TensorFlow sayingTFBaseModelOutputWithPoolingnot being a tensor, so I figured the tuple conversion would work, but it doesn't seem to be the fix.I added tests for exporting each of

TFCLIPModel,TFTextModelandTFVisionModelas saved models to verify individual components work and that it's the integration of both that's failingcc @LysandreJik for TensorFlow changes