-

Notifications

You must be signed in to change notification settings - Fork 25.7k

[jit]maskrcnn & bert AD coverage part 1 #16689

New issue

Have a question about this project? Sign up for a free GitHub account to open an issue and contact its maintainers and the community.

By clicking “Sign up for GitHub”, you agree to our terms of service and privacy statement. We’ll occasionally send you account related emails.

Already on GitHub? Sign in to your account

Conversation

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

This looks good. I have a few nits, most important of which is to avoid saving self if all that is needed is self.sizes(). Saving something just for its sizes potentially uses a lot of memory. I tried to find all the places where just the sizes could be saved.

|

|

||

| - name: mean(Tensor self, IntList dim, bool keepdim) | ||

| self: sum_backward(grad, self.sizes(), dim, keepdim) / _safe_size(self.sizes(), dim) | ||

| self: sum_backward(grad, self.sizes(), dim, keepdim) / at::_safe_size(self.sizes(), dim) |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

in person: microbenchmark that moving these functions into at:: did not add any overhead. Microbenchmark by just running, e.g. mean forward/backward on small sizes.

torch/csrc/jit/symbolic_script.cpp

Outdated

| auto pos = schema_name.find_last_of('_'); | ||

| auto schema_name_suffix = schema_name.substr(pos + 1); | ||

| std::string key = canonicalSchemaString(actual_schema); | ||

| if (!schema_name_suffix.empty() && schema_name_suffix.find_first_not_of("0123456789") == string::npos) { |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Can you refactor the string processing into function?

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Why are we adding a bunch of backward ops instead of putting them as Python code in AD? From what I understand the plan is to deduplicate them later, and start using Python for everything, so it doesn't seem necessary to do that in every case

| return torch.mean(self), backward | ||

| def mean_1(self, |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

What is mean_0 and mean_1? How do we match up those things with definitions later?

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

These suffixes will be removed later when looking up function schema cache. This is a hack to simulate function overloading we have in aten. https://github.com/pytorch/pytorch/pull/16689/files/1a3d72ec52ddc4755008dfe920d4c0a25b8a1e13#diff-758cb565d2c84fc5268de0b63958f78dR330

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

-

name: mean(Tensor self)

-

name: mean(Tensor self, ScalarType dtype)

-

name: mean(Tensor self, IntList dim, bool keepdim)

-

name: mean(Tensor self, IntList dim, ScalarType dtype)

-

name: mean(Tensor self, IntList dim, bool keepdim, ScalarType dtype)

There are mean overloads that has dtype in the signature, should we also include those overloads?

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

@wanchaol Yea but this dtype option is not exposed in our public api. Thus these ops are not specifically tested in common_methods_invocations.py. Do you know some ops that call into the dtype version of mean?

I didn't want to add some ops without proper tests, thus those were not in this PR.

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

I found one callsite in here, but that's only a test and no ops found that calls mean with dtype, so I guess it should be fine.

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

aha this option is actually exposed but not documented :( a = torch.mean(a, dtype=torch.double) works. I'm can add AD & test for them.

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Actually I realized all dtypes here are keyword only arguments, and since we don't document them I assume it's rarely used in real models :P

I would prefer to add them together with proper keyword-only argument parser so that we are less hacky on this. But if there's a usecase that needs this AD formula, let me know so that I can prioritize. :D

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

I don't think we have too much use cases on this either. Let's just add this with the keyword arg parser together.

|

@apaszke I added those backward function since they already existed in In general I think if we want Python for everything in future, maybe we can move them after we enrich our Torchscript with a larger subset of Python language? (eg. list comprehension etc). |

56d3f49 to

14dd5b9

Compare

14dd5b9 to

e89a93f

Compare

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

@ailzhang has imported this pull request. If you are a Facebook employee, you can view this diff on Phabricator.

|

6.277 vs 6.821 is like an 8% slowdown. Is that number stable across runs, in which case it seems bad, or is it just noise? The test of the mean operator should be compared against the base revision as well since we want to know if we regressed the backward speed by adding the aten op. |

|

My old benchmarks were run with |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

@ailzhang has imported this pull request. If you are a Facebook employee, you can view this diff on Phabricator.

Summary: - Moved a few functions from `autograd` namespace to `aten` namespace to be visible from JIT nativeResolver. - Added a hack to loop up keyword only argument. Will add proper support for kw only later - Simulate function overload in aten using `_<number>` as function name suffix. - Even `forward` returns multiple outputs like in `kthvalue`, there's at most one requires grad that we currently support. - Removed the `TensorList` related ops here since partial `TensorList` support is prone to bugs. Our symbolic diff for `cat` was never tested with autodiff, and it seems broken. Need to find another proper way to support these ops(either by properly supporting `TensorList` or sth like `prim::ConstantChunk` and leave them for next PR. Ops supported in this PR: ``` erf expand_as index kthvalue mean permute pow rsub select sqrt squeeze t to topk transpose view var embedding logsumexp // grad is None _dim_arange contiguous nonzero ones_like ``` Pull Request resolved: pytorch/pytorch#16689 Differential Revision: D14020806 Pulled By: ailzhang fbshipit-source-id: a5e2c144a7be5a0d39d7ac5f93cb402ec12503a5

| auto schema_name_suffix = schema_name.substr(pos + 1); | ||

| std::string schema_string = canonicalSchemaString(schema); | ||

| if (!schema_name_suffix.empty() | ||

| && schema_name_suffix.find_first_not_of("0123456789") == string::npos) { |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

missing std:: before string::npos, failing here on all my builds :/, weird that no CI catched this. @ailzhang

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

@sinkingsugar Would you mind using the collect_env.py (https://raw.githubusercontent.com/pytorch/pytorch/master/torch/utils/collect_env.py) to let me know your environment?

Basically this is indeed a typo on my side, but it got hidden by this header file included indirectly. https://github.com/pytorch/pytorch/blob/master/c10/util/string_utils.h#L6 We likely want to fix this on our side. But I'm curious why your build failed. Thanks!

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

At a guess, probably some ifdefs toggled differently causing the header to not be included.

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

@ezyang Yea agree, would love to know @sinkingsugar 's build environment, maybe worth adding to our CI?

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

We can probably figure it out just by tracing the include path ourselves :)

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

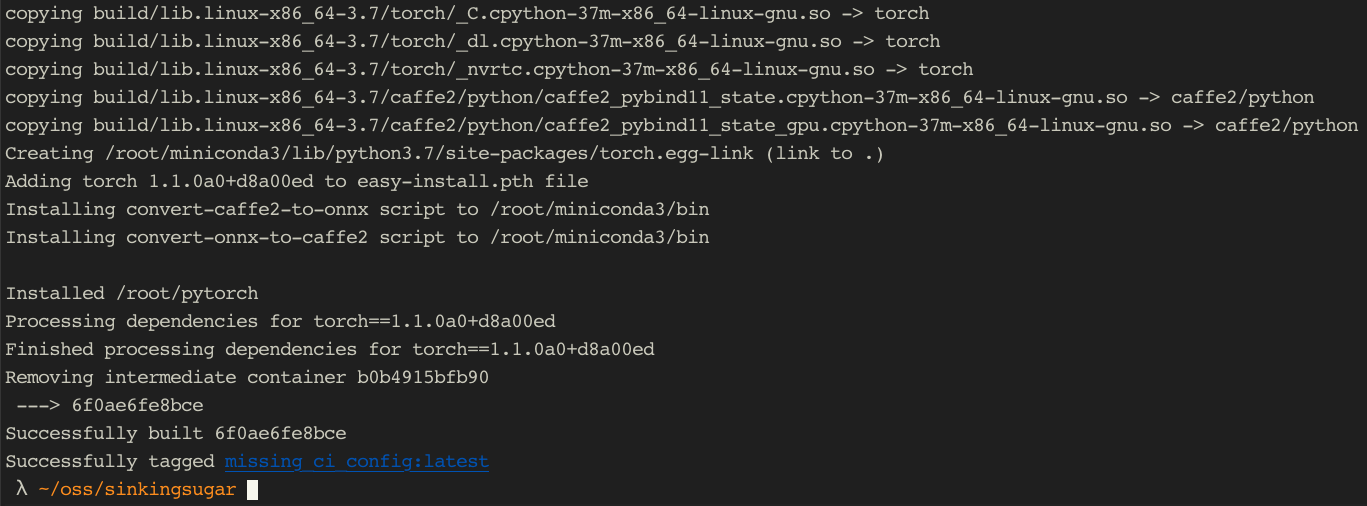

@ailzhang

well, didn't have time to run the script but I will just give you the full Dockerfile 😄

https://gist.github.com/sinkingsugar/81a42c6104bf01efd00e1d4ff9358c9b

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

@sinkingsugar Interestingly the Dockerfile built successfully on my end :(

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Hmm weird :) pretty sure I gave you the right one! but on master it's now fixed anyway so all good I suppose!

Summary: This PR removes a few size of `self` that passed from forward pass to backward pass when `self` is already required in backward pass. This could be reason that cause the potential slow down in #16689 . I will attach a few perf numbers (still a bit volatile among runs tho) I got in the comment. Pull Request resolved: #17187 Differential Revision: D14179512 Pulled By: ailzhang fbshipit-source-id: 5f3b1f6f26a3fef6dec15623b940380cc13656fa

Summary: - Moved a few functions from `autograd` namespace to `aten` namespace to be visible from JIT nativeResolver. - Added a hack to loop up keyword only argument. Will add proper support for kw only later - Simulate function overload in aten using `_<number>` as function name suffix. - Even `forward` returns multiple outputs like in `kthvalue`, there's at most one requires grad that we currently support. - Removed the `TensorList` related ops here since partial `TensorList` support is prone to bugs. Our symbolic diff for `cat` was never tested with autodiff, and it seems broken. Need to find another proper way to support these ops(either by properly supporting `TensorList` or sth like `prim::ConstantChunk` and leave them for next PR. Ops supported in this PR: ``` erf expand_as index kthvalue mean permute pow rsub select sqrt squeeze t to topk transpose view var embedding logsumexp // grad is None _dim_arange contiguous nonzero ones_like ``` Pull Request resolved: pytorch#16689 Differential Revision: D14020806 Pulled By: ailzhang fbshipit-source-id: a5e2c144a7be5a0d39d7ac5f93cb402ec12503a5

) Summary: It is done by flattening all tensor lists that are inputs/outputs to the graph into the inputs/outputs list in the autograd graph. This is less desirable than simply allowing IValues to exist in the inputs/outputs of autograd::Function but it is substantially less intrusive. CaptureList describes the variables captured for backward in a single class. UnpackInstructs describes how the flattened inputs to backwards are re-packed into lists. ailzhang This PR is also part 2 of covering maskrcnn & bert AD formulas, following #16689. Ops added in this PR: ``` cat index meshgrid reshape split split_with_sizes stack unbind ``` I will also add a few perf numbers here. Pull Request resolved: #16784 Differential Revision: D14104063 Pulled By: ailzhang fbshipit-source-id: 5ceadadfd67ccaac60c5fd6740786c5354e252b9

…orch#16784) Summary: It is done by flattening all tensor lists that are inputs/outputs to the graph into the inputs/outputs list in the autograd graph. This is less desirable than simply allowing IValues to exist in the inputs/outputs of autograd::Function but it is substantially less intrusive. CaptureList describes the variables captured for backward in a single class. UnpackInstructs describes how the flattened inputs to backwards are re-packed into lists. ailzhang This PR is also part 2 of covering maskrcnn & bert AD formulas, following pytorch#16689. Ops added in this PR: ``` cat index meshgrid reshape split split_with_sizes stack unbind ``` I will also add a few perf numbers here. Pull Request resolved: pytorch#16784 Differential Revision: D14104063 Pulled By: ailzhang fbshipit-source-id: 5ceadadfd67ccaac60c5fd6740786c5354e252b9

autogradnamespace toatennamespace to be visible from JIT nativeResolver._<number>as function name suffix.forwardreturns multiple outputs like inkthvalue, there's at most one requires grad that we currently support.TensorListrelated ops here since partialTensorListsupport is prone to bugs. Our symbolic diff forcatwas never tested with autodiff, and it seems broken. Need to find another proper way to support these ops(either by properly supportingTensorListor sth likeprim::ConstantChunkand leave them for next PR.Ops supported in this PR: