-

Notifications

You must be signed in to change notification settings - Fork 25.7k

int32 indexing for Tensor Iterator Reduction #17428

New issue

Have a question about this project? Sign up for a free GitHub account to open an issue and contact its maintainers and the community.

By clicking “Sign up for GitHub”, you agree to our terms of service and privacy statement. We’ll occasionally send you account related emails.

Already on GitHub? Sign in to your account

Conversation

Summary: 1. Enabling int32 indexing for cases where TI cannot accumulate in output due to incompatible data types (e.g. Welford). 2. Updating Welford kernel to use int32 instead of int64 indexing on GPU. This change improves performance for torch.var / torch.std Implementation: 1. Allocated extra buffer to handle accumulation between sub Tensor Iterators. 2. Removed int64 indexing in gpu_reduce_kernel 3. WelfordOps now supports index type / combination typeas a template parameter. While GPU uses int32_t and float, CPU implementation uses int64_t and double.

|

Ping @ngimel @umanwizard @colesbury for visibility. |

Pointer arithmetic with floats and casting has been updated to safely use size_t

|

Test failure seems to be machine error. |

|

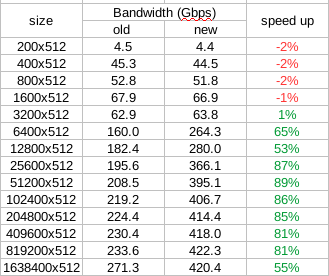

Benchmark torch.std Benchmark script: |

|

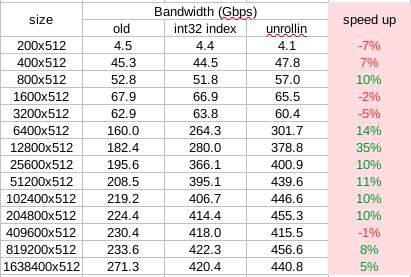

I have another PR that resolves the dependent loop unrolling in current Tensor Iterator. It gives further speedup (table above shows relative speedup to int32 indexing) The other PR is dependent on this one, I'll issue the other PR once this is merged. |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

@umanwizard has imported this pull request. If you are a Facebook employee, you can view this diff on Phabricator.

updating fraction reduction function; change shared_ptr to unique_ptr for recursive gpu_reduce_kernel call;

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

@umanwizard has imported this pull request. If you are a Facebook employee, you can view this diff on Phabricator.

|

Hi @jjsjann123 , could you address @apaszke 's comments, and also rebase to see if the failing tests pass then? |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

@umanwizard has imported this pull request. If you are a Facebook employee, you can view this diff on Phabricator.

|

Failure looks like infra issue. (Can I) How do I trigger circleci test? |

Summary: 1. Enabling int32 indexing for cases where TI cannot accumulate in output due to incompatible data types (e.g. Welford). 2. Updating Welford kernel to use int32 instead of int64 indexing on GPU. This change improves performance for torch.var / torch.std Implementation: 1. Allocated extra buffer to handle accumulation between sub Tensor Iterators. 2. Removed int64 indexing in gpu_reduce_kernel 3. WelfordOps now supports index type / combination typeas a template parameter. While GPU uses int32_t and float, CPU implementation uses int64_t and double. Pull Request resolved: pytorch/pytorch#17428 Differential Revision: D14264608 Pulled By: umanwizard fbshipit-source-id: 3eb54451de925b469dbc1127e5ea7443c4431036

Summary:

incompatible data types (e.g. Welford).

This change improves performance for torch.var / torch.std

Implementation:

While GPU uses int32_t and float, CPU implementation uses int64_t and double.