-

Notifications

You must be signed in to change notification settings - Fork 25.7k

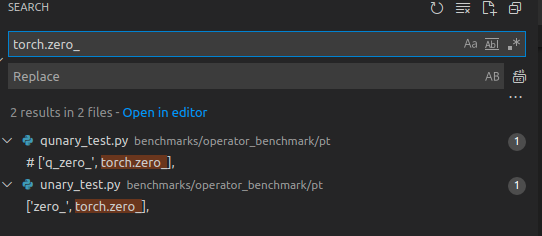

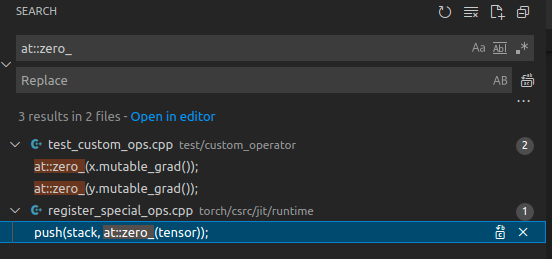

OpInfo: zero_

#58731

New issue

Have a question about this project? Sign up for a free GitHub account to open an issue and contact its maintainers and the community.

By clicking “Sign up for GitHub”, you agree to our terms of service and privacy statement. We’ll occasionally send you account related emails.

Already on GitHub? Sign in to your account

OpInfo: zero_

#58731

Conversation

💊 CI failures summary and remediationsAs of commit 79781e3 (more details on the Dr. CI page):

🕵️ 1 new failure recognized by patternsThe following CI failures do not appear to be due to upstream breakages:

|

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Thanks @krshrimali .

Have asked a few questions.

| sample_inputs_func=sample_inputs_xlogy), | ||

| OpInfo('zero_', | ||

| dtypes=all_types_and_complex_and(torch.bfloat16, torch.half, torch.bool), | ||

| supports_autograd=False, |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

This should support autograd.

pytorch/tools/autograd/derivatives.yaml

Line 1274 in 6de11c2

| - name: zero_(Tensor(a!) self) -> Tensor(a!) |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

This is the error I got for almost all the tests:

RuntimeError: a leaf Variable that requires grad is being used in an in-place operation.

Do you have any idea what is the solution to this? @kshitij12345

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Do you still get an error after wrapping the operator in lambda?

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

I think the tests always assume that the operator's function variant doesn't modify the input inplace (which was always true except for this operator) .

eager consistency test has some mechanism to deal with this,

Line 321 in d88d321

| cloned = clone_input_helper(sample.input) if variant in inplace_ops else sample.input |

Need to dig a bit further.

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

REG: #58731 (comment)

Yes, still getting the same errors after wrapping the operator in lambda.

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Inspired by

Line 321 in d88d321

| cloned = clone_input_helper(sample.input) if variant in inplace_ops else sample.input |

I tried,

OpInfo('zero',

op=lambda x: torch.zero_(x.clone()),

dtypes=all_types_and_complex_and(torch.bfloat16, torch.half, torch.bool),

supports_autograd=True,

supports_out=False,

sample_inputs_func=sample_inputs_zero_),which works for all test except jit and eager consistency.

jit consistency has issues with op being wrapped in lambda.

The eager test fails for more subtle reasons.

pytorch/torch/testing/_internal/common_methods_invocations.py

Lines 224 to 228 in d88d321

| method_variant = getattr(torch.Tensor, name, None) | |

| # attributes like real, imag are not callable | |

| self.method_variant = method_variant if callable(method_variant) else None | |

| inplace_name = name + "_" | |

| self.inplace_variant = getattr(torch.Tensor, inplace_name, None) |

We get the method variant and inplace variant in the above way.

For this case, method variant is Tensor.zero_ and inplace variant is None.

Thus in eager tests at the point below,

Lines 321 to 323 in d88d321

| cloned = clone_input_helper(sample.input) if variant in inplace_ops else sample.input | |

| if variant in inplace_ops and sample.broadcasts_input: |

We incorrectly don't clone the inputs for method variant (which is actually the inplace variant) and hence we get,

RuntimeError: a leaf Variable that requires grad is being used in an in-place operation.To mitigate this, I propose to allow setting method_variant and inplace_variant via the OpInfo constructor.

cc: @mruberry

@krshrimali can you take a look at how is this handled by method_tests ? Thanks!

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Thanks, @kshitij12345 for digging into this. Taking a look at test/test_autograd.py to understand how autograd is tested for method_tests, it seems like requires_grad is set to False (/reset) for inplace ops. There is an additional comment that mentions that it needs to be fixed. Please see the relevant snippet below.

is_magic_method = name[:2] == '__' and name[-2] == '__'

is_inpalce = name[-1] == "_" and not is_magic_method

self_variable = create_input((self_size,), dtype=dtype, device=device)[0][0]

# FixMe: run grad checks on inplace self

if is_inplace:

self_variable.requires_grad = FalseReference: https://github.com/pytorch/pytorch/blob/master/test/test_autograd.py#L5474

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

For the scope of this PR, I suggest to jit and eager consistency checks, and we can file this an issue (on allowing to set method_variant and inplace_variant via OpInfo constructor) separately and take the discussion there.

Looking forward to know your views, @mruberry and @kshitij12345.

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Skipping those tests and following up by allowing method and inplace variants to be specified sounds good

| cases_scalar = ((),) | ||

| cases_non_scalar = ((S, S, S), | ||

| (S, S), | ||

| (S, )) |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

I think it is okay merge these two. It won't be hard to read.

This comment has been minimized.

This comment has been minimized.

| def sample_inputs_zero_(op_info, device, dtype, requires_grad, **kwargs): | ||

| make_arg = partial(make_tensor, device=device, dtype=dtype, requires_grad=requires_grad) | ||

|

|

||

| cases = ((), (S, S, S), (S, S), (S,)) |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Nit: this probably doesn't need the (S, S) case

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Overall this looks but, per discussion, some follow-up is needed.

For now, actually, I'd keep "supports_autograd=False" and add a comment with a link to an issue for that not being correct, but our tests not correctly dealing with this case.

Then I'd file another issue for setting the method variant and inplace variants for operators directly, AND we should support ops that don't have function variants but only have method and/or inplace variants, too. Solving this latter issue should let us address the first issue.

How does that sound @krshrimali, @kshitij12345?

I think that is fine since even

On this, it actually has a function variant is which weird, @mruberry what do you think? |

Unfortunately we're inconsistent today with how the inplace versions of operators are exposed. Your question is a good one but this is one discrepancy we probably don't want to worry about at the moment as it's dependent on some future decisions about how we represent operators. |

|

Updates: Adding |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

LGTM! Thanks!

| OpInfo('zero_', | ||

| op=lambda x: torch.zero_(x.clone()), | ||

| method_variant=None, | ||

| inplace_variant=torch.Tensor.zero_, |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Nice use of the new architecture

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Nice work, @krshrimali!

|

@mruberry has imported this pull request. If you are a Facebook employee, you can view this diff on Phabricator. |

Summary: See pytorch#54261 Pull Request resolved: pytorch#58731 Reviewed By: ngimel Differential Revision: D28784083 Pulled By: mruberry fbshipit-source-id: f06de8045afd3728b1fedc014c091d8fd1955a9f

See #54261