
Update CMake and use native CUDA language support #62445


PyTorch currently uses the old style of compiling CUDA in CMake, which is just a collection of scripts in `FindCUDA.cmake`. Newer CMake versions support CUDA natively as a language, just like C++ or C.
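To illustrate the difference, here is a rough sketch. It is not PyTorch's actual build code, and the target and file names (`example_kernels`, `kernels.cu`, `host.cpp`) are hypothetical. The old FindCUDA style routes CUDA sources through module-specific wrapper macros. The native style (available since CMake 3.8) instead enables CUDA as a project language, so `.cu` files go through the ordinary target commands.

```cmake
cmake_minimum_required(VERSION 3.10)

# Old style: the FindCUDA module supplies its own wrapper macros.
#   find_package(CUDA REQUIRED)
#   cuda_add_library(example_kernels kernels.cu host.cpp)

# Native style: CUDA is enabled as a first-class language next to C++.
project(native_cuda_example LANGUAGES CXX CUDA)

# .cu files are compiled by the CUDA compiler and .cpp files by the C++
# compiler, both through the standard add_library machinery.
add_library(example_kernels STATIC kernels.cu host.cpp)
```

Because CUDA sources become ordinary target sources, per-target settings such as `target_include_directories` and `target_compile_options` apply to them as well.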

Dr. CI: as of commit e53d9dd, 29 new CI failures were recognized by patterns; they do not appear to be due to upstream breakages.


|

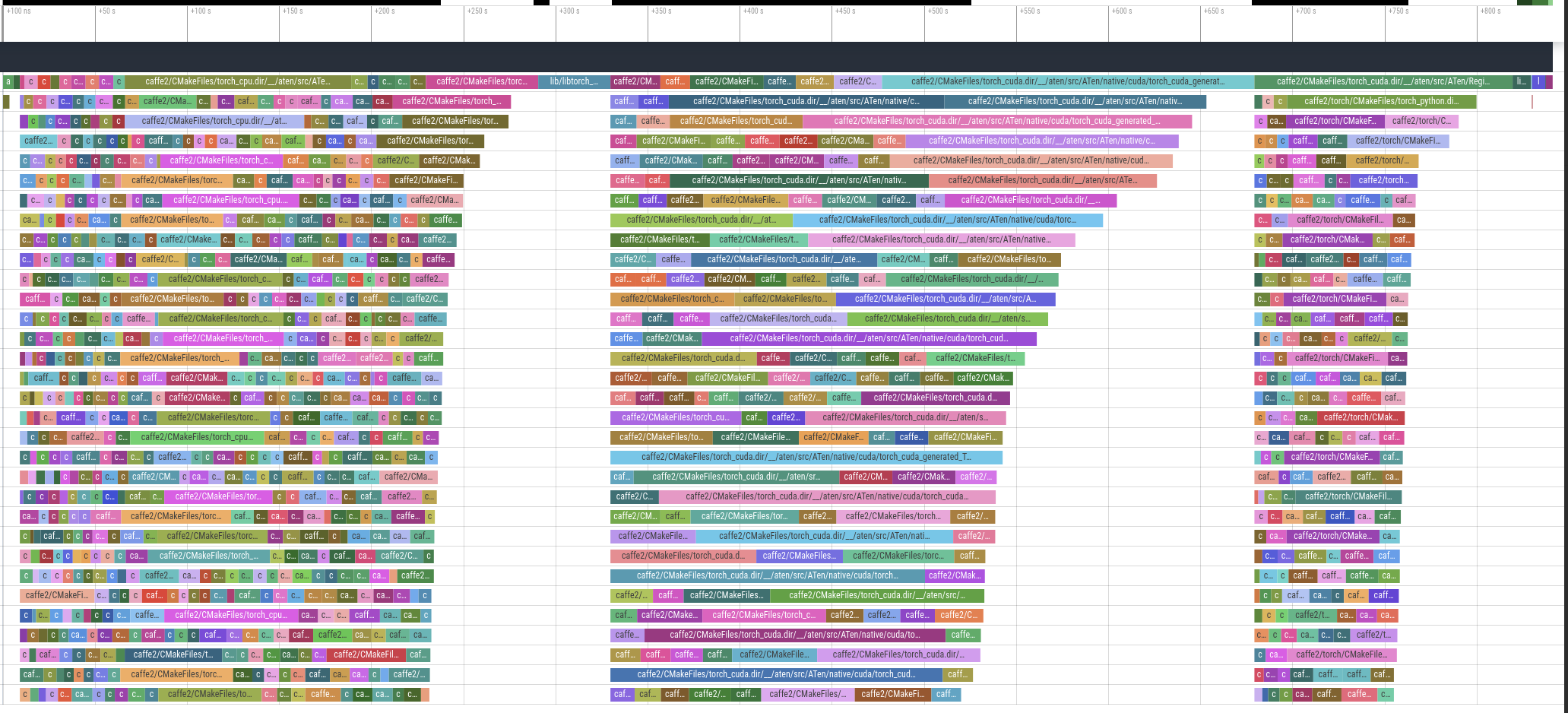

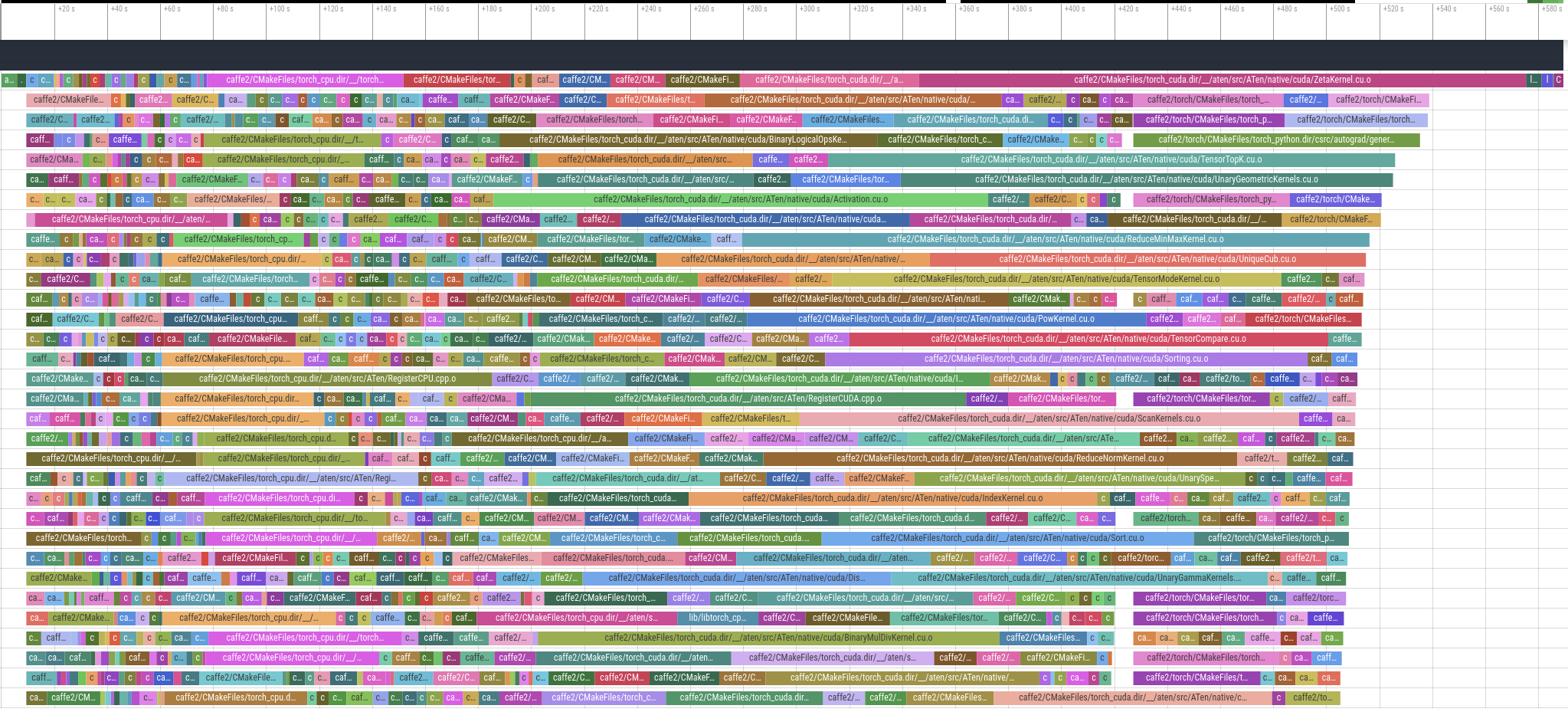

@malfet do you know if requiring a newer CMake version is okay? 3.12 was released in 2018 so it's 3 years old at this point. One major benefit from the new style is CMake understands the dependencies far better. Previously, all Compare with a trace with the changes from this PR, where the build time is down from 14 minutes to 10 minutes: |

@peterbell10, this sounds great, but moving to 3.12 is a bit challenging, as CMake 3.10 is the only version available in Ubuntu 18.04. How hard would it be to have …

When the PR is finalized, please add an explanation of why you are replacing …

```
-Wall
-Wextra
-Wno-unused-parameter
-Wno-unused-variable
```


Target compile flags are propagated to all languages by default, so we now have stricter warning settings on CUDA code. These `-Wno-` settings are added to `CMAKE_CXX_FLAGS` in the root `CMakeLists.txt`, which of course isn't propagated to CUDA.

Lines 690 to 716 in 73ba166:

```cmake
string(APPEND CMAKE_CXX_FLAGS " -O2 -fPIC")
string(APPEND CMAKE_CXX_FLAGS " -Wno-narrowing")
# Eigen fails to build with some versions, so convert this to a warning
# Details at http://eigen.tuxfamily.org/bz/show_bug.cgi?id=1459
string(APPEND CMAKE_CXX_FLAGS " -Wall")
string(APPEND CMAKE_CXX_FLAGS " -Wextra")
string(APPEND CMAKE_CXX_FLAGS " -Werror=return-type")
string(APPEND CMAKE_CXX_FLAGS " -Wno-missing-field-initializers")
string(APPEND CMAKE_CXX_FLAGS " -Wno-type-limits")
string(APPEND CMAKE_CXX_FLAGS " -Wno-array-bounds")
string(APPEND CMAKE_CXX_FLAGS " -Wno-unknown-pragmas")
string(APPEND CMAKE_CXX_FLAGS " -Wno-sign-compare")
string(APPEND CMAKE_CXX_FLAGS " -Wno-unused-parameter")
string(APPEND CMAKE_CXX_FLAGS " -Wno-unused-variable")
string(APPEND CMAKE_CXX_FLAGS " -Wno-unused-function")
string(APPEND CMAKE_CXX_FLAGS " -Wno-unused-result")
string(APPEND CMAKE_CXX_FLAGS " -Wno-unused-local-typedefs")
string(APPEND CMAKE_CXX_FLAGS " -Wno-strict-overflow")
string(APPEND CMAKE_CXX_FLAGS " -Wno-strict-aliasing")
string(APPEND CMAKE_CXX_FLAGS " -Wno-error=deprecated-declarations")
if(CMAKE_COMPILER_IS_GNUCXX AND NOT (CMAKE_CXX_COMPILER_VERSION VERSION_LESS 7.0.0))
  string(APPEND CMAKE_CXX_FLAGS " -Wno-stringop-overflow")
endif()
if(CMAKE_COMPILER_IS_GNUCXX)
  # Suppress "The ABI for passing parameters with 64-byte alignment has changed in GCC 4.6"
  string(APPEND CMAKE_CXX_FLAGS " -Wno-psabi")
endif()
```
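Below is a hedged sketch of one way to keep C++-only warning flags away from nvcc while still silencing the same warnings in host code that nvcc compiles. It is not necessarily how this PR resolves the issue. It assumes CMake's `COMPILE_LANGUAGE` generator expression and nvcc's `-Xcompiler` option, which forwards a comma-separated list of flags to the host compiler. It also assumes a GCC or Clang host compiler. The target and file names are hypothetical.

```cmake
cmake_minimum_required(VERSION 3.10)
project(warning_flags_example LANGUAGES CXX CUDA)

add_library(example_kernels STATIC kernels.cu host.cpp)

# Applied only when compiling C++ sources; nvcc never receives these flags.
target_compile_options(example_kernels PRIVATE
  "$<$<COMPILE_LANGUAGE:CXX>:-Wall;-Wextra;-Wno-unused-parameter;-Wno-unused-variable>")

# CMAKE_CXX_FLAGS is not used for CUDA sources, so mirror the suppressions
# in CMAKE_CUDA_FLAGS and let nvcc pass them on to the host compiler.
string(APPEND CMAKE_CUDA_FLAGS " -Xcompiler=-Wno-unused-parameter,-Wno-unused-variable")
```

Appending to `CMAKE_CUDA_FLAGS` follows the same `string(APPEND ...)` pattern as the root `CMakeLists.txt` excerpt, which keeps the C++ and CUDA flag lists easy to compare side by side.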

3.10 should be possible. I'll have a go later today.
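Native CUDA language support predates 3.10 (it was added in CMake 3.8), so a 3.10 minimum can still use it. Below is a hedged sketch for a build where CUDA is optional. It probes for a CUDA compiler with the standard `CheckLanguage` module and enables the language only when one is found. The target and file names are hypothetical, and the snippet is not taken from the PR.

```cmake
cmake_minimum_required(VERSION 3.10)
project(optional_cuda_example LANGUAGES CXX)

# check_language() tries to enable CUDA in a scratch project and caches
# CMAKE_CUDA_COMPILER (set to NOTFOUND if no usable compiler exists).
include(CheckLanguage)
check_language(CUDA)

if(CMAKE_CUDA_COMPILER)
  # Called at top-level directory scope, as enable_language() requires.
  enable_language(CUDA)
  add_library(example_kernels STATIC kernels.cu)
else()
  message(STATUS "No CUDA compiler found; building CPU-only targets")
endif()

add_library(example_host STATIC host.cpp)
```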


@malfet, any updates?


@malfet has imported this pull request. If you are a Facebook employee, you can view this diff on Phabricator.

I don't know what I am doing wrong with the tool, but it stripped the authorship attribution from this PR when it landed :(

@ezyang, any ideas?

The authorship never showed up on the initial import. @malfet, if you can pull out the rage for that invocation, that would be a big help. Separately, we can add a note in the release notes reattributing this commit properly; it's the least we can do. Who is the release manager (RM) for 1.10?


cc @malfet @seemethere

Differential Revision: D31503350