
Classification Problems

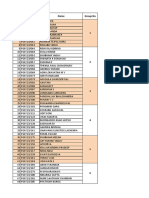

The document discusses building and evaluating a logistic regression model to perform credit classification on financial data. Key steps include:

1. Preprocessing a German credit dataset of 1,000 records and 14 features by encoding its categorical variables.

2. Splitting the data into train and test sets and fitting a logistic regression model on the training data.

3. Evaluating the model by identifying significant predictors, refitting the model using only significant variables, and making predictions on the test data.
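The three steps above can be sketched in a few lines of Python. This is only a minimal illustration under stated assumptions, not the document's own code: the data are synthetic (the German credit dataset is not loaded), the column names `amount`, `noise` and `status` are hypothetical, and the 70/30 split, the 0.05 significance level and the 0.5 decision threshold are illustrative choices. The model is fitted with a hand-written Newton-Raphson loop in NumPy, and significance is judged with Wald p-values.

```python
import math
import numpy as np

def sigmoid(t):
    return 1.0 / (1.0 + np.exp(-t))

def one_hot(values):
    """Encode a categorical column as 0/1 dummies, dropping the first level."""
    levels = sorted(set(values))
    return np.array([[float(v == lvl) for lvl in levels[1:]] for v in values])

def fit_logit(X, y, n_iter=25):
    """Fit logistic regression by Newton-Raphson; return coefficients and Wald p-values."""
    beta = np.zeros(X.shape[1])
    for _ in range(n_iter):
        p = sigmoid(X @ beta)
        hessian = X.T @ (X * (p * (1.0 - p))[:, None])
        beta = beta + np.linalg.solve(hessian, X.T @ (y - p))
    # Standard errors come from the inverse Hessian at the final estimate.
    p = sigmoid(X @ beta)
    cov = np.linalg.inv(X.T @ (X * (p * (1.0 - p))[:, None]))
    z = beta / np.sqrt(np.diag(cov))
    # Two-sided p-value under a standard normal: erfc(|z| / sqrt(2)).
    p_values = np.array([math.erfc(abs(v) / math.sqrt(2.0)) for v in z])
    return beta, p_values

# Synthetic stand-in data (assumption): one informative numeric feature,
# one pure-noise feature, and one categorical feature with three levels.
rng = np.random.default_rng(0)
n = 1000
status = rng.choice(["low", "mid", "high"], size=n)
amount = rng.normal(size=n)
noise = rng.normal(size=n)
true_logit = -0.5 + 1.5 * amount + 1.0 * (status == "low")
y = (rng.random(n) < sigmoid(true_logit)).astype(float)

# Step 1: encode the categorical column and assemble the design matrix.
X = np.column_stack([np.ones(n), amount, noise, one_hot(status)])
names = ["const", "amount", "noise", "status_low", "status_mid"]

# Step 2: split into train and test sets, then fit on the training data.
order = rng.permutation(n)
train, test = order[:700], order[700:]
beta, p_values = fit_logit(X[train], y[train])

# Step 3: keep significant predictors (plus the intercept), refit, predict.
keep = p_values < 0.05
keep[0] = True
beta_reduced, _ = fit_logit(X[train][:, keep], y[train])
predicted = sigmoid(X[test][:, keep] @ beta_reduced) >= 0.5
accuracy = np.mean(predicted == (y[test] == 1.0))

print("kept:", [name for name, k in zip(names, keep) if k])
print("test accuracy:", round(float(accuracy), 3))
```

In practice a statistics library would normally report the coefficient table and p-values directly; the explicit loop here is only meant to show where those numbers come from.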

Uploaded by sushanth

© All Rights Reserved
