ML Notes Updated

Uploaded by Prithviraj Biswas


Machine Learning (CS 8028)

- Machine learning is an application of AI that provides systems the ability to automatically learn and improve from experience without being explicitly programmed.
- The primary aim is to allow computers to learn automatically, without human intervention.

1) Supervised Learning

- Training the machine using data which is well labeled with the correct output.
- As the dataset is labeled, the algorithm identifies the features explicitly and carries out predictions or classification accordingly.
- As the training progresses, the algorithm learns to identify relationships between variables, so that it can predict a new outcome.
- The dataset acts as a teacher or supervisor; its role is to train the machine / model.
- It is an iterative process: the model makes a prediction and is given feedback until it achieves the desired level of performance.
- This algorithm tries to model relationships and dependencies between the target prediction output and the input features, such that the machine can predict the output for new data based on the relationships learned from previous datasets.

Types of supervised learning: Classification, Regression

2) Unsupervised Learning

- Deals with unlabeled data.
- No teacher / supervisor (training dataset) is provided.
- No training is given to the machine, so the machine works on its own to discover information.
- The task of the machine is to group unsorted information according to similarities, patterns and differences without any prior training on the data.
- Unsupervised learning also allows us to perform more complex processing tasks compared to supervised learning.
- Unsupervised learning finds all kinds of unknown patterns in data, and features which can be useful for categorization.

Types of unsupervised learning: i) Clustering ii) Association

i) Clustering

- A clustering problem is where we want to discover the inherent groupings / categories in the data, e.g. grouping customers by purchasing behavior.
- Clustering divides the database into different groups and finds groups that are very different from each other but whose members are similar.
- It groups the dataset based on similarities.
- e.g. of clustering algo: K-means clustering, fuzzy c-means clustering.

ii) Association

- An association rule learning problem is where we want to discover rules that describe large portions of the data, e.g. people that buy X (milk) also tend to buy Y (bread).
- It involves determination of affinity: how frequently two or more things occur together.
- It identifies sets of items which often occur together in the dataset.
- e.g. of association algo: Apriori algo, FP-growth algo.

3) Reinforcement Learning (RL)

- Type of ML in which a computer learns to perform a task through repeated trial and error interactions with a dynamic environment.
- In RL, an agent has to interact with the environment and find out what the best outcome is. The agent follows the concept of the hit and trial method: it is rewarded or penalized with a point for a correct or a wrong answer.
- On the basis of the positive reward points gained, the model trains itself. Once trained, it is ready to predict the new data presented to it.
- The RL algo continuously learns from the environment in an iterative fashion until it explores the full range of possible states.
- e.g. chess game bot, robotics for industrial automation.
- The aim of RL is to learn a series of actions.

| Supervised Learning | Unsupervised Learning |
|---|---|
| Uses known and labeled data as input. | Uses unknown and unlabeled data as input. |
| No. of classes is known. | No. of classes is not known. |
| Supervised learning model predicts the output. | Unsupervised learning model finds the hidden patterns in data. |
| It needs supervision to train the model. | Doesn't need any supervision. |
| Accurate and reliable results. | Moderately accurate and reliable results. |
| Very complex. | Less computational complexity. |
| Can be categorized in: Classification, Regression. | Can be classified in: Clustering, Association. |
| Example algorithms: Linear Regression, Logistic Regression, SVM, Decision Tree, Bayesian Logic. | Example algo: K-means clustering, Apriori algorithm. |

| Classification | Regression |
|---|---|
| A classification problem is when the output variable is a category, such as Red or Blue, Car or Bike. | A regression problem is when the output variable is a real value, such as price or weight. |
| Classification technique groups the output into a class. | Regression technique predicts a single output value using training data. |
| It separates the data. | It fits the data. |
| Examples: determining whether or not someone will be a defaulter of a loan; whether this mail is spam or not; fraud detection; image classification. | Examples: risk assessment; score prediction; price prediction in stocks; weather prediction; house price prediction. |
| It helps to map the input value to an output variable which is discrete in nature. | It helps to map the input value to a continuous output variable. |
| Used only for discrete data. | Used only for continuous data. |
| Types of classification algo: Logistic Regression, KNN, SVM, Decision Tree Classification, Random Forest Classification. | Types of regression algo: Simple Linear Regression, Support Vector Regression, Decision Tree Regression, Random Forest Regression, Polynomial Regression. |

Geometric models

- Instance space is the set of all possible or describable instances, whether they are present in our dataset or not.
- Usually this set has some geometric structure. For example, if all features are numerical, then each feature can be used as a coordinate in a Cartesian coordinate system.
- Example: linear classifier, nearest neighbor classifier.
- A geometric model is constructed directly in instance space, using geometric concepts such as lines, planes and distances.
- A decision boundary in an unspecified number of dimensions is called a hyperplane.
- If there exists a linear decision boundary separating two classes, we say that the data is linearly separable.

Probabilistic models

- Example: Bayesian classifier.
- The approach is to assume there is some underlying random process that generates the values for these variables, according to a well-defined but unknown probability distribution.

Logical models

- "Logical" because models of this type can be easily translated into rules that are understandable by humans. Such rules are easily organized in a tree structure called a feature tree.
- Feature trees whose leaves are labeled with classes are called decision trees.
- A prediction assigned by a decision tree can be explained by reading off the conditions that led to the prediction, from root to leaf.

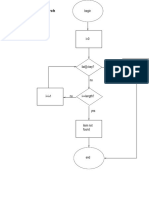

[Figure: tree diagram of machine learning tasks, with Supervised and Unsupervised branches and nodes including Grouping and Association.]

Features (the workhorses of ML)

- A feature can be thought of as a kind of measurement that can be easily performed on an instance.
- Mathematically, features are functions that map from the instance space to some set of feature values, called the domain of the feature.
- The most common feature domain is the set of real numbers.

Dimension Reduction

- It is a process of converting a data set having vast dimensions into a data set with fewer dimensions. It ensures that the converted data set conveys similar information concisely.

Benefits:

- It compresses the data and thus reduces the storage space requirements.
- It reduces the time required for computation, since fewer dimensions require less computing.
- It eliminates the redundant features.
- It improves the model performance.

Dimension reduction techniques: Principal Component Analysis (PCA), Fisher Linear Discriminant Analysis (LDA)

1. Principal Component Analysis (PCA)

- Transforms the variables into a new set of variables called principal components.
- These principal components are linear combinations of the original variables and are orthogonal.
- The first principal component accounts for most of the possible variation of the original data.
- The second principal component does its best to capture the remaining variance in the data.
- There can be only two principal components for a two-dimensional data set.
- PCA is a dimensionality-reduction method that is used to reduce the dimensionality of large data sets, by transforming a large set of variables into a smaller one that still contains most of the information in the large set.
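The PCA properties listed above can be checked on a small two-dimensional data set. The following is only an illustrative sketch: the data values and the helper name `pca_2d` are assumptions made for this example, and the closed-form eigenvalue formula used here applies only to a 2 × 2 covariance matrix.

```python
import math

def pca_2d(points):
    """Return ((lam1, lam2), (pc1, pc2)) for 2-D data, largest variance first."""
    n = len(points)
    mean_x = sum(p[0] for p in points) / n
    mean_y = sum(p[1] for p in points) / n
    # Sample covariance entries of the centered data.
    sxx = sum((p[0] - mean_x) ** 2 for p in points) / (n - 1)
    syy = sum((p[1] - mean_y) ** 2 for p in points) / (n - 1)
    sxy = sum((p[0] - mean_x) * (p[1] - mean_y) for p in points) / (n - 1)
    # Closed-form eigenvalues of the symmetric matrix [[sxx, sxy], [sxy, syy]].
    half_trace = (sxx + syy) / 2
    spread = math.sqrt(((sxx - syy) / 2) ** 2 + sxy ** 2)
    lam1, lam2 = half_trace + spread, half_trace - spread
    # Direction of largest variance; the second component is perpendicular to it.
    theta = 0.5 * math.atan2(2 * sxy, sxx - syy)
    pc1 = (math.cos(theta), math.sin(theta))
    pc2 = (-math.sin(theta), math.cos(theta))
    return (lam1, lam2), (pc1, pc2)

# Hypothetical data, chosen only for illustration.
data = [(2.5, 2.4), (0.5, 0.7), (2.2, 2.9), (1.9, 2.2), (3.1, 3.0),
        (2.3, 2.7), (2.0, 1.6), (1.0, 1.1), (1.5, 1.6), (1.1, 0.9)]
(lam1, lam2), (pc1, pc2) = pca_2d(data)
explained = lam1 / (lam1 + lam2)  # share of total variance on the first component
print(pc1, pc2, explained)
```

Keeping only the projection onto `pc1` would reduce this data set from two dimensions to one while retaining the share of variance given by `explained`.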

KNN (K-Nearest Neighbors)

- It is a type of supervised ML algorithm which can be used for both classification and regression predictive problems (mostly classification).
- It classifies a data point based on its neighbors' classifications. It stores all available cases and classifies new cases based on similar features.
- KNN is a non-parametric algorithm, as it doesn't assume anything about the underlying data.
- KNN is a lazy learning algorithm because it doesn't have a specialized training phase; instead, it uses all the training data while doing classification.
- KNN at the training phase just stores the dataset; when it gets new data, it classifies that data into the category that is most similar to the new data.

Steps:

1. Select the number k of neighbors.
2. Calculate the Euclidean distance from the new data point to each training point.
3. Take the k nearest neighbors as per the calculated Euclidean distance.
4. Among these k neighbors, count the number of data points in each category.
5. Assign the new data point to the category for which the number of neighbors is maximum.
6. Our model is ready.

[Figure: scatter plot of two classes (Class A and Class B) with a new data point to classify.]

When do we use KNN?

i) Dataset is labeled
ii) Data is noise-free
iii) Dataset is small, as KNN is a lazy learner

Advantages:

1. Simple and easy to implement.
2. Versatile, as it can be used for classification as well as regression.
3. Has relatively high accuracy.
4. Very useful for non-linear data, because there is no assumption about the data in this algorithm.

Disadvantages:

1. Computationally a bit expensive, because it stores all the training data.
2. Prediction is slow in case of big N.
3. Doesn't work well with a large dataset, as the cost of calculating the distance between the new point and each existing point is huge, which degrades performance.

Applications:

1. Banking system: predict whether an individual is fit for loan approval. Does that individual have characteristics similar to those of a defaulter?
2. Calculating credit ratings: find an individual's credit rating by comparing with persons having similar traits.
3. Politics
4. Handwriting detection
5. Speech recognition

To make predictions for a test instance, we need to find its k nearest neighbors. Say we choose k = 5. Then the nearest neighbors are those from Rank 1 to Rank 5. In this case all 5 neighbors have the same label, so we predict that the test instance belongs to that class.

Euclidean distance is calculated by using this formula:

$$d = \sqrt{(x_2 - x_1)^2 + (y_2 - y_1)^2}$$

Q. Perform the KNN classification algorithm on the following dataset and predict the class for X (P1 = 3 and P2 = 7), k = 3.

| | P1 | P2 | Class |
|---|---|---|---|
| i) | 7 | 7 | False |
| ii) | 7 | 4 | False |
| iii) | 3 | 4 | True |
| iv) | 1 | 4 | True |

Euclidean distance between X and point i:

$$D(X, i) = \sqrt{(x_1 - y_1)^2 + (x_2 - y_2)^2}$$

- D(X, i) = √((3 − 7)² + (7 − 7)²) = 4
- D(X, ii) = √((3 − 7)² + (7 − 4)²) = 5
- D(X, iii) = √((3 − 3)² + (7 − 4)²) = 3
- D(X, iv) = √((3 − 1)² + (7 − 4)²) = √13 ≈ 3.6

Given k = 3, we take the 3 nearest neighbours:

| P1 | P2 | Class | Distance | Rank |
|---|---|---|---|---|
| 7 | 7 | False | 4 | 3 |
| 7 | 4 | False | 5 | 4 |
| 3 | 4 | True | 3 | 1 |
| 1 | 4 | True | 3.6 | 2 |

On checking the 3 nearest neighbours, we have 2 True and 1 False. Since 2 > 1, we conclude that X (P1 = 3 and P2 = 7) has class True.
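The worked example above can be reproduced with a few lines of code. This is a minimal sketch rather than a reference implementation; the function name `knn_predict` is an assumption made for this illustration, and ties in the vote are not handled specially.

```python
import math
from collections import Counter

def knn_predict(train, query, k):
    """train: list of ((p1, p2), label). Returns (predicted label, k nearest items)."""
    # Rank all training points by Euclidean distance to the query.
    ranked = sorted(train, key=lambda item: math.dist(item[0], query))
    nearest = ranked[:k]
    # Majority vote among the k nearest neighbours.
    votes = Counter(label for _, label in nearest)
    return votes.most_common(1)[0][0], nearest

train = [((7, 7), False), ((7, 4), False), ((3, 4), True), ((1, 4), True)]
label, nearest = knn_predict(train, (3, 7), k=3)
print(label)  # True
```

Note that all the work happens at prediction time, which is what makes KNN a lazy learner.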

Q. Assume the following 2-D dataset. Consider x = (1.4, 1.6) as a query. Rank the database points based on cosine similarity and Euclidean distance.

| Point | Coordinates |
|---|---|
| x1 | (1.5, 1.7) |
| x2 | (2.0, 1.9) |
| x3 | (1.6, 1.8) |
| x4 | (1.2, 1.5) |
| x5 | (1.5, 1.0) |

The Euclidean distance between two vectors x and y is

$$d(x, y) = \sqrt{\sum_{i=1}^{n} (x_i - y_i)^2}$$

The cosine similarity between two vectors x and y is

$$\text{sim}(x, y) = \frac{x \cdot y}{\lVert x \rVert \, \lVert y \rVert}$$

where x · y = x₁y₁ + x₂y₂ + ... + xₙyₙ is the dot product, ‖x‖ = √(x₁² + x₂² + ... + xₙ²) is the Euclidean norm of vector x, and ‖y‖ is the Euclidean norm of vector y. It is essentially the cosine of the angle between vectors x and y.

| Point | Euclidean distance to x | Cosine similarity with x |
|---|---|---|
| x1 (1.5, 1.7) | 0.1414 | 0.99999 |
| x2 (2.0, 1.9) | 0.6708 | 0.99575 |
| x3 (1.6, 1.8) | 0.2828 | 0.99997 |
| x4 (1.2, 1.5) | 0.2236 | 0.99903 |
| x5 (1.5, 1.0) | 0.6083 | 0.96536 |

These values produce the following similarity-based ranking of the data points:

- Euclidean distance (smaller is more similar): x1, x4, x3, x5, x2
- Cosine similarity (bigger is more similar): x1, x3, x4, x2, x5
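The two rankings can be verified with a short script. This is a sketch under the assumption that the data points are the five points of the exercise as reconstructed here; the function names are chosen only for illustration.

```python
import math

def euclidean(a, b):
    """Euclidean distance between two equal-length vectors."""
    return math.sqrt(sum((ai - bi) ** 2 for ai, bi in zip(a, b)))

def cosine_similarity(a, b):
    """Dot product divided by the product of the Euclidean norms."""
    dot = sum(ai * bi for ai, bi in zip(a, b))
    norm_a = math.sqrt(sum(ai * ai for ai in a))
    norm_b = math.sqrt(sum(bi * bi for bi in b))
    return dot / (norm_a * norm_b)

query = (1.4, 1.6)
data = {"x1": (1.5, 1.7), "x2": (2.0, 1.9), "x3": (1.6, 1.8),
        "x4": (1.2, 1.5), "x5": (1.5, 1.0)}

# Smaller distance means more similar; larger cosine means more similar.
by_distance = sorted(data, key=lambda name: euclidean(data[name], query))
by_cosine = sorted(data, key=lambda name: cosine_similarity(data[name], query),
                   reverse=True)
print(by_distance)
print(by_cosine)
```

The two measures disagree on some positions because cosine similarity looks only at the direction of a vector, while Euclidean distance also depends on its length.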

Naive Bayes Classifier

- The Naive Bayes classifier is a probabilistic ML algorithm used for classification tasks such as text classification, spam filtering, sentiment analysis, etc.
- It uses Bayes' theorem to calculate the probability of a given sample belonging to a particular class based on its features.
- The "naive" assumption of the algorithm is that each feature is independent of all other features, which is not always true in practice but simplifies the calculation and still gives good results.

Bayes' theorem:

$$P(h \mid d) = \frac{P(d \mid h)\, P(h)}{P(d)}$$

where

- P(h | d) is the probability of hypothesis h given the data d. This is called the posterior probability.
- P(d | h) is the probability of data d given that hypothesis h was true.
- P(h) is the probability of hypothesis h being true (regardless of the data). This is called the prior probability of h.
- P(d) is the probability of the data (regardless of the hypothesis).

Bayes' theorem works on conditional probability: the probability of an event X occurring when we already know that event Y has occurred.

The class that has the maximum probability is selected as the most suitable class. It is also called the Maximum A Posteriori (MAP) hypothesis:

$$h_{MAP} = \arg\max_h P(h \mid d) = \arg\max_h \frac{P(d \mid h)\, P(h)}{P(d)} = \arg\max_h P(d \mid h)\, P(h)$$

P(d) is used only for normalization, so it won't affect the result if P(d) is removed.

- Class probabilities: probability of each single class that's present in the training data.
- Conditional probabilities: conditional probability of every input value when a single class value is given.

Advantages:

i) Solves multiple-class prediction problems.
ii) Only needs a small amount of training data to approximate the test data. Thus, training time is small.
iii) Linear classifier, faster with big data as compared to KNN.

Disadvantages:

i) If the test data has a categorical variable value that wasn't seen in the train data, then the model won't be able to make a prediction.
ii) Naive Bayes automatically assumes that every variable is uncorrelated, which doesn't happen in real-life scenarios.

Working of algorithm:

i) Calculate the prior probability of each class.
ii) For each feature of the samples, calculate the conditional probability of that feature given each class.
iii) To classify a new sample, calculate the posterior probability of that sample belonging to each class using Bayes' theorem.
iv) Select the class with the highest probability as the predicted class for the sample.

Q. Calculate the class probabilities and conditional probabilities, and make predictions on the following data:

| Weather | Car | Class |
|---|---|---|
| Sunny | Working | Go-out |
| Rainy | Broken | Go-out |
| Sunny | Working | Go-out |
| Sunny | Working | Go-out |
| Sunny | Working | Go-out |
| Rainy | Broken | Stay-home |
| Rainy | Broken | Stay-home |
| Sunny | Working | Stay-home |
| Sunny | Broken | Stay-home |
| Rainy | Broken | Stay-home |

To calculate class probabilities, count the no. of instances for each class and divide by the total no. of instances:

- P(class = go-out) = 5/10 = 0.5
- P(class = stay-home) = 5/10 = 0.5

Now, we need to calculate conditional probabilities for each attribute and class combination.

For the weather attribute:

- P(weather = sunny | class = go-out) = 4/5 = 0.8
- P(weather = rainy | class = go-out) = 1/5 = 0.2
- P(weather = sunny | class = stay-home) = 2/5 = 0.4
- P(weather = rainy | class = stay-home) = 3/5 = 0.6
- P(weather = sunny) = 6/10 = 0.6, P(weather = rainy) = 4/10 = 0.4

For the car attribute:

- P(car = working | class = go-out) = 4/5 = 0.8
- P(car = broken | class = go-out) = 1/5 = 0.2
- P(car = working | class = stay-home) = 1/5 = 0.2
- P(car = broken | class = stay-home) = 4/5 = 0.8
- P(car = working) = 5/10 = 0.5, P(car = broken) = 5/10 = 0.5

We can make predictions using Naive Bayes. Bayes' theorem is given by P(a | b) = P(b | a) × P(a) / P(b). The denominator is the same for every class, so we compute the numerator for each class and then normalize so that the two values sum to 1.

Let's take the first record from the dataset and use the model to predict which class it belongs to: weather = sunny, car = working.

Go-out case:

P(sunny | go-out) × P(working | go-out) × P(go-out) = 0.8 × 0.8 × 0.5 = 0.32

Stay-home case:

P(sunny | stay-home) × P(working | stay-home) × P(stay-home) = 0.4 × 0.2 × 0.5 = 0.04

Normalizing:

- P(go-out | sunny, working) = 0.32 / (0.32 + 0.04) ≈ 0.89
- P(stay-home | sunny, working) = 0.04 / (0.32 + 0.04) ≈ 0.11

We can see 0.89 > 0.11, therefore we predict class = go-out for this instance. Similarly, we can do this for all instances in the dataset:

| Weather | Car | Class | P(go-out) | P(stay-home) | Prediction |
|---|---|---|---|---|---|
| Sunny | Working | Go-out | 0.89 | 0.11 | Go-out |
| Rainy | Broken | Go-out | 0.08 | 0.92 | Stay-home |
| Sunny | Working | Go-out | 0.89 | 0.11 | Go-out |
| Sunny | Working | Go-out | 0.89 | 0.11 | Go-out |
| Sunny | Working | Go-out | 0.89 | 0.11 | Go-out |
| Rainy | Broken | Stay-home | 0.08 | 0.92 | Stay-home |
| Rainy | Broken | Stay-home | 0.08 | 0.92 | Stay-home |
| Sunny | Working | Stay-home | 0.89 | 0.11 | Go-out |
| Sunny | Broken | Stay-home | 0.33 | 0.67 | Stay-home |
| Rainy | Broken | Stay-home | 0.08 | 0.92 | Stay-home |
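The counting steps of the weather / car example can be sketched in code. This is a minimal illustration, not a production classifier: the function name `naive_bayes_posteriors` is an assumption, and no smoothing is applied, so a feature value never seen with a class gives that class a score of zero.

```python
from collections import Counter, defaultdict

rows = [
    ("sunny", "working", "go-out"), ("rainy", "broken", "go-out"),
    ("sunny", "working", "go-out"), ("sunny", "working", "go-out"),
    ("sunny", "working", "go-out"), ("rainy", "broken", "stay-home"),
    ("rainy", "broken", "stay-home"), ("sunny", "working", "stay-home"),
    ("sunny", "broken", "stay-home"), ("rainy", "broken", "stay-home"),
]

def naive_bayes_posteriors(rows, sample):
    """Return {class: normalized posterior} for a tuple of feature values."""
    class_counts = Counter(row[-1] for row in rows)
    feature_counts = defaultdict(Counter)  # (feature index, class) -> value counts
    for *features, label in rows:
        for i, value in enumerate(features):
            feature_counts[(i, label)][value] += 1
    scores = {}
    for label, count in class_counts.items():
        score = count / len(rows)  # prior P(class)
        for i, value in enumerate(sample):
            score *= feature_counts[(i, label)][value] / count  # P(value | class)
        scores[label] = score
    total = sum(scores.values())  # normalizing constant shared by all classes
    return {label: score / total for label, score in scores.items()}

post = naive_bayes_posteriors(rows, ("sunny", "working"))
prediction = max(post, key=post.get)
print(post, prediction)
```

Calling the same function with `("rainy", "broken")` or `("sunny", "broken")` gives the posteriors for the other kinds of record in the table.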

Q. The Play Tennis dataset is given below. Find whether the child can play outside or not with attributes <Rain, Hot, High, False> using the Naive Bayes classifier.

| Outlook | Temperature | Humidity | Windy | Class |
|---|---|---|---|---|
| Sunny | Hot | High | False | No |
| Sunny | Hot | High | True | No |
| Overcast | Hot | High | False | Yes |
| Rain | Mild | High | False | Yes |
| Rain | Cool | Normal | False | Yes |
| Rain | Cool | Normal | True | No |
| Overcast | Cool | Normal | True | Yes |
| Sunny | Mild | High | False | No |
| Sunny | Cool | Normal | False | Yes |
| Rain | Mild | Normal | False | Yes |
| Sunny | Mild | Normal | True | Yes |
| Overcast | Mild | High | True | Yes |
| Overcast | Hot | Normal | False | Yes |
| Rain | Mild | High | True | No |

P(Yes) = 9/14 = 0.64

P(No) = 5/14 = 0.36

| Outlook | Yes | No |
|---|---|---|
| Sunny | 2/9 | 3/5 |
| Overcast | 4/9 | 0/5 = 0 |
| Rain | 3/9 | 2/5 |

| Temperature | Yes | No |
|---|---|---|
| Hot | 2/9 | 2/5 |
| Mild | 4/9 | 2/5 |
| Cool | 3/9 | 1/5 |

| Humidity | Yes | No |
|---|---|---|
| High | 3/9 | 4/5 |
| Normal | 6/9 | 1/5 |

| Windy | Yes | No |
|---|---|---|
| True | 3/9 | 3/5 |
| False | 6/9 | 2/5 |

Sample X = <Rain, Hot, High, False>

P(X | Yes) · P(Yes) = P(Rain | Yes) · P(Hot | Yes) · P(High | Yes) · P(False | Yes) · P(Yes)

= 3/9 × 2/9 × 3/9 × 6/9 × 9/14

= 0.0106

P(X | No) · P(No) = P(Rain | No) · P(Hot | No) · P(High | No) · P(False | No) · P(No)

= 2/5 × 2/5 × 4/5 × 2/5 × 5/14

= 0.0183

Since 0.0183 > 0.0106, we choose the class that maximizes this probability. This means that the new instance will be classified as No.

Hence, for the given sample with attributes <Rain, Hot, High, False>, the child cannot play outside.
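The two products in the Play Tennis calculation can be checked with exact fractions. This is just an arithmetic check under the frequency counts read from the 14-row dataset; the variable names are chosen for illustration.

```python
from fractions import Fraction as F

# Conditional probabilities for X = <Rain, Hot, High, False>, times the class prior.
score_yes = F(3, 9) * F(2, 9) * F(3, 9) * F(6, 9) * F(9, 14)
score_no = F(2, 5) * F(2, 5) * F(4, 5) * F(2, 5) * F(5, 14)

# The class with the larger score is the MAP prediction.
prediction = "Yes" if score_yes > score_no else "No"
print(float(score_yes), float(score_no), prediction)
```

Using `Fraction` avoids rounding in the intermediate steps, so the comparison between the two scores is exact.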

Centroid Linkage

- Defines the distance between clusters as being the distance between their centroids.
- After calculating the centroid for each cluster, the distance between those centroids is computed using a distance function.
- This method tends to produce clusters that are similar in size and shape, and it is less sensitive to outliers than single or complete linkage.
- However, it can create clusters that are not very compact.

Q. Given six data points as (1,1), (2,1), (3,5), (4,3), (4,6), (6,4). Apply the hierarchical clustering algorithm to develop a dendrogram using these points. Find the no. of clusters found in the dendrogram.

Let A(1,1), B(2,1), C(3,5), D(4,3), E(4,6) and F(6,4) be the points.

We first need to calculate the distance matrix by computing the distances between each pair of points using Euclidean distance. Euclidean distance is given by

$$d = \sqrt{(x_2 - x_1)^2 + (y_2 - y_1)^2}$$

Distance matrix:

| | A(1,1) | B(2,1) | C(3,5) | D(4,3) | E(4,6) | F(6,4) |
|---|---|---|---|---|---|---|
| A(1,1) | 0 | | | | | |
| B(2,1) | 1 | 0 | | | | |
| C(3,5) | 4.47 | 4.12 | 0 | | | |
| D(4,3) | 3.61 | 2.83 | 2.24 | 0 | | |
| E(4,6) | 5.83 | 5.39 | 1.41 | 3 | 0 | |
| F(6,4) | 5.83 | 5 | 3.16 | 2.24 | 2.83 | 0 |

To apply single (simple) linkage agglomerative hierarchical clustering, we find the closest pair of clusters and merge them, i.e. we find the pair which has the minimum distance between them in the distance matrix.

In the above matrix, pair (A, B) has the minimum distance. So, we merge them at distance 1.

| | (A, B) | C(3,5) | D(4,3) | E(4,6) | F(6,4) |
|---|---|---|---|---|---|
| (A, B) | 0 | | | | |
| C(3,5) | 4.12 | 0 | | | |
| D(4,3) | 2.83 | 2.24 | 0 | | |
| E(4,6) | 5.39 | 1.41 | 3 | 0 | |
| F(6,4) | 5 | 3.16 | 2.24 | 2.83 | 0 |

- d(C, (A,B)) = min{d(C,A), d(C,B)} = min{4.47, 4.12} = 4.12
- d(D, (A,B)) = min{d(D,A), d(D,B)} = min{3.61, 2.83} = 2.83
- d(E, (A,B)) = min{d(E,A), d(E,B)} = min{5.83, 5.39} = 5.39
- d(F, (A,B)) = min{d(F,A), d(F,B)} = min{5.83, 5} = 5

The minimum distance in the above matrix is between pair (C, E). So, it will be merged at distance 1.41.

| | (A, B) | (C, E) | D(4,3) | F(6,4) |
|---|---|---|---|---|
| (A, B) | 0 | | | |
| (C, E) | 4.12 | 0 | | |
| D(4,3) | 2.83 | 2.24 | 0 | |
| F(6,4) | 5 | 2.83 | 2.24 | 0 |

- d((C,E), (A,B)) = min{d(C,(A,B)), d(E,(A,B))} = min{4.12, 5.39} = 4.12
- d(D, (C,E)) = min{d(D,C), d(D,E)} = min{2.24, 3} = 2.24
- d(F, (C,E)) = min{d(F,C), d(F,E)} = min{3.16, 2.83} = 2.83

Now the minimum distance 2.24 occurs twice, between D and (C, E) and between D and F, so (C, E), D and F merge into one cluster at distance 2.24.

d((A,B), (C,E,D,F)) = min{d((A,B),(C,E)), d((A,B),D), d((A,B),F)} = min{4.12, 2.83, 5} = 2.83

Now, (A, B) and (C, E, D, F) will be merged at distance 2.83.

Now, we'll draw the dendrogram for this.

To count the no. of clusters from the dendrogram:

- Choose a threshold distance for forming clusters, which will be the height of a horizontal line.
- Draw a horizontal line at the chosen height.
- Count the no. of vertical lines this horizontal line intersects. This no. represents the no. of clusters.
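The merge sequence of the single-linkage example can be reproduced with a small script. This is a naive sketch for six points, not an efficient implementation; the function name `single_linkage` and the helper `clusters_at` are assumptions made for this illustration.

```python
import math

def single_linkage(points):
    """Agglomerative clustering with single linkage; returns the merge history."""
    clusters = [(name,) for name in points]
    merges = []
    while len(clusters) > 1:
        best = None
        for i in range(len(clusters)):
            for j in range(i + 1, len(clusters)):
                # Single linkage: distance between the closest pair of members.
                d = min(math.dist(points[a], points[b])
                        for a in clusters[i] for b in clusters[j])
                if best is None or d < best[0]:
                    best = (d, i, j)
        d, i, j = best
        merges.append((clusters[i], clusters[j], round(d, 2)))
        merged = clusters[i] + clusters[j]
        clusters = [c for idx, c in enumerate(clusters) if idx not in (i, j)] + [merged]
    return merges

points = {"A": (1, 1), "B": (2, 1), "C": (3, 5), "D": (4, 3), "E": (4, 6), "F": (6, 4)}
merges = single_linkage(points)

def clusters_at(threshold):
    """Number of clusters left when the dendrogram is cut at the given height."""
    return len(points) - sum(1 for _, _, d in merges if d <= threshold)

for left, right, d in merges:
    print(left, right, d)
print(clusters_at(2))
```

Cutting at height 2 leaves (A, B), (C, E), D and F as separate clusters, since only the merges at 1 and 1.41 lie below the line.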

DBSCAN (Density-Based Spatial Clustering of Applications with Noise)

- Unsupervised ML algo that makes clusters based upon the density of data points, i.e. how close the data points are to each other.
- The points which lie outside the dense regions are excluded and treated as noise or outliers.

Important parameters:

Epsilon (eps or ε):

- Used to specify the radius of a neighborhood. It is a measure of the neighborhood.
- Neighborhood: consider a point and draw a circle around it, with this point as the center and a distance epsilon (ε) as the radius. This circle is the point's neighborhood.
- ε represents the radius of the circle around a particular point that we are going to consider as the neighborhood of that point.
- The neighborhood of an object O is the space within a radius ε of that object.

MinPts (or min_samples):

- Specifies the density threshold of dense regions.
- Minimum no. of data points that should be around a point within the radius ε.

Classification of data points:

i) Core point

- A point whose ε-neighborhood contains at least MinPts points, i.e. an object or point which has at least MinPts points within radius ε.
- e.g. with ε = 2 and MinPts = 3, P1 is a core point.

ii) Boundary point

- An object or point which isn't a core point but lies in the ε-neighborhood of a core point. Its no. of neighbors must be less than MinPts.
- It is a directly density-reachable point.

iii) Noise point (outlier)

- Neither a core point nor a boundary point.

Algorithm steps:

1. Label core points and noise points:
   - Select a random starting point, say O.
   - Identify the neighborhood of this point O using radius ε.
   - Count the no. of points, say k, in this neighborhood, including point O.
   - If k >= MinPts, then mark O as a core point.
   - Else it will be marked as a noise point.
   - Select a new unvisited point and repeat these steps.
2. Check whether a noise point can become a boundary point:
   - If a noise point is directly density reachable (i.e. within radius ε of a core point), mark it as a boundary point; it will form part of a cluster.
   - A point which is neither a core point nor a boundary point stays marked as a noise point.

Q. Given six data points as (1,3), (-8,6), (-6,4), (4,-2), (2,5), (-2,0). Apply the DBSCAN algo to find core points, boundary points and noise points. Also find the neighbors of each data point when the neighborhood radius is 5 and the minimum neighbor criterion is 2.

Given: ε = neighborhood radius = 5, MinPts = 2

First, we compute the pairwise distances between data points using Euclidean distance. We get the following distance matrix:

| | (1,3) | (-8,6) | (-6,4) | (4,-2) | (2,5) | (-2,0) |
|---|---|---|---|---|---|---|
| (1,3) | 0 | 9.49 | 7.07 | 5.83 | 2.24 | 4.24 |
| (-8,6) | 9.49 | 0 | 2.83 | 14.42 | 10.05 | 8.49 |
| (-6,4) | 7.07 | 2.83 | 0 | 11.66 | 8.06 | 5.66 |
| (4,-2) | 5.83 | 14.42 | 11.66 | 0 | 7.28 | 6.32 |
| (2,5) | 2.24 | 10.05 | 8.06 | 7.28 | 0 | 6.40 |
| (-2,0) | 4.24 | 8.49 | 5.66 | 6.32 | 6.40 | 0 |

Points and their neighbors within the boundary of ε = 5:

- (1,3): (2,5), (-2,0)
- (-8,6): (-6,4)
- (-6,4): (-8,6)
- (4,-2): None
- (2,5): (1,3)
- (-2,0): (1,3)

Core points are points which have at least MinPts = 2 points (counting the point itself) within radius ε = 5.

So, (1,3), (-8,6), (-6,4), (2,5) and (-2,0) are core points, and (4,-2) may be a noise or boundary point.

(4,-2) is not directly density reachable from any core point. Therefore (4,-2) is a noise point.
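The labeling in this example can be checked with a short script. This sketch only classifies points as core, boundary or noise and does not build the clusters themselves; the function name `classify_points` is an assumption, and neighborhood counts include the point itself, as in the algorithm steps of these notes.

```python
import math

def classify_points(points, eps, min_pts):
    """Return (neighbors, labels) with labels 'core', 'boundary' or 'noise'."""
    neighbors = {p: [q for q in points if q != p and math.dist(p, q) <= eps]
                 for p in points}
    # A point is core if its neighborhood, itself included, holds min_pts points.
    core = {p for p in points if len(neighbors[p]) + 1 >= min_pts}
    labels = {}
    for p in points:
        if p in core:
            labels[p] = "core"
        elif any(q in core for q in neighbors[p]):
            labels[p] = "boundary"  # directly density reachable from a core point
        else:
            labels[p] = "noise"
    return neighbors, labels

points = [(1, 3), (-8, 6), (-6, 4), (4, -2), (2, 5), (-2, 0)]
neighbors, labels = classify_points(points, eps=5, min_pts=2)
for p in points:
    print(p, neighbors[p], labels[p])
```

With a larger `min_pts`, some of the current core points would drop to boundary points, which is a quick way to see how sensitive DBSCAN is to its two parameters.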

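Hessian Matrix

Let f be a function of n variables. The Hessian matrix of f is the matrix of the second-order partial derivatives of f.

The Hessian matrix of f at point x is the n × n matrix

$$H(x) = \begin{bmatrix} f''_{11}(x) & f''_{12}(x) & \cdots & f''_{1n}(x) \\ f''_{21}(x) & f''_{22}(x) & \cdots & f''_{2n}(x) \\ \vdots & \vdots & \ddots & \vdots \\ f''_{n1}(x) & f''_{n2}(x) & \cdots & f''_{nn}(x) \end{bmatrix}$$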

6, Compute Hessian matte

P(%, a) = 4, - 47 - Wt.”

=) order partial derivatives °

#,) (%%) 2 24, Mo -

Lf, (4/9) ney %

Nenee, we get ord order partial derivative

f," (Ha) = 2

ty” (%,%) =

f, eG

oe (%,%2) = -

. Hessran mattrn is fren) + . i)

e “2

ee

- The Hessian matrix describes the local behavior of a function near a critical point.
- It is an important tool in optimization because it provides info about the curvature of the function at a critical point, which can be used to determine the nature of the critical point and the direction of steepest ascent or descent.
- Let H be the Hessian matrix at a critical point:
  i) If H is positive definite (all eigenvalues > 0), then the critical point is a local minimum.
  ii) If H is negative definite (all eigenvalues < 0), then the critical point is a local maximum.
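The eigenvalue test above can be sketched for a function of two variables. This is an illustration under stated assumptions: the function name `classify_critical_point` is made up, the closed-form eigenvalues apply only to a symmetric 2 × 2 Hessian, and the saddle-point and inconclusive cases are standard additions that are not listed in these notes.

```python
import math

def classify_critical_point(h11, h12, h22):
    """Classify a critical point from the symmetric Hessian [[h11, h12], [h12, h22]]."""
    half_trace = (h11 + h22) / 2
    spread = math.sqrt(((h11 - h22) / 2) ** 2 + h12 ** 2)
    eig_min, eig_max = half_trace - spread, half_trace + spread
    if eig_min > 0:
        return "local minimum"   # positive definite
    if eig_max < 0:
        return "local maximum"   # negative definite
    if eig_min < 0 < eig_max:
        return "saddle point"    # indefinite (not covered in the notes)
    return "inconclusive"        # at least one eigenvalue is zero

# Hypothetical functions with a critical point at the origin.
print(classify_critical_point(2, 0, 2))    # f = x^2 + y^2
print(classify_critical_point(-2, 0, -2))  # f = -x^2 - y^2
print(classify_critical_point(2, 0, -2))   # f = x^2 - y^2
```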

Let A be a symmetric n × n matrix. A principal minor of order k is obtained by deleting n − k rows and columns, where we delete the same row positions as column positions. The collection of all principal minors of order k can be denoted Δₖ.

The number of principal minors of order k is

$$\binom{n}{k} = \frac{n!}{k!\,(n-k)!}$$

The leading principal minor of order k is obtained by deleting the last n − k rows and columns.

A principal minor of a matrix is the determinant of a square submatrix obtained by selecting any k rows and the same k columns of the original matrix, where k is a positive integer.

To calculate a kth-order principal minor of a 3 × 3 matrix, we need to select k rows and the same k columns of the matrix, then calculate the determinant of the resulting submatrix.
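The definition can be turned into a short enumeration. This is a sketch for small matrices only; the function names are assumptions, and the matrix `A` below is a hypothetical example chosen for illustration, not the matrix used in the notes.

```python
from itertools import combinations

def det(m):
    """Determinant by cofactor expansion along the first row (fine for small matrices)."""
    if len(m) == 1:
        return m[0][0]
    return sum((-1) ** j * m[0][j] * det([row[:j] + row[j + 1:] for row in m[1:]])
               for j in range(len(m)))

def principal_minors(a, k):
    """All principal minors of order k: keep the same k row and column indices."""
    n = len(a)
    return [det([[a[i][j] for j in idx] for i in idx])
            for idx in combinations(range(n), k)]

def leading_principal_minor(a, k):
    """Keep the first k rows and columns, i.e. delete the last n - k."""
    return det([row[:k] for row in a[:k]])

# Hypothetical symmetric matrix, chosen only for illustration.
A = [[2, 1, 0],
     [1, 3, 1],
     [0, 1, 4]]

for k in (1, 2, 3):
    print(k, principal_minors(A, k), leading_principal_minor(A, k))
```

For a 3 × 3 matrix this yields three minors of order 1, three of order 2 and one of order 3, matching the count n! / (k! (n − k)!).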

rg

For — Row column > ho toon 2

ead] Paintba! )

ama

a =

fits order principal mines are

quree

a 2 and 3

secord order principal minor :

e 3 I Bn .0 4 2 |

a ? [; 2 , $9

A, : “2 : |

: fy.deleting Oy cleletirg

me Low Column Low -column-4

Row- Column 2 feet)

2 f Red, 34, - 304

qnree beced order Principal minors arc

99,34 and -%

por third Princpal Minor |

A,* > det. (A)

> a )

= 9 ,

7 2

-2 e

= 3Cy SE 4C;,) 4 Ci

2 2 6 ae HAT * 7 ‘|

: 3 e4 + 24 ~2 §

= dY¥ -80 Bu nde of f0

% p2707

beading
