OpenFOAM v6 User Guide: Running Applications in Parallel (9 pages)

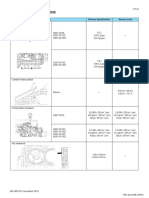

Section 3.4 of the OpenFOAM v6 User Guide explains how to run applications in parallel by decomposing the domain and distributing it across processors. It covers how mesh and field data are decomposed, the available decomposition methods, and how file input/output is handled in parallel. It also covers running decomposed cases, distributing data across several disks, and post-processing cases that were run in parallel.
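As a rough illustration of the decompose, run, reconstruct workflow summarized above, the following Python sketch renders a minimal `decomposeParDict` body for the `simple` method and lists the usual command sequence. The helper names `simple_decompose_dict` and `parallel_workflow` are hypothetical, the solver name `simpleFoam` and the 2 x 2 x 1 split are assumptions chosen for the example, and the `FoamFile` header that a real dictionary file carries is omitted.

```python
from math import prod


def simple_decompose_dict(n=(2, 2, 1), delta=0.001):
    """Render a minimal system/decomposeParDict body for the 'simple' method.

    Hypothetical helper: the FoamFile header is left out, and the number of
    subdomains is derived from n so the two entries cannot disagree.
    """
    nx, ny, nz = n
    n_sub = prod(n)  # simple method: subdomain count equals nx * ny * nz
    lines = [
        f"numberOfSubdomains {n_sub};",
        "",
        "method          simple;",
        "",
        "simpleCoeffs",
        "{",
        f"    n           ({nx} {ny} {nz});",
        f"    delta       {delta};",
        "}",
    ]
    return "\n".join(lines) + "\n"


def parallel_workflow(solver, n_sub):
    """Return the usual command sequence for a decomposed case.

    The solver name is supplied by the caller; nothing is executed here.
    """
    return [
        "decomposePar",                            # split mesh and fields
        f"mpirun -np {n_sub} {solver} -parallel",  # run on n_sub processes
        "reconstructPar",                          # merge results for post-processing
    ]


if __name__ == "__main__":
    print(simple_decompose_dict())
    for cmd in parallel_workflow("simpleFoam", 4):
        print(cmd)
```

Deriving `numberOfSubdomains` from `n` is a design choice of this sketch: it keeps the subdomain count and the per-direction split consistent, which is the kind of mismatch that is easy to introduce when editing the dictionary by hand.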

Uploaded by BEHNAM FALLAH

Copyright © All Rights Reserved
