Sentiment Analysis Pipeline Guide (8 pages)

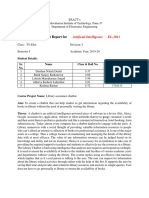

The document outlines two assignments focused on building machine learning applications: an End-to-End Sentiment Analysis Pipeline using the IMDB dataset, and a Retrieval-Augmented Generation (RAG) Chatbot that uses a vector database. Each assignment includes detailed steps for data collection, model training, API development with Flask, and database setup, along with deliverables and evaluation criteria. The assignments are designed to be completed in 2 to 3 days and are evaluated on code quality, completeness, and documentation.

Uploaded by sushantgaurav80

Copyright © All Rights Reserved

