
Cache Memory

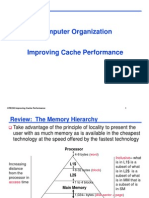

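Cache memory is a small, fast memory that sits between the CPU and main memory. It speeds up data access by keeping copies of recently and frequently used data, so most requests are served without the slower trip to main memory. A cache can be organized using direct mapping, associative mapping, or set associative mapping. Direct mapping places each main-memory block in exactly one cache line, which is simple and fast to search but causes conflict misses when several blocks compete for the same line. Associative mapping lets a block occupy any line, which avoids those conflicts but requires comparing the tag against every line. Set associative mapping is a compromise: a block may occupy any line within one fixed set. Cache memory costs more per bit than main memory, but it is crucial to CPU performance because it lowers the average time needed to access memory.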
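
The three mapping schemes can be compared with a small simulator. The sketch below is illustrative and not taken from the original slides: the `Cache` class, its parameters, and the choice of LRU replacement are assumptions, and it only counts hits and misses rather than storing data. Direct mapping and fully associative mapping fall out as the two extremes of set associativity (one way per set, or one set holding every line).

```python
from __future__ import annotations

from collections import OrderedDict


class Cache:
    """Hypothetical toy cache: num_sets sets, each holding `ways` blocks.

    ways == 1      -> direct mapping (each block has exactly one possible line)
    num_sets == 1  -> fully associative mapping (a block may use any line)
    otherwise      -> set associative mapping
    Assumption: LRU replacement within each set.
    """

    def __init__(self, num_sets: int, ways: int, block_size: int) -> None:
        self.num_sets = num_sets
        self.ways = ways
        self.block_size = block_size
        # One OrderedDict per set: keys are tags, ordered least to most recently used.
        self.sets = [OrderedDict() for _ in range(num_sets)]
        self.hits = 0
        self.misses = 0

    def split(self, address: int) -> tuple[int, int, int]:
        """Break a byte address into (tag, set index, block offset)."""
        offset = address % self.block_size
        block_number = address // self.block_size
        index = block_number % self.num_sets
        tag = block_number // self.num_sets
        return tag, index, offset

    def access(self, address: int) -> bool:
        """Look up an address; return True on a hit, False on a miss."""
        tag, index, _ = self.split(address)
        lines = self.sets[index]
        if tag in lines:
            lines.move_to_end(tag)  # mark as most recently used
            self.hits += 1
            return True
        self.misses += 1
        if len(lines) >= self.ways:
            lines.popitem(last=False)  # evict the least recently used tag (LRU assumption)
        lines[tag] = True
        return False


# Usage: four 16-byte lines in total, organized three ways.
# Addresses 0 and 64 are blocks 0 and 4, which collide in a 4-line direct-mapped cache.
trace = [0, 64, 0, 64, 0, 64]
configs = [
    ("direct", Cache(num_sets=4, ways=1, block_size=16)),
    ("2-way", Cache(num_sets=2, ways=2, block_size=16)),
    ("fully associative", Cache(num_sets=1, ways=4, block_size=16)),
]
for name, cache in configs:
    for addr in trace:
        cache.access(addr)
    print(name, cache.hits, cache.misses)
# direct 0 6
# 2-way 4 2
# fully associative 4 2
```

On this alternating trace the direct-mapped cache misses every time because both blocks map to the same line, while the 2-way and fully associative caches keep both blocks resident after the first two compulsory misses. This illustrates the conflict-miss trade-off described above; the extra flexibility is paid for with more tag comparisons per lookup.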

Uploaded by Noore Naheed

Copyright © All Rights Reserved

