
Soft Computing Lab

128 views, 33 pages

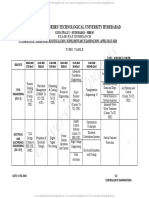

This document is a record notebook for the CCS364 Soft Computing laboratory course at Government College of Engineering, Erode, detailing experiments conducted by students in the Information Technology branch. The experiments include fuzzy control systems, discrete perceptron classification, XOR implementation using backpropagation, self-organizing maps, and genetic algorithms, each with an aim, procedure, program, and result. The notebook serves as a formal record for practical examinations during the academic year 2024-2025.

Uploaded by rajesh21590845

Copyright © All Rights Reserved
