Development of a ROS2-Based Robot Simulation System in Gazebo Controlled Using Python

The objective of this project is to simulate a mobile robot equipped with a rectangular base, four wheels, and an upward-protruding rod that supports a camera. Your primary task is to create this robot in the Gazebo simulator, enable it to navigate a virtual scene, and capture images with its camera. Once the system has been implemented, you must design and propose an AI-based system that enables the robot to perform autonomous, context-aware behaviour, such as obstacle avoidance, in an unstructured environment. Your report must include all the perception devices and software modules the proposed system requires, the decision-making approach, and an overall diagram of the proposed cognitive system. You must also discuss the proposed system and justify your choices.
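As a possible starting point for the decision-making idea, a purely reactive obstacle-avoidance rule fits in a few lines. The sketch below assumes an extra range sensor (for example a 2D lidar publishing `sensor_msgs/msg/LaserScan`, which is not part of the required model) and uses that message's `ranges`, `angle_min` and `angle_increment` fields. Angles follow the ROS convention (REP 103): 0 is straight ahead and positive angles are to the robot's left. The function name, thresholds and the `Command` type are hypothetical. In a ROS2 node this logic would sit inside the scan subscription callback, and its output would be turned into wheel goals.

```python
from dataclasses import dataclass
import math


@dataclass
class Command:
    """Hypothetical motion command: forward speed (m/s) and turn rate (rad/s)."""
    linear: float
    angular: float


def avoid_obstacles(ranges, angle_min, angle_increment,
                    safe_distance=0.6, cruise_speed=0.3, turn_rate=0.8):
    """Drive forward unless something is close in front, then turn
    towards whichever side has more free space.

    ranges          -- distances in metres, one per beam (as in a LaserScan)
    angle_min       -- angle of the first beam in radians (0 = straight ahead)
    angle_increment -- angular step between consecutive beams in radians
    """
    left, front, right = [], [], []
    for i, r in enumerate(ranges):
        if not math.isfinite(r):
            continue  # skip inf/NaN readings (no echo or invalid return)
        angle = angle_min + i * angle_increment
        if abs(angle) <= math.radians(20):
            front.append(r)
        elif angle > 0:
            left.append(r)   # positive angles are on the robot's left
        else:
            right.append(r)

    def closest(sector):
        return min(sector) if sector else math.inf

    if closest(front) > safe_distance:
        return Command(cruise_speed, 0.0)
    # Obstacle ahead: stop and rotate towards the more open side.
    if closest(left) >= closest(right):
        return Command(0.0, turn_rate)
    return Command(0.0, -turn_rate)
```

A rule like this is easy to justify and test, but it has no memory and can oscillate in corners; discussing such limitations is part of justifying the final design.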

The culmination of your project will be a single launch file that, when executed, initialises Ignition Gazebo with your robot model, ready for ROS2-based control.
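In a typical ROS2 Humble setup, such a launch file starts the simulator with your world and runs `ros_gz_bridge`'s `parameter_bridge`, which relays topics between Gazebo and ROS2. Each bridge argument has the form `TOPIC@ROS_TYPE[GZ_TYPE` (Gazebo to ROS2), `TOPIC@ROS_TYPE]GZ_TYPE` (ROS2 to Gazebo) or `TOPIC@ROS_TYPE@GZ_TYPE` (both directions). The helper below is a sketch that only builds those strings. The topic names are hypothetical and must match the topics configured in your .sdf sensor and controller plugins, and the `ignition.msgs` type prefix assumes Ignition Gazebo Fortress, the version normally paired with Humble.

```python
# Direction markers used by the parameter_bridge argument syntax.
GZ_TO_ROS = "["
ROS_TO_GZ = "]"
BIDIRECTIONAL = "@"


def bridge_arg(topic, ros_type, gz_type, direction):
    """Build one 'TOPIC@ROS_TYPE<direction>GZ_TYPE' argument for parameter_bridge."""
    if direction not in (GZ_TO_ROS, ROS_TO_GZ, BIDIRECTIONAL):
        raise ValueError(f"unknown direction marker: {direction!r}")
    return f"{topic}@{ros_type}{direction}{gz_type}"


# Hypothetical topics: rename them to match the topics set in your .sdf plugins.
BRIDGES = [
    ("/camera/image_raw", "sensor_msgs/msg/Image", "ignition.msgs.Image", GZ_TO_ROS),
    ("/front_left_wheel/cmd_vel", "std_msgs/msg/Float64", "ignition.msgs.Double", ROS_TO_GZ),
    ("/front_right_wheel/cmd_vel", "std_msgs/msg/Float64", "ignition.msgs.Double", ROS_TO_GZ),
    ("/camera_pan/cmd_pos", "std_msgs/msg/Float64", "ignition.msgs.Double", ROS_TO_GZ),
]

bridge_arguments = [bridge_arg(*b) for b in BRIDGES]
```

In a Python launch file, `bridge_arguments` would typically be passed as the `arguments` list of a `launch_ros.actions.Node` with `package="ros_gz_bridge"` and `executable="parameter_bridge"`.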

1. Guidelines

a. World and Model Creation:

You are required to design the “world” and the “model” .sdf files. The model .sdf file must encapsulate all necessary components to construct the project’s wheeled robot, complete with a camera.

Use the Gazebo Classic Model Editor to create the general robot model.
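Although the model editor writes the .sdf file for you, it helps to know the structure the file must contain. The sketch below generates a minimal, hypothetical skeleton with `xml.etree.ElementTree`: a box chassis, four cylinder wheels on revolute joints, a vertical rod fixed to the chassis, and a camera link on a revolute pan joint at the top of the rod. Link names, dimensions and the SDF version (1.8) are assumptions. Visuals, inertials, the camera `<sensor>` and the control plugins are deliberately omitted and would still need to be added.

```python
import xml.etree.ElementTree as ET


def add_link(model, name, pose, geometry):
    """Add a <link> with one collision; `geometry` is (shape_tag, {child_tag: text})."""
    link = ET.SubElement(model, "link", name=name)
    ET.SubElement(link, "pose").text = pose  # "x y z roll pitch yaw", model frame
    collision = ET.SubElement(link, "collision", name=f"{name}_collision")
    shape = ET.SubElement(ET.SubElement(collision, "geometry"), geometry[0])
    for tag, text in geometry[1].items():
        ET.SubElement(shape, tag).text = text
    return link


def add_joint(model, name, joint_type, parent, child, axis=None):
    """Add a <joint>; revolute joints get an <axis><xyz> element."""
    joint = ET.SubElement(model, "joint", name=name, type=joint_type)
    ET.SubElement(joint, "parent").text = parent
    ET.SubElement(joint, "child").text = child
    if axis is not None:
        # Without expressed_in, the axis is given in the joint (child link) frame.
        ET.SubElement(ET.SubElement(joint, "axis"), "xyz").text = axis
    return joint


def build_robot_sdf(model_name="camera_rover"):
    sdf = ET.Element("sdf", version="1.8")
    model = ET.SubElement(sdf, "model", name=model_name)
    add_link(model, "chassis", "0 0 0.1 0 0 0", ("box", {"size": "0.6 0.4 0.1"}))

    # Wheel cylinders are rolled -90 degrees about x, so their local z axis
    # points along the chassis y axis and "0 0 1" makes them roll forward.
    # Rear wheels get the same joint but no controller (they stay passive).
    wheel = ("cylinder", {"radius": "0.1", "length": "0.05"})
    for side, y in (("left", 0.225), ("right", -0.225)):
        for end, x in (("front", 0.2), ("rear", -0.2)):
            name = f"{end}_{side}_wheel"
            add_link(model, name, f"{x} {y} 0.1 -1.5708 0 0", wheel)
            add_joint(model, f"{name}_joint", "revolute", "chassis", name, "0 0 1")

    # Vertical rod fixed to the chassis; camera on top of it on a pan (yaw) joint.
    add_link(model, "rod", "0 0 0.4 0 0 0", ("cylinder", {"radius": "0.02", "length": "0.5"}))
    add_joint(model, "rod_joint", "fixed", "chassis", "rod")
    add_link(model, "camera_link", "0 0 0.675 0 0 0", ("box", {"size": "0.05 0.1 0.05"}))
    add_joint(model, "camera_pan_joint", "revolute", "rod", "camera_link", "0 0 1")
    return ET.tostring(sdf, encoding="unicode")
```

Printing `build_robot_sdf()` and comparing it with the editor's output is a quick way to check that every link is attached by exactly one joint.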

b. Model Configuration:

Ensure the camera within your .sdf model functions as a sensor capable of streaming images. Additionally, configure the model to allow wheel control, facilitating the robot's navigation within the simulation.

Upon launching the project’s launch file, the robot model should be visible in Ignition Gazebo and controllable via ROS2. You may create custom ROS2 nodes or use tools such as rqt for straightforward data manipulation and camera stream access.
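If the two front wheels are driven independently (a differential-drive arrangement, assuming they are driven rather than steered and the rear wheels are left passive), a desired forward speed v and turn rate ω map to wheel angular velocities as ω_L = (v - ω·b/2) / r and ω_R = (v + ω·b/2) / r, where b is the distance between the driven wheels and r is the wheel radius. The sketch below implements that formula with hypothetical default dimensions (b = 0.45 m, r = 0.1 m). The returned values in rad/s are what a per-joint velocity controller would receive.

```python
def wheel_speeds(v, omega, wheel_separation=0.45, wheel_radius=0.1):
    """Differential-drive inverse kinematics.

    v                -- desired forward speed of the robot (m/s)
    omega            -- desired yaw rate (rad/s), positive = turn left
    wheel_separation -- distance between the two driven wheels (m), assumed
    wheel_radius     -- wheel radius (m), assumed
    Returns (left, right) wheel angular velocities in rad/s.
    """
    half_track = wheel_separation / 2.0
    left = (v - omega * half_track) / wheel_radius
    right = (v + omega * half_track) / wheel_radius
    return left, right
```

For a pure rotation the wheels spin in opposite directions: `wheel_speeds(0.0, 1.0)` gives roughly (-2.25, 2.25) rad/s.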

c. Design Specification:

The robot’s dimensions are at your discretion; there are no strict size requirements.

Only the front wheels need to be controllable; the rear wheels do not need to be connected to the control system.

The camera should be motorised, so that its angle can be adjusted independently of the wheel movements.

All controllable components of the robot, including the front wheels and the camera’s horizontal angle, must be controlled through ROS2 Actions (client/server).
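A ROS2 action has three parts: a goal sent by the client, periodic feedback while the server works on it, and a final result. The plain-Python sketch below (no rclpy) shows the logic an action server's execute callback might run for the camera's horizontal angle. It clamps the goal to assumed joint limits, moves the angle at a bounded rate, calls a feedback function after every step and returns the final angle as the result. The limits, step size, tolerance and function names are hypothetical. In a real server, each step would also send a position command to Gazebo and the feedback call would go through the goal handle.

```python
import math

PAN_LIMIT = math.radians(90)   # assumed limit of the camera pan joint (+/-)
MAX_STEP = math.radians(5)     # assumed maximum angle change per control tick


def execute_pan_goal(current, target, publish_feedback, tolerance=1e-6):
    """Move the pan angle from `current` to `target` (radians).

    Mirrors the goal -> feedback -> result pattern of a ROS2 action:
    the goal is clamped to the joint limits, `publish_feedback` is called
    with the intermediate angle after each step, and the final angle is
    returned as the result.
    """
    target = max(-PAN_LIMIT, min(PAN_LIMIT, target))
    angle = current
    while abs(target - angle) > tolerance:
        step = max(-MAX_STEP, min(MAX_STEP, target - angle))
        angle += step
        publish_feedback(angle)  # in rclpy: goal_handle.publish_feedback(feedback_msg)
    return angle
```

Clamping on the server side means a client (or an rqt user) cannot drive the joint past its limits, whatever goal it sends.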

d. Programming Requirements:

All scripting must be conducted exclusively in Python using VSCode.

The project must utilise ROS2 Humble for the control system and data communication framework.

To manage the robot’s wheel and camera motions, you must develop your own ROS2 Actions (client/server) communication network and verify its controllability using existing tools such as rqt.
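The client/server split can be rehearsed before wiring up rclpy. In rclpy, `ActionClient.send_goal_async` returns a future that resolves to a goal handle (feedback arrives through an optional `feedback_callback`), and the result is then requested with the goal handle's `get_result_async`; on the server side, an `ActionServer` runs an execute callback for each accepted goal. The asyncio sketch below only imitates that flow for a hypothetical "drive the front wheels for N control ticks" goal. It is a stand-in for testing the logic, not the rclpy API, and every name in it is hypothetical.

```python
import asyncio


async def wheel_action_server(goal_ticks, distance_per_tick, feedback_cb):
    """Hypothetical server: 'drive' for goal_ticks control cycles,
    reporting progress after each one, then return a result dict."""
    distance = 0.0
    for tick in range(1, goal_ticks + 1):
        await asyncio.sleep(0)          # yield control, as a timer tick would
        distance += distance_per_tick   # stand-in for reading odometry
        feedback_cb({"tick": tick, "distance": distance})
    return {"success": True, "distance": distance}


async def wheel_action_client(goal_ticks, distance_per_tick):
    """Hypothetical client: send the goal, collect feedback, await the result."""
    feedback_log = []
    task = asyncio.create_task(
        wheel_action_server(goal_ticks, distance_per_tick, feedback_log.append))
    result = await task                 # rclpy analogue: awaiting get_result_async()
    return result, feedback_log


result, feedback = asyncio.run(wheel_action_client(goal_ticks=3, distance_per_tick=0.1))
```

Keeping the control logic in functions like these, separate from the ROS2 plumbing, makes it possible to unit-test it before verifying the real action server with rqt.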

2. Deliverables

Implementation, Live Demonstration and Testing:

This is a crucial part of your assessment, showcasing the functionality and responsiveness of your simulated robot within the Gazebo environment. You will have an individual meeting with the teaching team to explain your code and implementation strategy and to test your implementation. You will also need to discuss the development of your proposed AI-based cognitive architecture for the behavioural control of the robot.

Video Submission:

A recorded video file must be submitted to demonstrate the working model, highlighting its control, image capture, and data communication capabilities.
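For image capture, camera frames arrive on the bridged topic as `sensor_msgs/msg/Image` messages, whose `height`, `width`, `step` (bytes per row) and `data` fields describe a raw pixel buffer. Assuming the camera publishes with `rgb8` encoding, the sketch below writes one frame to a binary PPM (P6) file using only the standard library and drops any row padding indicated by `step`. The function and file names are hypothetical; converting with cv_bridge and saving with OpenCV is a common alternative.

```python
def save_rgb8_as_ppm(path, width, height, step, data):
    """Write a raw rgb8 buffer (laid out as in sensor_msgs/msg/Image) to a P6 PPM file.

    width, height -- image size in pixels
    step          -- bytes per row in `data` (>= 3 * width; extra bytes are padding)
    data          -- bytes-like buffer of at least step * height bytes
    """
    row_bytes = 3 * width
    if step < row_bytes or len(data) < step * height:
        raise ValueError("buffer too small for the given width, height and step")
    pixels = bytearray()
    for row in range(height):
        start = row * step
        pixels += data[start:start + row_bytes]  # keep pixels, drop row padding
    with open(path, "wb") as f:
        f.write(f"P6\n{width} {height}\n255\n".encode("ascii"))
        f.write(pixels)
```

Inside a subscription callback, this would be called as `save_rgb8_as_ppm("frame.ppm", msg.width, msg.height, msg.step, msg.data)` after checking that `msg.encoding == "rgb8"`.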

Implementation Report:

A concise document (3-10 pages) detailing your strategy for implementing the project, including any challenges faced and how they were overcome. This report should provide insight into your development process and discuss the development of your proposed AI-based cognitive architecture for the behavioural control of the robot. All the details, including the proposed overall diagram, must be included.
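One common way to organise the decision-making layer of such an architecture is priority-based behaviour arbitration, in the spirit of subsumption. Each behaviour inspects the current perception state and may propose a command, and the highest-priority active behaviour wins. The sketch below is one possible structure, not a required design. The `Perception` fields, the three behaviours and their thresholds are hypothetical, and in the overall diagram they would sit between the perception modules and the action clients that drive the wheels and camera.

```python
from dataclasses import dataclass
from typing import Callable, Optional


@dataclass
class Perception:
    """Hypothetical fused state produced by the perception modules."""
    min_front_range: float             # metres, e.g. from a lidar or depth camera
    target_bearing: Optional[float]    # radians to a detected target, None if unseen


@dataclass
class Behaviour:
    name: str
    priority: int                                     # higher value wins
    propose: Callable[[Perception], Optional[tuple]]  # (linear, angular) or None if inactive


def arbitrate(behaviours, perception):
    """Return (name, command) of the highest-priority behaviour that is active."""
    for behaviour in sorted(behaviours, key=lambda b: b.priority, reverse=True):
        command = behaviour.propose(perception)
        if command is not None:
            return behaviour.name, command
    return "idle", (0.0, 0.0)


BEHAVIOURS = [
    Behaviour("avoid", 3,
              lambda p: (0.0, 0.8) if p.min_front_range < 0.5 else None),
    Behaviour("approach_target", 2,
              lambda p: (0.2, 0.5 * p.target_bearing) if p.target_bearing is not None else None),
    Behaviour("wander", 1, lambda p: (0.3, 0.0)),
]
```

Because each behaviour is independent, new ones (for example a learned detector that sets `target_bearing`) can be added without changing the arbiter, which is one argument that can be made when justifying this choice.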

This project offers a hands-on opportunity to apply ROS2 and Python in a simulated robotics context, emphasising practical skills in robot simulation, sensor integration, and control systems. Completing it successfully will demonstrate your proficiency in these areas and contribute significantly to your understanding and application of robotics simulation technologies.

Here are some ideas to get you started.

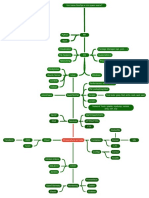

The initial robot model could look like the image below, which was created in Gazebo Classic. However, it is up to you to make it more advanced and redesign it.

The final model, tested in Ignition Gazebo using ROS2 and rqt, is presented below. As you can see, the right-hand image shows the image stream from the camera. In addition to this, you must develop your own ROS2 Actions (client/server) communication network.

3. Submission

3.1. Demonstration

All submissions must be presented individually unless, in special circumstances, the demonstration is handled in a group. The demonstration date is TBA. At the end of your test session, upload your completed demo as required by the demo specification.

Note:

If you do not attend your demonstration session, you will be given ZERO (0) marks.

Students with a Study Needs Agreement entitled to extra time should attend the specified session.

4. Marks Distribution

The project will be assessed using the following marking rubric.

| No. | Description of Criteria | Weightage | Mark Allotted |
|---|---|---|---|
| 1 | Deliverable A (Implementation, Live Demonstration and Testing) | | |
| | Implementation | 10 marks | |
| | Live demonstration | 10 marks | |
| | Testing and evaluation | 10 marks | |
| | Development ideas | 10 marks | |
| | Q&A session | 10 marks | |
| 2 | Deliverable B (Video Submission) | | |
| | System performance | 10 marks | |
| | Creativity | 10 marks | |
| 3 | Deliverable C (Implementation Report) | | |
| | Report with proper format | 10 marks | |
| | Proposed development ideas | 5 marks | |
| 4 | Extra Features: adding extra functionality to the robot (e.g., autonomy) through adding extra sensors and data analysis | 15 marks | |
| | Total Marks | 100 marks | |